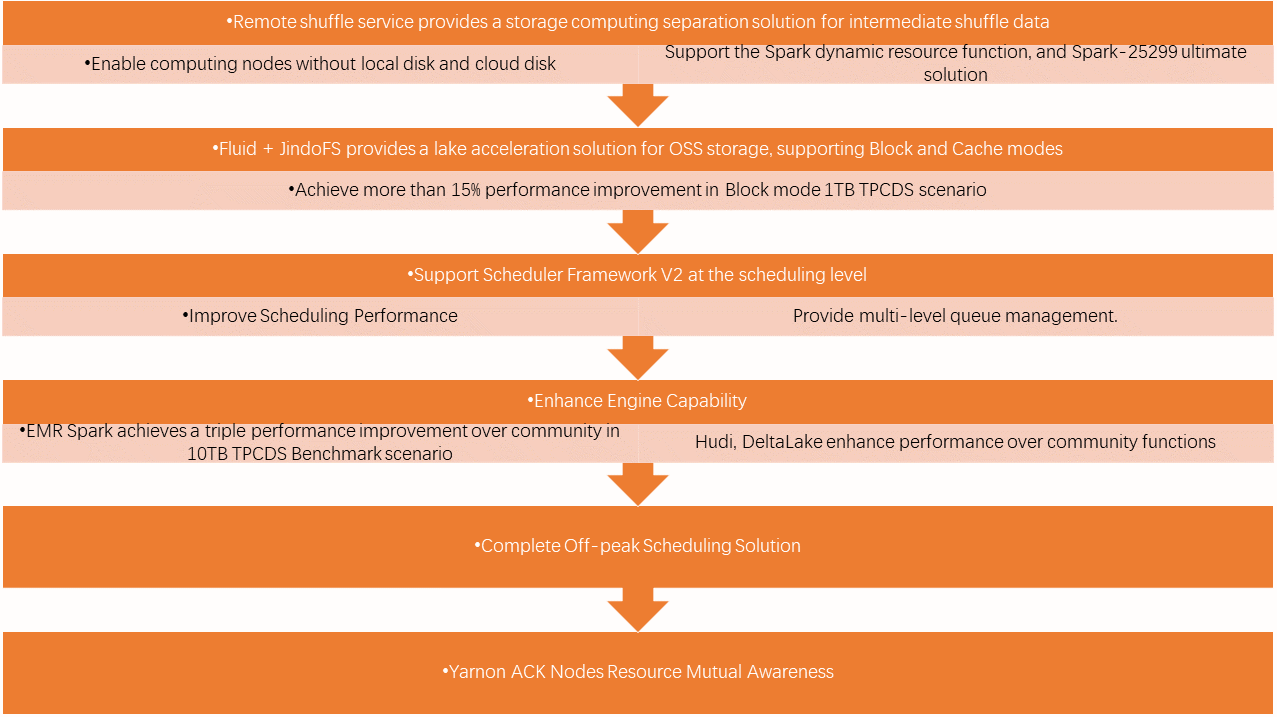

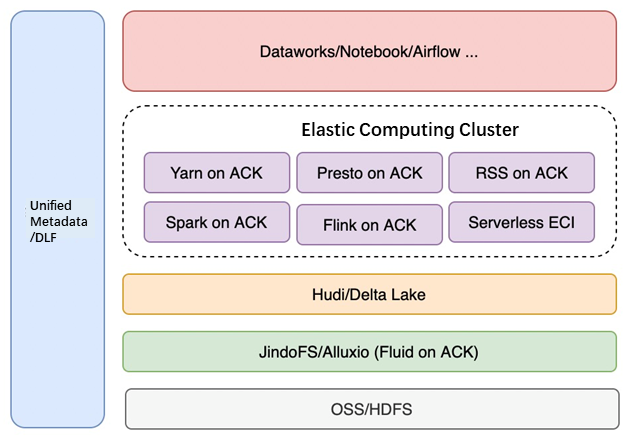

Building an HCFS file system with Object Storage System as the base:

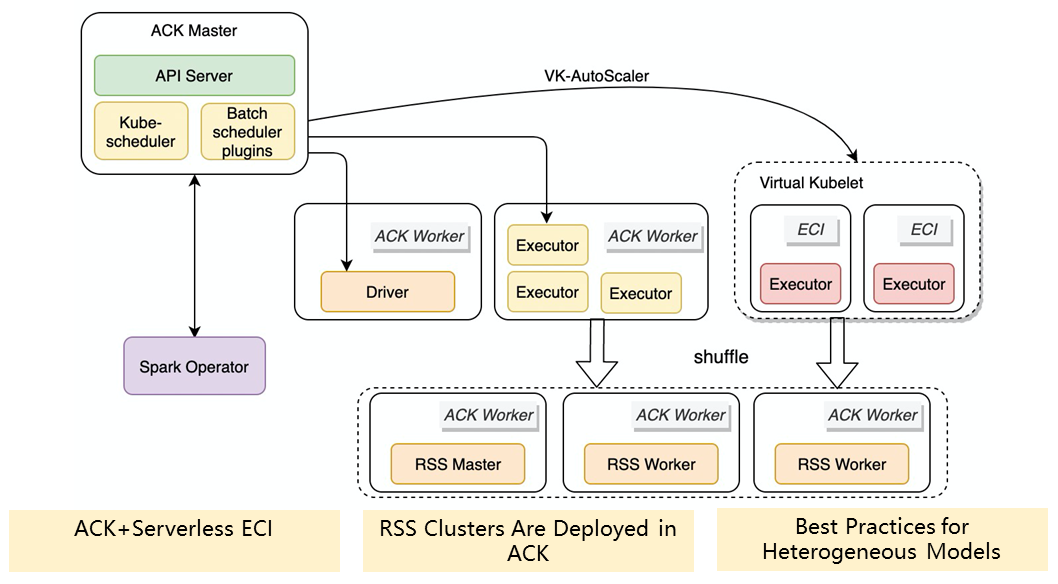

Solving Alibaba Cloud Kubernetes (ACK) hybrid heterogeneous models:

Supporting hybrid cloud across data centers and dedicated lines effectively:

Resolving scheduling performance bottlenecks:
