By Qing Gang.

Over the past ten-plus years, Alibaba has developed and deployed several search engines across its e-commerce platforms, and these engines have accumulated considerable technological and commercial value. Many of the search capabilities and scenarios of 1688.com, Alibaba's largest online wholesale marketplace in China, are backed by the search middle end running in the background. There is a lot to say about the middle end and the other technologies that are not visible on the surface.

In this article, we explore the entire process behind a search made with the primary search engine of 1688.com, including the related technologies.

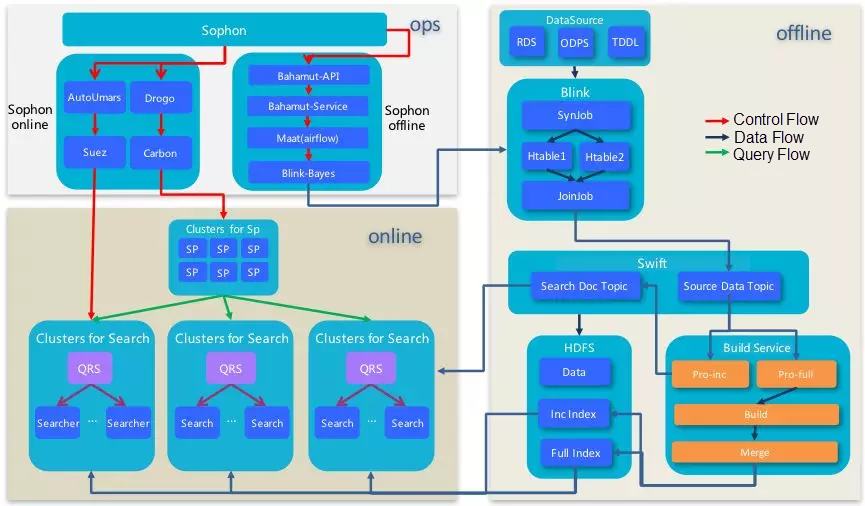

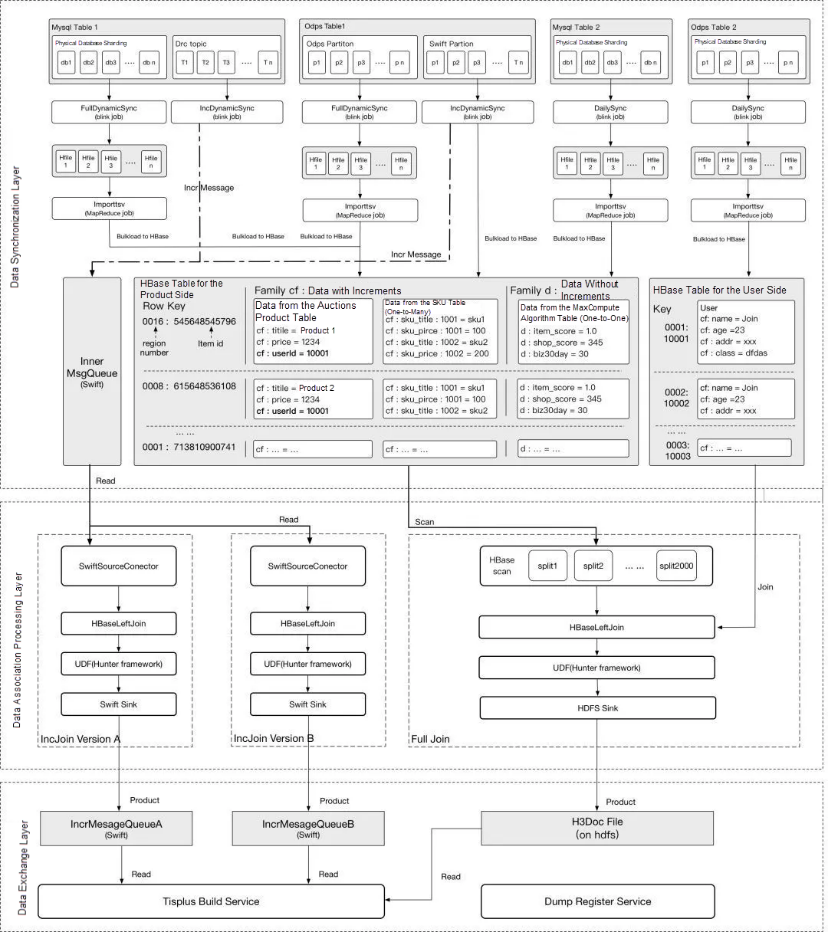

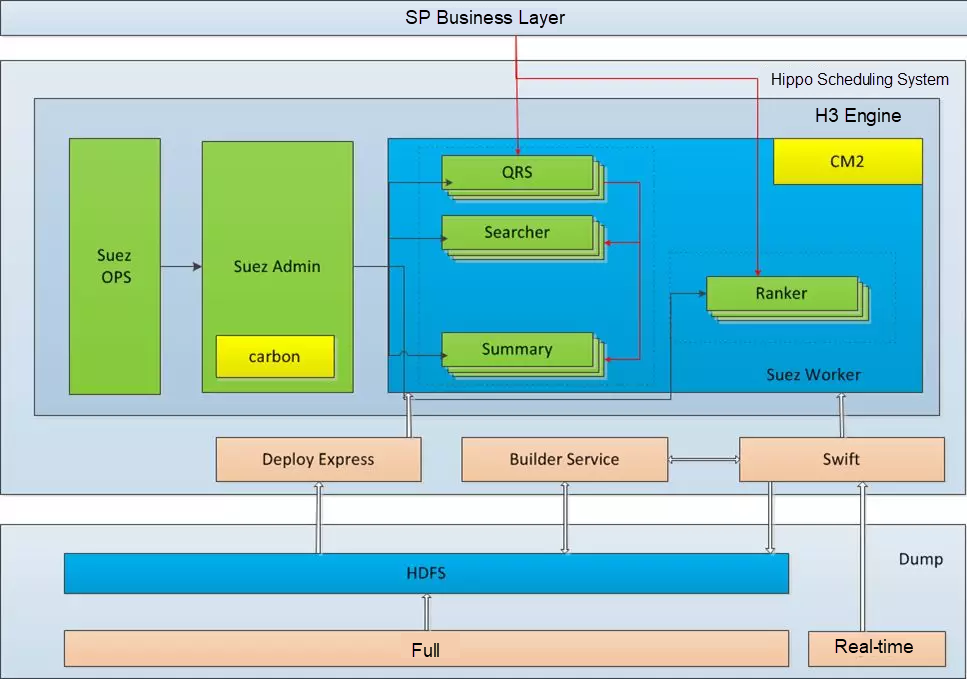

So, let's dive right into the overall architecture behind Alibaba's search engines. The engines are divided into several parts: data source aggregation (often referred to as "dumping" in the industry); full, incremental, and real-time indexing; and several integrated online services. Highly available, high-performance search services are provided to customers through several stages, from TisPlus to Bahamut (where MaaT is used to schedule workflows), and then to Blink, HDFS or Swift, Build Service, HA3, SP, and SW. Data sources are aggregated on the TisPlus and Blink platforms, Build Service and HA3 are handled on the Suez platform, and SP and SW are deployed on the Drogo platform. The following figures show what the entire architecture looks like conceptually:

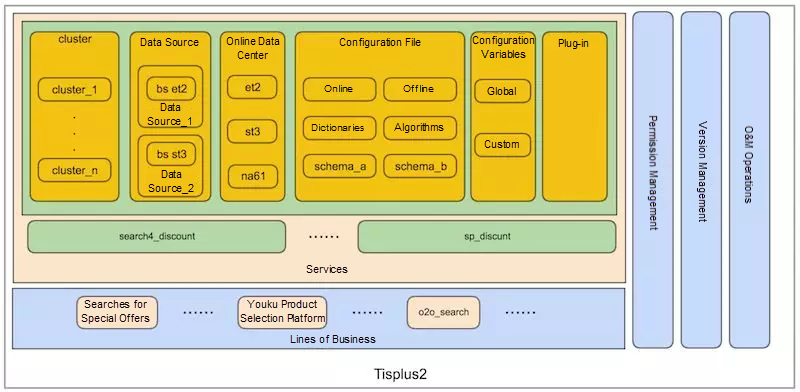

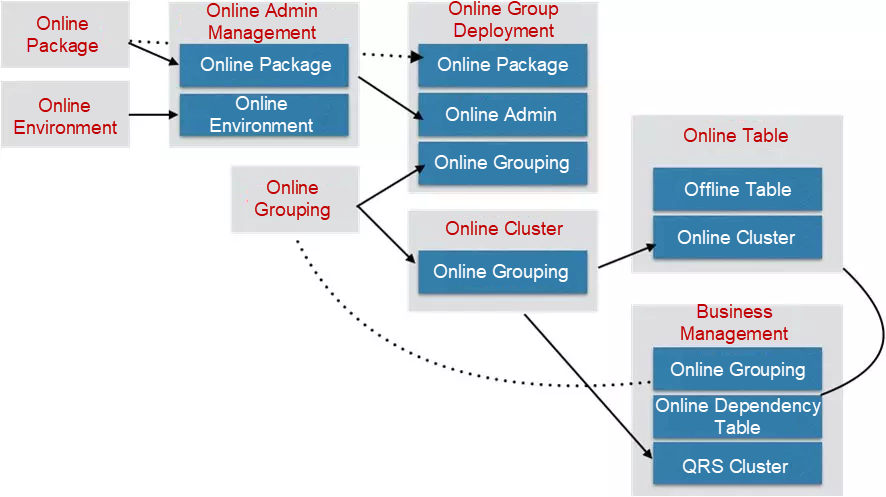

Currently, at 1688.com, search engines such as SPU, CSPU, Company, Buyoffer, and Feed, as well as the Offer feature, are maintained on TisPlus in offline mode. This platform mainly builds and maintains HA3 and SP. The following figure shows the overall architecture.

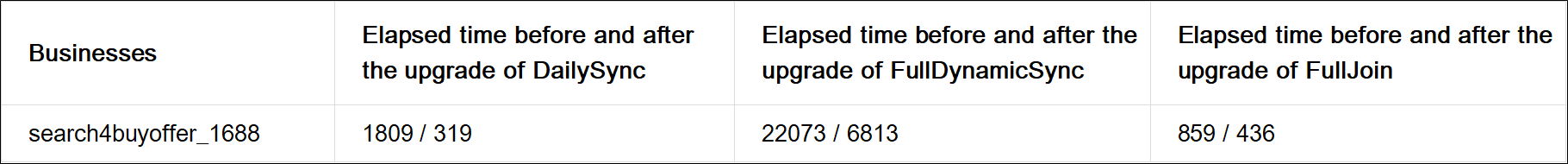

Data sources may occasionally fail to be produced during routine maintenance. This is most often because access permissions for the data source table have expired or because of zk data jitter. In terms of performance, dumping takes less time since the Alibaba search middle-end team introduced the Blink Batch model. The following table lists the specific indicators, using the Buyoffer engine as an example.

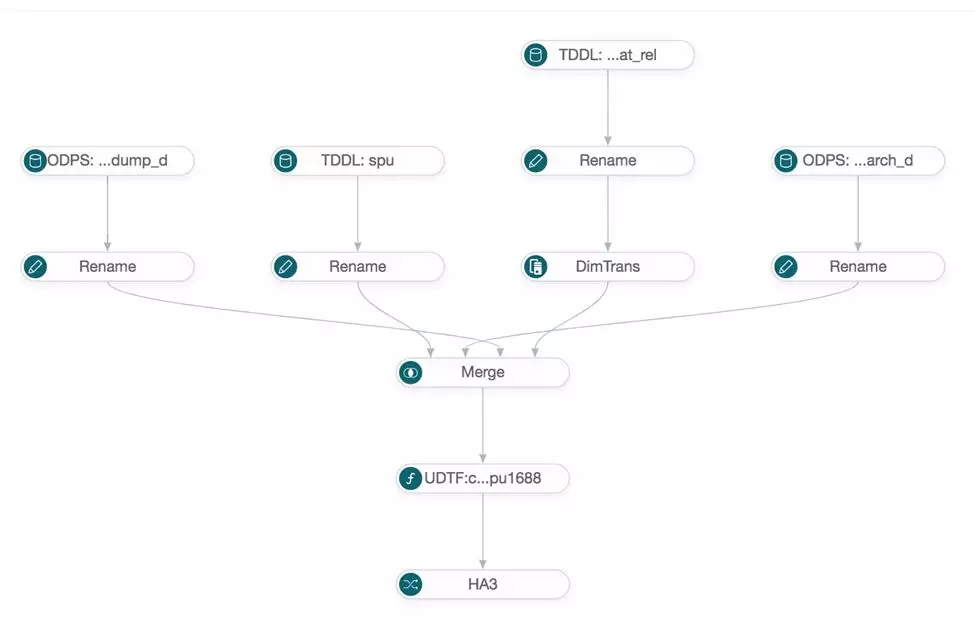

On the TisPlus platform, you can start offline dumping as instructed in the following figure.

The following figure shows an example of the directed acyclic graph (DAG) of data sources.

The sections below explore the data source processing steps for offline dumping, which involve Bahamut, MaaT, and the data output.

Bahamut is a widget platform for offline data source processing. It translates data graphs spliced on the web end into executable SQL statements using JobManager. Currently, Bahamut supports four types of widgets:

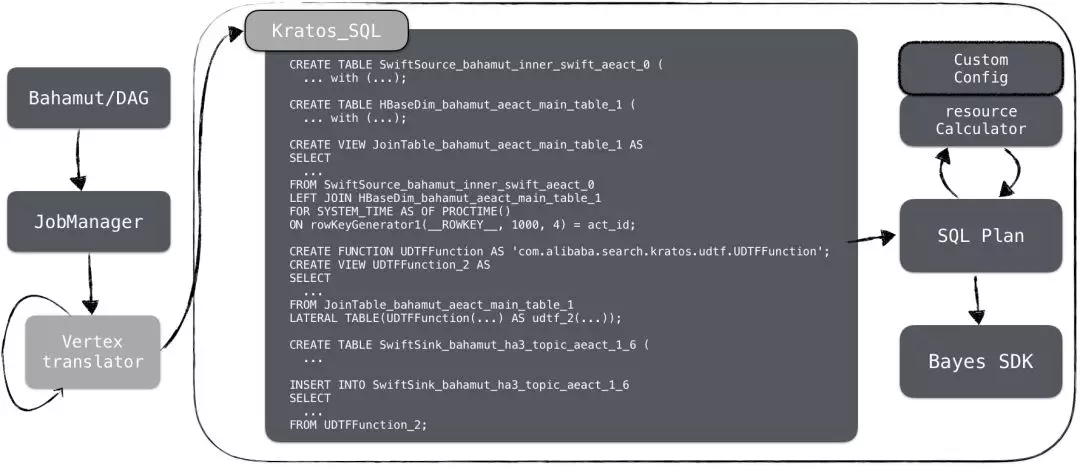

The following figure shows how data source processing works.

Next, what happens between the Bahamut and Blink stages is demonstrated in the following figure.

To be clear about what happens here: Bahamut splits the task and hands it to JobManager, which converts each logical node to a physical node. After producing several nodes, Bahamut merges them into a complete SQL statement. For example, as shown in the above figure, Kratos_SQL is a complete SQL statement for an incremental Join, and it is submitted together with resource files by using BayesSDK. In addition, the platform provides a lightly personalized configuration function that lets users control parameters such as the concurrency, node memory capacity, and allocated CPUs of a specific task.

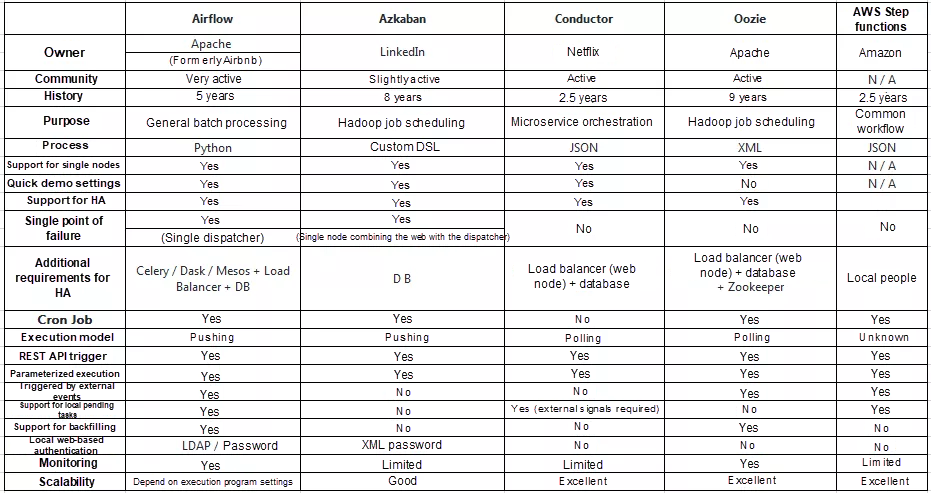
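As a rough illustration of the merge step described above, the following Python sketch turns a small graph of logical nodes into SQL fragments and combines them into one statement. The `Source` and `Join` node types, the table and column names, and the `to_sql` helper are all hypothetical; this is not the actual Bahamut or JobManager API.

```python
from dataclasses import dataclass


@dataclass
class Source:
    """Hypothetical logical node: read some columns from one table."""
    table: str
    columns: list


@dataclass
class Join:
    """Hypothetical logical node: join two upstream nodes on a shared key."""
    left: object
    right: object
    key: str


def to_sql(node):
    """Recursively translate a logical node into a SQL fragment."""
    if isinstance(node, Source):
        return f"SELECT {', '.join(node.columns)} FROM {node.table}"
    if isinstance(node, Join):
        left = to_sql(node.left)
        right = to_sql(node.right)
        return (f"SELECT * FROM ({left}) AS l "
                f"JOIN ({right}) AS r ON l.{node.key} = r.{node.key}")
    raise TypeError(f"unsupported node: {node!r}")


# Two illustrative source tables joined on offer_id (names are made up).
graph = Join(
    left=Source("offer", ["offer_id", "title"]),
    right=Source("offer_stats", ["offer_id", "sales"]),
    key="offer_id",
)
merged_sql = to_sql(graph)
print(merged_sql)
```

Each node only knows how to render itself, so the complete statement falls out of a single recursive walk over the graph.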

Next, let's discuss MaaT. MaaT is a distributed process scheduling system that was developed based on the open-source project Airflow. It has several powerful features, including visual editing, common nodes, Drogo-based deployment, cluster-specific management, and comprehensive monitoring and alerting.

A comparison between Airflow (which MaaT is based on) and other workflow systems is shown in the following table.

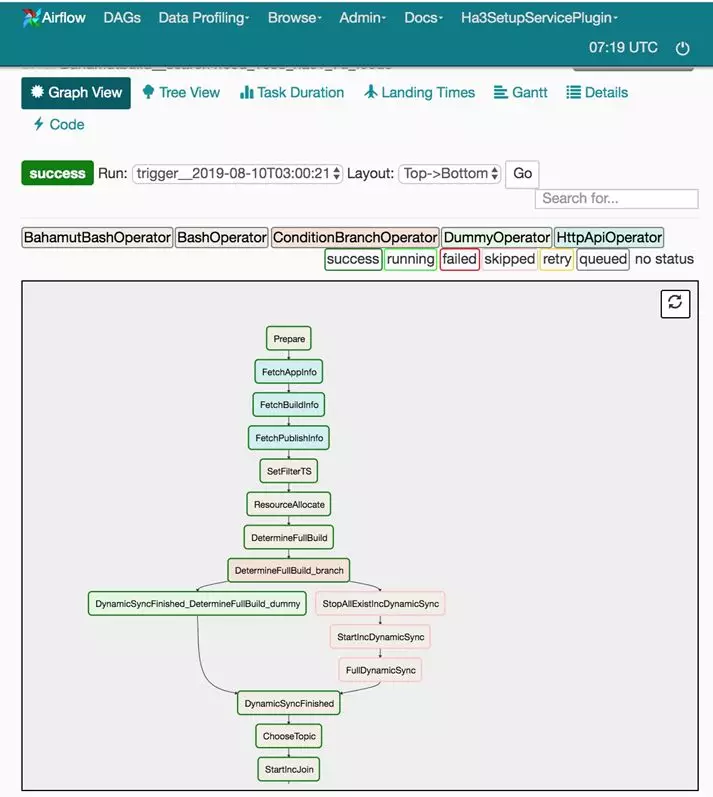

Next, the following figure shows the MaaT scheduling page for the Feed engine.

When a task fails, you can "set specified steps to fail" on this page and re-execute all tasks, or find the cause of the task failure by viewing the log entries for a specific step.
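To make the scheduling behavior more concrete, here is a minimal Python sketch of running workflow steps in dependency order and skipping everything downstream of a failed step. It uses the standard-library `graphlib` module (Python 3.9+) rather than MaaT or Airflow, and the step names and the `run_workflow` helper are assumptions made for illustration.

```python
from graphlib import TopologicalSorter


def run_workflow(dag, actions):
    """Run steps in dependency order; skip steps whose dependencies did not succeed.

    dag maps each step to the set of steps it depends on.
    actions maps each step to a zero-argument callable.
    """
    status = {}
    for step in TopologicalSorter(dag).static_order():
        deps = dag.get(step, ())
        if any(status.get(dep) != "success" for dep in deps):
            status[step] = "skipped"
            continue
        try:
            actions[step]()
            status[step] = "success"
        except Exception as exc:
            status[step] = f"failed: {exc}"
    return status


def failing_join():
    # Simulates one of the failure causes mentioned earlier in the article.
    raise RuntimeError("table permission expired")


# Hypothetical three-step workflow: dump -> join -> build.
dag = {"dump": set(), "join": {"dump"}, "build": {"join"}}
actions = {"dump": lambda: None, "join": failing_join, "build": lambda: None}

status = run_workflow(dag, actions)
print(status)
```

The per-step status map plays the role of the scheduling page here: it shows which step failed and why, and which steps never ran as a result.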

After the steps outlined above, the data is output in XML format (the isearch format) to a path in Hadoop Distributed File System (HDFS) or Apsara Distributed File System (full data) and to a Swift topic (incremental data). When the engine needs the full data, you can obtain the full set of Doc files from the HDFS path for building. When the engine needs only incremental data, you can obtain incremental update messages directly from the Swift topic and apply them to the engine. The offline platform provides the TisPlus engine module with functions such as table information query. The following data is the information contained in an HA3 table.

    {
      "1649992010": [
        {
          "data": "hdfs://xxx/search4test_st3_7u/full", // HDFS path
          "swift_start_timestamp": "1531271322", // start time of today's incremental data
          "swift_topic": "bahamut_ha3_topic_search4test_st3_7u_1",
          "swift_zk": "zfs://xxx/swift/swift_hippo_et2",
          "table_name": "search4test_st3_7u", // HA3 table name, currently the same as the application name
          "version": "20190920090800" // time when the data was produced
        }
      ]
    }

After the preceding steps, data is exported to HDFS and Swift in an XML format (specifically, the isearch format). Then, in the offline table on the suez_ops platform, set the data type to zk and configure zk_server and zk_path as required. Build Service then builds full, incremental, and real-time indexes and distributes them to the online HA3 cluster to provide services.
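As an illustration of how a consumer might read the table information shown above, the following Python sketch parses the same fields and separates the full-data location from the incremental one. It assumes that `swift_start_timestamp` is a Unix timestamp in seconds and that `version` uses a `YYYYMMDDhhmmss` layout. The comments are dropped from the sample because standard JSON does not allow them. The `summarize_tables` helper is hypothetical and not part of TisPlus.

```python
import json
from datetime import datetime, timezone

# Same fields as the HA3 table information above, without the comments.
RAW_TABLE_INFO = """
{
  "1649992010": [
    {
      "data": "hdfs://xxx/search4test_st3_7u/full",
      "swift_start_timestamp": "1531271322",
      "swift_topic": "bahamut_ha3_topic_search4test_st3_7u_1",
      "swift_zk": "zfs://xxx/swift/swift_hippo_et2",
      "table_name": "search4test_st3_7u",
      "version": "20190920090800"
    }
  ]
}
"""


def summarize_tables(raw):
    """Return one summary per table entry: where full and incremental data live."""
    summaries = []
    for entries in json.loads(raw).values():
        for entry in entries:
            summaries.append({
                "table": entry["table_name"],
                "full_data_path": entry["data"],
                "incremental_topic": entry["swift_topic"],
                # Assumption: the timestamp is in seconds since the Unix epoch.
                "incremental_start": datetime.fromtimestamp(
                    int(entry["swift_start_timestamp"]), tz=timezone.utc),
                # Assumption: the version encodes the production time.
                "produced_at": datetime.strptime(entry["version"], "%Y%m%d%H%M%S"),
            })
    return summaries


tables = summarize_tables(RAW_TABLE_INFO)
for table in tables:
    print(table["table"], table["full_data_path"], table["incremental_topic"])
```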

The following figure shows the logic for building an offline table on Suez.

Next, the following figure shows the logic for providing online services on Suez.

Finally, the sections below describe the offline service (Build Service) and the online service (HA3).

Build Service (abbreviated BS) is a building system that provides full, incremental, and real-time indexes. Build Service provides five roles:

The roles admin, processor, builder, and merger run on Hippo as binary programs, and the rtBuilder role is provided for online services as a library.

A generationid is generated for each complete full or incremental process. A generation goes through the following steps in order: full, builder full, merge full, process inc, builder inc, and merge inc. After the inc step starts, builder inc and merger inc alternate. Before the HA3 upgrade at 1688.com, a "build too slow" error could occur when faulty nodes were allocated, or the engine could get stuck at builder inc or merger inc.
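The step order described above can be sketched as a small Python generator: a fixed initial sequence followed by an endless alternation of the two incremental steps. The step names follow the text, with "merger" spelled consistently. The `generation_steps` function is only an illustration and is not part of Build Service.

```python
from itertools import cycle, islice

# Initial sequence of a generation, as listed in the text.
INITIAL_STEPS = [
    "full",
    "builder full",
    "merger full",
    "process inc",
    "builder inc",
    "merger inc",
]


def generation_steps():
    """Yield the initial steps once, then alternate the incremental build and merge."""
    yield from INITIAL_STEPS
    yield from cycle(["builder inc", "merger inc"])


# Look at the first ten steps of one generation.
first_ten = list(islice(generation_steps(), 10))
print(first_ten)
```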

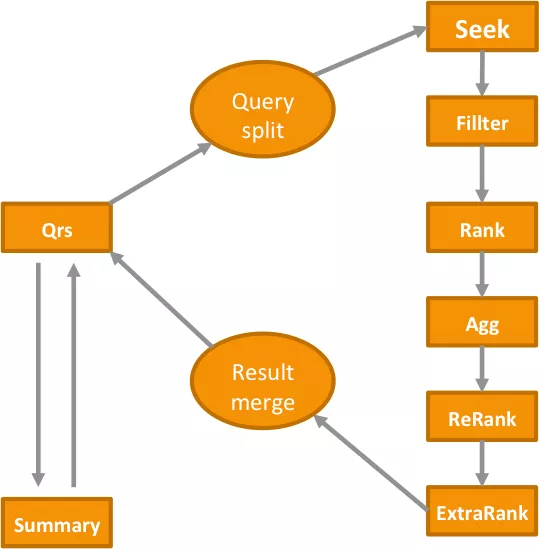

HA3 is a full-text search engine based on the Suez framework. It provides a wide range of online query, filter, sorting, and aggregation clauses, and supports user-developed sorting plug-ins. The following figure shows the service architecture.

The primary search engine in 1688.com consists of QRS, Searcher, and Summary.

Components such as QRS, Searcher, and Summary are mounted to a CM2 (a name discovery server) to provide services. For example, QRS is mounted to an external CM2 and provides services to callers such as SP. Searcher and Summary are mounted to an internal CM2; they receive requests from QRS and provide services such as recall, sorting, and details extraction.

A query service for the caller needs to go through the following steps in order: QRS, query analysis, seek, filter, rank (rough ranking), Agg, ReRank (refined ranking), ExtraRank (final ranking), merger, and Summary (obtaining details). The following figure shows the process.
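The stage order above can be sketched as a toy, single-process pipeline in Python. The sample documents and the scoring rules (sales for rough ranking, sales divided by price for refined ranking) are invented for illustration. The sketch leaves out the merger stage because there is only one partition, and it does not use any HA3 API.

```python
from collections import Counter

# Made-up documents standing in for indexed offers.
DOCS = [
    {"id": 1, "title": "red cotton shirt", "category": "apparel", "price": 12.0, "sales": 300},
    {"id": 2, "title": "blue cotton shirt", "category": "apparel", "price": 30.0, "sales": 900},
    {"id": 3, "title": "cotton towel", "category": "home", "price": 5.0, "sales": 50},
    {"id": 4, "title": "steel kettle", "category": "home", "price": 20.0, "sales": 700},
]


def search(query, max_price, rough_top_n=3, final_top_n=2):
    """Toy pipeline: analysis, seek, filter, rough rank, Agg, refined rank, Summary."""
    # Query analysis: split the query into lowercase terms.
    terms = query.lower().split()
    # Seek: keep documents whose title contains every term.
    hits = [d for d in DOCS if all(t in d["title"].split() for t in terms)]
    # Filter: apply the price condition.
    hits = [d for d in hits if d["price"] <= max_price]
    # Rough ranking: cheap score (sales), keep only the top candidates.
    hits = sorted(hits, key=lambda d: d["sales"], reverse=True)[:rough_top_n]
    # Agg: count candidates per category.
    agg = dict(Counter(d["category"] for d in hits))
    # Refined ranking: a costlier score on the reduced candidate set.
    hits = sorted(hits, key=lambda d: d["sales"] / d["price"], reverse=True)[:final_top_n]
    # Summary: fetch the display details for the final results.
    summaries = [{"id": d["id"], "title": d["title"]} for d in hits]
    return summaries, agg


results, category_counts = search("cotton", max_price=40.0)
print(results, category_counts)
```

Running the cheap score first and the costlier one only on the surviving candidates is the point of splitting ranking into rough and refined stages.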

ReRank and ExtraRank are implemented by the Hobbit plug-in and the Hobbit-based war horse plug-in. The service provider can re-develop the features of the war horse plug-in as required and specify a weight for each feature to obtain the final product score.

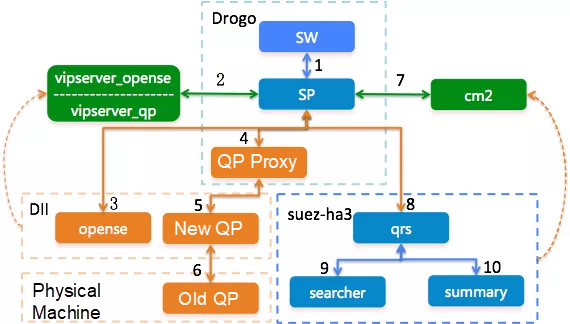
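The idea of assigning a weight to each feature to obtain a final product score can be written as a weighted sum. The sketch below assumes a simple linear combination. The feature names, the weights, and the `final_score` helper are hypothetical and do not reflect the actual Hobbit or war horse plug-in interfaces.

```python
def final_score(features, weights):
    """Weighted sum of feature values; features without a weight contribute 0."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


# Hypothetical weights chosen by the service provider.
weights = {"relevance": 0.5, "sales": 0.25, "seller_rating": 0.25}

# Hypothetical per-product feature values, each normalized to [0, 1].
products = {
    "offer_a": {"relevance": 0.8, "sales": 0.4, "seller_rating": 1.0},
    "offer_b": {"relevance": 0.6, "sales": 1.0, "seller_rating": 0.2},
}

scores = {name: final_score(feats, weights) for name, feats in products.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```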

Drogo is a control platform for services that hold no data, built on the layer-2 scheduling service Carbon. Both the SP and QP services in 1688.com are deployed on this platform.

The following figure shows the deployment of major service platforms on the search link in 1688.com.
