Table Store is a multi-model NoSQL database developed by Alibaba Cloud. It provides petabyte-scale structured data storage, throughput of up to 10 million TPS, and millisecond-level latency. In real-time computing, its strong write capability and multi-model storage allow it to serve not only as a result table for computing output, but also as a source table for real-time computing.

Tunnel Service is an integrated service provided by Table Store for consuming full and incremental data. It offers three types of distributed, real-time data consumption tunnels: Stream (incremental data), BaseData (full data), and BaseAndStream (full data followed by incremental data). In real-time computing, you can create a tunnel for a data table to consume both the historical data and the new data in the table in stream computing mode.

With the powerful write capability of the Table Store engine and the complete streaming consumption capability of Tunnel Service, you can easily integrate data storage and real-time processing.

Blink is a real-time computing platform that Alibaba Cloud built as a deeply improved version of Apache Flink. Like Flink, Blink aims to integrate stream processing and batch processing. Compared with the community version of Flink, Blink includes many stability optimizations, so it is more stable in some scenarios, especially large-scale ones. Another major improvement in Blink is a brand new Flink SQL technology stack. In terms of functionality, Blink supports almost all the syntax and semantics of current standard SQL. In terms of performance, Blink also outperforms the community version of Flink: for SQL batch processing in particular, the current Blink is more than 10 times faster than community Flink, and in scenarios such as TPC-DS it is more than 3 times faster than Spark.

From the perspective of the technical architecture of users, the following can be achieved by combining Table Store and Blink:

This article introduces the best architecture practice of real-time computing based on Table Store and Blink, as well as the data analysis job based on Table Store and Blink.

We take a big data analysis system for situational awareness as an example to illustrate the advantages of a real-time computing architecture based on Table Store and Blink. Suppose our client is the CEO of a large catering enterprise with chain stores all over the country, who wants to know whether customers everywhere receive good service in the stores. For example, do Taiwanese customers and Sichuan customers rate the taste of dishes differently? Have some dishes become less popular? To answer these questions, the CEO needs a big data analysis system that can, on the one hand, monitor dish sales in each region in real time and, on the other hand, regularly analyze historical data to reveal changes in customer trends.

From a technical point of view, the client needs: 1. Real-time processing of customer data, that is, continuous aggregation of new order information for big-screen display and daily reports; 2. Offline analysis of historical data, to support situational awareness and decision-making recommendations.

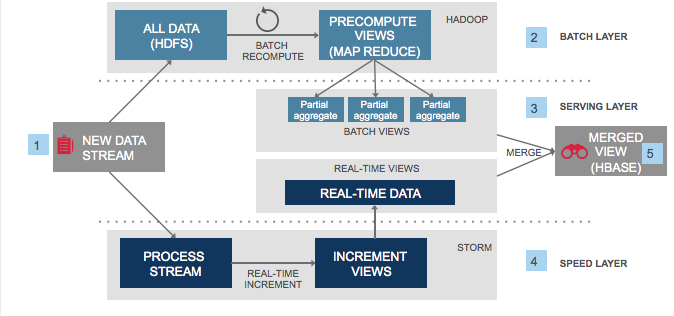

The classic solution is based on the Lambda architecture. As shown in Figure 1, user data must enter both a message queue system (New Data Stream, such as Kafka), which serves as the input source for real-time computing tasks, and a database system (All Data, such as HBase), which supports the batch processing system. Finally, the results of both are written to a database system (MERGED VIEW) and displayed to the user.

Figure 1. Lambda Big Data Architecture

The disadvantage of this system is that it is too large and requires maintaining multiple distributed subsystems. Data must be written to the message queue and also imported into the database, so you must either handle dual-write consistency between the two or maintain a synchronization scheme between them. On the computing side, maintaining two computing engines and developing two sets of data analysis code is technically difficult and labor-intensive.

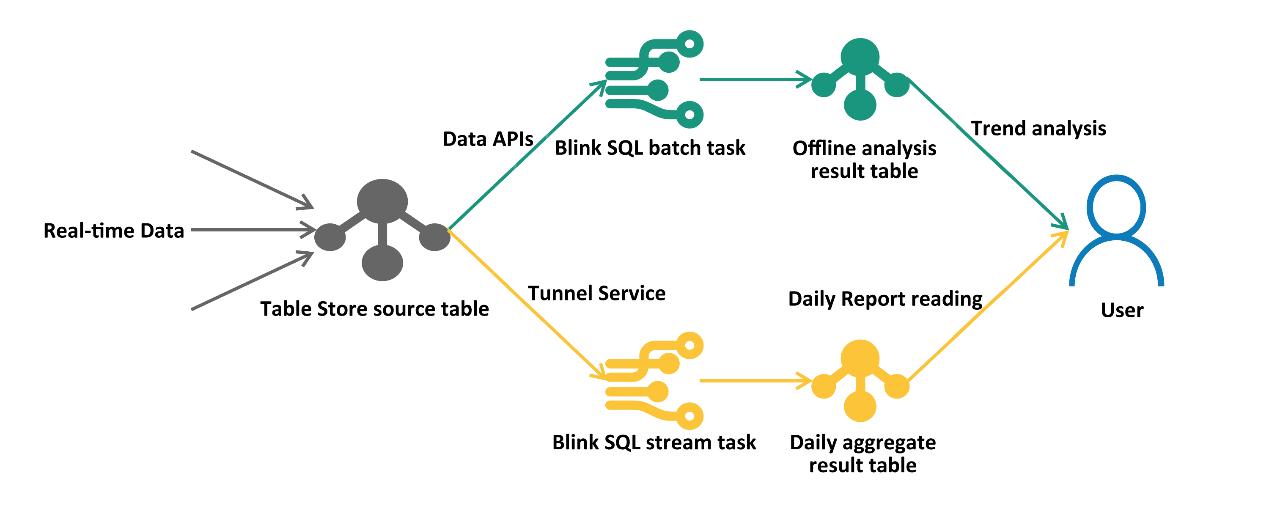

With Table Store's strong write and real-time data consumption capabilities, and Blink's high-performance SQL processing and stream-batch integration, the classic Lambda architecture can be simplified into the real-time computing architecture shown in Figure 2:

Figure 2. Real-time computing architecture based on Table Store and Blink

The number of dependent systems introduced by this architecture is greatly reduced, and both labor and resource costs decline significantly. Its basic processes include:

Having introduced the architecture, we now quickly develop a real-time daily report SQL job based on Table Store and Blink. It uses stream computing to count, on a daily basis, the number of meal orders and the sales amount of meals in each city.

In the Table Store console, create a consumption order table consume_source_table (primary key: id [string]), create a Stream tunnel named blink-demo-stream under Order Table -> Tunnel Management, and create a daily result summary table result_summary_day (primary key: summary_date [string]).

On the Blink development interface, define the consumption order source table and the daily result summary table, then write the aggregation SQL:

--- Consumption order source table

CREATE TABLE source_order (

id VARCHAR,-- Order ID

restaurant_id VARCHAR, -- Restaurant ID

customer_id VARCHAR,-- Customer ID

city VARCHAR,-- City

price VARCHAR,-- Price

pay_day VARCHAR, -- Order Time yyyy-MM-dd

primary key (id)

) WITH (

type='ots',

endPoint ='http://blink-demo.cn-hangzhou.ots-internal.aliyuncs.com',

instanceName = 'blink-demo',

tableName ='consume_source_table',

tunnelName = 'blink-demo-stream'

);

--- Daily result summary table

CREATE TABLE result_summary_day (

summary_date VARCHAR,-- Summary date

total_price BIGINT,-- Total order amount

total_order BIGINT,-- Number of orders

primary key (summary_date)

) WITH (

type= 'ots',

endPoint ='http://blink-demo.cn-hangzhou.ots-internal.aliyuncs.com',

instanceName = 'blink-demo',

tableName ='result_summary_day',

column='summary_date,total_price,total_order'

);

INSERT into result_summary_day

select

pay_day as summary_date, -- Summary date

sum(cast(price as bigint)) as total_price, -- Total order amount

count(id) as total_order -- Number of orders

from source_order

group by pay_day;

Publish the aggregation SQL job and write order data into the Table Store source table. You can then see the daily order count in result_summary_day update continuously, and the big-screen display system can read directly from result_summary_day.
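The daily job above groups by pay_day only, while the stated goal is a report per city. A minimal sketch of a per-city variant follows. The result table name result_summary_city_day and its two-column primary key are hypothetical, and the table would first have to be created in the Table Store console with the same keys. The WITH options simply mirror the ones used for result_summary_day above and have not been checked against the Blink connector documentation.

```sql
-- Hypothetical per-city result table (name and key layout are assumptions).
CREATE TABLE result_summary_city_day (
    summary_date VARCHAR, -- Summary date, yyyy-MM-dd
    city VARCHAR,         -- City
    total_price BIGINT,   -- Total order amount
    total_order BIGINT,   -- Number of orders
    primary key (summary_date, city)
) WITH (
    type = 'ots',
    endPoint = 'http://blink-demo.cn-hangzhou.ots-internal.aliyuncs.com',
    instanceName = 'blink-demo',
    tableName = 'result_summary_city_day',
    column = 'summary_date,city,total_price,total_order'
);

-- Same aggregation as the daily job, with city added to the grouping key.
INSERT INTO result_summary_city_day
SELECT
    pay_day AS summary_date,
    city,
    SUM(CAST(price AS BIGINT)) AS total_price, -- price is declared VARCHAR in source_order
    COUNT(id) AS total_order
FROM source_order
GROUP BY pay_day, city;
```

Because each (summary_date, city) pair maps to one primary key, every new order would overwrite only the row for its own date and city, which is what lets a dashboard read the latest totals directly from the result table.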

Compared with traditional open-source solutions, the big data analysis architecture based on Table Store and Blink has many advantages:

As an introductory piece, this article mainly presents the advantages of the big data architecture built on Table Store and Blink, along with a simple SQL demonstration. More complex, scenario-focused articles will follow. Please check them out!
