This week, the non-thinking mode of Alibaba’s Qwen3-235B-A22B (Qwen3-235B-A22B-Instruct-2507) secured third place on the competitive Chatbot Arena leaderboard just days after its launch. Meanwhile, Alibaba released its upgraded Qwen3-30B series, three streamlined models optimized for cost efficiency and development flexibility, empowering developers worldwide to harness cutting-edge AI without compromising performance.

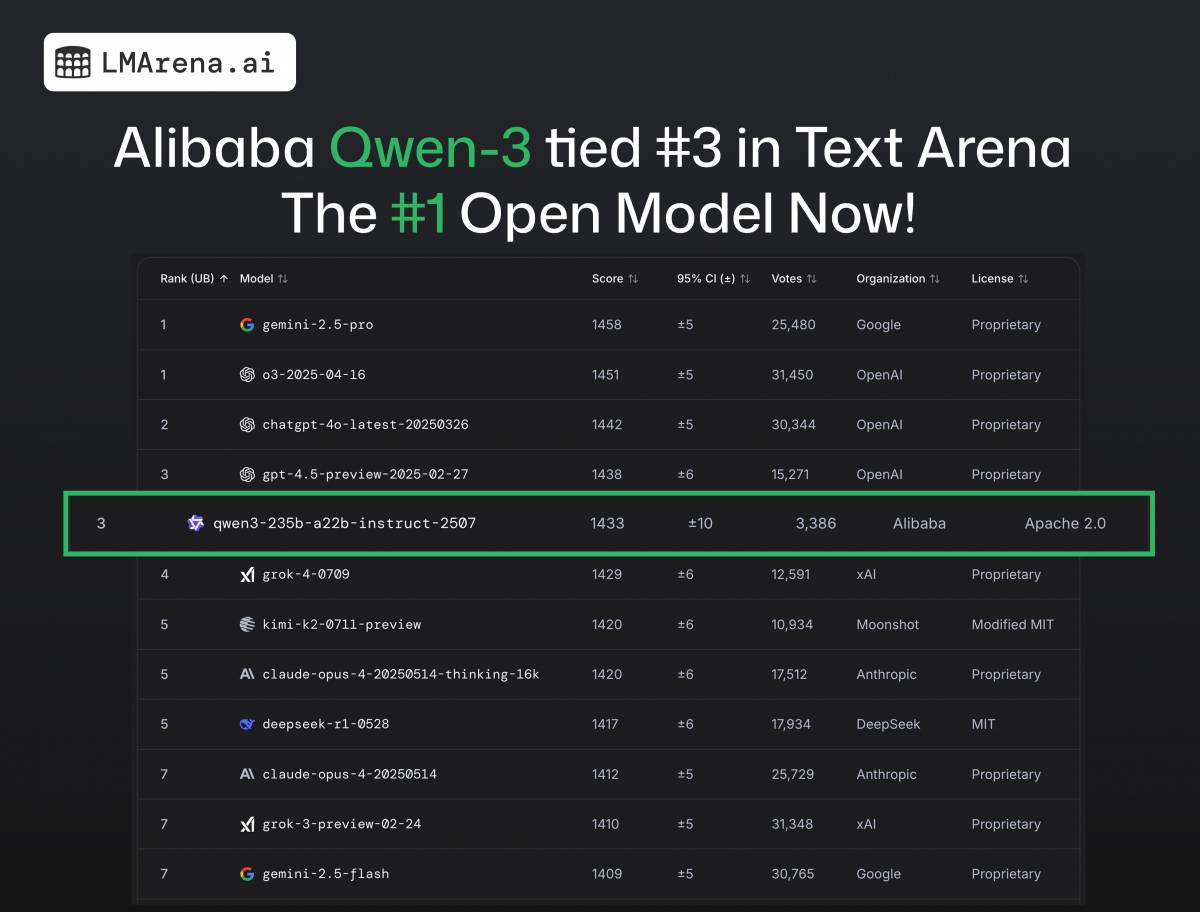

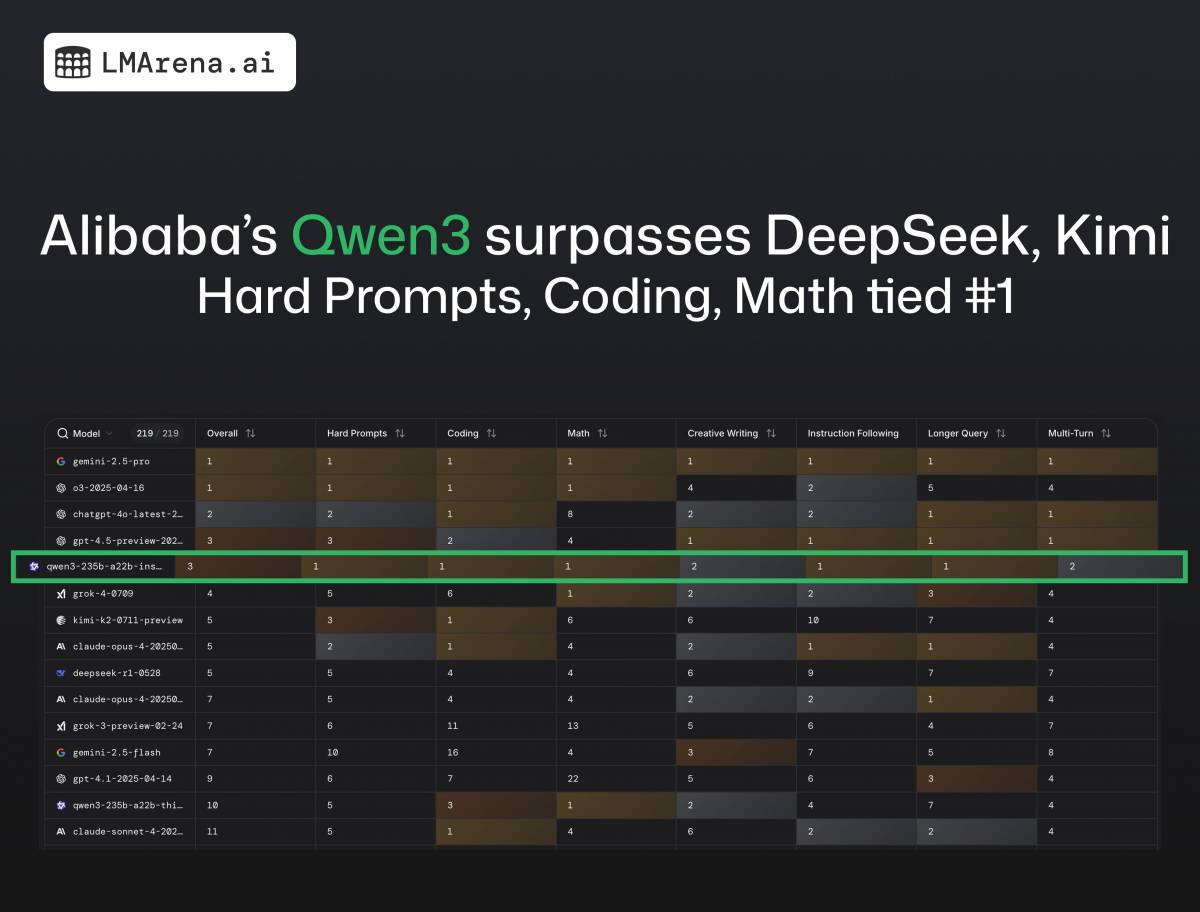

Qwen3-235B-A22B-Instruct-2507, Alibaba’s latest open-source large language model featuring 235 billion parameters and significant improvements in general knowledge, has secured third place on Chatbot Arena, tying with GPT-4.5 and Grok-4. As the top open model on Chatbot Arena, Qwen3 also ties for first place in the Coding, Hard Prompts, Math, Instruction Following and Longer Query categories.

Qwen3 Enters Chatbot Arena Top 3

Chatbot Arena, a widely respected benchmark platform for LLMs, evaluates models through anonymous comparisons in a crowdsourced and randomized format. This achievement further underscores the strong performance and growing competitiveness of Alibaba’s open-source language models in the global AI landscape.

Qwen3 ties for first place in Coding, Hard Prompts, Math, Instruction Following and Longer Query as the top open model

With 256K long-context understanding capabilities, Qwen3-235B-A22B-Instruct-2507 exhibits significant improvements in general capabilities, including instruction following, logical reasoning, text comprehension, mathematics, science, coding and tool usage. It also showcases remarkable alignment with user preferences in subjective and open-ended tasks, enabling more helpful responses and higher-quality text generation.

In addition to open-sourcing top-notch large language models, Alibaba has been releasing upgraded smaller models that are lightweight yet powerful, easier to deploy and more cost-efficient to run for developers around the world. This week, the company unveiled a series of Qwen3 models with 30 billion parameters, including a thinking model (Qwen3-30B-A3B-Thinking-2507), a non-thinking model (Qwen3-30B-A3B-Instruct-2507) and a streamlined coding model (Qwen3-Coder-30B-A3B-Instruct).

This article was written by Crystal Liu and originally published on Alizila.
