By Jiang Xiaowei (Liangzai), Alibaba Cloud Researcher, and Jin Xiaojun (Xianyin), Alibaba Cloud Senior Technical Expert

When we talk about data warehouses, data lakes, and the unified stream and batch processing proposed by the Flink community, what problems do these technologies actually solve? In this article, Alibaba Cloud researchers explain them from a technical perspective.

Starting from real business scenarios, this article analyzes the problems incurred and ultimately answers the following questions: Why do you need a data warehouse or data lake solution? What are the pain points and core problems? How can you design a system to ensure a hitless implementation?

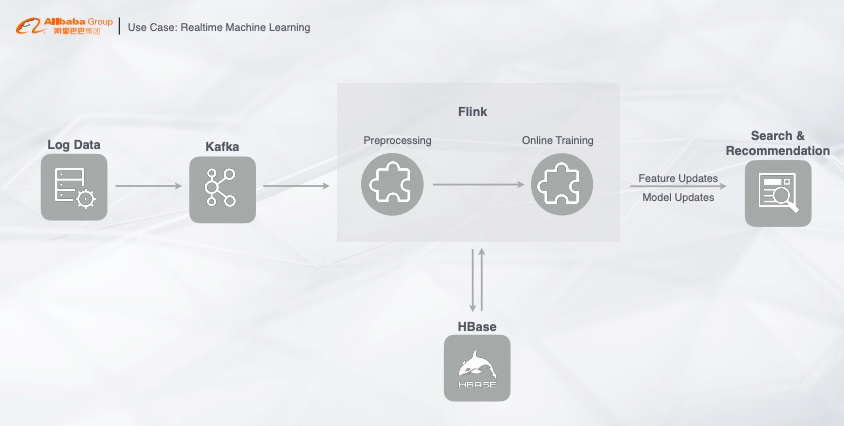

First, let's look at a typical real-time business scenario, which is also the scenario for most real-time computing users. The entire processing path forms a typical stream computing architecture. Specifically, user behavior data or Binlog entries from database synchronization are written to Kafka. Flink jobs then subscribe to Kafka and consume this data in real time. Several tasks happen along the way. For example, in the pre-processing phase of an online training task, the stream is joined with dimension tables to attach attributes. Sometimes these attributes are small enough to be loaded entirely onto the compute nodes, but sometimes they are too large. In that case, HBase is additionally required for high-concurrency point lookups. As another example, some samples are also written into HBase for later interaction. Eventually, the samples or models generated in real time are pushed to the search engine or algorithm modules for further processing. This is a complete, and quite typical, machine learning path.
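The dimension-table step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Flink or HBase code: `SMALL_DIM`, `RemoteStore`, and `enrich` are hypothetical names, and `RemoteStore` is an in-memory stand-in for an external store that serves point lookups when the dimension data is too large to load onto the compute node.

```python
from typing import Callable, Dict, Iterable, Iterator, Optional

# Hypothetical small dimension table: user_id -> attributes.
SMALL_DIM: Dict[str, dict] = {
    "u1": {"city": "Hangzhou"},
    "u2": {"city": "Beijing"},
}


def local_lookup(key: str) -> Optional[dict]:
    """Dimension table small enough to live in the compute node's memory."""
    return SMALL_DIM.get(key)


class RemoteStore:
    """In-memory stand-in for an external key-value store (assumption)."""

    def __init__(self, rows: Dict[str, dict]) -> None:
        self._rows = dict(rows)
        self.lookups = 0  # counts point lookups issued against the store

    def get(self, key: str) -> Optional[dict]:
        self.lookups += 1
        return self._rows.get(key)


def enrich(events: Iterable[dict],
           lookup: Callable[[str], Optional[dict]]) -> Iterator[dict]:
    """Attach dimension attributes to each event as it streams through."""
    for event in events:
        attrs = lookup(event["user_id"]) or {}
        yield {**event, **attrs}
```

The same `enrich` function works with either lookup. What changes is the cost: with the remote store, every event triggers one point lookup, which is why the store must sustain high concurrency.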

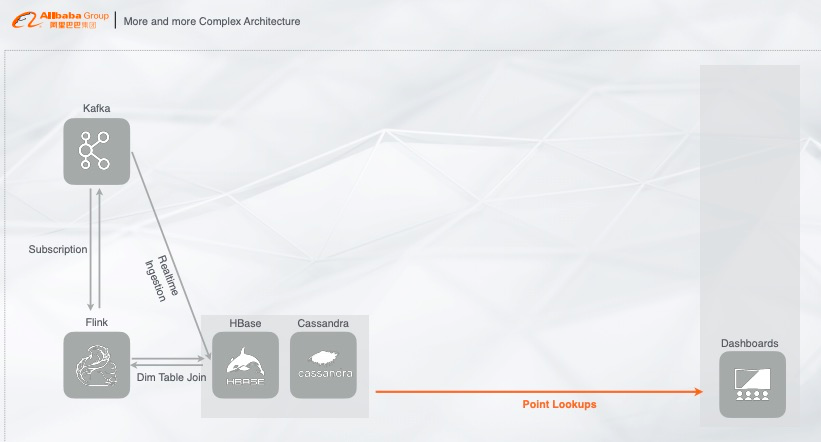

The path above and an offline path complement each other. Although some companies have not yet built the real-time path and use only the offline path, this pair of paths is already a very mature solution. So what problems arise as the online path becomes more complex? To find out, let's change the processing path. It now writes real-time data to Kafka and then performs real-time machine learning or metric computing with Flink. Next, it passes the computing results to an online service, such as HBase or Cassandra, for point lookups. Finally, the results are delivered to online dashboards for metric visualization.

However, this process has a problem: HBase and Cassandra have weak analysis capabilities and are poorly suited to analyzing the data and samples generated online.

Then, what can be done to overcome this?

Some smart developers may use the following methods.

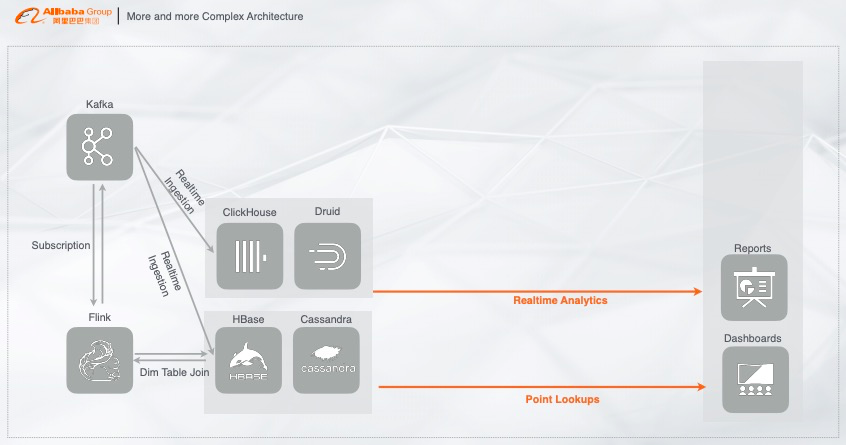

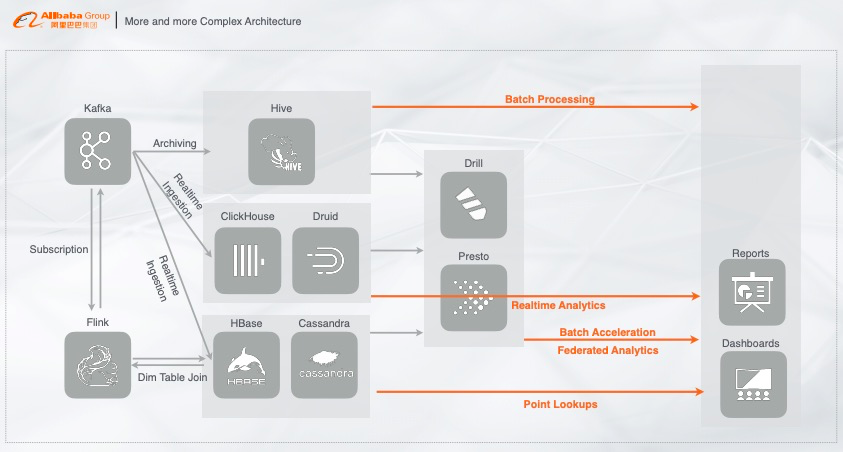

When HBase or Cassandra does not meet the analysis requirements, they redirect real-time data to a system with strong data analysis capabilities, such as ClickHouse or Druid. These systems adopt a typical column-store architecture that can build indexes and use vectorization to accelerate columnar analysis. They then interface with analysis software to present the results as real-time reports, which enables efficient real-time analysis and presentation.

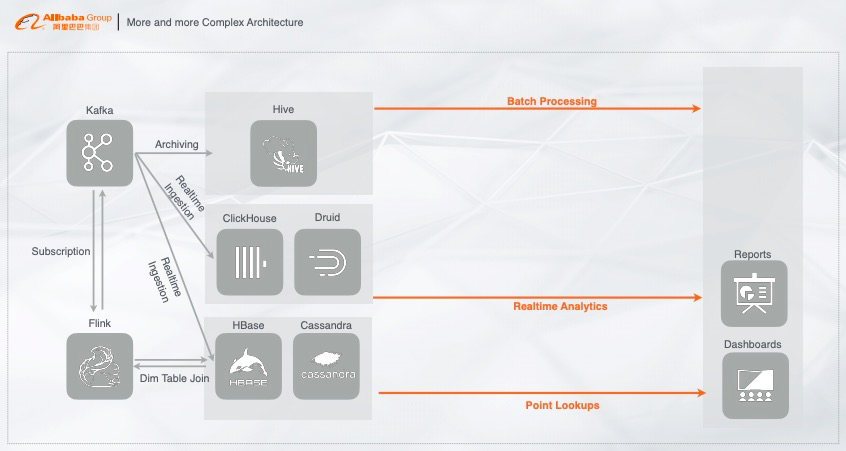

However, the preceding architecture has an additional requirement: archiving the real-time data to an offline system and analyzing the full history there. The most common approach among developers is to archive the real-time data to Hive and run offline algorithms there, such as T+1 computations. By leveraging Hive's offline data processing capabilities, the architecture meets the requirements of offline scenarios.

As the business has real-time data and offline data, what can we do when we need to perform a federated query on them both?

Among the open-source systems on the market, Drill and Presto are the ones developers use most often. Federated queries across multiple data sources are performed through an architecture layer similar to Massively Parallel Processing (MPP). If the query is not efficient enough, Druid and ClickHouse can be used for acceleration.

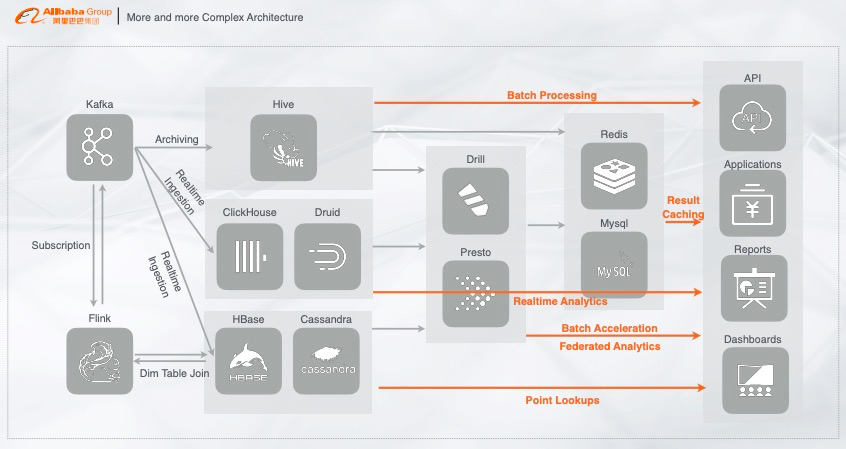

One problem with the preceding federated query path is that it is very difficult to achieve high queries per second (QPS). For example, frontend calls may require thousands of QPS. If all of these queries go through the speed layer, performance will inevitably decline. What can we do in this case? The most common solution is to add a cache at the speed layer to support high-concurrency requests. For this purpose, Redis or MySQL is often added to cache data so that the architecture can provide server-like capabilities and online services.
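The caching idea above can be illustrated with a minimal read-through cache. This is a sketch under assumptions rather than a Redis or MySQL integration: `TTLCache` and `query_fn` are hypothetical names, and the cache lives in process memory only to show how repeated requests are kept away from the slower query layer.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Read-through cache with a fixed time-to-live per entry (illustrative)."""

    def __init__(self, query_fn: Callable[[str], Any],
                 ttl_seconds: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._query_fn = query_fn      # the slow backend query (assumption)
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}
        self.misses = 0                # number of calls that reached the backend

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]            # fresh entry: served from the cache
        self.misses += 1
        value = self._query_fn(key)    # expired or absent: ask the backend
        self._entries[key] = (now + self._ttl, value)
        return value
```

The trade-off is visible in the TTL: a longer TTL absorbs more traffic but serves staler results, and the cache is one more copy of the data to keep consistent.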

Most companies use the big data architecture described above. Many have also extracted the parts of it that fit their business scenarios to build a complete big data architecture that processes both real-time and offline data. So far, this seems to be a perfect solution to practical problems. On closer inspection, however, we can identify potential problems that make the architecture increasingly difficult to maintain. So, what are these problems? Let's take a closer look at them.

This big data solution is essentially a Lambda architecture. In this architecture, raw data from a source, such as user behavior logs and Binlog entries, is passed to two processing paths. One is the real-time path, that is, the speed layer, which writes data to a real-time storage system through stream processing. The other is the offline path, which uses batch processing. The most typical offline path works as follows. First, it archives data to Hive. Then, it speeds up queries through a query layer, such as Spark. Finally, it interfaces with online applications, dashboards, or third-party business intelligence (BI) tools.
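A toy version of the two paths makes the structure concrete. The sketch below is illustrative only: `batch_view`, `SpeedLayer`, and `serve` are hypothetical names, and page-view counting stands in for whatever metric the business computes. The batch layer recomputes over archived events, the speed layer counts events that have not been archived yet, and the serving step merges the two.

```python
from collections import Counter
from typing import Iterable


def batch_view(archived_events: Iterable[dict]) -> Counter:
    """Batch layer: recompute page counts over all archived events."""
    return Counter(event["page"] for event in archived_events)


class SpeedLayer:
    """Speed layer: incrementally count events not yet archived."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def on_event(self, event: dict) -> None:
        self.counts[event["page"]] += 1


def serve(page: str, batch: Counter, speed: SpeedLayer) -> int:
    """Serving step: merge the batch view with the real-time delta."""
    return batch[page] + speed.counts[page]
```

Even in this small form, the counting logic exists twice, once per layer, and the two copies must be kept consistent.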

Let's check, one by one, whether the open-source storage products on the market can meet these business needs.

Hive is built for offline storage. HBase and Cassandra provide point lookups. Greenplum, which is based on an MPP architecture, claims to be oriented toward Hybrid Transaction and Analytical Processing (HTAP). The emerging ClickHouse system is used for fast analysis. All of these storage products are available as solutions.

However, each of these storage products is a data island. To achieve point lookup, a copy of data needs to be stored in HBase. To achieve the rapid analysis of column store, a copy needs to be stored in Druid or ClickHouse. To achieve batch processing, another copy needs to be stored in Hive. As a result, this leads to the following problems:

Data will be stored in multiple systems, increasing redundancy.

Each of these systems adopts a different data format. Therefore, data needs to be converted between them, which increases maintenance costs. Especially when the business grows to a certain scale, the maintenance costs increase dramatically.

Multiple systems need to be fully connected. As different products are developed in different ways, this requires extra efforts for learning a variety of systems, especially for newcomers. As a result, this increases learning costs.

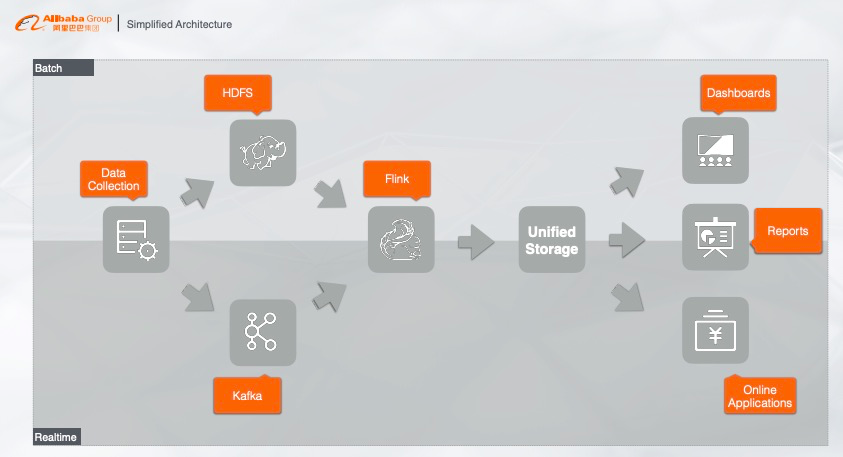

How do we solve the problems mentioned in the previous section in such a highly redundant and complex system? A useful practice is to simplify the Lambda architecture. The essence of the business is to perform real-time or offline (batch) processing on a data source. Starting from the business scenario, we want both real-time and offline data to be stored centrally in a single storage system, and that system must meet the various business needs. This may sound unrealistic and complicated, because the system we want has to support a wide variety of scenarios. But if we could build it, the architecture would be ideal. It would fundamentally solve the computing side of unified stream and batch processing, because the same set of SQL statements could complete both stream processing and batch processing. Furthermore, by following the same principle, we could also solve the storage problem, because both stream data and batch data could be stored in the same product.
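The idea of writing the logic once and running it in both modes can be sketched without any SQL engine. The example below is an assumption-laden illustration, not Flink code: `update`, `run_batch`, and `run_stream` are hypothetical names. A single aggregation definition is applied to a bounded dataset in one pass and to an event stream incrementally, and both end in the same state.

```python
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, int]  # (user, amount)


def update(state: Dict[str, int], event: Event) -> Dict[str, int]:
    """Single definition of the business logic: total amount per user."""
    user, amount = event
    state[user] = state.get(user, 0) + amount
    return state


def run_batch(events: Iterable[Event]) -> Dict[str, int]:
    """Batch mode: consume a bounded dataset and return the final result."""
    state: Dict[str, int] = {}
    for event in events:
        update(state, event)
    return state


def run_stream(events: Iterable[Event]) -> Iterator[Dict[str, int]]:
    """Stream mode: emit an updated snapshot after every event."""
    state: Dict[str, int] = {}
    for event in events:
        update(state, event)
        yield dict(state)
```

Because both modes share `update`, there is only one piece of logic to maintain, which is the property the simplified architecture is after.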

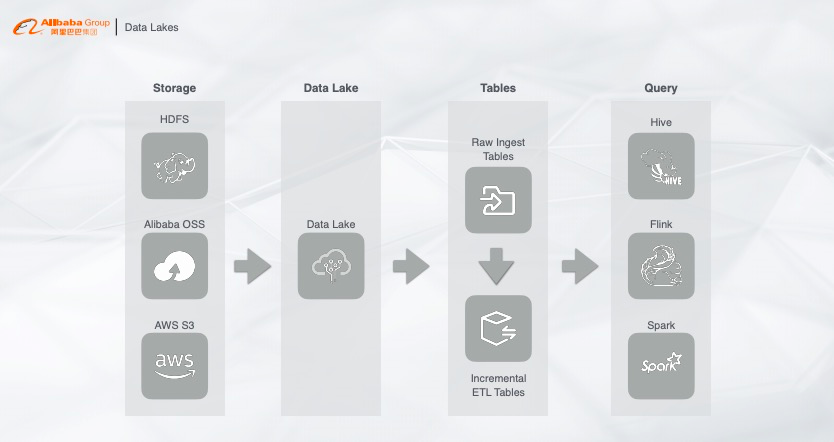

For simplified architectures, let's see some products released by the open source community, such as data lakes.

First, the collected data is stored in a centralized manner. No matter where the data was originally stored, HDFS, OSS, or AWS, it is now saved to the data lake incrementally. Then, a query product is selected for the query layer based on the business requirements, which can be Hive, Spark, or Flink. In this way, both real-time and offline data can be queried directly. The entire architecture looks great, but it has the following problems:

The open-source "real-time" write operation is actually incremental rather than real-time. The difference is that after a real-time write, the written data can be retrieved by a query immediately, whereas after an incremental write it cannot. An incremental write instead writes data in batches to improve throughput. Therefore, this solution cannot fully meet the real-time requirements for the data.

This architecture is expected to perform both real-time analysis and dimension table queries in stream computing. But can the data in the storage support high-concurrency queries through Flink? For example, a workload with hundreds of millions of QPS.

The whole solution is based on an offline computing engine and only supports low concurrency. To support the concurrency of several thousand QPS, a lot of resources are required, which increases costs.

In summary, this solution cannot completely solve the problem at present and can only be used as a preliminary solution.
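The difference between an incremental write and a real-time write noted above comes down to when a written row becomes visible to queries. The following sketch models only that visibility rule. `IncrementalTable` and `RealTimeTable` are hypothetical classes and do not represent the behavior of any particular data lake format.

```python
from typing import Any, List


class IncrementalTable:
    """Buffers writes and publishes them in batches to improve throughput."""

    def __init__(self, batch_size: int) -> None:
        self._batch_size = batch_size
        self._buffer: List[Any] = []     # written but not yet queryable
        self._committed: List[Any] = []  # visible to queries

    def write(self, row: Any) -> None:
        self._buffer.append(row)
        if len(self._buffer) >= self._batch_size:
            self.commit()

    def commit(self) -> None:
        self._committed.extend(self._buffer)
        self._buffer.clear()

    def query(self) -> List[Any]:
        return list(self._committed)


class RealTimeTable:
    """Makes every row queryable as soon as the write returns."""

    def __init__(self) -> None:
        self._rows: List[Any] = []

    def write(self, row: Any) -> None:
        self._rows.append(row)

    def query(self) -> List[Any]:
        return list(self._rows)
```

With a batch size of three, the first two rows written to `IncrementalTable` stay invisible until the third arrives, while `RealTimeTable` returns each row immediately.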

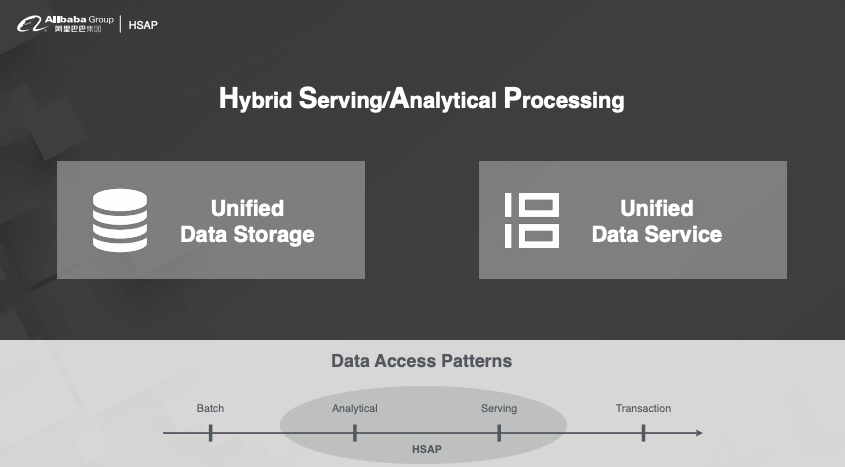

After making a detailed analysis of the preceding problems, according to the requirement of query concurrency or query latency, we have divided computing patterns into the following four categories.

At present, HTAP is popular on the market. After some research, we found that Analytical (A) and Transaction (T) workloads are optimized in different directions, so HTAP is a pseudo-proposition. Supporting T makes the write path very complex, and the QPS cannot meet the requirements. However, if we slightly relax the requirement for T, we find that Analytical and Serving (S) are closely related and can be handled together. So we proposed a new architecture called Hybrid Serving and Analytical Processing (HSAP), which abandons T and adopts S. What we need to do now is store the data used by both Serving and Analytical in one system and process it through one set of analysis engines.
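To make the pairing of Serving and Analytical concrete, the sketch below runs both kinds of query against a single table. It uses Python's built-in `sqlite3` module purely as a stand-in so that the example is self-contained. The `orders` table and the two helper functions are hypothetical, and SQLite says nothing about how an HSAP system achieves its concurrency or latency.

```python
import sqlite3

# One copy of the data, held in a single store (in-memory SQLite stand-in).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, user_id TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "u1", 10.0), (2, "u2", 5.0), (3, "u1", 7.5)],
)


def serve_order(db: sqlite3.Connection, order_id: int):
    """Serving: a point lookup by primary key, as an online service would issue."""
    return db.execute(
        "SELECT user_id, amount FROM orders WHERE order_id = ?", (order_id,)
    ).fetchone()


def analyze_totals(db: sqlite3.Connection):
    """Analytical: an aggregation over the whole table, as a report would issue."""
    return db.execute(
        "SELECT user_id, SUM(amount) FROM orders GROUP BY user_id ORDER BY user_id"
    ).fetchall()
```

Both access patterns read the same rows, so there is no second copy to synchronize and no format conversion between a serving store and an analysis store.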

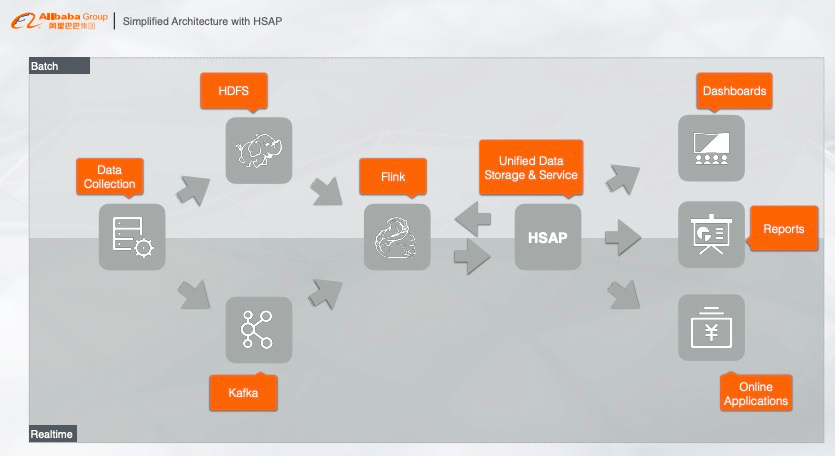

After interfacing the HSAP system with the preceding simplified architecture, a perfect big data architecture is born. The HSAP system performs batch processing for offline data, by joining dimension tables with Flink. Then, it connects to online applications to provide online services, such as reports and dashboards.

Then, how do we use online applications and systems after the introduction of the HSAP system? An ecosystem is required to reduce the difficulty of use. After repeated research, we identified the PostgreSQL ecosystem, as it has the following advantages:

PostgreSQL has a complete set of tools, which provide strong support both in development tools and BI analysis tools.

Writing documentation generally takes a lot of time. PostgreSQL has detailed documentation, which can be reused directly to reduce the workload. At the same time, developers only need to follow the PostgreSQL documentation during development, which reduces learning costs.

PostgreSQL supports a wide range of extensions, such as PostGIS, which stores geographical information in the database, and Matlab. Developers can choose appropriate extensions based on their business needs.

This brings us to today's key topic: the new-generation real-time interactive engine released on Alibaba Cloud. This engine is called Hologres. Why is it called Hologres? The name combines "Holographic" and "Postgres".

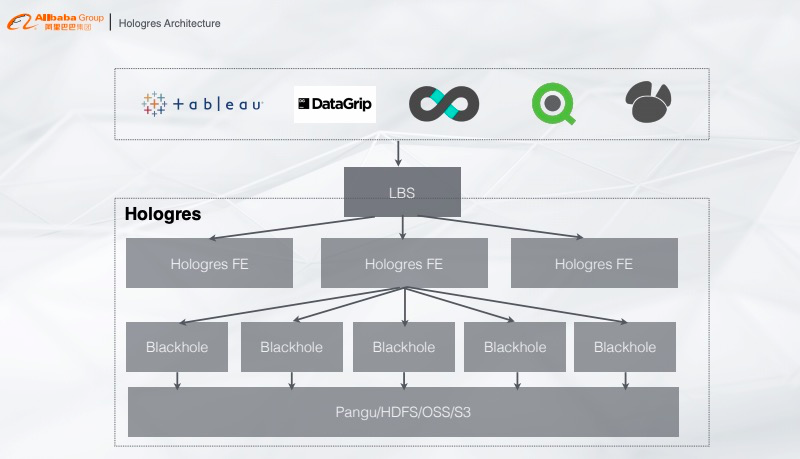

The architecture of Hologres is simple. From the bottom up, the bottom layer is a centralized storage system, which can be the Apsara distributed file system of Alibaba Cloud, HDFS, OSS, or S3. On top of the storage layer is a computing layer, which provides computing services in an MPP-based architecture. On top of the computing layer, the FE layer distributes the execution plan for each query to all computing nodes. At the top, the PostgreSQL ecosystem is connected. Once a Java Database Connectivity (JDBC) or Open Database Connectivity (ODBC) driver is in place, you can query Hologres.

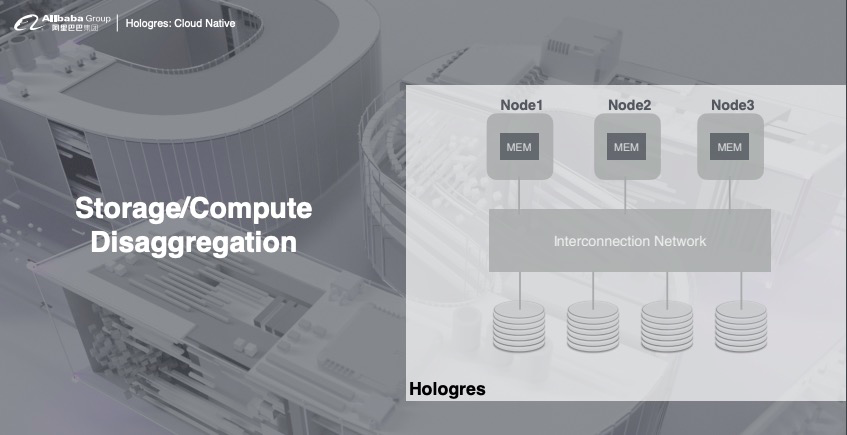

Hologres is designed for the disaggregation of storage and computing. Computing is completely deployed on Kubernetes, whereas the storage can be a shared storage, HDFS, or OSS in the cloud based on business requirements. In this way, you can elastically scale resources based on your business needs, and effectively solve concurrency problems caused by insufficient resources.

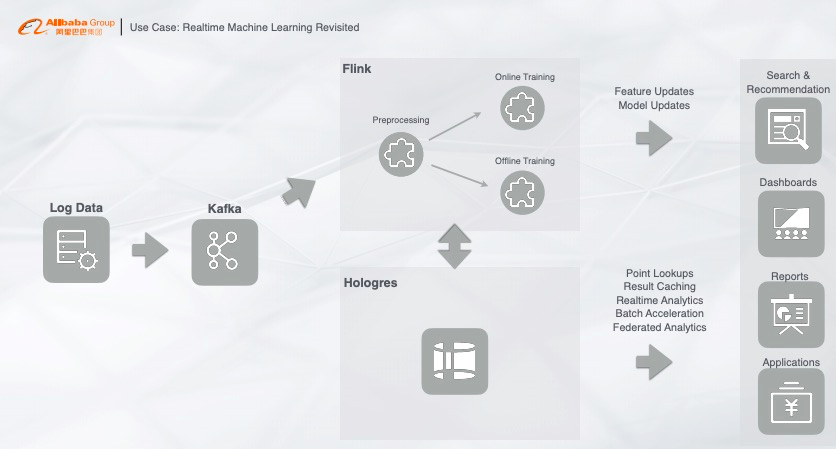

The following describes a typical application of Hologres at Alibaba. Data is written to Flink and pre-processed there in real time, for example through real-time ETL or real-time training, and the results are written directly to Hologres. Hologres supports join queries on dimension tables, result caching, complex real-time interactions, offline queries, and federated queries, so the entire business system can perform its data operations through Hologres alone. The online system accesses data in Hologres through the PostgreSQL ecosystem. This eliminates the need to integrate with other systems and resolves the query and storage problems of the architecture described earlier.

Ultimately, to achieve a true real-time data warehouse without compromises, we need Flink and Hologres only. Flink is used for extract, transform, and load (ETL) processing of the stream and batch data. Then, Flink writes the processed data to Hologres for centralized storage and query. In this way, the business end can provide online services by directly connecting to Hologres.
