By DataWorks Team

During the 2020 Double 11 shopping festival, cloud-native real-time data warehouses were deployed in Alibaba's core data scenarios for the first time. This helped the team make business decisions in real time and process massive amounts of data with millisecond-level latency. The data development efficiency of the search and recommendation business quadrupled, and package data processing for Cainiao's logistics was cut from an hour to three minutes. Kaola workloads that previously took minutes or hours were completed in just one minute. Real-time analysis and decision-making on big data has therefore become a standard capability in rapidly changing market competition. In this article, we share Alibaba Cloud's real-time big data solutions to help enterprises make real-time decisions.

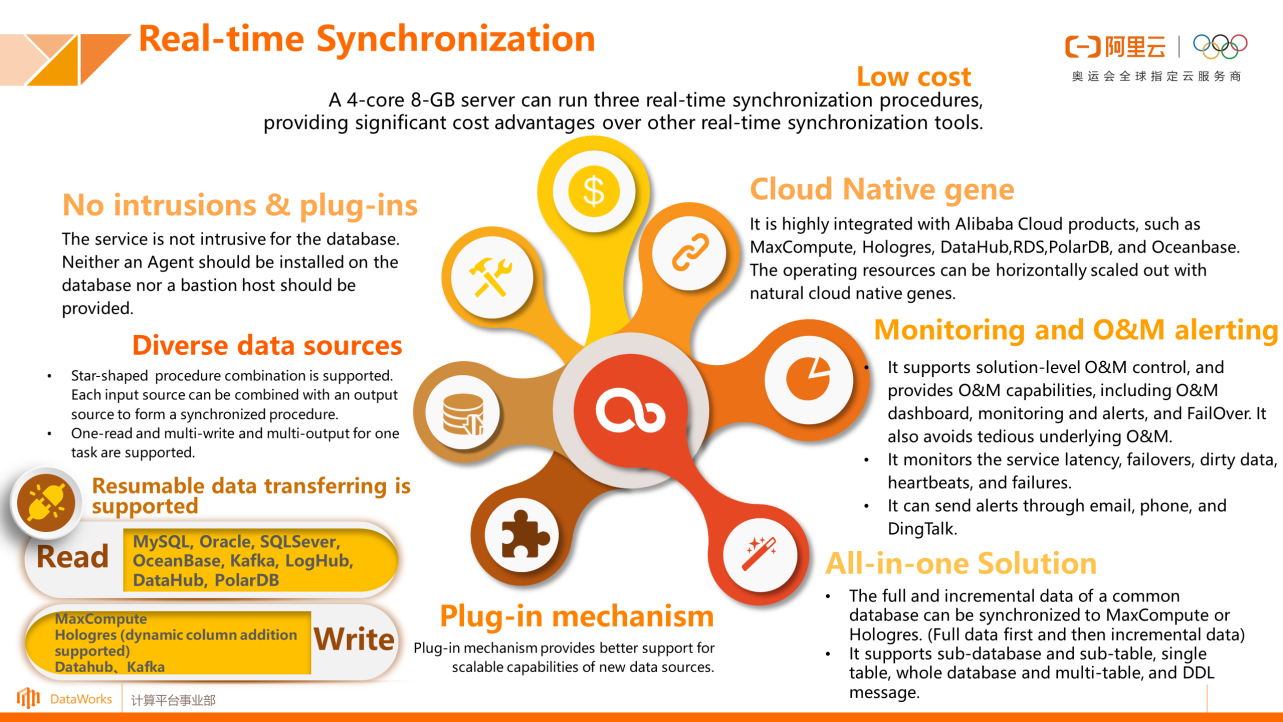

The first step of real-time analysis and decision-making is to synchronize data to the big data computing engine in real time. Alibaba Cloud DataWorks lets you achieve this by adopting an exclusive high-performance engine for data integration. With the same machine specifications, the real-time synchronization performance of RDS is up to twice that of other data synchronization solutions, and the price is 75% lower than that of others. By using DataWorks, enterprises can implement efficient and reliable real-time data synchronization with low costs.

DataWorks can be traced back to DataX 1.0 and 2.0, released in 2011. DataX 3.0 was later made publicly available. After that, the Data Integration service was established, unifying the features offered on the public cloud, on private clouds, and inside Alibaba. In 2019, DataWorks was commercialized and the exclusive resource group was released, with both pay-as-you-go and subscription billing available to users. In 2020, the real-time synchronization solution for full and incremental data was officially released.

In the real-time synchronization solution, data from MySQL, Oracle, IBM DB2, SQL Server, PolarDB, and other relational databases can first be fully synchronized offline to big data products such as MaxCompute, Hologres, Elasticsearch, Kafka, and DataHub. The change information of the relational databases can then be extracted in real time and synchronized to the same big data products. For offline data warehouses like MaxCompute, the changes are synchronized to Log tables, split into Delta tables, and merged into Base tables before the result is written to MaxCompute. In this way, real-time incremental data synchronization is achieved.
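The Log, Delta, and Base flow described above can be illustrated with a minimal sketch. It assumes that every change record carries a sequence number, an operation type, and a primary key; these field names (`seq`, `op`, `pk`, `row`) and both helper functions are hypothetical and are not DataWorks APIs.

```python
from typing import Dict, List

# A change record as it might land in a Log table (field names are assumptions).
Change = Dict[str, object]


def split_to_delta(log: List[Change]) -> Dict[object, Change]:
    """Collapse the log so that only the latest change per primary key remains."""
    delta: Dict[object, Change] = {}
    for change in sorted(log, key=lambda c: c["seq"]):
        delta[change["pk"]] = change
    return delta


def merge_into_base(base: Dict[object, dict], delta: Dict[object, Change]) -> Dict[object, dict]:
    """Apply the latest change per key to a copy of the Base table."""
    merged = dict(base)
    for pk, change in delta.items():
        if change["op"] == "delete":
            merged.pop(pk, None)
        else:  # "insert" and "update" both overwrite the row
            merged[pk] = change["row"]
    return merged


# Base table produced by the initial full synchronization.
base = {1: {"name": "alice"}, 2: {"name": "bob"}}
# Incremental changes captured afterwards.
log = [
    {"seq": 1, "op": "update", "pk": 1, "row": {"name": "alice-v2"}},
    {"seq": 2, "op": "insert", "pk": 3, "row": {"name": "carol"}},
    {"seq": 3, "op": "delete", "pk": 2, "row": None},
    {"seq": 4, "op": "update", "pk": 3, "row": {"name": "carol-v2"}},
]
new_base = merge_into_base(base, split_to_delta(log))
print(new_base)
```

Collapsing the log to one change per key before merging keeps the merge cost proportional to the number of changed keys rather than the number of change events.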

DataWorks can extract data from relational databases (MySQL, Oracle, PolarDB, and so on) through real-time database monitoring extraction. DataWorks can aggregate real-time message flow data through message subscription. The aggregated data can then be used for data processing, including data filtering, string replacement, and Groovy functions that will be supported in the future. This is also a relatively standard ETL process. The processed data can be output to multiple data sources.
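As a rough illustration of the filtering and string replacement steps, the sketch below chains two transformations over a stream of records. The step builders and the sample fields (`status`, `city`) are made up for this example and do not reflect the DataWorks configuration format.

```python
from typing import Callable, Iterable, Iterator, List

Record = dict
Step = Callable[[Iterable[Record]], Iterator[Record]]


def filter_step(predicate: Callable[[Record], bool]) -> Step:
    """Build a step that keeps only the records matching the predicate."""
    def step(records: Iterable[Record]) -> Iterator[Record]:
        return (r for r in records if predicate(r))
    return step


def replace_step(field: str, old: str, new: str) -> Step:
    """Build a step that replaces a substring in one string field."""
    def step(records: Iterable[Record]) -> Iterator[Record]:
        for r in records:
            out = dict(r)  # copy so the input record is not mutated
            if isinstance(out.get(field), str):
                out[field] = out[field].replace(old, new)
            yield out
    return step


def run_pipeline(records: Iterable[Record], steps: List[Step]) -> List[Record]:
    """Feed the records through each step in order."""
    stream: Iterable[Record] = iter(records)
    for step in steps:
        stream = step(stream)
    return list(stream)


events = [
    {"id": 1, "status": "PAID", "city": "Hang Zhou"},
    {"id": 2, "status": "CANCELLED", "city": "Shang Hai"},
    {"id": 3, "status": "PAID", "city": "Bei Jing"},
]
cleaned = run_pipeline(
    events,
    [filter_step(lambda r: r["status"] == "PAID"), replace_step("city", " ", "")],
)
print(cleaned)
```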

Combined with a real-time O&M monitoring and alerting system, this forms a full and incremental data solution for the entire database and a complete process for real-time synchronization. The process covers full database synchronization, real-time incremental database synchronization, and then automatic merging of incremental data on the big data side. In addition, the real-time synchronization architecture is highly available.

DataWorks provides backups at both the control layer and the execution layer. If the scheduling or data transmission procedure is disconnected, the task can be switched over to a backup procedure to keep task execution stable.

The real-time synchronization technology of data integration comes with a raw data collection mechanism. In the entire ETL procedure, the data unsupported on both the read and the write side can be collected and output to user-configured target ends through the plug-in center. In the target ends, including local logs, Loghub, and MaxCompute, data can be reprocessed.

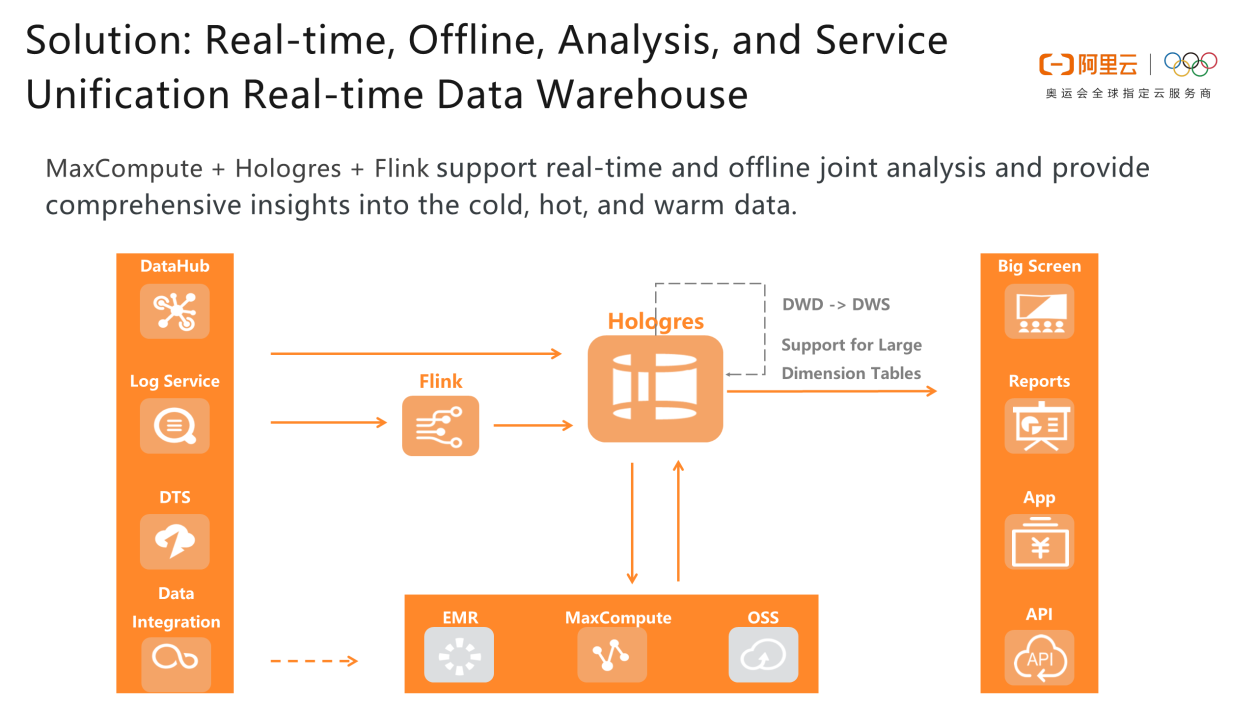

In the big data cloud migration solution, integrated offline and real-time data is processed by offline engines (EMR and MaxCompute) and real-time engines (Hologres and Flink). DataWorks then uses the data for data development and data services, including model development on the machine learning platform PAI. Finally, the data is made available to data applications such as QuickBI, DataV, and Tableau.

Based on the cloud migration solution, a variety of scenario-based solutions were established, including the smart real-time data warehouse solution, real-time monitoring big screen solution, and data lake solution. The typical smart real-time data warehouse solution is suitable for large-scale real-time data querying in the Internet industry, such as e-commerce, gaming, and social networking.

The first step: DataWorks (batch + real time) and DataHub (real-time) are used for unified data collection.

The second step: Full-procedure data development is completed based on DataWorks, including data integration, data development and ETL, conversion, KPI computation, as well as scheduling, monitoring, and alerting of data jobs. DataWorks supports security control of the data development procedure and provides the unified data service API based on its data service module.

The third step: Flink is used to perform real-time ETL (optional) on real-time data as needed. The result is imported to Hologres to build real-time data warehouses and application marts. Hologres also provides real-time interactive query and analysis on massive data. In addition, Hologres provides real-time and offline query federation.

The fourth step: Alibaba Quick BI or third-party data analysis tools such as Tableau are used to implement data visualization and to build portal applications of data services in each business unit.

Based on this solution, the full procedures of Alibaba Cloud real-time data warehouses are seamlessly integrated with offline data warehouses. This solution can provide a cost-effective combination of one storage system and two kinds of compute (real-time compute and offline compute).

After data is synchronized through DataWorks, real-time data warehouses are needed to make full use of the data. In this section, we will give a detailed introduction to the real-time data analysis solution based on Hologres and Flink.

Hologres proposes the concept of "service-analysis unification" in real-time data warehouse. Under such concept, the big data engine supports both real-time OLAP insights and analysis as well as KV-style high-QPS point query services. It integrates real-time analysis with services, greatly simplifies the real-time data warehouse architecture, and facilitates real-time analysis and decision-making.

The rapid development of digital transformation has led to explosive growth in data volumes. Requirements on data computing keep rising: lower latency, lower resource consumption, higher efficiency, and higher accuracy. Quickly summarizing and analyzing massive historical data together with daily real-time incremental data to explore business value has become a basic requirement for businesses.

Many companies have introduced batch processing and real-time computing to address this. However, an offline batch data warehouse cannot coordinate with real-time analysis, so it cannot meet the timeliness requirements of the business. Absolute real-time data warehouses are not practical either, because only "near real-time" makes sense. Both real-time and near real-time analysis depend on building real-time data warehouses.

Lambda is the most widely used architecture in the construction of real-time data warehouses. It partly solves the business problems of most enterprises in the initial stage of digital construction. However, with the rapid development of business, the surge of data volumes, and the changing business needs, there have been more and more problems in Lambda architecture, mainly in the following aspects:

1) Data is stored in multiple copies in different systems, resulting in space waste and data consistency problems that are difficult to solve.

2) The entire data procedure is composed of multiple engines and systems, with high development, maintenance, and learning costs.

3) In terms of use, different processing languages and interfaces are used in different processes, such as offline processing, real-time processing, and unified data service layer. It is not user-friendly.

4) The learning cost is very high, which increases the application cost.

Therefore, architecture simplification, cost optimization, data unification, low threshold for learning, business flexibility, and self-service analysis are all in urgent need. Enterprises expect a brand-new big data product that supports real-time data writing, real-time computing, and real-time insights. It should also support real-time and offline unification to reduce data movements. In addition, self-service analysis should be supported in business technology decoupling to simplify the overall business system architecture.

In this context, Hologres introduced HSAP, which stands for Hybrid Serving and Analytical Processing. It supports real-time writing and querying in high-QPS point query scenarios and also completes complex multi-dimensional analysis, all in a single system. HSAP can be regarded as the combination of a data warehouse and an online data service. Enterprises need unified storage of real-time and offline data, efficient query services, high-QPS queries, complex analysis, and federated query and analysis. Front-end applications need to connect directly for instant analysis and unified data services, with less data movement. Hologres is affiliated with MaxCompute, a big data product developed by Alibaba. Based on HSAP, Hologres supports high-concurrency, low-latency analysis and processing of PB-level data, as well as scenarios such as real-time data warehouses and interactive big data analytics. It features analysis-service unification, a real-time design, and storage-computing separation, and it is compatible with the PostgreSQL ecosystem.

Hologres is now deeply integrated with Flink in full-procedure real-time data warehouse construction, and supports sink tables, source tables, and dimension tables in Flink. In terms of business, real-time ETL cleansing and conversion can be performed based on Flink. Detailed data, light summary data, and business summary data can be stored in Hologres. Then, these data can be queried in Hologres in real time and exported to a third-party analysis tool for real-time analysis.

MaxCompute and Hologres can be used to build second-level interactive data analytics. Hologres can be used by MaxCompute data warehouses to accelerate queries without data movement. The MaxCompute data warehouses can also connect to BI analysis tools, realizing real-time analysis of offline data. Quick import of MaxCompute data to Hologres is also supported for indexing and providing query services with higher QPS and faster query response.

Hologres, Flink, and MaxCompute can implement a "unified solution of real-time, offline, analysis, and service". Cold data is stored in MaxCompute, while hot data is stored in Hologres.

Meanwhile, through deep integration with Proxima, the vector engine library of Alibaba DAMO Academy, Hologres can be applied to real-time recommendation scenarios for feature queries, real-time metric computing, and vector retrieval and recall, providing high-performance vector search services. Combined with Flink and PAI, it can be used for real-time personalized recommendation and for image, video, and face retrieval, improving advertisement retention.
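To make "vector retrieval and recall" concrete, here is a minimal brute-force sketch that ranks items by cosine similarity to a query vector. It is not the Proxima or Hologres API; the function names and sample vectors are invented for illustration, and a production vector engine would typically rely on an index rather than a full scan.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], items: Dict[str, Sequence[float]], k: int) -> List[Tuple[str, float]]:
    """Return the k items most similar to the query, best match first."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in items.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical two-dimensional item embeddings.
item_vectors = {
    "shoes": [1.0, 0.0],
    "sandals": [0.9, 0.1],
    "laptop": [0.0, 1.0],
}
recalled = top_k([1.0, 0.05], item_vectors, k=2)
print([item_id for item_id, _ in recalled])
```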

Currently, Hologres has been used by multiple customers in many scenarios for big data analysis and decision-making.

1) Xiaohongshu previously built a large-scale ClickHouse cluster. After a period of operation, its disadvantages became apparent: high cost, slow queries, instability, and complicated cluster O&M. Xiaohongshu now uses Hologres for an architecture with separated storage and computing. It can easily store 15 days of data and quickly query 7 or even 15 days of data, greatly improving query performance. It also offers primary key deduplication (insert or ignore), so upstream failover has no impact on the data, along with zero O&M and very high customer satisfaction.

2) The Cainiao intelligent logistics engine originally used a Flink + HBase + OLAP solution. Problems in this architecture, such as long data import times, wasted resources, and data islands, seriously troubled business staff. With Hologres, end-to-end processing of 200 million records across the entire procedure was reduced to 3 minutes. Development efficiency was greatly improved, and the overall hardware cost was reduced by 60%.

3) The Alibaba customer experience department (CCO) originally used a DataHub + Flink + OLAP + Lindorm data warehouse solution. This solution had the following problems: repeated task construction, redundant data storage, difficult metadata management, and complex processing procedures. During this year's Double 11, Hologres helped CCO build a real-time, self-service, and systematic data warehouse for user experience. It supported the Double 11 scenarios and thousands of service big screens. The peak traffic was cut by 30%, and the overall cost was reduced by nearly 30%. The peak TPS of real-time writes from Flink exceeded 1 million per second, and the write latency was within 500 μs. The average query latency during Double 11 was 142 ms, and 99.99% of queries were completed within 200 ms.
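The primary key deduplication (insert or ignore) mentioned in the Xiaohongshu case can be sketched as follows. The `KeyedTable` class is a hypothetical in-memory stand-in, not a Hologres interface; it only shows why replaying a batch after an upstream failover leaves the stored data unchanged.

```python
from typing import Dict, Hashable


class KeyedTable:
    """Toy table keyed by primary key, with insert-or-ignore semantics."""

    def __init__(self) -> None:
        self._rows: Dict[Hashable, dict] = {}

    def insert_or_ignore(self, pk: Hashable, row: dict) -> bool:
        """Insert the row unless the key already exists; report whether it was written."""
        if pk in self._rows:
            return False  # duplicate key: keep the existing row
        self._rows[pk] = row
        return True

    def __len__(self) -> int:
        return len(self._rows)


table = KeyedTable()
batch = [("order-1", {"amount": 10}), ("order-2", {"amount": 25})]

# First delivery of the batch.
first = [table.insert_or_ignore(pk, row) for pk, row in batch]
# The upstream job fails over and replays the same batch.
replayed = [table.insert_or_ignore(pk, row) for pk, row in batch]

print(first, replayed, len(table))
```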

In addition to real-time data stored in big data engines, there are also a lot of unstructured log data. Alibaba Cloud Elasticsearch provides low-cost hot and cold data storage solutions in a fully hosted manner. This helps enterprises build a unified cloud monitoring and O&M platform for the real-time monitoring and analysis of large amounts of data. Thus, the efficiency of automated O&M can be improved.

Big data IT O&M for enterprises has developed from simple O&M tools to O&M platforms, then to automated O&M and fault prevention O&M. Now it is evolving towards intelligent O&M. However, there are still some problems in the existing big data O&M analysis methods, such as lots of atomic tools, high adoption cost, and tool coordination. Monitoring, logging, and tracing are not interdependent enough to give full play to their values, and the real business benefits depend on users' architectural capabilities.

In the full-observation scenario, the O&M and monitoring pain points are similar, for example, different methods for obtaining logs and metrics. Pain points also include high obtaining cost, difficulty in logs and metrics formatting, O&M scaling, peak stability, and the high cost of long-term storage for massive data. Difficult analysis of system temporal exceptions, search performance bottlenecks of log analysis tools, and high requirements for extensibility are also some of the common issues faced by enterprises.

Elasticsearch addresses these problems. The open-source version of Elasticsearch is a Lucene-based distributed real-time search and analysis engine released under Apache open-source terms. It provides distributed storage, query, and analysis of large datasets in near real time. It is often used for complex queries because it is fast and simple to use.

Elasticsearch is built on the Elastic Stack open-source ecosystem matrix. The matrix includes Beats (lightweight data collection tool), Logstash (data collection, filtering, and transmission tool), Elasticsearch, and Kibana (flexible visualization tool).

Elastic Stack basically solves six pain points in the full-observation scenario:

1) Beats obtains logs and metrics, and provides Beats Agents that support Autodiscover for unified collection of all types of data.

2) Elastic Stack provides various methods for formatting logs and metrics, including open-source software and log/metric collection templates in network formats. It does not require formatting and has extended components for real-time data processing. It also provides various UDFs and plug-ins.

3) High stability: Based on the distributed architecture, the basic cluster throughput and performance, cross-data center deployment, geo-redundancy, and scenario-based kernel optimization are guaranteed.

4) Low cost: Elastic Stack provides a four-layer data storage mode for hot, warm, cold, and frozen data. By using special storage compression, the storage cost is significantly reduced.

5) Elastic Stack provides all-in-one services for log analysis, monitoring, and tracing. It optimizes engines for time sequence scenarios and ensures the performance of time sequence log monitoring and analysis.

6) Extensibility: Elastic Stack is based on distributed architecture and flexible and open RestAPI and Plugin architecture. The open source community also provides a wide range of methods for connecting the new technology stacks.
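The four-layer storage mode in point 4 can be pictured as a simple age-based placement rule. The sketch below is only an illustration: the thresholds of 7, 30, and 90 days and the `tier_for` helper are assumptions chosen for the example, not Elastic Stack defaults or APIs.

```python
from datetime import date

# Hypothetical upper age limits in days for each tier; anything older is frozen.
TIER_LIMITS = [(7, "hot"), (30, "warm"), (90, "cold")]


def tier_for(index_date: date, today: date) -> str:
    """Pick a storage tier from the age of a daily index."""
    age_days = (today - index_date).days
    for limit, tier in TIER_LIMITS:
        if age_days < limit:
            return tier
    return "frozen"


today = date(2021, 1, 31)
daily_indices = [date(2021, 1, 30), date(2021, 1, 10), date(2020, 12, 1), date(2020, 6, 1)]
tiers = {d.isoformat(): tier_for(d, today) for d in daily_indices}
print(tiers)
```

Recent indices stay on the fastest storage because they receive most queries, while older indices move to progressively cheaper storage.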

ELK, built on this open-source ecosystem, can analyze logs, metrics, APM data, and business data on one platform. It can provide unified visual views, aligned time ranges and filtering conditions, unified rule-based monitoring and alerting, and unified machine-learning-based intelligent monitoring and alerting. It can connect to open-source processing tools, such as Spark and Flink, to further unify data formats. The data is then stored in Elasticsearch and served to Kibana for visual monitoring and alerting. Correlation analysis and machine learning make full use of the data scattered across all layers of the system, maximizing its value.

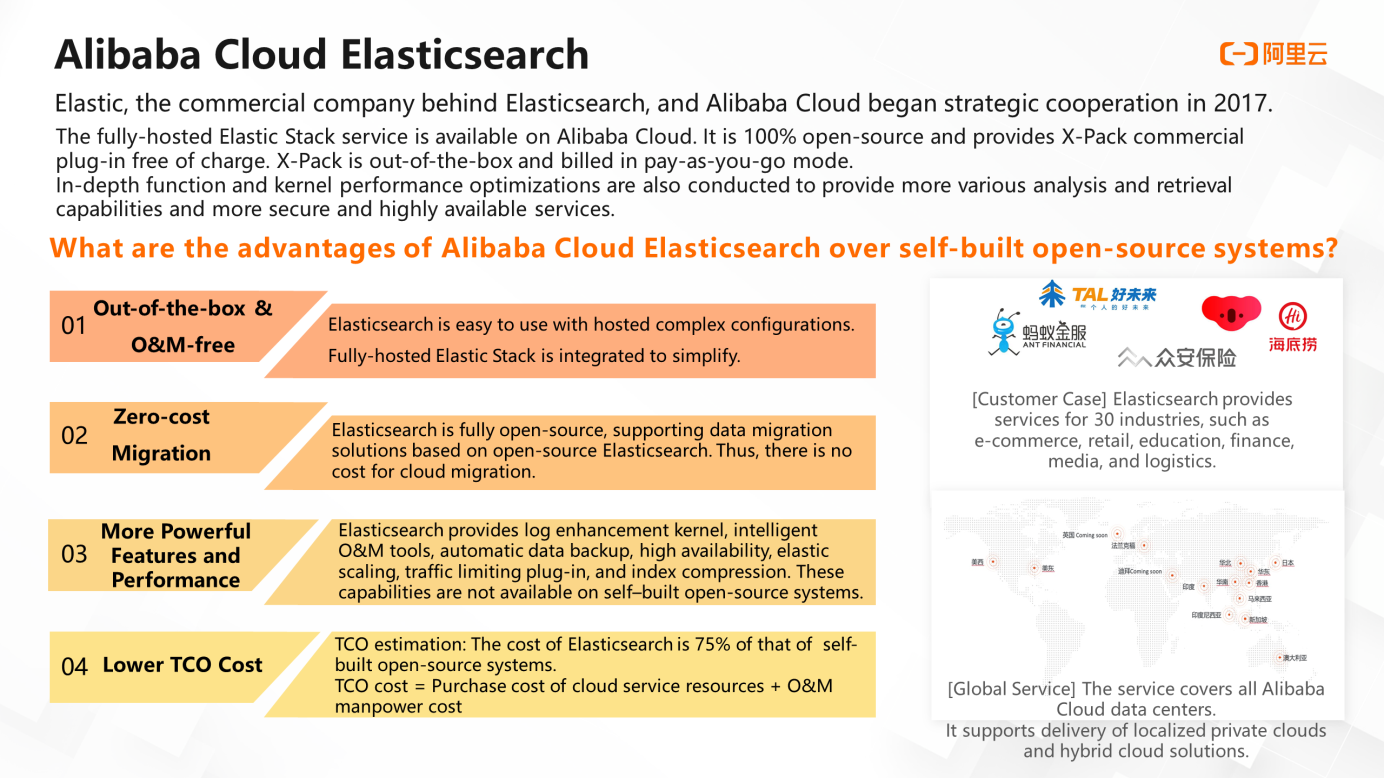

Elastic, the commercial company behind Elasticsearch, and Alibaba Cloud began the strategic cooperation in 2017 to provide a fully-hosted Elastic Stack service on Alibaba Cloud. Elastic Stack is 100% open-source, and provides free X-Pack commercial plug-ins. It is out-of-the-box and billed in pay-as-you-go mode. In-depth function and kernel performance optimizations are also conducted to provide a larger variety of analysis and retrieval capabilities, as well as more secure and highly available services.

Compared with self-built open-source systems, Alibaba Cloud Elasticsearch works out of the box with no O&M, and enterprises can migrate to the cloud at zero cost. It also offers more powerful features and better performance. In addition, its estimated TCO is only 75% of that of user-built systems.

For stability, when a traffic peak arrives, the exclusive QoS traffic-limiting plug-in of Elasticsearch controls read and write traffic at the index level. When queries or writes on a single index come under excessive pressure, traffic can be downgraded based on workload priorities to keep it within a proper range.
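One way to picture priority-based downgrading is a greedy allocation of a fixed request budget across indices. The sketch below is an assumption-laden illustration, not the algorithm of the QoS plug-in: the `admit` function, the priority numbers, and the capacity of 1000 requests are all hypothetical.

```python
from typing import Dict


def admit(requests: Dict[str, int], priorities: Dict[str, int], capacity: int) -> Dict[str, int]:
    """Grant request budget to indices in priority order until capacity runs out.

    A lower priority number means a more important index.
    """
    granted: Dict[str, int] = {}
    remaining = capacity
    for index in sorted(requests, key=lambda name: priorities[name]):
        granted[index] = min(requests[index], remaining)
        remaining -= granted[index]
    return granted


# Requests per second demanded by each index during a traffic peak.
demand = {"orders": 600, "app-logs": 700, "debug-traces": 400}
priority = {"orders": 0, "app-logs": 1, "debug-traces": 2}

granted = admit(demand, priority, capacity=1000)
print(granted)
```

In this example the most important index is served in full, the next one is throttled to the remaining budget, and the least important one is rejected until the peak passes.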

In terms of cost, Alibaba Cloud Elasticsearch provides fully-hosted elastic O&M to avoid the resource waste during off-peak hours. This can be realized through purchasing the elastic data nodes, configuring scheduled scaling in the console, and dynamically scaling based on business traffic.

In addition, Alibaba Cloud Elasticsearch with enhanced log separates computing and storage, and uses NFS shared storage as the underlying storage for nodes. It uses primary and secondary shards, where primary shards are in readable and writable mode and secondary shards are in read-only mode. This 100% reduces storage costs, improves write performance, and supports elastic scaling within seconds.

Alibaba Cloud will launch Alibaba Cloud Elasticsearch time sequence writing for Serverless in 2021, which can greatly reduce the cost of time sequence and log scenarios. Users do not need to worry about the write resources or write pressure of the Elasticsearch cluster. Physical resources are provisioned through cloud Serverless as service requests change, and users consume resources on demand on a pay-as-you-go basis. This new version of Elasticsearch features very high automatic scaling capabilities and low-cost local computing and storage nodes, reducing data storage costs.

In terms of business, as one of the key customers of Alibaba Cloud Elasticsearch, the Tomorrow Advancing Life (TAL) cloud live business supports online classes with millions of participants and teacher-student interaction. TAL ensures no video lag and HD video with only 500 ms of latency. However, as the number of monitoring metrics increases, it is difficult to guarantee the real-time quality of live streaming. To ensure the customer experience, TAL needs to conduct data permission analysis with smaller granularity in the large data pool. It also needs to deal with high traffic and fluctuations in the education industry during summer and winter vacations.

For TAL, Elasticsearch provides collection from various heterogeneous data sources, template-based log parsing and processing, and field-level data permission segmentation. It allows users to flexibly customize the permission system and can interconnect with the enterprise's own permission system. It also supports smooth scaling and hot cluster changes without affecting services. With these features, Elasticsearch guarantees the real-time quality and stability of live streaming in high-traffic scenarios.

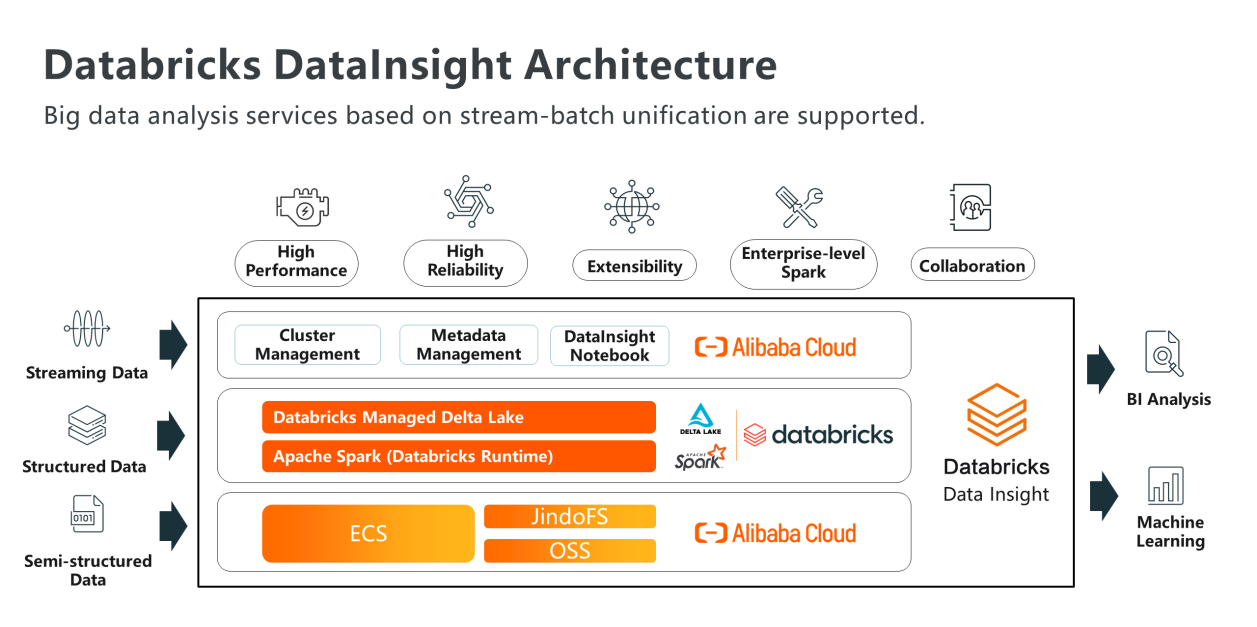

In addition to using Hologres and Flink to build real-time data warehouses, many companies use the Hadoop ecosystem to build big data analysis platforms, and many have run offline data warehouses for a long time. Alibaba Cloud Databricks DataInsight (DDI) can build a real-time data warehouse with stream-batch unification based on the Hadoop ecosystem, so enterprises can upgrade their existing architectures to support real-time analysis and decision-making.

Building a big data platform on the Hadoop ecosystem typically raises the following problems:

1) Technical requirements are high, and there are no kernel engineers to optimize performance and too few O&M personnel.

2) The maintenance cost of clusters is high, and the data storage cost in HDFS increases over time.

3) Batch and streaming data must be processed simultaneously, resulting in complex technical architecture, difficult maintenance, and many bugs.

4) The data needs to be added, deleted, and modified, and it is difficult to provide transactional assurance under big data.

5) Data engineers and data analysts have their own developing environments which are difficult to share, and it is hard for them to work together.

Enterprises typically respond by purchasing expert services or simply adding computing resources. They can also use fully-hosted cloud computing services or adopt multi-engine collaboration, with one engine for streaming data and another for batch data. These solutions usually require multiple products.

Enterprises want to use a single product to meet these data analysis requirements. After obtaining the data lake or Kafka event data, an engine is expected to be used between the streaming analysis and BI reports. The engine has a data lake architecture that supports storage-compute separation. It can also process streaming data and batch data at the same time and support incremental data writing.

For this reason, Alibaba Cloud introduced DDI, a product jointly developed by Alibaba Cloud and Databricks. Databricks, an American unicorn technology company, is the commercial company behind Apache Spark. It was placed in the leading quadrant of the Gartner 2020 Magic Quadrant for Data Science and Machine-Learning Platforms, and it has more than 5,000 customers and more than 450 partners worldwide. With Alibaba Cloud DDI, enterprises can build a real-time data warehouse with batch-stream unification based on the Hadoop ecosystem.

DDI has many advantages in ETL and data science, including a 50% performance improvement over open-source services on standard test sets and the use of the common data storage format Parquet. Parquet is highly extensible and supports custom deployment, meeting users' customization requirements. DDI uses enterprise-level Spark and is fully compatible with open-source Spark, so no API modification is needed for migration.

DDI provides Z-Order optimization, which reduces data reads by 95% and improves performance by 20 times. The performance of common tables and cache querying is improved by 30 times. With PB-level scalability and Notebook for interaction and collaboration, DDI lets data engineers and data scientists edit jobs and share results. DDI unifies big data and AI on the same platform, with data shared at the underlying layer.
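The idea behind Z-Order can be shown with a small sketch of Morton encoding, which interleaves the bits of two column values so that rows close in both columns sort near each other. This is a generic illustration, not DDI's internal implementation; the `interleave_bits` helper and the sample points are made up for the example.

```python
from typing import List, Tuple


def interleave_bits(x: int, y: int, bits: int = 16) -> int:
    """Interleave the low bits of x and y into one Z-order (Morton) key.

    Bit i of x goes to position 2*i, and bit i of y goes to position 2*i + 1.
    """
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)
        z |= ((y >> i) & 1) << (2 * i + 1)
    return z


# Rows identified by two non-negative integer column values.
points: List[Tuple[int, int]] = [(3, 3), (0, 1), (2, 0), (1, 1), (0, 0), (1, 0)]
ordered = sorted(points, key=lambda p: interleave_bits(p[0], p[1]))
print(ordered)
```

When files are written in this order, each file covers a narrow range of both columns, so a query that filters on either column can skip more files based on their minimum and maximum values.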

DDI is the Data Lake analytics component under the Delta architecture and the real-time data warehouse with stream-batch unification. DDI provides real-time big data analysis and decision-making capabilities for industries. In the financial industry, enterprises need to use data and Machine Learning (ML) to achieve rapid iteration of consumer mobile applications, in order to attract more customers.

DDI Notebook provides data sharing capabilities and a stream-batch unified architecture. This meets customers' need to process and identify streaming and batch data for millions of users through unified interfaces. With DDI, customer app engagement has increased by 4.5 times, and event processing time has been shortened from 6 hours to 6 seconds. Moreover, one Data Lake has replaced more than 14 original databases, greatly improving performance.

In the new retail industry, enterprises need to collect supply chain data in real time, accelerate data processing, and ensure real-time decision-making (quickly detect problems and reduce financial losses). After a real-time data warehouse is built using DDI, the data latency is reduced from 2 hours to 15 seconds. The data procedure is streamlined, and the amount of business code is reduced accordingly. The Python code is reduced from 565 lines to 317 lines, and the YML configuration code is reduced from 252 lines to 23 lines.

Real-time big data analysis and decision-making are currently hot topics. Enterprises expect technologies to support faster response to business needs. Alibaba Cloud also wants to enable enterprises to use data faster and better through DDI, and to respond to the data needs of enterprises in a timely manner.

Products mentioned in this article, such as DataWorks, Hologres, Realtime Compute for Apache Flink, Elasticsearch, and DDI, can all be found on the Alibaba Cloud official website at https://www.alibabacloud.com/
