This tutorial shows how to create, deploy, and start Flink Java jobs for both stream and batch processing on Realtime Compute for Apache Flink.

Prerequisites

If you use a Resource Access Management (RAM) user or RAM role, make sure that it has the required permissions to access the consoles. For more information, see Permission management.

You have created a Flink workspace. For more information, see Create a workspace.

Step 1: Develop a Java job

Realtime Compute for Apache Flink's console does not provide a development environment for Java jobs. Therefore, you must develop, compile, and package your job locally. For details, see Develop a Flink Java job.

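Make sure that the Flink version you use for local development matches the engine version you select in Step 3: Deploy the job. Also set the correct scope for your dependencies. For example, Flink dependencies that the engine already provides are typically declared with the provided scope so that they are not packaged into your JAR.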
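The engine version string embeds the Flink version, so the check can be automated. The following is a minimal Java sketch, assuming engine versions follow the vvr-<VVR version>-flink-<major.minor> pattern of the example vvr-8.0.9-flink-1.17, and assuming that "matches" means the same Flink major and minor version. EngineVersionCheck and its methods are hypothetical helpers, not part of any Flink or Alibaba Cloud API.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper: extracts the Flink version from an engine version such as
 * "vvr-8.0.9-flink-1.17" and compares it with a local Flink version.
 */
final class EngineVersionCheck {
    // Assumption: engine versions look like "vvr-<vvr version>-flink-<major>.<minor>".
    private static final Pattern ENGINE =
            Pattern.compile("^vvr-([0-9.]+)-flink-([0-9]+\\.[0-9]+)$");

    /** Returns the "major.minor" Flink version embedded in the engine version. */
    static String flinkVersionOf(String engineVersion) {
        Matcher m = ENGINE.matcher(engineVersion);
        if (!m.matches()) {
            throw new IllegalArgumentException("Unrecognized engine version: " + engineVersion);
        }
        return m.group(2);
    }

    /** True if localFlinkVersion (for example "1.17.2") has the engine's major.minor version. */
    static boolean matchesLocal(String engineVersion, String localFlinkVersion) {
        String engineMajorMinor = flinkVersionOf(engineVersion);
        String[] parts = localFlinkVersion.split("\\.");
        if (parts.length < 2) {
            return false; // not enough components to compare
        }
        return engineMajorMinor.equals(parts[0] + "." + parts[1]);
    }

    public static void main(String[] args) {
        System.out.println(matchesLocal("vvr-8.0.9-flink-1.17", "1.17.2")); // true
        System.out.println(matchesLocal("vvr-8.0.9-flink-1.17", "1.18.1")); // false
    }
}
```

Comparing only major and minor versions is a design choice for this sketch; patch releases within one Flink minor line are generally API-compatible, but check the engine release notes for your version.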

This tutorial provides a sample JAR that counts word frequencies and a sample dataset. Download these files to follow the next steps.

Sample JAR: Click FlinkQuickStart-1.0-SNAPSHOT.jar to download.

To study the source code, click FlinkQuickStart.zip to download and compile it.

Sample dataset: Click Shakespeare to download the Shakespeare data file.
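To make the expected output easier to interpret, the following plain-Java sketch shows the kind of computation a word-frequency job performs. It is not the source code of FlinkQuickStart-1.0-SNAPSHOT.jar, and the tokenization rule (lowercase the text, then split on anything that is not a letter a to z) is an assumption chosen for illustration.

```java
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical sketch of word counting in plain Java.
 * Not the implementation used in the sample JAR.
 */
final class WordCountSketch {
    /** Lowercases the text, splits on runs of non-letters, and counts each word. */
    static Map<String, Integer> countWords(String text) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted by word for readable output
        for (String token : text.toLowerCase().split("[^a-z]+")) {
            if (!token.isEmpty()) {
                counts.merge(token, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        String line = "To be, or not to be: that is the question.";
        System.out.println(countWords(line));
        // prints {be=2, is=1, not=1, or=1, question=1, that=1, the=1, to=2}
    }
}
```

With this rule, a word such as "o'er" is split into "o" and "er"; a real job may tokenize differently.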

Step 2: Upload the job JAR

Log on to the Realtime Compute for Apache Flink management console.

Find the target workspace and click Console in the Actions column.

In the left navigation menu, click Artifacts.

Click Upload Artifact to upload the sample JAR and data file.

For this tutorial, upload the FlinkQuickStart-1.0-SNAPSHOT.jar and Shakespeare files downloaded in Step 1. For more information, see Manage artifacts.
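Files uploaded on the Artifacts page are later referenced by OSS URI. The easiest and safest approach is to copy the URI from the Artifacts page; the following sketch only illustrates the path pattern used in this tutorial, oss://<Name of the associated OSS bucket>/artifacts/namespaces/<Name of the workspace>/<file name>. ArtifactUri is a hypothetical helper, and the bucket and workspace names in main are placeholders.

```java
/**
 * Hypothetical helper that assembles an artifact URI using the path pattern
 * shown in this tutorial. Prefer copying the real URI from the Artifacts page.
 */
final class ArtifactUri {
    static String of(String bucket, String workspace, String fileName) {
        // Reject empty segments and embedded slashes so each value stays one path segment.
        for (String part : new String[] {bucket, workspace, fileName}) {
            if (part == null || part.isEmpty() || part.contains("/")) {
                throw new IllegalArgumentException("Invalid path segment: " + part);
            }
        }
        return "oss://" + bucket + "/artifacts/namespaces/" + workspace + "/" + fileName;
    }

    public static void main(String[] args) {
        // "my-flink-bucket" and "my-workspace" are placeholder names.
        System.out.println(of("my-flink-bucket", "my-workspace", "Shakespeare"));
        System.out.println(of("my-flink-bucket", "my-workspace", "FlinkQuickStart-1.0-SNAPSHOT.jar"));
    }
}
```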

Step 3: Deploy the job

Streaming job

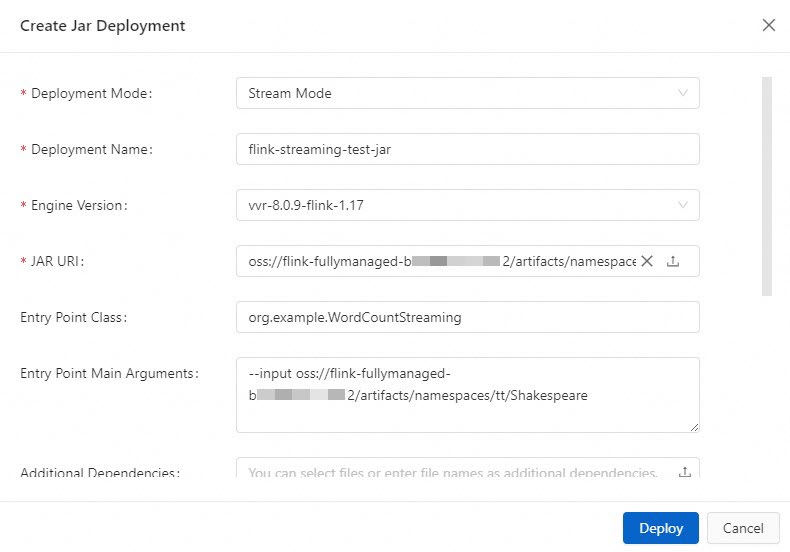

In the left navigation menu of the Development Console, open the Deployments page. Then click Create Deployment > JAR Deployment.

Fill in the deployment information.

| Parameter | Description | Example |
| --- | --- | --- |
| Deployment Mode | Select Stream Mode. | Stream Mode |
| Deployment Name | Enter a name for the job. | flink-streaming-test-jar |
| Engine Version | The VVR engine version of the job. We recommend that you use an engine version tagged with RECOMMENDED or STABLE for better reliability and performance. For more information, see Release notes and Engine version. | vvr-8.0.9-flink-1.17 |
| JAR URI | Select the FlinkQuickStart-1.0-SNAPSHOT.jar file that you uploaded in Step 2. You can also click the upload icon to upload your own JAR package. If the file is already in Artifacts, you can select it directly. Note: VVR 8.0.6 and later can access only the OSS bucket associated with your Flink workspace. | - |
| Entry Point Class | The entry point class of your program. If your JAR package does not specify a main class, enter its fully qualified name here. For this tutorial, the sample FlinkQuickStart-1.0-SNAPSHOT.jar contains code for both streaming and batch jobs, so you must specify the entry point for the streaming job. | org.example.WordCountStreaming |
| Entry Point Main Arguments | The arguments passed to the main method. For this tutorial, enter the URI of the sample dataset Shakespeare. You can copy it from the Artifacts page. | `--input oss://<Name of the associated OSS bucket>/artifacts/namespaces/<Name of the workspace>/Shakespeare` |
| Deployment Target | From the drop-down list, select a target resource queue or session cluster. For more information, see Manage queues and Step 1: Create a session cluster. Important: Jobs deployed to session clusters do not support monitoring, alerting, or automatic scaling. Use session clusters only for development and testing, not in production environments. For more information, see Debug a SQL draft. | default-queue |
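The note on VVR 8.0.6 and later relies on comparing version numbers component by component, not as strings (as strings, "8.0.10" would sort before "8.0.6"). The following Java sketch shows such a comparison, assuming engine versions follow the vvr-<major>.<minor>.<patch>-flink-<version> pattern of vvr-8.0.9-flink-1.17. VvrVersion is a hypothetical helper, not part of any console or SDK.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper: checks whether an engine version such as
 * "vvr-8.0.9-flink-1.17" is at least a given VVR version.
 */
final class VvrVersion {
    // Assumption: three numeric VVR components followed by "-flink-...".
    private static final Pattern VVR = Pattern.compile("^vvr-(\\d+)\\.(\\d+)\\.(\\d+)-flink-.*$");

    static int[] parse(String engineVersion) {
        Matcher m = VVR.matcher(engineVersion);
        if (!m.matches()) {
            throw new IllegalArgumentException("Unrecognized engine version: " + engineVersion);
        }
        return new int[] {
            Integer.parseInt(m.group(1)), Integer.parseInt(m.group(2)), Integer.parseInt(m.group(3))
        };
    }

    /** Numeric comparison, so 8.0.10 is treated as later than 8.0.6. */
    static boolean isAtLeast(String engineVersion, int major, int minor, int patch) {
        int[] v = parse(engineVersion);
        int[] min = {major, minor, patch};
        for (int i = 0; i < 3; i++) {
            if (v[i] != min[i]) {
                return v[i] > min[i];
            }
        }
        return true; // versions are equal
    }

    public static void main(String[] args) {
        // If true, the job can access only the OSS bucket associated with the workspace.
        System.out.println(isAtLeast("vvr-8.0.9-flink-1.17", 8, 0, 6)); // true
        System.out.println(isAtLeast("vvr-8.0.5-flink-1.17", 8, 0, 6)); // false
    }
}
```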

For more information about configuration parameters, see Create a deployment.
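The Entry Point Class setting matters because one JAR can contain several classes that each have a main method, and only the named class runs. The following conceptual Java sketch mimics that selection with reflection: it loads a class by name and invokes its static main(String[]) method. It is not how Realtime Compute for Apache Flink actually launches jobs, and the nested classes StreamingMain and BatchMain are hypothetical stand-ins for org.example.WordCountStreaming and org.example.WordCountBatch.

```java
import java.lang.reflect.Method;

/**
 * Conceptual sketch: selecting which main method to run by class name.
 * Not the platform's launcher.
 */
final class EntryPointDemo {
    public static final class StreamingMain {
        public static void main(String[] args) {
            System.out.println("streaming job, args=" + String.join(" ", args));
        }
    }

    public static final class BatchMain {
        public static void main(String[] args) {
            System.out.println("batch job, args=" + String.join(" ", args));
        }
    }

    /** Loads className and invokes its public static main(String[]) method. */
    static void launch(String className, String[] args) throws Exception {
        Method main = Class.forName(className).getMethod("main", String[].class);
        main.invoke(null, (Object) args); // cast so the array is passed as a single argument
    }

    public static void main(String[] args) throws Exception {
        // Nested classes use '$' in their binary names; a top-level class
        // is named like org.example.WordCountBatch.
        launch(EntryPointDemo.class.getName() + "$BatchMain", new String[] {"--input", "in.txt"});
    }
}
```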

Click Deploy.

Batch job

In the left navigation menu of the Development Console, open the Deployments page. Then click Create Deployment > JAR Deployment.

Fill in the deployment information.

| Parameter | Description | Example |
| --- | --- | --- |
| Deployment Mode | Select Batch Mode. | Batch Mode |
| Deployment Name | Enter a name for the JAR job. | flink-batch-test-jar |
| Engine Version | The VVR engine version of the job. We recommend that you use an engine version tagged with RECOMMENDED or STABLE for better reliability and performance. For more information, see Release notes and Engine version. | vvr-8.0.9-flink-1.17 |
| JAR URI | Select the FlinkQuickStart-1.0-SNAPSHOT.jar file that you uploaded in Step 2. You can also click the upload icon to upload your own JAR package. | - |
| Entry Point Class | The entry point class of your program. If your JAR package does not specify a main class, enter its fully qualified name here. The sample JAR package contains code for both streaming and batch jobs, so you must specify the entry point for the batch job. | org.example.WordCountBatch |
| Entry Point Main Arguments | The arguments passed to the main method. For this tutorial, enter the URIs of the input data file Shakespeare and the output data file batch-quickstart-test-output.txt. Note: You only need to specify the full path and name of the output file; you do not need to create it in advance. In this example, the output file is written to the same directory as the input file. | `--input oss://<Name of the associated OSS bucket>/artifacts/namespaces/<Name of the workspace>/Shakespeare --output oss://<Name of the associated OSS bucket>/artifacts/namespaces/<Name of the workspace>/batch-quickstart-test-output.txt` |
| Deployment Target | Select a target resource queue or session cluster. For more information, see Manage queues and Step 1: Create a session cluster. Important: Jobs deployed to session clusters do not support monitoring, alerting, or automatic scaling. Use session clusters only for development and testing, not in production environments. For more information, see Debug a SQL draft. | default-queue |
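Inside the job, main must turn these arguments back into named values. The following minimal Java sketch parses --key value pairs, assuming the platform splits the Entry Point Main Arguments string into whitespace-separated tokens before calling main. Flink jobs often use Flink's own ParameterTool.fromArgs(args) for this instead; MainArgs is a hypothetical stdlib-only illustration that, unlike ParameterTool, requires every key to have a value.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical sketch: parses "--key value" pairs such as
 * "--input <uri> --output <uri>" into a map.
 */
final class MainArgs {
    static Map<String, String> parse(String[] args) {
        Map<String, String> values = new HashMap<>();
        for (int i = 0; i < args.length; i++) {
            String arg = args[i];
            if (!arg.startsWith("--") || arg.length() == 2) {
                throw new IllegalArgumentException("Expected --key, got: " + arg);
            }
            if (i + 1 >= args.length || args[i + 1].startsWith("--")) {
                throw new IllegalArgumentException("Missing value for " + arg);
            }
            values.put(arg.substring(2), args[++i]); // consume the key and its value
        }
        return values;
    }

    public static void main(String[] args) {
        // Placeholder bucket and workspace names.
        String[] example = {
            "--input", "oss://my-flink-bucket/artifacts/namespaces/my-workspace/Shakespeare",
            "--output", "oss://my-flink-bucket/artifacts/namespaces/my-workspace/batch-quickstart-test-output.txt"
        };
        Map<String, String> params = parse(example);
        System.out.println("input  = " + params.get("input"));
        System.out.println("output = " + params.get("output"));
    }
}
```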

For more information about configuration parameters, see Create a deployment.

Click Deploy.

Step 4: Start the job

Streaming job

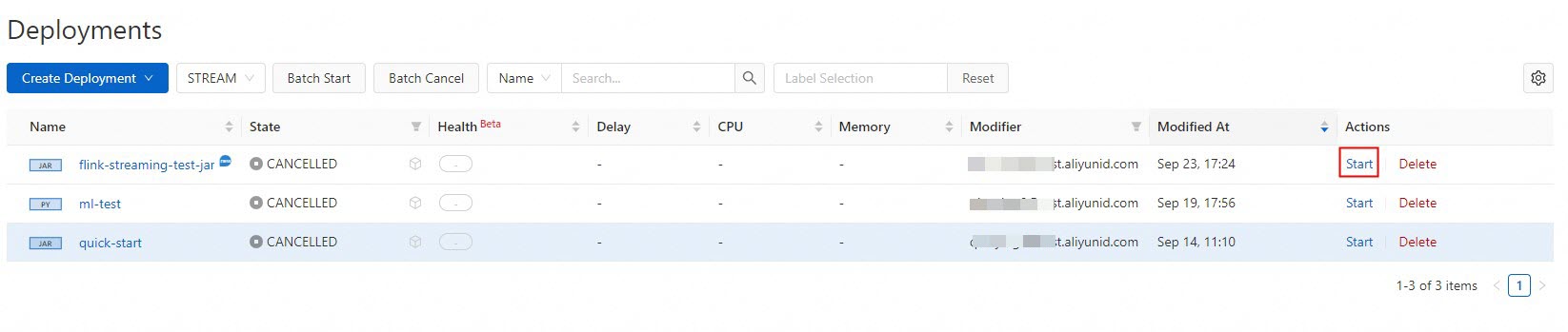

In the left navigation menu of the Development Console, open the Deployments page. Find your deployment and click Start in the Actions column.

In the Start Job panel, select Initial Mode and click Start. For more details, see Start a job deployment.

When the job status changes to RUNNING, view the results.

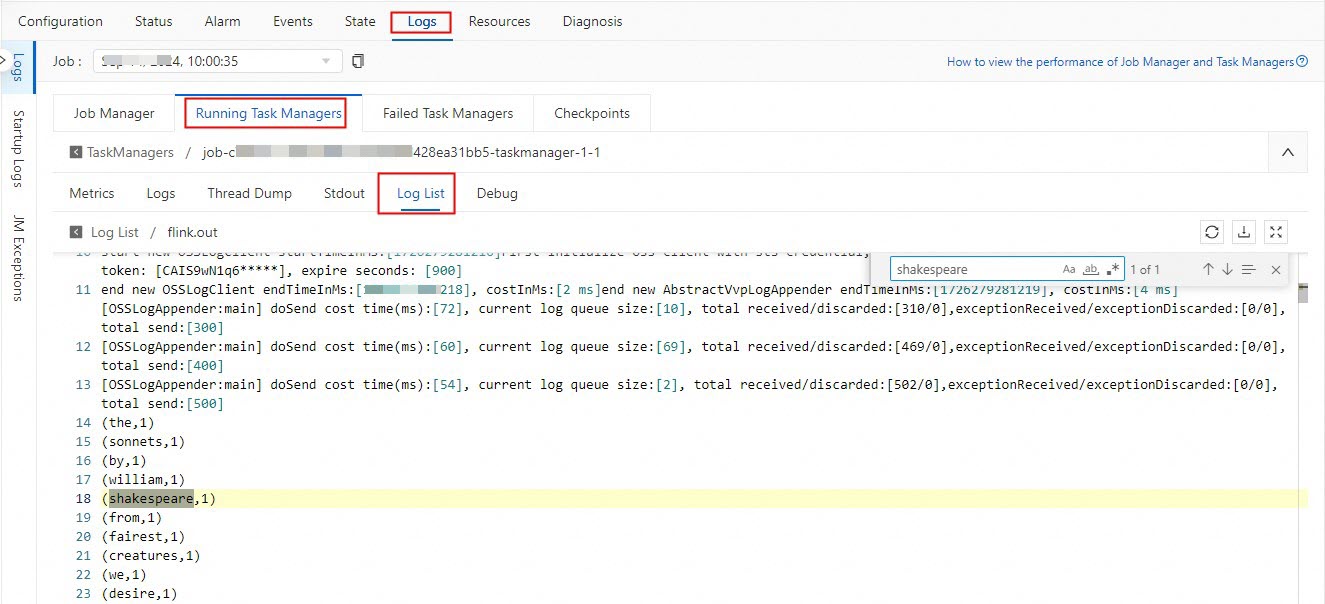

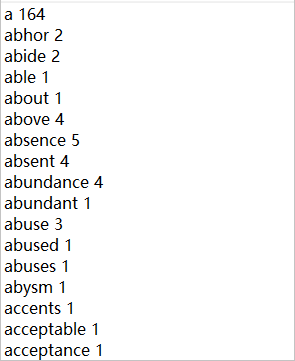

In the TaskManager .out log file, search for shakespeare to view the results, as shown in the following screenshot.

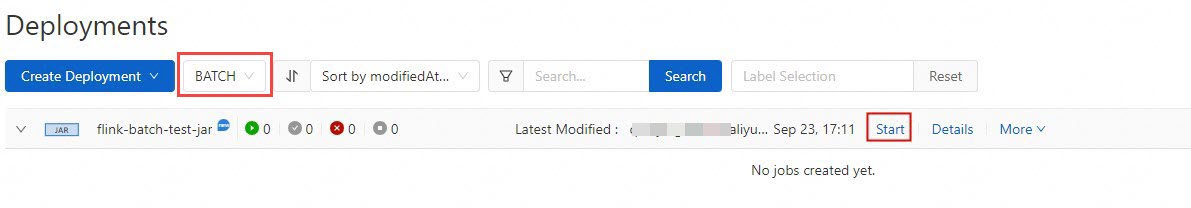
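If you download the log to search it locally, the same search is a simple line filter. The following Java sketch returns the lines that contain a term, assuming you already have the log contents as a list of lines. Matching case-insensitively is a choice made for this sketch, and the sample lines in main are placeholders, not real output of the sample job.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

/** Sketch: keeps only the log lines that contain a search term, ignoring case. */
final class LogSearch {
    static List<String> grep(List<String> lines, String term) {
        String needle = term.toLowerCase(Locale.ROOT);
        return lines.stream()
                .filter(line -> line.toLowerCase(Locale.ROOT).contains(needle))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Placeholder lines; the actual .out output of the sample job may look different.
        List<String> lines = List.of("placeholder line one", "placeholder line mentioning Shakespeare");
        grep(lines, "shakespeare").forEach(System.out::println);
    }
}
```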

Batch job

In the left navigation menu of the Development Console, open the Deployments page. Find your deployment and click Start in the Actions column.

In the Start Job dialog, click Start. For more information, see Start a job deployment.
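Waiting for the status change (RUNNING for the streaming job, FINISHED for the batch job) can also be scripted. The following Java sketch polls a status source until it reports a target status, gives up after a fixed number of attempts, and stops early on FAILED. The status source is a placeholder Supplier because this tutorial does not describe a status API; the status names other than RUNNING and FINISHED, and the assumption that FAILED is terminal, are illustrative.

```java
import java.time.Duration;
import java.util.Iterator;
import java.util.List;
import java.util.function.Supplier;

/**
 * Hypothetical polling sketch. Replace the Supplier with your own way of
 * reading the deployment status.
 */
final class StatusWait {
    static boolean waitFor(Supplier<String> status, String target, int maxAttempts, Duration interval)
            throws InterruptedException {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            String current = status.get();
            if (target.equals(current)) {
                return true;
            }
            if ("FAILED".equals(current)) {
                return false; // assumption: a FAILED job does not recover on its own
            }
            if (attempt < maxAttempts) {
                Thread.sleep(interval.toMillis());
            }
        }
        return false; // gave up after maxAttempts checks
    }

    public static void main(String[] args) throws InterruptedException {
        // Simulated status sequence standing in for a real status lookup.
        Iterator<String> simulated = List.of("STARTING", "STARTING", "FINISHED").iterator();
        boolean done = waitFor(simulated::next, "FINISHED", 10, Duration.ofMillis(100));
        System.out.println(done ? "job finished" : "job did not finish");
    }
}
```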

After the job status changes to FINISHED, view the results.

Log on to the OSS console and view the computation result in the oss://<Name of the associated OSS bucket>/artifacts/namespaces/<Name of the workspace>/batch-quickstart-test-output.txt file.

The number of result entries for the streaming job and the batch job may differ because the TaskManager .out log displays a maximum of 2,000 entries. For more information about this limitation, see Print connector.
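The following Java sketch illustrates how the two outputs can diverge. It assumes, without confirmation from this tutorial, that the streaming job emits an updated (word, count) record for every input word, as running-count word counts commonly do, while the batch job writes one final record per distinct word. The visible method models a log view capped at 2,000 entries. OutputComparison and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical comparison of streaming-style and batch-style word-count output. */
final class OutputComparison {
    /** One "(word,count)" record per input word, carrying the running count. */
    static List<String> streamingUpdates(List<String> words) {
        Map<String, Integer> running = new LinkedHashMap<>();
        List<String> emitted = new ArrayList<>();
        for (String word : words) {
            int count = running.merge(word, 1, Integer::sum);
            emitted.add("(" + word + "," + count + ")");
        }
        return emitted;
    }

    /** One final "(word,count)" record per distinct word, in first-seen order. */
    static List<String> batchResult(List<String> words) {
        Map<String, Integer> totals = new LinkedHashMap<>();
        for (String word : words) {
            totals.merge(word, 1, Integer::sum);
        }
        List<String> result = new ArrayList<>();
        totals.forEach((word, count) -> result.add("(" + word + "," + count + ")"));
        return result;
    }

    /** Models a log view that shows at most maxShown records. */
    static List<String> visible(List<String> records, int maxShown) {
        return new ArrayList<>(records.subList(0, Math.min(maxShown, records.size())));
    }

    public static void main(String[] args) {
        List<String> words = List.of("to", "be", "or", "not", "to", "be");
        System.out.println(streamingUpdates(words)); // [(to,1), (be,1), (or,1), (not,1), (to,2), (be,2)]
        System.out.println(batchResult(words));      // [(to,2), (be,2), (or,1), (not,1)]
        System.out.println(visible(streamingUpdates(words), 2000).size()); // 6
    }
}
```

On a large input such as the Shakespeare file, a capped view shows only the first records, so it cannot be compared entry by entry with the complete batch output file.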

Step 5: (Optional) Stop the job

You may need to cancel a job in several situations, for example, to apply changes to the job, restart the job without state, or update static configurations. For more information, see Cancel a deployment.