To view and analyze the model training process and results in a visual interface, write summary logs in your training code and then use the TensorBoard feature of Deep Learning Containers (DLC) to view the training data. This topic describes how to create and manage TensorBoard instances.

Prerequisites

You have associated a dataset with the DLC job. Only DLC jobs that are associated with datasets can use TensorBoard to view the analysis report. You can click the name of a DLC job to go to the Overview tab to check whether the job is associated with a dataset.

You have stored summary logs by using TensorBoard in the training code based on the sample code in the example below.

Example

In this example, the following dataset and TensorBoard configurations are used for the DLC job.

Configure an Object Storage Service (OSS) dataset:

OSS URI:

oss://w*********.oss-cn-hangzhou-internal.aliyuncs.com/dlc_dataset_1/

Mount path:

/mnt/data/

Specify the storage location of summary logs by using SummaryWriter in TensorBoard:

SummaryWriter('/mnt/data/output/runs/mnist_experiment')

Complete sample code:
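The original sample code is not reproduced in this section. The following is only a minimal sketch of how a training script might write summary logs to the path used in this example. It assumes that PyTorch and the tensorboard package are installed in the training image. The function name `write_demo_scalars`, the tag `train/loss`, and the placeholder loss values are hypothetical and are not taken from the original sample.

```python
import posixpath

# Mount path of the OSS dataset in this example.
MOUNT_PATH = "/mnt/data/"
# Directory passed to SummaryWriter in this example.
LOG_DIR = posixpath.join(MOUNT_PATH, "output/runs/mnist_experiment")


def write_demo_scalars(log_dir, steps=100):
    """Write placeholder scalar values as TensorBoard summary logs."""
    # Imported here so that this module can be loaded without PyTorch.
    from torch.utils.tensorboard import SummaryWriter

    writer = SummaryWriter(log_dir)
    for step in range(steps):
        # Placeholder value; a real script would log the actual training loss.
        loss = 1.0 / (step + 1)
        writer.add_scalar("train/loss", loss, step)
    # Flush pending events and release the event file.
    writer.close()


# In the training script of the DLC job, call:
# write_demo_scalars(LOG_DIR)
```

Because the log directory is under the mount path, the event files are written to the mounted dataset rather than to container-local storage.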

Create a TensorBoard instance

Log on to the PAI console. Select the region where your instance resides in the top navigation bar and select a workspace. Then, click Enter Deep Learning Containers (DLC).

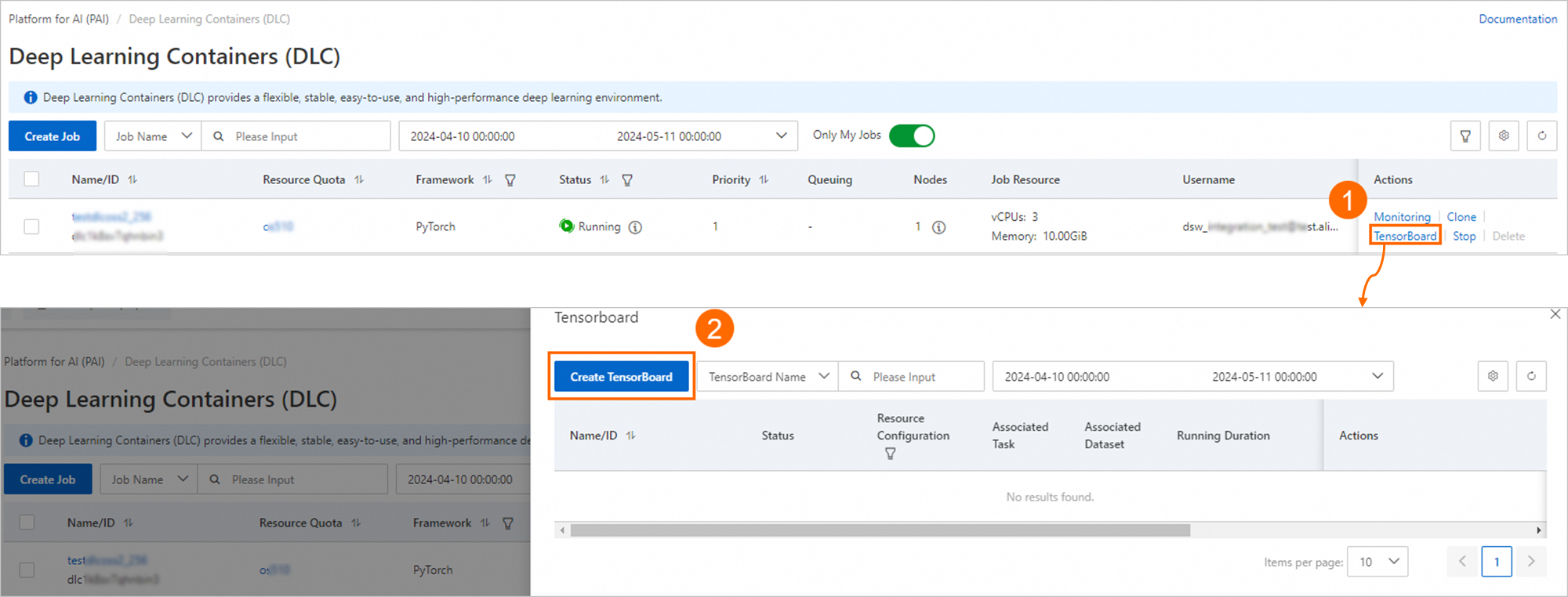

On the page that appears, click TensorBoard in the Actions column of the job that you want to manage. In the TensorBoard panel, click Create TensorBoard.

On the Create TensorBoard page, configure the parameters and click OK. The following tables describe the parameters.

Basic Information

Parameter

Description

Name

The name of the TensorBoard instance.

Configuration Type

Mount Type: You can select Mount Dataset, Mount OSS, or By Task. We recommend that you select Mount Dataset.

Summary Path: The path in which the TensorBoard summary logs are stored. You can obtain the complete path from the SummaryWriter class in the training code.

Sample configurations for the example:

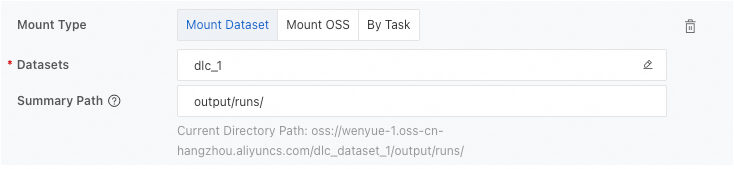

Mount Dataset: Select a dataset and enter the relative path of the summary directory in the dataset.

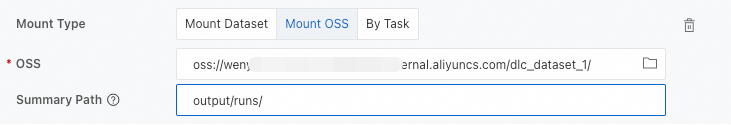

Mount OSS: Select an OSS storage path and enter the relative path of the summary directory in OSS.

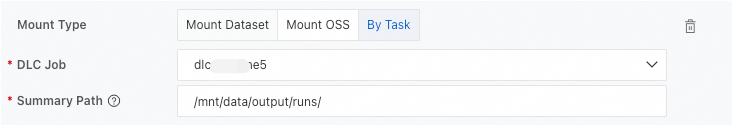

By Task: Select a desired DLC job and enter the complete path of the log files in the container.
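The summary paths described above can be derived from the values in the example. The following sketch assumes that the selected dataset or OSS path is the one mounted at `/mnt/data/`, and that the relative path is entered without a leading slash. The console might expect a slightly different form. The helper name `summary_path_for` is hypothetical.

```python
import posixpath

# Values from the example in this topic.
MOUNT_PATH = "/mnt/data/"
SUMMARY_DIR = "/mnt/data/output/runs/mnist_experiment"


def summary_path_for(mount_type, summary_dir=SUMMARY_DIR, mount_path=MOUNT_PATH):
    """Return the Summary Path value to enter for the given mount type."""
    if mount_type == "By Task":
        # By Task expects the complete path of the logs in the container.
        return summary_dir
    if mount_type in ("Mount Dataset", "Mount OSS"):
        # Assumption: the path is relative to the root of the mounted storage.
        return posixpath.relpath(summary_dir, start=mount_path)
    raise ValueError(f"Unknown mount type: {mount_type}")


for mount_type in ("Mount Dataset", "Mount OSS", "By Task"):
    print(mount_type, "->", summary_path_for(mount_type))
```

With the example values, the two relative mount types yield `output/runs/mnist_experiment`, and By Task yields the full container path.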

Resource Configuration

The following table describes the supported resource types.

Resource type

Description

Free Quota

The system provides you with a certain amount of free resources. Each instance can use up to 2 vCPUs and 4 GiB of memory. If the free quota cannot meet your business requirements, you can stop instances that run on the free quota to release resources, and then use the released resources to create the TensorBoard instance.

General Computing

Public Resources: uses the pay-as-you-go billing method. Only general computing uses public resources. You can select an instance type based on your business requirements.

Resource Quota: uses the subscription billing method. You must purchase computing resources and create quotas before you specify this parameter. You must configure the following parameters together with this parameter:

Note: This feature is available only to users in the whitelist. If you want to use this feature, contact your account manager to configure the whitelist.

Priority: the priority of a TensorBoard instance. Valid values: 1 to 9. The value 1 indicates the lowest priority.

Job Resource: the resources that you use to run a TensorBoard instance. The resources include the number of vCPUs and the memory. The unit of the memory size is GiB.

VPC

If you use Public Resources to create a TensorBoard instance, the virtual private cloud (VPC)-related parameters are available.

If you do not configure a VPC, the instance uses an Internet connection. In this case, the TensorBoard instance may respond slowly during startup or when you view reports because the bandwidth of the Internet connection is limited.

To ensure sufficient network bandwidth and stable performance, we recommend that you configure a VPC.

Select a VPC, a vSwitch, and a security group in the current region. After you complete the configuration, the cluster in which the TensorBoard instance runs can access the services in the selected VPC and use the security group that you specified to control access.

Important: If the TensorBoard instance uses a dataset that requires a VPC, such as a Cloud Parallel File Storage (CPFS) dataset or a NAS dataset that has a mount target in the VPC, you must configure a VPC.

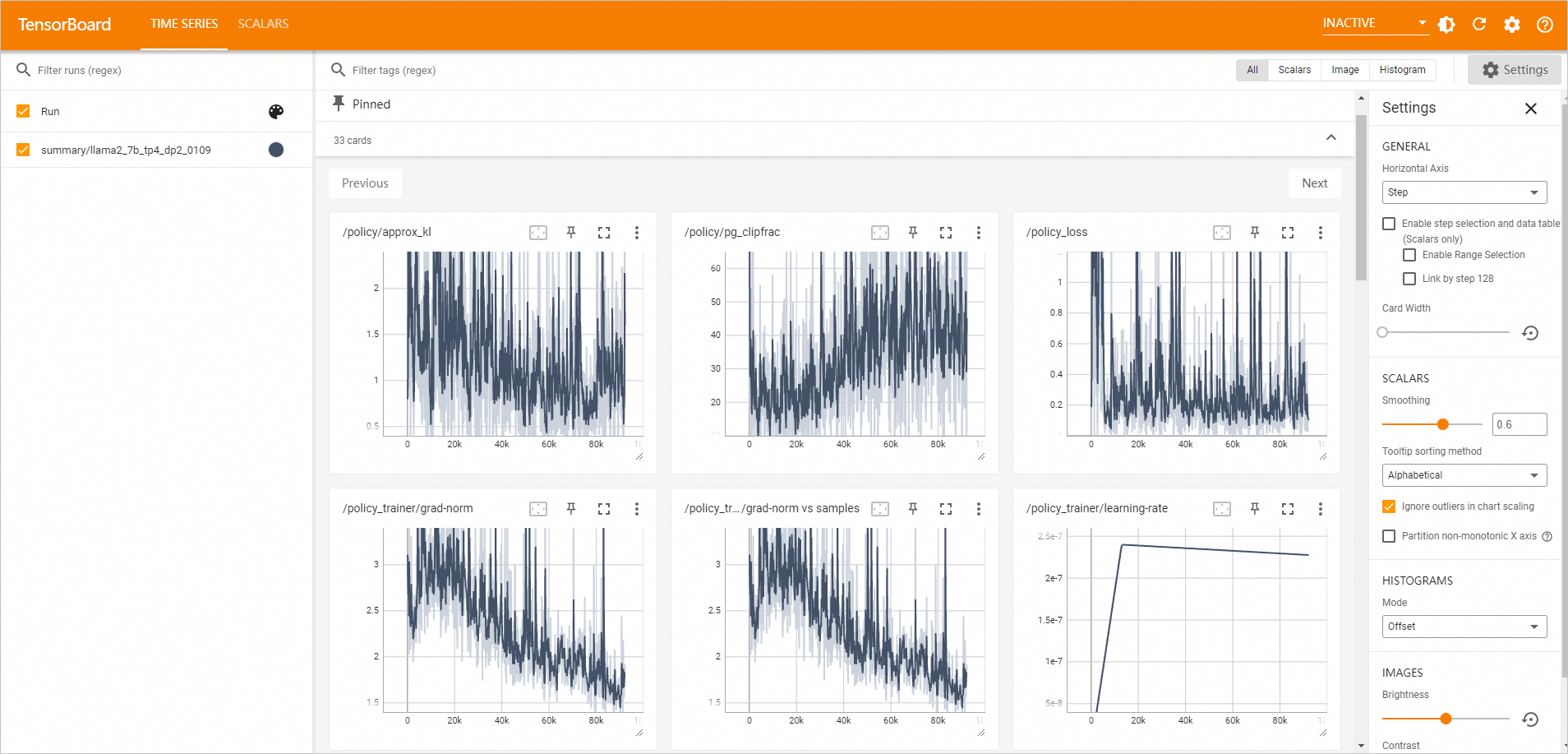

Go to the TensorBoard page to view the analysis report.

In the left-side navigation pane of the workspace page, choose .

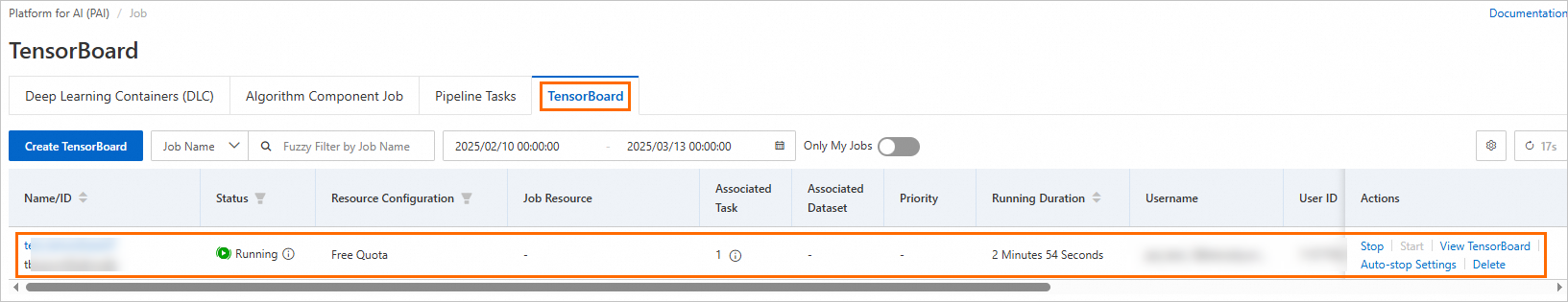

On the TensorBoard tab, if the Status of the TensorBoard instance is Running, click View TensorBoard in the Actions column.

The TensorBoard page appears.

Manage a TensorBoard instance

Perform the following steps to manage a TensorBoard instance:

Log on to the PAI console. Select the region where your instance resides in the top navigation bar and select a workspace. Then, click Enter Jobs.

On the TensorBoard tab of the page that appears, perform the following operations to manage a TensorBoard instance.

Start a TensorBoard instance

Click Start in the Actions column to restart a stopped TensorBoard instance.

View the details of a TensorBoard instance

Click the name of the TensorBoard instance. On the TensorBoard instance details page, view Basic Information and Configuration Information.

View associated DLC jobs

View the number of the DLC jobs that you associate with the TensorBoard instance. On the TensorBoard tab, move the pointer over the icon in the Associated Task column to view the ID of the associated DLC job. Click the ID to go to the details page of the DLC job.

View associated datasets

View the number of the datasets that you associate with the TensorBoard instance. On the TensorBoard tab, move the pointer over the icon in the Associated Dataset column to view the ID of the associated dataset. Click the ID to go to the details page of the dataset.

View the running duration

On the TensorBoard tab, view the running duration of the TensorBoard instance in the Running Duration column. The running duration starts when the instance is started and is reset after you stop the instance.

Stop a TensorBoard instance

Click Stop in the Actions column of the TensorBoard instance.

Click Auto-stop Settings in the Actions column of the TensorBoard instance to specify the time at which you want the instance to automatically stop.

References

You can create a TensorBoard instance for a DLC job on the page. For more information, see Create and manage TensorBoard instances.