Model compression refers to techniques that reduce the size and computational cost of machine learning models while preserving as much of their predictive accuracy as possible. As deep learning models grow larger and more complex, compression becomes increasingly important, especially in resource-constrained environments, where it can significantly reduce storage and compute requirements.

Introduction

Model Gallery of Platform for AI (PAI) supports model quantization based on Weight-only Quantization. Two quantization strategies are available: MinMax-8Bit and MinMax-4Bit, which convert the floating-point weights of a model into 8-bit or 4-bit integers, respectively. This reduces model size and inference resource requirements, making it easier to deploy deep learning models efficiently in resource-constrained environments while largely preserving model performance.
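To make the size reduction concrete, the following Python sketch estimates the weight storage of a model at different bit widths. It assumes that the unquantized weights are stored in FP16 (2 bytes per parameter), and it ignores quantization metadata such as scales and zero points, activations, and other runtime memory. The 7-billion parameter count is a hypothetical example, not a PAI figure.

```python
# Rough weight-storage estimate for weight-only quantization.
# Assumptions: FP16 baseline; only the weights are counted, and quantization
# metadata (scales, zero points), activations, and runtime buffers are ignored.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point (assumed baseline)
    "int8": 1.0,  # MinMax-8Bit: one byte per weight
    "int4": 0.5,  # MinMax-4Bit: two weights packed into one byte
}


def weight_size_gib(num_params: int, dtype: str) -> float:
    """Return the approximate weight size in GiB for the given storage type."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    n = 7_000_000_000  # hypothetical 7B-parameter model
    for dtype in ("fp16", "int8", "int4"):
        print(f"{dtype}: {weight_size_gib(n, dtype):.2f} GiB")
```

Under these assumptions, MinMax-8Bit roughly halves and MinMax-4Bit roughly quarters the weight storage relative to FP16. Actual savings are somewhat smaller once per-channel or per-group scales are stored alongside the integer weights.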

Compress a model

Train a model.

To compress a model, you must first train it. For more information, see Train models.

After you train a model, click Compress in the upper-right corner of the job details page.

Configure the compression task.

The following table describes the key parameters:

| Parameter | Description |
| --- | --- |
| Compression Method | Only Model Quantization is supported. It is based on Weight-only Quantization, which converts the weight parameters of the model to a lower bit width and thereby reduces GPU memory usage during inference. |
| Compression Strategy | Valid values: MinMax-8Bit, which uses min-max scaling to quantize the model weights to 8-bit integers, and MinMax-4Bit, which uses min-max scaling to quantize the model weights to 4-bit integers. |
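The min-max scaling used by both strategies can be sketched as follows. This is a generic illustration, not PAI's implementation: it assumes asymmetric, per-tensor quantization with round-to-nearest, and it extends the value range to include zero so that 0.0 is represented exactly. Production weight-only schemes usually compute a separate scale per output channel or per group of weights. The function names `minmax_quantize` and `minmax_dequantize` are hypothetical.

```python
# Minimal sketch of min-max (asymmetric) weight-only quantization.
# Assumptions: per-tensor scaling, round-to-nearest, unsigned integer codes.
# The exact scheme used by PAI is not described in this document.

from typing import List, Tuple


def minmax_quantize(weights: List[float], bits: int) -> Tuple[List[int], float, int]:
    """Map float weights to integers in [0, 2**bits - 1] using min-max scaling."""
    qmax = 2**bits - 1
    # Include 0.0 in the range so that zero maps to an exact integer code.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # Guard against an all-zero tensor, where the range is empty.
    scale = (w_max - w_min) / qmax if w_max > w_min else 1.0
    zero_point = round(-w_min / scale)
    # Round to the nearest grid point, shift by the zero point, and clamp.
    codes = [min(qmax, max(0, round(w / scale) + zero_point)) for w in weights]
    return codes, scale, zero_point


def minmax_dequantize(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from integer codes."""
    return [(q - zero_point) * scale for q in codes]


if __name__ == "__main__":
    w = [-0.42, -0.10, 0.0, 0.07, 0.31, 0.55]
    for bits in (8, 4):
        codes, scale, zp = minmax_quantize(w, bits)
        approx = minmax_dequantize(codes, scale, zp)
        max_err = max(abs(a - b) for a, b in zip(w, approx))
        print(f"{bits}-bit codes={codes} max_abs_error={max_err:.4f}")
```

Because MinMax-4Bit has only 16 integer levels instead of 256, its step size is 17 times larger than that of MinMax-8Bit for the same weight range (255 / 15 = 17), so its rounding error is correspondingly larger. This is the accuracy cost of the additional memory savings.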

For information about the other parameters, see Train models.

Click Compress.

Follow the on-screen instructions to go to the Task Details page, where you can view the basic information, real-time status, task logs, and other details of the compression task.

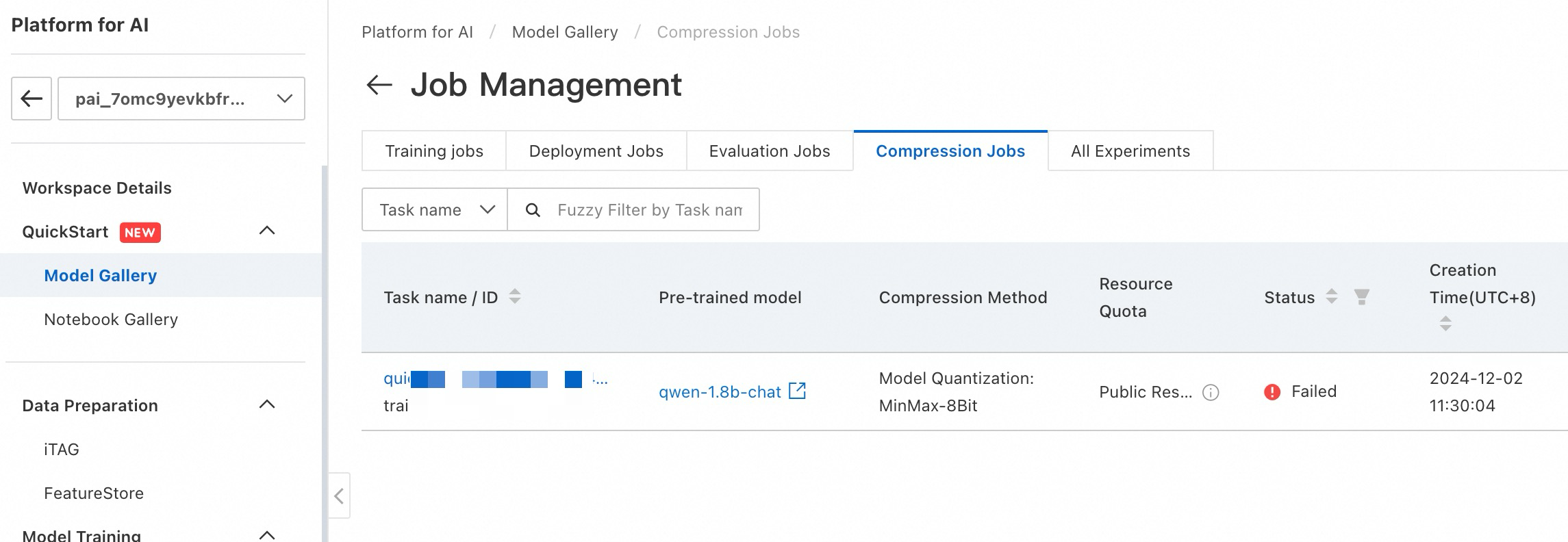

View compression tasks

To view compression tasks, go to Model Gallery > Job Management > Compression Jobs.