The Wan2.5 image editing model supports one to three image inputs and one to four image outputs. You can use text instructions to perform single-image editing with consistent subjects, object detection and segmentation, and multi-image fusion.

Getting started

Prerequisites

Before making a call, obtain an API key and set the API key as an environment variable. To make calls using the SDK, install the DashScope SDK.
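As a quick sanity check before the first call, the sketch below confirms that the key is visible to the current process. The variable name DASHSCOPE_API_KEY is the one the examples on this page read. The helper name `require_api_key` is hypothetical and not part of the DashScope SDK.

```python
import os


def require_api_key(environ=None, name="DASHSCOPE_API_KEY"):
    """Return the API key from the environment, or raise if it is missing or empty."""
    # Allow a plain dict to be passed in so the check can be exercised without
    # touching the real process environment.
    environ = os.environ if environ is None else environ
    key = environ.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before calling the API")
    return key
```

Calling `require_api_key()` at the top of a script fails fast with a clear message instead of a later authentication error.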

Example code

The process for calling the API with single or multiple image inputs is nearly identical.

This example shows multi-image fusion. Pass two images in the images array. The model will output one fused image based on the text prompt.

Input prompt: Place the alarm clock from image 1 next to the vase on the dining table in image 2.

[Figure: input image 1, input image 2, and the fused output image]

Synchronous call

Ensure that the DashScope Python SDK is version 1.25.2 or later and the DashScope Java SDK is version 2.22.2 or later.

Outdated versions may trigger errors, such as "url error, please check url!". Install or upgrade the SDK.

Python

This example supports three image input methods: public URL, Base64 encoding, and local file path.

Request example

import base64

import mimetypes

from http import HTTPStatus

from urllib.parse import urlparse, unquote

from pathlib import PurePosixPath

import dashscope

import requests

from dashscope import ImageSynthesis

import os

# The following is the URL for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/api/v1

dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

# If you have not configured an environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx"

# The API keys for the Singapore and China (Beijing) regions are different. Get an API key: https://www.alibabacloud.com/help/en/model-studio/get-api-key

api_key = os.getenv("DASHSCOPE_API_KEY")

# --- Image input: Use Base64 encoding ---

# The Base64 encoding format is data:{MIME_type};base64,{base64_data}

def encode_file(file_path):
    mime_type, _ = mimetypes.guess_type(file_path)
    if not mime_type or not mime_type.startswith("image/"):
        raise ValueError("Unsupported or unrecognized image format")
    with open(file_path, "rb") as image_file:
        encoded_string = base64.b64encode(image_file.read()).decode('utf-8')
    return f"data:{mime_type};base64,{encoded_string}"

"""

Image input methods:

Choose one of the following three methods.

1. Use a public URL - Suitable for publicly accessible images.

2. Use a local file - Suitable for local development and testing.

3. Use Base64 encoding - Suitable for private images or scenarios that require encrypted transmission.

"""

# [Method 1] Use a public image URL

image_url_1 = "https://img.alicdn.com/imgextra/i3/O1CN0157XGE51l6iL9441yX_!!6000000004770-49-tps-1104-1472.webp"

image_url_2 = "https://img.alicdn.com/imgextra/i3/O1CN01SfG4J41UYn9WNt4X1_!!6000000002530-49-tps-1696-960.webp"

# [Method 2] Use a local file (supports absolute and relative paths)

# Format requirement: file:// + file path

# Example (absolute path):

# image_url_1 = "file://" + "/path/to/your/image_1.png" # Linux/macOS

# image_url_2 = "file://" + "C:/path/to/your/image_2.png" # Windows

# Example (relative path):

# image_url_1 = "file://" + "./image_1.png" # Use your actual path

# image_url_2 = "file://" + "./image_2.png" # Use your actual path

# [Method 3] Use a Base64-encoded image

# image_url_1 = encode_file("./image_1.png") # Use your actual path

# image_url_2 = encode_file("./image_2.png") # Use your actual path

print('----sync call, please wait a moment----')

rsp = ImageSynthesis.call(api_key=api_key,
                          model="wan2.5-i2i-preview",
                          prompt="Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.",
                          images=[image_url_1, image_url_2],
                          negative_prompt="",
                          n=1,
                          # size="1280*1280",
                          prompt_extend=True,
                          watermark=False,
                          seed=12345)
print('response: %s' % rsp)
if rsp.status_code == HTTPStatus.OK:
    # Save the image in the current directory
    for result in rsp.output.results:
        file_name = PurePosixPath(unquote(urlparse(result.url).path)).parts[-1]
        with open('./%s' % file_name, 'wb+') as f:
            f.write(requests.get(result.url).content)
else:
    print('sync_call Failed, status_code: %s, code: %s, message: %s' %
          (rsp.status_code, rsp.code, rsp.message))

Response example

The URL is valid for 24 hours. Download the image promptly.

{

"status_code": 200,

"request_id": "8ad45834-4321-44ed-adf5-xxxxxx",

"code": null,

"message": "",

"output": {

"task_id": "3aff9ebd-35fc-4339-98a3-xxxxxx",

"task_status": "SUCCEEDED",

"results": [

{

"url": "https://dashscope-result-sh.oss-cn-shanghai.aliyuncs.com/xxx.png?Expires=xxx",

"orig_prompt": "Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.",

"actual_prompt": "Place the blue alarm clock from Image 1 to the right of the vase on the dining table in Image 2, near the edge of the tablecloth. Keep the alarm clock facing the camera, parallel to the table, with its shadow naturally cast on the table."

}

],

"submit_time": "2025-10-23 16:18:16.009",

"scheduled_time": "2025-10-23 16:18:16.040",

"end_time": "2025-10-23 16:19:09.591",

"task_metrics": {

"TOTAL": 1,

"FAILED": 0,

"SUCCEEDED": 1

}

},

"usage": {

"image_count": 1

}

}

Java

This example supports three image input methods: public URL, Base64 encoding, and local file path.

Request example

// Copyright (c) Alibaba, Inc. and its affiliates.

import com.alibaba.dashscope.aigc.imagesynthesis.ImageSynthesis;

import com.alibaba.dashscope.aigc.imagesynthesis.ImageSynthesisParam;

import com.alibaba.dashscope.aigc.imagesynthesis.ImageSynthesisResult;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.utils.Constants;

import com.alibaba.dashscope.utils.JsonUtils;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

import java.util.*;

public class Image2Image {

static {

// The following is the URL for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/api/v1

Constants.baseHttpApiUrl = "https://dashscope-intl.aliyuncs.com/api/v1";

}

// If you have not configured an environment variable, replace the following line with your Model Studio API key: apiKey="sk-xxx"

// The API keys for the Singapore and China (Beijing) regions are different. Get an API key: https://www.alibabacloud.com/help/en/model-studio/get-api-key

static String apiKey = System.getenv("DASHSCOPE_API_KEY");

/**

* Image input methods: Choose one of the following three methods.

*

* 1. Use a public URL - Suitable for publicly accessible images.

* 2. Use a local file - Suitable for local development and testing.

* 3. Use Base64 encoding - Suitable for private images or scenarios that require encrypted transmission.

*/

//[Method 1] Public URL

static String imageUrl_1 = "https://img.alicdn.com/imgextra/i3/O1CN0157XGE51l6iL9441yX_!!6000000004770-49-tps-1104-1472.webp";

static String imageUrl_2 = "https://img.alicdn.com/imgextra/i3/O1CN01SfG4J41UYn9WNt4X1_!!6000000002530-49-tps-1696-960.webp";

//[Method 2] Local file path (file://+absolute path or file:///+absolute path)

// static String imageUrl_1 = "file://" + "/your/path/to/image_1.png"; // Linux/macOS

// static String imageUrl_2 = "file:///" + "C:/your/path/to/image_2.png"; // Windows

//[Method 3] Base64 encoding

// static String imageUrl_1 = encodeFile("/your/path/to/image_1.png");

// static String imageUrl_2 = encodeFile("/your/path/to/image_2.png");

// Set the list of images to be edited

static List<String> imageUrls = new ArrayList<>();

static {

imageUrls.add(imageUrl_1);

imageUrls.add(imageUrl_2);

}

public static void syncCall() {

// Set the parameters

Map<String, Object> parameters = new HashMap<>();

parameters.put("prompt_extend", true);

parameters.put("watermark", false);

parameters.put("seed", "12345");

ImageSynthesisParam param =

ImageSynthesisParam.builder()

.apiKey(apiKey)

.model("wan2.5-i2i-preview")

.prompt("Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.")

.images(imageUrls)

.n(1)

//.size("1280*1280")

.negativePrompt("")

.parameters(parameters)

.build();

ImageSynthesis imageSynthesis = new ImageSynthesis();

ImageSynthesisResult result = null;

try {

System.out.println("---sync call, please wait a moment----");

result = imageSynthesis.call(param);

} catch (ApiException | NoApiKeyException e){

throw new RuntimeException(e.getMessage());

}

System.out.println(JsonUtils.toJson(result));

}

/**

* Encodes a file into a Base64 string.

* @param filePath The file path.

* @return A Base64 string in the format: data:{MIME_type};base64,{base64_data}

*/

public static String encodeFile(String filePath) {

Path path = Paths.get(filePath);

if (!Files.exists(path)) {

throw new IllegalArgumentException("File does not exist: " + filePath);

}

// Detect the MIME type

String mimeType = null;

try {

mimeType = Files.probeContentType(path);

} catch (IOException e) {

throw new IllegalArgumentException("Cannot detect file type: " + filePath);

}

if (mimeType == null || !mimeType.startsWith("image/")) {

throw new IllegalArgumentException("Unsupported or unrecognized image format");

}

// Read the file content and encode it

byte[] fileBytes = null;

try{

fileBytes = Files.readAllBytes(path);

} catch (IOException e) {

throw new IllegalArgumentException("Cannot read file content: " + filePath);

}

String encodedString = Base64.getEncoder().encodeToString(fileBytes);

return "data:" + mimeType + ";base64," + encodedString;

}

public static void main(String[] args) {

syncCall();

}

}

Response example

The URL is valid for 24 hours. Download the image promptly.

{

"request_id": "d362685b-757f-4eac-bab5-xxxxxx",

"output": {

"task_id": "bfa7fc39-3d87-4fa7-b1e6-xxxxxx",

"task_status": "SUCCEEDED",

"results": [

{

"orig_prompt": "Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.",

"actual_prompt": "Place the blue alarm clock from Image 1 to the right of the vase on the dining table in Image 2, near the edge of the tablecloth. Keep the front of the alarm clock facing the camera, parallel to the vase.",

"url": "https://dashscope-result-sh.oss-cn-shanghai.aliyuncs.com/xxx.png?Expires=xxx"

}

],

"task_metrics": {

"TOTAL": 1,

"SUCCEEDED": 1,

"FAILED": 0

}

},

"usage": {

"image_count": 1

}

}

Asynchronous invocation

Ensure that the DashScope Python SDK is version 1.25.2 or later and the DashScope Java SDK is version 2.22.2 or later.

Outdated versions may trigger errors, such as "url error, please check url!". Install or upgrade the SDK.

Python

This example passes an image using a public URL.

Request example

import os

from http import HTTPStatus

from urllib.parse import urlparse, unquote

from pathlib import PurePosixPath

import dashscope

import requests

from dashscope import ImageSynthesis

# The following is the URL for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/api/v1

dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

# If you have not configured an environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx"

# The API keys for the Singapore and China (Beijing) regions are different. Get an API key: https://www.alibabacloud.com/help/en/model-studio/get-api-key

api_key = os.getenv("DASHSCOPE_API_KEY")

# Use a public image URL

image_url_1 = "https://img.alicdn.com/imgextra/i3/O1CN0157XGE51l6iL9441yX_!!6000000004770-49-tps-1104-1472.webp"

image_url_2 = "https://img.alicdn.com/imgextra/i3/O1CN01SfG4J41UYn9WNt4X1_!!6000000002530-49-tps-1696-960.webp"

def async_call():
    print('----create task----')
    task_info = create_async_task()
    print('----wait task----')
    wait_async_task(task_info)


# Create an asynchronous task
def create_async_task():
    rsp = ImageSynthesis.async_call(api_key=api_key,
                                    model="wan2.5-i2i-preview",
                                    prompt="Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.",
                                    images=[image_url_1, image_url_2],
                                    negative_prompt="",
                                    n=1,
                                    # size="1280*1280",
                                    prompt_extend=True,
                                    watermark=False,
                                    seed=12345)
    print(rsp)
    if rsp.status_code == HTTPStatus.OK:
        print(rsp.output)
    else:
        print('Failed, status_code: %s, code: %s, message: %s' %
              (rsp.status_code, rsp.code, rsp.message))
    return rsp


# Wait for the asynchronous task to complete
def wait_async_task(task):
    rsp = ImageSynthesis.wait(task=task, api_key=api_key)
    print(rsp)
    if rsp.status_code == HTTPStatus.OK:
        print(rsp.output)
        # Save the image in the current directory
        for result in rsp.output.results:
            file_name = PurePosixPath(unquote(urlparse(result.url).path)).parts[-1]
            with open('./%s' % file_name, 'wb+') as f:
                f.write(requests.get(result.url).content)
    else:
        print('Failed, status_code: %s, code: %s, message: %s' %
              (rsp.status_code, rsp.code, rsp.message))


# Get asynchronous task information
def fetch_task_status(task):
    status = ImageSynthesis.fetch(task=task, api_key=api_key)
    print(status)
    if status.status_code == HTTPStatus.OK:
        print(status.output.task_status)
    else:
        print('Failed, status_code: %s, code: %s, message: %s' %
              (status.status_code, status.code, status.message))


# Cancel the asynchronous task. Only tasks in the PENDING state can be canceled.
def cancel_task(task):
    rsp = ImageSynthesis.cancel(task=task, api_key=api_key)
    print(rsp)
    if rsp.status_code == HTTPStatus.OK:
        print(rsp.output.task_status)
    else:
        print('Failed, status_code: %s, code: %s, message: %s' %
              (rsp.status_code, rsp.code, rsp.message))


if __name__ == '__main__':
    async_call()

Response example

1. Response example for a task creation request

{

"status_code": 200,

"request_id": "31b04171-011c-96bd-ac00-f0383b669cc7",

"code": "",

"message": "",

"output": {

"task_id": "4f90cf14-a34e-4eae-xxxxxxxx",

"task_status": "PENDING",

"results": []

},

"usage": null

}

2. Response example for a task query request

The URL is valid for 24 hours. Download the image promptly.

{

"status_code": 200,

"request_id": "8ad45834-4321-44ed-adf5-xxxxxx",

"code": null,

"message": "",

"output": {

"task_id": "3aff9ebd-35fc-4339-98a3-xxxxxx",

"task_status": "SUCCEEDED",

"results": [

{

"url": "https://dashscope-result-sh.oss-cn-shanghai.aliyuncs.com/xxx.png?Expires=xxx",

"orig_prompt": "Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.",

"actual_prompt": "Place the blue alarm clock from Image 1 to the right of the vase on the dining table in Image 2, near the edge of the tablecloth. Keep the alarm clock facing the camera, parallel to the table, with its shadow naturally cast on the table."

}

],

"submit_time": "2025-10-23 16:18:16.009",

"scheduled_time": "2025-10-23 16:18:16.040",

"end_time": "2025-10-23 16:19:09.591",

"task_metrics": {

"TOTAL": 1,

"FAILED": 0,

"SUCCEEDED": 1

}

},

"usage": {

"image_count": 1

}

}

Java

This example passes an image using a public URL by default.

Request example

// Copyright (c) Alibaba, Inc. and its affiliates.

import com.alibaba.dashscope.aigc.imagesynthesis.ImageSynthesis;

import com.alibaba.dashscope.aigc.imagesynthesis.ImageSynthesisListResult;

import com.alibaba.dashscope.aigc.imagesynthesis.ImageSynthesisParam;

import com.alibaba.dashscope.aigc.imagesynthesis.ImageSynthesisResult;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.task.AsyncTaskListParam;

import com.alibaba.dashscope.utils.Constants;

import com.alibaba.dashscope.utils.JsonUtils;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

public class Image2Image {

static {

// The following is the URL for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/api/v1

Constants.baseHttpApiUrl = "https://dashscope-intl.aliyuncs.com/api/v1";

}

// If you have not configured an environment variable, replace the following line with your Model Studio API key: apiKey="sk-xxx"

// The API keys for the Singapore and China (Beijing) regions are different. Get an API key: https://www.alibabacloud.com/help/en/model-studio/get-api-key

static String apiKey = System.getenv("DASHSCOPE_API_KEY");

//Public URL

static String imageUrl_1 = "https://img.alicdn.com/imgextra/i3/O1CN0157XGE51l6iL9441yX_!!6000000004770-49-tps-1104-1472.webp";

static String imageUrl_2 = "https://img.alicdn.com/imgextra/i3/O1CN01SfG4J41UYn9WNt4X1_!!6000000002530-49-tps-1696-960.webp";

// Set the list of images to be edited

static List<String> imageUrls = new ArrayList<>();

static {

imageUrls.add(imageUrl_1);

imageUrls.add(imageUrl_2);

}

public static void asyncCall() {

// Set the parameters

Map<String, Object> parameters = new HashMap<>();

parameters.put("prompt_extend", true);

parameters.put("watermark", false);

parameters.put("seed", "12345");

ImageSynthesisParam param =

ImageSynthesisParam.builder()

.apiKey(apiKey)

.model("wan2.5-i2i-preview")

.prompt("Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.")

.images(imageUrls)

.n(1)

//.size("1280*1280")

.negativePrompt("")

.parameters(parameters)

.build();

ImageSynthesis imageSynthesis = new ImageSynthesis();

ImageSynthesisResult result = null;

try {

System.out.println("---async call, please wait a moment----");

result = imageSynthesis.asyncCall(param);

} catch (ApiException | NoApiKeyException e){

throw new RuntimeException(e.getMessage());

}

System.out.println(JsonUtils.toJson(result));

String taskId = result.getOutput().getTaskId();

System.out.println("taskId=" + taskId);

try {

result = imageSynthesis.wait(taskId, apiKey);

} catch (ApiException | NoApiKeyException e){

throw new RuntimeException(e.getMessage());

}

System.out.println(JsonUtils.toJson(result));

System.out.println(JsonUtils.toJson(result.getOutput()));

}

public static void listTask() throws ApiException, NoApiKeyException {

ImageSynthesis is = new ImageSynthesis();

AsyncTaskListParam param = AsyncTaskListParam.builder().build();

param.setApiKey(apiKey);

ImageSynthesisListResult result = is.list(param);

System.out.println(result);

}

public void fetchTask(String taskId) throws ApiException, NoApiKeyException {

ImageSynthesis is = new ImageSynthesis();

// If you have set DASHSCOPE_API_KEY as an environment variable, you can leave apiKey empty.

ImageSynthesisResult result = is.fetch(taskId, apiKey);

System.out.println(result.getOutput());

System.out.println(result.getUsage());

}

public static void main(String[] args) {

asyncCall();

}

}

Response example

1. Response example for a task creation request

{

"request_id": "5dbf9dc5-4f4c-9605-85ea-542f97709ba8",

"output": {

"task_id": "7277e20e-aa01-4709-xxxxxxxx",

"task_status": "PENDING"

}

}

2. Response example for a task query request

The URL is valid for 24 hours. Download the image promptly.

{

"request_id": "d362685b-757f-4eac-bab5-xxxxxx",

"output": {

"task_id": "bfa7fc39-3d87-4fa7-b1e6-xxxxxx",

"task_status": "SUCCEEDED",

"results": [

{

"orig_prompt": "Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.",

"actual_prompt": "Place the blue alarm clock from Image 1 to the right of the vase on the dining table in Image 2, near the edge of the tablecloth. Keep the front of the alarm clock facing the camera, parallel to the vase.",

"url": "https://dashscope-result-sh.oss-cn-shanghai.aliyuncs.com/xxx.png?Expires=xxx"

}

],

"task_metrics": {

"TOTAL": 1,

"SUCCEEDED": 1,

"FAILED": 0

}

},

"usage": {

"image_count": 1

}

}

curl

This example includes two steps: creating a task and querying the result.

For asynchronous invocations, you must set the X-DashScope-Async header parameter to enable.

The task_id for an asynchronous task is valid for queries for 24 hours. After this period, the task status changes to UNKNOWN.
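For readers who prefer Python over curl, the header requirement can be sketched with the standard library alone. This is a minimal illustration, not the SDK's implementation: it builds the request without sending it, uses the same Singapore-region endpoint and body as the curl request in Step 1, and the function name `build_create_task_request` is hypothetical.

```python
import json
import urllib.request

# Singapore-region endpoint, the same one used by the curl request in Step 1.
CREATE_TASK_URL = (
    "https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/image2image/image-synthesis"
)


def build_create_task_request(api_key, prompt, images, n=1):
    """Build (but do not send) the POST request that creates an asynchronous task."""
    body = {
        "model": "wan2.5-i2i-preview",
        "input": {"prompt": prompt, "images": list(images)},
        "parameters": {"n": n},
    }
    return urllib.request.Request(
        CREATE_TASK_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "X-DashScope-Async": "enable",  # required for asynchronous invocation
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; the task_id is then read from the `output` field of the JSON response.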

Step 1: Send a request to create a task

This request returns a task ID (task_id).

Request example

curl --location 'https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/image2image/image-synthesis' \

-H 'X-DashScope-Async: enable' \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "wan2.5-i2i-preview",

"input": {

"prompt": "Place the alarm clock from Image 1 next to the vase on the dining table in Image 2.",

"images": [

"https://img.alicdn.com/imgextra/i3/O1CN0157XGE51l6iL9441yX_!!6000000004770-49-tps-1104-1472.webp",

"https://img.alicdn.com/imgextra/i3/O1CN01SfG4J41UYn9WNt4X1_!!6000000002530-49-tps-1696-960.webp"

]

},

"parameters": {

"n": 1

}

}'

Response example

{

"output": {

"task_status": "PENDING",

"task_id": "0385dc79-5ff8-4d82-bcb6-xxxxxx"

},

"request_id": "4909100c-7b5a-9f92-bfe5-xxxxxx"

}

Step 2: Query the result by task ID

Use the task_id from the previous step to poll the task status through the API until task_status becomes SUCCEEDED or FAILED.
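The polling loop described above can be sketched as follows. The status lookup is injected as a callable so the loop stays independent of any HTTP client. The 5-second interval, the 60-attempt limit, and the name `poll_task` are assumptions for illustration, not values taken from the API.

```python
import time

# The two statuses that end polling, as described in the text above.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED"}


def poll_task(fetch_status, interval_seconds=5.0, max_attempts=60, sleep=time.sleep):
    """Call fetch_status() until it returns a terminal status or attempts run out.

    fetch_status is any zero-argument callable that returns the current
    task_status string, for example a wrapper around the task query request.
    """
    for attempt in range(max_attempts):
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        # Do not sleep after the final attempt; fall through to the timeout.
        if attempt < max_attempts - 1:
            sleep(interval_seconds)
    raise TimeoutError(f"task did not finish after {max_attempts} polls")
```

Bounding the number of attempts keeps a stuck task from blocking the caller indefinitely.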

Request example

Replace 86ecf553-d340-4e21-xxxxxxxxx with the actual task ID.

The API keys for the Singapore and Beijing regions are different. Create an API key.

The following `base_url` is for the Singapore region. For models in the Beijing region, replace the `base_url` with `https://dashscope.aliyuncs.com/api/v1/tasks/86ecf553-d340-4e21-xxxxxxxxx`.

curl -X GET https://dashscope-intl.aliyuncs.com/api/v1/tasks/86ecf553-d340-4e21-xxxxxxxxx \

--header "Authorization: Bearer $DASHSCOPE_API_KEY"

Sample responses

The image URL is valid for 24 hours. Please download the image promptly.

{

"request_id": "d1f2a1be-9c58-48af-b43f-xxxxxx",

"output": {

"task_id": "7f4836cd-1c47-41b3-b3a4-xxxxxx",

"task_status": "SUCCEEDED",

"submit_time": "2025-09-23 22:14:10.800",

"scheduled_time": "2025-09-23 22:14:10.825",

"end_time": "2025-09-23 22:15:23.456",

"results": [

{

"orig_prompt": "Place the alarm clock from image 1 next to the vase on the dining table in image 2.",

"url": "https://dashscope-result-sh.oss-cn-shanghai.aliyuncs.com/xxx.png?Expires=xxx"

}

],

"task_metrics": {

"TOTAL": 1,

"FAILED": 0,

"SUCCEEDED": 1

}

},

"usage": {

"image_count": 1

}

}

Model availability

For a list of models and their prices, see Wan2.5 general image editing.

Input parameters

Input images (images)

images is an array that supports passing one to three images for editing or fusion. The input images must meet the following requirements:

Image format: JPEG, JPG, PNG (alpha channel is not supported), BMP, and WEBP are supported.

Image resolution: The width and height of the image must both be between 384 and 5,000 pixels.

File size: A single image file cannot exceed 10 MB.
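A client-side pre-check of these limits might look like the sketch below. It takes the format, dimensions, and file size as plain values, so it needs no imaging library. The function names are hypothetical, 1 MB is assumed to mean 1024 × 1024 bytes, and the PNG alpha-channel restriction is not checked.

```python
SUPPORTED_FORMATS = {"JPEG", "JPG", "PNG", "BMP", "WEBP"}
MIN_SIDE, MAX_SIDE = 384, 5000
MAX_FILE_BYTES = 10 * 1024 * 1024  # assumes 1 MB = 1024 * 1024 bytes


def check_input_image(fmt, width, height, size_bytes):
    """Return a list of human-readable problems; an empty list means the image passes."""
    problems = []
    if fmt.upper() not in SUPPORTED_FORMATS:
        problems.append(f"unsupported format: {fmt}")
    for name, value in (("width", width), ("height", height)):
        if not MIN_SIDE <= value <= MAX_SIDE:
            problems.append(f"{name} {value} is outside {MIN_SIDE}-{MAX_SIDE} pixels")
    if size_bytes > MAX_FILE_BYTES:
        problems.append("file is larger than 10 MB")
    return problems


def check_image_list(images):
    """Check a list of (fmt, width, height, size_bytes) tuples against all limits."""
    if not 1 <= len(images) <= 3:
        return [f"expected 1 to 3 images, got {len(images)}"]
    return [problem for image in images for problem in check_input_image(*image)]
```

Running the check before upload avoids spending a request on an image the service would reject.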

// This example only shows the structure of the images field. The input contains 2 images.

{

"images": [

"https://img.alicdn.com/imgextra/i4/O1CN01TlDlJe1LR9zso3xAC_!!6000000001295-2-tps-1104-1472.png",

"https://img.alicdn.com/imgextra/i4/O1CN01M9azZ41YdblclkU6Z_!!6000000003082-2-tps-1696-960.png"

]

}

Image input order

For multi-image input, the order of the images is defined by their sequence in the array. Therefore, the image numbers referenced in the prompt must correspond to the order in the image array. For example, the first image in the array is "image 1", the second is "image 2", or you can use markers such as "[Image 1]" and "[Image 2]".

Input images | Output images |

Image 1, Image 2 | Prompt: Move image 1 onto image 2 |

Image 1, Image 2 | Prompt: Move image 2 onto image 1 |
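Because the numbering is positional, a prompt that mentions an image number with no matching array entry is probably a mistake. The sketch below, with hypothetical helper names, extracts references such as "image 1" or "[Image 2]" from a prompt and reports any that fall outside the array.

```python
import re

# Matches "image 1", "Image 2", and "[Image 3]" style references.
IMAGE_REF = re.compile(r"\bimage\s*(\d+)\b", re.IGNORECASE)


def referenced_image_numbers(prompt):
    """Return the sorted, de-duplicated image numbers that a prompt mentions."""
    return sorted({int(number) for number in IMAGE_REF.findall(prompt)})


def out_of_range_references(prompt, images):
    """Return referenced numbers that have no matching position in the images array."""
    return [n for n in referenced_image_numbers(prompt) if not 1 <= n <= len(images)]
```

For example, a prompt that mentions image 2 while the array holds only one image yields `[2]`.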

Image input methods

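This model supports three methods for passing images: a public URL, a local file path (prefixed with file://), and a Base64-encoded string in the format data:{MIME_type};base64,{base64_data}.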

Optional parameters

This model also provides several optional parameters to adjust the image generation effect. The most important parameters include the following:

size: Specifies the resolution of the output image in pixels, using the width*height format. The default value is 1280*1280. The resolution must be between 768×768 and 1280×1280 pixels. The aspect ratio must be between 1:4 and 4:1.

If you do not specify the size parameter, the system generates an image of 1280×1280 pixels by default and attempts to maintain the aspect ratio of the input image:

For single-image input, the aspect ratio is the same as the input image.

For multi-image input, the aspect ratio is the same as the last image in the input array.

n: Sets the number of output images. The value can be an integer from 1 to 4. The default is 4. We recommend setting it to 1 for testing.

negative_prompt: A negative prompt describes content that you do not want to appear in the image, such as "blurry" or "extra fingers". This parameter is used only to help optimize the generation quality.

watermark: Specifies whether to add a watermark. The watermark is located in the lower-right corner of the image, saying "AI-generated". The default is false, which means no watermark is added.

seed: A random number seed. The value must be within the range of [0, 2147483647]. If you do not provide a seed, the algorithm automatically generates a random number to use. To keep the generated content relatively consistent, use the same seed value for each request.

Note: Because model generation is probabilistic, using the same seed does not guarantee that the results will be identical every time.
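The ranges above can be checked locally before a request is sent. The sketch below is illustrative only and makes one loudly stated assumption: the 768×768 to 1280×1280 limit is read as a bound on the total pixel count (width × height), which the text does not state explicitly. The function names are hypothetical.

```python
MIN_PIXELS = 768 * 768
MAX_PIXELS = 1280 * 1280
MAX_SEED = 2147483647


def check_size(size):
    """Validate a "width*height" string; return None if valid, else a message."""
    try:
        width, height = (int(part) for part in size.split("*"))
    except ValueError:
        return "size must look like 1280*1280"
    if width <= 0 or height <= 0:
        return "width and height must be positive"
    # Assumption: the 768x768 to 1280x1280 limit is a bound on total pixels.
    if not MIN_PIXELS <= width * height <= MAX_PIXELS:
        return "total pixels out of range"
    if not 0.25 <= width / height <= 4:
        return "aspect ratio must be between 1:4 and 4:1"
    return None


def check_n_and_seed(n=4, seed=None):
    """Return a list of problems with n (1 to 4) and seed (0 to 2147483647)."""
    problems = []
    if not 1 <= n <= 4:
        problems.append("n must be an integer from 1 to 4")
    if seed is not None and not 0 <= seed <= MAX_SEED:
        problems.append("seed must be within [0, 2147483647]")
    return problems
```

If the service instead applies the limit to each side separately, only the pixel-count comparison in `check_size` needs to change.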

Output parameters

Image format: PNG.

Number of images: One to four.

Image resolution: You can specify the resolution using the size input parameter. If this parameter is not specified, see the description of the size parameter.

All output images will have the same resolution.

Examples

The Wan2.5 image editing model does not currently support generating image sets.

Multi-image fusion

Input images | Output image |

|

Transfer the starry night painting from image 2 onto the phone case in image 1. |

|

Use the font and style of the text "Wan2.5" from [Image 1] as the sole reference. Keep the stroke width and curves unchanged. Precisely transfer the layered paper texture from [Image 2] to the text. |

|

Use two reference images for targeted redrawing: Use [Image 2] as a reference for the bag's shape and structure. Use the parrot feathers in [Image 1] as a reference for color and texture. Precisely transfer the color and texture of the parrot feathers to the surface material of the bag in [Image 2]. |

|

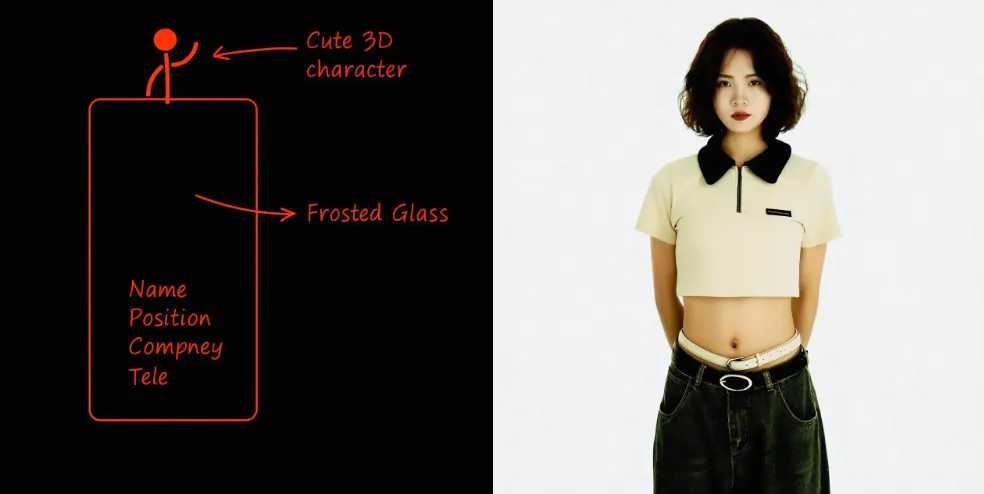

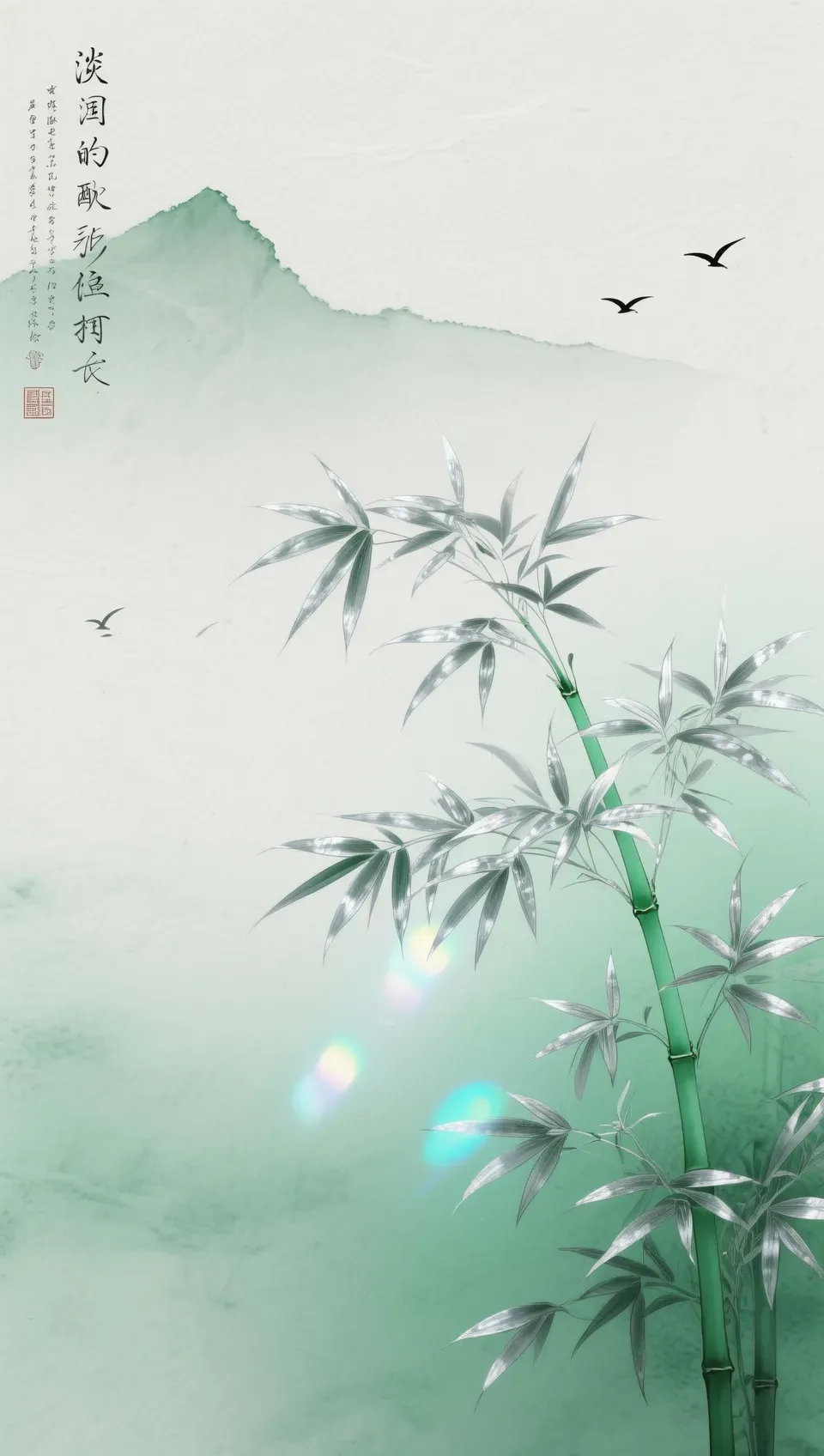

Use the composition and frosted glass texture of the business card design in [Image 1] as a template to generate a vertical work card for the person in [Image 2]. The card has rounded corners and is semi-transparent, with soft highlights and a light shadow. The person's bust is placed in the upper-middle area. The name, position, company, and phone number are arranged in the lower-left corner using a minimalist, sans-serif font with balanced white space. In the upper-right corner, place a cute 3D cartoon image of the person from [Image 1], breaking the border to semi-float out of the card and cast a light shadow, creating layers and a visual focus. The overall lighting is bright and natural, with realistic material details. Do not add any extra patterns or elements. |

Image understanding

Object detection and localization

Detects specified objects in an image and outputs an image with bounding boxes and labels.

Input image | Output image |

|

Detect the sunflowers and pumpkins in the image. Draw bounding boxes and add labels. |

Instance segmentation

The model generates a unique color region for each individual object in the image, showing its complete outline. For example, the purple area in the output image represents the segmented headphone instance.

Input image | Output image |

|

Segment the headphones in the image. |

Subject feature preservation

Input image | Output image |

|

Generate a highly detailed photo of a girl cosplaying this illustration, at Comiket. It must be a real person cosplaying. Exactly replicate the same pose, body posture, hand gestures, facial expression, and camera framing as in the original illustration. Keep the same angle, perspective, and composition, without any deviation. |

|

Generate three instances of the person from the photo in an office scene. One is sitting at a desk working. Another is standing and drinking coffee. The third is leaning against the desk reading a book. All three people are the same person from the photo. |

|

Generate a four-panel image set from this photo. In the first panel, the girl faces the camera. In the second, she raises her right hand to brush her hair. In the third, she waves enthusiastically to the viewer. In the fourth, she smiles with her eyes closed. |

Modification and upscaling

Feature | Input image | Output image |

Modify element |

|

Change the floral dress to a vintage-style lace long dress with exquisite embroidery details on the collar and cuffs. |

Modify lighting |

|

Add small, sparkling highlights to the surface of the starfish to make it glow against the sunset background. |

Upscale subject element |

|

Enlarge the cow in the image. |

Upscale image details |

|

Zoom in and show the branch the parrot is perched on. |

Element extraction

Input image | Output image |

|

Extract all the clothing items worn by the person in the image. Arrange them neatly on a white background. |

Sketch-to-image generation

Input image | Output image |

|

Generate a new real image based on this sketch, a charming Mediterranean village scene with rustic stone buildings featuring terracotta roofs, wooden shutters, and balconies adorned with hanging laundry. The foreground is filled with lush greenery, including climbing vines and blooming flowers, creating a vibrant and lively atmosphere. The architecture showcases intricate details like arched doorways and patterned stonework, evoking a sense of history and warmth], [a bright, sunny day with clear blue skies, casting soft shadows across the cobblestone streets and enhancing the warm tones of the buildings. In the background, rolling hills and distant mountains provide a picturesque setting, adding depth and serenity to the scene. |

Text generation

Input image | Output image |

|

Add the title "微距摄影" in large, rounded, white font to the upper-left corner of the image. Below the title, add the subtitle "勤劳就有收获" in a smaller, light green font. |

Watermark removal

Input image | Output image |

|

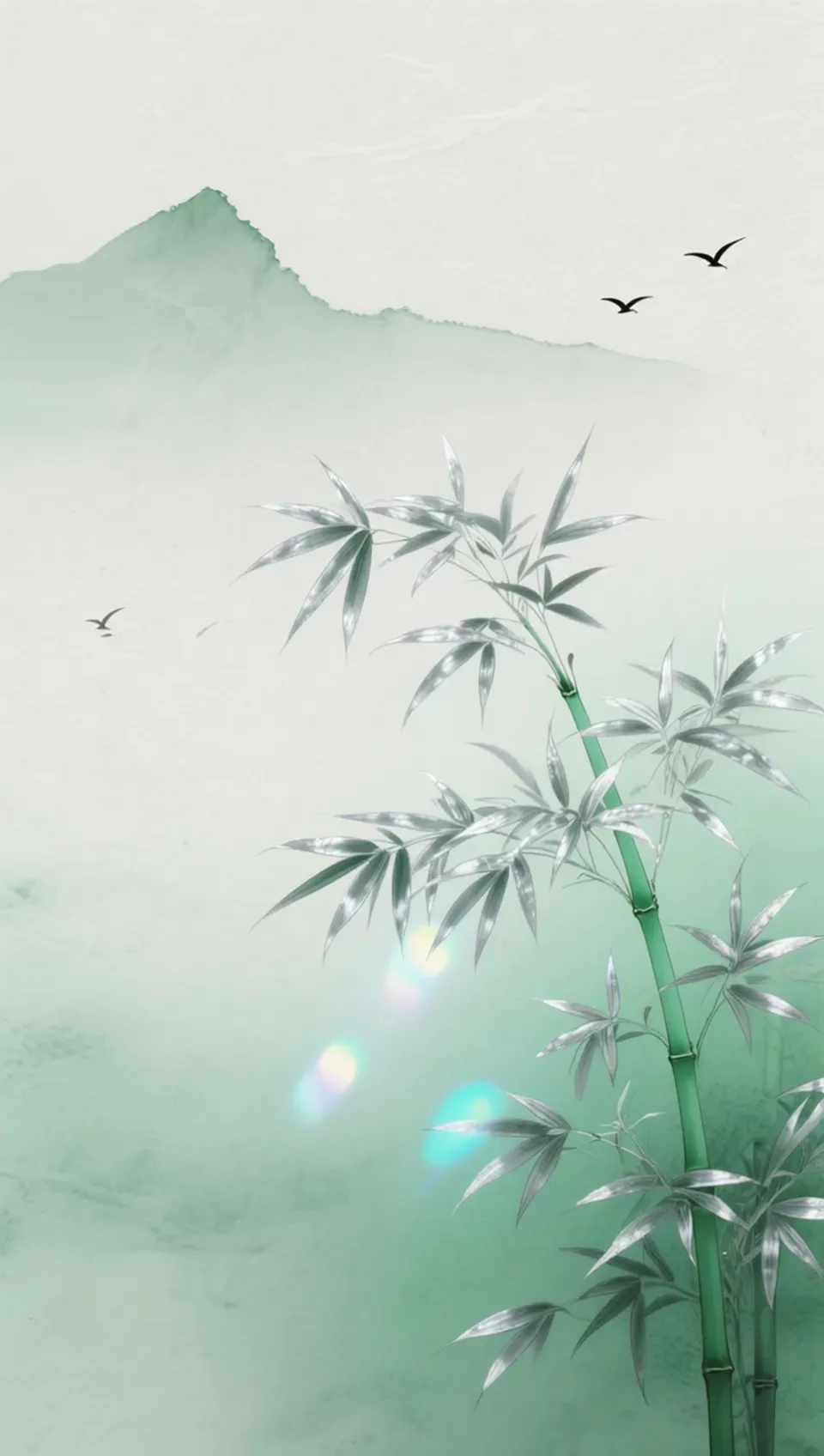

Remove all text from the upper-left corner. |

Outpainting

Input image | Output image |

|

Outpaint the image to generate a long shot photo. |

Billing and rate limiting

For free quotas and unit prices, see Models and pricing.

For rate limits, see Wan.

Billing description:

Billing is based on the number of successfully generated images. You are charged only when the API returns a task_status of SUCCEEDED and an image is successfully generated.

Failed model calls or processing errors do not incur any fees and do not consume your free quota.
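As a rough illustration of the billing rule, the sketch below reads the billable image count from a task query response shaped like the response examples on this page. The helper name `billable_images` is hypothetical, and unit prices are deliberately left out.

```python
def billable_images(response):
    """Return how many images a task query response would be billed for."""
    output = response.get("output") or {}
    if output.get("task_status") != "SUCCEEDED":
        return 0  # failed or unfinished tasks are not charged
    usage = response.get("usage") or {}
    return int(usage.get("image_count", 0))
```

Summing this value over a batch of responses gives the number of images to multiply by the applicable unit price.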

API reference

For input and output parameters, see Wan - general image editing 2.5 API reference.

FAQ

Q: I was using Wan2.1 general image editing. If I switch to Wan2.5, do I need to change how I call the SDK?

A: Yes. The parameter design differs between the two versions:

General image editing 2.1: Requires both the prompt and function parameters.

General image editing 2.5: Requires only the prompt parameter. Describe all editing operations through text instructions. The function parameter is no longer supported or required.