Chatbox is an AI client and smart assistant that lets you chat with large language models without configuring a computing environment.

Prerequisites

Create an API key and make sure that you have activated Alibaba Cloud Model Studio.

Select a text generation model from the Model list. If you are a RAM user, see Workspace management to ensure that you have permission to call the model.

Model support may vary because Chatbox features are subject to change.

1. Open Chatbox

Go to Chatbox. Download and install the version for your device, or click Launch Web App.

2. Configure the model and API key

2.1. Select a Custom Provider

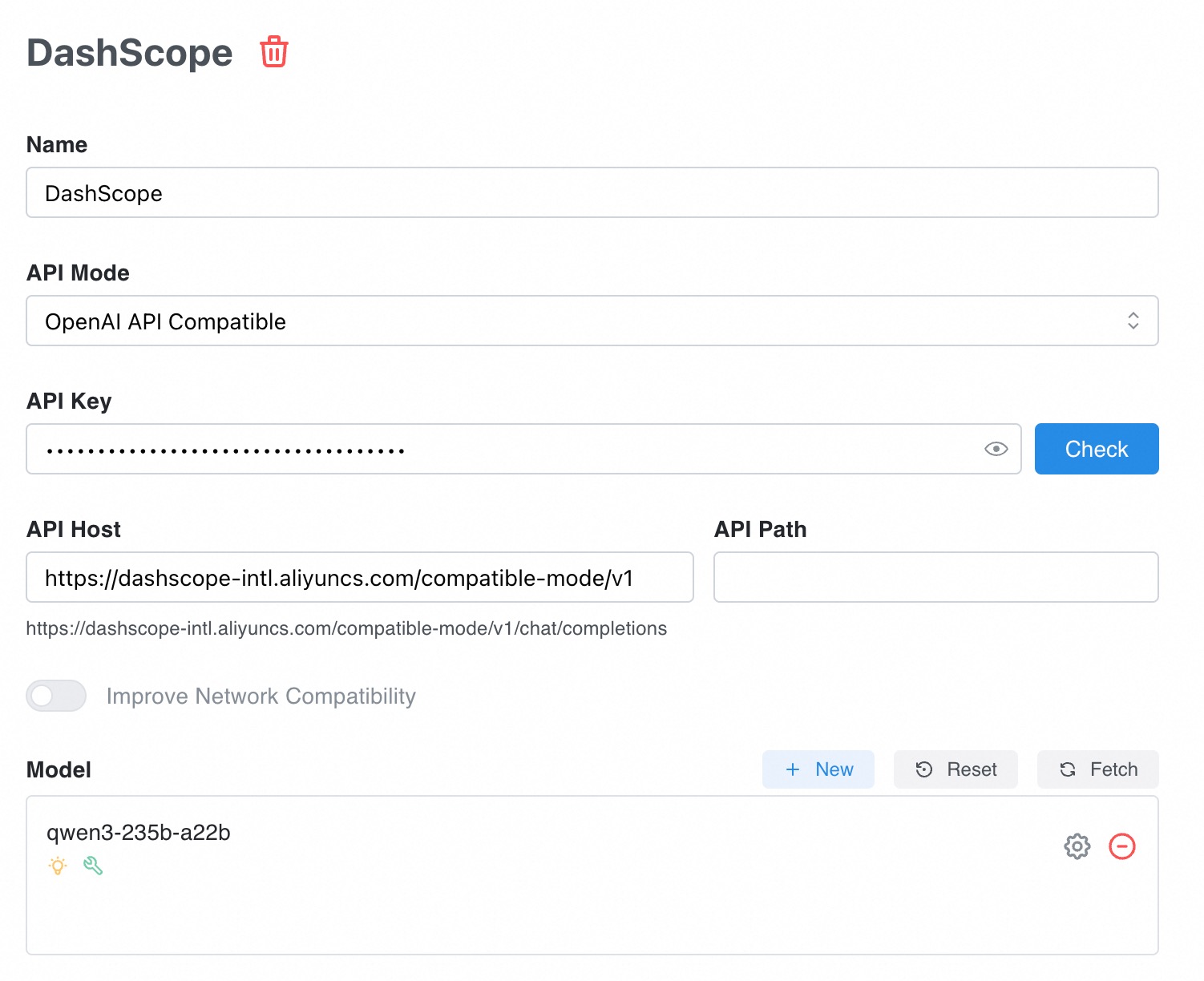

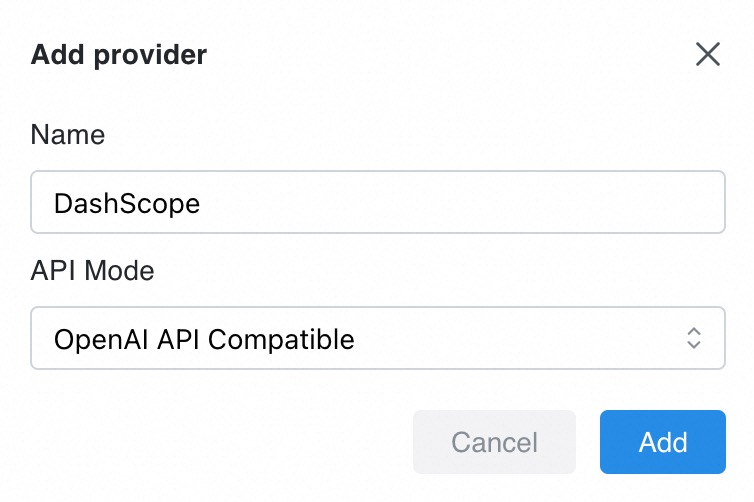

In the lower-left corner of the Chatbox page, click Settings, then Model Provider, and then click Add at the bottom of the list.

In the dialog box, set Name to "DashScope" and API Mode to OpenAI API Compatible. Click Add.
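Selecting OpenAI API Compatible means Chatbox sends requests to Model Studio in the OpenAI Chat Completions format. The sketch below shows roughly what such a request contains. It assumes the international endpoint `https://dashscope-intl.aliyuncs.com/compatible-mode/v1` and uses `qwen-plus` only as an example model name. Neither value comes from this guide, so check the API host and model names that apply to your account and region. `build_chat_request` and `send_chat_request` are hypothetical helpers, not part of Chatbox.

```python
import json
import urllib.request

# Assumption: the international endpoint of Model Studio's OpenAI-compatible mode.
# Accounts in the Chinese mainland region would typically use
# https://dashscope.aliyuncs.com/compatible-mode/v1 instead.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"


def build_chat_request(api_key, model, messages, temperature=0.7, top_p=0.8):
    """Return (url, headers, body) for an OpenAI-style Chat Completions call."""
    url = f"{BASE_URL}/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",  # the Model Studio API key
        "Content-Type": "application/json",
    }
    payload = {
        "model": model,          # a model name that you added in Chatbox
        "messages": messages,    # earlier messages plus the new question
        "temperature": temperature,
        "top_p": top_p,
    }
    return url, headers, json.dumps(payload).encode("utf-8")


def send_chat_request(url, headers, body):
    """POST the request and return the decoded JSON response (needs network access)."""
    req = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `build_chat_request(key, "qwen-plus", [{"role": "user", "content": "Who are you?"}])` builds a request similar to the one made when you type that question in Chatbox. Passing the result to `send_chat_request` calls the API and consumes tokens.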

2.2. Configure the model and API key


2.3. Chat settings

After configuring the model and API key, click Chat Settings in the navigation pane on the left. Set Max Message Count in Context, Temperature, and Top P:

Max Message Count in Context

This parameter specifies the number of previous conversation turns that the model considers for each new question. For casual chats, a value of 5 to 10 is recommended. Including too many context messages can cause the following error:

Range of input length should be [1, xxx]

Temperature

Controls the diversity of the text that the model generates.

A higher Temperature produces more diverse text, making it ideal for scenarios such as content creation and brainstorming.

A lower Temperature produces more deterministic text, which is ideal for scenarios such as code writing and mathematical reasoning.

Set this parameter to a number less than 2. Otherwise, the following error is reported:

'temperature' must be Float

Top P

This parameter is similar to temperature and also controls the diversity of the generated text.

Set this parameter to a number that is not greater than 1. Otherwise, the following error is reported: "xx is greater than the maximum of 1 - 'top_p'".
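The two ranges above can be checked before a request is sent. This minimal sketch encodes the upper bounds stated in this guide (Temperature below 2, Top P no greater than 1). The lower bounds (Temperature at least 0, Top P above 0) are assumptions added for completeness. `check_sampling_params` is a hypothetical helper.

```python
def check_sampling_params(temperature: float, top_p: float) -> list:
    """Return a list of problems; an empty list means both values pass these checks."""
    problems = []
    # Stated in this guide: Temperature must be less than 2.
    # Assumed here: Temperature must also be non-negative.
    if not 0 <= temperature < 2:
        problems.append(f"temperature={temperature} should be in [0, 2)")
    # Stated in this guide: Top P must not be greater than 1.
    # Assumed here: Top P must be greater than 0.
    if not 0 < top_p <= 1:
        problems.append(f"top_p={top_p} should be in (0, 1]")
    return problems
```

For example, `check_sampling_params(0.7, 0.8)` returns an empty list, while `check_sampling_params(2.0, 0.8)` flags the temperature value that would trigger the 'temperature' must be Float error.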

3. Start a chat

Enter a question in the dialog box to start the chat.

Note: You cannot use video or audio files in the chat.

Standard chat

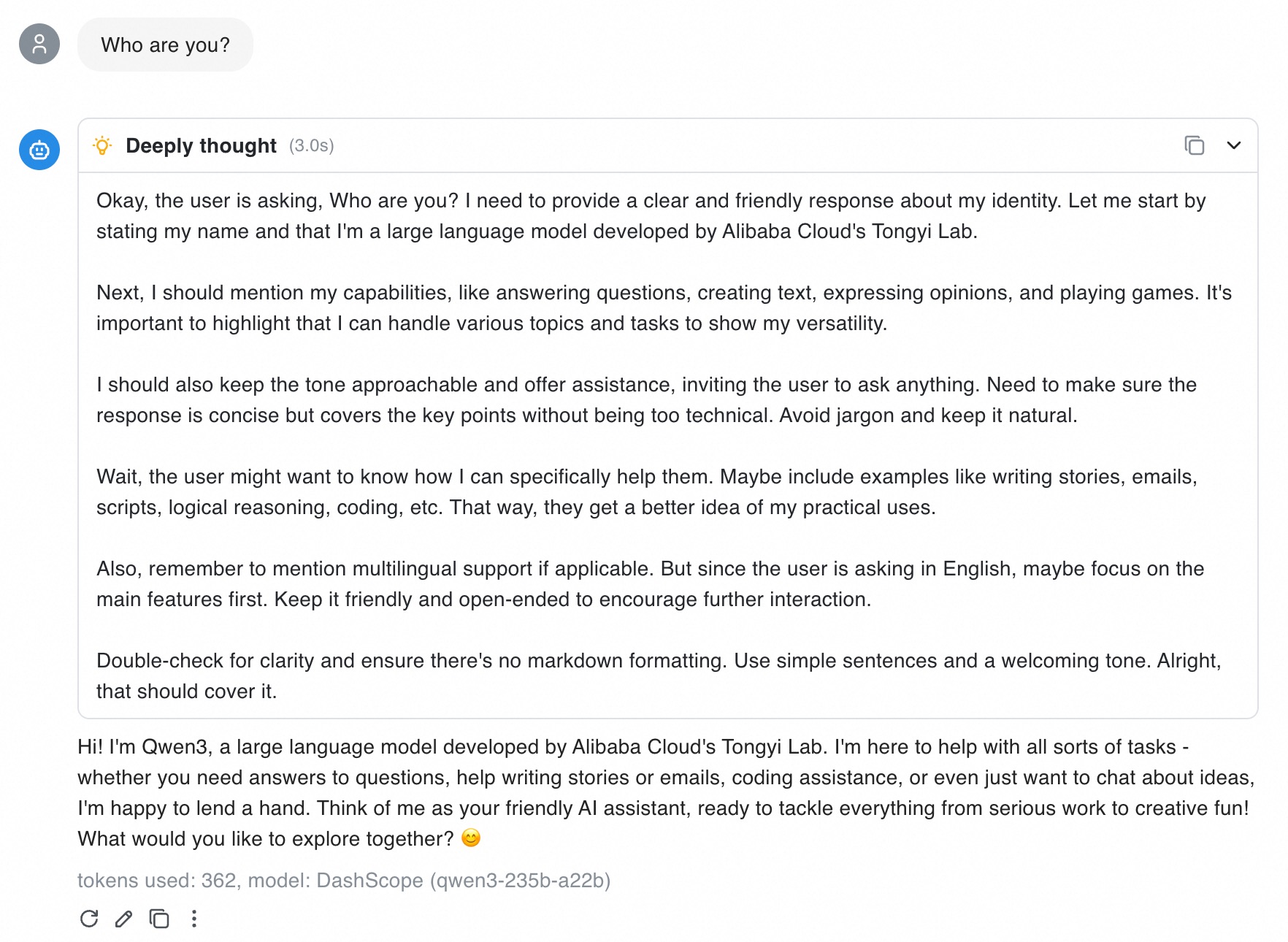

To test the chat, enter "Who are you?" in the input box.

Chatbox displays the thinking process and the response from the Qwen3 model.

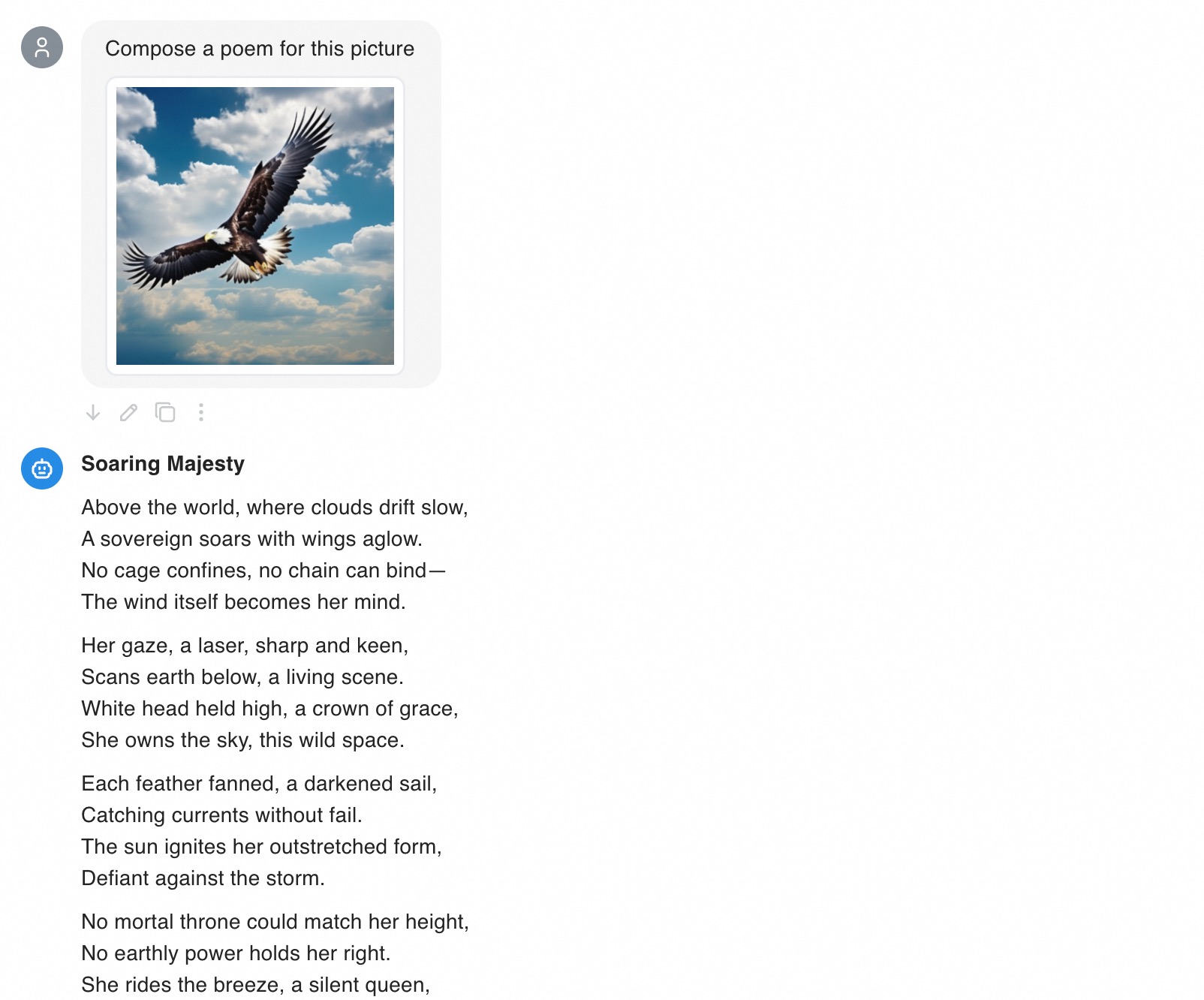

Use an image

1. Select a model

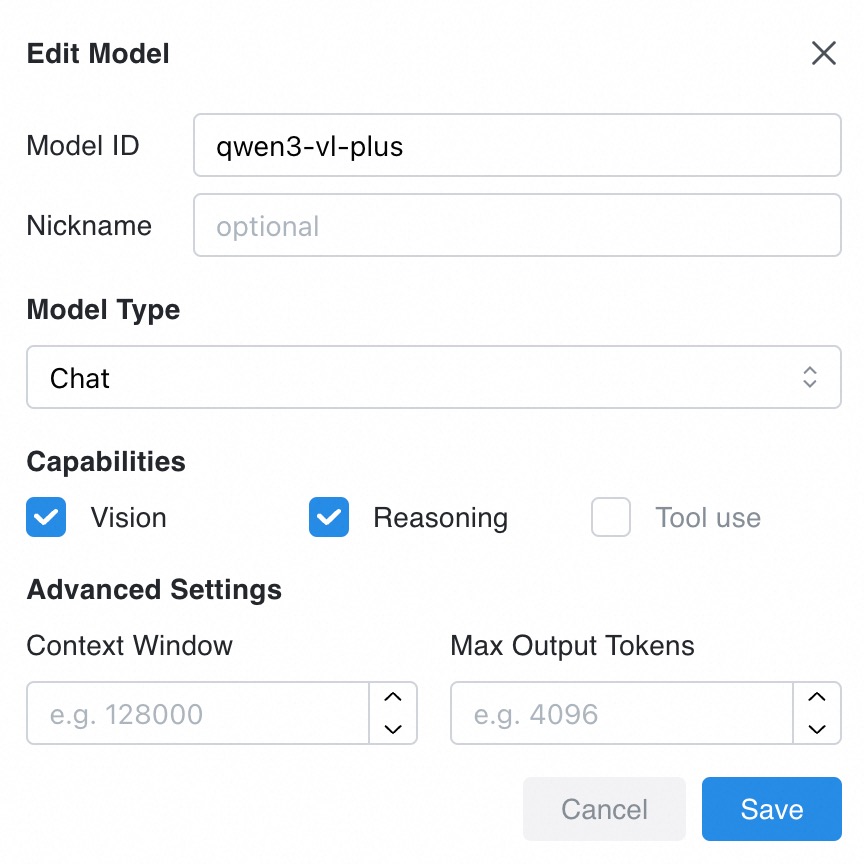

For image Q&A, you must use a model with visual capabilities. During configuration, select a Qwen-VL, QVQ, or Qwen-Omni model.

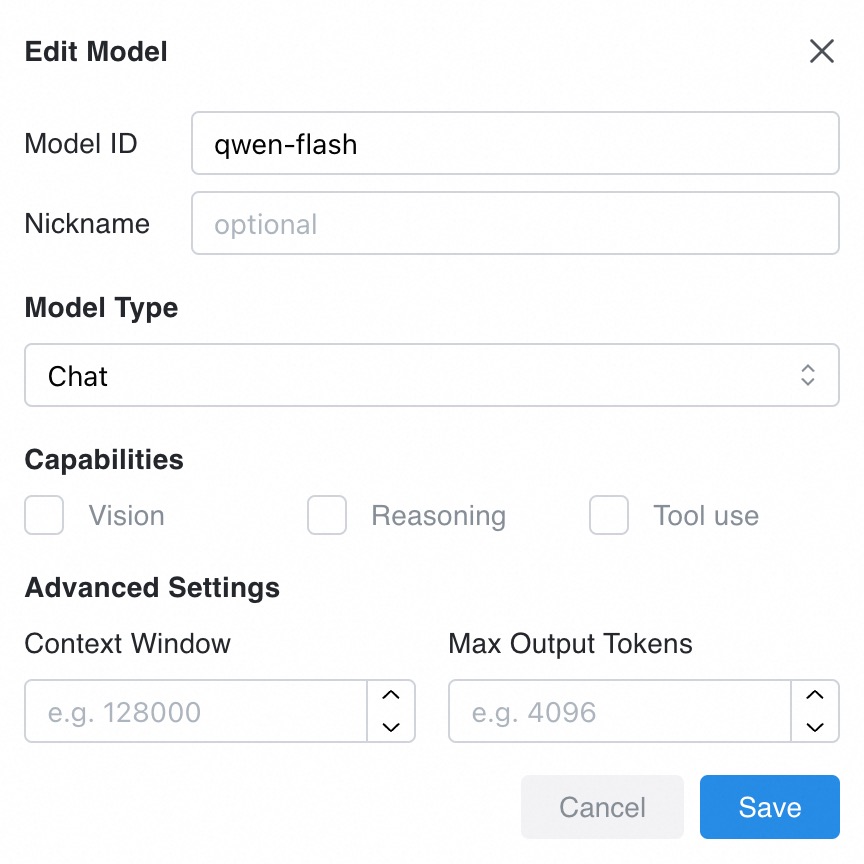

In step 2.2 (Configure the model and API key), add a visual model in the Model section and select the Vision capability.

2. Start the chat

Next to the Send button, select the visual model that you added. Enter a question in the input box, click the attachment icon, and then click Add Image to upload an image.

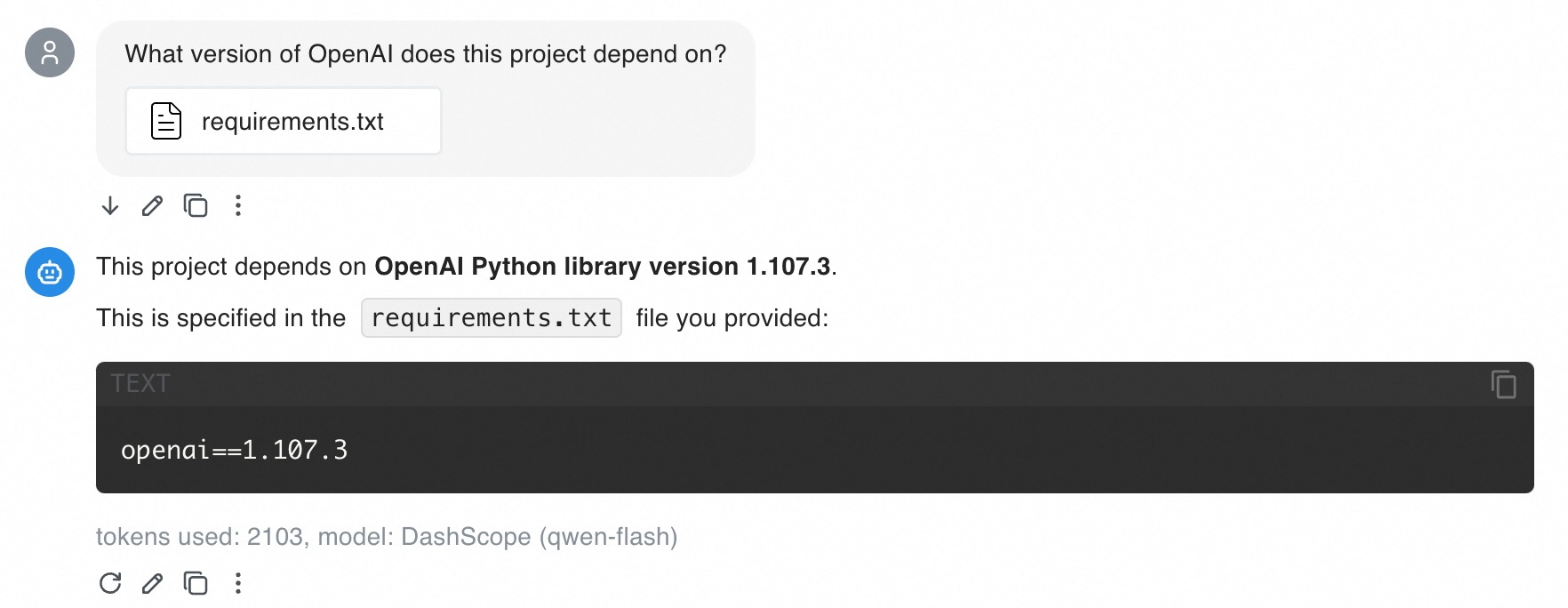
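With an OpenAI-compatible provider, an image is commonly sent as an `image_url` content part, often a base64 data URL, next to the text of the question. The sketch below assumes that message format and that the selected visual model (for example, a Qwen-VL model) accepts it. Chatbox's actual request may differ, and `image_message` is a hypothetical helper.

```python
import base64


def image_message(question: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build one user message that pairs a question with an image given as raw bytes."""
    if not mime_type.startswith("image/"):
        raise ValueError(f"expected an image MIME type, got {mime_type!r}")
    # Encode the image as a data URL so it can travel inside the JSON request body.
    data = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{data}"}},
            {"type": "text", "text": question},
        ],
    }
```

To use a local file, read it first, for example with `pathlib.Path("photo.jpg").read_bytes()`, and pass `"image/jpeg"` as the MIME type.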

Use a document

Chatbox supports files such as PDF, DOCX, and TXT. The model can answer questions based on the document you provide.

Note: Chatbox cannot currently parse images within documents.

1. Select a model

Models with long context processing capabilities are suitable for document Q&A. We recommend Qwen-Flash, Qwen-Long, qwen2.5-14b-instruct-1m, or qwen2.5-7b-instruct-1m. These models can process millions of tokens at a lower cost.

In step 2.2 (Configure the model and API key), add the model that you want to use, such as qwen-flash, in the Model section.

2. Start the chat

Next to the Send button, select the model that you added, such as qwen-flash. Enter a question in the input box, click the attachment icon, and then click Select File.

Note: Asking consecutive questions about a document can consume many tokens. To reduce costs, you can either lower the Max Message Count in Context to reduce the number of input tokens, or use qwen-flash. This model supports context cache, which reduces the cost of input tokens in multi-turn conversations.

FAQ

Q: How am I billed?

A: Model Studio bills based on the input and output tokens of the model. For more information about model token costs, see Models.

Note: Multi-turn conversations include historical chat records, which consume more tokens. To reduce token consumption, you can start a new chat or lower the Max Message Count in Context. For casual chats, we recommend setting the Max Message Count in Context to 5-10.
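To see why multi-turn conversations consume more tokens, the sketch below adds up billed input and output tokens when earlier messages are resent with each new question. It assumes that Max Message Count in Context counts individual messages (questions and answers), and it ignores system prompts, formatting overhead, and context cache discounts. `conversation_tokens` is a hypothetical helper, and the token counts in the example are illustrative.

```python
from typing import List, Optional, Tuple


def conversation_tokens(turns: List[Tuple[int, int]],
                        max_context_messages: Optional[int] = None) -> Tuple[int, int]:
    """Estimate total billed (input, output) tokens for a multi-turn chat.

    turns: (question_tokens, answer_tokens) for each turn, in order.
    max_context_messages: how many earlier messages are resent with each new
    question; None means the whole history is resent.
    """
    history: List[int] = []  # token counts of earlier messages, oldest first
    total_in = total_out = 0
    for question, answer in turns:
        if max_context_messages is None:
            context = history
        elif max_context_messages == 0:
            context = []  # note: history[-0:] would return the whole list
        else:
            context = history[-max_context_messages:]
        total_in += sum(context) + question  # resent history is billed as input
        total_out += answer
        history += [question, answer]
    return total_in, total_out
```

With three turns of 100 question tokens and 200 answer tokens each, resending the full history bills 1,200 input tokens, while keeping only the last 2 messages bills 900. The gap widens as the chat gets longer.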

Q: What do I do if Chatbox reports the error "Failed to connect to Custom Provider"?

A: Troubleshoot the issue based on the error message:

Range of input length should be [1, xxx]

This error can occur if your input is too long or if the accumulated context from a multi-turn conversation exceeds the model's context window. Troubleshoot the issue based on your scenario:

Error on the first turn of the conversation

The text you entered may be too long, or the file you provided may contain too many tokens. Use a model with a context length of 1,000,000 tokens, such as qwen-flash or qwen-long.

Error after a multi-turn conversation

The accumulated tokens from the multi-turn conversation may have exceeded the model's context window. Try one of the following solutions:

Start a new chat

The model will not refer to the previous conversation history when it replies.

Reduce the Max Message Count in Context

This makes the model refer to only a limited number of previous messages instead of the entire conversation history.

Change the model

Switch to a model with a context length of 1,000,000 tokens, such as qwen-flash or qwen-long. This lets the model handle a longer context over more conversation turns.
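Max Message Count in Context trims history by a fixed number of messages. A different strategy, shown here only as a sketch and not how Chatbox itself works, is to drop the oldest messages until the request fits a token budget. It assumes per-message token counts are already known and treats `context_window` as the maximum input length (the xxx in the error message). `fit_to_window` is a hypothetical helper.

```python
from typing import List


def fit_to_window(message_tokens: List[int], new_question_tokens: int,
                  context_window: int) -> List[int]:
    """Return the indices of earlier messages to keep so the request fits.

    message_tokens: token counts of earlier messages, oldest first.
    Keeps the most recent messages and drops the oldest ones first.
    Raises ValueError if the new question alone does not fit.
    """
    if new_question_tokens > context_window:
        raise ValueError("the question alone exceeds the limit; "
                         "use a model with a longer context")
    budget = context_window - new_question_tokens
    kept: List[int] = []
    for i in range(len(message_tokens) - 1, -1, -1):  # newest to oldest
        if message_tokens[i] > budget:
            break  # stop at the first message that no longer fits
        budget -= message_tokens[i]
        kept.append(i)
    return sorted(kept)
```

For example, with earlier messages of 500, 300, and 200 tokens, a 100-token question, and a 700-token limit, `fit_to_window` keeps messages 1 and 2 (0-based) and drops the oldest one. Stopping at the first message that does not fit keeps the retained history contiguous, so the model never sees a conversation with a gap in the middle.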

'temperature' must be Float

The model's temperature parameter must be less than 2. In Chat Settings, set Temperature to a value less than 2.

Note: If your issue is not described above, see Error messages for troubleshooting.

Q: What are the limits for uploaded images and documents?

A:

Images: See Visual understanding.

Documents: Chatbox parses the document you provide. The total token length of the parsed text and the context must not exceed the model's context window.