This topic explains how to pass parameters by using a PyODPS node in DataWorks.

Prerequisites

A business flow is created in DataWorks. For more information, see Create a workflow.

Procedure

This example uses a DataWorks workspace in basic mode. By default, Participate in Public Preview of Data Studio is not selected when you create a workspace. This example does not apply to workspaces that participate in the Data Studio public preview.

Prepare test data.

Create a table and upload data. For more information, see Create tables and upload data.

In this example, use the following table creation statements and source data:

The following statement creates the partitioned table user_detail:

    CREATE TABLE IF NOT EXISTS user_detail
    (
        userid    BIGINT COMMENT 'User ID',
        job       STRING COMMENT 'Job type',
        education STRING COMMENT 'Education level'
    )
    COMMENT 'User information table'
    PARTITIONED BY (dt STRING COMMENT 'Date', region STRING COMMENT 'Region');

The following statement creates the source data table user_detail_ods:

    CREATE TABLE IF NOT EXISTS user_detail_ods
    (
        userid    BIGINT COMMENT 'User ID',
        job       STRING COMMENT 'Job type',
        education STRING COMMENT 'Education level',
        dt        STRING COMMENT 'Date',
        region    STRING COMMENT 'Region'
    );

Save the test data as the user_detail.txt file. Upload this file to the user_detail_ods table:

    0001,Internet,Bachelor,20190715,beijing
    0002,Education,junior college,20190716,beijing
    0003,Finance,master,20190715,shandong
    0004,Internet,master,20190715,beijing
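As a quick local check before uploading, the following sketch parses the sample rows and maps each field to the columns of user_detail_ods. It is plain Python meant to run outside DataWorks; the names SAMPLE, COLUMNS, and parse_rows are illustrative assumptions and are not part of DataWorks or PyODPS.

```python
import csv
import io

# Contents of user_detail.txt as listed in this example.
SAMPLE = """0001,Internet,Bachelor,20190715,beijing
0002,Education,junior college,20190716,beijing
0003,Finance,master,20190715,shandong
0004,Internet,master,20190715,beijing
"""

# Column order of the user_detail_ods table.
COLUMNS = ["userid", "job", "education", "dt", "region"]


def parse_rows(text):
    """Return one dict per line and fail early on a wrong field count."""
    rows = []
    for line_no, fields in enumerate(csv.reader(io.StringIO(text)), start=1):
        if len(fields) != len(COLUMNS):
            raise ValueError("line %d has %d fields" % (line_no, len(fields)))
        row = dict(zip(COLUMNS, fields))
        # userid is declared as BIGINT, so parse it as an integer here.
        row["userid"] = int(row["userid"])
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in parse_rows(SAMPLE):
        print(row)
```

A row with a missing or extra field raises ValueError with the offending line number, which is easier to diagnose locally than after an upload.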

Write data from the source data table user_detail_ods to the partitioned table user_detail.

Log on to the DataWorks console.

In the left-side navigation pane, click Workspace.

Find the target workspace, choose in the Actions column.

Right-click the business flow and choose .

Enter a node name and click Confirm.

Enter the following code in the ODPS SQL node:

    INSERT OVERWRITE TABLE user_detail PARTITION (dt, region)
    SELECT userid, job, education, dt, region
    FROM user_detail_ods;

Click Run to complete the data writing.
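The PARTITION (dt, region) clause in this statement names the partition columns without values, so each row is routed to a partition based on the dt and region values at the end of the SELECT list. The following plain-Python sketch, which runs locally and does not call MaxCompute, groups the sample rows the same way to show which partitions to expect. ROWS and group_by_partition are hypothetical names introduced for illustration.

```python
from collections import defaultdict

# Sample rows in the column order userid, job, education, dt, region.
ROWS = [
    (1, "Internet", "Bachelor", "20190715", "beijing"),
    (2, "Education", "junior college", "20190716", "beijing"),
    (3, "Finance", "master", "20190715", "shandong"),
    (4, "Internet", "master", "20190715", "beijing"),
]


def group_by_partition(rows):
    """Group rows by (dt, region), keeping only the non-partition columns."""
    partitions = defaultdict(list)
    for userid, job, education, dt, region in rows:
        partitions[(dt, region)].append((userid, job, education))
    return dict(partitions)


if __name__ == "__main__":
    for (dt, region), members in sorted(group_by_partition(ROWS).items()):
        print("dt=%s,region=%s: %d row(s)" % (dt, region, len(members)))
```

With the sample data, the grouping yields three (dt, region) combinations, and only the dt=20190715, region=beijing combination holds more than one row.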

Use PyODPS to pass parameters.

Log on to the DataWorks console.

In the left-side navigation pane, click Workspace.

Find the target workspace, choose in the Actions column.

On the Data Development page, right-click the created business flow and select .

Enter a node name and click Confirm.

Enter the following code in the PyODPS 2 node to pass parameters:

    import sys
    reload(sys)
    print('dt=' + args['dt'])
    # Change the default encoding format to UTF-8.
    sys.setdefaultencoding('utf8')
    # Obtain the user_detail table.
    t = o.get_table('user_detail')
    # Receive the partition field that is passed.
    with t.open_reader(partition='dt=' + args['dt'] + ',region=beijing') as reader1:
        count = reader1.count
        print("Query data in the partitioned table:")
        for record in reader1:
            print record[0],record[1],record[2]

Click Run with Parameters.

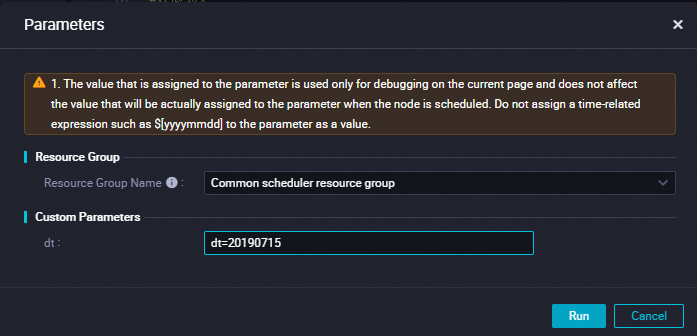

In the Parameters dialog box, configure the parameters and click Run.

Configure the following parameters:

Resource Group Name: Select Default Resource Group.

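dt: Set to dt=20190715. The node code prepends dt= when it builds the partition specification, so args['dt'] is expected to resolve to 20190715 alone.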

View the operation results in the Operation Log.
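To preview what the node should print, the following sketch reproduces the partition expression from the node code and applies it to the sample rows in plain Python. It runs locally and does not use PyODPS: the args dictionary is a stand-in for the one that DataWorks supplies to the node, and ROWS, build_partition_spec, parse_partition_spec, and read_partition are hypothetical helpers introduced for illustration.

```python
# Sample rows in the column order userid, job, education, dt, region.
ROWS = [
    (1, "Internet", "Bachelor", "20190715", "beijing"),
    (2, "Education", "junior college", "20190716", "beijing"),
    (3, "Finance", "master", "20190715", "shandong"),
    (4, "Internet", "master", "20190715", "beijing"),
]


def build_partition_spec(args, region="beijing"):
    """Mirror the node expression: 'dt=' + args['dt'] + ',region=beijing'."""
    return "dt=" + args["dt"] + ",region=" + region


def parse_partition_spec(spec):
    """Turn 'dt=20190715,region=beijing' into a dict of column to value."""
    return dict(item.split("=", 1) for item in spec.split(","))


def read_partition(rows, spec):
    """Local stand-in for reading one partition: filter rows by dt and region."""
    wanted = parse_partition_spec(spec)
    return [
        row[:3]
        for row in rows
        if row[3] == wanted["dt"] and row[4] == wanted["region"]
    ]


if __name__ == "__main__":
    args = {"dt": "20190715"}  # stand-in for the parameter passed at run time
    spec = build_partition_spec(args)
    print(spec)
    for record in read_partition(ROWS, spec):
        print(record[0], record[1], record[2])
```

In this local stand-in, the rows for users 1 and 4 match the dt=20190715, region=beijing partition. If the parameter value itself carried a dt= prefix, the expression would produce dt=dt=20190715,region=beijing, which matches nothing here.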