To support the integrated architecture of full and incremental storage and processing, Delta tables provide a unified table data format. Delta tables support all features of MaxCompute standard tables and are suitable for new scenarios that involve incremental processing, such as time travel queries and UPSERT operations. This topic describes the table data format of Delta tables.

Key table properties of Delta tables

Only Delta tables that have a primary key are supported. To execute a CREATE TABLE statement to create a Delta table that has a primary key, you can configure the following key properties in the statement: primary key and tblproperties ("transactional"="true").

primary key: After you configure this property, you can import data in UPSERT mode in an efficient manner. Multiple rows of records that have the same primary key are merged into one row of data after a snapshot-based query or the compaction operation is performed, and only the most recent status is retained.

tblproperties ("transactional"="true"): The transactional property indicates the atomicity, consistency, isolation, and durability (ACID) transaction characteristics to ensure snapshot isolation and control over concurrent read and write operations. A transactional property field is added to each row of data that is written to the table. For example, the timestamp field is added to each row of data to obtain the records of the required data version in time travel queries.

You can also configure other important table properties. For example, you can configure the write.bucket.num property to specify the degree of write parallelism and the acid.data.retain.hours property to specify the effective query time range of historical data. For more information about table properties, see Parameters for Delta tables.

File formats supported by Delta tables

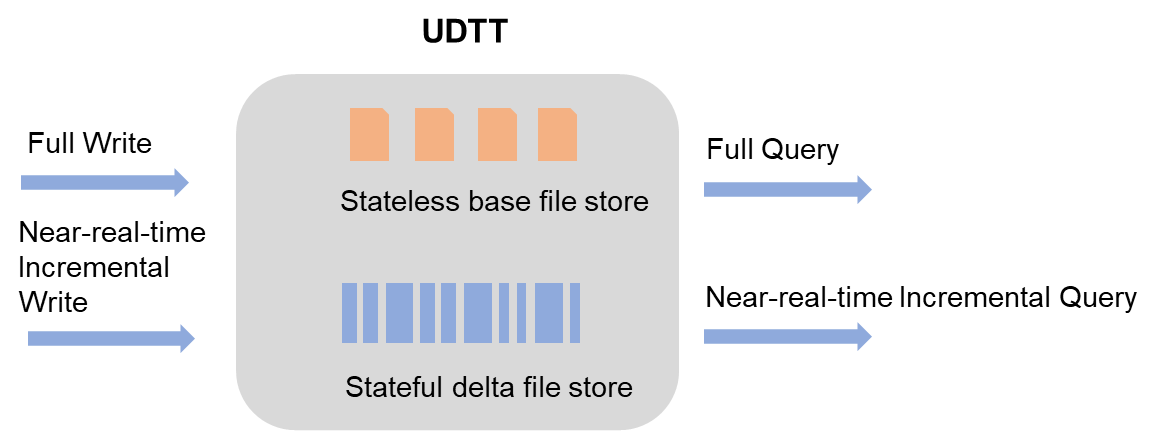

Delta tables support multiple file formats, which help ensure high efficiency in full read and write scenarios and near-real-time incremental read and write scenarios. The supported file formats include base files and delta files.

Description of base files and delta files:

Delta file: the type of file that is generated each time a transaction is committed and data is written in UPDATE or DELETE mode. A delta file stores the intermediate historical status of each row of data to meet near-real-time incremental read and write requirements. A delta file is also generated each time the clustering operation is performed. Delta files use column-oriented storage and compression.

Base file: the type of file that is generated after the compaction operation is performed on delta files. In a base file, the intermediate historical status is deleted and only one row of data is retained for records that have the same primary key. Base files use column-oriented storage and compression to support efficient full data queries.

When you query data in a Transaction Table 2.0 table, take note of the following items:

MaxCompute searches for the most recent base file each time a snapshot-based query is performed. Then, MaxCompute searches for all delta files to which data is written after the base file is generated and merges data in the delta files. Therefore, the query mode is Merge On Read (MOR). For more information, see Time travel queries and incremental queries.

All data files are sorted based on the value of the primary key column. This effectively improves merging efficiency and helps optimize data skipping queries. Data files use column-oriented storage and compression. This can effectively reduce the amount of stored data, save costs, and improve I/O efficiency.

Buckets

To further improve read and write efficiency, Delta tables allow data to be split for storage based on the bucket index column. By default, the bucket index column reuses data in the primary key column. You can configure the write.bucket.num property to specify the number of buckets to which data is written. This way, data that is written to the same table or partition is split based on the value of the primary key column. Records that have the same primary key are stored in the same bucket.

Near-real-time incremental import supports high concurrency based on the horizontal scaling of the number of buckets. Therefore, you must evaluate and specify a reasonable number of buckets based on the data write traffic and the total storage size of table data. If the number of buckets is excessively large, an excessive number of small files may be generated. This affects data read and write efficiency and storage stability. If the number of buckets is excessively small, the requirements for near-real-time import with high traffic and high speed may not be met.

This feature also helps improve data query efficiency. If the filter condition is applied to the bucket index column, bucket pruning can be performed in an efficient manner to reduce the amount of data that is queried. If the key column in the GROUP BY clause or of the JOIN operation is the same as the bucket index column, you can directly perform local join or GROUP BY operations. This helps reduce shuffling, save computing resources, and improve query performance.

Data optimization management operations, such as clustering and compaction of small files, can be performed in parallel based on buckets to improve execution efficiency and shorten up the processing time.

Record types

Only data records of the UPSERT and DELETE types can be written and stored. The UPSERT type contains two implicit semantics: INSERT and UPDATE. If a record does not exist previously, the record type is INSERT. If a record already exists, the record type is UPDATE.