This topic describes the architecture of the services that optimize data organization for Delta tables.

Background

Delta tables are an incremental data table format in MaxCompute. They support minute-level, near-real-time data import. In high-traffic scenarios, such imports can produce many small incremental files and redundant intermediate states. To reduce storage and computing costs and to speed up analysis and data read/write operations, MaxCompute provides three optimization services: Clustering (small file merging), Compaction, and data reclamation.

Clustering (small file merging)

Challenges

Delta tables support minute-level, near-real-time incremental data import. In high-traffic scenarios, this can cause a rapid increase in the number of small incremental files. This situation leads to the following problems:

High storage costs and increased I/O load.

Frequent metadata updates, triggered by the large number of small files.

Slower analysis execution and inefficient data read and write operations.

For these reasons, a well-designed small file merging service, known as the Clustering service, is required to automatically optimize these scenarios.

Solution

The Clustering service is executed by the internal Storage Service of MaxCompute and is designed to merge small files. Clustering only merges files: it does not change or eliminate the historical intermediate state of any record.

Workflow

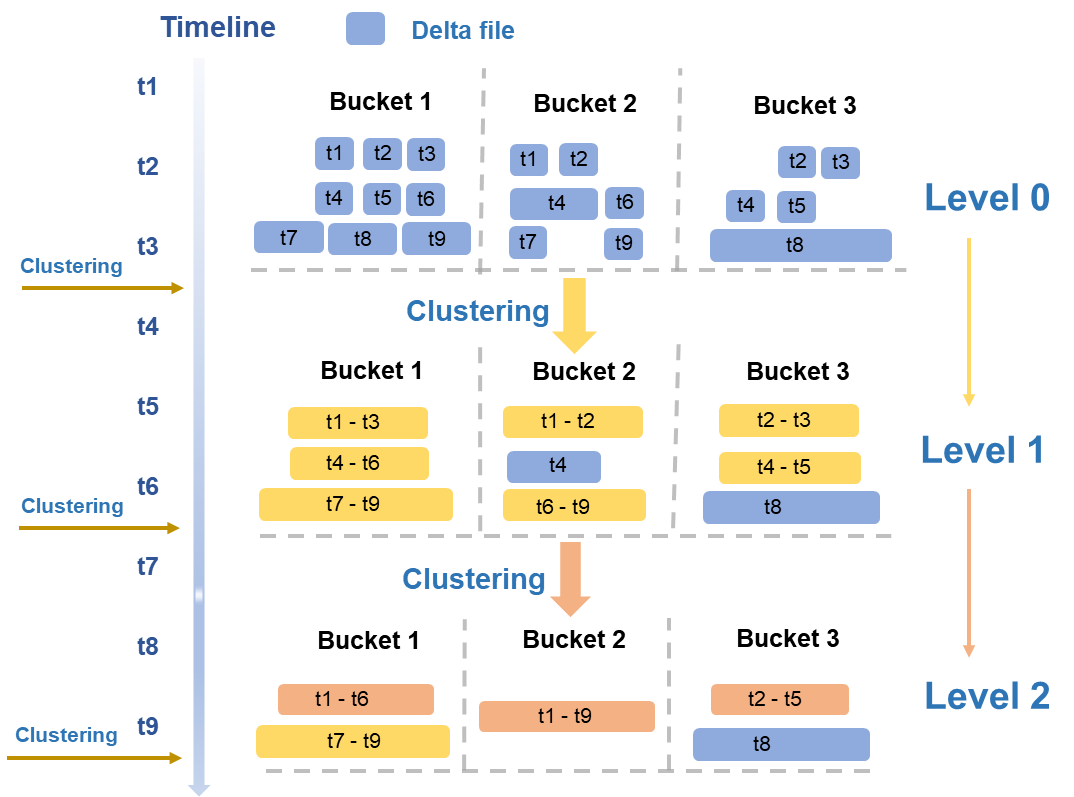

The overall workflow of the Clustering service is shown in the following figure.

Hierarchical merging

The service uses clustering policies that are based on typical read/write business scenarios. The service periodically evaluates data files based on multiple dimensions, such as size and quantity, and performs hierarchical merges.

Level 0 → Level 1: The smallest DeltaFiles from the initial write operation (blue data files in the figure) are merged into medium-sized DeltaFiles (yellow data files in the figure).

Level 1 → Level 2: When the medium-sized DeltaFiles reach a certain scale, a higher-level merge is triggered to generate larger, optimized files (orange data files in the figure).
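The two merge levels can be sketched in a few lines of Python. This is only an illustration of the idea, not MaxCompute's implementation: the `DeltaFile` class, the `cluster_bucket` function, and the thresholds `MIN_L0_FILES` and `MIN_L1_TOTAL_MB` are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds; the real policy values are not documented here.
MIN_L0_FILES = 3        # merge Level 0 once this many small files exist
MIN_L1_TOTAL_MB = 100   # merge Level 1 once its files reach this total size


@dataclass(frozen=True)
class DeltaFile:
    name: str
    size_mb: int
    level: int = 0


def merge(candidates: List[DeltaFile], new_level: int) -> DeltaFile:
    """Combine several files into one file at a higher level."""
    return DeltaFile(
        name="+".join(f.name for f in candidates),
        size_mb=sum(f.size_mb for f in candidates),
        level=new_level,
    )


def cluster_bucket(files: List[DeltaFile]) -> List[DeltaFile]:
    """Run one evaluation pass over the files of a single bucket."""
    # Level 0 -> Level 1: triggered by the number of small files.
    level0 = [f for f in files if f.level == 0]
    if len(level0) >= MIN_L0_FILES:
        files = [f for f in files if f.level != 0] + [merge(level0, 1)]

    # Level 1 -> Level 2: triggered by the accumulated size of medium files.
    level1 = [f for f in files if f.level == 1]
    if len(level1) >= 2 and sum(f.size_mb for f in level1) >= MIN_L1_TOTAL_MB:
        files = [f for f in files if f.level != 1] + [merge(level1, 2)]
    return files
```

In this sketch, three 10 to 30 MB Level 0 files collapse into one Level 1 file, and that file waits until enough Level 1 data accumulates before it is merged again.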

Avoiding read/write amplification

Large file isolation: Data files that exceed a certain size, such as the T8 file in Bucket3, are excluded from the merge.

Time span limit: Files with a large time span are not merged. This avoids reading large amounts of historical data that falls outside a query's time range during Time Travel or incremental queries.

Automatic execution trigger: Each clustering operation reads and writes the data at least once. This consumes computing and I/O resources and causes some read/write amplification. To ensure that the Clustering service runs efficiently, the MaxCompute engine automatically triggers the operation based on the system status.
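The first two rules amount to a filter applied before any merge is planned. The following Python sketch shows one way to express them. It assumes the time span limit applies to the span covered by each individual file, and the names `FileMeta`, `select_candidates`, `MAX_MERGE_SIZE_MB`, and `MAX_TIME_SPAN_MIN` are hypothetical, as are the threshold values.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical limits, chosen only for illustration.
MAX_MERGE_SIZE_MB = 256   # larger files are isolated from merging
MAX_TIME_SPAN_MIN = 60    # files covering a longer time span are skipped


@dataclass(frozen=True)
class FileMeta:
    name: str
    size_mb: int
    min_ts: int  # earliest commit time covered by the file, in minutes
    max_ts: int  # latest commit time covered by the file, in minutes


def select_candidates(files: List[FileMeta]) -> List[FileMeta]:
    """Return the files that are still eligible for a clustering merge."""
    selected = []
    for f in files:
        if f.size_mb > MAX_MERGE_SIZE_MB:
            continue  # large file isolation
        if f.max_ts - f.min_ts > MAX_TIME_SPAN_MIN:
            continue  # time span limit
        selected.append(f)
    return selected
```

Skipping these files keeps each merge cheap: an already large file gains little from being rewritten, and a wide time span would force later time-bounded queries to read data they do not need.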

Concurrency and transactionality

Concurrent execution: Because data is partitioned and stored by BucketIndex, the Clustering service runs concurrently at the bucket level. This significantly reduces the overall runtime.

Transactional guarantee: The Clustering service interacts with the Meta Service to retrieve the list of tables or partitions to process. After the operation is complete, it passes information about the new and old data files to the Meta Service. The Meta Service plays a key role in this process. It is responsible for detecting transaction conflicts, coordinating the seamless update of metadata for new and old files, and securely reclaiming old data files.
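The interplay of bucket-level concurrency and a conflict-checked commit can be sketched as follows. This is a toy model under stated assumptions, not the actual service protocol: the `MetaService` class, its `snapshot` and `commit` methods, and the functions `cluster_bucket` and `run_clustering` are hypothetical, and the merge work itself is replaced by a placeholder file name.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class MetaService:
    """Toy stand-in for the Meta Service: tracks the live files per bucket."""

    def __init__(self, files_by_bucket):
        self._lock = threading.Lock()
        self._files = {b: set(fs) for b, fs in files_by_bucket.items()}

    def snapshot(self, bucket):
        with self._lock:
            return frozenset(self._files[bucket])

    def commit(self, bucket, old_files, new_file):
        """Atomically swap old files for the new one; reject on conflict."""
        with self._lock:
            if not set(old_files) <= self._files[bucket]:
                return False  # conflict: an input file was already replaced
            self._files[bucket] -= set(old_files)
            self._files[bucket].add(new_file)
            return True


def cluster_bucket(meta, bucket):
    """Merge all files of one bucket and commit the result."""
    old = meta.snapshot(bucket)
    if len(old) < 2:
        return False  # nothing to merge
    new_file = f"merged-b{bucket}"  # placeholder for the real merge output
    return meta.commit(bucket, old, new_file)


def run_clustering(meta, buckets):
    """Process buckets concurrently, since they hold disjoint data."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = pool.map(lambda b: cluster_bucket(meta, b), buckets)
        return dict(zip(buckets, results))
```

Because the commit checks that every input file is still live, a merge that raced with another operation on the same files is rejected rather than silently overwriting its result.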

Compaction

Challenges

Delta tables support UPDATE and DELETE operations. These operations write new records to mark the previous state of old records, rather than modifying the old records in place. Many such operations can lead to:

Data redundancy: Redundant records in intermediate states increase storage and computing costs.

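Lower query efficiency: Queries must read and merge the redundant intermediate records to resolve the latest state of each record.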

Therefore, a well-designed Compaction service is required to eliminate intermediate states and optimize these scenarios.

Solution

Compaction merges selected data files, including BaseFiles and DeltaFiles. It combines multiple records with the same primary key and eliminates the intermediate UPDATE and DELETE states. Only the record with the latest state is retained. Finally, a new BaseFile is generated that contains only INSERT data.
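The merge rule can be illustrated with a short Python sketch. It assumes a simplified record layout of `(sequence, operation, primary_key, row)` and represents a BaseFile as a dictionary keyed by primary key. The `compact` function and this layout are hypothetical and do not reflect the actual file format.

```python
from typing import Dict, List, Optional, Tuple

# Assumed record layout: (sequence number, operation, primary key, row data).
Record = Tuple[int, str, str, Optional[dict]]


def compact(base_file: Dict[str, dict],
            delta_files: List[List[Record]]) -> Dict[str, dict]:
    """Return a new BaseFile holding only the latest state per primary key."""
    records = [r for f in delta_files for r in f]
    new_base = dict(base_file)  # the old BaseFile is left untouched
    # Replay the operations in commit order so the latest state wins.
    for _seq, op, key, row in sorted(records, key=lambda r: r[0]):
        if op == "DELETE":
            new_base.pop(key, None)  # the deleted record disappears entirely
        else:  # INSERT or UPDATE
            new_base[key] = row
    return new_base


# First round: only DeltaFiles exist.
delta1 = [(1, "INSERT", "k1", {"v": 1}), (2, "INSERT", "k2", {"v": 2})]
delta2 = [(3, "UPDATE", "k1", {"v": 10}), (4, "DELETE", "k2", None)]
base = compact({}, [delta1, delta2])

# Second round: the existing BaseFile is merged with newer DeltaFiles.
delta3 = [(5, "INSERT", "k3", {"v": 3}), (6, "UPDATE", "k1", {"v": 11})]
base2 = compact(base, [delta3])
```

The result contains one row per surviving primary key, which is what allows a full snapshot query to read the BaseFile without replaying any UPDATE or DELETE history.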

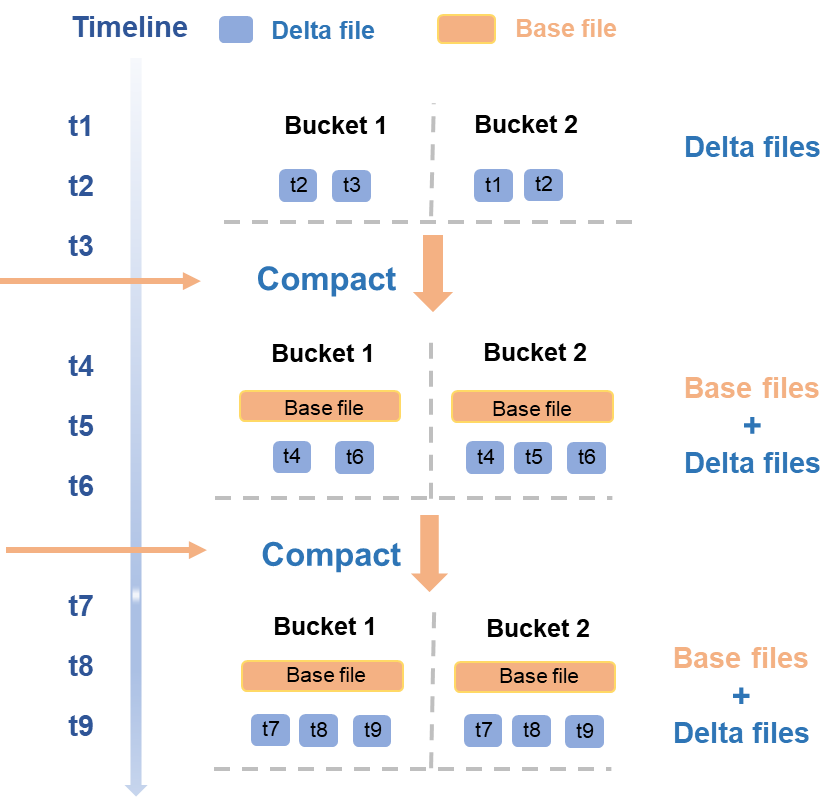

Workflow

The overall workflow of the Compaction service is as follows.

Merging DeltaFiles

From t1 to t3, a new batch of DeltaFiles is written. This triggers a Compaction operation that runs concurrently at the bucket level. The operation merges the files to generate a new BaseFile for each bucket.

From t4 to t6, another batch of new DeltaFiles is written, which triggers another Compaction operation. This operation merges the existing BaseFile with the new DeltaFiles to generate a new BaseFile.

Transactional guarantee

The Compaction service also needs to interact with the Meta Service. The process is similar to that of Clustering. It retrieves the list of tables or partitions on which to perform this operation. After the operation is complete, it passes information about the new and old data files to the Meta Service. The Meta Service is responsible for detecting transaction conflicts for the Compaction operation, atomically updating the metadata for the new and old files, and reclaiming old data files.

Execution frequency

The Compaction service saves computing and storage resources by eliminating historical record states, which improves the efficiency of full snapshot queries. However, frequent Compaction operations require significant computing and I/O resources. Each new BaseFile also uses additional storage, because the historical DeltaFiles might still be needed for Time Travel queries and cannot be deleted immediately, so they continue to incur storage costs.

Therefore, the execution frequency of Compaction operations should be determined based on your specific business needs and data characteristics. If UPDATE and DELETE operations are frequent and the demand for full snapshot queries is high, consider increasing the Compaction frequency to optimize query speed.

Data reclamation

Because Time Travel and incremental queries both query historical data states, Delta tables retain historical versions of data for a certain period.

Reclamation policy: You can configure the data retention period using the acid.data.retain.hours table property. If historical data is older than the configured value, the system automatically reclaims and cleans it up. Once the cleanup is complete, that historical version can no longer be queried using Time Travel. The reclaimed data consists mainly of operation logs and data files.

Note: For a Delta table, if you continuously write new DeltaFiles, no DeltaFiles can be deleted. This is because other DeltaFiles may have state dependencies on them. After you run a COMPACTION or an InsertOverwrite operation, the subsequently generated data files no longer depend on the previous DeltaFiles. After the Time Travel query period expires, they can be deleted.

Forced cleanup: In special scenarios, you can use the PURGE command to manually trigger a forced cleanup of historical data.

Automatic mechanism: To prevent unlimited historical data growth, which can occur if Compaction is not run for a long time, the MaxCompute engine includes several optimizations. The backend system periodically runs an automatic Compaction on BaseFiles or DeltaFiles that are older than the configured Time Travel period. This ensures that the reclamation mechanism functions correctly.
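The reclamation rule can be summarized in a small Python sketch. It assumes a simplified model in which a file becomes reclaimable only after a Compaction or InsertOverwrite operation has superseded it and the retention period has since elapsed. The `DataFile` class, its `superseded_at_hour` field, and the `reclaimable` function are hypothetical and only illustrate the dependency described above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DataFile:
    name: str
    # Hour at which a Compaction or InsertOverwrite operation replaced this
    # file, or None if newer files still depend on it (assumed model).
    superseded_at_hour: Optional[int]


def reclaimable(files: List[DataFile], now_hour: int,
                retain_hours: int) -> List[str]:
    """Return the names of files that can be reclaimed at `now_hour`."""
    return [
        f.name
        for f in files
        if f.superseded_at_hour is not None
        and now_hour - f.superseded_at_hour > retain_hours
    ]


files = [
    DataFile("delta-1", superseded_at_hour=10),   # superseded long ago
    DataFile("delta-2", superseded_at_hour=90),   # still inside the window
    DataFile("base-3", superseded_at_hour=None),  # still live
]
expired = reclaimable(files, now_hour=100, retain_hours=24)
```

Here only `delta-1` qualifies. A file that was never superseded stays in place regardless of its age, which is why an automatic Compaction is needed to keep reclamation moving.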