This topic describes the API and basic workflow of Push SDK for iOS and provides examples of how to use its features.

Features

Supports stream ingest over Real-Time Messaging Protocol (RTMP).

Supports RTS stream ingest and pulling based on Real-Time Communication (RTC).

Supports co-streaming and battles.

Adopts H.264 for video encoding and AAC for audio encoding.

Supports custom configurations for features such as bitrate control, resolution, and display mode.

Supports various camera operations.

Supports real-time retouching and custom retouching effects.

Allows you to add and remove animated stickers as watermarks.

Allows you to stream screen recordings.

Supports external audio and video inputs in different formats such as YUV and pulse-code modulation (PCM).

Supports mixing of multiple streams.

Supports ingest of audio-only and video-only streams and stream ingest in the background.

Supports background music.

Supports video snapshot capture.

Supports automatic reconnection and error handling.

Supports Automatic Gain Control (AGC), Automatic Noise Reduction (ANR), and Acoustic Echo Cancellation (AEC) algorithms.

Allows you to switch between the software and hardware encoding modes for video files. This improves the stability of the encoding module.

Limitations

Take note of the following limits before you use Push SDK for iOS:

You must configure screen orientation before stream ingest. You cannot rotate the screen during live streaming.

You must disable auto screen rotation for stream ingest in landscape mode.

In hardware encoding mode, the value of the output resolution must be a multiple of 16 to be compatible with the encoder. For example, if you set the resolution to 540p, the output resolution is 544 × 960. You must scale the screen size of the player based on the output resolution to prevent black bars.
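The multiple-of-16 rule can be checked with a small helper. The following is a minimal sketch in plain C (which also compiles in Objective-C sources). It assumes that the encoder rounds each dimension up to the next multiple of 16; the function name align_up_16 is hypothetical and is not part of the Push SDK.

```c
/* Round a dimension up to the next multiple of 16.
 * Assumption: the hardware encoder pads dimensions upward, which matches
 * the 540 -> 544 example in the text. */
int align_up_16(int value) {
    return (value + 15) / 16 * 16;
}
```

For 540p, align_up_16(540) gives 544 and align_up_16(960) gives 960, which matches the 544 × 960 output resolution stated above. A player can use the aligned size to scale its view and avoid black bars.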

API reference

Procedure

The basic procedure is as follows:

Feature usage

Register the SDK

To request and configure a license, see License Integration Guide.

Register the SDK before you use the stream ingest feature. Otherwise, you cannot use the Push SDK.

Call the license registration method at an early stage, before you use the Push SDK.

[AlivcLiveBase registerSDK];

The AlivcLiveBase class lets you set log levels, set local log paths, and get the SDK version.

In the onLicenceCheck method of the AlivcLiveBase#setObserver interface, asynchronously check whether the license is configured.

Configure stream ingest parameters

In the ViewController where you need the pusher, import the header file #import <AlivcLivePusher/AlivcLivePusher.h>.

Basic stream ingest parameters have default values. We recommend that you use the default values. You can perform a simple initialization without extra configuration.

AlivcLivePushConfig *config = [[AlivcLivePushConfig alloc] init];// Initialize the stream ingest configuration class. You can also use initWithResolution for initialization.

config.resolution = AlivcLivePushResolution540P;// The default resolution is 540P. The maximum resolution is 720P.

config.fps = AlivcLivePushFPS20; // We recommend that you use 20 fps.

config.enableAutoBitrate = true; // Enable bitrate control. The default value is true.

config.videoEncodeGop = AlivcLivePushVideoEncodeGOP_2;// The default value is 2. A larger GOP size results in higher latency. We recommend that you set this parameter to 1 or 2.

config.connectRetryInterval = 2000; // Unit: milliseconds. The reconnection interval is 2 seconds. The interval must be at least 1 second. We recommend that you use the default value.

config.previewMirror = false; // The default value is false. In normal cases, select false.

config.orientation = AlivcLivePushOrientationPortrait; // The default orientation is portrait. You can set the device to landscape with the home button on the left or right.

Considering mobile device performance and network bandwidth requirements, we recommend that you set the resolution to 540P. Most mainstream live streaming apps use 540P.

If you disable bitrate control, the bitrate is fixed at the initial bitrate and does not automatically adjust between the target and minimum bitrates. If the network is unstable, this may cause playback stuttering. Use this option with caution.
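Two of the constraints above are simple arithmetic and can be sanity-checked before you build the configuration. The following is a minimal sketch in plain C. It assumes that the GOP value is expressed in seconds, which the text does not state explicitly. The helper names are hypothetical and are not part of the Push SDK.

```c
#include <stdbool.h>

/* Minimum reconnection interval stated in the text: 1 second. */
enum { MIN_RETRY_INTERVAL_MS = 1000 };

/* Returns true if the reconnection interval meets the 1-second minimum. */
bool is_valid_retry_interval(int interval_ms) {
    return interval_ms >= MIN_RETRY_INTERVAL_MS;
}

/* Number of frames between keyframes.
 * Assumption: the GOP size is given in seconds. */
int frames_per_gop(int fps, int gop_seconds) {
    return fps * gop_seconds;
}
```

Under this assumption, the recommended settings of 20 fps and a GOP of 2 give 40 frames between keyframes. A player that joins mid-stream may need to wait for the next keyframe, which is one reason a larger GOP increases latency.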

Ingest a camera stream

Initialize.

After you configure stream ingest parameters, use the initWithConfig method of the Push SDK to perform initialization. Sample code:

self.livePusher = [[AlivcLivePusher alloc] initWithConfig:config];

Note: AlivcLivePusher does not support multiple instances. Therefore, each init call must have a corresponding destroy call.

Register stream ingest callbacks.

The following stream ingest callbacks are supported:

Info: the callbacks that are used for notifications and status detection.

Error: the callbacks that are returned when errors occur.

Network: the callbacks that are related to network.

Register the delegate to receive the corresponding callbacks. Sample code:

[self.livePusher setInfoDelegate:self];

[self.livePusher setErrorDelegate:self];

[self.livePusher setNetworkDelegate:self];

Start preview.

After the livePusher object is initialized, you can start the preview. When you preview, you must pass in the display view for the camera preview. The view inherits from UIView. Sample code:

[self.livePusher startPreview:self.view];

Start stream ingest.

You can start stream ingest only after the preview is successful. Therefore, listen for the onPreviewStarted callback of AlivcLivePusherInfoDelegate and add the following code in the callback.

[self.livePusher startPushWithURL:@"Test ingest URL (rtmp://......)"];

Note: RTMP and RTS (artc://) ingest URLs are supported. To generate ingest URLs, see Generate streaming URLs.

ApsaraVideo Live does not support ingesting multiple streams to the same ingest URL at the same time. The second stream ingest request is rejected.
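Because only RTMP and RTS (artc://) ingest URLs are supported, an app can reject other URLs before it calls startPushWithURL. The following is a minimal sketch in plain C. The function names are hypothetical, and the check covers only the URL scheme. It does not validate the rest of the URL, and the comparison is case-sensitive by assumption.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Returns true if s starts with prefix. */
bool has_prefix(const char *s, const char *prefix) {
    return strncmp(s, prefix, strlen(prefix)) == 0;
}

/* Returns true if the URL uses one of the two schemes named in the text:
 * rtmp:// (RTMP) or artc:// (RTS). */
bool is_supported_ingest_url(const char *url) {
    if (url == NULL) {
        return false;
    }
    return has_prefix(url, "rtmp://") || has_prefix(url, "artc://");
}
```

In Objective-C, you can pass the result of [urlString UTF8String] to is_supported_ingest_url and show an error instead of starting stream ingest when the check fails.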

Camera-related operations

You can call camera-related operations only after the preview starts. These operations are available in the streaming, paused, or reconnecting state. You can switch between the front and rear cameras and configure the flash, focal length, zoom, and mirroring settings. If you call the following methods before the preview starts, the calls are invalid. Sample code:

/* Switch between the front and rear cameras. */

[self.livePusher switchCamera];

/* Enable or disable the flash. You cannot enable the flash for the front camera. */

[self.livePusher setFlash:false];

/* Adjust the focal length to zoom in or out of the captured frame. Pass a positive number to increase the focal length. Pass a negative number to decrease the focal length. */

CGFloat max = [_livePusher getMaxZoom];

[self.livePusher setZoom:MIN(1.0, max)];

/* Manually focus. To manually focus, you must pass two parameters: 1. point: the point to focus on (the coordinates of the point to focus on). 2. autoFocus: specifies whether to enable autofocus. This parameter takes effect only for the focus operation of this API call. Subsequent autofocus operations use the value set by the autofocus API. */

[self.livePusher focusCameraAtAdjustedPoint:CGPointMake(50, 50) autoFocus:true];

/* Set whether to enable autofocus. */

[self.livePusher setAutoFocus:false];

/* Configure mirroring. There are two mirroring interfaces: PushMirror for ingest stream mirroring and PreviewMirror for preview mirroring. The PushMirror setting takes effect only on the playback screen. The PreviewMirror setting takes effect only on the preview screen. The two settings do not affect each other. */

[self.livePusher setPushMirror:false];

[self.livePusher setPreviewMirror:false];

Stream ingest control

Stream ingest control includes operations such as starting, stopping, pausing, and resuming stream ingest, stopping the preview, restarting stream ingest, and destroying the stream ingest instance. You can add buttons to perform these operations based on your business needs. Sample code:

/* You can set pauseImage and then call the pause method to switch from camera stream ingest to static image stream ingest. Audio stream ingest continues. */

[self.livePusher pause];

/* Switch from static image stream ingest to camera stream ingest. Audio stream ingest continues. */

[self.livePusher resume];

/* In the streaming state, you can call this method to stop stream ingest. After the operation is complete, stream ingest stops. */

[self.livePusher stopPush];

/* You can stop the preview only in the previewing state. If you are ingesting a stream, stopping the preview is invalid. After the preview stops, the preview screen freezes on the last frame. */

[self.livePusher stopPreview];

/* In the streaming state or when any Error-related callback is received, you can call this method to restart stream ingest. In an error state, you can only call this method (or reconnectPushAsync for reconnection) or call destroy to destroy the stream ingest instance. After the operation is complete, stream ingest restarts. All internal resources of AlivcLivePusher, including preview and stream ingest, are restarted. */

[self.livePusher restartPush];

/* In the streaming state or when an AlivcLivePusherNetworkDelegate-related Error callback is received, you can call this interface. In an error state, you can only call this interface (or restartPush to restart stream ingest) or call destroy to destroy the stream ingest instance. After the operation is complete, the stream ingest reconnects. The RTMP connection for stream ingest is re-established. */

[self.livePusher reconnectPushAsync];

/* After the stream ingest instance is destroyed, stream ingest and preview stop, and the preview screen is removed. All resources related to AlivcLivePusher are destroyed. */

[self.livePusher destory];

self.livePusher = nil;

/* Get the stream ingest status. */

AlivcLivePushStatus status = [self.livePusher getLiveStatus];

Screen recording (screen sharing) stream ingest

ReplayKit is a feature introduced in iOS 9 that supports screen recording. In iOS 10, a feature was added to ReplayKit that lets you call third-party app extensions to live stream screen content. On iOS 10 and later, you can use the Push SDK with an Extension screen recording process to implement screen sharing for live streaming.

To ensure smooth system operation, iOS allocates relatively few resources to the Extension screen recording process. If the Extension screen recording process uses too much memory, the system forcibly terminates it. To address the memory limit on the Extension screen recording process, the Push SDK divides screen sharing stream ingest into an Extension screen recording process (Extension App) and a main app process (Host App). The Extension App is responsible for capturing screen content and sending it to the Host App through inter-process communication. The Host App creates the AlivcLivePusher stream ingest engine and pushes the screen data to the remote server. Because the entire stream ingest process is completed in the Host App, microphone capture and sending can be handled by the Host App. The Extension App is only responsible for screen content capture.

The Push SDK demo uses an App Group to implement inter-process communication between the Extension screen recording process and the main app process. This logic is encapsulated in the AlivcLibReplayKitExt.framework.

On iOS, the system creates the Extension screen recording process when screen recording is needed. This process receives the screen images captured by the system. To implement screen sharing stream ingest, perform the following steps:

Create an App Group.

Log on to Apple Developer and perform the following operations:

On the Certificates, IDs & Profiles page, register an App Group. For more information, see Register an App Group.

Return to the Identifier page, select App IDs, and then click your App ID to enable the App Group feature. The App IDs for the main app process and the Extension screen recording process must be configured in the same way. For more information, see Enable App Group.

After the operation is complete, download the corresponding Provisioning Profile again and configure it in Xcode.

After you perform the operations correctly, the Extension screen recording process can communicate with the main app process.

NoteAfter you create an App Group, save the App Group Identifier value. This value is required for subsequent steps.

Create an Extension screen recording process.

The iOS Push SDK demo provides the AlivcLiveBroadcast and AlivcLiveBroadcastSetupUI app extensions that support screen sharing for live streaming. To create an Extension screen recording process in the app, perform the following steps:

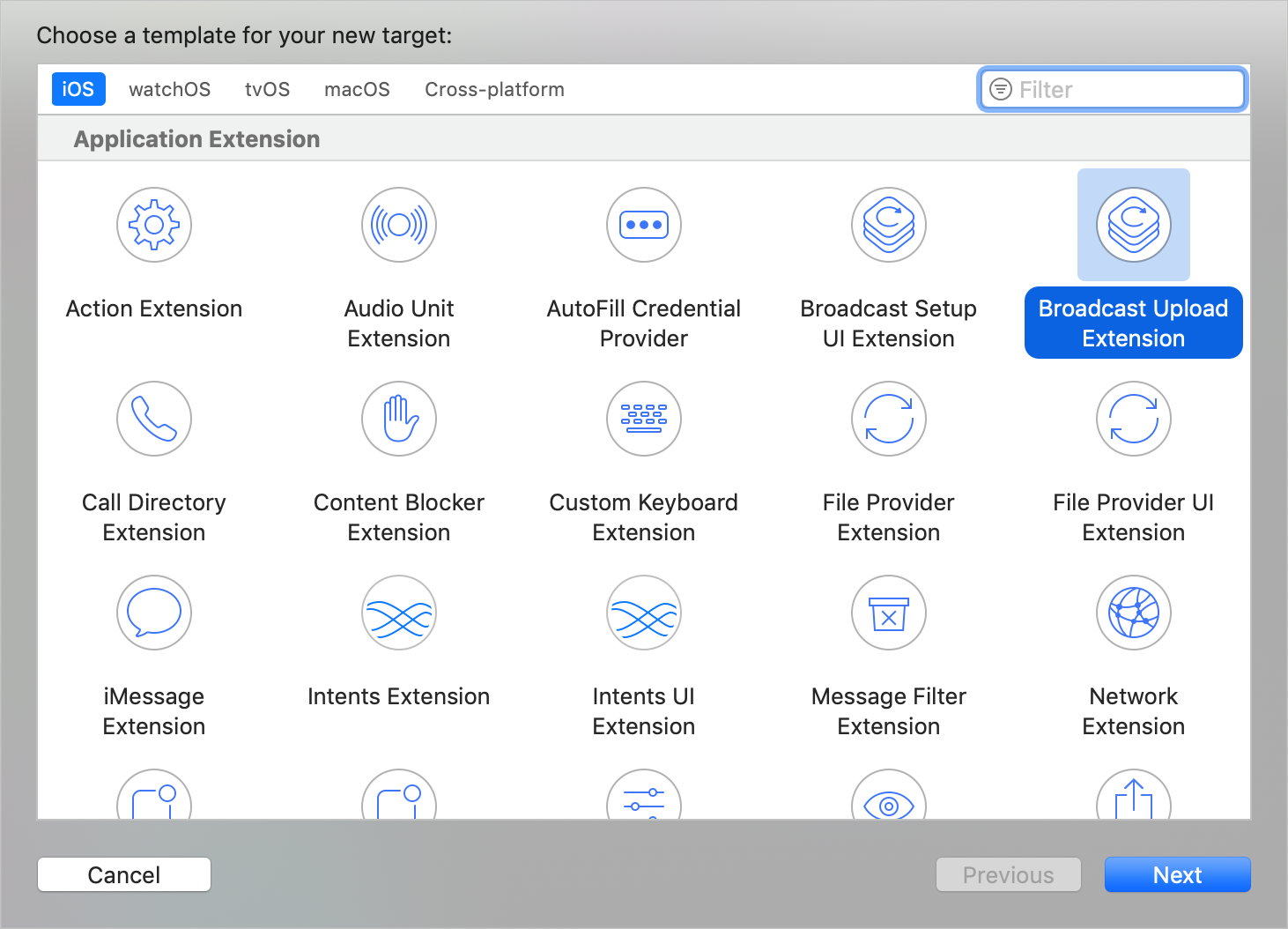

In your existing project, choose New > Target… and select Broadcast Upload Extension, as shown in the following figure:

Modify the Product Name, select Include UI Extension, and click Finish to create the live streaming extension and UI, as shown in the following figure:

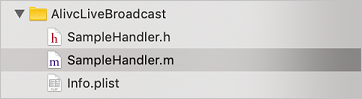

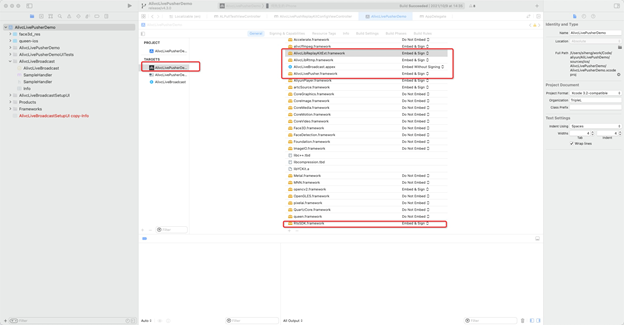

Configure the Info.plist file for the live streaming extension. In the newly created target, Xcode creates a header file and a source file named SampleHandler by default, as shown in the following figure:

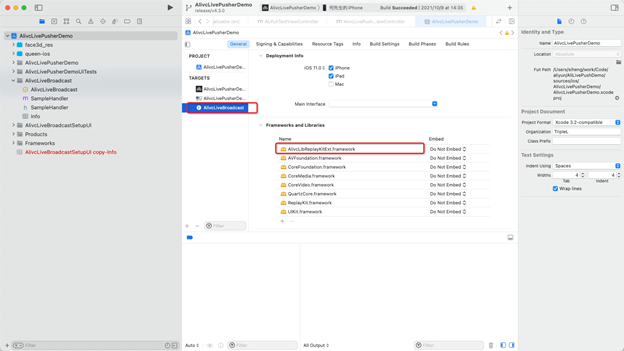

Drag AlivcLibReplayKitExt.framework to the project to make the Extension Target depend on it.

Replace the code in SampleHandler.m with the following code. Replace kAPPGROUP in the code with the App Group Identifier that you created in the first step. Sample code:

#import "SampleHandler.h"
#import <AlivcLibReplayKitExt/AlivcLibReplayKitExt.h>

@implementation SampleHandler

- (void)broadcastStartedWithSetupInfo:(NSDictionary<NSString *,NSObject *> *)setupInfo {
    // User has requested to start the broadcast. Setup info from the UI extension can be supplied but optional.
    [[AlivcReplayKitExt sharedInstance] setAppGroup:kAPPGROUP];
}

- (void)processSampleBuffer:(CMSampleBufferRef)sampleBuffer withType:(RPSampleBufferType)sampleBufferType {
    if (sampleBufferType != RPSampleBufferTypeAudioMic) {
        // Audio is captured and sent by the main app process.
        [[AlivcReplayKitExt sharedInstance] sendSampleBuffer:sampleBuffer withType:sampleBufferType];
    }
}

- (void)broadcastFinished {
    [[AlivcReplayKitExt sharedInstance] finishBroadcast];
}

@end

In your project, create a Broadcast Upload Extension target. In this Extension Target, integrate the AlivcLibReplayKitExt.framework that is customized for the screen recording extension module.

Integrate the Live SDK into the main app process for screen sharing stream ingest.

In the main app process for screen sharing stream ingest, create AlivcLivePushConfig and AlivcLivePusher objects. Set ExternMainStream to True and AudioFromExternal to False. This configuration indicates that audio is still captured by the SDK. Call StartScreenCapture to start receiving screen data from the Extension App. Then, start and stop stream ingest. For more information, see the following steps:

In the main app process for screen sharing stream ingest, add dependencies on AlivcLivePusher.framework, AlivcLibRtmp.framework, RtsSDK.framework, and AlivcLibReplayKitExt.framework.

Initialize the Push SDK and configure it to use an external video source.

Set ExternMainStream to True and ExternVideoFormat to AlivcLivePushVideoFormatYUV420P. To use the internal SDK for audio capture, set AudioFromExternal to False. Configure other stream ingest parameters. For more information, see the following sample code:

self.pushConfig.externMainStream = true;
self.pushConfig.externVideoFormat = AlivcLivePushVideoFormatYUV420P;
self.pushConfig.audioSampleRate = 44100;
self.pushConfig.audioChannel = 2;
self.pushConfig.audioFromExternal = false;
self.pushConfig.videoEncoderMode = AlivcLivePushVideoEncoderModeSoft;
self.pushConfig.qualityMode = AlivcLivePushQualityModeCustom;
self.pushConfig.targetVideoBitrate = 2500;
self.pushConfig.minVideoBitrate = 2000;
self.pushConfig.initialVideoBitrate = 2000;
self.livePusher = [[AlivcLivePusher alloc] initWithConfig:self.pushConfig];

Use AlivcLivePusher to perform live streaming-related functions. Call the following functions:

Start receiving screen data.

Replace kAPPGROUP in the code with the App Group Identifier that you created. Sample code:

[self.livePusher startScreenCapture:kAPPGROUP];

Start stream ingest.

Sample code:

[self.livePusher startPushWithURL:self.pushUrl];

Stop stream ingest.

Sample code:

[self.livePusher stopPush];
[self.livePusher destory];
self.livePusher = nil;

Preview display modes

The Push SDK supports three preview modes. The preview display mode does not affect stream ingest.

ALIVC_LIVE_PUSHER_PREVIEW_SCALE_FILL: The preview fills the window. If the aspect ratio of the video is different from the aspect ratio of the window, the preview is distorted.

ALIVC_LIVE_PUSHER_PREVIEW_ASPECT_FIT: The aspect ratio of the video is preserved during preview. If the aspect ratio of the video is different from the aspect ratio of the window, black bars appear in the preview. This is the default mode.

ALIVC_LIVE_PUSHER_PREVIEW_ASPECT_FILL: The video is cropped to fit the window aspect ratio during preview. If the aspect ratio of the video is different from the aspect ratio of the window, the preview is cropped.

Sample code:

config.previewDisplayMode = ALIVC_LIVE_PUSHER_PREVIEW_ASPECT_FIT;

You can set one of the three modes in AlivcLivePushConfig. You can also dynamically set the mode during preview and stream ingest using the setpreviewDisplayMode API.

This setting takes effect only for the preview display. The resolution of the actual ingested video stream is the same as the resolution preset in AlivcLivePushConfig and does not change when you change the preview display mode. The preview display mode is designed to adapt to mobile phones of different sizes. You can select the preview effect that you prefer.
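The three modes differ only in how the video is scaled into the preview window. The following is a minimal sketch in plain C of the geometry that the descriptions above imply. It is an illustration, not the SDK's implementation, and the type and function names are hypothetical.

```c
/* Hypothetical mirror of the three preview modes described above. */
typedef enum {
    PREVIEW_SCALE_FILL,   /* stretch to the window, may distort */
    PREVIEW_ASPECT_FIT,   /* keep aspect ratio, may show black bars */
    PREVIEW_ASPECT_FILL   /* keep aspect ratio, may crop */
} preview_mode;

typedef struct {
    double width;
    double height;
} size2d;

/* Size of the scaled video before it is clipped to the window. */
size2d displayed_size(size2d video, size2d view, preview_mode mode) {
    double sx = view.width / video.width;
    double sy = view.height / video.height;
    double scale;
    size2d out;

    if (mode == PREVIEW_SCALE_FILL) {
        /* Each axis is scaled independently to match the window. */
        out.width = view.width;
        out.height = view.height;
        return out;
    }
    if (mode == PREVIEW_ASPECT_FIT) {
        scale = sx < sy ? sx : sy;  /* smaller factor: whole video visible */
    } else {
        scale = sx > sy ? sx : sy;  /* larger factor: window fully covered */
    }
    out.width = video.width * scale;
    out.height = video.height * scale;
    return out;
}
```

For a 100 × 200 video in a 100 × 100 window, aspect fit yields 50 × 100 (black bars on the sides), aspect fill yields 100 × 200 (the top and bottom are cropped), and scale fill yields 100 × 100 (the image is distorted).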

Image stream ingest

For a better user experience, the SDK provides settings for background image stream ingest and image stream ingest when the bitrate is too low. When the SDK is moved to the background, video stream ingest is paused by default, and only audio is ingested. At this time, you can set an image for image stream ingest. For example, you can display an image to remind users that the streamer is away for a moment and will be back soon. Sample code:

config.pauseImg = [UIImage imageNamed:@"image.png"];// Set the image for background stream ingest.

In addition, when the network is poor, you can set a static image to be ingested based on your needs. After you set the image, the SDK detects when the current bitrate is low and ingests this image to avoid video stream stuttering. Sample code:

config.networkPoorImg = [UIImage imageNamed:@"image.png"];// Set the image to be ingested when the network is poor.

Push external audio and video streams

The Push SDK supports ingesting external audio and video sources, such as an audio or video file.

Configure external audio and video input in the stream ingest configuration.

Insert external video data.

Insert audio data.
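The send methods used in the last two steps take the byte size of a contiguous buffer. The following is a minimal sketch in plain C of how those sizes can be estimated. It assumes tightly packed 8-bit YUV 4:2:0 video (for example, NV21 or I420) with even dimensions and no row padding, and interleaved PCM audio. The function names are hypothetical and are not part of the Push SDK.

```c
#include <stddef.h>

/* Bytes in one tightly packed YUV 4:2:0 frame: a full-resolution luma
 * plane plus chroma at one quarter resolution for each of U and V.
 * Assumption: even width and height, no padding at the end of rows. */
size_t yuv420_frame_size(size_t width, size_t height) {
    return width * height * 3 / 2;
}

/* Bytes of interleaved PCM audio for a given duration in milliseconds. */
size_t pcm_chunk_size(size_t sample_rate, size_t channels,
                      size_t bytes_per_sample, size_t duration_ms) {
    return sample_rate * channels * bytes_per_sample * duration_ms / 1000;
}
```

A 720 × 1280 frame occupies 1,382,400 bytes, and 20 ms of 44.1 kHz stereo 16-bit PCM occupies 3,528 bytes. Pixel buffers from the system often have padded rows, so when you convert a CMSampleBufferRef, compute the size from the bytes per row of each plane instead of using this formula.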

Sample code for the configuration:

config.externMainStream = true;// Enable external stream input.

config.externVideoFormat = AlivcLivePushVideoFormatYUVNV21;// Set the color format of video data. In this example, the format is set to YUVNV21. You can set the format to another value as needed.

config.externAudioFormat = AlivcLivePushAudioFormatS16;// Set the bit depth format of audio data. In this example, the format is set to S16. You can set the format to another value as needed.

Sample code for inserting external video data:

/* Only continuous buffer data in the external video YUV and RGB formats can be sent using the sendVideoData interface. You can send the video data buffer, length, width, height, timestamp, and rotation angle. */

[self.livePusher sendVideoData:yuvData width:720 height:1280 size:dataSize pts:nowTime rotation:0];

/* If the external video data is in the CMSampleBufferRef format, you can use the sendVideoSampleBuffer interface. */

[self.livePusher sendVideoSampleBuffer:sampleBuffer];

/* You can also convert the CMSampleBufferRef format to a continuous buffer and then pass it to the sendVideoData interface. The following is reference code for the conversion. */

// Get the sample buffer length.
- (int)getVideoSampleBufferSize:(CMSampleBufferRef)sampleBuffer {
    if (!sampleBuffer) {
        return 0;
    }
    int size = 0;
    CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    CVPixelBufferLockBaseAddress(pixelBuffer, 0);
    if (CVPixelBufferIsPlanar(pixelBuffer)) {
        // Planar formats: add up the size of every plane.
        int count = (int)CVPixelBufferGetPlaneCount(pixelBuffer);
        for (int i = 0; i < count; i++) {
            int height = (int)CVPixelBufferGetHeightOfPlane(pixelBuffer, i);
            int stride = (int)CVPixelBufferGetBytesPerRowOfPlane(pixelBuffer, i);
            size += stride * height;
        }
    } else {
        int height = (int)CVPixelBufferGetHeight(pixelBuffer);
        int stride = (int)CVPixelBufferGetBytesPerRow(pixelBuffer);
        size += stride * height;
    }
    CVPixelBufferUnlockBaseAddress(pixelBuffer, 0);
    return size;
}

// Convert the sample buffer to a continuous buffer.
- (int)convertVideoSampleBuffer:(CMSampleBufferRef)sampleBuffer toNativeBuffer:(void *)nativeBuffer {
    if (!sampleBuffer || !nativeBuffer) {
        return -1;
    }
    CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    CVPixelBufferLockBaseAddress(pixelBuffer, 0);
    int size = 0;
    if (CVPixelBufferIsPlanar(pixelBuffer)) {
        // Planar formats: copy the planes one after another.
        int count = (int)CVPixelBufferGetPlaneCount(pixelBuffer);
        for (int i = 0; i < count; i++) {
            int height = (int)CVPixelBufferGetHeightOfPlane(pixelBuffer, i);
            int stride = (int)CVPixelBufferGetBytesPerRowOfPlane(pixelBuffer, i);
            void *buffer = CVPixelBufferGetBaseAddressOfPlane(pixelBuffer, i);
            int8_t *dstPos = (int8_t *)nativeBuffer + size;
            memcpy(dstPos, buffer, stride * height);
            size += stride * height;
        }
    } else {
        int height = (int)CVPixelBufferGetHeight(pixelBuffer);
        int stride = (int)CVPixelBufferGetBytesPerRow(pixelBuffer);
        void *buffer = CVPixelBufferGetBaseAddress(pixelBuffer);
        size += stride * height;
        memcpy(nativeBuffer, buffer, size);
    }
    CVPixelBufferUnlockBaseAddress(pixelBuffer, 0);
    return 0;
}

Sample code for inserting external audio data:

/* Only continuous buffer data in the external PCM format is supported. Use sendPCMData to send the audio data buffer, length, and timestamp. */

[self.livePusher sendPCMData:pcmData size:size pts:nowTime];

Configure watermarks

The Push SDK provides a feature to add watermarks. You can add multiple watermarks. The watermark images must be in the PNG format. Sample code:

NSString *watermarkBundlePath = [[NSBundle mainBundle] pathForResource:@"watermark" ofType:@"png"];// Set the path of the watermark image.
[config addWatermarkWithPath:watermarkBundlePath
             watermarkCoordX:0.1
             watermarkCoordY:0.1
              watermarkWidth:0.3];// Add a watermark.

coordX, coordY, and width are relative values. For example, watermarkCoordX:0.1 indicates that the x-coordinate of the watermark is at 10% of the x-axis of the ingest screen. If the ingest resolution is 540 × 960, the x-coordinate of the watermark is 54.

The height of the watermark image is scaled proportionally to the input width value based on the actual width and height of the watermark image.

To implement a text watermark, first convert the text to an image, and then use this interface to add the watermark.

To ensure the clarity and edge smoothness of the watermark, use a watermark source image that has the same size as the watermark output size. For example, if the output video resolution is 544 × 960 and the displayed width of the watermark is 0.1f, use a watermark source image with a width of about 544 × 0.1f = 54.4 pixels.
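The relative watermark values can be converted to pixels with a few multiplications. The following is a minimal sketch in plain C. It assumes that the x-coordinate and width are relative to the output width, that the y-coordinate is relative to the output height, and that the height follows the aspect ratio of the source image. The type and function names are hypothetical and are not part of the Push SDK.

```c
typedef struct {
    int x;
    int y;
    int width;
    int height;
} pixel_rect;

/* Round a non-negative value to the nearest integer. */
int round_to_int(double value) {
    return (int)(value + 0.5);
}

/* Convert relative watermark settings to a pixel rectangle.
 * src_w and src_h are the pixel dimensions of the watermark image. */
pixel_rect watermark_rect(int out_w, int out_h,
                          double coord_x, double coord_y, double rel_width,
                          int src_w, int src_h) {
    pixel_rect r;
    r.x = round_to_int(coord_x * out_w);
    r.y = round_to_int(coord_y * out_h);
    r.width = round_to_int(rel_width * out_w);
    /* The height is scaled proportionally to the width. */
    r.height = round_to_int((double)r.width * src_h / src_w);
    return r;
}
```

With a 540 × 960 output, the sample settings (0.1, 0.1, 0.3) and a 200 × 100 source image give a rectangle at (54, 96) that is 162 pixels wide and 81 pixels high. A source image that is already 162 pixels wide would avoid resampling.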

Set the video quality

Video quality supports three modes: resolution priority, fluency priority, and custom.

To set the video quality, you must enable bitrate control: config.enableAutoBitrate = true;

Resolution priority (default)

In resolution priority mode, the SDK internally configures the bitrate parameters to prioritize the clarity of the ingested video.

config.qualityMode = AlivcLivePushQualityModeResolutionFirst;// Resolution priority mode

Fluency priority

In fluency priority mode, the SDK internally configures the bitrate parameters to prioritize the fluency of the ingested video.

config.qualityMode = AlivcLivePushQualityModeFluencyFirst;// Fluency priority mode

Custom mode

In custom mode, the SDK configures the bitrate based on the developer's settings. When you set the mode to custom, you need to define the initial bitrate, minimum bitrate, and target bitrate.

Initial bitrate: The bitrate at the start of the live stream.

Minimum bitrate: When the network is poor, the bitrate gradually decreases to the minimum bitrate to reduce video stuttering.

Target bitrate: When the network is good, the bitrate gradually increases to the target bitrate to improve video clarity.

config.qualityMode = AlivcLivePushQualityModeCustom; // Set to custom mode.

config.targetVideoBitrate = 1400; // The target bitrate is 1,400 kbit/s.

config.minVideoBitrate = 600; // The minimum bitrate is 600 kbit/s.

config.initialVideoBitrate = 1000; // The initial bitrate is 1,000 kbit/s.

When you set a custom bitrate, refer to the recommended settings from Alibaba Cloud to configure the corresponding bitrate. For more information about the recommended settings, see the following tables:

Table 1. Recommended settings for Resolution Priority mode

Resolution | initialVideoBitrate (kbit/s) | minVideoBitrate (kbit/s) | targetVideoBitrate (kbit/s) |

360p | 600 | 300 | 1000 |

480p | 800 | 300 | 1200 |

540p | 1000 | 600 | 1400 |

720p | 1500 | 600 | 2000 |

1080p | 1800 | 1200 | 2500 |

Table 2. Recommended settings for Fluency Priority mode

Resolution | initialVideoBitrate (kbit/s) | minVideoBitrate (kbit/s) | targetVideoBitrate (kbit/s) |

360p | 400 | 200 | 600 |

480p | 600 | 300 | 800 |

540p | 800 | 300 | 1000 |

720p | 1000 | 300 | 1200 |

1080p | 1500 | 1200 | 2200 |
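The two tables above can be kept in code as lookup tables so that custom mode starts from the recommended values. The following is a minimal sketch in plain C. The values are copied from the tables, and the second table is treated as the fluency priority set because its bitrates are lower, which is an assumption. The type and function names are hypothetical and are not part of the Push SDK.

```c
#include <stddef.h>

typedef enum { QUALITY_RESOLUTION_FIRST, QUALITY_FLUENCY_FIRST } quality_mode;

/* One table row. All bitrates are in kbit/s. */
typedef struct {
    int resolution_p;
    int initial_kbps;
    int min_kbps;
    int target_kbps;
} bitrate_preset;

static const bitrate_preset RESOLUTION_FIRST_PRESETS[] = {
    {360, 600, 300, 1000},  {480, 800, 300, 1200},   {540, 1000, 600, 1400},
    {720, 1500, 600, 2000}, {1080, 1800, 1200, 2500},
};

static const bitrate_preset FLUENCY_FIRST_PRESETS[] = {
    {360, 400, 200, 600},   {480, 600, 300, 800},    {540, 800, 300, 1000},
    {720, 1000, 300, 1200}, {1080, 1500, 1200, 2200},
};

/* Returns the row for the given mode and resolution, or NULL if none. */
const bitrate_preset *find_preset(quality_mode mode, int resolution_p) {
    const bitrate_preset *table = (mode == QUALITY_RESOLUTION_FIRST)
                                      ? RESOLUTION_FIRST_PRESETS
                                      : FLUENCY_FIRST_PRESETS;
    /* Both tables have the same number of rows. */
    size_t count = sizeof RESOLUTION_FIRST_PRESETS / sizeof RESOLUTION_FIRST_PRESETS[0];
    for (size_t i = 0; i < count; i++) {
        if (table[i].resolution_p == resolution_p) {
            return &table[i];
        }
    }
    return NULL;
}

/* A preset is consistent if min <= initial <= target. */
int is_consistent(const bitrate_preset *p) {
    return p->min_kbps <= p->initial_kbps && p->initial_kbps <= p->target_kbps;
}
```

For example, find_preset(QUALITY_RESOLUTION_FIRST, 540) returns the row with 1000, 600, and 1400 kbit/s, which matches the custom mode sample values. The is_consistent check is useful when you enter your own values, because the bitrate moves between the minimum and the target.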

Adaptive resolution

When dynamic resolution adjustment is enabled, the ingest stream resolution automatically decreases in poor network conditions. This improves video smoothness and clarity. Sample code:

config.enableAutoResolution = YES; // Enable adaptive resolution. The default value is NO.

Adaptive resolution takes effect only when the video quality mode is set to Resolution Priority or Fluency Priority. It does not take effect in Custom mode.

Some players may not support dynamic resolution. If you use the adaptive resolution feature, use ApsaraVideo Player.

Background music

The Push SDK supports background music playback, audio mixing, denoising, in-ear monitoring, and muting. Call the related API operations only after the preview starts. The following code provides an example:

/* Start playing background music. */

[self.livePusher startBGMWithMusicPathAsync:musicPath];

/* Stop playing background music. If you want to switch songs while background music is playing, simply call the startBGMWithMusicPathAsync operation. You do not need to stop the current playback. */

[self.livePusher stopBGMAsync];

/* Pause the background music. Call this operation only after the background music starts playing. */

[self.livePusher pauseBGM];

/* Resume the background music. Call this operation only when the background music is paused. */

[self.livePusher resumeBGM];

/* Enable loop playback. */

[self.livePusher setBGMLoop:true];

/* Set the denoising switch. When enabled, non-vocal sounds in the captured audio are filtered. This may cause slight suppression of human voices. Let users decide whether to enable this feature. This feature is disabled by default. */

[self.livePusher setAudioDenoise:true];

/* Set the in-ear monitoring switch. This feature is mainly used in karaoke scenarios. When enabled, the streamer can hear their own voice in the headphones. When disabled, the streamer cannot hear their own voice. This feature does not work if headphones are not connected. */

[self.livePusher setBGMEarsBack:true];

/* Configure audio mixing to adjust the volume of the background music and the captured human voice. */

[self.livePusher setBGMVolume:50];// Set the volume of the background music.

[self.livePusher setCaptureVolume:50];// Set the volume of the captured human voice.

/* Mute the audio. When muted, both the background music and the captured human voice are silenced. To mute the music or the voice separately, use the audio mixing operations to adjust their volumes. */

[self.livePusher setMute:isMute?true:false];

Capture snapshots

The Push SDK lets you capture snapshots of local video streams. The following is sample code:

/* Set the snapshot callback. */

[self.livePusher setSnapshotDelegate:self];

/* Call the snapshot API. */

[self.livePusher snapshot:1 interval:1];

Configure the retouching feature

The Alibaba Cloud Push SDK provides two retouching modes: basic and advanced. Basic retouching supports whitening, smoothing, and a rosy complexion. Advanced retouching supports features based on facial recognition, such as whitening, smoothing, a rosy complexion, eye enlargement, and face slimming. This feature is provided by the Queen SDK. The following code shows an example:

#pragma mark - "APIs for retouching types and parameters"

/**

* @brief Enables or disables a retouching type.

* @param type A value of QueenBeautyType.

* @param isOpen YES: Enables the type. NO: Disables the type.

*

*/

- (void)setQueenBeautyType:(kQueenBeautyType)type enable:(BOOL)isOpen;

/**

* @brief Sets retouching parameters.

* @param param The retouching parameter type. A value from QueenBeautyParams.

* @param value The value to set. The value ranges from 0 to 1. Values less than 0 are set to 0, and values greater than 1 are set to 1.

*/

- (void)setQueenBeautyParams:(kQueenBeautyParams)param

value:(float)value;

#pragma mark - "Filter-related APIs"

/**

* @brief Sets a filter image. Before you set the filter image, enable kQueenBeautyTypeLUT.

* @param imagePath The path of the filter image.

*/

- (void)setLutImagePath:(NSString *)imagePath;

#pragma mark - "Face shaping APIs"

/**

*@brief Sets the face shaping type. Before you set the type, enable kQueenBeautyTypeFaceShape.

*@param faceShapeType The face shaping type to set. For more information, see QueenBeautyFaceShapeType.

*@param value The value to set.

*/

- (void)setFaceShape:(kQueenBeautyFaceShapeType)faceShapeType

value:(float)value;

#pragma mark - "Makeup-related APIs"

/**

* @brief Sets the makeup type and the paths of image assets. Before you set the makeup, enable kQueenBeautyTypeMakeup.

* @param makeupType The makeup type.

* @param imagePaths A collection of paths to the makeup assets.

* @param blend The blend type.

*/

- (void)setMakeupWithType:(kQueenBeautyMakeupType)makeupType

paths:(NSArray<NSString *> *)imagePaths

blendType:(kQueenBeautyBlend)blend;

/**

* @brief Sets the makeup type and the paths of image assets.

* @param makeupType The makeup type.

* @param imagePaths A collection of paths to the makeup assets.

* @param blend The blend type.

* @param fps The frame rate.

*/

- (void)setMakeupWithType:(kQueenBeautyMakeupType)makeupType

paths:(NSArray<NSString *> *)imagePaths

blendType:(kQueenBeautyBlend)blend fps:(int)fps;

/**

* @brief Sets the makeup transparency. You can specify the gender.

* @param makeupType The makeup type.

* @param isFeMale Specifies whether the gender is female. YES: female. NO: male.

* @param alpha The makeup transparency.

*/

- (void)setMakeupAlphaWithType:(kQueenBeautyMakeupType)makeupType

female:(BOOL)isFeMale alpha:(float)alpha;

/**

* @brief Sets the blend type for the makeup.

* @param makeupType The makeup type.

* @param blend The blend type.

*/

- (void)setMakeupBlendWithType:(kQueenBeautyMakeupType)makeupType

blendType:(kQueenBeautyBlend)blend;

/**

* @brief Clears all makeup settings.

*/

- (void)resetAllMakeupType;

Adjust retouching parameters in real time

The Push SDK supports real-time adjustment of retouching parameters during stream ingest. Enable the retouching feature, and then adjust the corresponding parameter values. The following code shows an example:

[_queenEngine setQueenBeautyType:kQueenBeautyTypeSkinBuffing enable:YES];

[_queenEngine setQueenBeautyType:kQueenBeautyTypeSkinWhiting enable:YES];

[_queenEngine setQueenBeautyParams:kQueenBeautyParamsWhitening value:0.8f];

[_queenEngine setQueenBeautyParams:kQueenBeautyParamsSharpen value:0.6f];

[_queenEngine setQueenBeautyParams:kQueenBeautyParamsSkinBuffing value:0.6f];

Configure the live quiz feature

The live quiz feature works by inserting supplemental enhancement information (SEI) into a live stream. The player then parses the SEI. The Push SDK provides a method to insert SEI messages. Call this method only during stream ingest. The following code is an example:

/*

msg: The SEI message body to insert into the stream. JSON format is recommended. ApsaraVideo Player SDK can receive and parse this message for display.

repeatCount: The number of frames in which to send the message. To prevent the SEI message from being dropped, set the number of repetitions. For example, a value of 100 inserts the message into the next 100 frames. The player deduplicates identical SEI messages.

delayTime: The delay in milliseconds before sending the message.

KeyFrameOnly: Specifies whether to send the message only in keyframes.

*/

[self.livePusher sendMessage:@"Question information" repeatCount:100 delayTime:0 KeyFrameOnly:false];

iPhone X adaptation

Normally, setting the preview view to full screen works correctly. However, the iPhone X has a special aspect ratio. This causes the preview image to stretch when displayed in full screen. Do not use a full-screen view for previews on an iPhone X.

Change the view size during stream ingest

Traverse the UIView assigned in the startPreview or startPreviewAsync call. Change the frame for all subviews in the preview view. For example:

[self.livePusher startPreviewAsync:self.previewView];

for (UIView *subView in [self.previewView subviews]) {

// ...

}

Play external audio

To play external audio on the stream ingest page, use AVAudioPlayer, because the SDK is currently incompatible with AudioServicesPlaySystemSound. After you start playback, update the AVAudioSession settings. The following sample code shows how:

- (void)setupAudioPlayer {

NSString *filePath = [[NSBundle mainBundle] pathForResource:@"sound" ofType:@"wav"];

// pathForResource: returns a file system path, so build a file URL from it.

NSURL *fileUrl = [NSURL fileURLWithPath:filePath];

self.player = [[AVAudioPlayer alloc] initWithContentsOfURL:fileUrl error:nil];

self.player.volume = 1.0;

[self.player prepareToPlay];

}

- (void)playAudio {

self.player.volume = 1.0;

[self.player play];

// Configure AVAudioSession.

AVAudioSession *session = [AVAudioSession sharedInstance];

[session setMode:AVAudioSessionModeVideoChat error:nil];

[session overrideOutputAudioPort:AVAudioSessionPortOverrideSpeaker error:nil];

[session setCategory:AVAudioSessionCategoryPlayAndRecord withOptions:AVAudioSessionCategoryOptionDefaultToSpeaker | AVAudioSessionCategoryOptionAllowBluetooth | AVAudioSessionCategoryOptionMixWithOthers error:nil];

[session setActive:YES error:nil];

}

Background mode and phone calls

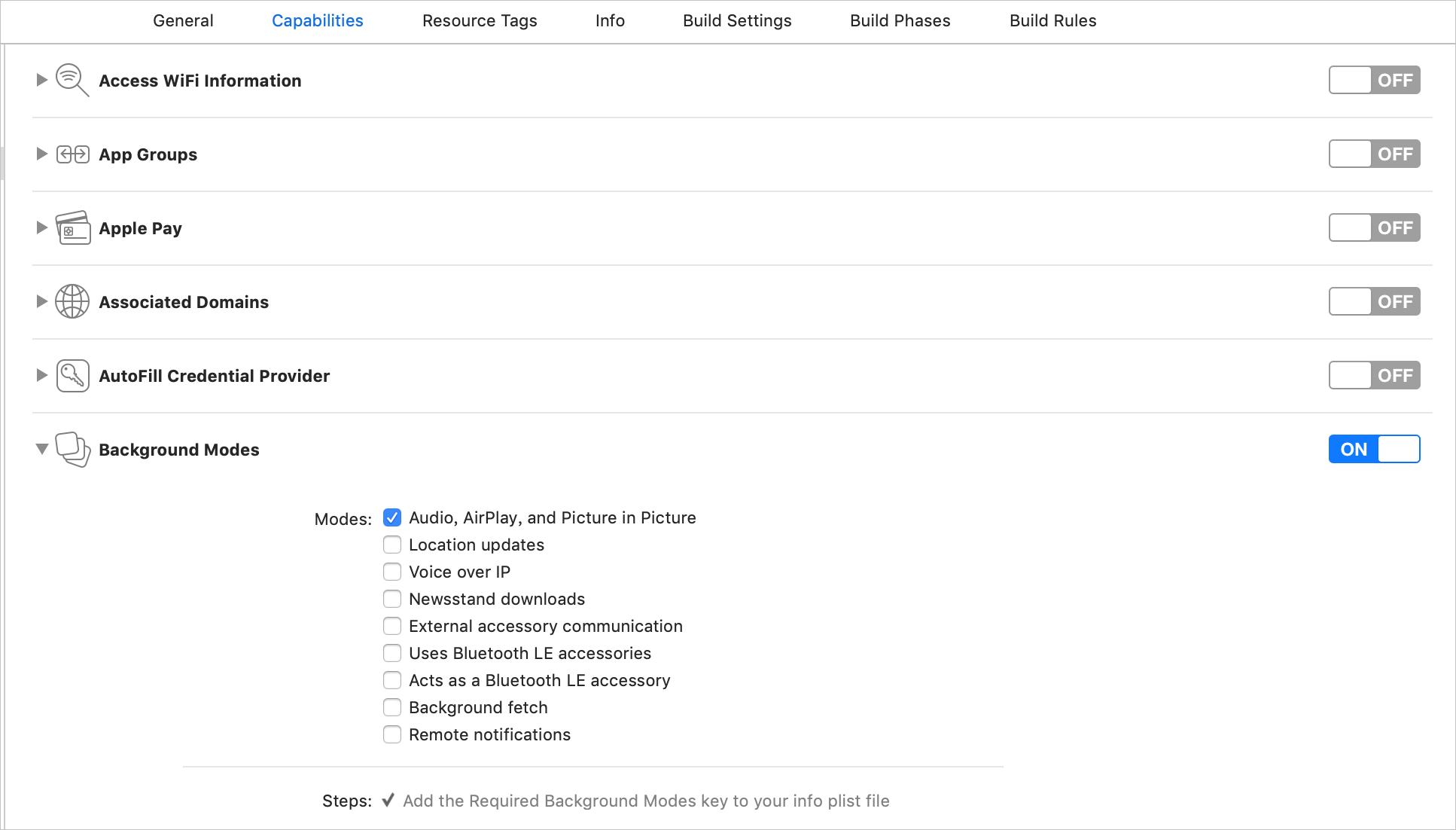

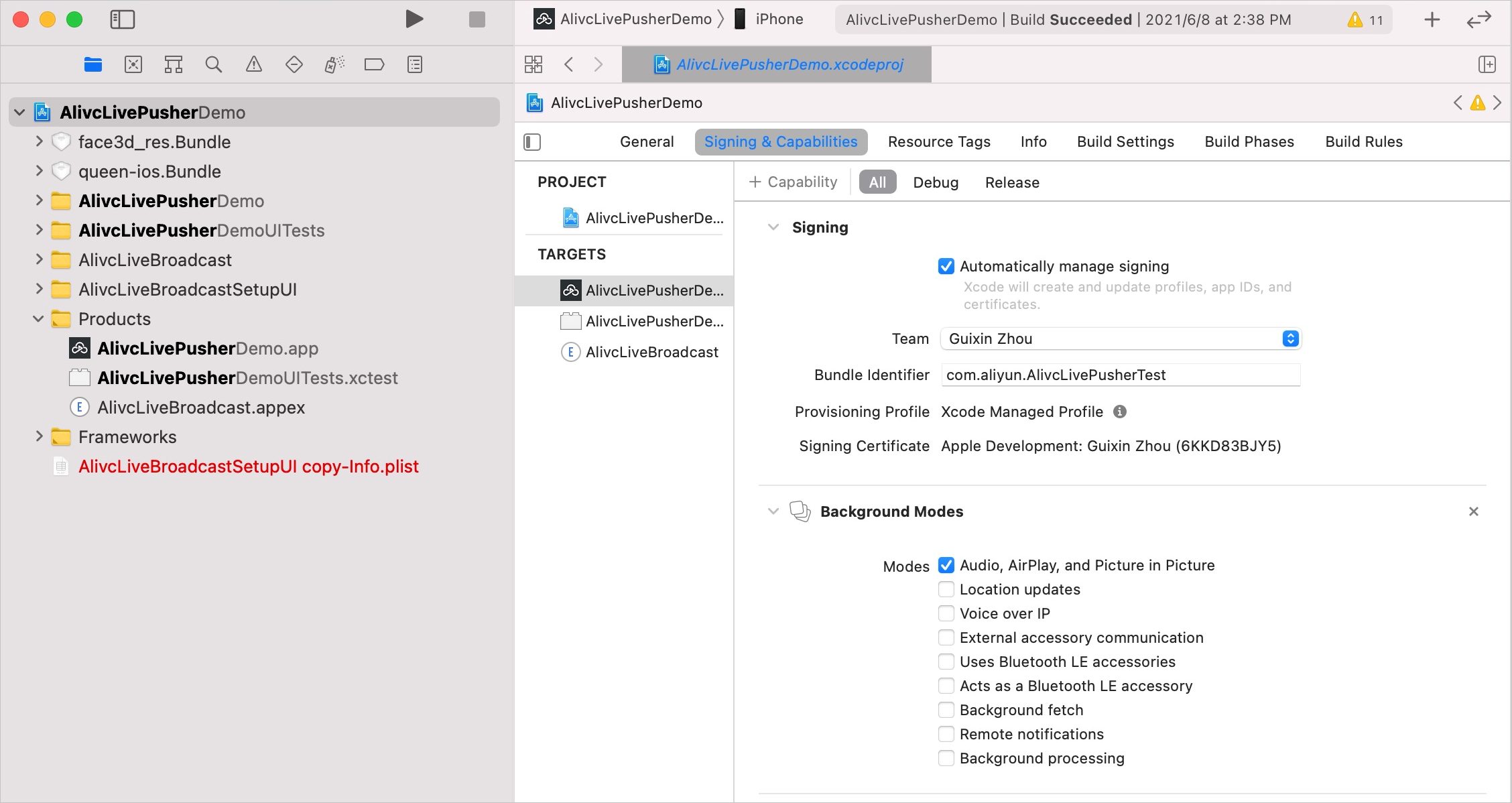

The SDK handles background processing automatically. By default, when the app moves to the background, the SDK continues to ingest the audio stream and pauses the video on the last frame. To ensure that the app can collect audio in the background, open the Capabilities settings of your app target, enable Background Modes, and select Audio, AirPlay, and Picture in Picture.

To stop audio stream ingest when the app moves to the background, destroy the stream ingest engine. When the app returns to the foreground, re-create the engine to resume the stream.

If you use this approach, listen for UIApplicationWillResignActiveNotification and UIApplicationDidBecomeActiveNotification to destroy and re-create the engine. Other approaches are unreliable and may cause errors.

Callbacks

The Push SDK includes the following callbacks:

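| Callback Type | Callback Class Name |
| --- | --- |
| Stream ingest callbacks | AlivcLivePusherInfoDelegate |
| Network callbacks | AlivcLivePusherNetworkDelegate |
| Error callbacks | AlivcLivePusherErrorDelegate |
| Background music callbacks | AlivcLivePusherBGMDelegate |
| Callbacks for external retouching and filter processing | AlivcLivePusherCustomFilterDelegate |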

Stream ingest callbacks

Stream ingest callbacks notify the app of the SDK status. These callbacks include notifications for when the preview starts, the first video frame is rendered, the first audio or video frame is sent, stream ingest starts, and stream ingest stops.

onPushStarted: Indicates a successful connection to the server.

onFirstFramePushed: Indicates that the first audio or video frame was sent successfully.

When both onPushStarted and onFirstFramePushed have been received, the SDK has successfully started stream ingest.

Network callbacks

Network callbacks notify the app of the network and connection status. For brief network fluctuations or transitions that are within the reconnection timeout and retry limits set in `AlivcLivePushConfig`, the SDK automatically reconnects. If the reconnection succeeds, stream ingest resumes.

onConnectFail: Indicates that stream ingest failed. Check whether the ingest URL is invalid, contains invalid characters, has an authentication issue, exceeds the concurrent stream ingest limit, or is on the ingest-disabled blacklist. After you confirm that the ingest URL is valid and active, try to ingest the stream again. The specific error codes are 0x30020901 to 0x30020905 and 0x30010900 to 0x30010901.

onConnectionLost: Indicates that the connection was lost. The SDK automatically reconnects and triggers `onReconnectStart`. If the connection is not recovered after the maximum number of reconnection attempts (`config.connectRetryCount`), the SDK triggers `onReconnectError`.

onNetworkPoor: Indicates a slow network. This callback means the current network is not strong enough for stream ingest, but the stream is not interrupted. You can handle your business logic here, such as showing a UI notification to the user.

onNetworkRecovery: Indicates that the network has recovered.

onReconnectError: Indicates that reconnection failed. Check the current network. When the network recovers, ingest the stream again.

onSendDataTimeout: Indicates a data sending timeout. Check the current network. When the network recovers, stop the current stream ingest and then start a new one.

onPushURLAuthenticationOverdue: Indicates that the URL signing for the current ingest URL has expired. You must pass a new URL to the SDK.
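As a rough illustration of the onConnectFail error-code ranges listed above, the following sketch checks whether a numeric code falls in either range. It is written in plain C so that it can be placed in an Objective-C source file. The function name `IsConnectFailCode` is hypothetical and not part of the Push SDK, and how you obtain the numeric code from the callback's error object is not covered in this section, so treat that step as an assumption.

```c
#include <stdbool.h>

/* Hypothetical helper, not part of the Push SDK: returns true when `code`
   falls in one of the two onConnectFail ranges listed above
   (0x30020901 to 0x30020905, or 0x30010900 to 0x30010901). */
static bool IsConnectFailCode(unsigned long code) {
    bool inFirstRange  = code >= 0x30020901UL && code <= 0x30020905UL;
    bool inSecondRange = code >= 0x30010900UL && code <= 0x30010901UL;
    return inFirstRange || inSecondRange;
}
```

A check like this could be used, for example, to decide whether to ask the streamer to verify the ingest URL before retrying.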

Error callbacks

onSystemError: A system device error occurred. You must destroy the engine and try again.

onSDKError: An SDK error occurred. Handle the error based on the error code:

If the error code is 805438211, it indicates poor device performance and a low frame rate for encoding and rendering. Notify the streamer and stop time-consuming business logic, such as advanced retouching and animations, at the app layer.

You must handle the errors that are reported when the app lacks microphone or camera permission. The error code for missing microphone permission is 268455940. The error code for missing camera permission is 268455939.

For all other errors, just write them to the log. No other action is required.
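The handling rules above can be sketched as a small lookup that maps an SDK error code to the action the app should take. The enum and function names below are hypothetical and not part of the Push SDK. Only the three numeric codes come from this document. The sketch is plain C so that it can be used from an Objective-C delegate implementation. It assumes you have already extracted the numeric code from the error object passed to onSDKError.

```c
/* Hypothetical action categories; these names are not defined by the Push SDK. */
typedef enum {
    PushErrorActionLogOnly,           /* any other code: write it to the log */
    PushErrorActionReduceLoad,        /* 805438211: low encoding/rendering frame rate */
    PushErrorActionRequestMicrophone, /* 268455940: missing microphone permission */
    PushErrorActionRequestCamera      /* 268455939: missing camera permission */
} PushErrorAction;

/* Maps an SDK error code to the action described in the list above. */
static PushErrorAction PushErrorActionForCode(long code) {
    switch (code) {
        case 805438211L:
            return PushErrorActionReduceLoad;
        case 268455940L:
            return PushErrorActionRequestMicrophone;
        case 268455939L:
            return PushErrorActionRequestCamera;
        default:
            return PushErrorActionLogOnly;
    }
}
```

Keeping the mapping in one function makes it easier to add further codes later without touching the delegate method itself.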

Background music callbacks

onOpenFailed: The background music failed to start. Check whether the music file is valid and whether its path is correct. Call the startBGMWithMusicPathAsync method to try playing it again.

onDownloadTimeout: A timeout occurred during background music playback. This often happens when playing background music from a network URL. Prompt the streamer to check the current network status. Call the startBGMWithMusicPathAsync method to play the music again.

Callbacks for external retouching and filter processing

Use the AlivcLivePusherCustomFilterDelegate callback to integrate with a third-party retouching SDK. This lets you implement basic and advanced retouching features. The main purpose of AlivcLivePusherCustomFilterDelegate is to provide the internal texture or CVPixelBuffer from the Push SDK to the retouching SDK for processing. The retouching SDK then returns the processed texture or CVPixelBuffer to the Push SDK to apply the retouching effects.

When the livePushMode switch in AlivcLivePushConfig is set to AlivcLivePushBasicMode, the SDK uses AlivcLivePusherCustomFilterDelegate to return the texture ID, not the CVPixelBuffer. The core callbacks are as follows:

onCreate: The OpenGL context is created. This callback is typically used to initialize the retouching engine.

onProcess: An OpenGL texture update callback that receives the original texture ID from the SDK. In this callback, you can call a retouching method and return the processed texture ID.

onDestory: The OpenGL context is destroyed. This callback is typically used to destroy the retouching engine.

Common methods and interfaces

/* In custom mode, you can adjust the minimum and target bitrates in real time. */

[self.livePusher setTargetVideoBitrate:800];

[self.livePusher setMinVideoBitrate:200];

/* Get the stream ingest status. */

BOOL isPushing = [self.livePusher isPushing];

/* Get the ingest URL. */

NSString *pushURLString = [self.livePusher getPushURL];

/* Get stream ingest performance and debugging information. For details about performance metrics, see the API documentation or the comments in the code. */

AlivcLivePushStatsInfo *info = [self.livePusher getLivePushStatusInfo];

/* Get the SDK version number. */

NSString *sdkVersion = [self.livePusher getSDKVersion];

/* Set the log level to filter debugging information as needed. */

[self.livePusher setLogLevel:(AlivcLivePushLogLevelDebug)];

Debugging tools

The SDK provides the DebugView UI debugging tool, a movable global floating window that is always displayed at the top of the view. It includes debug features such as viewing stream ingest logs, real-time detection of stream ingest performance metrics, and line charts of key performance metrics.

Do not call the method that opens DebugView in release builds.

Sample code:

[AlivcLivePusher showDebugView]; // Open DebugView.

API documentation

FAQ

Stream ingest fails

Use the troubleshooting tool to check if the ingest URL is valid.

How do I obtain information about ingested streams?

Go to Stream Management and view ingested audio and video streams in Active Streams.

How do I play a stream?

After you start stream ingest, use a player (such as ApsaraVideo Player, FFplay, or VLC) to test stream pulling. To obtain playback URLs, see Generate streaming URLs.

The application failed App Store review

The RtsSDK binary is a fat library that contains both device and simulator slices. Apple rejects an IPA that contains simulator architectures. Use lipo -remove to remove the x86_64 architecture.