aliyun-timestream is a plug-in that the Alibaba Cloud Elasticsearch team developed based on the time series features provided by the Elastic community. The plug-in improves the performance of storing and using time series data. Alibaba Cloud Elasticsearch can be seamlessly integrated with Prometheus and Grafana and supports APIs for Prometheus queries. After the integration, you can use time series indexes in Elasticsearch as Prometheus data sources and display the data in Grafana. This improves the storage and analytics performance for the metric data in time series indexes and reduces costs. This topic describes how to integrate Elasticsearch with Prometheus and Grafana based on aliyun-timestream to implement integrated monitoring.

Background information

By default, Prometheus stores data on local disks. Local storage has the following limitations:

- The stored data has no replicas. If a machine in the local cluster fails, Prometheus cannot access the stored data.

- Data is stored on a single machine, and scale-out is not supported. Storage bottlenecks may occur as the amount of data grows.

- Data backup is not supported. If a hard disk is damaged, the data may not be recoverable.

- Local disk storage is costly, and hot and cold data cannot be stored separately.

If you use the high-availability solution provided by Prometheus, we recommend that you store your data in a highly available, distributed remote storage system. aliyun-timestream provides remote storage and query capabilities for Prometheus and builds on Elasticsearch capabilities such as its distributed architecture, scalability, high availability, and separated storage of hot and cold data. This makes aliyun-timestream well suited as a remote storage system for Prometheus.

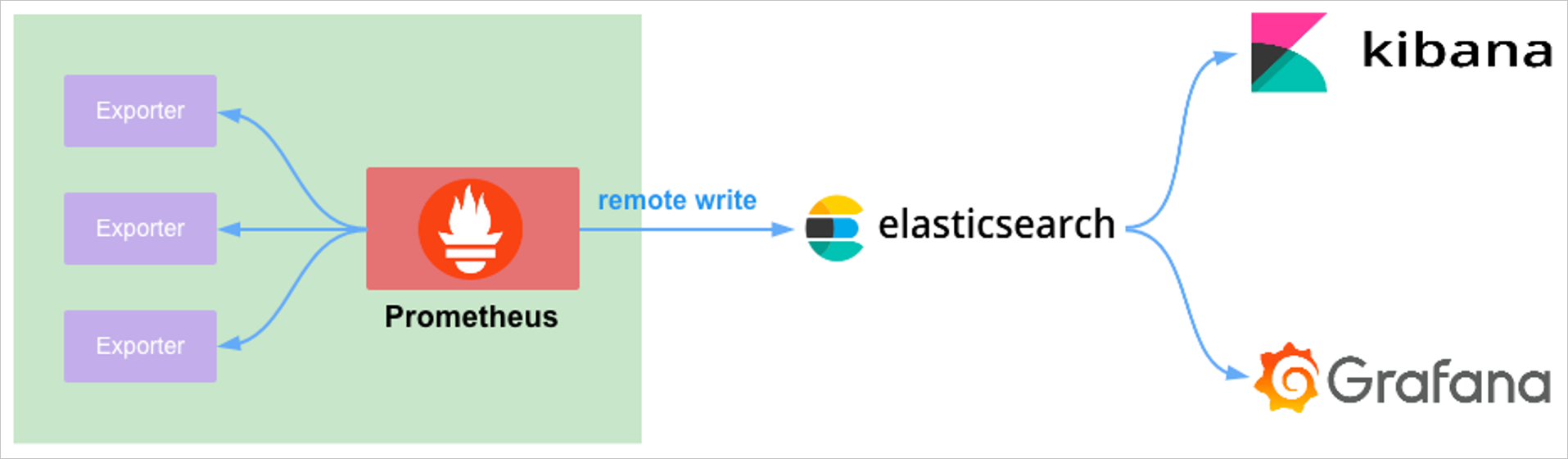

- Prometheus collects data from exporters.

- Prometheus uses the remote write feature to synchronize the collected data to Elasticsearch.

- Users view the data that Prometheus synchronizes to Elasticsearch in Kibana and Grafana.

  Note: When you use Grafana to access the data synchronized to Elasticsearch, you can use either a native Elasticsearch data source or a Prometheus data source. A Prometheus data source lets you execute Prometheus Query Language (PromQL) statements against the data in Elasticsearch to query metric data.
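To illustrate the PromQL path described in the note, the following Python sketch builds an instant query for the standard Prometheus HTTP API (`GET /api/v1/query`) and reads a `vector` result. It is a sketch under stated assumptions: `base_url` is assumed to be a Prometheus-compatible endpoint, such as the local Prometheus server at localhost:9090 that is configured later in this topic, and whether the Prometheus-compatible API of Elasticsearch uses the same paths is not covered here. The function names are hypothetical.

```python
import json
from urllib.parse import urlencode


def promql_query_url(base_url: str, expr: str) -> str:
    """Build an instant-query URL (GET /api/v1/query) for a PromQL expression.

    ASSUMPTION: base_url points at a Prometheus-compatible HTTP API, for
    example the local Prometheus server at http://localhost:9090.
    """
    # urlencode percent-encodes braces, quotes, and brackets in the expression.
    query_string = urlencode({"query": expr})
    return f"{base_url.rstrip('/')}/api/v1/query?{query_string}"


def parse_vector_result(body: str) -> list[tuple[dict, float]]:
    """Return (labels, value) pairs from an instant-query JSON response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    data = payload["data"]
    if data.get("resultType") != "vector":
        raise ValueError(f"expected a vector result, got {data.get('resultType')}")
    # Each sample is [<unix timestamp>, "<value>"]; the value arrives as a string.
    return [(item["metric"], float(item["value"][1])) for item in data["result"]]


if __name__ == "__main__":
    # Hypothetical usage: ask whether the node scrape job is up.
    print(promql_query_url("http://localhost:9090", 'up{job="node"}'))
```

For example, `promql_query_url("http://localhost:9090", 'up{job="node"}')` returns `http://localhost:9090/api/v1/query?query=up%7Bjob%3D%22node%22%7D`.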

Prerequisites

An Elasticsearch cluster of the Standard Edition is created and meets one of the following version requirements:

- The cluster version is V7.16 or later, and the kernel version is V1.7.0 or later.

- The cluster version is V7.10, and the kernel version is V1.8.0 or later.

For information about how to create an Elasticsearch cluster, see Create an Alibaba Cloud Elasticsearch cluster.
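The two alternatives in this version requirement are easy to misread, so the following Python sketch encodes them as a single check. The function names are hypothetical, version strings are assumed to be dot-separated numbers with an optional leading V (as written in this topic), and treating any later major version as satisfying "V7.16 or later" is an assumption.

```python
def parse_version(text: str) -> tuple[int, int, int]:
    """Convert "V7.16", "7.10.0", or "1.8" to a zero-padded (major, minor, patch) tuple."""
    parts = [int(p) for p in text.strip().lstrip("Vv").split(".")]
    parts += [0] * (3 - len(parts))  # pad "1.8" to (1, 8, 0)
    return tuple(parts[:3])


def meets_timestream_prerequisites(cluster_version: str, kernel_version: str) -> bool:
    """Check a Standard Edition cluster against the two allowed version combinations.

    Rule 1: cluster V7.16 or later with kernel V1.7.0 or later.
    Rule 2: cluster V7.10 with kernel V1.8.0 or later.
    """
    cluster = parse_version(cluster_version)[:2]  # only major.minor matters here
    kernel = parse_version(kernel_version)
    if cluster >= (7, 16):
        return kernel >= (1, 7, 0)
    if cluster == (7, 10):
        return kernel >= (1, 8, 0)
    return False
```

Python compares tuples element by element, which is why the versions are padded to three parts before the comparison.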

Procedure

Step 1: Make preparations

- Create an Elasticsearch V7.16 cluster of the Standard Edition. For more information, see Create an Alibaba Cloud Elasticsearch cluster.

- Create an Elastic Compute Service (ECS) instance that runs a Linux operating system and resides in the same virtual private cloud (VPC) as the Elasticsearch cluster that you created in the previous substep. For information about how to create an ECS instance, see Create an instance by using the wizard. The ECS instance is used to access the Elasticsearch cluster and to install Prometheus and Grafana, which are then integrated with Elasticsearch.

- Create a time series index in the Elasticsearch cluster to receive the data that Prometheus synchronizes. In this example, the time series index is named prom_index.
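The time series index can also be created from a script. The following Python sketch only prepares the HTTP request and does not send it. It assumes that aliyun-timestream creates a time series index through `PUT _time_stream/<index>` (in the Kibana console, `PUT _time_stream/prom_index`). This path matches the `_time_stream` prefix of the remote_write URL used in Step 3 but is not stated in this topic, so check the aliyun-timestream API reference for your kernel version. The function name is hypothetical, and `xxx` is the endpoint placeholder from the prometheus.yml example.

```python
import base64
import urllib.request


def build_create_time_stream_request(endpoint: str, index: str, username: str,
                                     password: str, port: int = 9200) -> urllib.request.Request:
    """Prepare (but do not send) a request that creates a time series index.

    ASSUMPTION: aliyun-timestream creates a time series index with
    PUT _time_stream/<index>. Verify this path for your kernel version.
    """
    url = f"http://{endpoint}:{port}/_time_stream/{index}"
    # The cluster uses HTTP Basic authentication, for example with the elastic user.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, method="PUT", headers={"Authorization": f"Basic {token}"})


if __name__ == "__main__":
    # "xxx" and "xxxx" are the placeholders used in the prometheus.yml example.
    req = build_create_time_stream_request("xxx", "prom_index", "elastic", "xxxx")
    print(req.get_method(), req.full_url)
    # To send it from the ECS instance after replacing the placeholders:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status, resp.read().decode())
```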

Step 2: Download, install, and start the Node Exporter

The Prometheus Node Exporter exposes various hardware- and kernel-related metrics and allows Prometheus to read metric data. For more information about the Node Exporter and how to install it, see node_exporter.

- Connect to the ECS instance. For more information, see Connect to a Linux instance by using a password or key.

  Note: In this example, a regular user is used to connect to the ECS instance.

- Download the installation package of the Node Exporter. In this example, the installation package of Node Exporter 1.3.1 is downloaded. Sample download command:

      wget https://github.com/prometheus/node_exporter/releases/download/v1.3.1/node_exporter-1.3.1.linux-amd64.tar.gz

- Decompress the installation package to install the Node Exporter, and then start the Node Exporter.

      tar xvfz node_exporter-1.3.1.linux-amd64.tar.gz
      cd node_exporter-1.3.1.linux-amd64
      ./node_exporter
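The Node Exporter serves its metrics in the Prometheus text exposition format, by default on port 9100 at the /metrics path. The following Python sketch fetches and parses that output so that you can confirm the exporter is running before you configure Prometheus. It is a simplified, hypothetical parser: it skips `# HELP` and `# TYPE` comments, ignores sample timestamps, and does not unescape label values.

```python
import re
import urllib.request

# A sample line: metric name, optional {label="value",...}, value, optional timestamp.
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>.*)\})?'
    r'\s+(?P<value>\S+)'
    r'(?:\s+-?\d+)?$'
)
# label="value" pairs; \\. keeps escaped characters such as \" inside the value.
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_exposition(text: str) -> list[tuple[str, dict[str, str], float]]:
    """Return (metric name, labels, value) for every sample line in the text."""
    samples = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE metadata
        match = SAMPLE_RE.match(line)
        if match is None:
            raise ValueError(f"cannot parse line: {line!r}")
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        # float() also accepts the special values NaN, +Inf, and -Inf.
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


def fetch_node_metrics(url: str = "http://127.0.0.1:9100/metrics",
                       timeout: float = 5.0) -> list[tuple[str, dict[str, str], float]]:
    """Download and parse the Node Exporter metrics (the exporter must be running)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_exposition(resp.read().decode("utf-8"))
```

Because `./node_exporter` runs in the foreground, call `fetch_node_metrics()` from a second session on the ECS instance.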

Step 3: Download, configure, and start Prometheus

- Connect to the ECS instance. For more information, see Connect to a Linux instance by using a password or key.

- Download the Prometheus installation package to your home directory. In this example, the Prometheus 2.36.2 installation package is downloaded. Sample download commands:

      cd ~
      wget https://github.com/prometheus/prometheus/releases/download/v2.36.2/prometheus-2.36.2.linux-amd64.tar.gz

- Decompress the Prometheus installation package.

      tar xvfz prometheus-2.36.2.linux-amd64.tar.gz

- In the prometheus.yml file in the Prometheus installation directory, configure the node_exporter and remote_write parameters.

      cd prometheus-2.36.2.linux-amd64
      vim prometheus.yml

  The following code provides a configuration example:

      scrape_configs:
        # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
        - job_name: 'prometheus'
          # metrics_path defaults to '/metrics'
          # scheme defaults to 'http'.
          static_configs:
            - targets: ['localhost:9090']
        # Configure node_exporter.
        - job_name: "node"
          static_configs:
            - targets: ["127.0.0.1:9100"]
      # Configure remote_write and make sure that Prometheus can access the Elasticsearch cluster.
      remote_write:
        - url: "http://xxx:9200/_time_stream/prom_write/prom_index"
          basic_auth:
            username: elastic
            password: xxxx

  Parameter descriptions:

  - node_exporter: the connection information of the Node Exporter. Configure the targets parameter in the `<IP address of the Node Exporter>:<Port number>` format. In this example, Prometheus and the Node Exporter are installed on the same ECS instance, so the IP address is 127.0.0.1 and the port number is the default port 9100.

  - remote_write: the connection information of the time series index in the Elasticsearch cluster. You must configure the following basic parameters. For information about the advanced parameters that can be configured in remote_write, see remote_write.

    - url: the URL that is used to access the time series index, in the `http://<Public or internal endpoint of the Elasticsearch cluster>:9200/_time_stream/prom_write/<yourTimeStreamIndex>` format, where:

      - Public or internal endpoint of the Elasticsearch cluster: You can obtain the endpoint on the Basic Information page of the Elasticsearch cluster in the Elasticsearch console. If the ECS instance on which Prometheus is installed resides in the same VPC as the Elasticsearch cluster, you can use the internal endpoint, as in this example. If they reside in different VPCs, you must use the public endpoint and configure a public IP address whitelist for the Elasticsearch cluster. For more information, see Configure a public or private IP address whitelist for an Elasticsearch cluster.

      - `<yourTimeStreamIndex>`: the name of the time series index that receives the data synchronized by Prometheus. You must create this index in advance. In this example, the prom_index time series index is used.

    - username: the username that is used to access the time series index. The default username is elastic. You can also use a custom user, but you must make sure that the user has access and operation permissions on the time series index. For information about how to grant the required permissions, see Use the RBAC mechanism provided by Elasticsearch X-Pack to implement access control.

    - password: the password of the user. The password of the elastic account is specified when you create the Elasticsearch cluster. If you forget the password, you can reset it. For the procedure and precautions, see Reset the access password for an Elasticsearch cluster.

- Start Prometheus.

./prometheus - Check whether data is synchronized by Prometheus to the time series index in the Elasticsearch cluster. In the Kibana console, run the following command to perform the check:

- Query the data in the prom_index time series index.

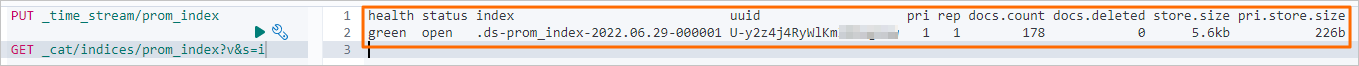

GET _cat/indices/prom_index?v&s=iIf the data is synchronized to the prom_index time series index, the result shown in the following figure is returned.

- Check whether you can obtain and view the data.

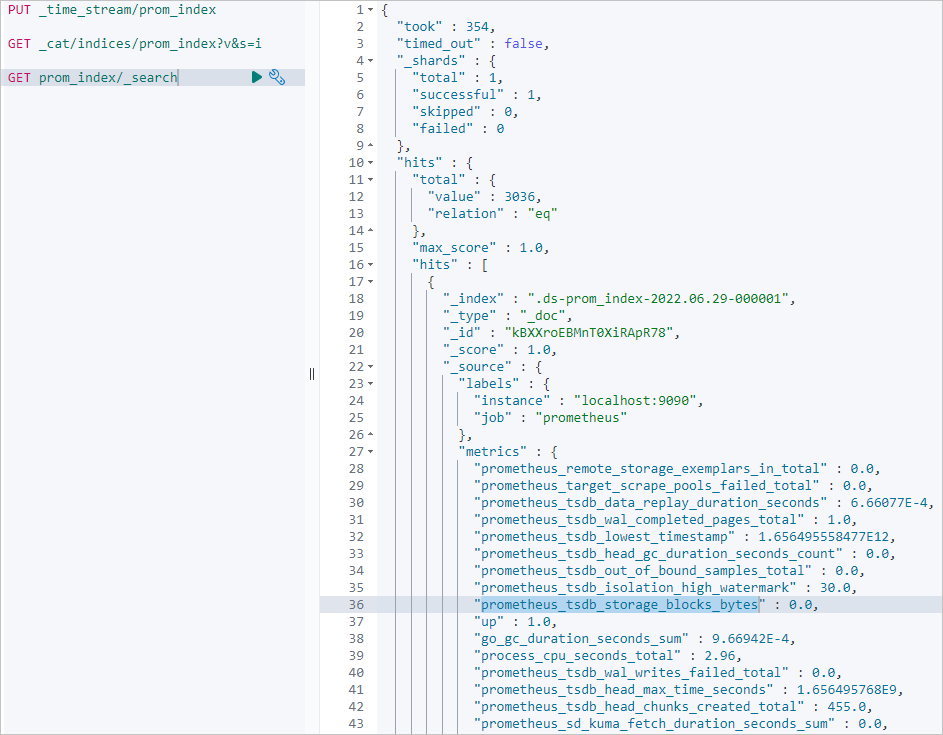

GET prom_index/_searchIf the data is synchronized to the prom_index time series index, the result shown in the following figure is returned.

- Query the data in the prom_index time series index.
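The following Python sketch mirrors this step: it builds the remote_write URL in the documented `http://<endpoint>:9200/_time_stream/prom_write/<yourTimeStreamIndex>` format, renders the example prometheus.yml, and parses the tabular output of `GET _cat/indices/prom_index?v&s=i`. The function names are hypothetical. The rendered file contains only the sections shown in the example (no `global` or `alerting` sections), and the `_cat` parser assumes that every column has a value, which may not hold for closed indices.

```python
import json


def remote_write_url(endpoint: str, index: str, port: int = 9200) -> str:
    """Build the remote_write URL in the format documented for the url parameter."""
    if not endpoint or not index:
        raise ValueError("endpoint and index must not be empty")
    return f"http://{endpoint}:{port}/_time_stream/prom_write/{index}"


def render_prometheus_yml(node_target: str, endpoint: str, index: str,
                          username: str, password: str) -> str:
    """Render the example prometheus.yml (scrape_configs and remote_write only)."""
    # json.dumps emits double-quoted strings, which YAML also accepts, so
    # quotes or backslashes in the password are escaped correctly.
    q = json.dumps
    lines = [
        "scrape_configs:",
        "  - job_name: 'prometheus'",
        "    static_configs:",
        "      - targets: ['localhost:9090']",
        "  - job_name: \"node\"",
        "    static_configs:",
        f"      - targets: [{q(node_target)}]",
        "remote_write:",
        f"  - url: {q(remote_write_url(endpoint, index))}",
        "    basic_auth:",
        f"      username: {q(username)}",
        f"      password: {q(password)}",
    ]
    return "\n".join(lines) + "\n"


def parse_cat_indices(text: str) -> list[dict[str, str]]:
    """Parse `_cat/indices?v` output: a header row, then whitespace-separated rows."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if not rows:
        return []
    header, *body = rows
    return [dict(zip(header, row)) for row in body]


if __name__ == "__main__":
    # "xxx" and "xxxx" are the placeholders used in the example configuration.
    print(render_prometheus_yml("127.0.0.1:9100", "xxx", "prom_index", "elastic", "xxxx"))
```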

Step 4: Download, start, and configure Grafana

- Connect to the ECS instance. For more information, see Connect to a Linux instance by using a password or key.

- Download the Grafana installation package to your home directory. In this example, the Grafana 9.0.2 installation package is downloaded. Sample download commands:

      cd ~
      wget https://dl.grafana.com/enterprise/release/grafana-enterprise-9.0.2.linux-amd64.tar.gz

- Decompress the installation package to install Grafana, and then start Grafana.

      tar xvfz grafana-enterprise-9.0.2.linux-amd64.tar.gz
      cd grafana-9.0.2
      ./bin/grafana-server

- In the address bar of a browser, enter `http://<Public IP address of the ECS instance>:3000` to go to the logon page of the Grafana console. Enter the username and password to log on to the Grafana console.

  Note:

  - The first time you log on to the Grafana console, use the default username and password, which are both admin. After you log on, the system prompts you to change the password. After you change the password, you can log on to the Grafana console again.

  - To obtain the public IP address of the ECS instance, log on to the ECS console, choose Instances & Images > Instances in the left-side navigation pane, find the ECS instance on the Instances page, and view the public IP address in the IP Address column.

  - The default port of Grafana is 3000. To access Grafana in a browser over port 3000, you must add an Allow inbound rule to the security group of the ECS instance. In the rule, set the destination port to 3000 and the source to the IP address of your client. For more information, see Add a security group rule.

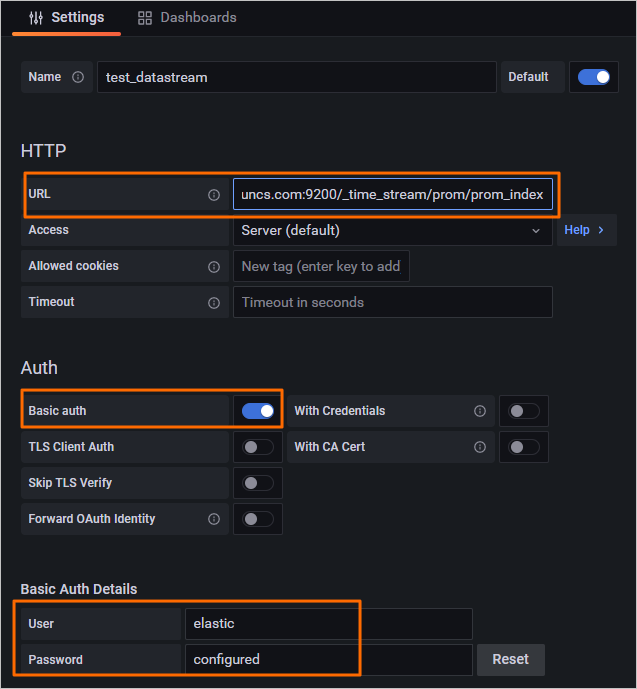

- In the Grafana console, add a data source for Prometheus.

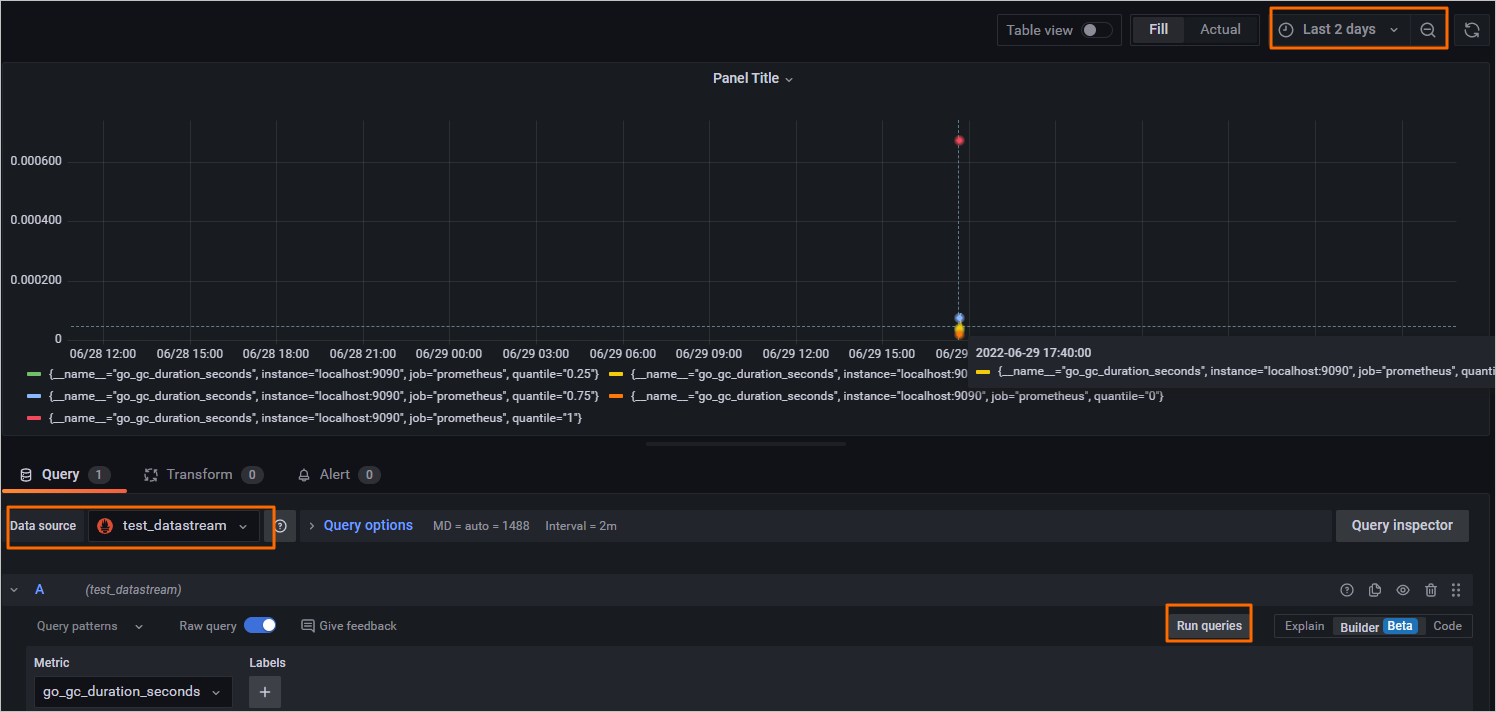

- Create a dashboard to display the metric data from the Prometheus data source.

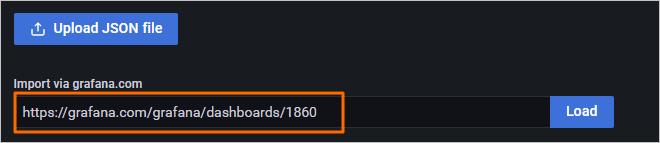

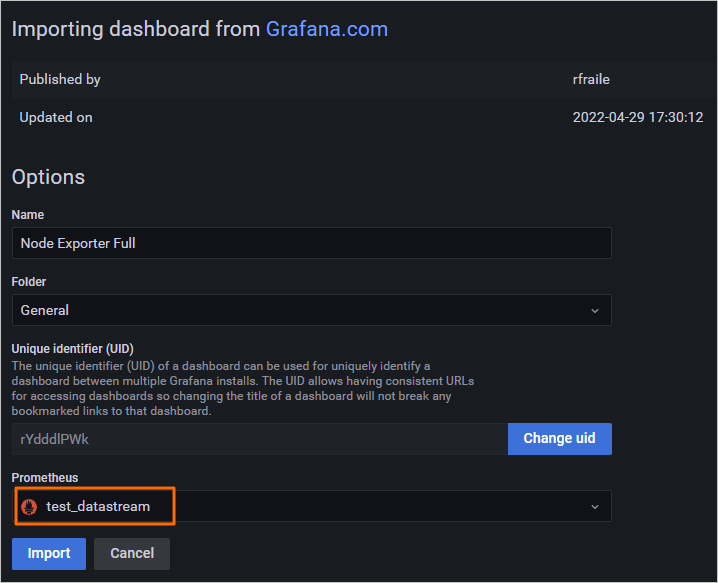

- In the Grafana console, import the built-in Grafana dashboard for the Node Exporter and select the Prometheus data source to generate a dashboard for metric monitoring.
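As an alternative to adding the data source in the console, Grafana exposes an HTTP API for data sources (`POST /api/datasources`). The following Python sketch only prepares that request. The `prometheus_url` value is an assumption: pass the Prometheus-compatible endpoint that you would otherwise enter in the console, for example the local Prometheus server at http://localhost:9090, and set `basic_auth_user` and `basic_auth_password` only if that endpoint requires authentication, as an Elasticsearch cluster does. The function and data source names are hypothetical.

```python
import base64
import json
import urllib.request
from typing import Optional


def build_prometheus_datasource_request(
    grafana_url: str,
    grafana_user: str,
    grafana_password: str,
    name: str,
    prometheus_url: str,
    basic_auth_user: Optional[str] = None,
    basic_auth_password: Optional[str] = None,
) -> urllib.request.Request:
    """Prepare (but do not send) a Grafana request that adds a Prometheus data source."""
    body = {
        "name": name,
        "type": "prometheus",   # Grafana's built-in Prometheus data source type
        "url": prometheus_url,  # ASSUMPTION: a Prometheus-compatible query endpoint
        "access": "proxy",      # the Grafana server, not the browser, sends queries
    }
    if basic_auth_user is not None:
        # Credentials that Grafana uses when it queries prometheus_url.
        body["basicAuth"] = True
        body["basicAuthUser"] = basic_auth_user
        body["secureJsonData"] = {"basicAuthPassword": basic_auth_password or ""}
    # The Grafana HTTP API accepts Basic authentication with a Grafana user such as admin.
    token = base64.b64encode(f"{grafana_user}:{grafana_password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{grafana_url.rstrip('/')}/api/datasources",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )
```

Send the request with `urllib.request.urlopen(req)` from a host that can reach Grafana on port 3000, after you change the default admin password.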
