This topic describes how to use the reindex API to migrate data from a self-managed Elasticsearch cluster that runs on Elastic Compute Service (ECS) instances to an Alibaba Cloud Elasticsearch cluster. Related operations include index creation and data migration.

Background information

You can use the reindex API to migrate data only to single-zone Alibaba Cloud Elasticsearch clusters. If you want to migrate data to a multi-zone Alibaba Cloud Elasticsearch cluster, we recommend that you use one of the following methods:

If the self-managed Elasticsearch cluster stores large volumes of data, use snapshots stored in Object Storage Service (OSS). For more information, see Use OSS to migrate data from a self-managed Elasticsearch cluster to an Alibaba Cloud Elasticsearch cluster.

If you want to filter source data, use Logstash. For more information, see Use Alibaba Cloud Logstash to migrate data from a self-managed Elasticsearch cluster to an Alibaba Cloud Elasticsearch cluster.

Prerequisites

The following operations are performed:

Create a single-zone Alibaba Cloud Elasticsearch cluster.

For more information, see Create an Alibaba Cloud Elasticsearch cluster.

Prepare a self-managed Elasticsearch cluster and the data to be migrated.

We recommend that you use a self-managed Elasticsearch cluster deployed on Alibaba Cloud ECS instances. For more information about how to deploy a self-managed Elasticsearch cluster, see Installing and Running Elasticsearch. The self-managed Elasticsearch cluster must meet the following requirements:

The ECS instances that host the self-managed Elasticsearch cluster are deployed in the same virtual private cloud (VPC) as the Alibaba Cloud Elasticsearch cluster. You cannot use ECS instances that are connected to VPCs over ClassicLink connections.

The IP addresses of nodes in the Alibaba Cloud Elasticsearch cluster are added to the security groups of the ECS instances that host the self-managed Elasticsearch cluster. You can query the IP addresses of the nodes in the Kibana console of the Alibaba Cloud Elasticsearch cluster. In addition, port 9200 is enabled.

The self-managed Elasticsearch cluster can be connected to the Alibaba Cloud Elasticsearch cluster. You can test the connectivity by running the curl -XGET http://<host>:9200 command on the server where you run scripts.

Note: You can run all scripts provided in this topic on a server that can be connected to both the self-managed Elasticsearch cluster and the Alibaba Cloud Elasticsearch cluster over port 9200.
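
The connectivity requirement above can also be checked from Python. The following is a minimal sketch, not part of the original procedure: the function name can_connect and its timeout default are assumptions made for illustration. It only confirms that a TCP connection to port 9200 can be opened; it does not verify that Elasticsearch answers HTTP requests on that port.

```python
import socket


def can_connect(host: str, port: int = 9200, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    can_connect is a hypothetical helper; it checks reachability only,
    not whether Elasticsearch responds on the port.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

Run the check once against a node of each cluster from the server where you plan to run the migration scripts. If either call returns False, review the VPC and security group settings before you continue.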

Limits

The network architecture of Alibaba Cloud Elasticsearch was adjusted in October 2020. In the new network architecture, the cross-cluster reindex operation is limited. You need to use the PrivateLink service to establish private connections between VPCs before you perform the operation. The following table provides data migration solutions in different scenarios.

Alibaba Cloud Elasticsearch clusters created before October 2020 are deployed in the original network architecture. Alibaba Cloud Elasticsearch clusters created in October 2020 or later are deployed in the new network architecture.

| Scenario | Network architecture | Solution |
| --- | --- | --- |
| Migrate data between Alibaba Cloud Elasticsearch clusters | Both clusters are deployed in the original network architecture. | reindex API. For more information, see Use the reindex API to migrate data between Alibaba Cloud Elasticsearch clusters. |
| | One of the clusters is deployed in the original network architecture. Note: The other cluster can be deployed in the original or new network architecture. | |
| Migrate data from a self-managed Elasticsearch cluster that runs on ECS instances to an Alibaba Cloud Elasticsearch cluster | The Alibaba Cloud Elasticsearch cluster is deployed in the original network architecture. | reindex API. For more information, see Use the reindex API to migrate data from a self-managed Elasticsearch cluster to an Alibaba Cloud Elasticsearch cluster. |
| | The Alibaba Cloud Elasticsearch cluster is deployed in the new network architecture. | reindex API. For more information, see Migrate data from a self-managed Elasticsearch cluster to an Alibaba Cloud Elasticsearch cluster deployed in the new network architecture. |

Precautions

The network architecture of Alibaba Cloud Elasticsearch was adjusted in October 2020. Elasticsearch clusters created before October 2020 are deployed in the original network architecture. Elasticsearch clusters created in October 2020 or later are deployed in the new network architecture. You cannot perform cross-cluster operations, such as reindex, searches, or replication, between a cluster deployed in the original network architecture and a cluster deployed in the new network architecture. If you want to perform these operations between two clusters, you must make sure that the clusters are deployed in the same network architecture. The time when the network architecture in the China (Zhangjiakou) region and the regions outside China was adjusted is uncertain. If you want to perform the preceding operations between a cluster created before October 2020 and a cluster created in October 2020 or later in such a region, submit a ticket to contact Alibaba Cloud technical support to check whether the clusters can be connected.

Alibaba Cloud Elasticsearch clusters deployed in the new network architecture reside in the VPC within the service account of Alibaba Cloud Elasticsearch. These clusters cannot access resources in other network environments. Alibaba Cloud Elasticsearch clusters deployed in the original network architecture reside in VPCs that are created by users. These clusters can access resources in other network environments.

To ensure data consistency and normal data read, we recommend that you do not write data to the self-managed Elasticsearch cluster during the migration. After the migration, you can read data from and write data to the Alibaba Cloud Elasticsearch cluster. If you want to write data to the self-managed Elasticsearch cluster during the migration, we recommend that you configure loop execution for the reindex operation to shorten the time during which write operations are suspended. For more information, see the method used to migrate a large volume of data (without deletions and with update time) in Step 4: Migrate data.

If you connect to the self-managed Elasticsearch cluster or the Alibaba Cloud Elasticsearch cluster by using its domain name, do not include path information in the URL. For example, do not use a URL such as http://host:port/path.
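
The URL rule above can be checked before a script is run. The following is a minimal sketch that assumes the [scheme]://[host]:[port] format used by the scripts in this topic. The function name is_valid_remote_host is hypothetical, and the check is stricter than Elasticsearch itself may be, because it also rejects a trailing slash.

```python
from urllib.parse import urlsplit


def is_valid_remote_host(url: str) -> bool:
    """Check that url has the form scheme://host:port with no path.

    is_valid_remote_host is a hypothetical helper written for this topic.
    """
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        return False
    try:
        port = parts.port  # raises ValueError for a non-numeric port
    except ValueError:
        return False
    if not parts.hostname or port is None:
        return False
    # Reject any path, query string, or fragment after host:port.
    return parts.path == "" and not parts.query and not parts.fragment
```

For example, is_valid_remote_host("http://10.0.0.1:9200") returns True, whereas is_valid_remote_host("http://host:9200/path") returns False.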

Procedure

Step 1: (Optional) Obtain the domain name of an endpoint

Alibaba Cloud Elasticsearch clusters created in October 2020 or later are deployed in the new network architecture. These clusters reside in the VPC within the service account of Alibaba Cloud Elasticsearch. If your Alibaba Cloud Elasticsearch cluster is deployed in the new network architecture, you need to use the PrivateLink service to establish a private connection between that VPC and your own VPC. Then, obtain the domain name of the related endpoint for future use. For more information, see Configure a private connection for an Elasticsearch cluster.

Step 2: Create destination indexes

Create destination indexes on the Alibaba Cloud Elasticsearch cluster based on the index settings of the self-managed Elasticsearch cluster. You can also enable the Auto Indexing feature for the Alibaba Cloud Elasticsearch cluster. However, we recommend that you do not use this feature.
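
Deriving a destination index definition from the source index can be sketched as follows. This is a minimal Python 3 illustration, not the script provided in this topic. The function name build_create_index_body is hypothetical, and the sketch assumes that the two input dictionaries are the parsed responses of GET /<index>/_settings and GET /<index>/_mapping on the self-managed cluster. It keeps the number of primary shards and sets the number of replica shards to 0.

```python
import json


def build_create_index_body(index, settings_response, mapping_response, replicas=0):
    """Build the JSON body for PUT /<index> on the destination cluster.

    build_create_index_body is a hypothetical helper. The two response
    arguments are assumed to be keyed by index name, as returned by the
    _settings and _mapping APIs of the source cluster.
    """
    shards = settings_response[index]["settings"]["index"]["number_of_shards"]
    body = {
        "settings": {
            "number_of_shards": int(shards),  # same as the source index
            "number_of_replicas": replicas,   # 0 speeds up the migration
        },
        "mappings": mapping_response[index]["mappings"],
    }
    return json.dumps(body)
```

The returned string can be sent as the request body when the destination index is created. The number of replica shards can be increased after the migration is complete.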

The following sample code is a Python 2 script that is used to create multiple indexes on the Alibaba Cloud Elasticsearch cluster at a time. By default, no replica shards are configured for these indexes.

#!/usr/bin/python
# -*- coding: UTF-8 -*-
# File name: indiceCreate.py
import sys
import base64
import time
import httplib
import json

## Specify the host information of the self-managed Elasticsearch cluster.
oldClusterHost = "old-cluster.com"
## Specify the username of the self-managed Elasticsearch cluster. The field can be empty.
oldClusterUserName = "old-username"
## Specify the password of the self-managed Elasticsearch cluster. The field can be empty.
oldClusterPassword = "old-password"
## Specify the host information of the Alibaba Cloud Elasticsearch cluster. You can obtain the information from the Basic Information page of the Alibaba Cloud Elasticsearch cluster in the Alibaba Cloud Elasticsearch console.
newClusterHost = "new-cluster.com"
## Specify the username of the Alibaba Cloud Elasticsearch cluster.
newClusterUser = "elastic"
## Specify the password of the Alibaba Cloud Elasticsearch cluster.
newClusterPassword = "new-password"
DEFAULT_REPLICAS = 0

def httpRequest(method, host, endpoint, params="", username="", password=""):
    conn = httplib.HTTPConnection(host)
    headers = {}
    ## Add a Basic authentication header only if a username is specified.
    if (username != "") :
        base64string = base64.encodestring('{username}:{password}'.format(username = username, password = password)).replace('\n', '')
        headers["Authorization"] = "Basic %s" % base64string
    if "GET" == method:
        headers["Content-Type"] = "application/x-www-form-urlencoded"
        conn.request(method=method, url=endpoint, headers=headers)
    else :
        headers["Content-Type"] = "application/json"
        conn.request(method=method, url=endpoint, body=params, headers=headers)
    response = conn.getresponse()
    res = response.read()
    return res

def httpGet(host, endpoint, username="", password=""):
    return httpRequest("GET", host, endpoint, "", username, password)

def httpPost(host, endpoint, params, username="", password=""):
    return httpRequest("POST", host, endpoint, params, username, password)

def httpPut(host, endpoint, params, username="", password=""):
    return httpRequest("PUT", host, endpoint, params, username, password)

def getIndices(host, username="", password=""):
    endpoint = "/_cat/indices"
    ## Query the cluster that is passed in instead of the global variables.
    indicesResult = httpGet(host, endpoint, username, password)
    indicesList = indicesResult.split("\n")
    indexList = []
    for indices in indicesList:
        ## Keep only open indexes. The index name is in the third column.
        if (indices.find("open") > 0):
            indexList.append(indices.split()[2])
    return indexList

def getSettings(index, host, username="", password=""):
    endpoint = "/" + index + "/_settings"
    indexSettings = httpGet(host, endpoint, username, password)
    print index + " Original settings: \n" + indexSettings
    settingsDict = json.loads(indexSettings)
    ## By default, the number of primary shards is the same as that for the indexes on the self-managed Elasticsearch cluster.
    number_of_shards = settingsDict[index]["settings"]["index"]["number_of_shards"]
    ## The default number of replica shards is 0.
    number_of_replicas = DEFAULT_REPLICAS
    newSetting = "\"settings\": {\"number_of_shards\": %s, \"number_of_replicas\": %s}" % (number_of_shards, number_of_replicas)
    return newSetting

def getMapping(index, host, username="", password=""):
    endpoint = "/" + index + "/_mapping"
    indexMapping = httpGet(host, endpoint, username, password)
    print index + " Original mappings: \n" + indexMapping
    mappingDict = json.loads(indexMapping)
    mappings = json.dumps(mappingDict[index]["mappings"])
    newMapping = "\"mappings\" : " + mappings
    return newMapping

def createIndexStatement(oldIndexName):
    settingStr = getSettings(oldIndexName, oldClusterHost, oldClusterUserName, oldClusterPassword)
    mappingStr = getMapping(oldIndexName, oldClusterHost, oldClusterUserName, oldClusterPassword)
    createstatement = "{\n" + str(settingStr) + ",\n" + str(mappingStr) + "\n}"
    return createstatement

def createIndex(oldIndexName, newIndexName=""):
    if (newIndexName == "") :
        newIndexName = oldIndexName
    createstatement = createIndexStatement(oldIndexName)
    print "New index " + newIndexName + " Index settings and mappings: \n" + createstatement
    endpoint = "/" + newIndexName
    createResult = httpPut(newClusterHost, endpoint, createstatement, newClusterUser, newClusterPassword)
    print "New index " + newIndexName + " Creation result: " + createResult

## main
indexList = getIndices(oldClusterHost, oldClusterUserName, oldClusterPassword)
systemIndex = []
for index in indexList:
    if (index.startswith(".")):
        systemIndex.append(index)
    else :
        createIndex(index, index)
if (len(systemIndex) > 0) :
    for index in systemIndex:
        print index + " It may be a system index and will not be recreated. You can manually recreate the index based on your business requirements."

Step 3: Configure a remote reindex whitelist for the Alibaba Cloud Elasticsearch cluster

- Log on to the Alibaba Cloud Elasticsearch console.

- In the left-side navigation pane, click Elasticsearch Clusters.

- Navigate to the desired cluster.

  - In the top navigation bar, select the resource group to which the cluster belongs and the region where the cluster resides.

  - On the Elasticsearch Clusters page, find the cluster and click its ID.

- In the left-side navigation pane of the page that appears, choose .

- On the page that appears, click Modify Configuration on the right side of YML Configuration.

- In the Other Configurations field of the YML File Configuration panel, configure a remote reindex whitelist.

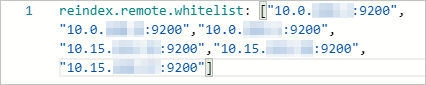

The following code provides a configuration example:

reindex.remote.whitelist: ["10.0.xx.xx:9200","10.0.xx.xx:9200","10.0.xx.xx:9200","10.15.xx.xx:9200","10.15.xx.xx:9200","10.15.xx.xx:9200"]

The reindex.remote.whitelist parameter is used to configure a remote reindex whitelist. When you configure the whitelist, you must add the IP addresses of the hosts in the self-managed Elasticsearch cluster to the whitelist. The configuration rules vary based on the network architecture in which the Alibaba Cloud Elasticsearch cluster is deployed.

If the Alibaba Cloud Elasticsearch cluster is deployed in the original network architecture, you must configure this parameter in the format of Host:Port number. Separate multiple entries with commas (,), such as otherhost:9200,another:9200,127.0.10.**:9200,localhost:**. Do not include a protocol such as http:// in an entry, because protocols are not recognized.

If the Alibaba Cloud Elasticsearch cluster is deployed in the new network architecture, you must configure this parameter in the format of Domain name of the related endpoint:Port number, such as ep-bp1hfkx7coy8lvu4****-cn-hangzhou-i.epsrv-bp1zczi0fgoc5qtv****.cn-hangzhou.privatelink.aliyuncs.com:9200. You can obtain the domain name of the related endpoint based on the instructions in Step 1: (Optional) Obtain the domain name of an endpoint. For more information, see Step 3: Configure a private connection for the Elasticsearch cluster.

Note: For more information about other parameters, see Configure the YML file.

- Select This operation will restart the cluster. Continue? and click OK. Then, the system restarts the Elasticsearch cluster. You can view the restart progress in the Tasks dialog box. After the cluster is restarted, the configuration is complete.
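
The whitelist line that is entered in the Other Configurations field can be generated from a list of node addresses. The following is a minimal sketch that follows the format of the configuration example in this step. The function name format_whitelist is hypothetical, and the sketch assumes that every host uses the same port and that each host is given without a port.

```python
import json


def format_whitelist(hosts, port=9200):
    """Return a reindex.remote.whitelist line for the given hosts.

    format_whitelist is a hypothetical helper. It assumes that hosts are
    given without a port and rejects entries that contain a protocol,
    because whitelist entries use the Host:Port number format.
    """
    entries = []
    for host in hosts:
        if "://" in host:
            raise ValueError("whitelist entries must not include a protocol: %r" % host)
        entries.append("%s:%d" % (host, port))
    # Compact separators produce ["a:9200","b:9200"] without spaces.
    return "reindex.remote.whitelist: " + json.dumps(entries, separators=(",", ":"))
```

The hosts can be the IP addresses of the self-managed cluster or, in the new network architecture, the domain name of the related endpoint.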

Step 4: Migrate data

This section describes how to migrate data to an Alibaba Cloud Elasticsearch cluster deployed in the original network architecture. You can use one of the following methods to migrate data. Select a method based on the volume of data that you want to migrate and your business requirements.

Migrate a small volume of data

Run the following script:

#!/bin/bash
# file:reindex.sh
indexName="The name of the index."
newClusterUser="The username of the Alibaba Cloud Elasticsearch cluster."
newClusterPass="The password of the Alibaba Cloud Elasticsearch cluster."
newClusterHost="The host information of the Alibaba Cloud Elasticsearch cluster."
oldClusterUser="The username of the self-managed Elasticsearch cluster."
oldClusterPass="The password of the self-managed Elasticsearch cluster."
# You must configure the host information of the self-managed Elasticsearch cluster in the format of [scheme]://[host]:[port]. Example: http://10.37.*.*:9200.
oldClusterHost="The host information of the self-managed Elasticsearch cluster."
curl -u ${newClusterUser}:${newClusterPass} -XPOST "http://${newClusterHost}/_reindex?pretty" -H "Content-Type: application/json" -d'{
    "source": {
        "remote": {
            "host": "'${oldClusterHost}'",
            "username": "'${oldClusterUser}'",
            "password": "'${oldClusterPass}'"
        },
        "index": "'${indexName}'",
        "query": {
            "match_all": {}
        }
    },
    "dest": {
        "index": "'${indexName}'"
    }
}'

Migrate a large volume of data (without deletions and with update time)

To migrate a large volume of data without deletions, you can perform a rolling update to shorten the time during which write operations are suspended. The rolling update requires that your data schema has a time-series attribute that indicates the update time. You can stop writing data to the self-managed Elasticsearch cluster after data is migrated. Then, use the reindex API to perform a rolling update to synchronize the data that is updated during the migration. After the rolling update is complete, you can read data from and write data to the Alibaba Cloud Elasticsearch cluster.
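
The time windows that a rolling update works through can be sketched in Python. This is a minimal illustration under stated assumptions, not the script provided in this topic. The function names are hypothetical, the timestamps are assumed to be in the same unit as the update time field, and credentials for the remote cluster are omitted for brevity.

```python
def build_incremental_reindex_body(remote_host, index, time_field, last_ts, cur_ts):
    """Build a _reindex body that copies documents updated in [last_ts, cur_ts).

    Hypothetical helper: remote_host is expected in the
    [scheme]://[host]:[port] format, and time_field is the update time field.
    """
    return {
        "source": {
            "remote": {"host": remote_host},
            "index": index,
            "query": {"range": {time_field: {"gte": last_ts, "lt": cur_ts}}},
        },
        "dest": {"index": index},
    }


def window_bounds(pass_times):
    """Yield (last, cur) pairs so that each pass starts where the previous one ended."""
    last = 0  # the first pass covers everything up to the first timestamp
    for cur in pass_times:
        yield last, cur
        last = cur
```

Because the lower bound is inclusive and the upper bound is exclusive, consecutive windows neither overlap nor leave gaps. Each pass therefore picks up only the documents that were updated since the previous pass started.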

#!/bin/bash
# file: circleReindex.sh
# CONTROLLING STARTUP:
# This is a script that uses the reindex API to remotely reindex data. Requirements:
# 1. Indexes are created on the Alibaba Cloud Elasticsearch cluster, or the Auto Indexing and dynamic mapping features are enabled for the cluster.
# 2. A remote reindex whitelist is configured for the Alibaba Cloud Elasticsearch cluster in the YML File Configuration panel of the Alibaba Cloud Elasticsearch console. For example, the following information is specified in the Other Configurations field: reindex.remote.whitelist: 172.16.**.**:9200.
# 3. The host is configured in the format of [scheme]://[host]:[port].
USAGE="Usage: bash circleReindex.sh <count>
       count: the number of reindex operations that you can perform. A negative number indicates loop execution.
Example:
        bash circleReindex.sh 1
        bash circleReindex.sh 5
        bash circleReindex.sh -1"

indexName="The name of the index."
newClusterUser="The username of the Alibaba Cloud Elasticsearch cluster."
newClusterPass="The password of the Alibaba Cloud Elasticsearch cluster."
oldClusterUser="The username of the self-managed Elasticsearch cluster."
oldClusterPass="The password of the self-managed Elasticsearch cluster."
## http://myescluster.com
newClusterHost="The host information of the Alibaba Cloud Elasticsearch cluster."
# You must configure the host information of the self-managed Elasticsearch cluster in the format of [scheme]://[host]:[port]. Example: http://10.37.*.*:9200.
oldClusterHost="The host information of the self-managed Elasticsearch cluster."
timeField="The update time of data."
reindexTimes=0
lastTimestamp=0
curTimestamp=`date +%s`
hasError=false

function reIndexOP() {
    reindexTimes=$((reindexTimes + 1))
    curTimestamp=`date +%s`
    ret=`curl -u ${newClusterUser}:${newClusterPass} -XPOST "${newClusterHost}/_reindex?pretty" -H "Content-Type: application/json" -d '{
        "source": {
            "remote": {
                "host": "'${oldClusterHost}'",
                "username": "'${oldClusterUser}'",
                "password": "'${oldClusterPass}'"
            },
            "index": "'${indexName}'",
            "query": {
                "range" : {
                    "'${timeField}'" : {
                        "gte" : '${lastTimestamp}',
                        "lt" : '${curTimestamp}'
                    }
                }
            }
        },
        "dest": {
            "index": "'${indexName}'"
        }
    }'`
    lastTimestamp=${curTimestamp}
    echo "${reindexTimes} reindex operations are performed. The last reindex operation is complete at ${lastTimestamp}. Result: ${ret}."
    if [[ ${ret} == *error* ]]; then
        hasError=true
        echo "An unknown error occurred when you perform this operation. All subsequent operations are suspended."
    fi
}

function start() {
    ## A negative number indicates loop execution.
    if [[ $1 -lt 0 ]]; then
        ## Stop the loop as soon as a reindex operation reports an error.
        while [[ ${hasError} == false ]]; do
            reIndexOP
        done
    elif [[ $1 -gt 0 ]]; then
        k=0
        while [[ k -lt $1 ]] && [[ ${hasError} == false ]]; do
            reIndexOP
            k=$((k + 1))
        done
    fi
}

## main
if [ $# -lt 1 ]; then
    echo "$USAGE"
    exit 1
fi
echo "Start the reindex operation for the ${indexName} index."
start $1
echo "${reindexTimes} reindex operations are performed."

Migrate a large volume of data (without deletions and without update time)

You can migrate a large volume of data if no update time is defined in the index mappings of the self-managed Elasticsearch cluster. However, you must add an update time field to the index mappings. After the field is added, you can migrate existing data. Then, perform a rolling update that is described in the second data migration method to migrate incremental data.

#!/bin/bash
# file:miss.sh
indexName="The name of the index."
newClusterUser="The username of the Alibaba Cloud Elasticsearch cluster."
newClusterPass="The password of the Alibaba Cloud Elasticsearch cluster."
newClusterHost="The host information of the Alibaba Cloud Elasticsearch cluster."
oldClusterUser="The username of the self-managed Elasticsearch cluster."
oldClusterPass="The password of the self-managed Elasticsearch cluster."
# You must configure the host information of the self-managed Elasticsearch cluster in the format of [scheme]://[host]:[port]. Example: http://10.37.*.*:9200.
oldClusterHost="The host information of the self-managed Elasticsearch cluster."
timeField="updatetime"
curl -u ${newClusterUser}:${newClusterPass} -XPOST "http://${newClusterHost}/_reindex?pretty" -H "Content-Type: application/json" -d '{
    "source": {
        "remote": {
            "host": "'${oldClusterHost}'",
            "username": "'${oldClusterUser}'",
            "password": "'${oldClusterPass}'"
        },
        "index": "'${indexName}'",
        "query": {
            "bool": {
                "must_not": {
                    "exists": {
                        "field": "'${timeField}'"
                    }
                }
            }
        }
    },
    "dest": {
        "index": "'${indexName}'"
    }
}'

FAQ

Problem: When I run a cURL command, the system displays {"error":"Content-Type header [application/x-www-form-urlencoded] is not supported","status":406}. What do I do?

Solution: Add -H "Content-Type: application/json" to the cURL command and try again.

# Obtain all the indexes on the self-managed Elasticsearch cluster. If authentication is not enabled for the cluster, remove the "-u user:pass" parameter. Replace oldClusterHost with the host information of the self-managed Elasticsearch cluster.
curl -u user:pass -XGET 'http://oldClusterHost/_cat/indices' | awk '{print $3}'
# Obtain the settings and mappings of the index that you want to migrate for the specified user based on the returned indexes. Replace indexName with the index name that you want to query.
curl -u user:pass -XGET 'http://oldClusterHost/indexName/_settings,_mapping?pretty=true'
# Create an index on the Alibaba Cloud Elasticsearch cluster based on the settings and mappings that you obtained. You can set the number of replica shards to 0 to accelerate data migration, and change the number to 1 after data is migrated.
# Replace newClusterHost with the host information of the Alibaba Cloud Elasticsearch cluster, testindex with the name of the index that you want to create, and testtype with the type of the index.
# number_of_shards: the number of primary shards for the index on the self-managed Elasticsearch cluster, such as 5.
# number_of_replicas: set the number of replica shards to 0.
# mappings: the mappings of the index on the self-managed Elasticsearch cluster. The following mappings are an example.
curl -u user:pass -XPUT 'http://<newClusterHost>/<testindex>' -H "Content-Type: application/json" -d '{
    "settings" : {
        "number_of_shards" : "5",
        "number_of_replicas" : "0"
    },
    "mappings" : {
        "testtype" : {
            "properties" : {
                "uid" : {
                    "type" : "long"
                },
                "name" : {
                    "type" : "text"
                },
                "create_time" : {
                    "type" : "long"
                }
            }
        }
    }
}'

Problem: What do I do if the source index stores large volumes of data and the data migration is slow?

Solution:

If you use the reindex API to migrate data, data is migrated in scroll mode. To improve the efficiency of data migration, you can increase the scroll size or configure a sliced scroll. The sliced scroll can parallelize the reindex process. For more information, see the reindex API.

If the self-managed Elasticsearch cluster stores large volumes of data, we recommend that you use snapshots stored in OSS to migrate data. For more information, see Use OSS to migrate data from a self-managed Elasticsearch cluster to an Alibaba Cloud Elasticsearch cluster.

If the source index stores large volumes of data, you can set the number of replica shards to 0 and the refresh interval to -1 for the destination index before you migrate data to accelerate data migration. After data is migrated, restore the settings to the original values.

# You can set the number of replica shards to 0 and disable the refresh feature to accelerate the data migration.
curl -u user:password -XPUT 'http://<host:port>/indexName/_settings' -H "Content-Type: application/json" -d '{
    "number_of_replicas" : 0,
    "refresh_interval" : "-1"
}'
# After data is migrated, set the number of replica shards to 1 and the refresh interval to 1s, which is the default value.
curl -u user:password -XPUT 'http://<host:port>/indexName/_settings' -H "Content-Type: application/json" -d '{
    "number_of_replicas" : 1,
    "refresh_interval" : "1s"
}'
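
The tuning suggestions above can be expressed as request bodies. The following is a minimal Python sketch with hypothetical function names. It assumes that the scroll batch size is set through the size field in the source section of the reindex request. The Elasticsearch reindex documentation also states that reindexing from a remote cluster does not support slicing, so the sketch adjusts only the batch size.

```python
import copy


def bulk_load_settings():
    """Settings to apply to the destination index before the migration."""
    return {"number_of_replicas": 0, "refresh_interval": "-1"}


def restore_settings(replicas=1, refresh_interval="1s"):
    """Settings to apply after the migration. The defaults mirror the example above."""
    return {"number_of_replicas": replicas, "refresh_interval": refresh_interval}


def with_batch_size(reindex_body, batch_size):
    """Return a copy of reindex_body with source.size set to batch_size.

    Hypothetical helper: the input dictionary is left unchanged.
    """
    body = copy.deepcopy(reindex_body)
    body["source"]["size"] = batch_size
    return body
```

If the original values of the destination index differ from one replica shard and a 1s refresh interval, pass those values to restore_settings instead of relying on the defaults.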