This tutorial uses two data sources as an example: the ods_user_info_d table in MySQL, which contains basic user information, and the user_log.txt file in OSS, which contains website access log data. You will use a Data Integration batch sync task to synchronize data from these sources to the ods_user_info_d and ods_raw_log_d tables in MaxCompute. This tutorial describes how to use DataWorks Data Integration to synchronize data between heterogeneous data sources for a data warehouse.

Prerequisites

Ensure that you have prepared the required operating environment. For more information, see Prepare the environment.

1. Create data sources

To ensure that data can be processed in subsequent steps, you must add the following data sources to your DataWorks workspace to retrieve the raw data.

MySQL data source: This tutorial uses a data source named

user_behavior_analysis_mysqlto retrieve basic user information (ods_user_info_d) from MySQL.HttpFile data source: In this tutorial, the data source is named

user_behavior_analysis_httpfileand is used to retrieve user website access logs (user_log.txt) stored in OSS.

If you have already created MySQL and HttpFile data sources for other user persona analysis tutorials, you can skip this step.

Create a MySQL data source (user_behavior_analysis_mysql)

The basic user information for this tutorial is stored in a MySQL database. You must create a MySQL data source to synchronize the user information (ods_user_info_d) from the database to MaxCompute.

Go to the Data Source page.

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to Management Center.

In the navigation pane on the left, click Data Sources to go to the Data Sources page.

Click Add Data Source. Search for and select MySQL as the data source type.

On the Add MySQL Data Source page, configure the parameters. For this tutorial, use the same example values for both the development and production environments.

The following table describes the key parameters. You can keep the default values for the other parameters.

Parameter

Description

Data Source Name

Enter a name for the data source. For this tutorial, enter

user_behavior_analysis_mysql.Data Source Description

This data source is for DataWorks tutorials. Read data from this data source when you configure an batch sync task to access the test data provided by the platform. This data source can only be read in Data Integration scenarios. Other modules cannot use it.

Configuration Mode

Select Connection String Mode.

Connection Address

Host IP address:

rm-bp1z69dodhh85z9qa.mysql.rds.aliyuncs.comPort:

3306

Database Name

Enter the database name. For this tutorial, enter

workshop.Username

Enter the username. For this tutorial, enter

workshop.Password

Enter the password. For this tutorial, enter

workshop#2017.Authentication Method

No authentication.

In the Connection Configuration section, click Test Network Connectivity for both the production and development environments. Ensure that the connectivity status is Connected.

ImportantEnsure that the resource group is attached to the workspace and has public network access enabled. Otherwise, data synchronization fails. For more information, see Prepare the environment.

If you do not have a resource group available, follow the prompts in the connection configuration section. Click Purchase and Associated Purchased Resource Group.

Click Complete Creation.

Create an HttpFile data source (user_behavior_analysis_httpfile)

The user website access logs for this tutorial are stored in OSS. You must create an HttpFile data source to synchronize the user website access log (user_log.txt) from OSS to MaxCompute.

Click Data Sources in the navigation pane on the left.

Click Add Data Source. In the Add Data Source dialog box, search for and select HttpFile as the data source type.

On the Add HttpFile Data Source page, configure the parameters. For this tutorial, use the same example values for both the development and production environments.

The following table describes the key parameters. You can keep the default values for the other parameters.

Parameter

Description

Data Source Name

Enter the data source name. For this tutorial, enter

user_behavior_analysis_httpfile.Data Source Description

This data source is for DataWorks tutorials. Read data from this data source when you configure an batch sync task to access the test data provided by the platform. This data source can only be read in Data Integration scenarios. Other modules cannot use it.

URL

Set URL to

https://dataworks-workshop-2024.oss-cn-shanghai.aliyuncs.comfor both the development and production environments.In the Connection Configuration section, click Test Network Connectivity for both the production and development environments. Ensure that the connectivity status is Connected.

ImportantEnsure that the resource group is attached to the workspace and has public network access enabled. Otherwise, data synchronization fails. For more information, see Prepare the environment.

If you do not have a resource group available, follow the prompts in the connection configuration section. Click Purchase and Associated Purchased Resource Group.

Click Complete Creation.

2. Build a sync pipeline

In this step, you can build a sync pipeline to synchronize the user information and website access logs to their respective MaxCompute tables. This prepares the data for further processing.

Click the

icon in the upper-left corner and select . Then, at the top of the page, switch to the workspace that is created for this tutorial.

icon in the upper-left corner and select . Then, at the top of the page, switch to the workspace that is created for this tutorial.In the navigation pane on the left, click

to go to the Data Development page. Then, in the Workspace Directories area, click

to go to the Data Development page. Then, in the Workspace Directories area, click  , select Create Workflow, and enter a workflow name. For this tutorial, the name is set to

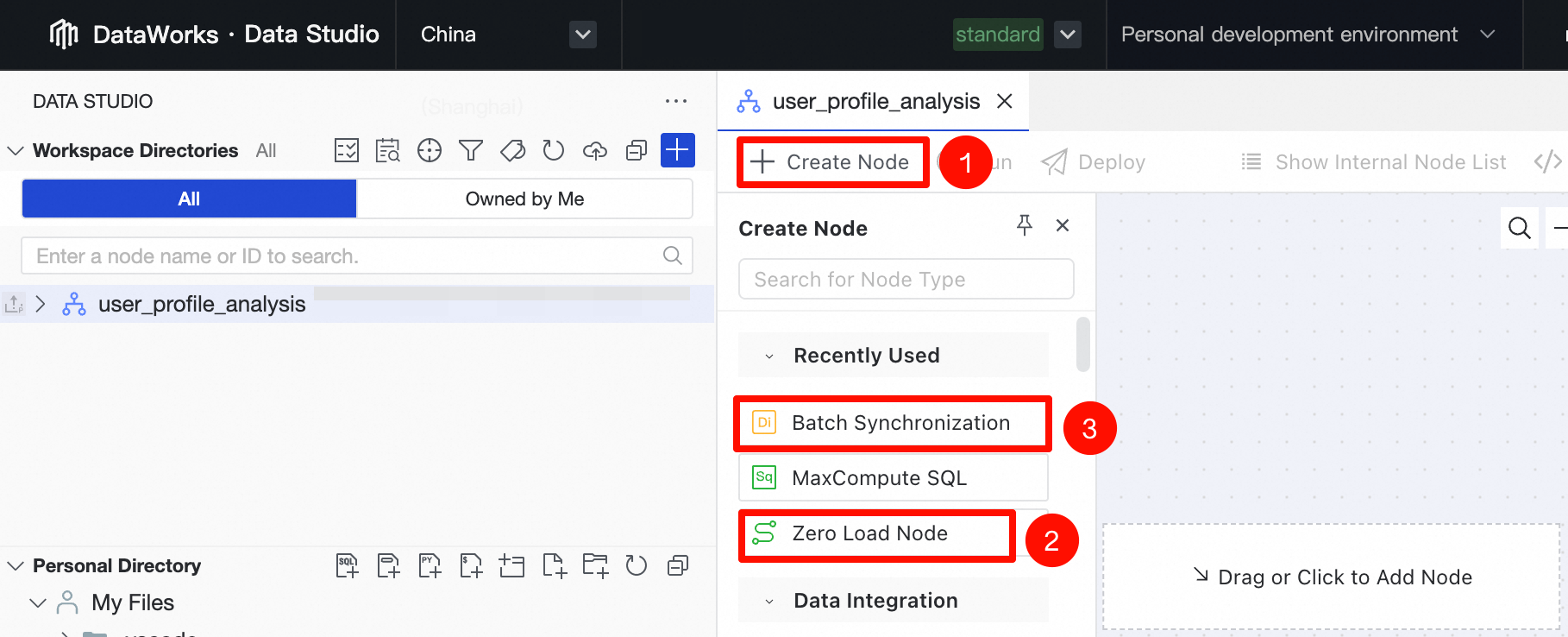

, select Create Workflow, and enter a workflow name. For this tutorial, the name is set to user_profile_analysis.On the workflow orchestration page, click Create Node, drag a Zero Load Node and two Batch Synchronization nodes from the pane on the left to the canvas, and name the nodes.

The following table shows the example node names and their functions for this tutorial.

Node Type

Node Name

Node Function

Zero Load Node

Zero Load Nodeworkshop_startManages the entire user persona analysis workflow and clarifies the data forwarding path. This node is a Dry-run node and requires no code editing.

Batch Synchronization

Batch Synchronizationods_user_info_dSynchronizes basic user information from MySQL to the

ods_user_info_dtable in MaxCompute. Batch Synchronization

Batch Synchronizationods_raw_log_dUsed to synchronize user website access records from OSS to the

ods_raw_log_dtable in MaxCompute.Drag a connection to set the

workshop_startnode as the ancestor node of the two batch sync nodes, as shown in the following figure.Configure the workflow scheduling properties.

On the workflow canvas, click Scheduling in the right-side pane and configure the parameters. The following table describes the key parameters. You can keep the default values for the other parameters.

Scheduling Parameter

Description

Scheduling Parameters

You can configure scheduling parameters for the entire workflow. The inner nodes of the workflow can directly use the configured scheduling parameters. In this tutorial, the parameter is set to

bizdate=$[yyyymmdd-1]to obtain the date of the previous day.Scheduling Cycle

Set to

Dayfor this tutorial.Scheduling Time

In this tutorial, Scheduling Time is set to

00:30, which means the workflow will start daily at00:30.Scheduling Dependencies

The workflow has no upstream dependencies, so you do not need to configure any. For easier management, click Use Workspace Root Node to attach the workflow to the root node of the workspace.

The naming format for the workspace root node is

workspace_name_root.

3. Configure sync tasks

Configure the initial node

On the workflow orchestration page, hover over the

workshop_startnode and click Open Node.In the right-side pane of the

workshop_startnode configuration page, click Scheduling and configure the required parameters. The following table describes the key parameters. You can keep the default values for the other parameters.Scheduling Parameter

Description

Scheduling Type

This tutorial uses

dry-run scheduling.Resource Group

For this tutorial, select the Serverless resource group created in the Prepare the environment step.

Scheduling Dependencies

Because

workshop_startis the initial node and has no upstream dependencies, you can click Use Workspace Root Node to trigger the workflow execution from the workspace root node.The workspace root node is named

workspace_name_root.

Configure the user data sync pipeline (ods_user_info_d)

On the workflow orchestration page, hover over the

ods_user_info_dnode and click Open Node.Configure the network and resources for the sync pipeline.

Parameter

Description

Source

Data source:

MySQL.Data source name:

user_behavior_analysis_mysql.

Resource Group

Select the Serverless resource group that you purchased in the Prepare the environment step.

Destination

Data Destination:

MaxCompute(ODPS).Data Source Name: Select the MaxCompute computing resource that you attached in the Prepare the environment step. For example,

MaxCompute_Source.

Click Next to configure the sync task.

Configure Source and Destination

The following table describes the key parameters. You can keep the default values for the other parameters.

Module

Configuration Item

Description

Data source

Table

Select

ods_user_info_d.Split key

The shard key is set to the

uidfield. The `uid` field is the primary key of theods_user_info_dtable in MySQL.Destination

Tunnel Resource Group

This tutorial uses the Common transmission resources by default. If you have an exclusive Tunnel Quota, you can select it from the drop-down list. For more information about exclusive Tunnel Quotas, see Purchase and use an exclusive resource group for Data Transmission Service.

Table

Click Generate Destination Table Schema to quickly create a MaxCompute table. Paste the following DDL statement into the Statement for Creating Table section and click Create Table. This table receives the user data from the source.

CREATE TABLE IF NOT EXISTS ods_user_info_d ( uid STRING COMMENT 'User ID', gender STRING COMMENT 'Gender', age_range STRING COMMENT 'Age range', zodiac STRING COMMENT 'Zodiac sign' ) PARTITIONED BY ( dt STRING ) LIFECYCLE 7;Partition information

For this tutorial, enter

${bizdate}. This lets you assign a constant value to thebizdateparameter during the testing phase, and dynamically assign a value to thebizdateparameter during scheduled execution. For information about the variable formats and configuration methods supported by DataStudio, see Scheduling Parameters.Confirm the Field Mapping and Channel Control.

DataWorks synchronizes data from the specified source fields to the specified destination fields based on the configured field mapping. You can also set the concurrency and configure policies for dirty data. For this tutorial, set Policy for Dirty Data Records to Disallow Dirty Data Records. You can keep the default values for the other settings. For more information, see Configure a sync task using the codeless UI.

Configure debug parameters.

In the right-side pane of the batch sync task configuration page, click Run Configuration. Configure the following parameters to test the run in Step 4: Sync data.

Configuration Item

Description

Resource Group

Select the Serverless resource group that you purchased in the Prepare the environment step.

Script Parameters

You do not need to configure this parameter. In this tutorial, the sample code uses

${bizdate}to represent the data timestamp. When you debug the workflow in Step 4, set the Value Used in This Run to a specific constant, such as20250223. The job run will then use this constant to replace the variable defined in the job.(Optional) Configure scheduling properties.

For this tutorial, you can keep the default values for the scheduling parameters. In the right-side pane of the batch sync task configuration page, click Scheduling. For more information about the parameters, see Node scheduling configuration.

Scheduling Parameters: These parameters are already configured for the workflow. Inner nodes do not require separate configuration and can use the parameters directly in tasks or code.

Scheduling Policies: You can use the Time for Delayed Execution parameter to specify the duration that a child node waits to run after the workflow is executed. This parameter is not set in this tutorial.

In the node toolbar, click Save.

Configure the user log sync pipeline (ods_raw_log_d)

On the workflow orchestration page, hover over the

ods_raw_log_dnode and click Open Node.Configure the network and resources for the sync pipeline.

Parameter

Description

Source

Data source:

HttpFile.Data source name:

user_behavior_analysis_HttpFile.

Resource Group

Select the Serverless resource group that you purchased in the Prepare the environment step.

Destination

Data Destination:

MaxCompute(ODPS).Data Source Name: Select the MaxCompute computing resource that you attached in the Prepare environment stage. In this example, the name is

MaxCompute_Source.

Click Next to configure the sync task.

Configure Source and Destination

The following table describes the key parameters. You can keep the default values for the other parameters.

Module

Configuration Item

Description

Data source

File path

For this tutorial, enter

/user_log.txt.Text type

This tutorial uses the

texttype.Column Delimiter

For this tutorial, enter

|.Advanced configuration > Skip Header

For this tutorial, select

Noto not skip the table header.ImportantAfter you configure the data source, click Confirm Data Structure to check if the log file can be read correctly.

Destination

Tunnel Resource Group

This tutorial uses the Common transmission resources by default. If you have an exclusive Tunnel Quota, you can select it from the drop-down list. For more information about exclusive Tunnel Quotas, see Purchase and use an exclusive resource group for Data Transmission Service.

Table

Click Generate Destination Table Schema to quickly create a MaxCompute table. Paste the following DDL statement into the Statement for Creating Table section and click Create Table.

CREATE TABLE IF NOT EXISTS ods_raw_log_d ( col STRING ) PARTITIONED BY ( dt STRING ) LIFECYCLE 7;Partition information

For this tutorial, enter

${bizdate}. This lets you assign a constant value to thebizdateparameter during the testing phase, and dynamically assign a value to thebizdateparameter during scheduled execution. For information about the variable formats and configuration methods supported by DataStudio, see Scheduling Parameters.Confirm the Field Mapping and Channel Control.

DataWorks synchronizes data from the specified source fields to the specified destination fields based on the configured field mapping. You can also set the concurrency and configure policies for dirty data. For this tutorial, set Policy for Dirty Data Records to Disallow Dirty Data Records. You can keep the default values for the other settings. For more information, see Configure a sync task using the codeless UI.

Configure debug parameters.

In the right-side pane of the batch sync task configuration page, click Run Configuration. Configure the following parameters to test the run in Step 4: Sync data.

Configuration Item

Description

Resource Group

Select the Serverless resource group that you purchased in the Prepare the environment step.

Script Parameters

You do not need to configure this parameter. In this tutorial, the sample code uses

${bizdate}to represent the data timestamp. When you debug the workflow in Step 4, set the Value Used in This Run to a specific constant, such as20250223. The job run will then use this constant to replace the variable defined in the job.(Optional) Configure scheduling properties.

For this tutorial, you can keep the default values for the scheduling parameters. In the right-side pane of the batch sync task configuration page, click Scheduling. For more information about the parameters, see Node scheduling configuration.

Scheduling Parameters: These parameters are already configured for the workflow. Inner nodes do not require separate configuration and can use the parameters directly in tasks or code.

Scheduling Policies: You can use the Time for Delayed Execution parameter to specify the duration that a child node waits to run after the workflow is executed. This parameter is not set in this tutorial.

In the node toolbar, click Save.

Step 4: Sync data

Sync the data.

In the workflow toolbar, click Run. Set the values for the parameter variables that are defined in each node for this run. This tutorial uses

20250223, but you can modify the value as needed. Then, click OK and wait for the run to complete.Query the data sync results.

In the navigation pane on the left of DataStudio, click

to go to the Data Development page. Then, in the personal folder area, click

to go to the Data Development page. Then, in the personal folder area, click  to create a file with the

to create a file with the .sqlextension. You can specify a custom file name.At the bottom of the page, confirm that the language mode is

MaxCompute SQL.

In the node editing window, enter the following SQL statement to view the number of records that are imported into

ods_raw_log_dandods_user_info_d. This confirms whether the synchronized data is written to the destination tables.-- You need to change the partition filter condition to the actual data timestamp for your current operation. In this tutorial, the debug parameter bizdate (data timestamp) was set to 20250223. SELECT count(*) FROM ods_user_info_d WHERE dt='your_data_timestamp'; SELECT count(*) FROM ods_raw_log_d WHERE dt='your_data_timestamp';If the queries return data, the data synchronization is complete.

If no data is returned, ensure that the Value Used in This Run that is configured for the workflow run matches the data timestamp specified by

dtin the query. You can click the workflow, click Running History in the right-side pane, and then click View in the Actions column for a run record to confirm the data timestamp value (partition=[pt=xxx]) in the workflow run log.

Next steps

After you configure data synchronization, you can proceed to the next tutorial to learn how to process and analyze the synchronized data. For more information, see Process data.