DataWorks Check nodes verify the availability of target objects (such as MaxCompute partitioned tables, FTP/OSS/HDFS files, or real-time synchronization tasks). The node runs successfully when the specified check strategy is met. Use a Check node as an upstream dependency for tasks that require these objects. When the Check node passes, it triggers the downstream task. This topic describes the objects supported by Check nodes, specific check strategies, and how to configure a Check node.

Supported objects and check strategies

Check nodes verify data sources and real-time synchronization tasks. The supported check strategies are as follows:

Data source

MaxCompute partitioned table, DLF (Paimon partitioned table)

Note Check nodes support MaxCompute partitioned tables only.

Check nodes provide the following two strategies to determine whether MaxCompute partitioned table data is available:

Strategy 1: Check whether the target partition exists

If the target partition exists, the data is considered available.

Strategy 2: Check whether the target partition is updated within a specified period

If the target partition is not updated within the specified period, data generation is considered complete.
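The two strategies can be read as two predicates over a partition's last-modified time. The following is a minimal sketch under stated assumptions, not the DataWorks implementation: the function names, the `quiet_period` parameter, and the use of `None` to represent a missing partition are all hypothetical, and the sketch assumes that Strategy 2 also requires the partition to exist.

```python
from datetime import datetime, timedelta
from typing import Optional


def partition_exists(last_modified: Optional[datetime]) -> bool:
    """Strategy 1: the data is considered available once the partition exists.

    A missing partition is modeled as last_modified=None (an assumption).
    """
    return last_modified is not None


def partition_is_stable(
    last_modified: Optional[datetime],
    now: datetime,
    quiet_period: timedelta,
) -> bool:
    """Strategy 2: data generation is considered complete when the partition
    has not been updated within quiet_period.

    Treating a missing partition as "not complete" and using an inclusive
    boundary (>=) are assumptions of this sketch.
    """
    if last_modified is None:
        return False
    return now - last_modified >= quiet_period
```

Strategy 1 suits tables whose partitions are written in one step. Strategy 2 suits partitions that are created early and then appended to, where existence alone does not mean the data is complete.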

FTP, OSS, HDFS, or OSS-HDFS file

If the Check node detects that the target FTP, OSS, HDFS, or OSS-HDFS file exists, the system considers the file available.

Real-time synchronization task

The system uses the Check node's scheduled start time as the checkpoint. If the real-time synchronization task has finished writing data up to this time, the check passes.

You must also specify the check interval (the time between consecutive checks) and the stop check condition (maximum number of retries or a deadline). If the task reaches the limit or deadline without passing the check, the Check node fails. For more information about strategy configuration, see Step 2: Configure check strategies.

Note Check nodes perform periodic checks on target objects. Configure the schedule time based on the expected start time. Once the scheduling conditions are met, the Check node runs until the check passes or fails due to a timeout. For more information about scheduling configuration, see Step 3: Configure task scheduling.

The Check node occupies scheduling resources while running until the check is complete.
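The periodic check described above can be pictured as a polling loop with a check interval and one stop condition. The following is an illustrative sketch only: `run_check` and all of its parameters are hypothetical names, the limit is interpreted as a total number of checks, and the loop is written so that no check is attempted after the deadline. It is not how DataWorks implements the node.

```python
import time
from datetime import datetime, timedelta
from typing import Callable, Optional


def run_check(
    check: Callable[[], bool],
    interval_minutes: int,
    max_checks: Optional[int] = None,
    deadline: Optional[datetime] = None,
    sleep: Callable[[float], None] = time.sleep,
    now: Callable[[], datetime] = datetime.now,
) -> bool:
    """Poll check() until it passes or the stop condition is reached.

    Returns True when the check passes, False when the limit or the
    deadline is reached first.
    """
    if not 1 <= interval_minutes <= 30:
        raise ValueError("check interval must be between 1 and 30 minutes")
    if (max_checks is None) == (deadline is None):
        raise ValueError("set exactly one of max_checks or deadline")
    if max_checks is not None and max_checks < 1:
        raise ValueError("max_checks must be at least 1")

    wait = timedelta(minutes=interval_minutes)
    attempts = 0
    while True:
        attempts += 1
        if check():
            return True
        if max_checks is not None and attempts >= max_checks:
            return False
        # Stop if the next check would fall after the deadline. A node that
        # starts after its deadline therefore runs exactly one check.
        if deadline is not None and now() + wait > deadline:
            return False
        sleep(wait.total_seconds())
```

The `sleep` and `now` parameters exist only so that the loop can be exercised without waiting. The loop also makes visible why the node holds scheduling resources for its whole run: it stays active between checks.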

Limitations

Resource groups: Check nodes require serverless resource groups. To purchase and use a serverless resource group, see Use serverless resource groups.

Data source limits: FTP data sources using the SFTP protocol with Key authentication are not supported.

Node function limits

Each Check node validates a single object. If a task depends on multiple objects (for example, multiple MaxCompute partitioned tables), create a separate Check node for each dependency.

The check interval ranges from 1 to 30 minutes.

DataWorks version limits: Check nodes are available only in DataWorks Professional Edition or higher. If you are using a lower edition, see Version upgrade instructions to upgrade.

Supported regions: China (Hangzhou), China (Shanghai), China (Beijing), China (Shenzhen), China (Chengdu), China (Hong Kong), Japan (Tokyo), Singapore, Malaysia (Kuala Lumpur), Indonesia (Jakarta), Germany (Frankfurt), UK (London), US (Silicon Valley), and US (Virginia).

Prerequisites

To verify a data source, you must first create the data source based on the object type. Details are as follows:

Object type | Preparation | References |

MaxCompute partitioned table | A MaxCompute compute resource is created and bound to DataStudio. Binding a MaxCompute compute resource automatically creates the data source. A MaxCompute partitioned table is created. | |

FTP file | An FTP data source is created. In DataWorks, you must register the FTP service as a DataWorks FTP data source to access the data. | Create an FTP data source |

OSS file | An OSS data source is created with AccessKey mode. In DataWorks, you must register the OSS bucket as a DataWorks OSS data source to access the data. Note: Currently, Check nodes support only AccessKey mode for OSS data sources. OSS data sources configured with RAM roles are not supported. | |

HDFS file | An HDFS data source is created. In DataWorks, you must register the HDFS file system as a DataWorks HDFS data source to access the data. | Create an HDFS data source |

OSS-HDFS file | An OSS-HDFS data source is created. In DataWorks, you must register the OSS-HDFS service as a DataWorks OSS-HDFS data source to access the data. | OSS-HDFS data source |

When using a Check node to verify a real-time synchronization task, only Kafka-to-MaxCompute tasks are supported. Create the real-time synchronization task before using the Check node. For more information, see Configure a real-time synchronization task.

Step 1: Create a Check node

Go to the DataStudio page.

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to Data Development.

Click the  icon, and select .


Follow the on-screen instructions to enter the path, name, and other information.

Step 2: Configure check strategies

Select a data source or real-time synchronization task and configure the corresponding strategy based on your business requirements.

Data source

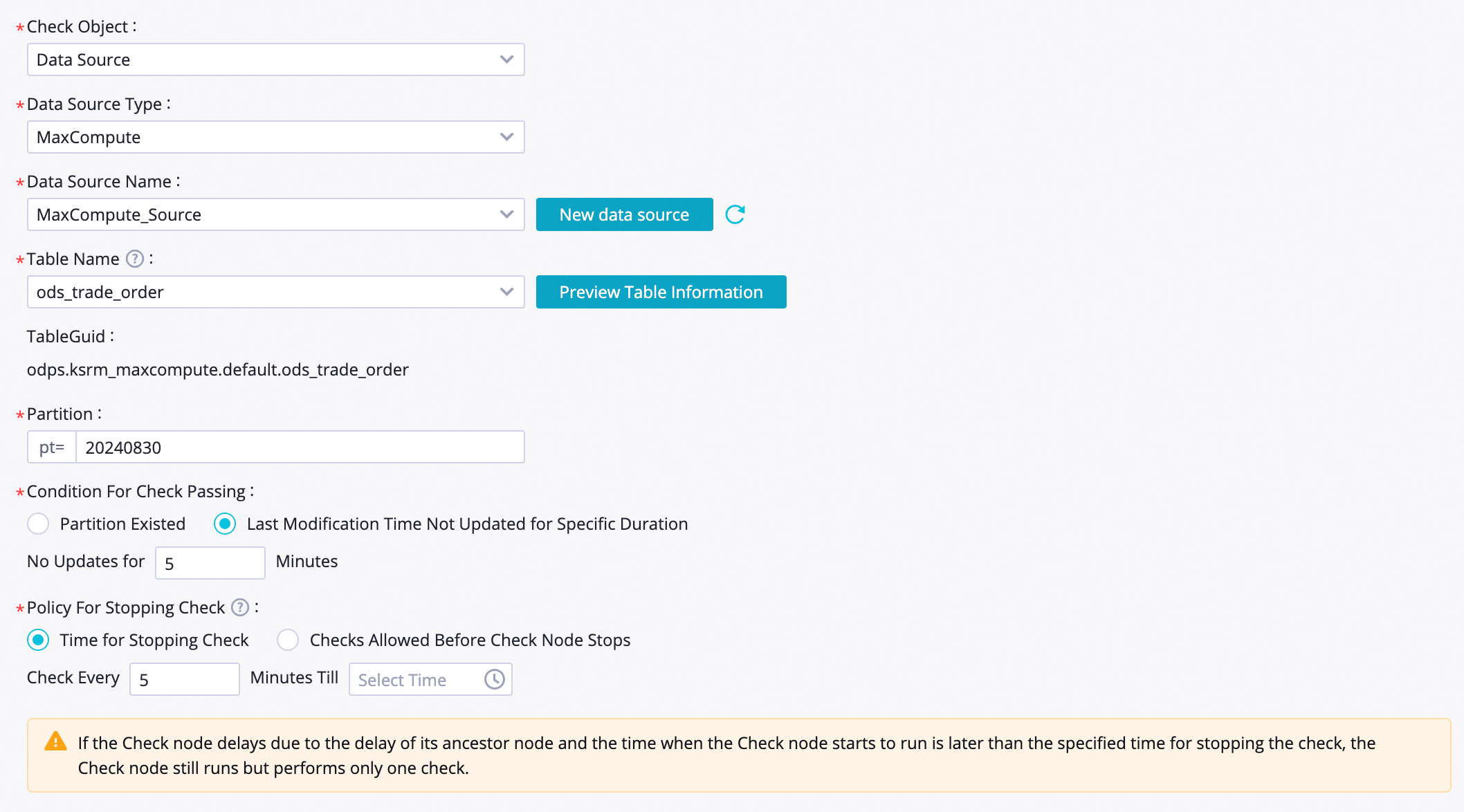

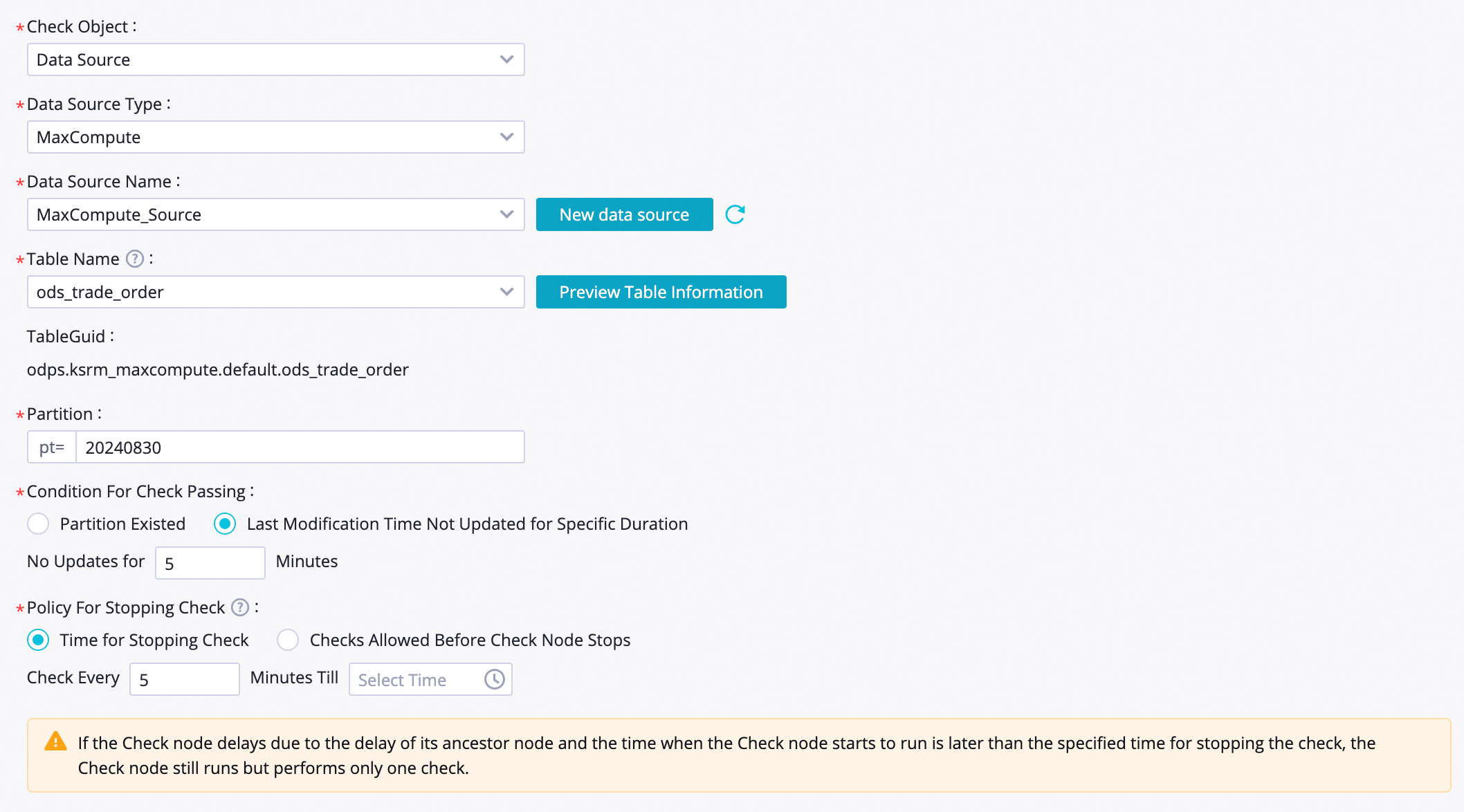

MaxCompute partitioned table

The following table describes the parameters.

Parameter | Description |

Data Source Type | Select MaxCompute. |

Data Source Name | The data source where the MaxCompute partitioned table resides. If no data source is available, click New data source. For more information about how to create a MaxCompute data source, see Associate a MaxCompute compute resource. |

Table Name | The MaxCompute partitioned table to check.

Note You can select only MaxCompute partitioned tables under the selected data source. |

Partition | The partition to check. After you configure Table Name, you can preview the table information to view its partition names. You can also use scheduling parameters. For more information about scheduling parameters, see Supported formats for scheduling parameters. |

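Condition For Check Passing | Define the check method and pass condition. Two methods are available: check whether the target partition exists, or check whether the target partition is updated within a specified period. |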

Policy For Stopping Check | Configure the stop strategy for the Check node. You can set a deadline or a maximum number of retries, and configure the check frequency: Set deadline: Specify a cutoff time. The node performs checks at the specified interval until the deadline is reached. If the check does not pass by the deadline, the task fails.

Note The check interval ranges from 1 to 30 minutes. If the upstream task is delayed and the Check node starts after the deadline, the Check node runs once.

Set limit on check times: Define a maximum number of retries. The node performs checks at the specified interval until the limit is reached. If the check does not pass after the maximum retries, the task fails.

Note The check interval ranges from 1 to 30 minutes. The maximum run time of a Check node task is 24 hours (1440 minutes). The maximum number of retries depends on the check interval. For example, a 5-minute interval allows up to 288 retries, while a 10-minute interval allows up to 144 retries. Refer to the actual interface for details.

|
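The retry limits quoted in the note follow from dividing the 24-hour maximum run time by the check interval. The following is a small worked sketch of that arithmetic. The function name is hypothetical, and rounding down for intervals that do not divide 1440 evenly is an assumption, so treat the value shown in the interface as authoritative.

```python
MAX_RUN_MINUTES = 24 * 60  # maximum run time of a Check node task: 1440 minutes


def max_retries(interval_minutes: int) -> int:
    """Return the largest retry count that fits in the maximum run time."""
    if not 1 <= interval_minutes <= 30:
        raise ValueError("check interval must be between 1 and 30 minutes")
    # Floor division is an assumption for intervals that do not divide 1440.
    return MAX_RUN_MINUTES // interval_minutes


print(max_retries(5))   # 288
print(max_retries(10))  # 144
```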

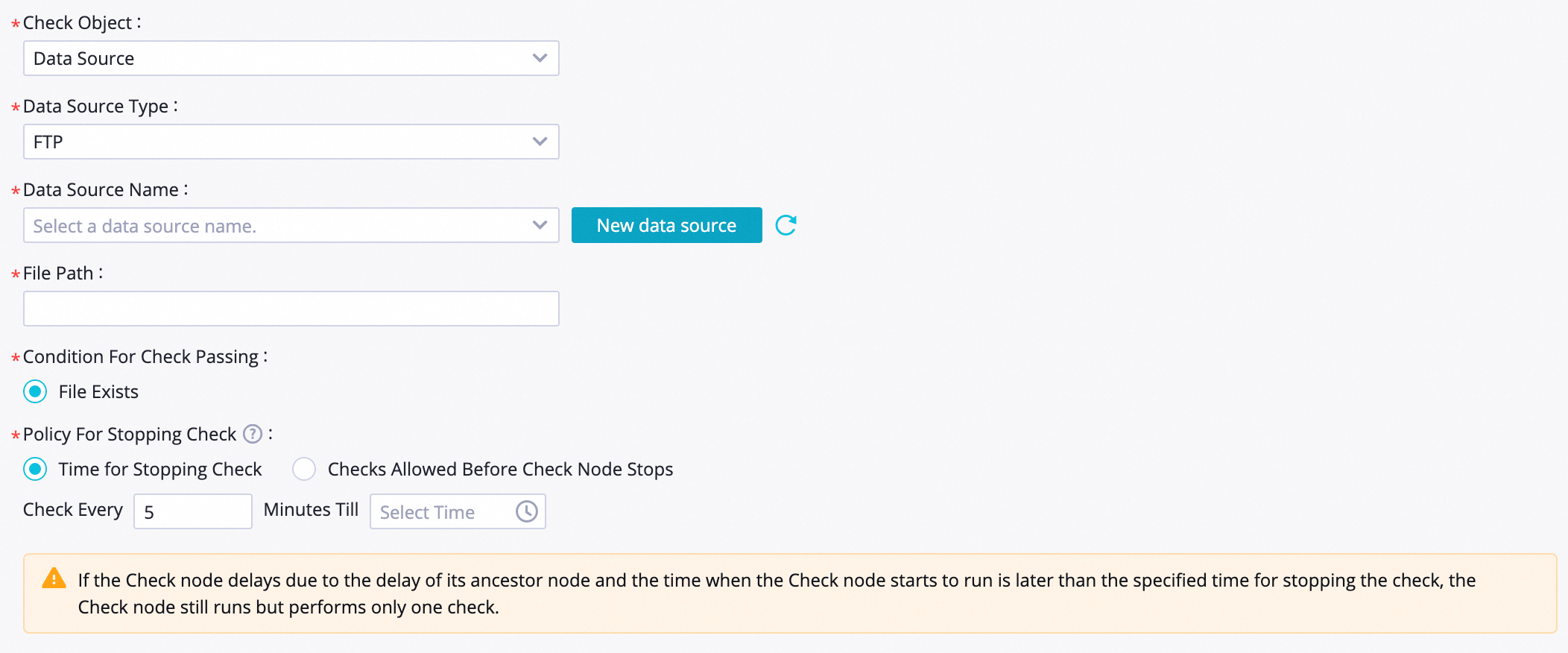

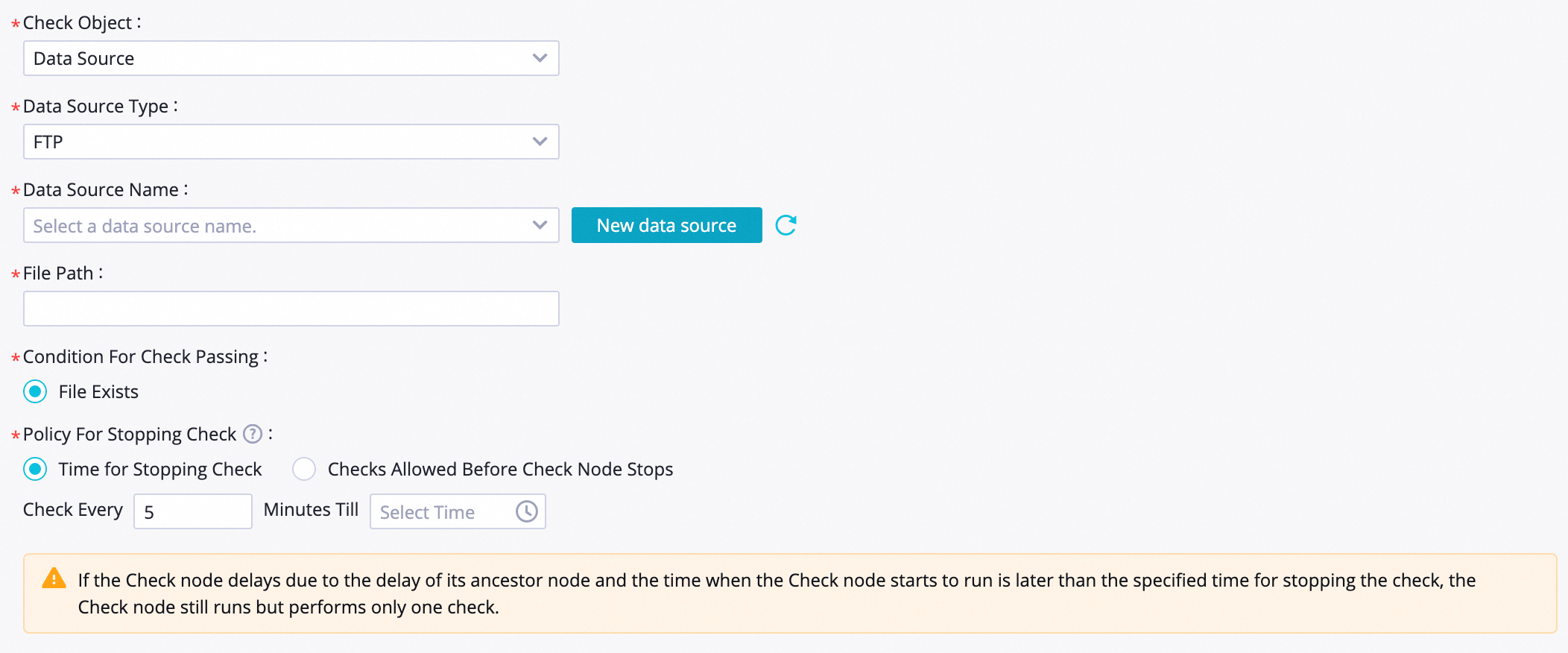

FTP file

The following table describes the parameters.

Parameter | Description |

Data Source Type | Select FTP. |

Data Source Name | The data source where the FTP file resides. If no data source is available, click New data source. For more information about how to create an FTP data source, see FTP data source. |

File Path | The path of the FTP file to check, for example, /var/ftp/test/. If the Check node detects that the path exists, the file is considered to exist. You can enter the path directly or use scheduling parameters. For more information about scheduling parameters, see Supported formats for scheduling parameters. |

Condition For Check Passing | Define the pass condition for the FTP file. If the FTP file exists, the check passes, and the system considers the file available. If the FTP file does not exist, the check fails, and the system considers the file unavailable.

|

Policy For Stopping Check | Configure the stop strategy for the Check node. You can set a deadline or a maximum number of retries, and configure the check frequency: Set deadline: Specify a cutoff time. The node performs checks at the specified interval until the deadline is reached. If the check does not pass by the deadline, the task fails.

Note The check interval ranges from 1 to 30 minutes. If the upstream task is delayed and the Check node starts after the deadline, the Check node runs once.

Set limit on check times: Define a maximum number of retries. The node performs checks at the specified interval until the limit is reached. If the check does not pass after the maximum retries, the task fails.

Note The check interval ranges from 1 to 30 minutes. The maximum run time of a Check node task is 24 hours (1440 minutes). The maximum number of retries depends on the check interval. For example, a 5-minute interval allows up to 288 retries, while a 10-minute interval allows up to 144 retries. Refer to the actual interface for details.

|

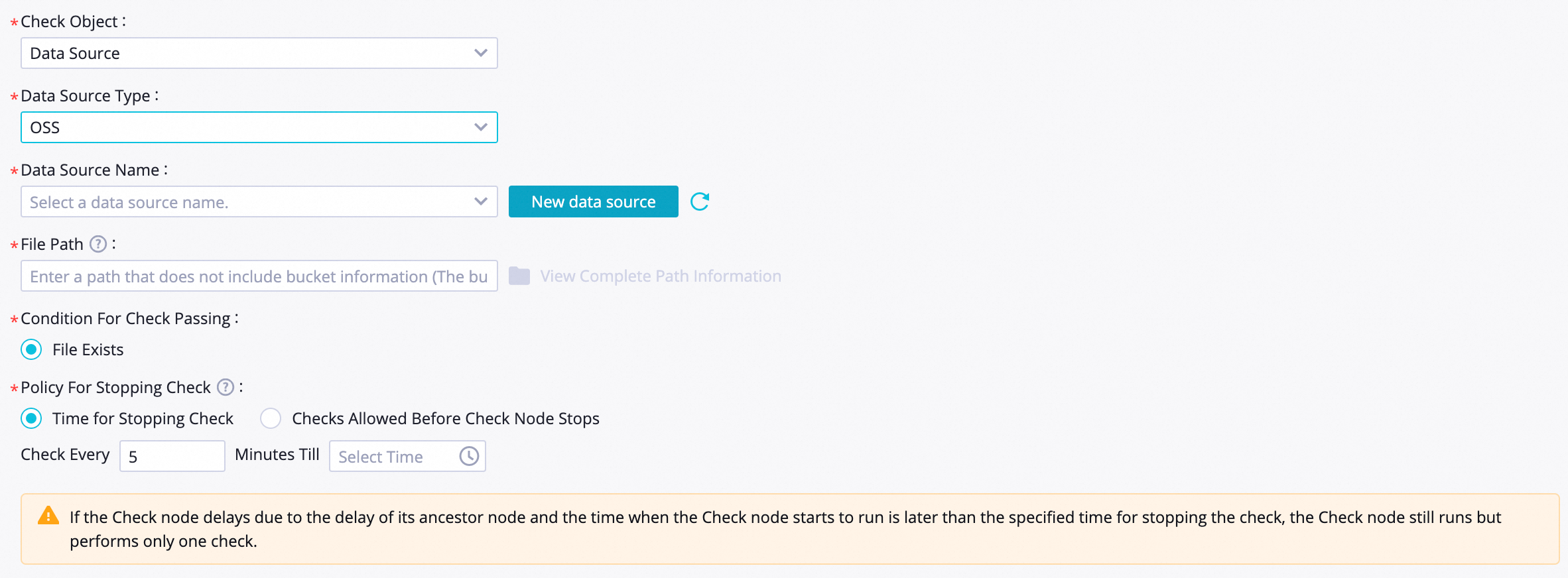

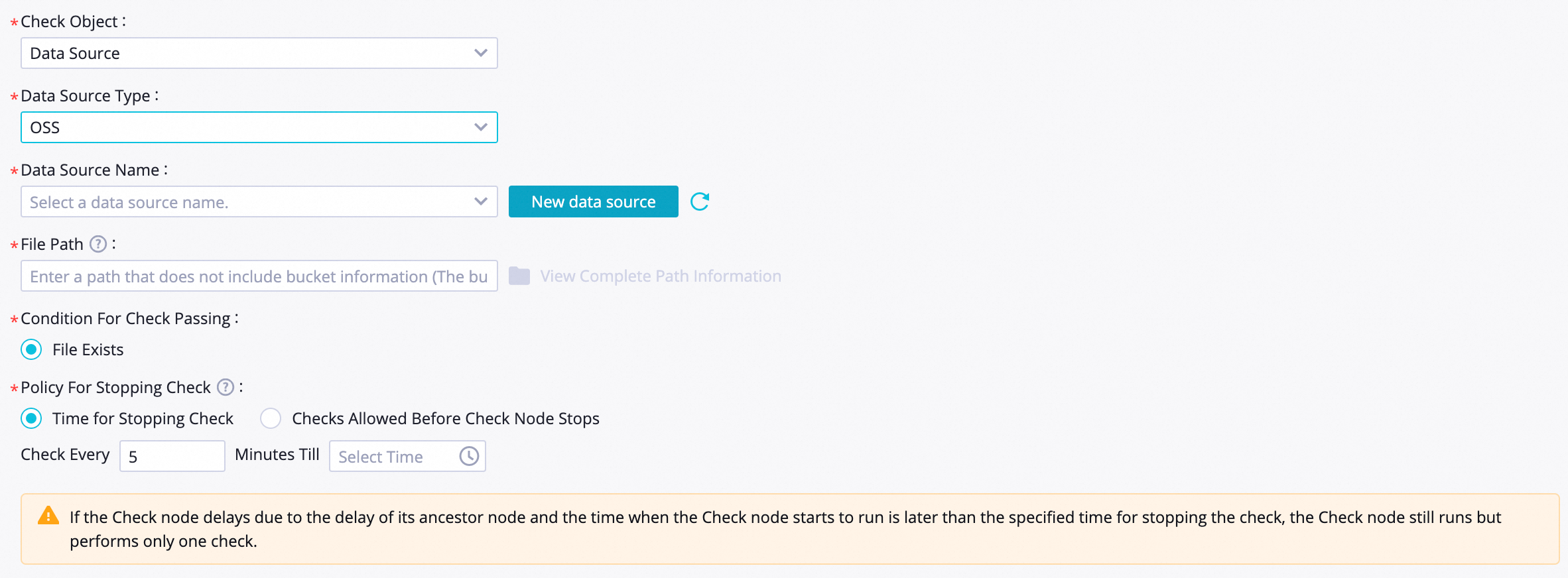

OSS file

The following table describes the parameters.

Parameter | Description |

Data Source Type | Select OSS. |

Data Source Name | The data source where the OSS file resides. If no data source is available, click New data source. For more information about how to create an OSS data source, see OSS data source. |

File Path | The path of the OSS file to check. You can view the path on the page of the target bucket in the OSS console.

Note The system uses the Bucket information from the selected OSS data source. Do not include the oss:// prefix, bucket name, or leading forward slash /. Format requirements: If the path ends with /, the Check node verifies whether a folder with the same name exists in OSS. Example: user/. This checks whether the "user" folder exists. If the path does not end with /, the Check node verifies whether a file with the same name exists in OSS. Example: user. This checks whether the "user" file exists.

Folder check limits: A folder check passes only if a directory object actually exists in OSS. Direct uploads (e.g., put /a/b/1.txt) do not create directory objects for /a or /a/b. Although the console displays a virtual directory /a/b/, the folder check fails because the directory object does not exist. Level-by-level uploads (e.g., put /a -> put /a/b -> put /a/b/1.txt) create 0 KB directory objects for /a and /a/b. Both the file and the directory objects exist, so the folder check passes.

|

Condition For Check Passing | Define the pass condition for the OSS file. If the OSS file exists, the check passes, and the system considers the file available. If the OSS file does not exist, the check fails, and the system considers the file unavailable.

|

Policy For Stopping Check | Configure the stop strategy for the Check node. You can set a deadline or a maximum number of retries, and configure the check frequency: Set deadline: Specify a cutoff time. The node performs checks at the specified interval until the deadline is reached. If the check does not pass by the deadline, the task fails.

Note The check interval ranges from 1 to 30 minutes. If the upstream task is delayed and the Check node starts after the deadline, the Check node runs once.

Set limit on check times: Define a maximum number of retries. The node performs checks at the specified interval until the limit is reached. If the check does not pass after the maximum retries, the task fails.

Note The check interval ranges from 1 to 30 minutes. The maximum run time of a Check node task is 24 hours (1440 minutes). The maximum number of retries depends on the check interval. For example, a 5-minute interval allows up to 288 retries, while a 10-minute interval allows up to 144 retries. Refer to the actual interface for details.

|
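The File Path rules for OSS can be summarized in a few lines of code. The following sketch models a bucket as a plain set of object keys to show why a folder check can fail even though the console displays the folder. Everything here is hypothetical and local: `check_target` and `object_exists` are illustrative names, no OSS API is called, and the bucket-name rule is not enforced because the sketch does not know the bucket name.

```python
from typing import Set


def check_target(path: str) -> str:
    """Classify a File Path value as "folder" or "file".

    Rejects the forms that the File Path field does not accept.
    """
    if not path:
        raise ValueError("path must not be empty")
    if path.startswith("oss://"):
        raise ValueError("omit the oss:// prefix")
    if path.startswith("/"):
        raise ValueError("omit the leading forward slash")
    # A trailing slash means a folder check; anything else is a file check.
    return "folder" if path.endswith("/") else "file"


def object_exists(keys: Set[str], path: str) -> bool:
    """Return True only if an object with exactly this key is stored."""
    check_target(path)
    return path in keys


# Only the file was uploaded: no directory objects were created.
direct_upload = {"a/b/1.txt"}
# Each level was created first, so 0 KB directory objects exist as well.
with_directory_objects = {"a/", "a/b/", "a/b/1.txt"}

print(object_exists(direct_upload, "a/b/"))           # False
print(object_exists(with_directory_objects, "a/b/"))  # True
```

If the upstream process uploads files directly, checking for a specific file, such as a marker file written last, avoids depending on directory objects.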

HDFS file

The following table describes the parameters.


Parameter | Description |

Data Source Type | Select HDFS. |

Data Source Name | The data source where the HDFS file resides. If no data source is available, click New data source. For more information about how to create an HDFS data source, see HDFS data source. |

File Path | The path of the HDFS file to check, for example, /user/dw_test/dw. If the Check node detects that the path exists, the file is considered to exist. You can enter the path directly or use scheduling parameters. For more information about scheduling parameters, see Supported formats for scheduling parameters. |

Condition For Check Passing | Define the pass condition for the HDFS file. If the HDFS file exists, the check passes, and the system considers the file available. If the HDFS file does not exist, the check fails, and the system considers the file unavailable.

|

Policy For Stopping Check | Configure the stop strategy for the Check node. You can set a deadline or a maximum number of retries, and configure the check frequency: Set deadline: Specify a cutoff time. The node performs checks at the specified interval until the deadline is reached. If the check does not pass by the deadline, the task fails.

Note The check interval ranges from 1 to 30 minutes. If the upstream task is delayed and the Check node starts after the deadline, the Check node runs once.

Set limit on check times: Define a maximum number of retries. The node performs checks at the specified interval until the limit is reached. If the check does not pass after the maximum retries, the task fails.

Note The check interval ranges from 1 to 30 minutes. The maximum run time of a Check node task is 24 hours (1440 minutes). The maximum number of retries depends on the check interval. For example, a 5-minute interval allows up to 288 retries, while a 10-minute interval allows up to 144 retries. Refer to the actual interface for details.

|

OSS-HDFS file

The following table describes the parameters.


Parameter | Description |

Data Source Type | Select OSS-HDFS. |

Data Source Name | The data source where the OSS-HDFS file resides. If no data source is available, click New data source. For more information about how to create an OSS-HDFS data source, see OSS-HDFS data source. |

File Path | The path of the OSS-HDFS file to check. You can view the path on the page of the target bucket in the OSS console. Format requirements: If the path ends with /, the Check node verifies whether a folder with the same name exists in OSS-HDFS. Example: user/. This checks whether the "user" folder exists. If the path does not end with /, the Check node verifies whether a file with the same name exists in OSS-HDFS. Example: user. This checks whether the "user" file exists.

|

Condition For Check Passing | Define the pass condition for the OSS-HDFS file. If the OSS-HDFS file exists, the check passes, and the system considers the file available. If the OSS-HDFS file does not exist, the check fails, and the system considers the file unavailable.

|

Policy For Stopping Check | Configure the stop strategy for the Check node. You can set a deadline or a maximum number of retries, and configure the check frequency: Set deadline: Specify a cutoff time. The node performs checks at the specified interval until the deadline is reached. If the check does not pass by the deadline, the task fails.

Note The check interval ranges from 1 to 30 minutes. If the upstream task is delayed and the Check node starts after the deadline, the Check node runs once.

Set limit on check times: Define a maximum number of retries. The node performs checks at the specified interval until the limit is reached. If the check does not pass after the maximum retries, the task fails.

Note The check interval ranges from 1 to 30 minutes. The maximum run time of a Check node task is 24 hours (1440 minutes). The maximum number of retries depends on the check interval. For example, a 5-minute interval allows up to 288 retries, while a 10-minute interval allows up to 144 retries. Refer to the actual interface for details.

|

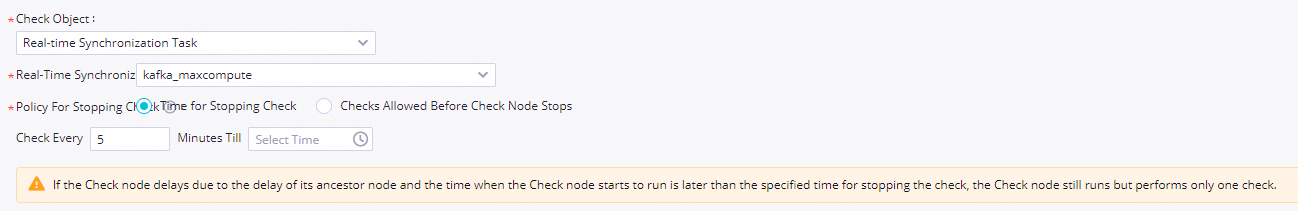

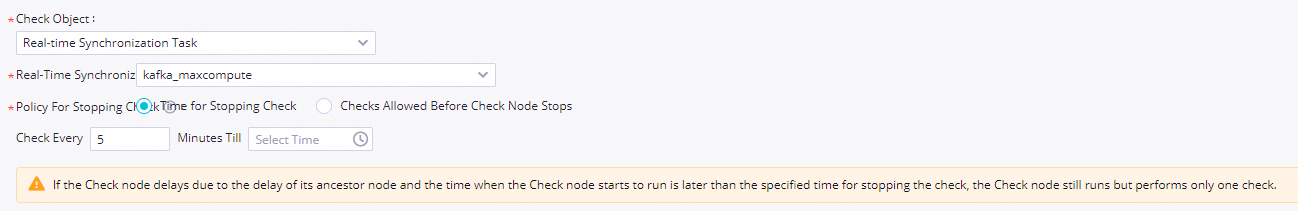

Real-time synchronization task

The following table describes the parameters.

Parameter | Description |

Check Object | Select Real-time Synchronization Task. |

Real-Time Synchronization Task | The real-time synchronization task to check.

Note Only Kafka-to-MaxCompute real-time synchronization tasks are supported. If an existing real-time synchronization task cannot be selected, check whether it has been published to the production environment.

|

Policy For Stopping Check | Configure the stop strategy for the Check node. You can set a deadline or a maximum number of retries, and configure the check frequency: Set deadline: Specify a cutoff time. The node performs checks at the specified interval until the deadline is reached. If the check does not pass by the deadline, the task fails.

Note The check interval ranges from 1 to 30 minutes. If the upstream task is delayed and the Check node starts after the deadline, the Check node runs once.

Set limit on check times: Define a maximum number of retries. The node performs checks at the specified interval until the limit is reached. If the check does not pass after the maximum retries, the task fails.

Note The check interval ranges from 1 to 30 minutes. The maximum run time of a Check node task is 24 hours (1440 minutes). The maximum number of retries depends on the check interval. For example, a 5-minute interval allows up to 288 retries, while a 10-minute interval allows up to 144 retries. Refer to the actual interface for details.

|

Step 3: Configure task scheduling

To schedule the Check node to run periodically, click Properties in the right pane of the configuration tab to configure scheduling properties. For more information, see Overview.

Configure scheduling properties in the Properties tab. Check nodes must have upstream dependencies. If the Check node has no actual upstream dependency, you can set the workspace root node as the upstream node. For more information, see Virtual node.

Note You must configure the Rerun properties and Parent Nodes dependencies before you can submit the node.

Step 4: Submit and publish the task

Submit and publish the node to enable periodic scheduling.

Click the  icon to save the node.


Click the  icon to submit the node.


In the Submit dialog box, enter a change description and select code review or smoke testing options if applicable.

Note You must configure the Rerun properties and Parent Nodes dependencies before you can submit the node.

Code review ensures code quality and prevents errors caused by incorrect code from being published. If code review is enabled, the submitted node code must be approved by reviewers before it can be published. For more information, see Code review.

To ensure that the scheduling node runs as expected, we recommend that you perform smoke testing before publishing the task. For more information, see Smoke testing.

If you are using a workspace in standard mode, you must click Deploy in the upper-right corner to publish the task to the production environment. For more information, see Publish tasks.
