Container Compute Service (ACS) allows you to create stateless applications by using an image, a YAML template, or kubectl. This topic describes how to create a stateless NGINX application in an ACS cluster.

Use the console

Create a Deployment from an image

Step 1: Configure basic settings

Log on to the ACS console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its ID. In the left-side navigation pane of the cluster details page, choose .

On the Deployments tab, click Create from Image.

On the Basic Information wizard page, configure the basic settings of the application.

Name: Enter a name for the application.

Replicas: The number of pods that are provisioned for the application. Default value: 2.

Type: The workload type. In this example, Deployment is selected.

Labels: Add labels to the application. The labels are used to identify the application.

Annotations: Add annotations to the application.

Instance Type: The instance type that you want to use. For more information, see ACS pod overview.

QoS Type: Select a QoS class. For more information, see Computing power QoS.

General-purpose pods support the Default and BestEffort QoS classes.

Performance-enhanced pods support only the Default QoS class.

Click Next to go to the Container wizard page.
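For orientation, the Step 1 settings correspond roughly to the following fields of a Deployment manifest. This is a minimal sketch, not the manifest that the console generates: the name `nginx`, the label and annotation values are illustrative, and the ACS pod labels `alibabacloud.com/compute-class` and `alibabacloud.com/compute-qos` (used here to express Instance Type and QoS Type) are an assumption. Verify the exact keys and values in ACS pod overview and Computing power QoS.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx                          # Name
  labels:
    app: nginx                         # Labels (illustrative value)
  annotations:
    description: "sample nginx app"    # Annotations (hypothetical key and value)
spec:
  replicas: 2                          # Replicas (console default: 2)
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
        # Assumption: ACS reads the instance type and QoS class from these pod labels.
        alibabacloud.com/compute-class: general-purpose   # Instance Type
        alibabacloud.com/compute-qos: default             # QoS Type
    spec:
      containers:
      - name: nginx
        image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
```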

Step 2: Configure containers

On the Container wizard page, configure the container image, resource configurations, ports, environment variables, health checks, lifecycle, volumes, and logs.

Click Add Container to the right of the Container1 tab to add more containers.

In the General section, configure the basic container settings.

Image Name

Select Image: Click Select images and select a container image.

Container Registry Enterprise Edition: Select an image stored on a Container Registry Enterprise Edition instance. You must select the region and the Container Registry instance to which the image belongs. For more information about Container Registry, see What is Container Registry?

Container Registry Personal Edition: Select an image stored on a Container Registry Personal Edition instance. Make sure that Container Registry Personal Edition is activated. You must select the region and the Container Registry instance to which the image belongs.

Artifact Center: The artifact center contains base operating system images, base language images, and AI- and big data-related images for application containerization. In this example, an NGINX image is selected. For more information, see Overview of the artifact center.

(Optional) Set the image pulling policy: Select an image pulling policy from the Image Pull Policy drop-down list. By default, the Kubernetes IfNotPresent policy is used.

IfNotPresent: If the image already exists locally, the local image is used. Otherwise, the system pulls the image from the image registry.

Always: The system pulls the image from the registry each time a container is started, for example, when the application is deployed or scaled out.

Never: The system uses only images that already exist locally and never pulls images from the registry.

(Optional) Set the image pull secret: Click Set Image Pull Secret to specify the Secret that is used to pull a private image.

You can use Secrets to pull images from Container Registry Personal Edition instances. For more information about how to configure a Secret, see Manage Secrets.

You can pull images from Container Registry Enterprise Edition instances without using Secrets. For more information, see Pull images from a Container Registry instance without using Secrets.
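In a YAML template, these image settings map to the `image`, `imagePullPolicy`, and `imagePullSecrets` fields of the pod template. The following fragment of `spec.template.spec` is a sketch; the Secret name `my-registry-secret` is hypothetical and is assumed to be a Secret of type `kubernetes.io/dockerconfigjson` in the same namespace as the Deployment.

```yaml
# Fragment of spec.template.spec in a Deployment.
imagePullSecrets:
- name: my-registry-secret        # hypothetical Secret that stores the registry credentials
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  imagePullPolicy: IfNotPresent   # alternatives: Always, Never
```

If you omit `imagePullPolicy` in YAML, Kubernetes defaults to Always for images tagged `:latest` or untagged images, and to IfNotPresent otherwise, so set the field explicitly if you want the console's default behavior.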

Required Resources: Specify the resource requests of the container.

CPU: You can configure the CPU request and CPU limit of the container. By default, the CPU request equals the CPU limit. CPU resources are billed on a pay-as-you-go basis. If you use a YAML template to set a CPU limit that differs from the CPU request, the CPU request is automatically changed to the value of the CPU limit. For more information, see Resource specifications.

Memory: You can configure the memory request and memory limit of the container. By default, the memory request equals the memory limit. Memory resources are billed on a pay-as-you-go basis. If you use a YAML template to set a memory limit that differs from the memory request, the memory request is automatically changed to the value of the memory limit. For more information, see Resource specifications.
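A container `resources` block in which the requests equal the limits, matching the console default, might look like the following sketch. The 1 vCPU and 2 GiB values are illustrative only; check Resource specifications for the combinations that ACS actually supports.

```yaml
# Fragment of a container spec: requests are set equal to limits.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  resources:
    requests:
      cpu: "1"        # 1 vCPU (illustrative)
      memory: 2Gi     # 2 GiB (illustrative)
    limits:
      cpu: "1"
      memory: 2Gi
```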

Container Start Parameter: Specify the startup options of the container. This parameter is optional.

stdin: keeps the standard input (stdin) of the container open so that input from the console can be passed to the container.

tty: allocates a virtual terminal (TTY) for the container.

Note: stdin and tty are usually used together. In this case, the virtual terminal is attached to the stdin of the container. For example, an interactive program can read input from the user and display the output in the terminal.

Init Containers: If you select this check box, an init container is created. This parameter is optional.

Init containers can be used to block or postpone the startup of application containers. Init containers run one after another, and the application containers in a pod start concurrently only after all init containers have run to completion. For example, you can use an init container to verify that a service on which the application depends is available. You can also run tools or scripts that are not included in the application image in init containers to initialize the runtime environment for the application containers, for example, to configure kernel parameters or generate configuration files. For more information, see Init Containers.
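As a sketch of how an init container and the start parameters appear in YAML, the following fragment blocks startup until a Service resolves in cluster DNS and enables stdin and tty on the application container. The Service name `my-dependency` and the `busybox:1.36` image are hypothetical; the image must be pullable from your cluster, for example after you mirror it to your own registry.

```yaml
# Fragment of spec.template.spec in a Deployment.
initContainers:
- name: wait-for-dependency
  image: busybox:1.36     # hypothetical image; must be reachable from the cluster
  # Loop until the hypothetical Service "my-dependency" can be resolved.
  command: ["sh", "-c", "until nslookup my-dependency; do echo waiting for my-dependency; sleep 2; done"]
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  stdin: true             # Container Start Parameter: stdin
  tty: true               # Container Start Parameter: tty
```

The nginx container is not started until the init container exits successfully.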

Optional: In the Ports section, you can click Add to add container ports.

Name: Enter a name for the container port.

Container Port: Specify the container port that you want to expose. The port number must be in the range of 1 to 65535.

Protocol: Valid values: TCP and UDP.

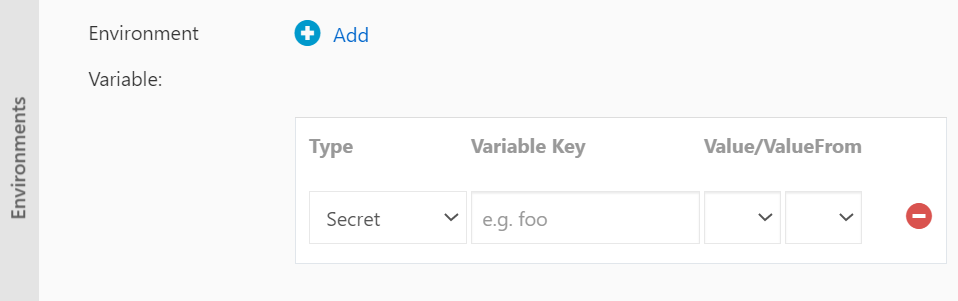
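In YAML, each port that you add corresponds to an entry in the container's `ports` list. The port name `http` below is illustrative; Kubernetes requires port names to be at most 15 lowercase alphanumeric characters or hyphens.

```yaml
# Fragment of a container spec.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  ports:
  - name: http            # Name
    containerPort: 80     # Container Port (1 to 65535)
    protocol: TCP         # Protocol: TCP or UDP
```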

Optional: In the Environments section, you can click Add to add environment variables.

You can add environment variables in key-value pairs to a pod in order to add environment labels or pass configurations. For more information, see Expose Pod Information to Containers Through Environment Variables.

Parameter

Description

Type

Select the type of environment variable. Valid values:

Custom

Parameter

Secrets

Value/ValueFrom

ResourceFieldRef

If you select ConfigMaps or Secrets, you can pass all data in the selected ConfigMap or Secret to the container environment variables.

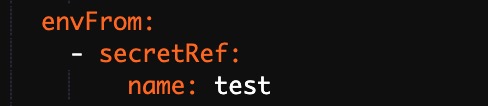

In this example, Secrets is selected. Select Secrets from the Type drop-down list and select a Secret from the Value/ValueFrom drop-down list. By default, all data in the selected Secret is passed to the environment variable.

In this case, the YAML file that is used to deploy the application contains the settings that reference all data in the selected Secret.

Variable Key

The name of the environment variable.

Value/ValueFrom

The value of the environment variable.
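The following fragment sketches the Kubernetes fields behind these options: a literal `value`, a single Secret key through `valueFrom.secretKeyRef`, a resource value through `resourceFieldRef`, and all keys of a Secret or ConfigMap through `envFrom`. The Secret `nginx-secret`, its key `password`, the ConfigMap `nginx-config`, and the variable names are hypothetical, and the exact YAML that the console writes for each type may differ.

```yaml
# Fragment of a container spec.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  env:
  - name: APP_MODE                # literal key-value pair (Custom)
    value: production
  - name: DB_PASSWORD             # a single key of a Secret
    valueFrom:
      secretKeyRef:
        name: nginx-secret        # hypothetical Secret
        key: password
  - name: CPU_LIMIT               # a resource value of this container (ResourceFieldRef)
    valueFrom:
      resourceFieldRef:
        containerName: nginx
        resource: limits.cpu
  envFrom:
  - secretRef:                    # every key of the Secret becomes a variable
      name: nginx-secret
  - configMapRef:                 # every key of the ConfigMap becomes a variable
      name: nginx-config          # hypothetical ConfigMap
```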

Optional: In the Health Check section, you can enable liveness, readiness, and startup probes based on your business requirements.

Liveness: checks whether the container is running as expected. If the number of consecutive failures reaches the Unhealthy Threshold, kubelet restarts the container. Liveness probes help detect containers that are still running but have become unresponsive, for example, because of a deadlock.

Readiness: determines whether a container is ready to receive traffic. A pod is added to the backend of a Service only after it passes the readiness check.

Startup: runs during container startup to verify that the container has started successfully. Liveness and readiness probes begin only after the startup probe succeeds.

For more information, see Configure Liveness, Readiness and Startup Probes.

You can configure each probe by using one of the following methods:

HTTP

Sends an HTTP GET request to the container. You can set the following parameters:

Protocol: the protocol over which the request is sent. Valid values: HTTP and HTTPS.

Path: the requested HTTP path on the server.

Port: the number or name of the port exposed by the container. The port number must be from 1 to 65535.

HTTP Header: the custom headers in the HTTP request. Duplicate headers are allowed. You can specify HTTP headers in key-value pairs.

Initial Delay (s): the initialDelaySeconds field in the YAML file. This field specifies the waiting time (in seconds) before the first probe is performed after the container is started. Default value: 3.

Period (s): the periodSeconds field in the YAML file. This field specifies the time interval (in seconds) at which probes are performed. Default value: 10. Minimum value: 1.

Timeout (s): the timeoutSeconds field in the YAML file. This field specifies the time (in seconds) after which a probe times out. Default value: 1. Minimum value: 1.

Healthy Threshold: the minimum number of consecutive successes that must occur before a container is considered healthy after a failed probe. Default value: 1. Minimum value: 1. For liveness and startup probes, this parameter must be set to 1.

Unhealthy Threshold: the minimum number of consecutive failures that must occur before a container is considered unhealthy after a success. Default value: 3. Minimum value: 1.

TCP

Performs a TCP check against the container. kubelet attempts to open a TCP connection to the container on the specified port. If the connection can be established, the container is considered healthy. Otherwise, the container is considered unhealthy. You can configure the following parameters:

Port: the number or name of the port exposed by the container. The port number must be from 1 to 65535.

Initial Delay (s): the initialDelaySeconds field in the YAML file. This field specifies the waiting time (in seconds) before the first probe is performed after the container is started. Default value: 15.

Period (s): the periodSeconds field in the YAML file. This field specifies the time interval (in seconds) at which probes are performed. Default value: 10. Minimum value: 1.

Timeout (s): the timeoutSeconds field in the YAML file. This field specifies the time (in seconds) after which a probe times out. Default value: 1. Minimum value: 1.

Healthy Threshold: the minimum number of consecutive successes that must occur before a container is considered healthy after a failed probe. Default value: 1. Minimum value: 1. For liveness and startup probes, this parameter must be set to 1.

Unhealthy Threshold: the minimum number of consecutive failures that must occur before a container is considered unhealthy after a success. Default value: 3. Minimum value: 1.

Command

Runs a probe command in the container to check the health status of the container. You can configure the following parameters:

Command: the probe command that is run to check the health status of the container.

Initial Delay (s): the initialDelaySeconds field in the YAML file. This field specifies the waiting time (in seconds) before the first probe is performed after the container is started. Default value: 5.

Period (s): the periodSeconds field in the YAML file. This field specifies the time interval (in seconds) at which probes are performed. Default value: 10. Minimum value: 1.

Timeout (s): the timeoutSeconds field in the YAML file. This field specifies the time (in seconds) after which a probe times out. Default value: 1. Minimum value: 1.

Healthy Threshold: the minimum number of consecutive successes that must occur before a container is considered healthy after a failed probe. Default value: 1. Minimum value: 1. For liveness and startup probes, this parameter must be set to 1.

Unhealthy Threshold: the minimum number of consecutive failures that must occur before a container is considered unhealthy after a success. Default value: 3. Minimum value: 1.
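The following fragment sketches one probe of each method, using the console defaults listed above. Healthy Threshold corresponds to `successThreshold` and Unhealthy Threshold to `failureThreshold`. The choice of which method to use for which probe, the custom header `X-Probe`, and the assumption that `/usr/share/nginx/html/index.html` exists in the image are illustrative only.

```yaml
# Fragment of a container spec.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  ports:
  - containerPort: 80
  startupProbe:                 # Command method
    exec:
      command: ["cat", "/usr/share/nginx/html/index.html"]  # assumes this file exists in the image
    initialDelaySeconds: 5
    periodSeconds: 10
    timeoutSeconds: 1
    successThreshold: 1         # must be 1 for startup probes
    failureThreshold: 3
  livenessProbe:                # HTTP method
    httpGet:
      scheme: HTTP
      path: /
      port: 80
      httpHeaders:
      - name: X-Probe           # hypothetical custom header
        value: liveness
    initialDelaySeconds: 3
    periodSeconds: 10
    timeoutSeconds: 1
    successThreshold: 1         # must be 1 for liveness probes
    failureThreshold: 3
  readinessProbe:               # TCP method
    tcpSocket:
      port: 80
    initialDelaySeconds: 15
    periodSeconds: 10
    timeoutSeconds: 1
    successThreshold: 1
    failureThreshold: 3
```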

Optional: In the Lifecycle section, you can configure the lifecycle of the container.

You can specify the following parameters to configure the lifecycle of the container: Start, Post Start, and Pre Stop. For more information, see Attach Handlers to Container Lifecycle Events.

Start: Specify the command and arguments that are used to start the container.

Post Start: Specify a command that is run immediately after the container is created.

Pre Stop: Specify a command that is run before the container is terminated.
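A sketch of the corresponding YAML, assuming that the console's Start setting maps to the container `command` and `args` fields. The postStart command and the log file path are hypothetical; the preStop hook asks NGINX to shut down gracefully before the container is stopped.

```yaml
# Fragment of a container spec.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  command: ["nginx"]                    # Start: command (assumed mapping)
  args: ["-g", "daemon off;"]           # Start: arguments; keep NGINX in the foreground
  lifecycle:
    postStart:
      exec:
        # Hypothetical marker file written right after the container is created.
        command: ["/bin/sh", "-c", "echo started > /tmp/poststart.log"]
    preStop:
      exec:
        # Graceful shutdown, then a short pause before the container is killed.
        command: ["/bin/sh", "-c", "nginx -s quit; sleep 5"]
```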

Optional: In the Volume section, configure volumes that you want to mount to the container.

Local storage: You can select ConfigMap, Secret, or EmptyDir from the PV Type drop-down list. Then, set the Mount Source and Container Path parameters to mount the volume to a container path. For more information, see Volumes.

Cloud storage: Disks and File Storage NAS (NAS) file systems are supported. For more information, see Storage overview.
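For local storage, the Mount Source and Container Path settings correspond to `volumes` and `volumeMounts` in the pod template. In the following sketch, the ConfigMap `nginx-conf` (assumed to hold a complete NGINX server configuration) and the `/data/cache` path are hypothetical.

```yaml
# Fragment of spec.template.spec in a Deployment.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  volumeMounts:
  - name: nginx-conf            # ConfigMap volume
    mountPath: /etc/nginx/conf.d
    readOnly: true
  - name: cache                 # EmptyDir volume, deleted together with the pod
    mountPath: /data/cache
volumes:
- name: nginx-conf
  configMap:
    name: nginx-conf            # hypothetical ConfigMap
- name: cache
  emptyDir: {}
```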

Optional: In the Log section, you can specify logging configurations and add custom tags to the collected log.

Collection Configuration

Logstore: Create a Logstore in Log Service to store the collected log data.

Log Path in Container (Can be set to stdout): Specify stdout or a path in the container from which logs are collected.

Collect stdout files: If you specify stdout, the standard output of the container is collected.

Text Logs: The logs in the specified path of the container are collected. In this example, /var/log/nginx is specified as the path. Wildcard characters can be used in the path.

Custom Tag: You can also add custom tags. The tags are added to the container logs when the logs are collected, which helps you analyze and filter the logs.
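In ACK, the console stores these log settings as pod environment variables named `aliyun_logs_{Logstore}` (value: `stdout` or a log path) and `aliyun_logs_{Logstore}_tags` (value: custom tags). Assuming ACS follows the same convention, the settings in this example might look like the following fragment. The Logstore names `nginx-stdout` and `nginx-log` and the tag `app=nginx` are hypothetical; confirm the variable names in the ACS log collection documentation before you rely on them.

```yaml
# Fragment of a container spec.
# Assumption: ACS honors the aliyun_logs_* variables that ACK uses for log collection.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  env:
  - name: aliyun_logs_nginx-stdout      # collect stdout into the Logstore "nginx-stdout"
    value: stdout
  - name: aliyun_logs_nginx-log         # collect text logs into the Logstore "nginx-log"
    value: /var/log/nginx/*.log
  - name: aliyun_logs_nginx-log_tags    # custom tag attached to each collected log
    value: app=nginx
```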

Click Next to go to the Advanced wizard page.

Step 3: Configure advanced settings

On the Advanced wizard page, configure the following settings: access control, scaling, scheduling, annotations, and labels.

In the Access Control section, you can configure access control settings for exposing backend pods.

You can also specify how backend pods are exposed to the Internet. In this example, a ClusterIP Service and an Ingress are created to expose the NGINX application to the Internet.

To create a Service, click Create to the right of Services. In the Create dialog box, set the parameters.

To create an Ingress, click Create to the right of Ingresses. In the Create dialog box, set the parameters.
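A ClusterIP Service and an Ingress for the NGINX pods can be sketched in YAML as follows. The names `nginx-svc` and `nginx-ingress` and the host `nginx.example.com` are hypothetical, and `ingressClassName` is left as a comment because it depends on the Ingress controller installed in your cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: ClusterIP
  selector:
    app: nginx                  # must match the pod labels of the Deployment
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  # ingressClassName: <class of the Ingress controller in your cluster>
  rules:
  - host: nginx.example.com     # hypothetical domain name
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80
```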

Optional: In the Scaling section, you can enable HPA to handle fluctuating workloads.

Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods of the application based on CPU or memory usage.

Note: To enable HPA, you must configure the resources required by the container. Otherwise, HPA does not take effect.

Metric: Select CPU Usage or Memory Usage. The selected resource type must be the same as the one specified in the Required Resources field.

Condition: Specify the resource usage threshold. HPA triggers a scale-out when the threshold is exceeded.

Max. Replicas: The maximum number of replicated pods to which the application can be scaled out.

Min. Replicas: The minimum number of replicated pods that must run.
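The HPA settings above can be expressed with the standard `autoscaling/v2` API. In this sketch, the HPA name, the target Deployment `nginx`, the 70% CPU threshold, and the 2 to 10 replica range are illustrative values.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 2                # Min. Replicas
  maxReplicas: 10               # Max. Replicas
  metrics:
  - type: Resource
    resource:
      name: cpu                 # Metric: CPU Usage
      target:
        type: Utilization
        averageUtilization: 70  # Condition: percentage of the CPU request
```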

CronHPA scales the pods of an application at scheduled times. Before you enable CronHPA, you must install ack-kubernetes-cronhpa-controller. For more information about CronHPA, see CronHPA.
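The following sketch follows the `CronHorizontalPodAutoscaler` resource used by the open source kubernetes-cronhpa-controller, on which ack-kubernetes-cronhpa-controller is assumed to be based. The job names, schedules, and target sizes are hypothetical. The schedule has six fields, starting with seconds; verify the format against the CronHPA documentation for your controller version.

```yaml
apiVersion: autoscaling.alibabacloud.com/v1beta1
kind: CronHorizontalPodAutoscaler
metadata:
  name: nginx-cronhpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  jobs:
  - name: scale-up
    schedule: "0 0 8 * * *"     # second minute hour day-of-month month day-of-week: 08:00:00 daily
    targetSize: 6
  - name: scale-down
    schedule: "0 0 20 * * *"    # 20:00:00 daily
    targetSize: 2
```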

Optional: In the Labels, Annotations section, you can click Add to add pod labels and annotations.

Click Create.

Step 4: View application information

After the application is created, you can click View Details on the page that appears to view the details of the Deployment.

You can also view the information on the Deployments page. Click the name of the Deployment or click Details in the Actions column to go to the details page.

Use a YAML template

In an ACS orchestration template, you must define the resource objects that are required for running an application and configure mechanisms such as label selectors to orchestrate the resource objects into an application.

This section describes how to use an orchestration template to create an NGINX application that consists of a Deployment and a Service. The Deployment provisions pods for the application and the Service manages access to the backend pods.

Log on to the ACS console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its ID. In the left-side navigation pane of the cluster details page, choose .

In the upper-right corner of the Deployments page, click Create from YAML.

On the Create page, configure the template and click Create.

Sample Template: ACS provides YAML templates for various Kubernetes resource objects. You can also create a custom template based on YAML syntax to define the resources that you want to create.

Create Workload: You can quickly define a YAML template.

Use Existing Template: You can import an existing template.

Save Template: You can save the template that you have configured.

The following YAML file is an example. You can use the file to create a Deployment to run an NGINX application. By default, a Classic Load Balancer (CLB) instance is created.
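A minimal template of this kind, with a Deployment and a LoadBalancer Service separated by `---`, might look like the following sketch. The resource names and the resource values are illustrative assumptions; the image is the sample image used in the kubectl section of this topic.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-basic
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: "1"            # illustrative; requests equal limits
            memory: 2Gi
          limits:
            cpu: "1"
            memory: 2Gi
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: LoadBalancer            # a CLB instance is created for this Service by default
  selector:
    app: nginx                  # routes traffic to the pods of the Deployment above
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
```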

Note: ACS supports Kubernetes YAML orchestration. You can use --- to separate resource objects, which allows you to define multiple resource objects in one YAML template.

Optional: By default, when you mount a volume to an application, the existing files in the mount path of the container are overwritten. To prevent existing files from being overwritten, you can add the subPath parameter.
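With `subPath`, only one entry of the volume is mounted at the given path, so the other files in the target directory stay visible. In this sketch, the ConfigMap `nginx-html` and its key `custom.html` are hypothetical; the existing files in `/usr/share/nginx/html` are not hidden.

```yaml
# Fragment of spec.template.spec in a Deployment.
containers:
- name: nginx
  image: registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest
  volumeMounts:
  - name: html
    mountPath: /usr/share/nginx/html/custom.html   # only this file is added to the directory
    subPath: custom.html                           # key in the ConfigMap
volumes:
- name: html
  configMap:
    name: nginx-html            # hypothetical ConfigMap with a key named custom.html
```

A container that mounts a ConfigMap through `subPath` does not receive later updates to that ConfigMap; the pod must be restarted to pick up changes.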

After you click Create, a message that indicates the deployment status appears.

kubectl

You can use kubectl to create applications or view application pods.

Connect to your ACS cluster. For more information, see Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster or Use kubectl on Cloud Shell to manage ACS clusters.

In a CLI, run the following command to create a Deployment. An NGINX web server is used in this example.

kubectl create deployment nginx --image=registry.cn-hangzhou.aliyuncs.com/acs-sample/nginx:latest

Run the following command to create a Service for the Deployment. The --type=LoadBalancer option specifies that a load balancer provided by Alibaba Cloud is used to expose the Service.

kubectl expose deployment nginx --port=80 --target-port=80 --type=LoadBalancer

Run the following command to query the pods of the NGINX application:

kubectl get pods -l app=nginx

Expected results:

NAME                     READY   STATUS    RESTARTS   AGE
nginx-2721357637-d****   1/1     Running   1          9h