When you use the Terway network plug-in in an Alibaba Cloud Container Service for Kubernetes (ACK) cluster, you may need to add vSwitches when the current ones run out of IP addresses or when you want to add a new pod CIDR block. This topic describes how to add a vSwitch to increase the number of available IP addresses for your ACK cluster.

Limits

The vSwitch that you add must be in the same zone as the node. If a vSwitch is not available in the node's zone, the system uses the vSwitch of the primary Elastic Network Interface (ENI).

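You cannot modify the vSwitch configuration for existing ENIs. After you modify the pod vSwitches, the new configuration is applied only to newly created ENIs. It therefore takes effect immediately on new nodes, but on existing nodes only when Terway creates a new ENI.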
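The zone rule in the limits above can be sketched as a small lookup. This is only an illustrative model, not Terway's implementation: the function name, the dictionary shape, and the IDs vsw-PRIMARY and cn-shanghai-g are assumptions made for the example.

```python
from typing import Dict, List


def candidate_vswitches(
    node_zone: str,
    pod_vswitches: Dict[str, List[str]],
    primary_eni_vswitch: str,
) -> List[str]:
    """Return the vSwitches that new ENIs on a node could use.

    Hypothetical helper: models the rule that only vSwitches in the
    node's zone are eligible, with the primary ENI's vSwitch as fallback.
    """
    in_zone = pod_vswitches.get(node_zone, [])
    # No pod vSwitch configured for this zone: fall back to the primary ENI's vSwitch.
    return list(in_zone) if in_zone else [primary_eni_vswitch]


config = {"cn-shanghai-f": ["vsw-AAA", "vsw-BBB"]}
print(candidate_vswitches("cn-shanghai-f", config, "vsw-PRIMARY"))
print(candidate_vswitches("cn-shanghai-g", config, "vsw-PRIMARY"))
```

The second call shows why a vSwitch added in the wrong zone does not help: the node never sees it as a candidate.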

When pod vSwitch configuration changes take effect

Existing nodes

Terway applies the new vSwitch configuration only when it creates a new ENI. Therefore, the new configuration does not take effect immediately on existing nodes in the following two cases:

The ENI is in use: Terway does not delete or replace an existing ENI if pods are running on it or if it is a trunk ENI.

The ENI limit is reached: A new ENI cannot be created because the number of ENIs on the node's instance has reached the maximum limit for the instance type.
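As a rough mental model of the two cases above, an existing node can pick up the new vSwitch only if it still has room for another ENI. The sketch below assumes a hypothetical NodeEniState record; it is not a Terway data structure, and the real limit comes from the instance type.

```python
from dataclasses import dataclass


@dataclass
class NodeEniState:
    """Hypothetical snapshot of ENI usage on one node (not a Terway type)."""
    attached_enis: int  # ENIs currently attached to the instance
    eni_limit: int      # maximum number of ENIs for the instance type


def can_pick_up_new_vswitch(node: NodeEniState) -> bool:
    """Existing ENIs keep their vSwitch, so the new configuration is only
    used if the node can still create one more ENI."""
    return node.attached_enis < node.eni_limit


print(can_pick_up_new_vswitch(NodeEniState(attached_enis=2, eni_limit=4)))
print(can_pick_up_new_vswitch(NodeEniState(attached_enis=4, eni_limit=4)))
```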

New nodes

For new nodes added to the cluster, Terway creates ENIs using the vSwitch selected based on the current configuration at the cluster, node, or pod level. The new configuration takes effect immediately.

vSwitch selection policy and IP resource balancing

When multiple vSwitches are configured for a zone, Terway provides different selection policies to control how vSwitches are assigned when creating ENIs. For more information about the configuration items, see Customize Terway parameters.

Default policy: ordered

Terway sorts the vSwitches in descending order based on the number of available IP addresses and prioritizes the vSwitch with the largest number of available IP addresses. This policy is suitable for most scenarios.

Edge case: In scenarios with a sudden burst of pod creations that triggers batch ENI creation, all new ENIs might be assigned to the same vSwitch that has the largest number of IP addresses. This can cause uneven IP resource distribution and exhaust the IP addresses of a single vSwitch in a short period.
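The edge case can be reproduced with a simplified model of the ordered policy. The sketch assumes that concurrent ENI requests all see the same available-IP counts; the function names and the numbers are made up for illustration and do not come from Terway's source code.

```python
from typing import Dict, List


def pick_ordered(available_ips: Dict[str, int]) -> str:
    """Pick the vSwitch with the most available IP addresses."""
    return max(available_ips, key=available_ips.get)


def burst_with_stale_snapshot(available_ips: Dict[str, int], eni_requests: int) -> List[str]:
    """Model a burst in which every request reads the same, not yet updated counts."""
    snapshot = dict(available_ips)  # counts are not refreshed between requests
    return [pick_ordered(snapshot) for _ in range(eni_requests)]


counts = {"vsw-AAA": 120, "vsw-BBB": 200, "vsw-CCC": 180}
picks = burst_with_stale_snapshot(counts, eni_requests=5)
print(picks)  # all five requests select vsw-BBB
```

Because the snapshot does not change during the burst, every request selects the same vSwitch, which is the hot spot described above.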

Solutions

To balance IP consumption across multiple vSwitches, use one of the following methods:

Solution 1: Switch to the random policy

Change the vSwitch selection policy to random. Terway then randomly selects a vSwitch from the list of available vSwitches when it creates an ENI, which spreads IP consumption across the vSwitches.
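A minimal sketch of what random selection does to the distribution, assuming a uniform choice among the candidate vSwitches. The helper name and the fixed seed are assumptions for the example; Terway's actual implementation may differ.

```python
import random
from collections import Counter
from typing import List, Optional


def pick_random(vswitches: List[str], rng: Optional[random.Random] = None) -> str:
    """Pick one vSwitch uniformly at random from the candidates."""
    return (rng or random).choice(vswitches)


rng = random.Random(0)  # fixed seed so the example is repeatable
vswitches = ["vsw-AAA", "vsw-BBB", "vsw-CCC"]
spread = Counter(pick_random(vswitches, rng) for _ in range(300))
print(spread)
```

Unlike the ordered policy, the choice does not depend on available-IP counts, so a burst of concurrent requests is spread over all candidates instead of converging on one.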

Solution 2: Partition vSwitches by node pool

Use node-level network configurations to divide nodes into multiple node pools.

Within each node pool, attach a unique pod vSwitch for each zone.
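The partitioning rule can be checked mechanically before you roll it out. The sketch below assumes a hypothetical mapping of node pool name to zone to pod vSwitch IDs; this shape is only for illustration and is not the format of the node-level network configuration.

```python
from typing import Dict, List

# Hypothetical shape: node pool name -> zone -> pod vSwitch IDs
NodePoolConfig = Dict[str, Dict[str, List[str]]]


def partition_violations(pools: NodePoolConfig) -> List[str]:
    """List places where a pool has more than one vSwitch per zone
    or where two pools share the same vSwitch."""
    problems: List[str] = []
    owner: Dict[str, str] = {}  # vSwitch ID -> first node pool that uses it
    for pool, zones in pools.items():
        for zone, vsws in zones.items():
            if len(vsws) != 1:
                problems.append(f"{pool}/{zone}: expected 1 vSwitch, found {len(vsws)}")
            for vsw in vsws:
                if vsw in owner and owner[vsw] != pool:
                    problems.append(f"{vsw} is shared by {owner[vsw]} and {pool}")
                owner.setdefault(vsw, pool)
    return problems


good = {
    "pool-a": {"cn-shanghai-f": ["vsw-AAA"]},
    "pool-b": {"cn-shanghai-f": ["vsw-BBB"]},
}
print(partition_violations(good))  # []
```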

Symptoms of insufficient vSwitch IP addresses

In a Terway network, the following symptoms indicate that the IP addresses of a vSwitch are exhausted:

If a pod fails to be created and its status is ContainerCreating, run the following command to view the Terway logs on the node where the pod is located.

    kubectl logs --tail=100 -f terway-eniip-***** -n kube-system -c terway

If an error message similar to the following is returned, the vSwitch used by Terway on the node has no available IP addresses. The pod remains in the ContainerCreating state because no IP addresses are available.

    time="20**-03-17T07:03:40Z" level=warning msg="Assign private ip address failed: Aliyun API Error: RequestId: 2095E971-E473-4BA0-853F-0C41CF52651D Status Code: 403 Code: InvalidVSwitchId.IpNotEnough Message: The specified VSwitch \"vsw-***\" has not enough IpAddress., retrying"

Log on to the Virtual Private Cloud (VPC) console. In the navigation pane on the left, click vSwitch. Find the vSwitch and check that the value in the Available IP Address Count column is 0.
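If you collect Terway logs in bulk, the error can be detected by its error code rather than by eye. The following sketch assumes the log line format shown in this section; the regular expression and the function name are illustrative and may need adjusting for other Terway versions.

```python
import re
from typing import Optional

# Matches the InvalidVSwitchId.IpNotEnough error and captures the vSwitch ID,
# whether or not the quotes around the ID are backslash-escaped.
_IP_EXHAUSTED = re.compile(
    r'Code: InvalidVSwitchId\.IpNotEnough.*?VSwitch \\?"([^"\\]+)\\?"'
)


def exhausted_vswitch(log_line: str) -> Optional[str]:
    """Return the vSwitch ID named in an IpNotEnough error, or None."""
    match = _IP_EXHAUSTED.search(log_line)
    return match.group(1) if match else None


sample = (
    r'time="20**-03-17T07:03:40Z" level=warning msg="Assign private ip address failed: '
    r'Aliyun API Error: RequestId: 2095E971-E473-4BA0-853F-0C41CF52651D Status Code: 403 '
    r'Code: InvalidVSwitchId.IpNotEnough '
    r'Message: The specified VSwitch \"vsw-***\" has not enough IpAddress., retrying"'
)
print(exhausted_vswitch(sample))  # vsw-***
```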

Modify pod vSwitches

You can easily add a vSwitch in the console. We recommend that you first upgrade the Terway component to the latest version and then configure the pod vSwitch in the console.

Terway v1.4.4 and later support pod vSwitch configuration through both the console and kubectl.

Versions earlier than Terway v1.4.4 support only kubectl for pod vSwitch configuration.

Use the console

Log on to the VPC console and create a vSwitch. The new vSwitch must be in the same region as the vSwitch that has insufficient IP addresses and, as stated in Limits, in the same zone as the nodes that will use it. For more information about how to create a vSwitch, see Create and manage vSwitches.

Note: To provide sufficient IP addresses for an increasing number of pods, we recommend that the CIDR block of the vSwitch for pods contains at least 8,192 IP addresses. This means the prefix length of the CIDR block must be 19 or smaller.

Log on to the ACK console. In the navigation pane on the left, click Clusters.

On the Clusters page, click the name of the target cluster. In the navigation pane on the left, click Component Management.

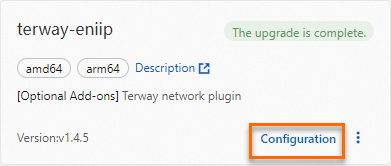

On the Add-ons page, click the Network tab. Find the Terway component that you want to upgrade and click Upgrade. After the component is upgraded to the latest version, click Configure.

If the Upgrade button is not displayed, the Terway component is already the latest version.

Note: Changes made to the deployed component using other methods are overwritten after the component is redeployed.

In the terway-eniip Parameter Settings dialog box, in the PodVswitchId section, select the vSwitch that you need. Keep the default values for the other parameters.

After you configure the parameters, click OK.
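The sizing recommendation in the first step (at least 8,192 addresses, prefix length 19 or smaller) follows from 2^(32 - 19) = 8,192. A quick way to check a planned CIDR block is Python's standard ipaddress module; the helper name and the 10.0.0.0 blocks below are example values only.

```python
import ipaddress


def pod_vswitch_large_enough(cidr: str, min_addresses: int = 8192) -> bool:
    """True if the CIDR block contains at least min_addresses addresses in total."""
    return ipaddress.ip_network(cidr).num_addresses >= min_addresses


print(ipaddress.ip_network("10.0.0.0/19").num_addresses)  # 8192
print(pod_vswitch_large_enough("10.0.0.0/19"))            # True
print(pod_vswitch_large_enough("10.0.0.0/20"))            # False
```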

Use kubectl

Log on to the VPC console and create a vSwitch. The new vSwitch must be in the same region as the vSwitch that has insufficient IP addresses and, as stated in Limits, in the same zone as the nodes that will use it. For more information about how to create a vSwitch, see Create and manage vSwitches.

Note: To provide sufficient IP addresses for an increasing number of pods, we recommend that the CIDR block of the vSwitch for pods contains at least 8,192 IP addresses. This means the prefix length of the CIDR block must be 19 or smaller.

Run the following command to add the vSwitch to the Terway ConfigMap:

    kubectl edit cm eni-config -n kube-system

The following sample code shows how to add the vSwitch:

    eni_conf: |
      {
        "version": "1",
        "max_pool_size": 25,
        "min_pool_size": 10,
        "vswitches": {"cn-shanghai-f":["vsw-AAA", "vsw-BBB"]},
        "service_cidr": "172.21.0.0/20",
        "security_group": "sg-CCC"
      }

In this example, vsw-BBB is added to the vswitches section. vsw-AAA is the existing vSwitch that has insufficient IP addresses.

Run the following command to delete all Terway pods. The system automatically recreates them.

For scenarios where multiple pods share an ENI, run the following command to delete all Terway pods:

    kubectl delete -n kube-system pod -l app=terway-eniip

For scenarios where one pod uses an ENI exclusively, run the following command to delete all Terway pods:

    kubectl delete -n kube-system pod -l app=terway-eni

Run the following command to check whether all Terway pods are successfully created.

    kubectl get pod -n kube-system | grep terway

Create a pod to verify that it can be assigned an IP address from the new vSwitch.

Note: After you modify the vSwitch configuration, the new configuration takes effect only for new ENIs. Existing ENIs continue to use the old configuration. You can perform a rolling restart on the nodes to apply the new configuration.
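The change made to eni_conf in the kubectl procedure amounts to appending one vSwitch ID to the list for a zone. The sketch below shows that transformation on the JSON text only; it does not talk to the cluster, and the function name is an assumption for the example.

```python
import json


def add_pod_vswitch(eni_conf: str, zone: str, vswitch_id: str) -> str:
    """Return the eni_conf JSON with vswitch_id appended to the zone's list.

    Other keys are left untouched; adding an ID that is already present is a no-op.
    """
    conf = json.loads(eni_conf)
    zone_list = conf.setdefault("vswitches", {}).setdefault(zone, [])
    if vswitch_id not in zone_list:
        zone_list.append(vswitch_id)
    return json.dumps(conf, indent=2)


current = """{
  "version": "1",
  "max_pool_size": 25,
  "min_pool_size": 10,
  "vswitches": {"cn-shanghai-f": ["vsw-AAA"]},
  "service_cidr": "172.21.0.0/20",
  "security_group": "sg-CCC"
}"""
updated = add_pod_vswitch(current, "cn-shanghai-f", "vsw-BBB")
print(updated)
```

Keeping the existing vsw-AAA entry matters: ENIs that were created from it still depend on it, and only new ENIs draw from vsw-BBB.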

FAQ

Why can't the cluster access the public network after I add a vSwitch in a Terway network?

Symptom: In a Terway network, you manually add a vSwitch because pods lack IP resources. After you add the vSwitch, the cluster cannot access the public network.

Cause: The vSwitch to which the pod IP addresses belong does not have public network access.

Solution: Use the SNAT feature of a NAT Gateway to configure an SNAT rule for the vSwitch to which the pod IP addresses belong. For more information, see Enable an ACK cluster to access the public network.

What do I do if the IP address assigned to a pod is not in the vSwitch CIDR block?

Symptom: In a Terway network, the IP address of a created pod is not within the configured vSwitch CIDR block.

Cause: Pod IP addresses are allocated from the VPC and assigned to containers through ENIs. vSwitches can be configured only when a new ENI is created. If an ENI already exists, pod IP addresses continue to be allocated from the vSwitch that corresponds to that ENI. This issue typically occurs in the following two scenarios:

You add a node to a cluster that was previously used in another cluster, and the pods on the node were not drained when the node was removed. In this case, ENI resources used by the previous cluster may remain on the node.

You manually add or modify the vSwitch configuration used by Terway. Because the original ENIs may still exist on the nodes, new pods may continue to use the IP addresses on the original ENIs.

Solution: You can create new nodes or perform a rolling restart on existing nodes to ensure the new configuration takes effect.
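To confirm which vSwitch a pod IP address actually came from, you can compare the address with the CIDR block of each vSwitch. This is a minimal sketch using Python's standard ipaddress module; the vSwitch IDs and CIDR blocks are hypothetical, so substitute the values shown in the VPC console.

```python
import ipaddress
from typing import Dict, Optional


def owning_vswitch(pod_ip: str, vswitch_cidrs: Dict[str, str]) -> Optional[str]:
    """Return the ID of the vSwitch whose CIDR block contains pod_ip, or None."""
    ip = ipaddress.ip_address(pod_ip)
    for vsw_id, cidr in vswitch_cidrs.items():
        if ip in ipaddress.ip_network(cidr):
            return vsw_id
    return None


# Hypothetical CIDR blocks for the old and new pod vSwitches.
cidrs = {"vsw-AAA": "10.0.0.0/19", "vsw-BBB": "10.0.32.0/19"}
print(owning_vswitch("10.0.40.7", cidrs))  # vsw-BBB
print(owning_vswitch("10.1.0.5", cidrs))   # None
```

A result of None, or the ID of a vSwitch that is no longer configured, points to an ENI left over from an earlier configuration or a previous cluster.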

Why is IP consumption unbalanced after I configure multiple vSwitches?

Symptom: In a Terway network plug-in environment, after you configure multiple pod vSwitches for the same zone, the IP address consumption across the vSwitches is significantly unbalanced. Some vSwitches run out of IP resources quickly, while others still have many available IP addresses.

Cause: Terway selects a pod vSwitch only when it creates an Elastic Network Interface (ENI). After the ENI is created, its vSwitch is fixed. All subsequent pod IP addresses allocated on this ENI are from this vSwitch, and the vSwitch is not selected again.

When a new ENI is created, Terway uses the ordered vSwitch selection policy by default, which prioritizes the vSwitch with the largest number of available IP addresses.

However, when you scale out pods on a large scale in a short period, such as creating nodes in batches or deploying many replicas, multiple concurrent ENI creation requests may execute the vSwitch selection logic at nearly the same time. Because the number of available IP addresses on each vSwitch has not yet changed significantly, these requests are likely to select the same vSwitch, which leads to uneven IP address allocation.

Solution: Depending on your business scenario, adopt one of the following strategies:

For existing nodes (existing node pools)

If IP allocation is already uneven and some vSwitches are about to run out of IP addresses, manually perform a rolling restart on some existing nodes to release their attached ENIs.

After the ENIs are released, the IP addresses are returned to their corresponding vSwitches, which helps balance IP usage.

For new nodes or new deployment scenarios

Change Terway's vSwitch selection policy (vswitch_selection_policy) from ordered to random. This policy randomly selects an available vSwitch when creating an ENI, which effectively avoids the hot spot vSwitch issue. For more information about how to perform this operation, see Customize Terway parameters.

For deterministic and isolated allocation

To strictly control network resource allocation, you can configure node-level network configurations for each node pool. This ensures that only one pod vSwitch is associated with each zone. This creates a one-to-one mapping between vSwitches and node pools, completely avoiding IP allocation competition.