After you migrate data from a self-managed ClickHouse cluster to the cloud, you may encounter compatibility and performance issues. To ensure a smooth migration and avoid affecting your production environment, we recommend migrating data in a test environment first. This lets you analyze compatibility and performance bottlenecks and resolve any related issues in advance.

Background information

In the early stages of your business operations, you may have deployed self-managed ClickHouse clusters. As your business evolves, you might want to migrate data from these clusters to the cloud to enhance stability, reduce O&M costs, and improve disaster recovery capabilities. After migration, you may encounter the following issues:

Version compatibility issues.

MaterializedMySQL engine compatibility issues.

SQL compatibility issues.

After migrating your business to ApsaraDB for ClickHouse, you may find that CPU resources are exhausted and memory is insufficient.

To resolve these issues, you must focus on compatibility and performance during the migration.

Compatibility analysis and resolution

Parameter compatibility

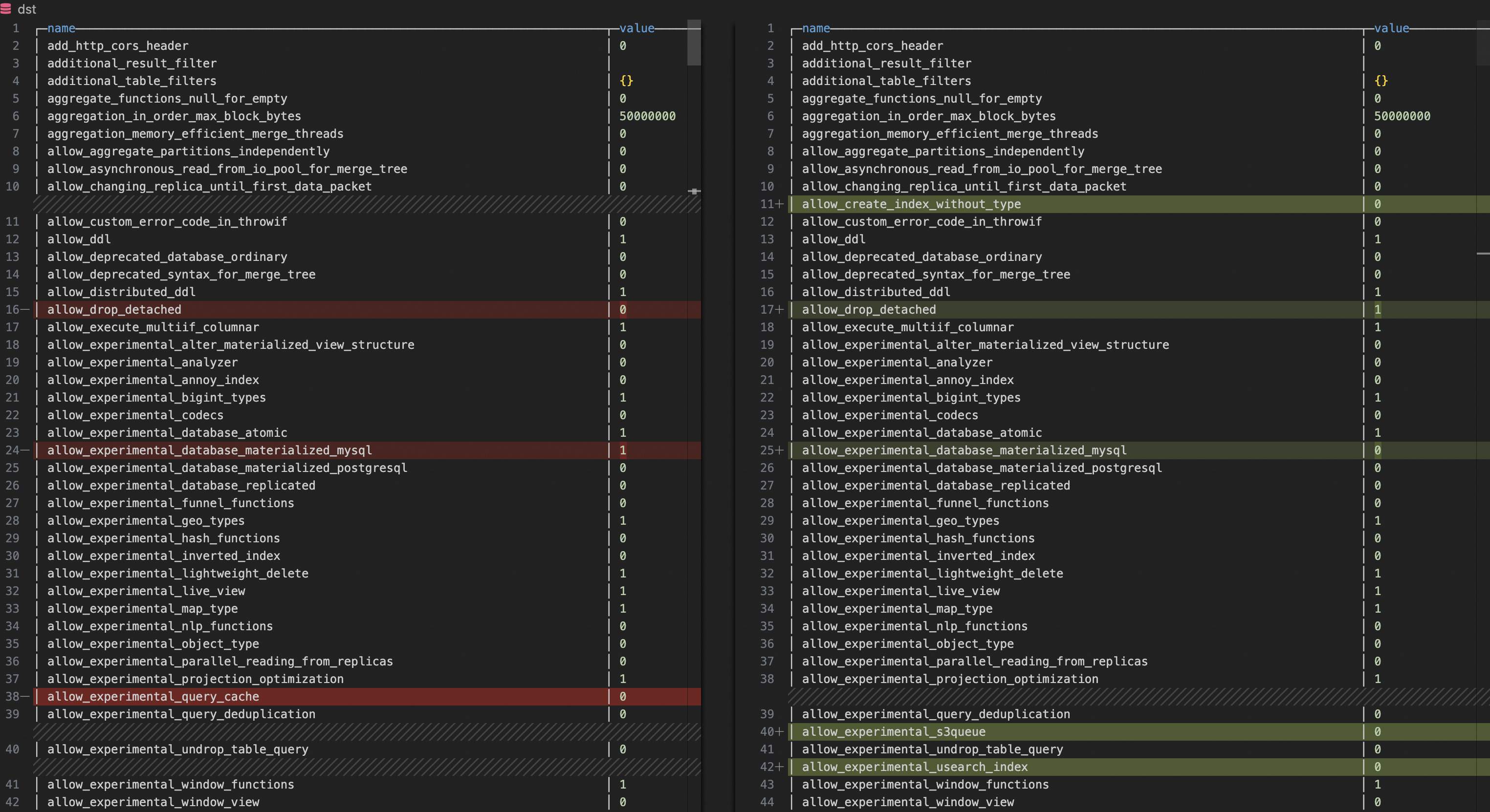

You can retrieve the configuration parameters of the self-managed ClickHouse cluster and the ApsaraDB for ClickHouse cluster.

SELECT name, groupArrayDistinct(value) AS value FROM clusterAllReplicas(`default`, system.settings) GROUP BY name ORDER BY name ASCYou can use a text comparison tool, such as VS Code, to compare the parameters of the two clusters. If you find any inconsistencies, you can align the parameters of the ApsaraDB for ClickHouse cluster with those of the self-managed ClickHouse cluster.

Common parameters that affect compatibility: `compatibility`, `prefer_global_in_and_join`, and `distributed_product_mode`.

Common parameters that affect performance: `max_threads`, `max_bytes_to_merge_at_max_space_in_pool`, and `prefer_global_in_and_join`.

MaterializedMySQL compatibility

If your self-managed ClickHouse cluster synchronizes data from a MySQL database, the ApsaraDB for ClickHouse cluster must continue to synchronize data from the MySQL database after the migration. Therefore, you must pay attention to the compatibility of the MaterializedMySQL engine.

The MaterializedMySQL engine creates a `ReplacingMergeTree` table on each node, and each node retains the same data. However, the community version of the MaterializedMySQL engine is no longer maintained. The primary method for synchronizing MySQL data to ApsaraDB for ClickHouse is using DTS. Because the community version of the MaterializedMySQL engine is no longer maintained, DTS uses a `ReplacingMergeTree` table instead of a `MaterializedMySQL` table when synchronizing MySQL data to ApsaraDB for ClickHouse. This implementation creates a distributed table and a local `ReplacingMergeTree` table on each node of the ApsaraDB for ClickHouse cluster. ApsaraDB for ClickHouse then distributes data to each node through the distributed table. This approach can cause compatibility issues that may affect your business when migrating from a self-managed ClickHouse cluster to ApsaraDB for ClickHouse. The most common issues are as follows:

Issue 1: In a self-managed ClickHouse cluster, MaterializedMySQL synchronizes data to each shard. After migrating to the cloud using DTS, a `ReplacingMergeTree` table replaces the `MaterializedMySQL` table, and data is distributed to each node through a distributed table. This change affects `IN` and `JOIN` queries that are associated with the distributed table. For more information, see What do I do if an error occurs when I use a subquery on a distributed table?.

Issue 2: After migrating to the cloud and replacing the `MaterializedMySQL` table with a `ReplacingMergeTree` table, the data merging speed of the `ReplacingMergeTree` table may not be fast enough. This can result in more duplicate data in query results compared to the results from the self-managed cluster. You can use the following solutions to resolve this issue:

Solution 1: In ApsaraDB for ClickHouse, you can run the

SET global final=1command to merge data during queries. This parameter ensures that the query data is not duplicated, but it consumes more CPU and memory.Solution 2: In ApsaraDB for ClickHouse, you can modify the `min_age_to_force_merge_seconds` and `min_age_to_force_merge_on_partition_only` parameters of the target `ReplacingMergeTree` table. This makes the target table merge more frequently and prevents the generation of excessive duplicate data. The following is an example:

ALTER TABLE <AIM_TABLE> MODIFY SETTING min_age_to_force_merge_on_partition_only = 1, min_age_to_force_merge_seconds = 60;min_age_to_force_merge_on_partition_only

Description: This parameter is used to control the merge policy of the MergeTree table engine. When this parameter is set to 1, forced data merging in partitions is enabled.

Default value: 0 (Disabled)

min_age_to_force_merge_seconds

Description: The time interval for forcing the merging of parts.

Default value: 3600

Unit: seconds

SQL compatibility verification

You can install a Python environment.

This verification requires a basic Python 3 environment. We recommend that you use an Alibaba Cloud ECS instance that runs Linux for verification because it already includes a Python 3 environment. For more information about how to purchase an ECS instance, see Purchase an instance.

If you are not using an Alibaba Cloud ECS environment, you need to install a Python environment yourself. For more information about how to install a Python environment, see the Python official website.

You can install the Python client library for ClickHouse.

You can execute the following command in the terminal or command prompt.

pip3 install clickhouse_driverYou can verify that the server used for verification can communicate over the network with both the ApsaraDB for ClickHouse cluster and the self-managed ClickHouse cluster.

For more information about how to resolve network connectivity issues between the verification server and the ApsaraDB for ClickHouse cluster, see How do I resolve network connectivity issues between the target cluster and the data source?

You can run a Python script to extract SELECT requests from a self-managed ClickHouse cluster and run them on an ApsaraDB for ClickHouse cluster to verify its SQL compatibility with ApsaraDB for ClickHouse. The script is as follows:

from clickhouse_driver import connect import datetime import logging # pip3 install clickhouse_driver is required. # The VPC endpoint of the self-managed instance. host_old='HOST_OLD' # The TCP port of the self-managed instance. port_old=TCP_PORT_OLD # The username of the self-managed instance. user_old='USER_OLD' # The password for the user of the self-managed instance. password_old='PASSWORD_OLD' # The VPC endpoint of the ApsaraDB for ClickHouse cluster. host_new='HOST_NEW' # The TCP port of the ApsaraDB for ClickHouse cluster. port_new=TCP_PORT_NEW # The username of the ApsaraDB for ClickHouse cluster. user_new='USER_NEW' # The password for the user of the ApsaraDB for ClickHouse cluster. password_new='PASSWORD_NEW' # Configure logs. logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s') def create_connection(host, port, user, password): """Establish a connection to ClickHouse.""" return connect(host=host, port=port, user=user, password=password) def get_query_hashes(cursor): """Query the query_hash list of the last two days.""" get_queryhash_sql = ''' select distinct normalized_query_hash from system.query_log where type='QueryFinish' and `is_initial_query`=1 and `user` not in ('default', 'aurora') and lower(`query`) not like 'select 1%' and lower(`query`) not like 'select timezone()%' and lower(`query`) not like '%dms-websql%' and lower(`query`) like 'select%' and `event_time` > now() - INTERVAL 2 DAY; ''' cursor.execute(get_queryhash_sql) return cursor.fetchall() def get_sql_info(cursor, queryhash): """Query the SQL information for the specified query_hash in the last 2 days (search range is 3 days).""" get_sqlinfo_sql = f''' select `event_time`, `query_duration_ms`, `read_rows`, `read_bytes`, `memory_usage`, `databases`, `tables`, `current_database`, `query` from system.query_log where `event_time` > now() - INTERVAL 3 DAY and `type`='QueryFinish' and `normalized_query_hash`='{queryhash}' limit 1 ''' cursor.execute(get_sqlinfo_sql) sql_info = cursor.fetchone() if sql_info: return [info.strftime('%Y-%m-%d %H:%M:%S') if isinstance(info, datetime.datetime) else info for info in sql_info[:-1]],sql_info[-1] return None def execute_sql_on_new_db(cursor, current_database, query_sql, execute_failed_sql): """Execute the SQL query on the new node and record the failed SQL query.""" try: cursor.execute(f"USE {current_database};") cursor.execute(query_sql) except Exception as error: logging.error(f'query_sql execute in new db failed: {query_sql}') execute_failed_sql[query_sql] = error def main(): # Establish connections. conn_old = create_connection( host=host_old, port=port_old, user=user_old, password=password_old ) conn_new = create_connection( host=host_new, port=port_new, user=user_new, password=password_new ) # Create cursors. cursor_old = conn_old.cursor() cursor_new = conn_new.cursor() # Obtain the query_hash list from the old node. old_query_hashes = get_query_hashes(cursor_old) # Obtain SQL execution information from the old node. old_db_execute_dir = {} for queryhash in old_query_hashes: sql_info,query = get_sql_info(cursor_old, queryhash[0]) if sql_info: old_db_execute_dir[query] = sql_info # Close the cursor and connection to the old node. cursor_old.close() conn_old.close() # Execute the SQL queries on the new node. This is the most important verification step. execute_failed_sql = {} keys_list = list(old_db_execute_dir.keys()) for query_sql in old_db_execute_dir: position = keys_list.index(query_sql) current_database = old_db_execute_dir[query_sql][-1] logging.info(f"new db test the {position + 1}th/{len(old_db_execute_dir)}, running sql: {query_sql}\n") execute_sql_on_new_db(cursor_new, current_database, query_sql, execute_failed_sql) # Obtain the query_hash list from the new node. new_query_hashes = get_query_hashes(cursor_new) new_db_execute_dir = {} for queryhash in new_query_hashes: sql_info,query = get_sql_info(cursor_new, queryhash[0]) if sql_info: new_db_execute_dir[query] = sql_info # Close the cursor and connection to the new node. cursor_new.close() conn_new.close() # Print the SQL execution information for the nodes of the old and new versions. for query_sql in new_db_execute_dir: if query_sql in old_db_execute_dir: logging.info(f'succeed sql: {query_sql}') logging.info(f'old sql info: {old_db_execute_dir[query_sql]}') logging.info(f'new sql info: {new_db_execute_dir[query_sql]}\n') # Print the SQL queries that failed to execute. for query_sql in execute_failed_sql: logging.error('\033[31m{}\033[0m'.format(f'failed sql: {query_sql}')) logging.error('\033[31m{}\033[0m'.format(f'failed error: {execute_failed_sql[query_sql]}\n')) if __name__ == "__main__": main()You can verify the results and resolve any issues.

SQL compatibility verification is complex. If an error occurs, you must troubleshoot and resolve it based on the specific error message.

Performance analysis and optimization

Because your self-managed cluster is already running services, it is difficult to perform gradual cloud migration tests and performance optimization. After your services are switched to ApsaraDB for ClickHouse, the instance may experience issues such as full CPU utilization and insufficient memory. If the performance of your ApsaraDB for ClickHouse instance deteriorates after you switch to the ApsaraDB for ClickHouse service, you can use the following methods to quickly locate the problematic table or query and identify the performance bottleneck. If you already know which table or query is causing poor performance in the destination instance, you can skip to Step 3: Analyze SQL performance.

Step 1: Identify the key tables that cause the overall performance bottleneck

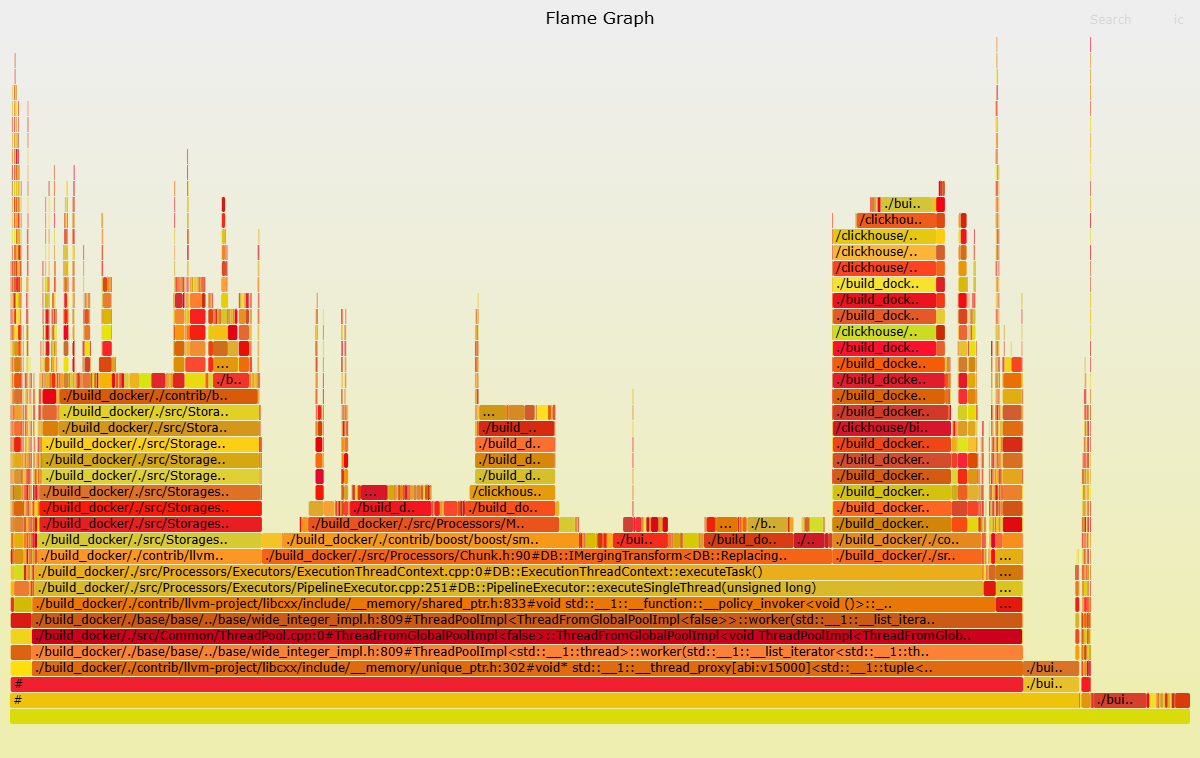

Flame graphs and `query_log` analysis are two methods for locating the key tables that cause performance issues. Creating a flame graph is more complex, but the information it provides is more intuitive. Analyzing the `query_log` table is simpler and does not require external tools, but you must analyze the information yourself. You can use both methods together.

Create a flame graph

You can export `trace_log`.

You can use `clickhouse-client` to log on to the ApsaraDB for ClickHouse instance. For more information about how to log on, see Connect to a ClickHouse cluster using the command-line interface.

You can run the following command to export `trace_log` and generate

cpu_trace_log.txt.-- trace_type = 'CPU' indicates CPU tracing. -- trace_type = 'Real' indicates real-time tracing. /clickhouse/bin/clickhouse-client -h <IP> --port <port> -q "SELECT arrayStringConcat(arrayReverse(arrayMap(x -> concat( addressToLine(x), '#', demangle(addressToSymbol(x)) ), trace)), ';') AS stack, count() AS samples FROM system.trace_log WHERE trace_type = 'CPU' and event_time >= '2025-01-08 19:31:00' and event_time < '2025-01-08 19:33:00' group by trace order by samples desc FORMAT TabSeparated settings allow_introspection_functions=1" > cpu_trace_log.txtThe following table describes the parameters.

Parameter

Description

IP

The VPC endpoint of ApsaraDB for ClickHouse.

port

The TCP port of ApsaraDB for ClickHouse.

In addition to the preceding parameters, you must also configure `event_time` to obtain trace logs for the target time period.

You can use `clickhouse-flamegraph` to create a flame graph.

You can install `clickhouse-flamegraph`. For more information about how to download and install it, see clickhouse-flamegraph.

You can run the following command to generate and analyze a flame graph.

cat cpu_trace_log.txt | flamegraph.pl > cpu_trace_log.svgIn the following flame graph, you can observe the functions that consume a large amount of CPU resources in the destination instance, such as the `ReplacingSortedMerge` function. Therefore, you can focus on the SQL queries on the `ReplacingMergeTree` table.

Analyze query_log

A table with `SELECT` requests that consumes a lot of CPU and memory does not necessarily indicate poor overall performance. It only suggests a higher probability of causing high CPU and memory usage. Therefore, you need to run the relevant commands on both the self-managed ClickHouse cluster and the ApsaraDB for ClickHouse cluster simultaneously to perform a TOP N comparison. After you identify the tables with significant differences, you need to locate the relevant `SELECT` statements. The following examples show how to locate tables with high CPU or memory usage.

Locate tables with CPU issues

SELECT

tables,

first_value(query),

count() AS cnt,

groupArrayDistinct(normalizedQueryHash(query)) AS normalized_query_hash,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS sum_user_cpu,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS sum_system_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS avg_user_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS avg_system_cpu,

sum(memory_usage) as sum_memory_usage,

avg(memory_usage) as avg_memory_usage

FROM clusterAllReplicas(default, system.query_log)

WHERE (event_time > '2025-01-08 19:30:00') AND (event_time < '2025-01-08 20:30:00') AND (query_kind = 'Select')

GROUP BY tables

ORDER BY sum_user_cpu DESC

LIMIT 5Locate tables with memory issues

SELECT

tables,

first_value(query),

count() AS cnt,

groupArrayDistinct(normalizedQueryHash(query)) AS normalized_query_hash,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS sum_user_cpu,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS sum_system_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS avg_user_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS avg_system_cpu,

sum(memory_usage) as sum_memory_usage,

avg(memory_usage) as avg_memory_usage

FROM clusterAllReplicas(default, system.query_log)

WHERE (event_time > '2025-01-08 19:30:00') AND (event_time < '2025-01-08 20:30:00') AND (query_kind = 'Select')

GROUP BY tables

ORDER BY sum_memory_usage

LIMIT 5Step 2: Identify the key SQL queries that cause the performance bottleneck

After you identify the table that is causing the performance bottleneck in the previous step, you can locate the specific SQL queries that are causing the issue. The following is an example.

Locate SQL queries that cause CPU issues

SELECT

tables,

first_value(query),

count() AS cnt,

normalizedQueryHash(query) AS normalized_query_hash,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS sum_user_cpu,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS sum_system_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS avg_user_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS avg_system_cpu,

sum(memory_usage) as sum_memory_usage,

avg(memory_usage) as avg_memory_usage

FROM clusterAllReplicas(default, system.query_log)

WHERE (event_time > '2025-01-08 19:30:00') AND (event_time < '2025-01-08 20:30:00') AND (query_kind = 'Select') AND has(tables, '<AIM_TABLE>')

GROUP BY

tables,

normalized_query_hash

ORDER BY sum_user_cpu DESC

LIMIT 5Locate SQL queries that cause memory issues

SELECT

tables,

first_value(query),

count() AS cnt,

normalizedQueryHash(query) AS normalized_query_hash,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS sum_user_cpu,

sum(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS sum_system_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'UserTimeMicroseconds')]) AS avg_user_cpu,

avg(ProfileEvents.Values[indexOf(ProfileEvents.Names, 'SystemTimeMicroseconds')]) AS avg_system_cpu,

sum(memory_usage) as sum_memory_usage,

avg(memory_usage) as avg_memory_usage

FROM clusterAllReplicas(default, system.query_log)

WHERE (event_time > '2025-01-08 19:30:00') AND (event_time < '2025-01-08 20:30:00') AND (query_kind = 'Select') AND has(tables, 'AIM_TABLE')

GROUP BY

tables,

normalized_query_hash

ORDER BY sum_memory_usage

LIMIT 5The following table describes the parameters:

`AIM_TABLE`: The table whose performance issues you want to locate.

In addition to the preceding parameter, you must also configure `event_time` to obtain data for the target time period.

Step 3: Analyze SQL performance

You can use the `EXPLAIN` statement and the `system.query_log` table to analyze the target statement.

You can use `EXPLAIN` to analyze the target SQL. For more information about the meaning of each field in the `EXPLAIN` output, see EXPLAIN.

EXPLAIN PIPELINE <SQL with performance issues>You can use the `system.query_log` table to analyze the target SQL.

SELECT hostname () AS host, * FROM clusterAllReplicas (`default`, system.query_log) WHERE event_time > '2025-01-18 00:00:00' AND event_time < '2025-01-18 03:00:00' AND initial_query_id = '<INITIAL_QUERY_ID>' AND type = 'QueryFinish' ORDER BY query_start_time_microsecondsThe following table describes the parameters.

`INITIAL_QUERY_ID`: The query ID of the target search statement.

In addition to the preceding parameter, you must also configure `event_time` to obtain data for the target time period.

You need to focus on the following returned fields:

ProfileEvents: This field provides detailed query execution event counters and statistics to help you analyze the performance and resource usage of the query for metric comparison. For more information about query events, see events.

Settings: This field provides the various configuration parameters that were used during query execution. These parameters can affect the behavior and performance of the query. By viewing this field, you can better understand and optimize the target SQL. For more information about query parameters, see settings.

query_duration_ms: This field indicates the total running time of the query. If you find that a subquery is particularly slow, you can use the subquery's `query_id` to find its execution details in the log file. For more information about how to modify the log level, see Configure config.xml parameters.

If none of the preceding methods help you locate the issue that is causing the target statement's performance to deteriorate, you can retrieve the relevant information for the statement from the self-managed ClickHouse cluster. Then, compare this information with the statement's information in ApsaraDB for ClickHouse to analyze the reason for the performance degradation after migrating to the cloud.

Step 4: Optimize SQL

After you locate the SQL that is causing the performance issue, you can optimize it accordingly. The following sections provide several optimization strategies. Specific issues must be analyzed and handled based on the actual situation.

Index optimization: You can analyze the target statement, locate the columns that are frequently used for filtering, and create an index for them.

Data types: Using appropriate data types can reduce storage space and improve query performance.

Query optimization:

Query column optimization: You can avoid using `SELECT *` and select only the required columns to reduce I/O and network transmission.

WHERE clause optimization: You can use primary keys and index fields in the `WHERE` clause.

Use PREWHERE: For complex filter conditions, you can use the

PREWHEREclause to filter data in advance and reduce the amount of data to be processed later.JOIN optimization: For distributed tables, you can use `GLOBAL JOIN` to improve `JOIN` performance.

Parameter setting optimization: You can optimize performance by adjusting query settings. For example, increasing

max_threadscan utilize more CPU cores, but be careful not to over-configure it and cause resource contention. For more information about query parameters, see settings. For more information about how to view the parameter settings in a query, see Step 3: Analyze SQL performance.Materialized views: For frequently executed complex queries, you can create materialized views to pre-calculate results and improve query performance.

Data compression: ClickHouse enables data compression by default. You can further optimize storage and query performance by adjusting the compression algorithm and level.

Configure a larger cache: For frequently executed queries whose results do not change often, ApsaraDB for ClickHouse uses a query cache to improve performance. If you find that the current cache is insufficient, you can modify the `uncompressed_cache_size` parameter to set a larger cache space. For more information about how to modify parameters, see Configure config.xml parameters.