Alibaba Cloud Linux 3 provides Shared Memory Communication (SMC), a high-performance network protocol that runs in kernel space. SMC uses Remote Direct Memory Access (RDMA) and works through the standard socket interface, so it can transparently accelerate the network communication of Transmission Control Protocol (TCP) applications. However, when you use SMC in a native Elastic Compute Service (ECS) environment, you must carefully maintain the SMC whitelist and the configurations in the network namespace of each related pod to prevent SMC from being unexpectedly downgraded to TCP. Service Mesh (ASM) provides the SMC optimization capability in a controllable network environment (that is, a cluster) and automatically optimizes the network communication between pods in an ASM instance, so you do not need to manage the SMC configuration yourself.

Prerequisites

Limits

Nodes must use ECS instances that support elastic Remote Direct Memory Access (eRDMA). For more information, see Configure eRDMA on an enterprise-level instance.

Nodes must use Alibaba Cloud Linux 3. For more information, see Alibaba Cloud Linux 3.

The version of your ASM instance is V1.23 or later. For more information about how to update an ASM instance, see Update an ASM instance.

Your Container Service for Kubernetes (ACK) cluster uses the Terway network plug-in. For more information, see Work with Terway.

Internet access to the API server of the ACK cluster is enabled. For more information, see Control public access to the API server of a cluster.

Procedure

Step 1: Initialize the related nodes

SMC uses the elastic RDMA interface (ERI) to accelerate network communications. Before you enable SMC, you must initialize the related nodes.

Upgrade the kernel version of Alibaba Cloud Linux 3 to 5.10.134-17.3 or later. For more information, see Change the kernel version.

Install the erdma-controller on the nodes and enable Shared Memory Communication over RDMA (SMC-R). For more information, see Use eRDMA to accelerate container networking.
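The kernel requirement in this step can be checked on a node before you continue. The following is a minimal sketch, not an official tool: the helper names `kernel_numeric` and `kernel_at_least` are hypothetical, and the script assumes that the kernel release string looks like `5.10.134-17.3.al8.x86_64`, with a distribution suffix after the numeric part.

```shell
# Minimum kernel release named in the step above.
required="5.10.134-17.3"

# Keep only the leading numeric "x.y.z-a.b" part of a kernel release string.
# Assumption: anything after that part (for example ".al8.x86_64") is a suffix.
kernel_numeric() {
  printf '%s\n' "$1" | sed -E 's/^([0-9]+(\.[0-9]+)*(-[0-9]+(\.[0-9]+)*)?).*/\1/'
}

# Succeed if release $1 is at least version $2 (relies on version sort, "sort -V").
kernel_at_least() {
  cur="$(kernel_numeric "$1")"
  lowest="$(printf '%s\n%s\n' "$cur" "$2" | sort -V | head -n 1)"
  [ "$lowest" = "$2" ]
}

if kernel_at_least "$(uname -r)" "$required"; then
  echo "kernel $(uname -r) meets the $required minimum"
else
  echo "kernel $(uname -r) is older than $required; upgrade it before you enable SMC"
fi
```

Run the script on each node that will host SMC-enabled pods. It only reports the result and does not change the system.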

Step 2: Deploy test applications

Enable automatic sidecar proxy injection for the default namespace, which is used in the following tests. For more information, see Enable automatic sidecar proxy injection.

Create a fortioserver.yaml file that contains the following content:

Use kubectl to connect to the ACK cluster based on the information in the kubeconfig file and then run the following command to deploy the test applications:

    kubectl apply -f fortioserver.yaml

Run the following command to view the status of the test applications:

    kubectl get pods | grep fortio

Expected output:

    NAME                            READY   STATUS    RESTARTS
    fortioclient-8569b98544-9qqbj   3/3     Running   0
    fortioserver-7cd5c46c49-mwbtq   3/3     Running   0

The output indicates that both test applications start as expected.
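If you prefer to script this readiness check instead of reading the output by eye, the following sketch parses the `kubectl get pods` output. The function name `all_pods_ready` is hypothetical, and the sketch assumes the column order shown in the expected output (NAME, READY, STATUS, RESTARTS).

```shell
# Succeed only if every pod line on stdin is Running with all containers ready.
all_pods_ready() {
  awk '
    $2 ~ /^[0-9]+\/[0-9]+$/ {        # skip the header and any non-pod line
      seen = 1
      split($2, r, "/")              # READY column, for example "3/3"
      if (r[1] != r[2] || $3 != "Running") bad = 1
    }
    END { exit (seen && !bad) ? 0 : 1 }
  '
}

# Usage against the ACK cluster:
#   kubectl get pods | grep fortio | all_pods_ready && echo "fortio pods are ready"
```

The function fails when no pod line is found, so an empty result from `grep` is not mistaken for success.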

Step 3: Run a test in the baseline environment and view the baseline test results

After the fortio application starts, the listening port 8080 is exposed. You can access this port to open the console page of the fortio application. To generate test traffic, you can map the port of the fortioclient application to a local port. Then, open the console page of the fortio application on your on-premises host.

Use kubectl to connect to the ACK cluster based on the information in the kubeconfig file and then run the following command to map port 8080 of the fortioclient application to the local port 8080.

kubectl port-forward service/fortioclient 8080:8080In the address bar of your browser, enter

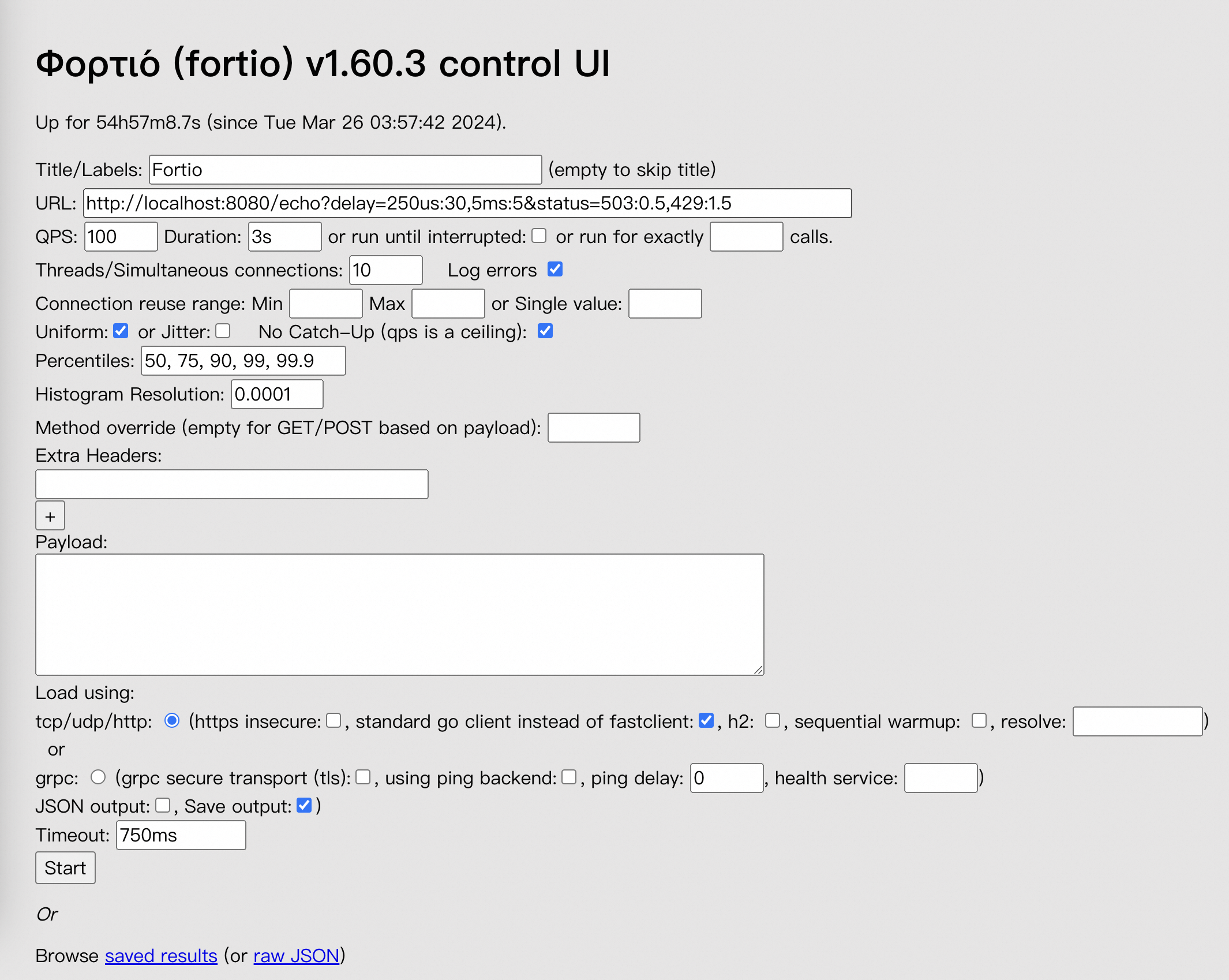

http://localhost:8080/fortioto access the console of the fortioclient application and modify related configurations.

Modify the parameter settings on the page shown in the preceding figure according to the following table.

| Parameter | Example |
| --- | --- |
| URL | http://fortioserver:8080/echo |
| QPS | 100000 |
| Duration | 30s |
| Threads/Simultaneous connections | 64 |
| Payload | Enter the following string (128 bytes): xhsyL4ELNoUUbC3WEyvaz0qoHcNYUh0j2YHJTpltJueyXlSgf7xkGqc5RcSJBtqUENNjVHNnGXmoMyILWsrZL1O2uordH6nLE7fY6h5TfTJCZtff3Wib8YgzASha8T8g |
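Because the payload is pasted by hand, it can be worth confirming its size before you start the test. The following sketch counts the bytes of the payload string given above. The helper names `byte_len` and `has_len` are hypothetical.

```shell
# Payload string copied from the parameter settings above.
payload='xhsyL4ELNoUUbC3WEyvaz0qoHcNYUh0j2YHJTpltJueyXlSgf7xkGqc5RcSJBtqUENNjVHNnGXmoMyILWsrZL1O2uordH6nLE7fY6h5TfTJCZtff3Wib8YgzASha8T8g'

# Print the byte length of a string (without a trailing newline being counted).
byte_len() {
  printf '%s' "$1" | wc -c | tr -d '[:space:]'
}

# Succeed if string $1 is exactly $2 bytes long.
has_len() {
  [ "$(byte_len "$1")" -eq "$2" ]
}

if has_len "$payload" 128; then
  echo "payload size matches the documented 128 bytes"
else
  echo "payload is $(byte_len "$payload") bytes; check for a truncated or padded paste"
fi
```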

After the configuration is complete, click Start in the lower part of the page to start the test. The test ends after the progress bar reaches the end.

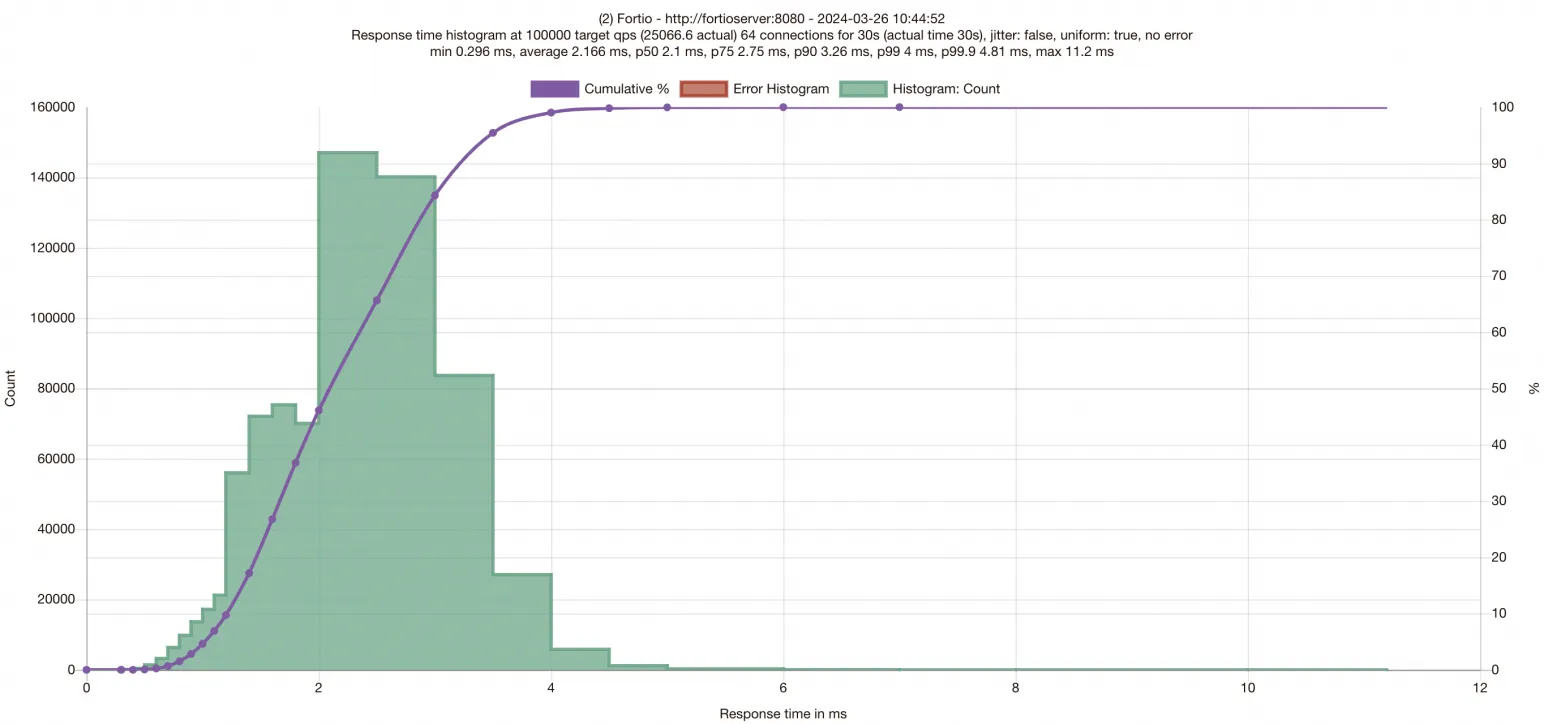

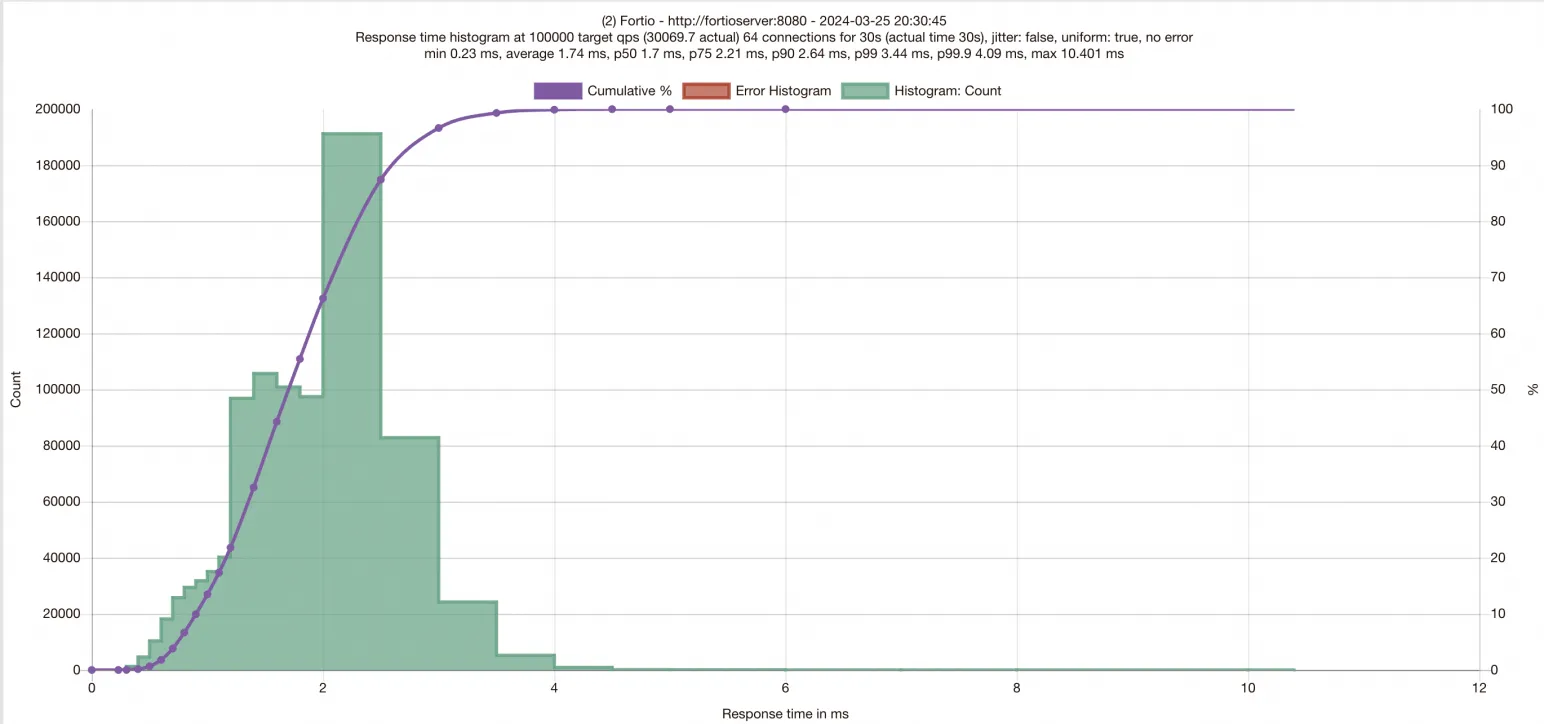

After the test ends, the results of the test are displayed on the page. The following figure is for reference only. Test results vary with test environments.

On the test results page, the x-axis shows request latency and the y-axis shows the number of processed requests, so the histogram shows how request latencies are distributed. The purple curve shows the number of requests processed within different response times. The P50, P75, P90, P99, and P99.9 latencies of requests are provided at the top of the histogram.

After you obtain the test data of the baseline environment, enable SMC for the applications and repeat the test to measure their performance with SMC enabled.

Step 4: Enable network communication acceleration based on SMC for your ASM instance and workloads

Use kubectl to connect to the ASM instance based on the information in the kubeconfig file. Then, run the following command to add the "smcEnabled: true" field to enable network communication acceleration based on SMC.

    $ kubectl edit asmmeshconfig

    apiVersion: istio.alibabacloud.com/v1beta1
    kind: ASMMeshConfig
    metadata:
      name: default
    spec:
      ambientConfiguration:
        redirectMode: ""
        waypoint: {}
        ztunnel: {}
      cniConfiguration:
        enabled: true
        repair: {}
      smcEnabled: true

Use kubectl to connect to the ACK cluster based on the information in the kubeconfig file. Then, run the following commands to modify the Deployments of the fortioserver and fortioclient applications and add the smc.asm.alibabacloud.com/enabled: "true" annotation to the pods in which the fortioserver and fortioclient applications reside.

After you enable SMC for the ASM instance, you need to further enable SMC for the workloads. To enable SMC for workloads, you can add the smc.asm.alibabacloud.com/enabled: "true" annotation to the related pods. You must enable SMC for workloads on both the client and server sides.

Modify the Deployment of the fortioclient application.

    $ kubectl edit deployment fortioclient

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      ......
      name: fortioclient
    spec:
      ......
      template:
        metadata:
          ......
          annotations:
            smc.asm.alibabacloud.com/enabled: "true"

Modify the Deployment of the fortioserver application.

    $ kubectl edit deployment fortioserver

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      ......
      name: fortioserver
    spec:
      ......
      template:
        metadata:
          ......
          annotations:
            smc.asm.alibabacloud.com/enabled: "true"
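As a non-interactive alternative to editing each Deployment by hand, the same annotation can be applied with `kubectl patch`. This is a sketch rather than the documented procedure: the `enable_smc` function and the `KUBECTL` and `SMC_PATCH` variables are hypothetical names, and the patch assumes that the annotation belongs under `spec.template.metadata.annotations`, as in the manifests above.

```shell
# kubectl binary to call; set KUBECTL=echo for a dry run that only prints the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Merge patch that sets the SMC annotation on the pod template.
SMC_PATCH='{"spec":{"template":{"metadata":{"annotations":{"smc.asm.alibabacloud.com/enabled":"true"}}}}}'

# Patch every Deployment named on the command line; stop at the first failure.
enable_smc() {
  for deploy in "$@"; do
    "$KUBECTL" patch deployment "$deploy" --type merge -p "$SMC_PATCH" || return 1
  done
}

# Usage against the ACK cluster (client and server sides both need the annotation):
#   enable_smc fortioclient fortioserver
```

Changing the pod template triggers a rolling restart of each Deployment, which matches the behavior of `kubectl edit`.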

Step 5: Run the test in the environment in which SMC is enabled and view the test results

Workloads are restarted after you modify the Deployments. Therefore, you must map the port of the fortioclient application to the local port again by referring to Step 3. Then, start the test again and wait until the test is complete.
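Port forwarding can fail if it is started while the pods are still restarting. One way to sequence the two actions is sketched below. The `reforward_fortio` function, the `KUBECTL` variable, and the 120-second timeout are illustrative assumptions, not values from the product documentation.

```shell
# kubectl binary to call; set KUBECTL=echo for a dry run that only prints the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Wait until both Deployments finish rolling out, then forward port 8080 again.
reforward_fortio() {
  for deploy in fortioclient fortioserver; do
    "$KUBECTL" rollout status "deployment/$deploy" --timeout=120s || return 1
  done
  "$KUBECTL" port-forward service/fortioclient 8080:8080
}

# Usage against the ACK cluster:
#   reforward_fortio
```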

Compare the results with the baseline results from Step 3. After SMC is enabled for the ASM instance, request latencies decrease and the queries per second (QPS) significantly increases.

FAQ

What do I do if I fail to access a database service after SMC is enabled?

This issue occurs when you access the database service through a load balancer that does not comply with the TCP specifications. Such a load balancer incorrectly identifies the TCP option of kind 254 that the SMC protocol uses.

You can perform the following steps to resolve this issue:

Install the aliyun-smc-extensions toolkit on the node:

    sudo yum install -y aliyun-smc-extensions

Check the communication link of the final URL by using aliyunsmc-check:

    aliyunsmc-check syn_check --url <url>

aliyunsmc-check can identify issues such as TCP option replay, conflict, and overlength on the link to the final URL. Sample output:

If TCP options are not properly processed when you access the URL of the service, we recommend that you disable SMC optimization when you access the pod of the service.