You can analyze the data on volume dashboards to identify issues caused by frequent I/O operations on clients. For example, frequent I/O operations and frequent metadata access on clients may slow down the system, and frequent access to files may consume a large amount of bandwidth. This topic describes how to use volume dashboards to identify these issues.

Prerequisites

A Container Service for Kubernetes (ACK) cluster whose Kubernetes version is later than 1.20 is created. The Container Storage Interface (CSI) plug-in is used as the volume plug-in. For more information, see Create an ACK managed cluster.

The versions of csi-plugin and csi-provisioner are later than 1.22.14-820d8870-aliyun. For more information about how to update csi-plugin and csi-provisioner, see Install and update the CSI plug-in.
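To check the version requirement programmatically, the following Python sketch compares the numeric part of a CSI image tag against 1.22.14. It assumes that tags follow the `vMAJOR.MINOR.PATCH-<hash>-aliyun` pattern of the baseline above; the function names are hypothetical. Because the commit-hash suffix cannot be ordered, a tag with the same numeric version as the baseline is treated as not later.

```python
import re
from typing import Tuple

# Minimum version from the prerequisites; installed versions must be later than this.
BASELINE = "1.22.14-820d8870-aliyun"

# Matches the numeric MAJOR.MINOR.PATCH part, with or without a leading "v".
_VERSION_RE = re.compile(r"v?(\d+)\.(\d+)\.(\d+)")


def numeric_version(image_or_tag: str) -> Tuple[int, int, int]:
    """Extract (major, minor, patch) from a tag or a full image reference."""
    tag = image_or_tag.rsplit(":", 1)[-1]  # drop "registry/repo:" if present
    match = _VERSION_RE.search(tag)
    if match is None:
        raise ValueError(f"unrecognized version tag: {image_or_tag!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def is_later_than_baseline(image_or_tag: str, baseline: str = BASELINE) -> bool:
    """Return True only if the numeric version is strictly greater than the baseline.

    The commit-hash suffix cannot be ordered, so a tag whose MAJOR.MINOR.PATCH
    equals the baseline is conservatively reported as not later.
    """
    return numeric_version(image_or_tag) > numeric_version(baseline)
```

To obtain the tag, you can read the images of the csi-plugin DaemonSet, for example with `kubectl -n kube-system get daemonset csi-plugin -o jsonpath='{.spec.template.spec.containers[*].image}'`. The DaemonSet name and namespace are assumptions about a default ACK installation. The command prints every container image in the pod template, so pass only the csi-plugin image to `is_later_than_baseline`.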

Background information

The following volume dashboards are displayed in the ACK console:

Container Storage IO Monitoring (Cluster Level): This dashboard shows the top N pods for each metric.

Pod IO Monitoring (Pod Level): This dashboard shows the metrics of each pod. You can switch between different pods.

OSS IO Monitoring (Cluster Level): This dashboard shows the metrics of each Object Storage Service (OSS) bucket. You can switch between different buckets.

Billing rules for CSI monitoring

After you enable CSI monitoring, the related plug-ins automatically report metrics to Managed Service for Prometheus. These metrics are treated as custom metrics, which may incur fees.

To avoid incurring additional fees, we recommend that you read and understand Billing overview before you enable this feature. The fees may vary based on the cluster size and number of applications. You can follow the steps in View resource usage to monitor and manage resource usage.

View volume dashboards

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, click the name of the cluster that you want to manage. In the left-side navigation pane, go to the Prometheus Monitoring page.

On the Prometheus Monitoring page, click the Storage Monitoring tab.

On the Storage Monitoring tab, click the dashboard that you want to view, such as OSS IO Monitoring (Cluster Level) or Pod IO Monitoring (Pod Level).

Identify the issues caused by frequent I/O operations of pods

The following examples analyze read operations.

Issue 1: Which I/O operations slow down the system when they become frequent?

If the number of read requests to a persistent volume claim (PVC) that is mounted to your application sharply increases, the application may be throttled or stop responding. To identify the issue, perform the following steps:

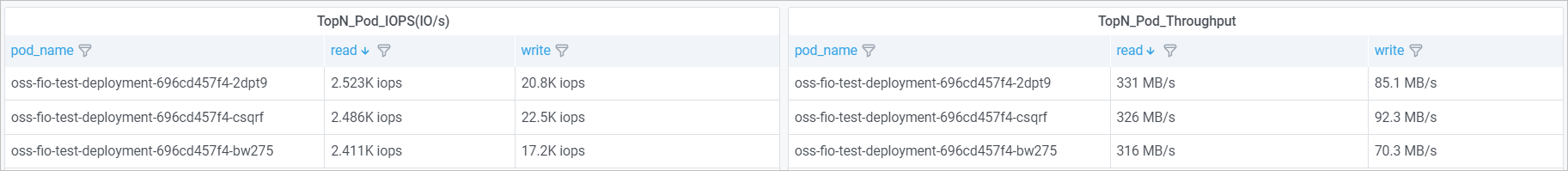

Go to the Container Storage IO Monitoring (Cluster Level) dashboard page. In the TopN_Pod_IOPS(IO/s) and TopN_Pod_Throughput(IO/s) panels, sort the pods by the read column to find the pod with the highest read IOPS and the pod with the highest read throughput.

The figure shows that the oss-fio-test-deployment-696cd457f4-2dpt9 pod has both the highest IOPS and the highest throughput.
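Sorting the two panels amounts to a descending top-N ranking on two different fields. The following Python sketch models it with a hypothetical `PodReadStats` record; in practice the values come from the dashboard, and the throughput unit is assumed to be bytes per second. Ranking on both fields matters because a pod that issues many small reads can top the IOPS list without topping the throughput list, and vice versa.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PodReadStats:
    """Hypothetical per-pod read metrics, as shown in the cluster-level panels."""
    pod: str
    read_iops: float        # read operations per second
    read_throughput: float  # read bytes per second (unit is an assumption)


def top_n(stats: List[PodReadStats], field: str, n: int = 5) -> List[str]:
    """Return the names of the n pods with the largest value of `field`."""
    ranked = sorted(stats, key=lambda s: getattr(s, field), reverse=True)
    return [s.pod for s in ranked[:n]]
```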

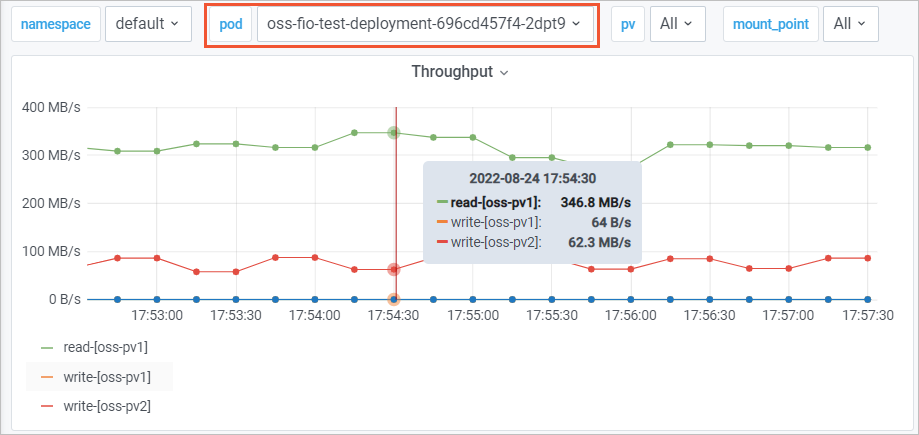

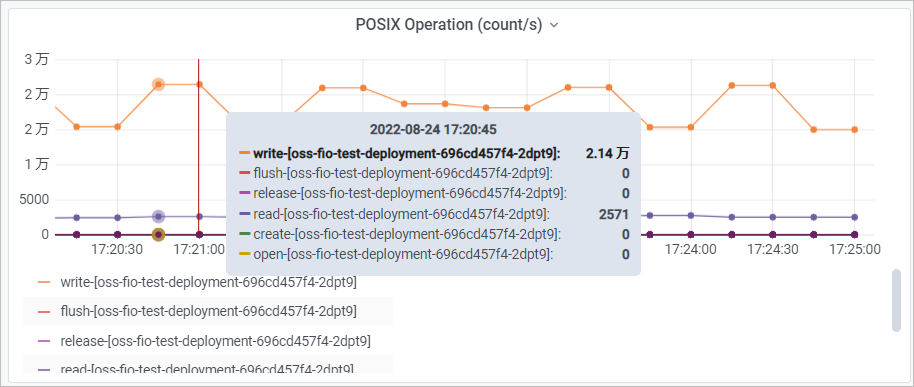

Go to the Pod IO Monitoring (Pod Level) dashboard page and select the oss-fio-test-deployment-696cd457f4-2dpt9 pod. The Throughput and POSIX Operation(count/s) panels show the throughput and the rate of Portable Operating System Interface (POSIX) operations of the selected pod.
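Dividing the throughput by the operation rate gives the average size of each operation, which helps distinguish many small reads from a few large ones. The following Python sketch assumes that both values are taken from the same time window of the Throughput and POSIX Operation(count/s) panels and that throughput is in bytes per second. The 64 KiB cut-off is a hypothetical threshold, not a value defined by ACK.

```python
# Hypothetical cut-off between "small" and "large" operations; tune it for your workload.
SMALL_IO_THRESHOLD_BYTES = 64 * 1024


def average_io_size(throughput_bytes_per_s: float, ops_per_s: float) -> float:
    """Average bytes per operation: throughput divided by operation rate."""
    if ops_per_s <= 0:
        return 0.0
    return throughput_bytes_per_s / ops_per_s


def classify_io_pattern(throughput_bytes_per_s: float, ops_per_s: float) -> str:
    """Label the access pattern of a pod from its throughput and operation rate."""
    if ops_per_s <= 0:
        return "idle"
    size = average_io_size(throughput_bytes_per_s, ops_per_s)
    if size < SMALL_IO_THRESHOLD_BYTES:
        return "many small operations"
    return "fewer large operations"
```

For example, 40 MiB/s at 10,240 operations per second averages 4 KiB per operation, which points to reducing the number of small requests rather than adding bandwidth.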

Return to the Clusters page, click the name of the cluster that you want to manage, and then go to the Pods page from the left-side navigation pane.

On the Pods page, click the pod named oss-fio-test-deployment-696cd457f4-2dpt9 in the Name column to go to the details page.

On the details page, you can find the image that the pod was deployed from and modify the pod configuration to reduce the frequent I/O operations and high throughput.

Issue 2: Which metadata requests slow down the system when they become frequent?

If the number of read requests to the metadata in an OSS bucket sharply increases, the application may be throttled or stop responding. To identify the issue, perform the following steps:

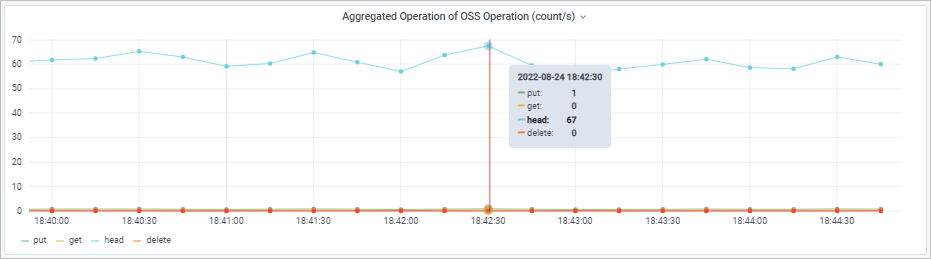

Go to the OSS IO Monitoring (Cluster Level) dashboard page and select the bucket that you want to check. In the Aggregated Operation of Operation(count/s) panel, you can view the top N pods by number of HEAD requests.

The figure shows that the oss--1 bucket receives a large number of HEAD requests.

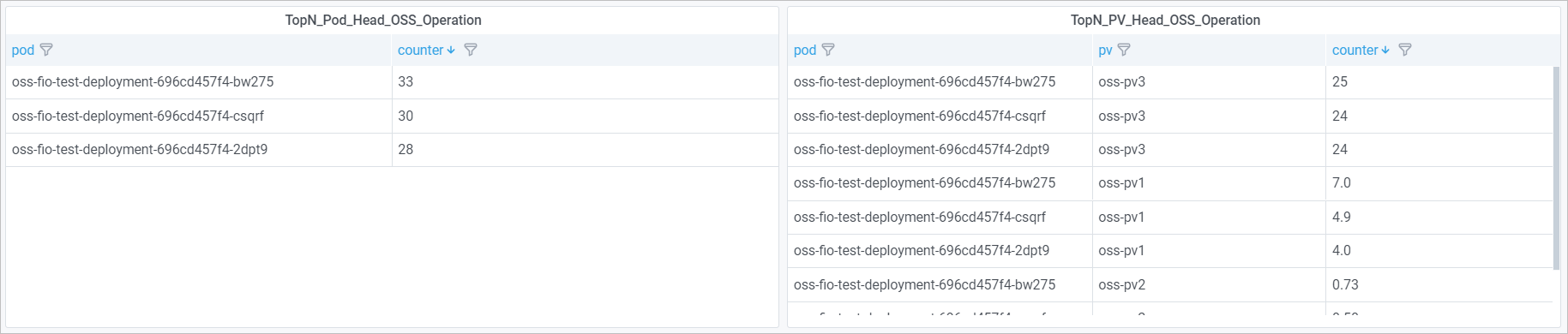

Go to the Container Storage IO Monitoring (Cluster Level) dashboard page. In the TopN_Pod_Head_OSS_Operation panel, sort the pods by the counter column to find the pod that sends the most HEAD requests. In the TopN_PV_Head_OSS_Operation panel, you can find the persistent volume (PV) that receives the most HEAD requests.

The figure shows that the oss-fio-test-deployment-696cd457f4-bw275 pod sends the most HEAD requests and the oss-pv3 PV receives the most HEAD requests.
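The two cluster-level panels aggregate HEAD request counts along two different keys: the pod that sends them and the PV that receives them. The following Python sketch shows that aggregation, assuming hypothetical `(pod, pv, operation, count)` records; the record shape is an illustration, not the actual metric format. The top pod and the top PV are computed independently, so they do not have to belong to the same mount.

```python
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical record shape: (pod, pv, operation, count)
Record = Tuple[str, str, str, int]


def head_hotspots(records: Iterable[Record]) -> Tuple[str, str]:
    """Return (pod with the most HEAD requests, PV with the most HEAD requests)."""
    by_pod: Counter = Counter()
    by_pv: Counter = Counter()
    for pod, pv, operation, count in records:
        if operation.upper() != "HEAD":
            continue  # only metadata (HEAD) requests are ranked here
        by_pod[pod] += count
        by_pv[pv] += count
    if not by_pod:
        raise ValueError("no HEAD requests in the input")
    return by_pod.most_common(1)[0][0], by_pv.most_common(1)[0][0]
```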

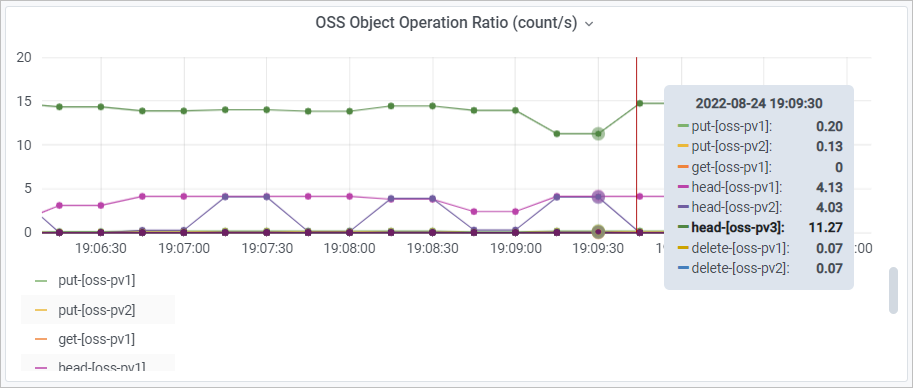

Go to the Pod IO Monitoring (Pod Level) dashboard page. Select the oss-fio-test-deployment-696cd457f4-bw275 pod. In the OSS Object Operation Ration(count/s) panel, you can view the I/O operations of the pod.
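An operation ratio expresses each operation type as a share of the pod's total request rate. The following Python sketch shows that calculation, assuming a hypothetical dictionary of per-operation rates keyed by operation name, such as `HEAD` and `GET`.

```python
from typing import Dict


def operation_ratios(ops_per_s: Dict[str, float]) -> Dict[str, float]:
    """Convert per-operation rates into fractions of the total rate."""
    total = sum(ops_per_s.values())
    if total == 0:
        return {op: 0.0 for op in ops_per_s}  # avoid dividing by zero for an idle pod
    return {op: rate / total for op, rate in ops_per_s.items()}
```

Because a HEAD request returns only object metadata, a HEAD share far above the GET share suggests that the pod spends most of its requests on metadata lookups rather than on reading data.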

Return to the Clusters page, click the name of the cluster that you want to manage, and then go to the Pods page from the left-side navigation pane.

On the Pods page, click the pod named oss-fio-test-deployment-696cd457f4-bw275 in the Name column to go to the details page.

On the details page, you can find the image that the pod was deployed from and modify the pod configuration to reduce the frequent HEAD requests and I/O operations.

Issue 3: How do I find the files and paths that are frequently accessed?

Go to the OSS IO Monitoring (Cluster Level) dashboard page, which shows the files and paths that are frequently accessed in an OSS bucket.

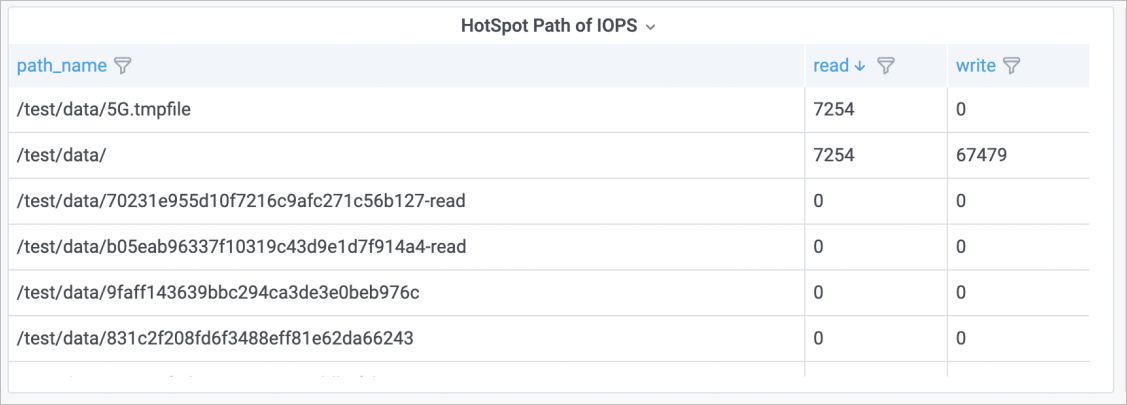

In the HotSpot Path of IOPS panel, sort the files and paths by the read column to find the file and path that are most frequently accessed in the bucket.

The figure shows that the /test/data/5G.tmpfile file and the /test/data path are most frequently accessed.
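The relationship between a hot file and a hot path can be reproduced by counting each access once for the file and once for its parent directory. The following Python sketch assumes a hypothetical list of accessed object paths. In this sketch a directory's count covers only its direct children, so the hottest directory does not always contain the hottest file.

```python
import posixpath
from collections import Counter
from typing import Iterable, Tuple


def hotspots(paths: Iterable[str]) -> Tuple[str, str]:
    """Return (most accessed file, most accessed parent directory).

    Each access is counted once for its file and once for the file's direct
    parent directory, so a directory's count is the sum over its direct children.
    """
    files: Counter = Counter()
    dirs: Counter = Counter()
    for path in paths:
        files[path] += 1
        dirs[posixpath.dirname(path)] += 1
    if not files:
        raise ValueError("no accesses in the input")
    return files.most_common(1)[0][0], dirs.most_common(1)[0][0]
```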

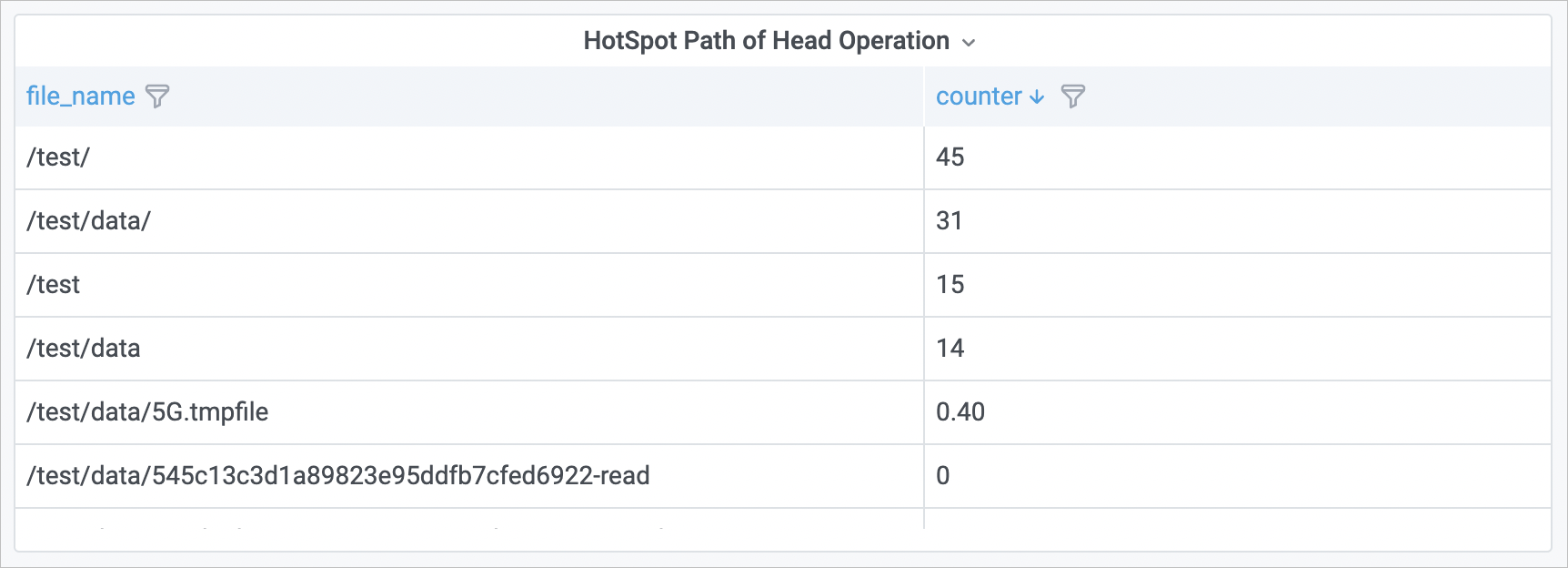

In the HotSpot Path of Head Operation panel, sort the paths by the read column to find the path that receives the most HEAD requests.

The figure shows that the /test/ path in the bucket receives the most HEAD requests.

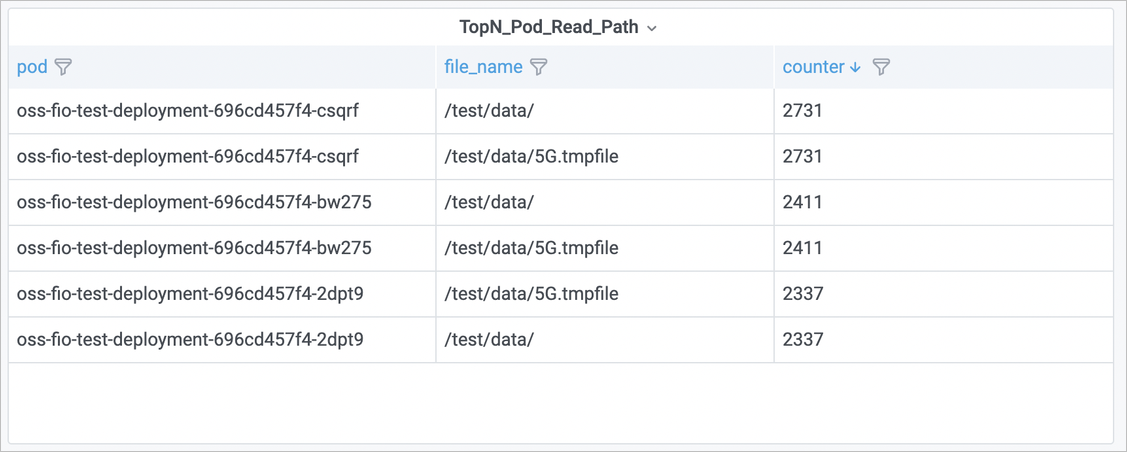

Go to the Container Storage IO Monitoring (Cluster Level) dashboard page. In the TopN_Pod_Read_Path panel, sort the pods by the counter column to find the pod that sends the most read requests to a single file.

The figure shows that the oss-fio-test-deployment-696cd457f4-csqrf pod sends the most requests to a file.
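After you identify a hot file, you can rank the pods that read it. The following Python sketch assumes hypothetical `(pod, path, count)` records similar to the rows of the TopN_Pod_Read_Path panel and sums the counts per pod for one path.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def pods_reading(records: Iterable[Tuple[str, str, int]], path: str) -> List[Tuple[str, int]]:
    """Rank pods by the number of read requests they sent to `path`, highest first."""
    totals: Dict[str, int] = defaultdict(int)
    for pod, read_path, count in records:
        if read_path == path:
            totals[pod] += count
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```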

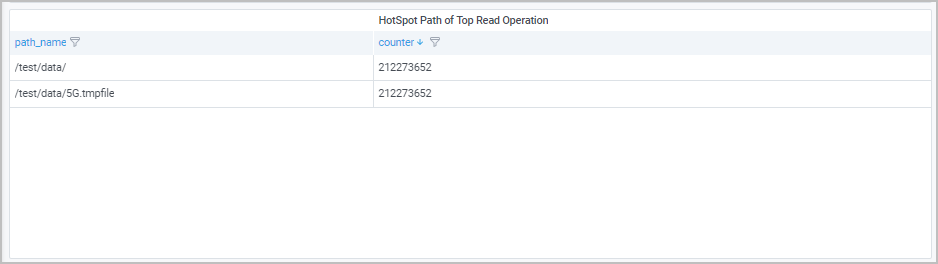

Go to the Pod IO Monitoring (Pod Level) dashboard page and select the oss-fio-test-deployment-696cd457f4-csqrf pod. In the HotSpot Path of Top Read Operation panel, sort the files and paths by the counter column to find the file and path that this pod accesses most frequently.

The figure shows that this pod most frequently accesses the /test/data/5G.tmpfile file and the /test/data path.

Issue 4: What do I do if the event center sends an alert notification that a volume failed to mount?

When a volume fails to mount, the event center sends you an alert notification. To find the persistent volume (PV) that failed to mount and identify the cause, perform the following steps:

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, click the name of the cluster that you want to manage. In the left-side navigation pane, go to the Event Center page.

On the Event Center page, click the Core Component Events tab. In the CSI section, you can view volume mount failures and the cause of the failures.
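As a command-line complement to the event center, you can look for Kubernetes events whose reason is `FailedMount`, which the kubelet records when it cannot mount a volume for a pod. The following Python sketch assumes that its input is the JSON printed by `kubectl get events -A -o json`; the function name is hypothetical, and this check supplements the console steps rather than replacing them.

```python
import json
from typing import List, Tuple


def failed_mounts(events_json: str) -> List[Tuple[str, str, str]]:
    """Return (namespace, object name, message) for every FailedMount event."""
    items = json.loads(events_json).get("items", [])
    results = []
    for event in items:
        if event.get("reason") != "FailedMount":
            continue  # skip unrelated events such as Scheduled or Pulled
        obj = event.get("involvedObject", {})
        results.append((obj.get("namespace", ""), obj.get("name", ""), event.get("message", "")))
    return results
```

You can also filter on the server side with `kubectl get events -A --field-selector reason=FailedMount`; the message of each event usually names the volume and the mount error.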