The Advanced Horizontal Pod Autoscaler (AHPA) component can predict GPU resource requests based on the GPU utilization data obtained from Prometheus Adapter, historical load trends, and prediction algorithms. AHPA can automatically adjust the number of pod replicas or the allocation of GPU resources so that scale-out operations are completed before GPU resources run short. It also performs scale-in operations when idle resources exist to reduce costs and improve cluster resource utilization.

Prerequisites

An ACK managed cluster that contains GPU-accelerated nodes is created. For more information, see Create an ACK cluster with GPU-accelerated nodes.

AHPA is installed and data sources are configured to collect metrics. For more information, see AHPA overview.

Managed Service for Prometheus is enabled and has collected application statistics for at least seven days. The statistics include details of the GPU resources that the application uses. For more information, see Managed Service for Prometheus.

How it works

In high-performance computing fields, particularly in deep learning scenarios such as model training and model inference, fine-grained management and dynamic adjustment of GPU resource allocation can improve resource utilization and reduce costs. Container Service for Kubernetes (ACK) supports auto scaling based on GPU metrics. You can use Managed Service for Prometheus to collect key metrics such as real-time GPU utilization and memory usage. Then, you can use Prometheus Adapter to convert these metrics to metrics that Kubernetes can recognize and integrate them with AHPA. AHPA predicts GPU resource requests based on this data, historical load trends, and prediction algorithms. AHPA can automatically adjust the number of pod replicas or the allocation of GPU resources so that scale-out operations are completed before resources run short. It also performs scale-in operations when idle resources exist to reduce costs and improve cluster efficiency.

Step 1: Deploy Metrics Adapter

Obtain the internal HTTP API endpoint of the Prometheus instance that is used by your cluster.

Log on to the ARMS console.

In the left-side navigation pane, choose .

At the top of the Instances page, select the region where the ACK cluster is located.

Click the name of the Managed Service for Prometheus instance. In the left-side navigation pane of the instance details page, click Settings and record the internal endpoint in the HTTP API URL section.

Deploy ack-alibaba-cloud-metrics-adapter.

Log on to the ACK console. In the left-side navigation pane, choose .

On the Marketplace page, click the App Catalog tab. Find and click ack-alibaba-cloud-metrics-adapter.

In the upper-right corner of the ack-alibaba-cloud-metrics-adapter page, click Deploy.

On the Basic Information wizard page, specify Cluster and Namespace, and click Next.

On the Parameters wizard page, set the Chart Version parameter and specify the internal HTTP API endpoint that you obtained in Step 1 as the value of the prometheus.url parameter in the Parameters section. Then, click OK.
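Before you deploy the adapter, it can help to confirm that the recorded endpoint answers Prometheus queries. The following Python sketch assumes that the HTTP API URL accepts the standard Prometheus instant-query path /api/v1/query appended to it. The function names and the placeholder endpoint are illustrative and are not part of ACK or ARMS. The sketch only builds the query URL and parses a response body, so you can fetch the URL with any HTTP client from a machine that can reach the internal endpoint.

```python
import json
from typing import List
from urllib.parse import urlencode


def build_instant_query_url(http_api_url: str, promql: str) -> str:
    """Append the standard Prometheus instant-query path to the endpoint.

    Assumption: the HTTP API URL recorded in the ARMS console accepts the
    usual /api/v1/query suffix.
    """
    base = http_api_url.rstrip("/")
    return f"{base}/api/v1/query?{urlencode({'query': promql})}"


def extract_vector_values(response_text: str) -> List[float]:
    """Return the sample values from a Prometheus instant-query response."""
    payload = json.loads(response_text)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    # Each vector sample has the form {"metric": {...}, "value": [timestamp, "value"]}.
    return [float(sample["value"][1]) for sample in payload["data"]["result"]]


if __name__ == "__main__":
    # Hypothetical placeholder; replace it with the endpoint that you recorded.
    endpoint = "http://prometheus.example.internal:9090/placeholder"
    # "up" is the built-in Prometheus target-health metric, used here as a probe.
    print(build_instant_query_url(endpoint, "up"))
```

A response whose status field is success indicates that the endpoint is reachable and serves queries. GPU metric names depend on the exporter that runs in your cluster, so the probe above deliberately uses the generic up metric.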

Step 2: Use AHPA to perform predictive scaling based on GPU metrics

In this example, an inference service is deployed. Then, requests are sent to the inference service to check whether AHPA can perform predictive scaling based on GPU metrics.

Deploy an inference service.

Run the following command to deploy the inference service:

Run the following command to query the status of the pods:

    kubectl get pods -o wide

Expected output:

    NAME                                    READY   STATUS    RESTARTS   AGE     IP           NODE                        NOMINATED NODE   READINESS GATES
    bert-intent-detection-7b486f6bf-f****   1/1     Running   0          3m24s   10.15.1.17   cn-beijing.192.168.94.107   <none>           <none>

Run the following command to send requests to the inference service and check whether the service is deployed.

You can run the kubectl get svc bert-intent-detection-svc command to query the IP address of the GPU-accelerated node on which the inference service is deployed. Then, replace 47.95.XX.XX in the following command with the IP address that you obtained:

    curl -v "http://47.95.XX.XX/predict?query=Music"

Expected output:

    *   Trying 47.95.XX.XX...
    * TCP_NODELAY set
    * Connected to 47.95.XX.XX (47.95.XX.XX) port 80 (#0)
    > GET /predict?query=Music HTTP/1.1
    > Host: 47.95.XX.XX
    > User-Agent: curl/7.64.1
    > Accept: */*
    >
    * HTTP 1.0, assume close after body
    < HTTP/1.0 200 OK
    < Content-Type: text/html; charset=utf-8
    < Content-Length: 9
    < Server: Werkzeug/1.0.1 Python/3.6.9
    < Date: Wed, 16 Feb 2022 03:52:11 GMT
    <
    * Closing connection 0
    PlayMusic # The query result.

If the HTTP status code 200 and the query result are returned, the inference service is deployed.
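The same check can be scripted. The following Python sketch sends the request from the curl example and returns the status code and the response body. The helper names are illustrative, and the fetch parameter exists only so that the HTTP call can be replaced in tests. The sketch assumes that the service listens on port 80 and returns a UTF-8 text body, as the expected output above suggests.

```python
from typing import Callable, Tuple
from urllib.parse import urlencode
from urllib.request import urlopen


def build_predict_url(host: str, query: str) -> str:
    """Build the /predict URL used in the curl example."""
    return f"http://{host}/predict?{urlencode({'query': query})}"


def check_inference_service(
    host: str,
    query: str = "Music",
    fetch: Callable = urlopen,
    timeout: float = 5.0,
) -> Tuple[int, str]:
    """Send one request and return (HTTP status code, response body)."""
    with fetch(build_predict_url(host, query), timeout=timeout) as response:
        body = response.read().decode("utf-8").strip()
        return response.status, body


if __name__ == "__main__":
    # Replace 47.95.XX.XX with the IP address that you obtained.
    status, body = check_inference_service("47.95.XX.XX")
    print(status, body)
```

A status code of 200 together with a non-empty body corresponds to the successful result described above. Note that urlopen raises an exception for HTTP error responses and unreachable hosts instead of returning a status code.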

Configure AHPA.

In this example, AHPA is configured to scale pods when the GPU utilization of the pod exceeds 20%.

Configure data sources to collect metrics for AHPA.

Create a file named application-intelligence.yaml and copy the following content to the file.

Set the prometheusUrl parameter to the internal endpoint of the Prometheus instance that you obtained in Step 1.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: application-intelligence
      namespace: kube-system
    data:
      prometheusUrl: "http://cn-shanghai-intranet.arms.aliyuncs.com:9090/api/v1/prometheus/da9d7dece901db4c9fc7f5b*******/1581204543170*****/c54417d182c6d430fb062ec364e****/cn-shanghai"

Run the following command to deploy application-intelligence:

    kubectl apply -f application-intelligence.yaml
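If you manage several clusters, you may prefer to generate this ConfigMap instead of editing the endpoint by hand. The following Python sketch renders the same ConfigMap as a JSON manifest, which kubectl apply also accepts. The function name and the output file name are illustrative, and the URL check is only a basic sanity test, not a validation of the ARMS endpoint format.

```python
import json


def render_application_intelligence(prometheus_url: str) -> str:
    """Render the application-intelligence ConfigMap as a JSON manifest."""
    if not prometheus_url.startswith(("http://", "https://")):
        raise ValueError("prometheus_url must start with http:// or https://")
    manifest = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {
            "name": "application-intelligence",
            "namespace": "kube-system",
        },
        "data": {"prometheusUrl": prometheus_url},
    }
    return json.dumps(manifest, indent=2)


if __name__ == "__main__":
    # Hypothetical placeholder; use the internal endpoint that you recorded.
    url = "http://prometheus.example.internal:9090/placeholder"
    with open("application-intelligence.json", "w", encoding="utf-8") as handle:
        handle.write(render_application_intelligence(url))
```

You can then apply the generated file with kubectl apply -f application-intelligence.json.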

Deploy AHPA.

Create a file named fib-gpu.yaml and copy the following content to the file.

In this example, the observer mode is used. For more information about the parameters related to AHPA, see Parameters.

Run the following command to deploy AHPA:

    kubectl apply -f fib-gpu.yaml

Run the following command to query the status of AHPA:

    kubectl get ahpa

Expected output:

    NAME      STRATEGY   REFERENCE               METRIC   TARGET(%)   CURRENT(%)   DESIREDPODS   REPLICAS   MINPODS   MAXPODS   AGE
    fib-gpu   observer   bert-intent-detection   gpu      20          0            0             1          10        50        6d19h

In the output, 0 is returned in the CURRENT(%) column and 20 is returned in the TARGET(%) column. This indicates that the current GPU utilization is 0% and pod scaling will be triggered if the GPU utilization exceeds 20%.
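To compare the CURRENT(%) and TARGET(%) columns in a script, you can parse the table that kubectl get ahpa prints. The following Python sketch is a minimal example that assumes whitespace-separated columns with no empty or multi-word values, as in the output above. The function names are illustrative and are not part of AHPA.

```python
from typing import Dict, List


def parse_ahpa_status(table_text: str) -> List[Dict[str, str]]:
    """Parse the table printed by `kubectl get ahpa` into one dict per row."""
    lines = [line for line in table_text.splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty output")
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        values = line.split()
        if len(values) != len(header):
            raise ValueError(f"unexpected column count in row: {line!r}")
        rows.append(dict(zip(header, values)))
    return rows


def exceeds_target(row: Dict[str, str]) -> bool:
    """Return True if the current GPU utilization is above the target."""
    return float(row["CURRENT(%)"]) > float(row["TARGET(%)"])


if __name__ == "__main__":
    sample = """
NAME      STRATEGY   REFERENCE               METRIC   TARGET(%)   CURRENT(%)   DESIREDPODS   REPLICAS   MINPODS   MAXPODS   AGE
fib-gpu   observer   bert-intent-detection   gpu      20          0            0             1          10        50        6d19h
"""
    for row in parse_ahpa_status(sample):
        print(row["NAME"], "above target:", exceeds_target(row))
```

For the output shown above, the helper reports that the utilization is not above the target, which matches the idle state of the service before any load is applied.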

Test auto scaling on the inference service.

Run the following command to access the inference service:

Run the following command to query the status of AHPA:

kubectl get ahpaExpected output:

NAME STRATEGY REFERENCE METRIC TARGET(%) CURRENT(%) DESIREDPODS REPLICAS MINPODS MAXPODS AGE fib-gpu observer bert-intent-detection gpu 20 189 10 4 10 50 6d19hThe output shows that the current GPU utilization (

CURRENT(%)) is higher than the scaling threshold (TARGET(%)). Therefore, pod scaling is triggered and the expected number of pods is10, which is the value returned in theDESIREDPODScolumn.Run the following command to query the prediction results:

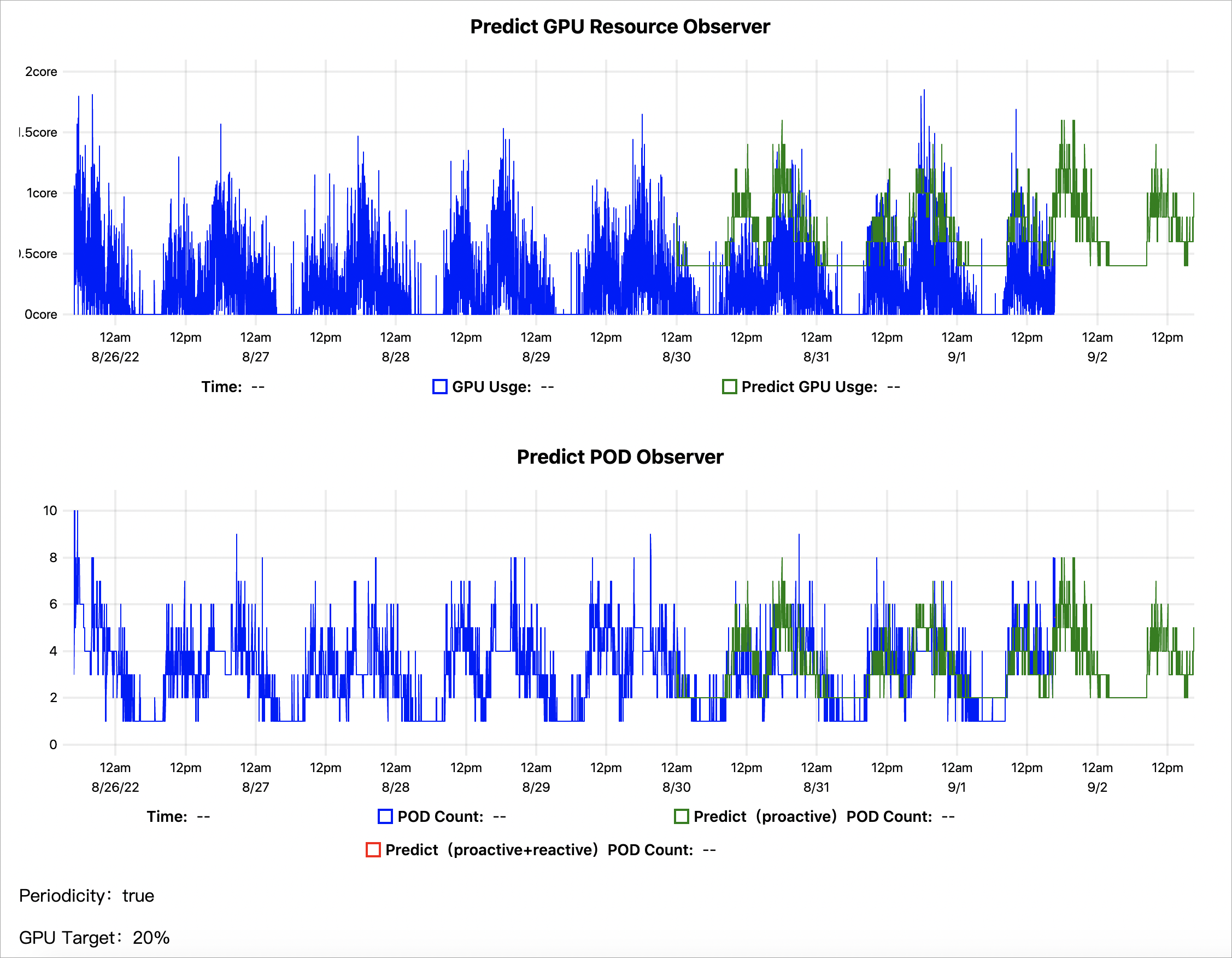

kubectl get --raw '/apis/metrics.alibabacloud.com/v1beta1/namespaces/default/predictionsobserver/fib-gpu'|jq -r '.content' |base64 -d > observer.htmlThe following figures show the prediction results of GPU utilization based on historical data within the last seven days.

Predict GPU Resource Observer: The actual GPU utilization is represented by a blue line. The GPU utilization predicted by AHPA is represented by a green line. You can find that the predicted GPU utilization is higher than the actual GPU utilization.

Predict POD Observer: The actual number of pods that are added or deleted in scaling events is represented by a blue line. The number of pods that AHPA predicts to be added or deleted in scaling events is represented by a green line. You can find that the predicted number of pods is less than the actual number of pods. You can set the scaling mode to auto and configure other settings based on the predicted number of pods. This way, AHPA can save pod resources.

The results show that AHPA can use predictive scaling to handle fluctuating workloads as expected. After you confirm the prediction results, you can set the scaling mode to auto, which allows AHPA to automatically scale pods.
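If jq or base64 is not available on your workstation, the decoding part of the prediction query can be reproduced in Python. The following sketch assumes that the raw API response is a JSON object whose content field holds the base64-encoded HTML report, as the jq filter in the command above implies, and that the report is UTF-8 text. The function names are illustrative.

```python
import base64
import json


def decode_prediction_report(raw_json: str) -> str:
    """Decode the base64-encoded HTML report from the raw API response."""
    payload = json.loads(raw_json)
    return base64.b64decode(payload["content"]).decode("utf-8")


def save_prediction_report(raw_json: str, path: str = "observer.html") -> str:
    """Write the decoded report to a file and return the file path."""
    html = decode_prediction_report(raw_json)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(html)
    return path
```

To use the sketch, save the output of the kubectl get --raw command to a file, read the file as text, and pass the text to save_prediction_report. You can then open observer.html in a browser.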

References

Knative allows you to use AHPA in serverless Kubernetes (ASK) clusters. If your application requests resources in a periodic pattern, you can use AHPA to predict changes in resource requests and prefetch resources for scaling activities. This reduces the impact of cold starts when your application is scaled. For more information, see Use AHPA to enable predictive scaling in Knative.

In some scenarios, you may need to scale applications based on custom metrics, such as the QPS of HTTP requests or message queue length. AHPA provides the External Metrics mechanism that can work with the alibaba-cloud-metrics-adapter component to allow you to scale applications based on custom metrics. For more information, see Use AHPA to configure custom metrics for application scaling.