After enabling the managed node pool feature, you can turn on node auto-repair. Alibaba Cloud Container Service for Kubernetes (ACK) will then automatically monitor node health and trigger self-healing tasks when issues are detected. This helps simplify node operations and maintenance. However, due to the complexity of potential failures, auto-repair cannot resolve all fault scenarios. Some severe or complex issues may still require manual intervention.

How it works

The workflow for fault detection, notification, and node auto repair is as follows:

Fault diagnosis and detection

ACK uses the ack-node-problem-detector (NPD) add-on to check for node exceptions. If a node becomes unhealthy and remains in that state for a specified period, ACK considers the node to have failed.

Fault notification

When a fault is detected, ACK generates a node condition and a Kubernetes event. Configure alerts in the Event Center to receive notifications.

(For exclusive GPUs) Fault isolation

After a GPU exception is detected, ACK isolates the faulty GPU card.

For more information about GPU fault detection and automatic isolation, see GPU exception detection and automatic isolation.

Node auto repair process

System and Kubernetes add-on exceptions

Node instance exceptions

ACK repairs the faulty system and Kubernetes add-ons. For example, it may restart the kubelet or the container runtime.

If Reboot Node on System/Kubernetes Component Failure is allowed and the initial repair actions fail, ACK takes the following steps:

ACK automatically marks the faulty node as unschedulable.

ACK drains the faulty node that requires a restart.

ACK restarts the node.

When the node's status returns to normal, ACK makes the node schedulable again.

For a detailed description of the process, see System and Kubernetes add-on anomalies.

ACK automatically adds a taint to the faulty node.

If Repair Nodes Only After Acquiring Permissions is enabled, ACK waits for your authorization before proceeding with the next steps.

ACK drains the faulty node that requires a restart or replacement.

ACK performs a repair action, such as restarting the node or initiating a hardware repair. The node status changes to Repairing.

(For exclusive GPUs) When the GPU card's status returns to normal, ACK removes its isolation.

When the node's status returns to normal, ACK removes the taint.

For a detailed description of the process, see Node instance anomalies.

If a cluster contains multiple node pools, ACK repairs them serially, one node pool at a time.

If a node pool contains multiple unhealthy nodes, ACK repairs them serially, one by one. If a node fails to heal, ACK stops the auto repair process for all other faulty nodes in that node pool.

Before you begin

This feature requires the Event Center to receive alerts for node pool events and the ack-node-problem-detector add-on to detect node exceptions. For more information, see Event monitoring.

This feature is available only for ACK managed clusters and is supported for managed node pools and Lingjun node pools.

The following features are being released in phases and may have different rollout schedules. To use them, submit a ticket to request access.

Auto repair for node instance exceptions: This is on an allowlist basis.

Node auto repair for Lingjun node pools: This is on an allowlist basis.

Alert rule sets: After enabling node auto repair, we recommend enabling alert management and activating the Cluster Node auto repair Alert Rule Set and Cluster GPU Monitoring Alert Rule Set. This ensures you receive alerts when an exception occurs. The corresponding rule sets are in a phased release and may not be visible yet.

To learn how to enable the rule sets, see Container Service Alert Management.

NPD version: auto repair for node instance exceptions requires NPD version 1.2.26 or later. Version 1.2.26 is currently in a phased release.

Configure node auto repair

Enable and configure node auto repair for a new or existing node pool through its managed configuration. Node pools and Lingjun node pools have similar steps. The following steps use a standard node pool as an example.

New node pools

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster to manage and click its name. In the left navigation pane, choose .

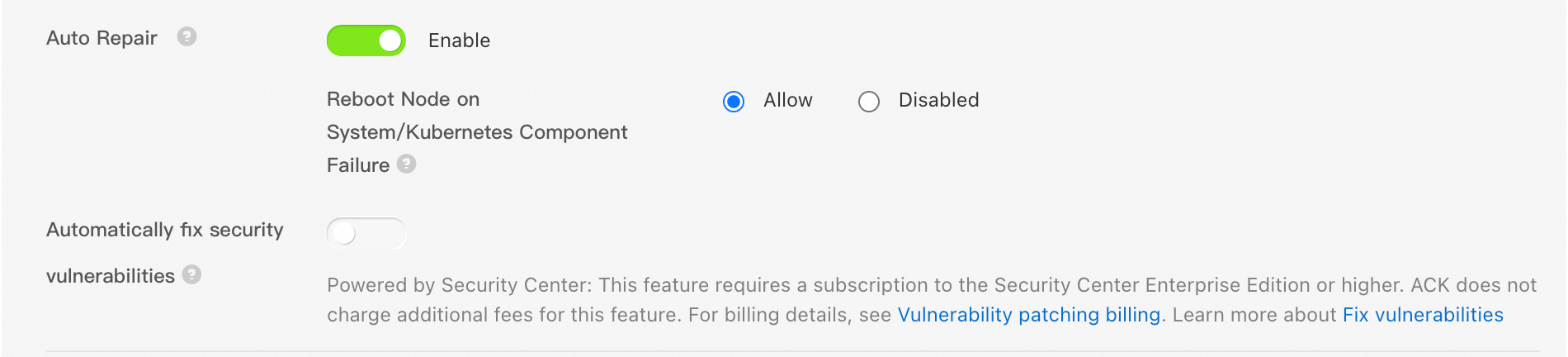

On the Node Pools page, click Create Node Pool. In the Configure Managed Node Pool section, select Custom Node Management. Enable Auto Repair and configure the repair option Reboot Node on System/Kubernetes Component Failure. Follow the on-screen instructions to complete the creation of the node pool.

For a complete description of the configuration options, see Create and manage a node pool. For important considerations regarding node restarts and authorization, see the sections below.

Existing node pools

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster to manage and click its name. In the left navigation pane, choose .

In the node pool list, find the target node pool. In the Actions column, click

> Enable Managed Node Pool (for a regular node pool) or Configure Managed Node Pool (for a managed node pool). In the Configure Managed Node Pool section, select Custom Node Management. Enable Auto Repair and configure the repair option Reboot Node on System/Kubernetes Component Failure. Follow the on-screen instructions to submit the configuration.

> Enable Managed Node Pool (for a regular node pool) or Configure Managed Node Pool (for a managed node pool). In the Configure Managed Node Pool section, select Custom Node Management. Enable Auto Repair and configure the repair option Reboot Node on System/Kubernetes Component Failure. Follow the on-screen instructions to submit the configuration.

For a complete description of the configuration options, see Create and manage a node pool. For important considerations regarding node restarts and authorization, see the sections below.

System and Kubernetes add-on anomalies

Repair process

ACK initiates a repair task based on information such as the node's condition. Run the kubectl describe node command to view the node's status in the condition field.

When ACK detects a system or Kubernetes add-on exception that persists beyond a specified threshold, it automatically starts the repair process, which for this scenario is as follows:

ACK attempts to repair the faulty system and Kubernetes add-ons. For example, it may restart the kubelet or the container runtime.

If Reboot Node on System/Kubernetes Component Failure is allowed and the initial repair actions fail, ACK takes the following steps:

ACK automatically marks the faulty node as unschedulable.

ACK drains the faulty node that requires a restart. The drain operation times out after 30 minutes.

When draining a node, ACK evicts the pods while respecting any configured Pod Disruption Budgets (PDBs). To ensure high service availability, we recommend deploying your workloads with multiple replicas across different nodes. Also, configure PDBs for critical services to control concurrent disruptions.

If the drain fails, ACK still proceeds with the subsequent steps.

ACK restarts the node.

When the node's status returns to normal, ACK makes the node schedulable again.

If a node was already unschedulable before the process began, ACK will not automatically make it schedulable after the repair.

Node conditions that trigger auto repair

Node condition | Description | Risk level | Threshold | repair action |

KubeletNotReady(KubeletHung) | The kubelet has stopped unexpectedly, causing the node to report a | High | 180s | 1. Restart the kubelet. |

KubeletNotReady(PLEG) | The PLEG health check has failed, causing the node to report a | Medium | 180s | 1. Restart containerd or Docker. |

KubeletNotReady(SandboxError) | PodSandbox not found, preventing the kubelet from starting correctly. | High | 180s | 1. Delete the corresponding sandbox container. |

RuntimeOffline | containerd or Docker has stopped, making the node unavailable. | High | 90s | 1. Restart containerd or Docker. |

NTPProblem | The time synchronization service (ntpd or chronyd) is abnormal. | High | 10s | Restart ntpd or chronyd. |

SystemdOffline | The Systemd state is abnormal, preventing containers from being started or stopped. | High | 90s | If Reboot Node on System/Kubernetes Component Failure is allowed, restart the ECS instance. |

ReadonlyFilesystem | The node's file system has become read-only. | High | 90s | If Reboot Node on System/Kubernetes Component Failure is allowed, restart the ECS instance. |

Node instance anomalies

Ensure that you have completed the preparations described in Before you begin.

Repair process

When a Lingjun node fails and requires hardware repair, the repair process redeploys the node and erases all data on its local disks. In this scenario, we recommend enabling Repair Nodes Only After Acquiring Permissions for the node pool so you can back up data before authorizing the repair.

In the case of a node instance exception, the auto repair process is as follows.

ACK automatically triggers the following repair process 5 minutes after a node instance exception occurs.

After detecting an exception, ACK adds the following taint to the faulty node:

Key: alibabacloud.com/node-needrepair

Value: Unschedulable

Effect: NoSchedule

If Repair Nodes Only After Acquiring Permissions is enabled, ACK waits for your authorization before proceeding.

If you need to handle the workloads on the unhealthy node first, we recommend enabling Repair Nodes Only After Acquiring Permissions. ACK will only begin the repair after you grant authorization.

ACK automatically adds the label

alibabacloud.com/node-needrepair=Inquiringto the faulty node.You can handle the pods running on the node or back up your data first. Once you have finished, authorize the repair by deleting the

alibabacloud.com/node-needrepairlabel or setting its value toApproved(alibabacloud.com/node-needrepair=Approved).After receiving your authorization, ACK proceeds with the next steps.

If Repair Nodes Only After Acquiring Permissions is not enabled, ACK automatically proceeds with the next steps after detecting the exception.

ACK drains the node. The drain operation times out after 30 minutes.

When draining a node, ACK evicts the pods while respecting any configured PDBs. To ensure high service availability, we recommend deploying your workloads with multiple replicas across different nodes. Also, configure PDBs for critical services to control concurrent disruptions.

If the drain fails, ACK still proceeds with the subsequent steps.

ACK performs a repair action, such as restarting the node or initiating a hardware repair.

ACK checks whether the node's status has returned to normal.

If the fault is resolved, ACK removes the taint, and the node returns to a normal state.

If the fault persists or the repair process fails, the taint is not removed. ACK periodically sends event notifications. You can view the events for troubleshooting or submit a ticket.

(For exclusive GPUs) When the GPU card's status returns to normal, ACK removes its isolation.

Node conditions that trigger auto repair

If a hardware repair is performed on a Lingjun node, you must manually remove the node from the node pool after the repair is successful. Then, re-add the repaired device to the node pool by adding it as an existing node. For more information and important considerations, see Remove a node and Add an existing Lingjun node.

Node condition | Description | Repair action |

| Indicates a fatal NVLink hardware error. This severe failure requires offline repair. | Repair hardware |

| Indicates the GPU has "fallen off the bus"—meaning it is no longer detectable by the system. This severe hardware failure requires offline repair. | Repair hardware |

| The GPU has failed to perform a row remapping. | Repair hardware |

| The GPU has fallen off the bus or has otherwise become inaccessible. | Repair hardware |

| The infoROM is corrupted. | Repair hardware |

| A device's external power cables are not properly attached. | Repair hardware |

| Indicates an uncontained ECC error. All applications on the GPU are affected. The GPU must be reset before applications can be restarted. | Restart node |

| Indicates a Double Bit ECC Error (DBE). This uncorrectable error requires a GPU reset or node restart to clear. | Restart node |

| A timeout occurred while waiting for the GSP core to respond to an RPC message. | Restart node |

| Indicates an unrecovered ECC error. The driver detected uncorrectable errors in GPU memory, requiring a GPU reset. | Restart node |

| An error occurred in the code running on the GPU's GSP core. | Restart node |

| The GPU has pending retired pages that require a GPU reset to take effect. | Restart node |

| The GPU has an uncorrectable, uncontained error that requires a GPU reset for recovery. | Restart node |

| Indicates a Graphics Engine fault during a context switch. This uncorrectable error requires a GPU reset or node restart. | Restart node |

| Indicates an internal micro-controller breakpoint or warning. This uncorrectable error requires a GPU reset or node restart. | Restart node |

| Indicates an internal micro-controller halt. This uncorrectable error requires a GPU reset or node restart. | Restart node |

| Indicates a Graphics Engine class error. This uncorrectable error requires a GPU reset or node restart. | Restart node |

For more information about these items, such as the node conditions they generate and whether they produce an event, see GPU exception detection and automatic isolation.

Node status during the auto repair process

While a repair task is in progress, the node status is Repairing.

If the repair is complete and the fault is resolved, the node returns to a normal state.

If the repair is complete but the fault persists, the node's status is set to Recovery failed.

A node in the Recovery failed state will not trigger another auto repair operation until the underlying fault is resolved.

View node auto repair events

When ACK triggers a node auto repair operation, it logs corresponding events in the Event Center. On your cluster's details page, choose Operations > Event Center to view records of automatic recoveries and the specific actions taken. You can subscribe to these events as described in Event monitoring.

Event | Level | Description |

| Normal | Node auto repair has started. |

| Normal | A node auto repair action was performed, such as restarting the kubelet. |

| Normal | Node auto repair succeeded. |

| Warning | Node auto repair failed. For troubleshooting, see the FAQ section below. |

| Normal | Node auto repair was skipped because the ECS instance was not in a running state. |

FAQ

What should I do if node auto repair fails?

Due to the complexity of some failures, auto repair cannot resolve all failures. When an auto repair task fails or the fault persists after the task is complete, ACK sets the node's status to Recovery failed. If a node fails to heal, ACK stops the auto repair process for other faulty nodes in that node pool until the initial fault is resolved. Submit a ticket to contact technical support.

Related documents

We recommend enabling NPD and monitoring cluster events through the Event Center. For more information, see Event monitoring.

For more information about GPU fault detection, see GPU exception detection and automatic isolation and Diagnose GPU node issues.

To resolve a node issue by removing the faulty node and adding it back, follow the documented procedures in the ACK console to prevent unexpected behavior. For more information, see Remove a node and Add existing nodes.