This topic describes how to use DataWorks to synchronize data from MaxCompute to ApsaraDB for ClickHouse.

Background information

You can use the batch data synchronization feature of DataWorks to synchronize data from various data sources to ApsaraDB for ClickHouse. For more information about the data sources supported by batch data synchronization, see Supported data source types, Reader plug-ins, and Writer plug-ins.

Prerequisites

A DataWorks workspace is created and MaxCompute is selected as the compute engine. For more information, see Create a workspace.

An exclusive resource group for Data Integration is created and configured. For more information, see Create and use an exclusive resource group for Data Integration.

An ApsaraDB for ClickHouse cluster is created, and a username and a password of a database account are created. For more information, see Create an ApsaraDB for ClickHouse cluster and Manage database accounts of an ApsaraDB for ClickHouse cluster.

You have the permissions to log on to the database. For more information, see Grant permissions.

If a RAM user wants to use DataWorks, the owner of the Alibaba Cloud account must assign a role to the RAM user. For more information, see Add workspace members and assign roles to them.

Usage notes

ApsaraDB for ClickHouse supports only exclusive resource groups for Data Integration.

If you want to synchronize a table that has been synchronized before, execute the

TRUNCATE TABLE <Table name>;statement to clear synchronized data in the ApsaraDB for ClickHouse table.

Procedure

Add data sources.

You need to add data sources for MaxCompute and ApsaraDB for ClickHouse.

NoteFor more information, see Add a MaxCompute data source and Add a ClickHouse data source.

Create a MaxCompute table.

Log on to the DataWorks console.

In the left-side navigation pane, click Workspace.

In the top navigation bar, select the region where the desired workspace resides.

On the Workspaces page, find the workspace and choose in the Actions column.

On the DataStudio page, move the pointer over the

icon and choose .

icon and choose . In the Create Table dialog box, select a path from the Path drop-down list and configure the Name parameter. In this example, odptabletest1 is used as the table name. Click Create.

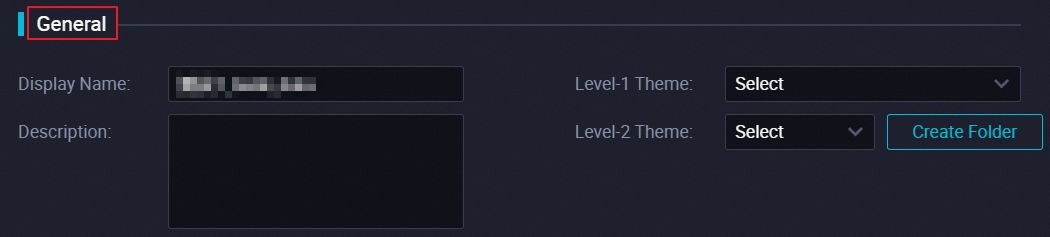

In the General section, configure the parameters.

The following table describes the parameters.

Parameter

Description

Display Name

The display name of the table.

Theme

The folders that are used to store and manage the table. You can specify the level-1 and level-2 folders to store the table. The Level-1 Theme and Level-2 Theme parameters can be used to categorize tables based on business categories. You can store tables of the same business category in the same folder.

NoteThe level-1 and level-2 themes in the Workspace Tables pane of the DataStudio page help you better manage tables in folders. You can quickly find the current table in the Workspace Tables pane by theme. If no theme is available, you can create one. For information about how to create a theme, see the Create or manage folders for tables section of the "Manage settings for tables" topic.

Click DDL in the toolbar.

In the DDL dialog box, enter the following statement and click Generate Table Schema:

CREATE TABLE IF NOT EXISTS odptabletest1 ( v1 TINYINT, v2 SMALLINT );Click Commit to Development Environment and Commit to Production Environment in sequence.

Write data to the MaxCompute table.

On the DataStudio page, click Ad Hoc Query in the left-side navigation pane.

Move the pointer over the

icon and choose .

icon and choose . In the Create Node dialog box, select a path from the Path drop-down list and configure the Name parameter.

Click Confirm.

On the edit page of the node, enter the following statement to write data to the MaxCompute table:

insert into odptabletest1 values (1,"a"),(2,"b"),(3,"c"),(4,"d");Click the

icon in the toolbar.

icon in the toolbar. In the Estimate MaxCompute Computing Cost dialog box, click Run.

Create an ApsaraDB for ClickHouse table.

Log on to the ApsaraDB for ClickHouse console.

In the top navigation bar, select the region where the desired cluster is deployed.

On the Clusters page, click a tab based on your cluster edition and click the ID of the cluster that you want to manage.

On the Cluster Information page, click Log On to Database in the upper-right corner.

In the Log on to Database Instance dialog box, enter the username and password of your database account and click Login.

Enter the following statement and click Execute(F8). Sample statement:

create table default.dataworktest ON CLUSTER default ( v1 Int, v2 String ) ENGINE = MergeTree ORDER BY v1;NoteThe schema type of the ApsaraDB for ClickHouse table must map the schema type of the MaxCompute table.

Create a workflow.

If you already have a workflow, skip this step.

On the DataStudio page, click Scheduled Workflow in the left-side navigation pane.

Move the pointer over the

icon and select Create Workflow.

icon and select Create Workflow. In the Create Workflow dialog box, configure the Workflow Name parameter.

ImportantThe name must be 1 to 128 characters in length and can contain letters, digits, underscores (_), and periods (.).

Click Create.

Create a batch synchronization node.

Click the newly created workflow and right-click Data Integration.

Choose .

In the Create Node dialog box, configure the Name parameter, and select a path from the Path drop-down list.

ImportantThe node name must be 1 to 128 characters in length and can contain letters, digits, underscores (_), and periods (.).

Click Confirm.

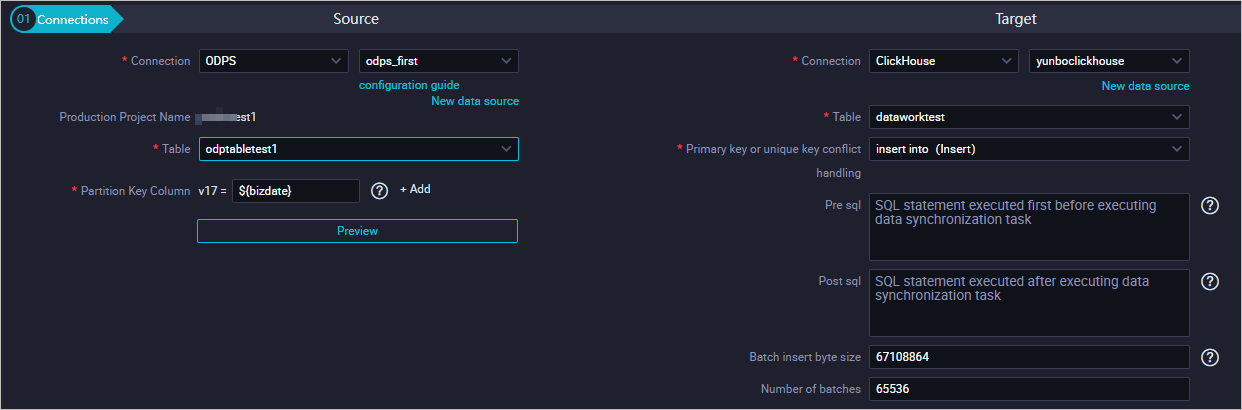

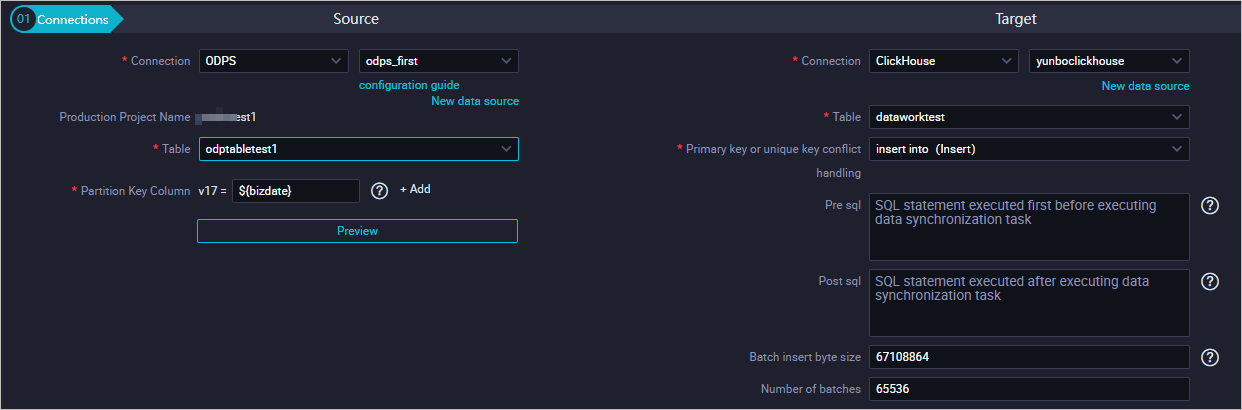

Configure the data source and destination.

Source: Select a supported data source. In this example, MaxCompute is selected.

The following table describes the parameters.

Parameter

Description

Connection

The type and name of the data source.

Production Project Name

The name of the project in the production environment. You cannot change the value.

Table

The table that you want to synchronize.

Partition Key Column

If your daily incremental data is stored in the partitions of a specific date, you can specify the partition information to synchronize the daily incremental data. For example, set v17 to ${bizdate}.

NoteDataWorks cannot map the fields in partitioned MaxCompute tables. If you want to read data from a partitioned MaxCompute table, you must specify each desired partition when you configure MaxCompute Reader.

NoteFor more information about the parameters, see MaxCompute Reader.

Target: Select ClickHouse.

The following table describes the parameters.

Parameter

Description

Connection

The type and name of the data source. Select ClickHouse.

Table

The table to which you want to import the synchronized data.

Primary key or unique key conflict handling

Set this value to insert into (Insert).

Pre sql

The SQL statement that you want to execute before the synchronization task is run.

Post sql

The SQL statement that you want to execute after the synchronization task is run.

Batch insert byte size

The maximum number of bytes to be inserted.

Number of batches

The number of data entries to be inserted in one batch.

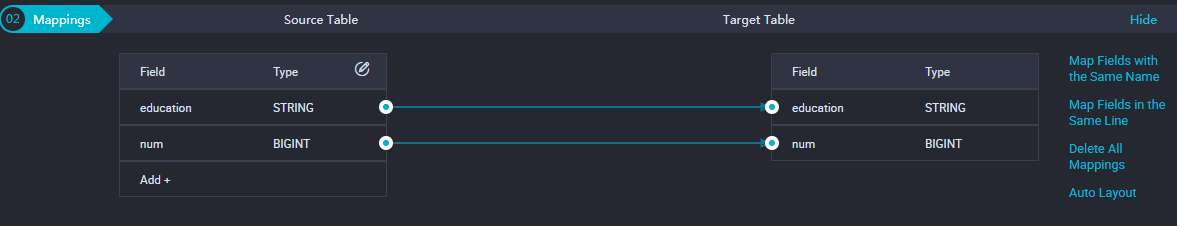

(Optional) Mappings: You can select the field mapping. The Field on the left side corresponds to the Field on the right side.

Note

NoteFor information about the parameters, see the Step 4: Configure mappings between source fields and destination fields section of the Configure a batch synchronization task by using the codeless UI topic.

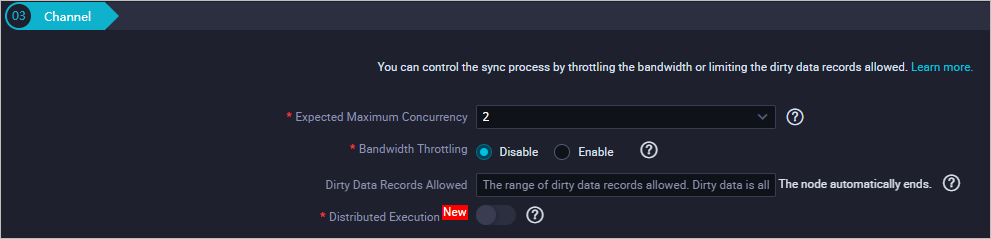

(Optional) Channel: Configure the maximum transmission rate and dirty data check rules.

Note

NoteFor information about the parameters, see the Step 5: Configure channel control policies section of the Configure a batch synchronization task by using the codeless UI topic.

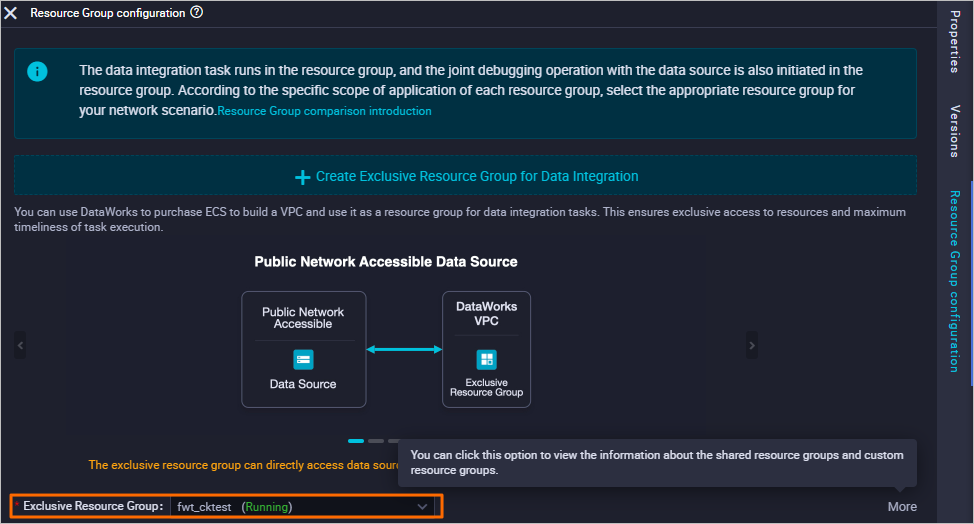

Configure the resource group for Data Integration.

Click Resource Group configuration on the right and select a group from the Exclusive Resource Group drop-down list.

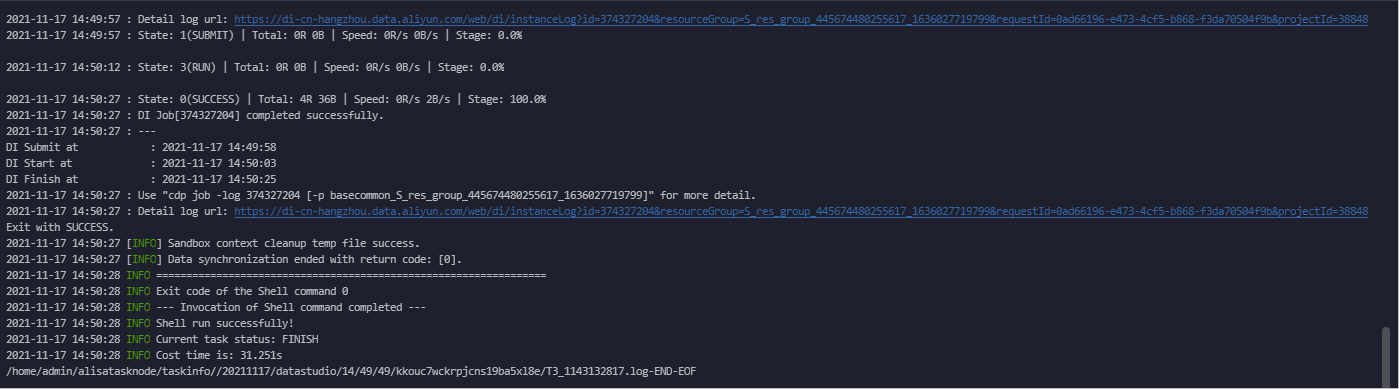

Run and save the synchronization task.

Click the

icon in the toolbar to save the synchronization task.

icon in the toolbar to save the synchronization task. Click the

icon in the toolbar to run the synchronization task.

icon in the toolbar to run the synchronization task.

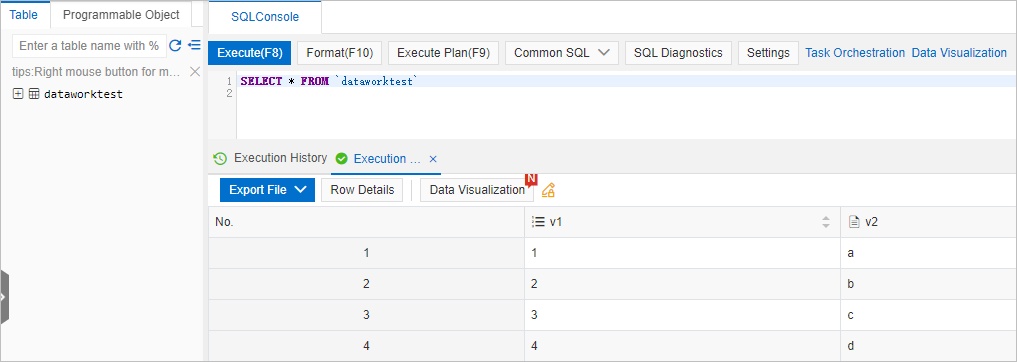

Verify the data synchronization result

Log on to the ApsaraDB for ClickHouse console.

In the top navigation bar, select the region where the desired cluster is deployed.

On the Clusters page, click a tab based on your cluster edition and click the ID of the cluster that you want to manage.

On the Cluster Information page, click Log On to Database in the upper-right corner.

In the Log on to Database Instance dialog box, enter the username and password of your database account and click Login.

Enter the following query statement and click Execute(F8) to check whether the data has been synchronized:

SELECT * FROM dataworktest;The following result is returned.

Note

NoteIf the result is returned after you execute the query statement, the data has been synchronized from MaxComput to ApsaraDB for ClickHouse.