This blog tutorial describes how to troubleshoot a sudden increase in TIME_WAIT sockets on a client ECS instance in the Alibaba Cloud environment. It walks through the process that Alibaba Cloud technical support followed with one of our customers to resolve this issue.

But before we get into the main topic of this article, let's give some background to what this problem actually is. Often this sort of problem occurs in the following situation:

Initially, the client accesses the backend Web server directly and has only a small number of TIME_WAIT sockets. A Layer 7 SLB is then introduced to load-balance the backend servers. Once the client accesses the backend servers through the SLB, the number of sockets in the TIME_WAIT state on the client grows to over 4,000, which is very abnormal. Yet the customer reports that no kernel parameters have been modified. This is the underlying problem this article will discuss.
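To track the symptom described above, the number of TIME_WAIT sockets on the client can be counted directly. The following is a minimal sketch, assuming a Linux client where `/proc/net/tcp` and `/proc/net/tcp6` are readable; the function name `count_time_wait` is made up for this example, and state code `06` is the kernel's code for TIME_WAIT in those files.

```python
from pathlib import Path

TCP_TIME_WAIT = 0x06  # state code used for TIME_WAIT in /proc/net/tcp


def count_time_wait(proc_net_tcp_text: str) -> int:
    """Count TIME_WAIT sockets in text formatted like /proc/net/tcp."""
    count = 0
    for line in proc_net_tcp_text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        # The fourth column ("st") holds the socket state in hexadecimal.
        if len(fields) > 3 and int(fields[3], 16) == TCP_TIME_WAIT:
            count += 1
    return count


if __name__ == "__main__":
    total = 0
    for name in ("/proc/net/tcp", "/proc/net/tcp6"):
        path = Path(name)
        if path.exists():  # these files only exist on Linux
            total += count_time_wait(path.read_text())
    print(f"TIME_WAIT sockets: {total}")
```

Running this periodically before and after a change such as introducing the SLB gives a simple baseline to compare against.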

The first step in troubleshooting this issue is to identify the exact problem. From the information above, the following can be inferred:

The client connects to the SLB (server) through transient (short-lived) connections, and the client is the party that actively closes each connection. In addition, the concurrency is relatively high.

If TIME_WAIT accumulation was not seen before but is seen now, while the access mode remains unchanged, then it is very likely that TIME_WAIT sockets were previously being recycled or reused quickly. Therefore, it can be inferred that the TCP kernel parameters tcp_tw_recycle and tcp_timestamps are, with high probability, both set to 1 on the client.

The customer confirmed the above information.
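One way to confirm such settings on a client is to read the values from `/proc/sys/net/ipv4`. This is a sketch under the assumption that the client runs Linux; the helper names `read_sysctl` and `fast_recycle_configured` are invented for this example. Note that `tcp_tw_recycle` was removed in Linux 4.12, so on newer kernels the file is absent and the helper returns `None`.

```python
from pathlib import Path
from typing import Optional

SYSCTL_DIR = Path("/proc/sys/net/ipv4")


def read_sysctl(name: str, base: Path = SYSCTL_DIR) -> Optional[str]:
    """Return the value of a net.ipv4 sysctl, or None if it cannot be read."""
    try:
        return (base / name).read_text().strip()
    except OSError:
        return None


def fast_recycle_configured(tw_recycle: Optional[str],
                            timestamps: Optional[str]) -> bool:
    """True when both parameters are set to 1, as inferred in the text."""
    return tw_recycle == "1" and timestamps == "1"


if __name__ == "__main__":
    tw_recycle = read_sysctl("tcp_tw_recycle")
    timestamps = read_sysctl("tcp_timestamps")
    print("net.ipv4.tcp_tw_recycle =", tw_recycle)
    print("net.ipv4.tcp_timestamps =", timestamps)
    print("fast recycling configured:",
          fast_recycle_configured(tw_recycle, timestamps))
```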

The next step is to try to find the cause. The only change we can confirm in this case is the introduction of the SLB, so we need to check the effect of the SLB on TIME_WAIT. For the client, if the TCP kernel parameters tcp_tw_recycle and tcp_timestamps are both set to 1, sockets in the TIME_WAIT state are normally recycled quickly. But what we observe now is that sockets in the TIME_WAIT state are not recycled quickly.

Because tcp_tw_recycle is a system parameter, and Timestamps is a field in TCP options, we need to pay attention to the change of the Timestamps. If you are familiar with SLB, then you may not need to capture packets to know what the SLB has done to TCP Timestamps. But here, we follow the normal troubleshooting procedure, which is to capture packets, and compare what is different between the packets before and after the introduction of the SLB.

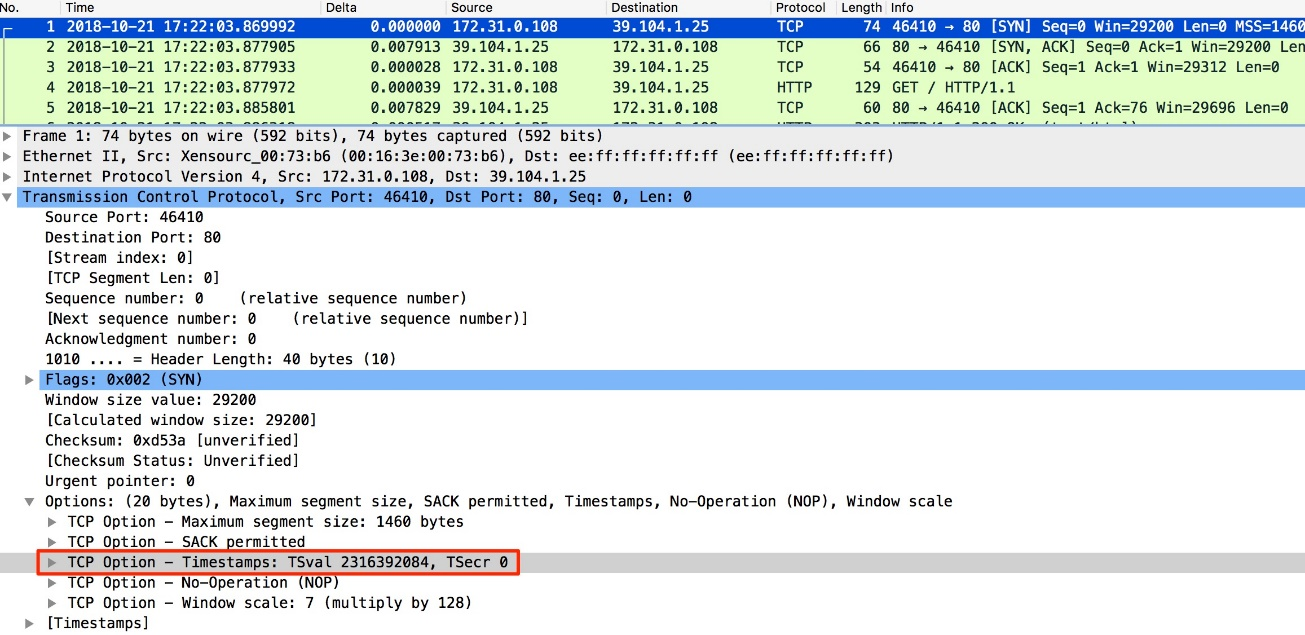

After the SLB is introduced, we capture packets on the client while it accesses the SLB. The following figure shows the SYN from the client to the SLB. We can see that the TCP Timestamps option is included. TSval is a value derived from the CPU ticks of the local system. TSecr echoes the TSval most recently received from the peer, so it derives from the CPU ticks of the peer system, and the echo is used to calculate the RTT. Because this is the first SYN of a new TCP connection, the TSecr value is 0.

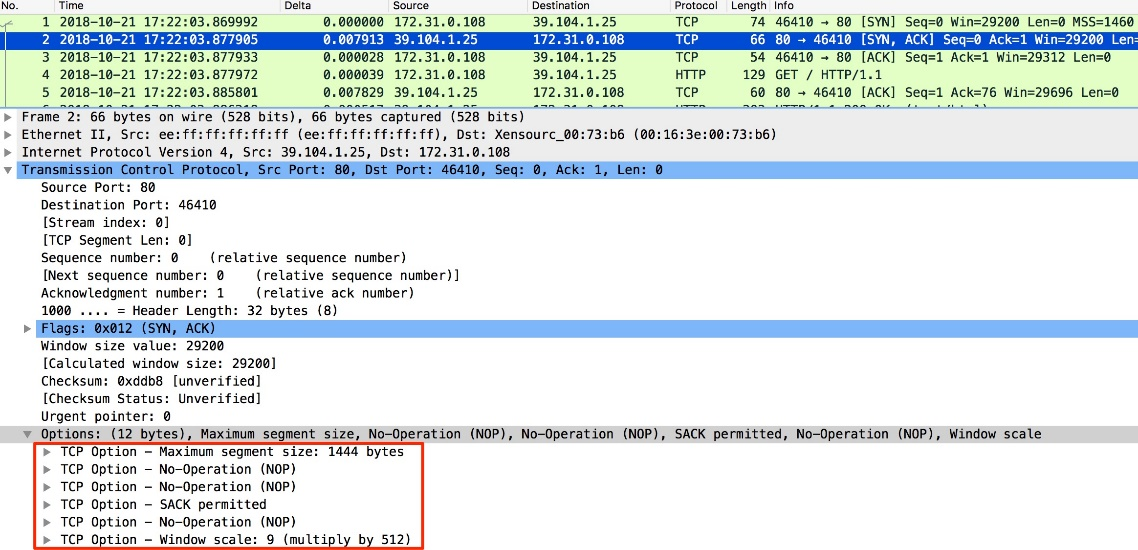

However, when we look at the packets returned by the SLB to the client, the TCP Timestamps option no longer exists, whereas it is always present when the client accesses the backend server directly. This comparison reveals the change brought about by the introduction of the SLB: the SLB has erased the Timestamps field in the TCP options.
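The comparison above comes down to checking whether a packet's TCP options contain the Timestamps option (kind 8, length 10, carrying a 4-byte TSval and a 4-byte TSecr). The sketch below parses a raw TCP options byte string; the function name `find_timestamps` and the sample bytes are illustrative and are not taken from the customer's capture.

```python
import struct
from typing import Optional, Tuple

TCPOPT_EOL = 0        # end of option list
TCPOPT_NOP = 1        # one-byte padding
TCPOPT_TIMESTAMP = 8  # kind 8, length 10: TSval (4 bytes) + TSecr (4 bytes)


def find_timestamps(options: bytes) -> Optional[Tuple[int, int]]:
    """Return (TSval, TSecr) from raw TCP options, or None if absent."""
    i = 0
    while i < len(options):
        kind = options[i]
        if kind == TCPOPT_EOL:
            break
        if kind == TCPOPT_NOP:
            i += 1
            continue
        if i + 1 >= len(options):
            break  # truncated option header
        length = options[i + 1]
        if length < 2 or i + length > len(options):
            break  # malformed option, stop parsing
        if kind == TCPOPT_TIMESTAMP and length == 10:
            tsval, tsecr = struct.unpack("!II", options[i + 2:i + 10])
            return tsval, tsecr
        i += length
    return None


if __name__ == "__main__":
    # Illustrative SYN options: MSS, SACK-permitted, Timestamps, NOP, WScale.
    syn = bytes.fromhex("020405b4" "0402" "080a000186a000000000" "01" "030307")
    # Illustrative reply without the Timestamps option.
    reply = bytes.fromhex("020405b4" "0101" "0402" "01" "030307")
    print("SYN timestamps:", find_timestamps(syn))
    print("reply timestamps:", find_timestamps(reply))
```

A result of `None` for the reply corresponds to the situation seen in the capture, where the returned packets carry no Timestamps option.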

We now know what changed, but how exactly does erasing the TCP Timestamps option affect the number of sockets in the TIME_WAIT state? To answer that, let's check the code logic for the fast recycling of TCP TIME_WAIT sockets.

In this step of troubleshooting, we dive deeper to answer the question above by looking into the fast recycling of TCP TIME_WAIT sockets. Consider the following code:

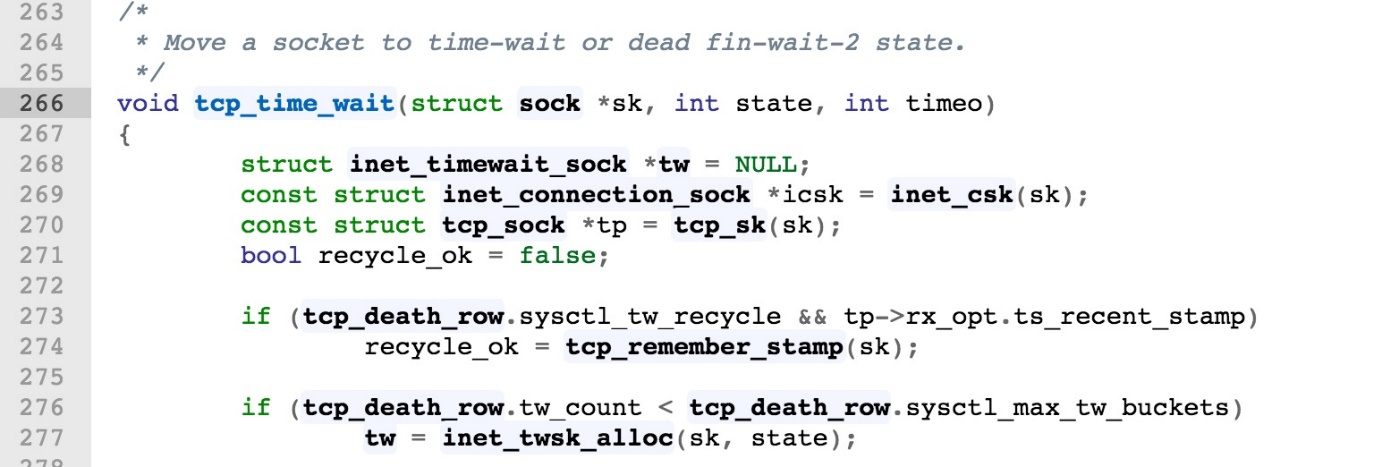

The code may differ slightly between Linux kernel versions. Here, the code for Linux kernel version 3.10.0 is used. The logic for TCP entering the TIME_WAIT (FIN_WAIT_2) state is in tcp_time_wait() in tcp_minisocks.c.

Here, we can see a bool variable named recycle_ok. This variable determines whether the socket in the TIME_WAIT state is quickly recycled. recycle_ok is set to true only when tcp_death_row.sysctl_tw_recycle and tp->rx_opt.ts_recent_stamp are both nonzero.

Earlier we said that "if the TCP kernel parameters tcp_tw_recycle and tcp_timestamps are both set to 1, normally the sockets in the TIME_WAIT status will be quickly recycled". The code shows why this holds only normally: whether such sockets are recycled depends not just on the TCP kernel parameter settings of the local system, but also on rx_opt.ts_recent_stamp in tcp_sock, which is only set when timestamps have been received from the peer. Here, the packets returned by the SLB carry no TCP Timestamps information, so recycle_ok can only be false. The remaining code is as follows:

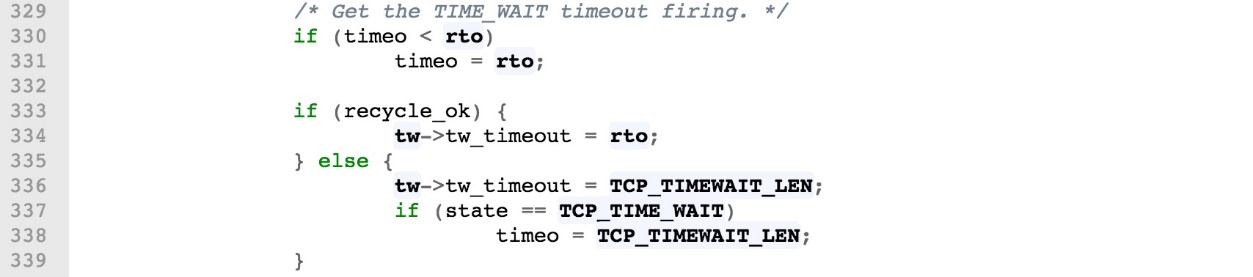

The code above determines the specific recycling time of TIME_WAIT:

- If recycle_ok is true, the TIME_WAIT recycling time is rto.
- If recycle_ok is false, the recycling time is the normal 2MSL: TCP_TIMEWAIT_LEN (60s, which is hard-coded in tcp.h, fixed after kernel compilation, and not adjustable).

The rto in the code above is just a local variable. It is assigned 3.5 times the icsk->icsk_rto (RTO, Retransmission Timeout) in the function, as you can see below:

const int rto = (icsk->icsk_rto << 2) - (icsk->icsk_rto >> 1);

The icsk->icsk_rto is dynamically computed based on the actual network conditions. The maximum and minimum RTO values are specified in tcp.h, as follows:

#define TCP_RTO_MAX ((unsigned)(120*HZ))

#define TCP_RTO_MIN ((unsigned)(HZ/5))

HZ is the number of timer ticks per second, so HZ ticks correspond to 1s. Therefore, TCP_RTO_MAX = 120s and TCP_RTO_MIN = 200 ms. In a LAN environment, even if the RTT is less than 1 ms, the minimum RTO value for TCP retransmission can only be 200 ms.

Thus, in a LAN environment, the time for the fast recycling of TIME_WAIT sockets can be taken as 3.5 * 200 ms = 700 ms. In an extremely bad network environment, however, there is a pitfall: because the RTO can grow far beyond its minimum, the "fast recycling" of TIME_WAIT sockets may take more than 60s.
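The timing logic described above can be modeled in a few lines. This is a simplified sketch of the behavior as the text describes it, not the kernel source: `HZ = 1000` is an assumed tick rate (the real value depends on the kernel build), and the function names are made up for this example.

```python
HZ = 1000  # assumption: 1000 timer ticks per second; kernel builds may differ

TCP_TIMEWAIT_LEN = 60 * HZ  # 60 s, the normal TIME_WAIT lifetime
TCP_RTO_MAX = 120 * HZ      # 120 s
TCP_RTO_MIN = HZ // 5       # 200 ms


def time_wait_timeout(icsk_rto: int, recycle_ok: bool) -> int:
    """TIME_WAIT lifetime in ticks, following the logic described above."""
    # Same arithmetic as the kernel expression: 4 * RTO - RTO / 2 = 3.5 * RTO.
    rto = (icsk_rto << 2) - (icsk_rto >> 1)
    return rto if recycle_ok else TCP_TIMEWAIT_LEN


def ticks_to_seconds(ticks: int) -> float:
    """Convert timer ticks to seconds for the assumed HZ."""
    return ticks / HZ


if __name__ == "__main__":
    for label, rto in (("minimum RTO", TCP_RTO_MIN), ("maximum RTO", TCP_RTO_MAX)):
        fast = ticks_to_seconds(time_wait_timeout(rto, recycle_ok=True))
        slow = ticks_to_seconds(time_wait_timeout(rto, recycle_ok=False))
        print(f"{label}: recycle_ok=True -> {fast} s, recycle_ok=False -> {slow} s")
```

With the minimum RTO the fast path gives 0.7 s, while at the maximum RTO the same formula yields 420 s, which illustrates the pitfall mentioned above.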

From the above troubleshooting, we found that the recycling time of TIME_WAIT changed from 3.5 times the RTO to 60 seconds, while the client opens a large number of concurrent transient TCP connections. Therefore, we can conclude that sockets in the TIME_WAIT state rapidly accumulate on the client.
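The size of the effect can be checked with rough arithmetic: in steady state, the number of TIME_WAIT sockets is approximately the rate at which the client closes connections multiplied by the time each socket stays in TIME_WAIT. The close rate below is hypothetical, chosen only so that the 60s case yields 4,000 sockets; it is not a measured value from the customer's system.

```python
def steady_state_time_wait(close_rate_per_s: float, time_wait_s: float) -> float:
    """Approximate steady-state TIME_WAIT count: close rate * lifetime."""
    return close_rate_per_s * time_wait_s


if __name__ == "__main__":
    # Hypothetical close rate that would produce 4,000 sockets at 60 s each.
    rate = 4000 / 60
    print(f"assumed close rate: {rate:.1f} connections/s")
    print(f"lifetime 60 s  -> {steady_state_time_wait(rate, 60.0):.0f} sockets")
    print(f"lifetime 0.7 s -> {steady_state_time_wait(rate, 0.7):.0f} sockets")
```

Under this assumption, the same traffic that leaves thousands of sockets in TIME_WAIT at 60s would leave only a few dozen at 700 ms, which matches the small number seen before the SLB was introduced.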

To summarize, the sudden increase in TIME_WAIT sockets on the client ECS instance was caused by the SLB erasing the TCP Timestamps option, which prevented the fast recycling of TIME_WAIT sockets on the client.

One question you may ask is whether having more than 4,000 TIME_WAIT sockets on the client is actually a problem. In a normal system environment it is not, although it may make the netstat/ss output unfriendly. TIME_WAIT sockets consume few system resources, but with a large number of them the following limits must be taken into account:

- Number of source ports (net.ipv4.ip_local_port_range)
- Number of TIME_WAIT buckets (net.ipv4.tcp_max_tw_buckets)
- Number of file descriptors (max open files)

If the number of TIME_WAIT sockets is far from reaching the above limits, there is no need to rush into taking any measures.
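The limits listed above can be read on a Linux client and compared against the current TIME_WAIT count. The following is a minimal sketch, assuming the standard `/proc/sys/net/ipv4` files are present; the helper names are invented for this example.

```python
import resource
from pathlib import Path
from typing import Optional, Tuple

IPV4_SYSCTL = Path("/proc/sys/net/ipv4")


def parse_port_range(text: str) -> Tuple[int, int]:
    """Parse the two whitespace-separated numbers of ip_local_port_range."""
    low, high = (int(part) for part in text.split())
    return low, high


def ephemeral_port_count(low: int, high: int) -> int:
    """Number of local ports in the inclusive range [low, high]."""
    return high - low + 1


def read_text_or_none(path: Path) -> Optional[str]:
    """Return the stripped file contents, or None if the file is unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return None


if __name__ == "__main__":
    port_range = read_text_or_none(IPV4_SYSCTL / "ip_local_port_range")
    if port_range is not None:
        low, high = parse_port_range(port_range)
        print(f"local ports: {low}-{high} ({ephemeral_port_count(low, high)} ports)")
    print("tcp_max_tw_buckets:",
          read_text_or_none(IPV4_SYSCTL / "tcp_max_tw_buckets"))
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    print(f"max open files: soft={soft} hard={hard}")
```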
