When troubleshooting network problems, it is common to encounter TCP connection failures. If you can capture packets at both ends, the typical picture is a client that keeps retransmitting its TCP SYN while the server never returns a SYNACK.

The retransmissions follow a fixed backoff because no RTT sample exists yet for the first packet, so no measured RTO is available. The SYN is retransmitted after roughly 1, 2, 4, 8, ... seconds until net.ipv4.tcp_syn_retries retransmissions have been made.
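To make the backoff concrete, here is a minimal Python sketch of the retransmission schedule. It assumes an initial timeout of 1 second that doubles after every retransmission and ignores any upper cap on the timeout; the function names are illustrative and not part of any kernel or library API.

```python
def syn_retransmit_intervals(syn_retries, initial_rto=1.0):
    """Waiting time (seconds) before each SYN retransmission.

    Assumption: the timeout starts at initial_rto and simply doubles.
    """
    return [initial_rto * 2 ** i for i in range(syn_retries)]


def connect_give_up_time(syn_retries, initial_rto=1.0):
    """Seconds from the first SYN until the connect attempt is abandoned.

    This is the sum of the waits before every retransmission plus the
    final wait after the last retransmission goes unanswered.
    """
    return sum(initial_rto * 2 ** i for i in range(syn_retries + 1))
```

Under these assumptions, a value of 4 for net.ipv4.tcp_syn_retries gives waits of 1, 2, 4, and 8 seconds, and the attempt is abandoned about 31 seconds after the first SYN.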

This problem occurs relatively frequently. This post focuses on causes inside the TCP protocol stack: the TCP SYN packet has reached the TCP processing module of the kernel, but the server-side kernel code decides not to return a SYNACK to the client. Persistent SYN retransmission by the client can also have other causes, such as asymmetric paths caused by multiple NICs on the server, or SYN packets blocked by iptables rules, but these are not discussed here. Instead, we focus on the most common causes within the TCP stack.

This tutorial uses the kernel version shipped with the widely used CentOS 7. Let's first look at the main logic TCP uses to process a SYN, and then, based on case-handling experience, analyze the points where problems may occur. For a socket in the LISTEN state, the first TCP SYN packet is processed as follows:

tcp_v4_do_rcv() @net/ipv4/tcp_ipv4.c

|--> tcp_rcv_state_process() @net/ipv4/tcp_input.c // This function handles received segments in most TCP states (all except ESTABLISHED and TIME-WAIT), including the LISTEN state we are interested in.

|--> tcp_v4_conn_request() @net/ipv4/tcp_ipv4.c // Called when the TCP socket is in the LISTEN state and the SYN flag is set in the received segment.

The kernel code in CentOS may differ slightly from the upstream kernel. If you need to find the exact line numbers in the source code, systemtap is a suitable tool, as shown below:

# uname -r

3.10.0-693.2.2.el7.x86_64

# stap -l 'kernel.function("tcp_v4_conn_request")'

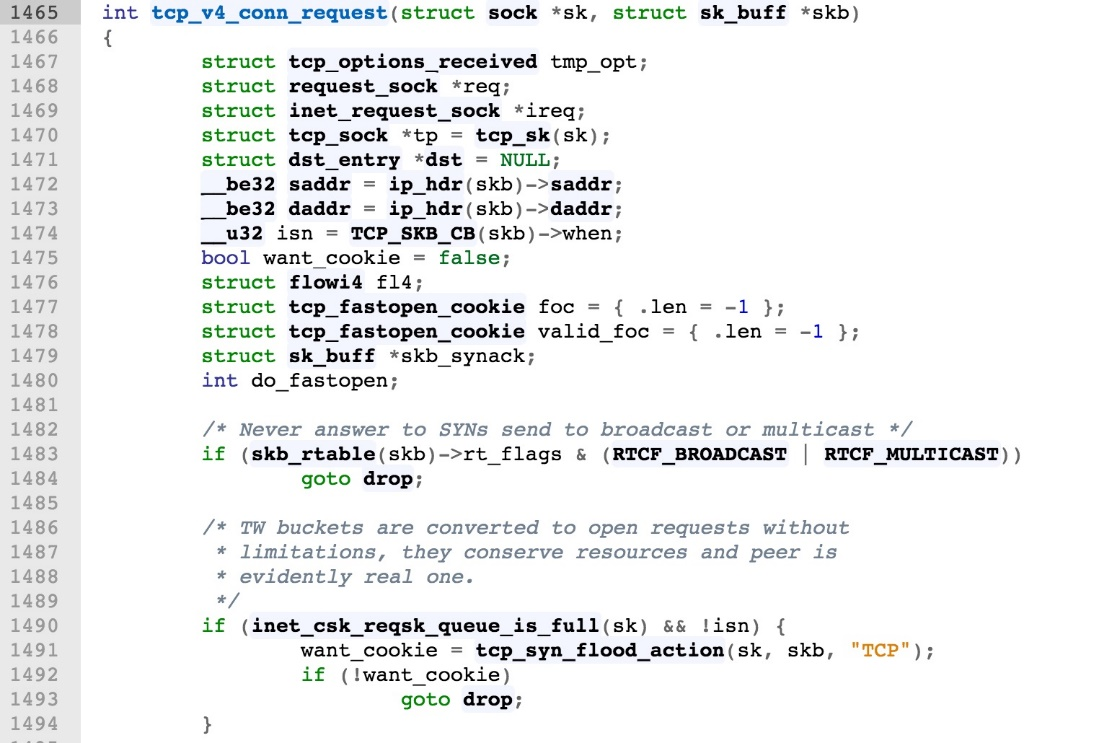

kernel.function("tcp_v4_conn_request@net/ipv4/tcp_ipv4.c:1303")In the code of tcp_v4_conn_request(), the first few rows of the function logic are like the following:

The precondition for entering this function is that the TCP socket is in the LISTEN state and the SYN flag is set in the received packet. Inside the function, we can see that it has to consider various possible exceptions, but many of them are not actually common. Consider, for example, the two situations handled in the first few lines:

In the second situation, if the request queue is full, isn is 0, and want_cookie is false, the SYN packet is dropped.

The first case is relatively easy to understand and has not been seen in practice. The second case is a little more complicated and may occasionally be encountered in practice. Let's take a look at it below:

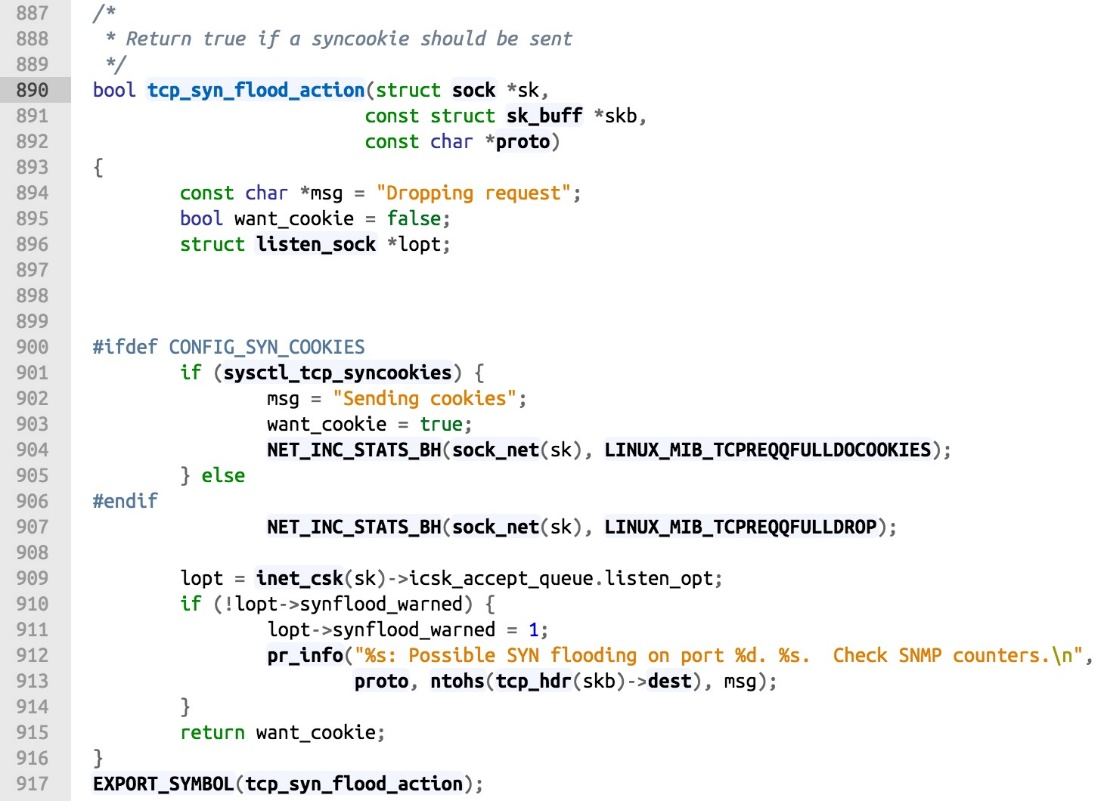

The first condition, a full request queue, is actually easy to meet: a SYN flood attack can readily cause it. isn is assigned from TCP_SKB_CB(skb)->when at the beginning of the function; this is the field in the TCP control block structure that is used to compute the RTT. want_cookie indicates whether SYN cookies are used. Its value is returned by tcp_syn_flood_action(): if the kernel is built with CONFIG_SYN_COOKIES (the relevant code is guarded by #ifdef CONFIG_SYN_COOKIES) and the kernel parameter net.ipv4.tcp_syncookies is set to 1, the function returns true, so want_cookie is true.

Therefore, the real precondition for dropping SYN packets in the case above is that the kernel parameter net.ipv4.tcp_syncookies is disabled. In actual production systems, however, net.ipv4.tcp_syncookies is enabled by default. SYN cookies defend against SYN flood attacks by trading time (CPU computation) for space (the request queue), and in production there is no scenario in which you need to turn this switch off explicitly. So in general, the drop at line 1490 is not very common.
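The decision described in the last two paragraphs can be modeled in a few lines of Python. This is a simplified sketch rather than the kernel code: the function name and the string return values are invented for illustration, and the flag cookies_compiled_in stands in for the CONFIG_SYN_COOKIES build option.

```python
def syn_queue_full_action(queue_full, isn, syncookies_enabled,
                          cookies_compiled_in=True):
    """Model what happens to a SYN when the request queue may be full.

    Returns one of three illustrative strings:
      "continue"    - the check does not apply, normal processing goes on
      "send_cookie" - the queue is full, but SYN cookies take over
      "drop"        - the queue is full and SYN cookies are unavailable
    """
    if not (queue_full and isn == 0):
        return "continue"
    # want_cookie is true only if cookie support is built in AND
    # net.ipv4.tcp_syncookies is enabled.
    want_cookie = cookies_compiled_in and syncookies_enabled
    return "send_cookie" if want_cookie else "drop"
```

In this model, the "drop" outcome is reachable only when net.ipv4.tcp_syncookies is disabled (or cookie support is not built in), which matches the conclusion that this drop is uncommon in production.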

Below are the two main scenarios in which SYN packets may be dropped, along with ways to quickly determine why the server does not return a SYNACK.

The first scenario, a SYN dropped by the per-host PAWS check, is the most common problem in actual production environments. On servers with both net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps enabled, the probability of this problem is very high when clients reach the server through NAT. From the client side, the symptom is that new connections are unstable: sometimes they succeed and sometimes they do not.

For background, PAWS is short for Protect Against Wrapped Sequences, a mechanism that prevents old segments from being mistaken for new ones after sequence numbers wrap around. Per-host means that the check is keyed on the IP address of the peer host rather than on the 4-tuple of IP addresses and ports.

Per-host PAWS works as follows: to prevent interference from old data sent by the same host, the kernel remembers the last TCP timestamp seen from the peer host IP of a TIME_WAIT socket that was quickly recycled. For the next 60 seconds, the TCP Timestamps option of any new SYN packet from that IP must have increased relative to the remembered value. When the clients are behind NAT, this condition is often not met.

In theory, remembering the rule above is enough to solve the problem of many clients whose three-way handshakes sometimes succeed and sometimes fail. For more information, see the detailed explanation below.

Per-host PAWS is mentioned in RFC 1323.

The code comments in tcp_minisocks.c also explain why TIME_WAIT is needed, and describe the PAWS mechanism that is the theoretical basis for fast recycling of TIME_WAIT.

According to the RFC and the kernel code comments, the Linux kernel implements a fast recycling mechanism for the TIME-WAIT state. Linux can drop the 60-second TIME-WAIT period and shorten it to 3.5 times the RTO, because it uses some "smart" methods to catch old duplicate packets (for example, methods based on PAWS). Specifically, Linux uses per-host PAWS to prevent packets from previous connections from being accepted into new connections.

In tcp_ipv4.c, before a SYN is accepted, if the following two conditions are met, the kernel checks whether the peer is proven, that is, it performs the per-host PAWS check:

    ...
    else if (!isn) {
        /* VJ's idea. We save last timestamp seen
         * from the destination in peer table, when entering
         * state TIME-WAIT, and check against it before
         * accepting new connection request.
         *
         * If "isn" is not zero, this request hit alive
         * timewait bucket, so that all the necessary checks
         * are made in the function processing timewait state.
         */
        if (tmp_opt.saw_tstamp &&               // The segment carries a TCP Timestamps option.
            tcp_death_row.sysctl_tw_recycle &&  // The net.ipv4.tcp_tw_recycle kernel parameter is enabled.
            (dst = inet_csk_route_req(sk, &fl4, req)) != NULL &&
            fl4.daddr == saddr) {
            if (!tcp_peer_is_proven(req, dst, true)) {  // Check the peer (per-host PAWS).
                NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_PAWSPASSIVEREJECTED);
                goto drop_and_release;
            }
        }

In tcp_metrics.c, the implementation logic of Linux per-host PAWS is as follows. Simply put, as mentioned at the beginning of this section, the TCP Timestamps option of a new SYN packet must have increased within the 60-second window.

    bool tcp_peer_is_proven(struct request_sock *req, struct dst_entry *dst, bool paws_check)
    {
        struct tcp_metrics_block *tm;
        bool ret;
        ...
        tm = __tcp_get_metrics_req(req, dst);
        if (paws_check) {
            if (tm &&
                // The peer information was saved within the last 60 seconds (TCP_PAWS_MSL).
                (u32)get_seconds() - tm->tcpm_ts_stamp < TCP_PAWS_MSL &&
                // The saved timestamp is ahead of the one in the current SYN by more than TCP_PAWS_WINDOW.
                (s32)(tm->tcpm_ts - req->ts_recent) > TCP_PAWS_WINDOW)
                ret = false;
            else
                ret = true;
        }
        ...
    }

When this per-host PAWS mechanism, which allows TIME-WAIT sockets to be recycled quickly, was implemented in Linux, it was designed for a network environment with a sufficient supply of IPv4 addresses. However, with the rapid development of the Internet, NAT has become more and more common, and it is now very common for many clients to access the same server from behind a single SNAT device.

The per-host PAWS mechanism detects wrapped (old) data by requiring the TCP Timestamps option to increase. The timestamp, however, is derived from the CPU ticks of each individual client, so the values seen from behind a NAT device are effectively random with respect to one another. Suppose client host 1 establishes a TCP connection with the server through NAT, and the server then closes the connection and quickly recycles the TIME-WAIT socket. New connections from other client hosts arrive with the same source IP that is recorded in the server's peer table, but their TCP Timestamps values are effectively random relative to the timestamp recorded for host 1, with roughly a 50% chance of being smaller. If the timestamp is smaller than that of host 1, new connections are rejected for 60 seconds and succeed only after the 60 seconds have passed. If the timestamp is larger than that of host 1, the new connection succeeds immediately. So, from the client side, the symptom is that new connections are unstable: sometimes they succeed and sometimes they do not.
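The NAT scenario can be reproduced with a small Python model of the per-host PAWS test. This is only a sketch of the comparison logic: the function names and arguments are invented for illustration, TCP_PAWS_MSL is taken as 60 seconds and TCP_PAWS_WINDOW as 1, and everything else the kernel does (locking, the non-PAWS branch, metrics lookup) is left out.

```python
TCP_PAWS_MSL = 60     # seconds the saved timestamp stays relevant
TCP_PAWS_WINDOW = 1   # tolerated backward step, in timestamp ticks


def s32(value):
    """Interpret the low 32 bits of value as signed, like a C (s32) cast."""
    value &= 0xFFFFFFFF
    return value - 0x100000000 if value & 0x80000000 else value


def peer_is_proven(saved_ts, saved_at, now, syn_ts):
    """Return True if a SYN from this peer IP passes the per-host check.

    saved_ts : last TCP timestamp value recorded for the peer IP (or None)
    saved_at : time in seconds when saved_ts was recorded
    now      : current time in seconds
    syn_ts   : timestamp value carried by the new SYN
    """
    if saved_ts is None:
        return True  # nothing recorded for this IP, nothing to compare
    still_fresh = ((now - saved_at) & 0xFFFFFFFF) < TCP_PAWS_MSL
    goes_backwards = s32(saved_ts - syn_ts) > TCP_PAWS_WINDOW
    return not (still_fresh and goes_backwards)
```

For example, if host 1 left a saved timestamp of 500000 at time 100, a SYN at time 110 from another host behind the same NAT with timestamp 20000 is rejected, a SYN with timestamp 900000 is accepted, and the SYN with timestamp 20000 is accepted again once more than 60 seconds have passed.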

This is the side effect of TIME-WAIT fast recycling for clients in a NAT environment. The side effect could not have been anticipated when the per-host PAWS mechanism was designed, because the network environment at that time was quite different from today's. In the current network environment, the only recommendation is to disable TIME-WAIT fast recycling, that is, to set net.ipv4.tcp_tw_recycle=0. Disabling net.ipv4.tcp_timestamps to remove the TCP Timestamps option also solves the problem, but because the timestamp is the basis for computing RTT and RTO, disabling it is generally not recommended.

In actual production, troubleshooting this is not easy. However, because the problem is so likely on servers that have both net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps enabled and are accessed by clients behind NAT, knowing the values of these two kernel parameters and whether the clients are behind NAT is enough for a basic judgment.
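A quick way to collect the two settings is to read them from /proc/sys. The Python sketch below assumes the usual mapping from a dotted sysctl name to a path under /proc/sys; the helper names are made up for illustration, and a missing file (for example, on a kernel that does not provide the parameter) is reported as None.

```python
from pathlib import Path


def read_sysctl(name, root="/proc/sys"):
    """Read an integer sysctl such as 'net.ipv4.tcp_tw_recycle'.

    Returns None if the file is missing or does not hold an integer.
    """
    path = Path(root, *name.split("."))
    try:
        return int(path.read_text().split()[0])
    except (OSError, ValueError, IndexError):
        return None


def per_host_paws_active(tw_recycle, timestamps):
    """The per-host PAWS check can only fire when both settings are on."""
    return bool(tw_recycle) and bool(timestamps)


if __name__ == "__main__":
    recycle = read_sysctl("net.ipv4.tcp_tw_recycle")
    stamps = read_sysctl("net.ipv4.tcp_timestamps")
    print("tcp_tw_recycle =", recycle, "tcp_timestamps =", stamps)
    print("per-host PAWS drops possible:", per_host_paws_active(recycle, stamps))
```

If the script reports that both settings are enabled and the clients are known to sit behind NAT, the per-host PAWS check is the first suspect.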

In addition, you can refer to the statistics in netstat -s, which collects its data from /proc/net/snmp, /proc/net/netstat, and /proc/net/sctp/snmp. The following counter shows how many new connections have been rejected because of the timestamp. It is a cumulative historical total, so the difference between two points in time is more meaningful for troubleshooting.

xx passive connections rejected because of time stamp
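Because only the difference between two readings is meaningful, it can help to script the comparison. The Python sketch below parses text in the /proc/net/netstat layout (a line of counter names followed by a line of values, repeated per group) and computes a delta. The function names are illustrative, and the counter key TcpExt.PAWSPassive is an assumption about how this statistic is named in /proc/net/netstat on the kernel in question.

```python
def parse_proc_netstat(text):
    """Parse /proc/net/netstat-style text into {'Group.Name': value}."""
    counters = {}
    rows = [line.split() for line in text.splitlines() if line.strip()]
    # Rows come in pairs: a header row of names, then a row of values.
    for names, values in zip(rows[0::2], rows[1::2]):
        group = names[0].rstrip(":")
        for name, value in zip(names[1:], values[1:]):
            counters[group + "." + name] = int(value)
    return counters


def counter_delta(before, after, key):
    """Increase of one counter between two parsed snapshots."""
    return after.get(key, 0) - before.get(key, 0)


if __name__ == "__main__":
    import time

    def snapshot():
        with open("/proc/net/netstat") as f:
            return parse_proc_netstat(f.read())

    first = snapshot()
    time.sleep(10)  # sampling interval chosen arbitrarily
    second = snapshot()
    print("PAWSPassive increase:",
          counter_delta(first, second, "TcpExt.PAWSPassive"))
```

A counter that keeps growing while clients report intermittent connection failures points to timestamp-based rejection.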

The second scenario shows no single, regular symptom. It occurs when the TCP accept queue is full, which usually means something is wrong with the user-space application. In general, the probability of occurrence is not very high.

The accept queue is also known as the fully connected queue or the receive queue. A new connection enters the accept queue once the three-way handshake completes. The user-space application calls the accept system call to take the connection from the queue, which creates a new socket and returns the file descriptor (fd) associated with it. In user space, poll and similar mechanisms can report, through a readable event on the listening socket, that a connection that has completed the three-way handshake has entered the accept queue, so the application can call accept immediately after receiving the notification.

The length of the accept queue is limited. It is min(backlog, net.core.somaxconn), that is, the smaller of the backlog passed to listen() and the net.core.somaxconn kernel parameter.
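As a trivial illustration of this rule, the following Python helper (a hypothetical name, not a system API) computes the effective limit from the two inputs.

```python
def accept_queue_limit(listen_backlog, somaxconn):
    """Effective accept queue limit: the smaller of the two settings.

    listen_backlog : the backlog argument the application passes to listen()
    somaxconn      : the value of net.core.somaxconn
    """
    return min(listen_backlog, somaxconn)
```

An application that asks for a backlog of 511 on a system where net.core.somaxconn is 128 therefore still gets a limit of 128, which is why both values have to be raised together.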

Even with a large number of concurrent connections, an application that calls accept normally will not fall behind for efficiency reasons. However, if the application is blocked and does not take connections in time, the accept queue may fill up, and new SYN packets are then dropped.

In tcp_ipv4.c, the implementation of "rejecting SYN packets when the accept queue is full" is very simple:

    /* Accept backlog is full. If we have already queued enough
     * of warm entries in syn queue, drop request. It is better than
     * clogging syn queue with openreqs with exponentially increasing
     * timeout.
     */
    // If the accept queue is full and the SYN queue holds more than one half-open connection whose SYNACK has not been retransmitted, the SYN request is dropped.
    if (sk_acceptq_is_full(sk) && inet_csk_reqsk_queue_young(sk) > 1) {
        NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_LISTENOVERFLOWS);
        goto drop;
    }

In sock.h, the inline function that tests whether the accept queue is full is defined as follows:

    static inline bool sk_acceptq_is_full(const struct sock *sk)
    {
        return sk->sk_ack_backlog > sk->sk_max_ack_backlog;
    }

In inet_connection_sock.h and request_sock.h, the functions that determine whether the SYN queue holds half-open connections whose SYNACK has not been retransmitted are defined as follows:

    static inline int inet_csk_reqsk_queue_young(const struct sock *sk)
    {
        return reqsk_queue_len_young(&inet_csk(sk)->icsk_accept_queue);
    }

    static inline int reqsk_queue_len_young(const struct request_sock_queue *queue)
    {
        return queue->listen_opt->qlen_young;
    }

The above is the implementation in 3.10. Two conditions are evaluated: "whether the accept queue is full" and "whether the SYN queue holds half-open connections whose SYNACK has not been retransmitted". A large number of new connections usually exist when the accept queue is full, so the second condition is usually met at the same time. If the SYN queue holds no such half-open connections when the accept queue is full, the Linux kernel still accepts the SYN and returns a SYNACK. This situation is rare in actual production unless the application process is completely stalled, for example, when it is stopped with the SIGSTOP signal. So on 3.10, a full accept queue does not by itself cause the TCP stack to drop the SYN packet.

The logic for dropping SYN when the accept queue is full changed slightly in newer kernel versions. In 4.10, for example, the two conditions are reduced to one: the kernel only checks whether the accept queue is full. Therefore, in these versions, the kernel drops the SYN packet directly when the accept queue is full.
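The difference between the two behaviors can be summarized in a short Python model. It is a sketch of the conditions only, with invented function and parameter names; the young_check flag selects between the 3.10-style test (two conditions) and the newer single-condition test.

```python
def accept_queue_is_full(ack_backlog, max_ack_backlog):
    """Mirror of the comparison in sk_acceptq_is_full(): strictly greater."""
    return ack_backlog > max_ack_backlog


def drops_syn(ack_backlog, max_ack_backlog, qlen_young, young_check=True):
    """Decide whether a new SYN is dropped because of the accept queue.

    young_check=True  models the 3.10 logic: the queue must be full AND
                      more than one young request must be pending.
    young_check=False models the newer logic: a full queue is enough.
    """
    full = accept_queue_is_full(ack_backlog, max_ack_backlog)
    if young_check:
        return full and qlen_young > 1
    return full
```

With a full accept queue and no young requests (for instance, a process stopped with SIGSTOP and little incoming traffic), the 3.10-style model still lets the SYN through, while the newer model drops it.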

This kind of problem usually appears when something is wrong with the user-space application, and in general it is not very likely. The following two methods are available to confirm it:

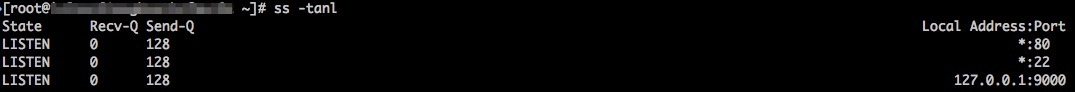

Use the -l option of the ss command to list listening sockets. For a listening socket, Recv-Q is the number of connections currently in the accept queue, and Send-Q is the maximum length of the accept queue. In the example output, the accept queue limit of several processes is 128 because it is capped by the system's net.core.somaxconn=128.
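When many listeners are involved, the comparison of Recv-Q against Send-Q can be scripted. The Python sketch below assumes the column layout printed by ss -ltn (State, Recv-Q, Send-Q, Local Address:Port, Peer Address:Port); the function name is illustrative, and other ss options may add or reorder columns, in which case the field indexes need adjusting.

```python
def listeners_at_limit(ss_output):
    """Return (local_address, recv_q, send_q) for listeners whose accept
    queue has reached or exceeded its limit.

    Assumes each data line starts with: State Recv-Q Send-Q Local-Address.
    """
    found = []
    for line in ss_output.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[0] != "LISTEN":
            continue  # skips the header and non-listening sockets
        if not (fields[1].isdigit() and fields[2].isdigit()):
            continue
        recv_q, send_q = int(fields[1]), int(fields[2])
        if recv_q >= send_q:
            found.append((fields[3], recv_q, send_q))
    return found


if __name__ == "__main__":
    import subprocess

    output = subprocess.run(["ss", "-ltn"], capture_output=True,
                            text=True, check=True).stdout
    for address, recv_q, send_q in listeners_at_limit(output):
        print(address, "accept queue", recv_q, "of", send_q)
```

A listener that repeatedly shows up in this list is a strong hint that the owning application is not calling accept fast enough.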

Refer to the statistics in netstat -s. The following counter shows the number of new connections rejected because the listen queue of a socket overflowed. As with the PAWS counter, this is a cumulative historical total, so the difference between two points in time is more meaningful for troubleshooting.

xx times the listen queue of a socket overflowed

If it is confirmed that SYN packets are dropped because the accept queue is full, the natural first idea is to lengthen the accept queue, which requires increasing both the backlog and net.core.somaxconn. In general, however, this only alleviates the problem: most likely the longer accept queue will quickly fill up again. The best solution is therefore to address the root cause by finding out why the application accepts new connections so slowly.

This post summarizes the two main scenarios, mostly seen at the cloud infrastructure and service software layers, in which SYN is dropped: the per-host PAWS check and a full accept queue. These two scenarios cover the vast majority of cases in which the TCP stack drops SYN. If SYN is dropped elsewhere in the protocol stack, further case-by-case troubleshooting is required, combining the parameter configuration with the code logic.
