Consider the following situation. An Alibaba Cloud customer built an interface on ECS for uploading small images. End users reach the interface through the network path Anti-DDoS > SLB > ECS. However, uploads through this interface were far slower than expected: a small image of about 600 KB took around 8 seconds to upload. The customer first suspected Anti-DDoS, but further investigation showed that the real issue was low TCP throughput, which in turn was limited by the configuration of the backend server. This blog covers the customer's troubleshooting process along with some guiding principles.

At first glance, the customer's issue seemed to be an Anti-DDoS problem, but further investigation, detailed below, showed that the cause lay elsewhere. Let's consider the entire transmission chain of the interface, as shown below:

Client > Layer-4 Anti-DDoS node > Layer-4 SLB > backend RS (ECS)

After some investigation, the customer found that the client machine, the SLB, and the backend RS are all in Beijing, while the Layer-4 Anti-DDoS node in the path is somewhere outside Beijing (its exact location is unknown). With this limited information, the customer and our Alibaba Cloud technical support team had good reason to suspect from the start that the new Anti-DDoS node increased the RTT between the client and the backend RS, which in turn lengthened upload times. The question remains, however: is it normal for a small image of about 600 KB to take 8 seconds to upload? Experience says no, but more information is needed to determine where the problem actually lies.

Consider the following questions and their answers. They provide us with invaluable information in our hunt for the causes of this technical issue.

Based on the above information, and after confirming that the Anti-DDoS node itself clearly had no problem, the only remaining suspect was that the increased round-trip time (RTT) led to longer uploads. Troubleshooting had to continue, however, because what we knew so far was not enough to reach a resolution. In the next steps, we first measure the time to upload an image to Anti-DDoS and to the origin SLB separately. We then capture packets to check whether anything other than RTT is affecting TCP transmission efficiency.

Some quantitative test results follow. The uploaded file is exactly 605 KB, and uploading it through Anti-DDoS takes approximately 8 seconds, as measured with the following command:

```shell
$ time curl -X POST https://gate.customer.com/xxx/yyy -F "expression=@/Users/customer/test.jpg"

real 0m8.067s
user 0m0.016s
sys 0m0.030s
```

Then, with the host name bound directly to the SLB address (bypassing Anti-DDoS), uploading the same file takes about 2.3 seconds:

```shell
$ time curl -X POST https://gate.customer.com/xxx/yyy -F "expression=@/Users/customer/test.jpg"

real 0m2.283s
user 0m0.017s
sys 0m0.031s
```

This quantitative analysis shows that some of the information reported earlier was not entirely accurate: uploading files to the SLB is also quite slow. Within the same Metropolitan Area Network (MAN), the RTT is usually under 10 ms. With a normally behaving TCP window, uploading a 605 KB image from the client to an Alibaba Cloud SLB instance should take milliseconds rather than seconds, so the measured 2.3 seconds is abnormal. A delay of about 2 seconds may not be easy for users to notice, but quantitatively it is still an anomaly and should be treated as part of the overall issue.

With all of that established, the remaining question is why uploading files to both Anti-DDoS and SLB is slow, and why the former is much slower than the latter. Only the captured packets can make this clear.

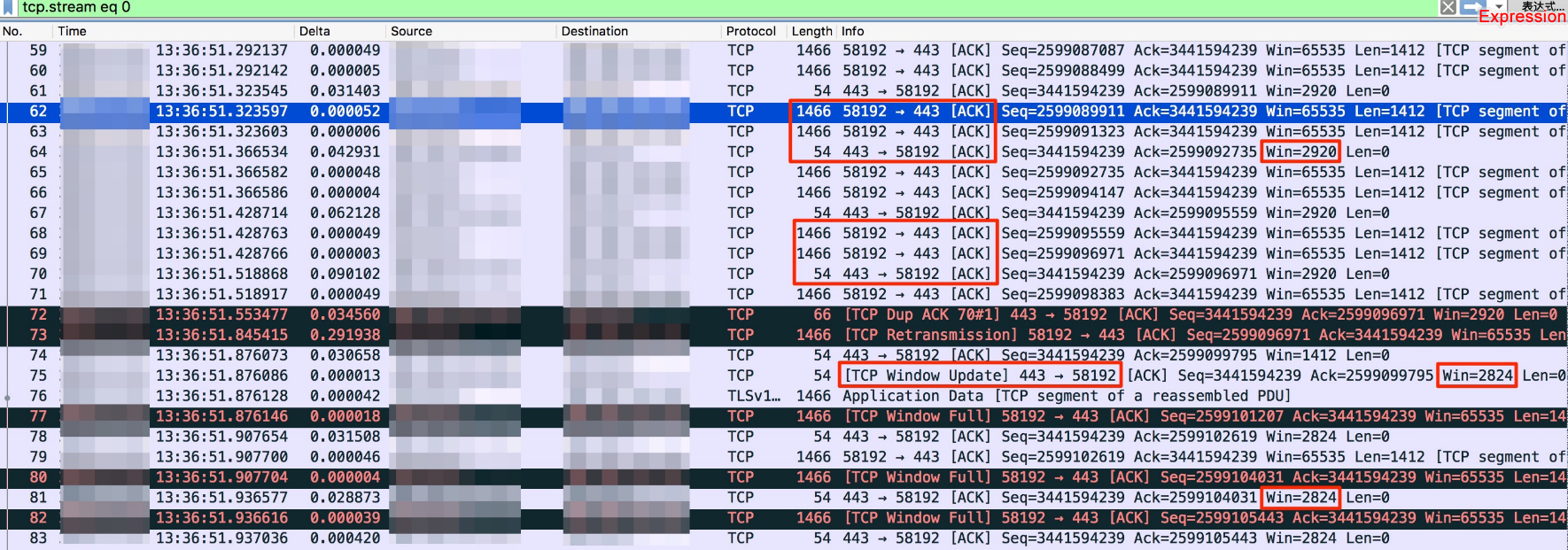

Next, analyzing the captured packets narrows the problem down effectively. In fact, capturing packets during the test from the outset avoids many detours. The captured packets for uploading files from the client to the Anti-DDoS node are as follows:

The following features can be seen from the captured packets:

If you are familiar with the TCP protocol, you can see at once that the TCP Receive Window on the server stays small throughout, while the RTT through Anti-DDoS is large. Together these result in low TCP throughput, which slows down the upload.

If you are less familiar with TCP, a few additional concepts are needed, which we cover and analyze in detail below.

First, TCP transmission does not mean that the sender sends one data packet, waits for the receiver to return an acknowledgement (ACK), and only then sends the next packet. Instead, the sender can send multiple packets at a time. However, once the amount of data sent but not yet acknowledged reaches a certain size, the sender must wait for ACKs before sending more. This amount is the unacknowledged data in flight.

In this case, the unacknowledged data in flight clearly stays very small, only about 1-2 MSS (the MSS is generally 1460 bytes, as described later in this section). So what determines the amount of unacknowledged data in flight? It is the minimum of the Congestion Window (CWND) and the Receive Window (RWND). The Receive Window is advertised by the peer along with each ACK, while the Congestion Window is adjusted dynamically by the sender based on link status, using congestion control and avoidance algorithms.
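The relationship above can be sketched in a few lines of Python. This is an illustration under assumed values, not a model of the customer's servers: the MSS of 1412 bytes comes from the capture discussed later, the Receive Window is assumed to stay at about 2 MSS, the Congestion Window is set arbitrarily large, and the RTTs are the approximate values measured later (35 ms and 8 ms).

```python
# Sketch: the in-flight limit is min(CWND, RWND), and a window-limited flow
# can deliver at most one window per round trip.
# Assumed values: MSS = 1412 bytes (from this capture), RWND pinned at 2 MSS,
# CWND chosen arbitrarily large; RTTs are the approximate values measured here.

MSS = 1412  # bytes


def in_flight_limit(cwnd: int, rwnd: int) -> int:
    """Maximum unacknowledged bytes the sender may have outstanding."""
    return min(cwnd, rwnd)


def max_throughput(window_bytes: int, rtt_s: float) -> float:
    """Upper bound in bytes per second: one full window per RTT."""
    return window_bytes / rtt_s


if __name__ == "__main__":
    # A large CWND does not help when the receiver only advertises ~2 MSS.
    window = in_flight_limit(cwnd=100 * MSS, rwnd=2 * MSS)
    for path, rtt in (("via Anti-DDoS", 0.035), ("direct to SLB", 0.008)):
        kib_per_s = max_throughput(window, rtt) / 1024
        print(f"{path}: window={window} B, at most {kib_per_s:.1f} KiB/s")
```

With these assumed numbers, the bound is roughly 79 KiB/s through Anti-DDoS and roughly 345 KiB/s direct to SLB, no matter how large CWND grows.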

The Congestion Window deserves a closer look, since it is adjusted dynamically according to link status. When the sender starts transmitting, it knows nothing about the state of the link, so the conservative approach is to start with a small Congestion Window. This is why TCP begins with slow start. But how small should this initial value be?

Here is the recommendation in the RFC:

And here is how Linux implements it:
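As a reference point, the initial-window formulas from RFC 3390 and RFC 6928 can be written as a short Python sketch. This is our own illustration of the RFC formulas, not the kernel's code. Recent Linux kernels start with an initial congestion window of 10 segments (the TCP_INIT_CWND constant), which corresponds to the RFC 6928 approach for typical MSS values.

```python
# Initial window (IW) formulas from RFC 3390 and RFC 6928, in bytes.

def iw_rfc3390(mss: int) -> int:
    # RFC 3390: IW = min(4*MSS, max(2*MSS, 4380 bytes))
    return min(4 * mss, max(2 * mss, 4380))


def iw_rfc6928(mss: int) -> int:
    # RFC 6928 (Experimental): IW = min(10*MSS, max(2*MSS, 14600 bytes))
    return min(10 * mss, max(2 * mss, 14600))


if __name__ == "__main__":
    # 1412 is the MSS negotiated in this case; 1460 is the common Ethernet value.
    for mss in (1412, 1460):
        print(f"MSS={mss}: RFC 3390 IW={iw_rfc3390(mss)} B, "
              f"RFC 6928 IW={iw_rfc6928(mss)} B")
```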

Then, as long as no packets are dropped on the link, the Congestion Window grows exponentially during slow start, roughly doubling every RTT.
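To see why a pinned window hurts so much, the following sketch counts the round trips needed to send one 605 KB upload in two idealized cases: a window that doubles every RTT under slow start, and a window that stays at 2 MSS. The assumptions are ours: MSS = 1412 bytes, an initial window of 10 segments, 605 KB taken as 605 × 1024 bytes, no loss, and no extra round trips for the TCP or TLS handshakes.

```python
# Sketch: round trips needed to send one upload when the window grows by
# slow start versus when it stays pinned by a small receive window.
# Assumptions: MSS = 1412 B, IW = 10 segments, payload = 605 KiB, no loss,
# and handshake/TLS round trips are ignored.

MSS = 1412
PAYLOAD = 605 * 1024


def rounds_slow_start(payload: int, mss: int, iw_segments: int = 10) -> int:
    sent, cwnd, rounds = 0, iw_segments * mss, 0
    while sent < payload:
        sent += cwnd  # one full window delivered per round trip
        cwnd *= 2     # slow start without loss: window doubles each RTT
        rounds += 1
    return rounds


def rounds_fixed_window(payload: int, window: int) -> int:
    return -(-payload // window)  # ceiling division


if __name__ == "__main__":
    normal = rounds_slow_start(PAYLOAD, MSS)
    pinned = rounds_fixed_window(PAYLOAD, 2 * MSS)
    for rtt_ms in (35, 8):
        print(f"RTT {rtt_ms} ms: slow start ~{normal * rtt_ms} ms, "
              f"2-MSS window ~{pinned * rtt_ms} ms")
```

Under these assumptions, slow start needs 6 round trips while a window pinned at 2 MSS needs 220. That is about 7.7 s at 35 ms and about 1.8 s at 8 ms, the same range as the measured 8 s and 2.3 s.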

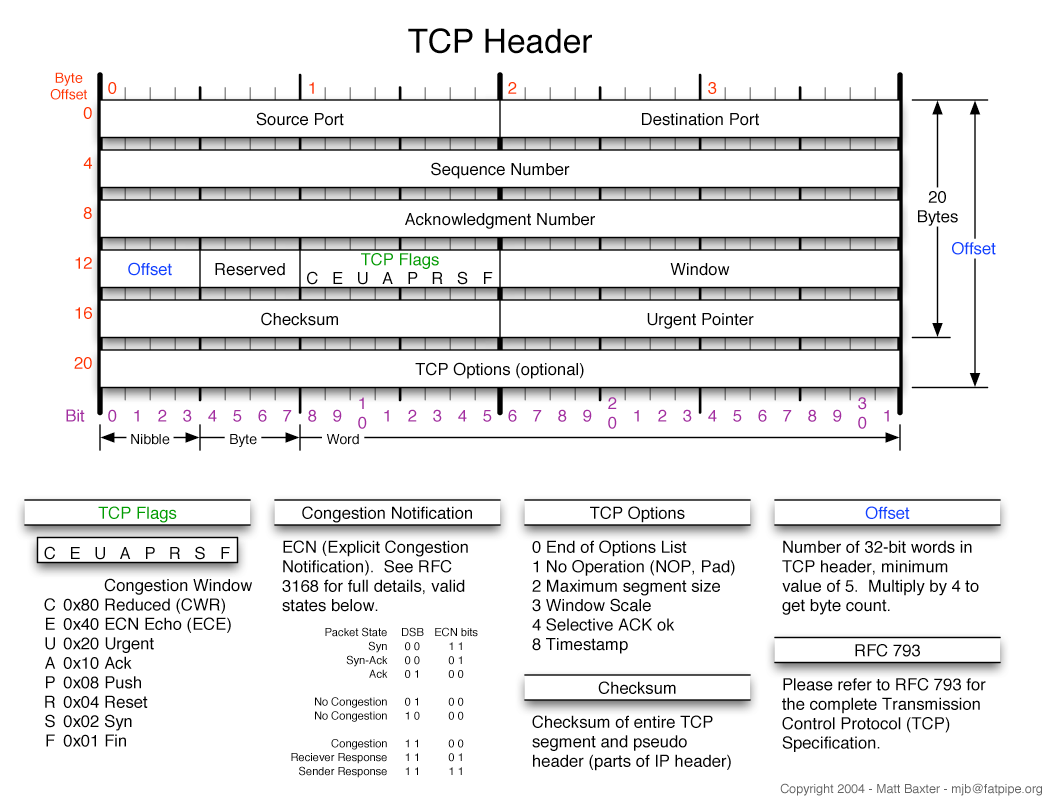

The TCP header contains a 16-bit Window field, so the window can range from 0 to 65535 bytes (2^16 - 1), or about 64 KB. This was considered an appropriate upper limit in early network environments. The TCP Window Scale option enlarges the window: for example, with a Window Scale of 5, the value in the Window field is multiplied by 32 (2^5).
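The scaling rule can be illustrated with a small Python sketch. It follows RFC 7323, which caps the shift count at 14 and requires a receiver to use 14 if a larger value arrives. Keep in mind that the Window field in SYN and SYN-ACK segments is never scaled, so the scaled value only applies after the handshake. The function name is ours, chosen for illustration.

```python
# Sketch: effective receive window = Window field << negotiated shift count.
# RFC 7323 caps the shift at 14; larger received values must be treated as 14.

MAX_SHIFT = 14


def effective_rwnd(window_field: int, shift: int) -> int:
    if not 0 <= window_field <= 0xFFFF:
        raise ValueError("the Window field is a 16-bit value")
    if shift < 0:
        raise ValueError("shift count cannot be negative")
    return window_field << min(shift, MAX_SHIFT)


if __name__ == "__main__":
    print(effective_rwnd(65535, 0))   # no scaling: the classic 64 KB ceiling
    print(effective_rwnd(1000, 5))    # Window Scale 5 multiplies by 32
    print(effective_rwnd(65535, 14))  # largest possible window, about 1 GiB
```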

The Receive Window is advertised by the peer along with each ACK. The Window field shown in Wireshark is the Receive Window, not the Congestion Window.

TCP is a full-duplex channel, and the Receive Window is directional. Each end advertises its own TCP Receive Window to the peer, and that window limits how efficiently the peer can send data to it. In this case, when the client uploads data to the server, the Receive Window advertised by the server limits the efficiency of data transmission from the client to the server.

In the capture above, each packet the client sends is 1466 bytes, the total length of the Layer-2 frame. This size depends on the MSS values that the client and server advertise to each other in the TCP Options during the three-way handshake. Here, the client advertises an MSS of 1460 bytes to the server, and the server advertises an MSS of 1412 bytes to the client, so data sent from the client to the server is limited to 1412-byte segments. The Layer-2 frame size of each full-sized client packet is therefore 1412 (payload) + 20 (TCP header) + 20 (IP header) + 14 (Ethernet header) = 1466 bytes.
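The frame-size arithmetic can be checked with a short Python sketch. It assumes IPv4 and TCP headers without options (20 bytes each) and a 14-byte Ethernet header with the frame check sequence excluded, as host-side captures usually show. The helper names are hypothetical.

```python
# Sketch: segment size the client may send, and the resulting Layer-2 frame.
# Assumptions: IPv4 and TCP headers without options (20 B each) and a 14-byte
# Ethernet header, with the FCS not included (as host-side captures usually show).

ETH_HDR, IPV4_HDR, TCP_HDR = 14, 20, 20


def send_mss(peer_advertised_mss: int, local_mss: int) -> int:
    # A sender must not exceed the MSS its peer advertised, nor its own limit.
    return min(peer_advertised_mss, local_mss)


def frame_len(payload: int) -> int:
    return payload + TCP_HDR + IPV4_HDR + ETH_HDR


if __name__ == "__main__":
    mss = send_mss(peer_advertised_mss=1412, local_mss=1460)  # values from the capture
    print(mss, frame_len(mss))
```

With the values from this capture, the client sends 1412-byte segments in 1466-byte frames. With a standard 1460-byte MSS, the same arithmetic gives the familiar 1514-byte frame.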

From all of the above analysis, the cause of the problem is that the TCP Receive Window on the server is small, which limits the unacknowledged data in flight to 1-2 MSS. It has nothing to do with Anti-DDoS or SLB themselves.

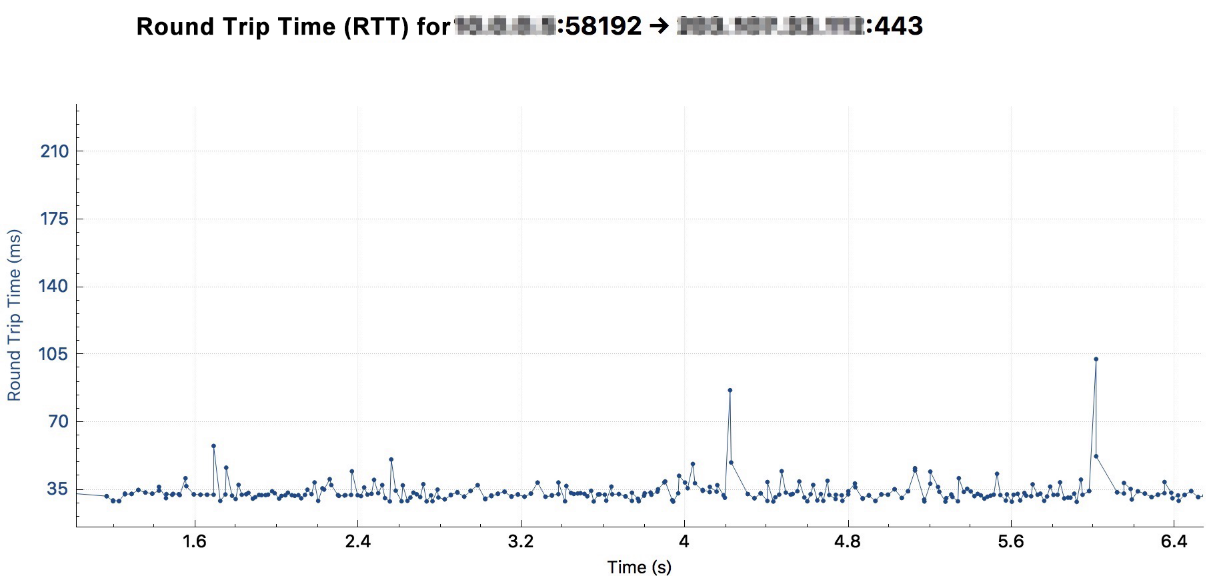

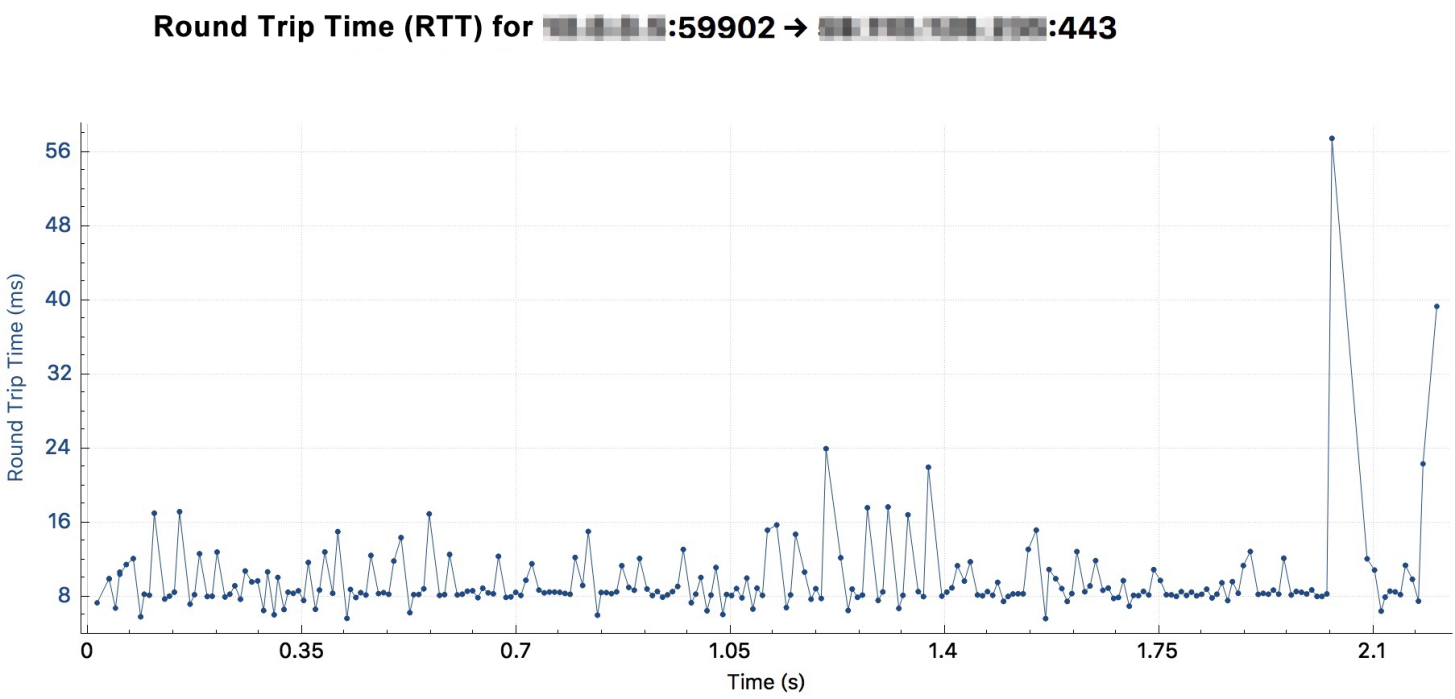

For uploads through Anti-DDoS, the server's TCP Receive Window stays small and the RTT through Anti-DDoS is relatively large, resulting in low TCP throughput. The test against the SLB reproduces the same small Receive Window. However, because the client and the SLB are in the same metropolitan area, the RTT is much smaller, and so is the total upload time. Because the Receive Window stays small, the upload time to both Anti-DDoS and SLB scales almost linearly with RTT. This would hardly happen in normal TCP transmission, where the window keeps growing and adjusting under congestion control.

The RTT from the client to the Anti-DDoS is shown in the following figure: about 35 milliseconds.

The RTT from the client to the SLB is shown in the following figure: about 8 milliseconds.
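The near-linear relationship can be checked against the two measurements with a simple model, upload time = fixed overhead + n × RTT. This Python sketch assumes the RTTs are exactly 35 ms and 8 ms and that both paths need the same number of round trips n. With only two data points, this is an exact solve rather than a statistical fit.

```python
# Sketch: fit upload_time = fixed + n * RTT through the two measurements.
# Assumptions: RTTs are exactly 35 ms and 8 ms, and both paths need the same
# number of round trips n (the window is pinned, so it should not depend on RTT).

def fit_fixed_and_rounds(t_a: float, rtt_a: float, t_b: float, rtt_b: float):
    n = (t_a - t_b) / (rtt_a - rtt_b)  # round trips per upload
    fixed = t_b - n * rtt_b            # time not explained by round trips
    return n, fixed


if __name__ == "__main__":
    n, fixed = fit_fixed_and_rounds(8.067, 0.035, 2.283, 0.008)
    print(f"~{n:.0f} round trips per upload, ~{fixed:.2f} s fixed overhead")
```

The result is about 214 round trips and about 0.57 s of time that the model does not attribute to round trips. 214 is close to the roughly 220 round trips that 605 KB (605 × 1024 bytes) would need at 2 MSS (2824 bytes) per round trip.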

In this section, we discuss solutions to the problems found above. First, let's look in more detail at the factors that affect the TCP Receive Window.

In Linux, the size of the TCP Receive Window depends on the size of the TCP receive buffer. By default, the kernel adjusts the receive buffer dynamically according to the available system memory and the kernel parameter net.ipv4.tcp_rmem. This parameter was introduced in Linux 2.4 and takes three values: min, default, and max. At the system level, the relevant parameters are:

net.ipv4.tcp_rmem / net.core.rmem_max / net.ipv4.tcp_adv_win_scale

These parameters are defined as follows:

The following is the default setting for an ECS instance with kernel version 3.10.0 and 8 GB memory:

```shell
$ sysctl -a | grep tcp_rmem
net.ipv4.tcp_rmem = 4096 87380 6291456
```

Note that the size of the TCP receive buffer is not equal to the size of the TCP Receive Window: a share of bytes/2^tcp_adv_win_scale of the buffer is allocated to the application. If net.ipv4.tcp_adv_win_scale is 2, 1/4 of the TCP receive buffer is allocated to the application, and TCP uses the remaining 3/4 for the TCP Receive Window.
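The split can be expressed as a small Python sketch that follows the kernel documentation for net.ipv4.tcp_adv_win_scale: overhead is bytes/2^scale for positive values, and bytes - bytes/2^(-scale) otherwise. The function name is ours. Recent kernels have deprecated this sysctl, so the sketch applies to older kernels such as the 3.10 kernel in this case.

```python
# Sketch of how older Linux kernels split the receive buffer between the
# advertised window and overhead, following the net.ipv4.tcp_adv_win_scale
# description (overhead = space / 2^scale for positive values).

def window_from_space(space: int, adv_win_scale: int) -> int:
    if adv_win_scale <= 0:
        return space >> -adv_win_scale        # window = space / 2^|scale|
    return space - (space >> adv_win_scale)  # window = space - space / 2^scale


if __name__ == "__main__":
    default_rmem = 87380  # middle (default) value of net.ipv4.tcp_rmem shown above
    for scale in (2, 1):
        print(f"tcp_adv_win_scale={scale}: "
              f"window {window_from_space(default_rmem, scale)} B")
```

With tcp_adv_win_scale set to 2, the default buffer of 87380 bytes yields a window of exactly 65535 bytes, the largest unscaled window, which is presumably why that default was chosen. With a value of 1, the same buffer yields 43690 bytes.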

Next, let's look at the process-level settings. A process can call setsockopt() with the SO_RCVBUF option to set the size of a socket's TCP receive buffer manually. For example, NGINX can set this through the rcvbuf=size parameter of the listen directive.
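A minimal Python sketch of the process-level setting, assuming Linux. open_listener is a hypothetical helper, not part of any library. Per the socket(7) man page, Linux doubles the value passed to SO_RCVBUF to leave room for bookkeeping overhead, and setting it explicitly stops the kernel from auto-tuning that socket's receive buffer. tcp(7) also notes that the buffer size must be set before listen() or connect() to take effect. A small value set this way on a listening socket therefore caps the Receive Window of the connections it accepts.

```python
import socket
from typing import Optional


def open_listener(host: str, port: int, rcvbuf: Optional[int] = None) -> socket.socket:
    """Hypothetical helper: TCP listener with an optional fixed SO_RCVBUF."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    if rcvbuf is not None:
        # Set before listen(): accepted connections inherit the listener's
        # buffer size, and the window scale is chosen during the handshake.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, rcvbuf)
    sock.bind((host, port))
    sock.listen()
    return sock


if __name__ == "__main__":
    for requested in (None, 4096):
        listener = open_listener("127.0.0.1", 0, requested)  # port 0: any free port
        actual = listener.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
        print(f"requested={requested}, SO_RCVBUF reported={actual}")
        listener.close()
```

Leaving rcvbuf unset keeps kernel auto-tuning in effect. This is usually the safer default unless there is a measured reason to pin the buffer.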

As discussed earlier, a TCP Receive Window larger than 64 KB requires the TCP Window Scale option. The corresponding kernel parameter is net.ipv4.tcp_window_scaling, which is enabled by default. In this case, however, net.ipv4.tcp_window_scaling was clearly not the cause, because the TCP Receive Window stayed far below 64 KB.

Overall, checking the relevant kernel parameters turned up no problem. In the end, the cause was that the rcvbuf value on the web server was set too small. After this parameter was adjusted, the upload speed improved significantly.
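When choosing a new rcvbuf value, a back-of-envelope estimate can help: the Receive Window must cover the bandwidth-delay product (target rate × RTT), and on kernels that use tcp_adv_win_scale, the buffer must be larger than the window by the overhead share. The Python sketch below is a rough illustration only. The 1 MB/s target is a hypothetical example, and the sketch ignores the kernel's doubling of SO_RCVBUF and other implementation details.

```python
# Back-of-envelope sizing: the receive window must cover the bandwidth-delay
# product, and (on kernels using tcp_adv_win_scale) the buffer must be larger
# than the window by the overhead share. Ignores SO_RCVBUF doubling and other
# kernel details; the target rate below is a hypothetical example.

def required_window(rate_bytes_per_s: int, rtt_ms: int) -> int:
    return -(-rate_bytes_per_s * rtt_ms // 1000)  # ceil(rate * RTT)


def required_buffer(window: int, adv_win_scale: int) -> int:
    # Inverts window = space - space / 2^scale for positive scale values.
    if adv_win_scale <= 0:
        raise ValueError("this sketch only handles positive tcp_adv_win_scale")
    factor = 2 ** adv_win_scale
    return -(-window * factor // (factor - 1))  # ceil(window * 2^s / (2^s - 1))


if __name__ == "__main__":
    target = 1_000_000  # hypothetical goal: about 1 MB/s per upload
    for rtt_ms in (35, 8):
        win = required_window(target, rtt_ms)
        print(f"RTT {rtt_ms} ms: window >= {win} B, "
              f"buffer >= {required_buffer(win, 2)} B")
```

Even this modest 1 MB/s target needs a window of about 35 KB at a 35 ms RTT. That is more than ten times the 1-2 MSS observed in the capture.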

More Posts by William Pan