By SIG for High Performance Networking

Shared Memory Communication over RDMA (SMC-R) is a kernel network protocol based on RDMA technology and compatible with the socket interface. It was proposed by IBM and contributed to the Linux kernel in 2017. SMC-R helps TCP network applications transparently use RDMA for high-bandwidth, low-latency network communication. Alibaba Cloud Linux 3 and the OpenAnolis community's Anolis 8 operating systems, combined with Elastic RDMA (eRDMA) on the fourth-generation SHENLONG (X-Dragon) architecture, brought SMC-R to cloud scenarios for the first time, giving cloud applications better network performance. For more information, see Alibaba Cloud Releases Fourth Generation X-Dragon Architecture; SMC-R Improves Network Performance by 20%.

Because RDMA is widely used in data centers, the OpenAnolis high-performance network SIG believes that SMC-R will become one of the important directions for the next generation of data center kernel protocol stacks. To this end, we have made many optimizations and contributed them back to the upstream Linux community. Currently, the OpenAnolis high-performance network SIG is the largest SMC-R code contributor besides IBM. You are welcome to join the community and communicate with us.

As the first article in a series, this article explores SMC-R from a macro perspective.

The name Shared Memory Communication over RDMA reveals a major feature of the SMC-R network protocol: it is based on RDMA. Therefore, before introducing SMC-R, let's look at Remote Direct Memory Access (RDMA).

With the development of data centers, distributed systems, and high-performance computing, network device performance has improved substantially. The bandwidth of mainstream physical networks has reached 25-100 Gb/s, and network latency has dropped to around ten microseconds.

This improvement exposes a problem: network performance and CPU computing power no longer match. In traditional networks, the CPU is responsible for encapsulating and parsing network messages and for moving data between user mode and kernel mode. Faced with high-speed network bandwidth, it is under increasing pressure and struggles to keep up.

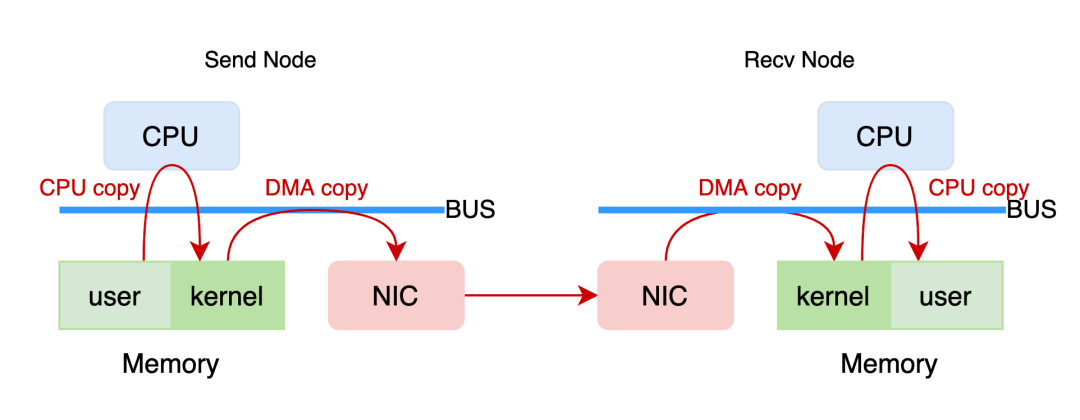

Let's take a data send and receive process in a TCP/IP network as an example. First, the send node CPU copies the data from the user state memory to the kernel state memory and completes the packet encapsulation in the kernel state protocol stack. Then, the DMA controller transfers the encapsulated data packet to the NIC and sends it to the peer end. The receiver NIC obtains the packet and carries it to the kernel memory through the DMA controller, which is parsed by the kernel protocol stack. After stripping the frame header or packet header layer by layer, the CPU copies the payload to the user memory to complete data transmission.

Figure: Traditional TCP/IP Network Transmission Model

In this process, the CPU is responsible for:

1) Copying data between user mode and kernel mode

2) Encapsulating and parsing network messages
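The following toy model makes these two CPU duties concrete. It is a simplified sketch and not kernel code. The fixed 54-byte header stands in for Ethernet, IPv4, and TCP headers without options. The names `tcp_send`, `tcp_recv`, and `cpu_bytes_copied` are hypothetical. DMA between kernel memory and the NIC is deliberately not counted as CPU work.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical fixed header size standing in for Ethernet (14) +
 * IPv4 (20) + TCP (20) headers without options. */
#define HDR_LEN 54

/* Bytes the CPU has moved with memcpy() in this model. */
static size_t cpu_bytes_copied;

static void cpu_copy(void *dst, const void *src, size_t n)
{
    memcpy(dst, src, n);
    cpu_bytes_copied += n;
}

/* Sender: build a (dummy) header, then copy the payload from the user
 * buffer into the kernel buffer. Returns the frame length that would
 * be handed to the NIC by DMA. */
static size_t tcp_send(uint8_t *kbuf, const uint8_t *ubuf, size_t len)
{
    memset(kbuf, 0, HDR_LEN);            /* encapsulation done by the CPU */
    cpu_copy(kbuf + HDR_LEN, ubuf, len); /* copy 1: user -> kernel */
    return HDR_LEN + len;
}

/* Receiver: strip the header, then copy the payload from the kernel
 * buffer into the user buffer. Returns the payload length. */
static size_t tcp_recv(uint8_t *ubuf, const uint8_t *kbuf, size_t frame_len)
{
    assert(frame_len >= HDR_LEN);            /* parsing done by the CPU */
    size_t payload = frame_len - HDR_LEN;
    cpu_copy(ubuf, kbuf + HDR_LEN, payload); /* copy 2: kernel -> user */
    return payload;
}
```

Sending 1,000 bytes through this model charges the CPU with 2,000 copied bytes, one copy on each side. This comes on top of building and stripping the headers. The transfer between the two kernel buffers, which real hardware performs by DMA, is left to the caller.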

This low-level, repetitive work consumes substantial CPU resources (for example, driving a 100 Gb/s NIC at full bandwidth requires multiple CPU cores). In data-intensive scenarios, this leaves the CPU unable to devote its computing power to the application.
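A back-of-the-envelope calculation shows why a 100 Gb/s NIC can keep several cores busy. The sketch assumes every frame is exactly `frame_bytes` long. It ignores preamble, inter-frame gap, and header overhead. The function names are illustrative only.

```c
#include <assert.h>

/* Packets per second needed to saturate a link of `gbps` Gb/s with
 * frames of `frame_bytes` bytes; framing overhead (preamble,
 * inter-frame gap) is ignored. */
static double packets_per_second(double gbps, double frame_bytes)
{
    assert(frame_bytes > 0);
    return gbps * 1e9 / (frame_bytes * 8.0);
}

/* Time available per packet, in nanoseconds, if a single core had to
 * handle every packet at that rate. */
static double ns_per_packet(double gbps, double frame_bytes)
{
    return 1e9 / packets_per_second(gbps, frame_bytes);
}
```

With 1500-byte frames, `packets_per_second(100, 1500)` is about 8.33 million and `ns_per_packet(100, 1500)` is 120 ns. A single core would therefore have roughly 120 ns to copy, encapsulate, or parse each packet. Even 9000-byte jumbo frames only stretch that budget to 720 ns.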

Therefore, solving the mismatch between network performance and CPU computing power has become the key to developing high-performance networks. Moore's Law is slowing, and CPU performance is unlikely to improve quickly in the short term. As a result, offloading network data processing from the CPU to hardware has become the mainstream solution. This has brought RDMA, once confined to specialized high-performance fields, into more general scenarios.

After more than 20 years of development, Remote Direct Memory Access (RDMA) has become an important component of high-performance networks. How does RDMA transmit data?

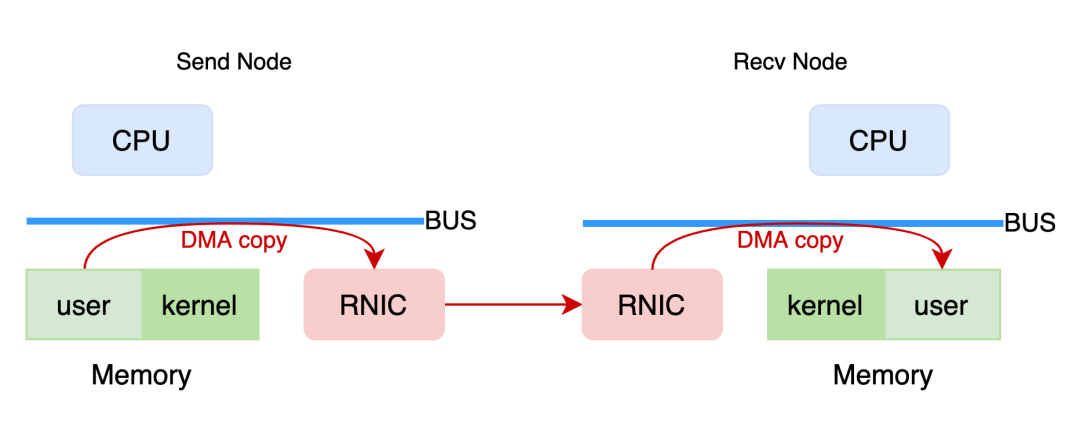

Figure: User State RDMA Network Transmission Model

In a user-mode RDMA network, an RDMA-capable network interface controller (RNIC) obtains data from the sender's user-mode memory, encapsulates it inside the NIC, and transmits it to the receiver. The receiver's RNIC then parses and strips the packet and places the payload into user-mode memory, completing the data transmission.

In this process, the CPU hardly participates in data transmission beyond the necessary control plane functions. Data is written into the remote node's memory through the RNIC. Compared with traditional networks, RDMA frees the CPU from network transmission, making data transfer as convenient and fast as directly accessing remote memory.

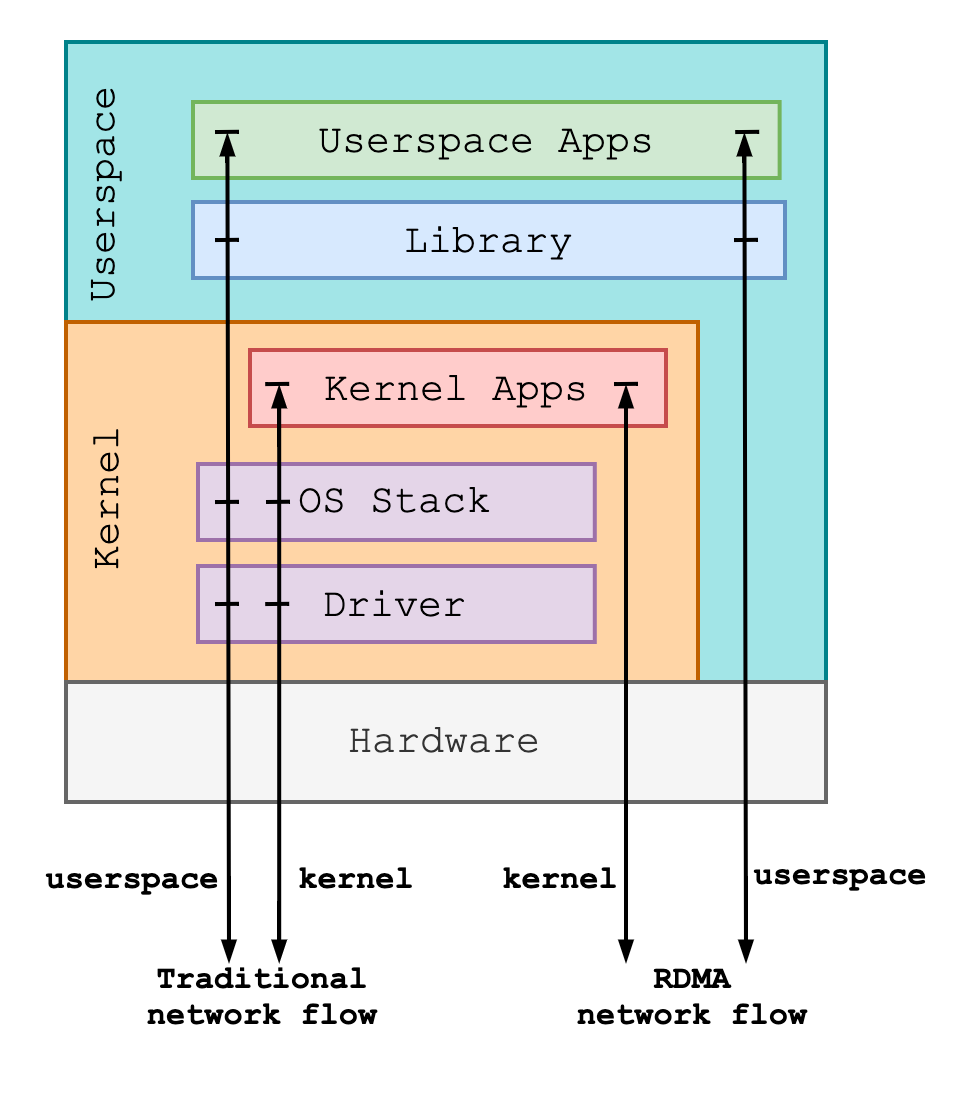

Figure: Comparison of Protocol Stacks between Traditional and RDMA Networks

Compared with traditional network protocols, the RDMA network protocol has the following three features:

1. Bypass Software Protocol Stack

RDMA networks rely on the RNIC to encapsulate and parse packets inside the NIC, bypassing the software protocol stack involved in network transmission. For user-mode applications, the RDMA data path bypasses the entire kernel. For kernel applications, it bypasses part of the kernel protocol stack. By bypassing the software protocol stack and offloading data processing to hardware, RDMA reduces network latency.

2. CPU Offload

In an RDMA network, the CPU is responsible only for the control plane. On the data path, the RNIC's DMA engine copies the payload between the application buffer and the NIC buffer. This requires that the application buffer has been registered in advance and that the NIC has been authorized to access it. The CPU no longer needs to move data, which reduces CPU utilization during network transmission.

3. Direct Memory Access

In RDMA networks, once the RNIC has been granted access to remote memory, it can read data from or write data to that memory without involving the remote node's CPU. This makes it well suited to large data transfers.
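The access rules above can be illustrated with a toy model: memory registered in advance, an access key, and no remote CPU on the data path. This is not the ibverbs API. In real code, memory is registered with `ibv_reg_mr()` using access flags such as `IBV_ACCESS_REMOTE_WRITE`, and the peer needs the buffer address and the region's `rkey`. Here, `struct mem_region` and `rnic_rdma_write()` are hypothetical names, an offset replaces the remote virtual address, and `memcpy()` stands in for the RNIC's DMA.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Toy registered memory region (hypothetical, not struct ibv_mr). */
struct mem_region {
    uint8_t *base;     /* start of the registered buffer */
    size_t len;        /* registered length in bytes */
    uint32_t rkey;     /* key a remote peer must present */
    bool remote_write; /* remote write access granted at registration */
};

/* Models the RNIC executing a one-sided RDMA WRITE into a peer's region.
 * No code runs on the target CPU: the "NIC" validates the key and the
 * bounds, then copies. Returns 0 on success, -1 on an access error. */
static int rnic_rdma_write(struct mem_region *target, uint32_t rkey,
                           size_t offset, const void *src, size_t len)
{
    assert(target != NULL && src != NULL);
    if (!target->remote_write || rkey != target->rkey)
        return -1; /* not authorized */
    if (offset > target->len || len > target->len - offset)
        return -1; /* outside the registered range */
    memcpy(target->base + offset, src, len); /* stands in for NIC DMA */
    return 0;
}
```

The target side never runs `rnic_rdma_write()` itself. It only granted access once, at registration time, which is what keeps the remote CPU out of the data path. The `-1` return plays the role of the error completion a real RNIC would report.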

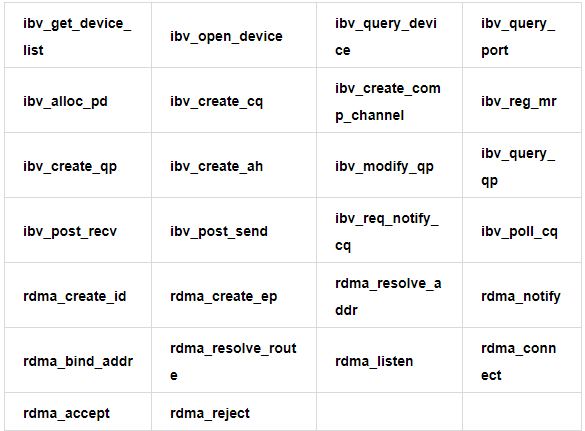

Through the preceding introduction, we have a preliminary understanding of the main features and performance advantages of RDMA. RDMA technology can bring gratifying network performance improvements, but it is difficult to use RDMA to transparently improve the network performance of existing TCP applications. This is because the use of RDMA networks relies on a series of new semantic interfaces, including ibverbs and rdmacm interface (hereinafter referred to as verbs interface).

Part of ibverbs and rdmacm interface [1]

Compared with the traditional POSIX socket interface, the verbs interfaces are more numerous and closer to hardware semantics. Existing TCP network applications built on POSIX sockets would need extensive, costly modifications to enjoy the performance benefits of RDMA.

Ideally, applications would keep using the socket interface while running over an RDMA network, so that existing socket applications could transparently enjoy RDMA services. To meet this need, the industry has proposed the following two solutions:

The first is libvma, a user-mode solution. libvma uses LD_PRELOAD to redirect all socket calls to its own implementation, which calls the verbs interfaces to send and receive data. However, because it is implemented in user mode, libvma lacks unified kernel resource management and has poor compatibility with the socket interface.

The second is SMC-R, a kernel-mode solution. As a kernel protocol stack, SMC-R is more compatible with TCP applications than user-mode solutions, and this 100% compatibility means low adoption and reuse costs. In addition, because SMC-R is implemented in the kernel, the RDMA resources in its protocol stack can be shared by different user-mode processes. This improves resource utilization and reduces the overhead of frequently allocating and releasing resources. However, full compatibility with the socket interface comes at the cost of peak RDMA performance. (User-mode RDMA programs can bypass the kernel and achieve zero-copy on the data path, but SMC-R cannot achieve zero-copy because it must stay compatible with the socket interface.) In exchange, SMC-R offers compatibility, ease of use, and transparent performance improvements over the TCP protocol stack. In the future, we also plan to extend the interface and bring zero-copy to SMC-R at the expense of some compatibility.

SMC-R is an open socket over RDMA protocol that provides transparent exploitation of RDMA (for TCP-based applications) while preserving key functions and qualities of service from the TCP/IP ecosystem that enterprise level servers/networks depend on.

From: https://www.openfabrics.org/images/eventpresos/workshops2014/IBUG/presos/Thursday/PDF/05_SMC-R_Update.pdf

SMC-R is a kernel protocol stack that runs in parallel with TCP/IP and exposes the socket interface to the layers above. Underneath, it uses RDMA to implement shared memory communication. Its design goal is to provide transparent RDMA services to TCP applications while retaining the key functions of the TCP/IP ecosystem.

Therefore, SMC-R defines a new network protocol family, AF_SMC, in the kernel, whose proto_ops behave the same as TCP's:

```c
/* must look like tcp */
static const struct proto_ops smc_sock_ops = {
	.family		= PF_SMC,
	.owner		= THIS_MODULE,
	.release	= smc_release,
	.bind		= smc_bind,
	.connect	= smc_connect,
	.socketpair	= sock_no_socketpair,
	.accept		= smc_accept,
	.getname	= smc_getname,
	.poll		= smc_poll,
	.ioctl		= smc_ioctl,
	.listen		= smc_listen,
	.shutdown	= smc_shutdown,
	.setsockopt	= smc_setsockopt,
	.getsockopt	= smc_getsockopt,
	.sendmsg	= smc_sendmsg,
	.recvmsg	= smc_recvmsg,
	.mmap		= sock_no_mmap,
	.sendpage	= smc_sendpage,
	.splice_read	= smc_splice_read,
};
```

Because the SMC-R socket interface behaves the same as TCP's, SMC-R is simple to use. In general, there are two methods:

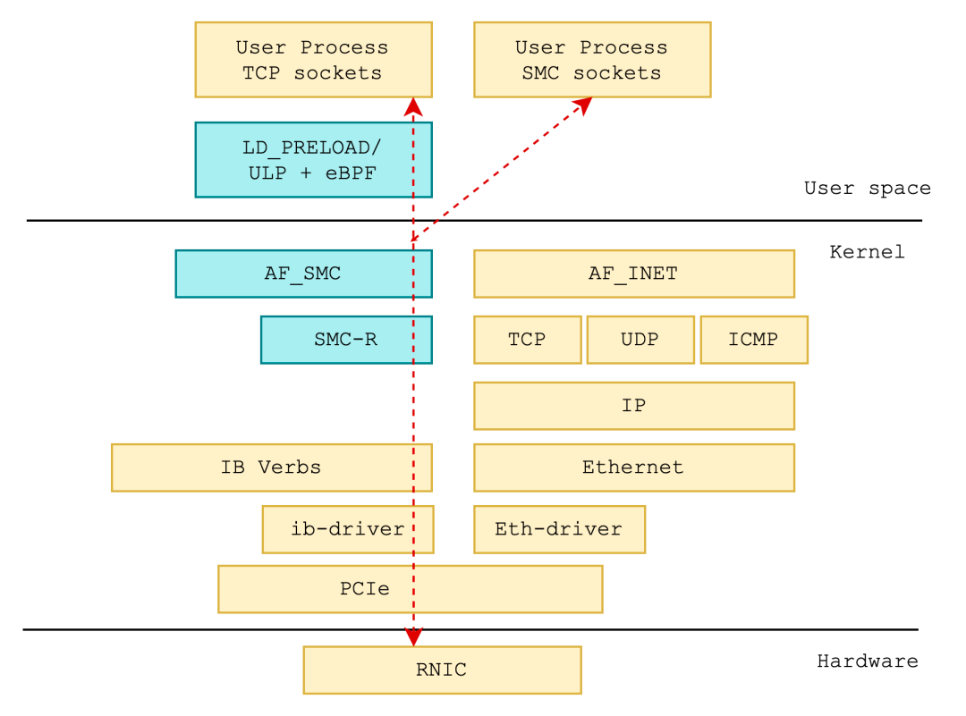

Figure: The Usage of SMC-R

First, develop applications directly with the SMC-R protocol family AF_SMC. Traffic on any socket created with AF_SMC enters the SMC-R protocol stack.
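For the first method, here is a minimal sketch of opening an AF_SMC socket. `AF_SMC` is 43 on Linux. The protocol values `SMCPROTO_SMC` (0, IPv4) and `SMCPROTO_SMC6` (1, IPv6) are defined locally in case no system header provides them. `open_stream_socket()` is a hypothetical helper. Its fallback to a plain TCP socket covers only kernels without SMC support. It is separate from SMC-R's own protocol-level fallback to TCP.

```c
#include <assert.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h> /* close(), for callers */

#ifndef AF_SMC
#define AF_SMC 43 /* PF_SMC on Linux */
#endif
#ifndef SMCPROTO_SMC
#define SMCPROTO_SMC  0 /* SMC over IPv4 */
#define SMCPROTO_SMC6 1 /* SMC over IPv6 */
#endif

/* Try an AF_SMC stream socket first; if the kernel has no SMC support
 * (for example, the smc module is unavailable), fall back to plain TCP.
 * *is_smc reports which kind of socket was returned. */
static int open_stream_socket(int *is_smc)
{
    assert(is_smc != NULL);
    int fd = socket(AF_SMC, SOCK_STREAM, SMCPROTO_SMC);
    *is_smc = fd >= 0;
    if (fd < 0)
        fd = socket(AF_INET, SOCK_STREAM, IPPROTO_TCP);
    return fd;
}
```

Once created, the AF_SMC socket is used exactly like a TCP socket. `bind()` and `connect()` take an ordinary `struct sockaddr_in`, and `send()` and `recv()` are unchanged.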

Second, transparently replace the protocol stack: the TCP sockets created by an application are replaced with SMC sockets without the application noticing. This can be done in either of the following two ways:

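1) LD_PRELOAD + custom socket(): preload a shared library that overrides socket(). The wrapper turns eligible TCP sockets into AF_SMC sockets and then calls the real socket(). The dlopen()-based setup at the top is a simplified assumption added so that the excerpt compiles. It is not the exact smc-tools code.

```c
#include <dlfcn.h>
#include <errno.h>
#include <netinet/in.h>
#include <stdio.h>
#include <sys/socket.h>

#ifndef AF_SMC
#define AF_SMC 43
#endif
#define SMCPROTO_SMC	0	/* SMC over IPv4 */
#define SMCPROTO_SMC6	1	/* SMC over IPv6 */

/* Simplified setup (assumption): resolve libc's real socket(). */
static void *dl_handle;
static int (*orig_socket)(int domain, int type, int protocol);

static void initialize(void)
{
	dl_handle = dlopen("libc.so.6", RTLD_LAZY);
	if (dl_handle)
		orig_socket = (int (*)(int, int, int))dlsym(dl_handle, "socket");
}

/* hypothetical debug helper; prints unconditionally here */
#define dbg_msg(...) fprintf(__VA_ARGS__)

int socket(int domain, int type, int protocol)
{
	int rc;

	if (!dl_handle)
		initialize();
	if (!orig_socket) {
		errno = ENOSYS;	/* real socket() could not be resolved */
		return -1;
	}

	/* check if socket is eligible for AF_SMC */
	if ((domain == AF_INET || domain == AF_INET6) &&
	    /* see kernel code, include/linux/net.h, SOCK_TYPE_MASK */
	    (type & 0xf) == SOCK_STREAM &&
	    (protocol == IPPROTO_IP || protocol == IPPROTO_TCP)) {
		dbg_msg(stderr, "libsmc-preload: map sock to AF_SMC\n");
		if (domain == AF_INET)
			protocol = SMCPROTO_SMC;
		else /* AF_INET6 */
			protocol = SMCPROTO_SMC6;
		domain = AF_SMC;
	}

	rc = (*orig_socket)(domain, type, protocol);

	return rc;
}
```

The smc_run command in the open-source user-mode toolset smc-tools implements this approach [2].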

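2) ULP + eBPF: SMC registers a TCP upper layer protocol (ULP) named "smc". When this ULP is set on a TCP socket, smc_ulp_init() checks that the socket is an unconnected TCP socket and allocates an SMC socket to replace it (excerpt):

```c
static int smc_ulp_init(struct sock *sk)
{
	struct socket *tcp = sk->sk_socket;
	struct net *net = sock_net(sk);
	struct socket *smcsock;
	int protocol, ret;

	/* only TCP can be replaced */
	if (tcp->type != SOCK_STREAM || sk->sk_protocol != IPPROTO_TCP ||
	    (sk->sk_family != AF_INET && sk->sk_family != AF_INET6))
		return -ESOCKTNOSUPPORT;

	/* don't handle wq now */
	if (tcp->state != SS_UNCONNECTED || !tcp->file || tcp->wq.fasync_list)
		return -ENOTCONN;

	if (sk->sk_family == AF_INET)
		protocol = SMCPROTO_SMC;
	else
		protocol = SMCPROTO_SMC6;

	smcsock = sock_alloc();
	if (!smcsock)
		return -ENFILE;

	/* ... */
}
```

An eBPF program can then set this ULP on selected connections, for example at the cgroup/connect4 hook. In cgroup connect hooks, returning 1 lets connect() proceed and returning 0 rejects it. The program therefore returns 1 on every path so that connections it does not convert still go through:

```c
#include <linux/bpf.h>
#include <bpf/bpf_helpers.h>

#ifndef SOL_TCP
#define SOL_TCP 6	/* same value as IPPROTO_TCP */
#endif
#ifndef TCP_ULP
#define TCP_ULP 31	/* from include/uapi/linux/tcp.h */
#endif

SEC("cgroup/connect4")
int replace_to_smc(struct bpf_sock_addr *addr)
{
	int pid = bpf_get_current_pid_tgid() >> 32;
	long ret;

	/* user-defined rules/filters, such as pid, tcp src/dst address, etc. */
	if (pid != DESIRED_PID)	/* DESIRED_PID: placeholder for the target process */
		return 1;	/* allow connect() unchanged */

	/* ... */

	ret = bpf_setsockopt(addr, SOL_TCP, TCP_ULP, "smc", sizeof("smc"));
	if (ret) {
		bpf_printk("replace TCP with SMC error: %ld\n", ret);
		return 1;	/* keep the connection on TCP */
	}

	return 1;
}
```

Based on the preceding introduction, the transparent use of RDMA services by TCP applications is reflected in the following two aspects: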

| Goal | Implementation |
| --- | --- |
| Transparently replace the TCP protocol stack with the SMC-R protocol stack | With LD_PRELOAD plus a custom socket(), or with ULP plus eBPF, TCP applications can run on the SMC-R protocol stack without code modification. |
| Transparently use RDMA through the SMC-R protocol stack | The proto_ops of the SMC-R protocol stack conform to TCP behavior, and the stack uses the RDMA network for data transmission internally, so applications use RDMA services without being aware of it. |

Figure: SMC-R Architecture

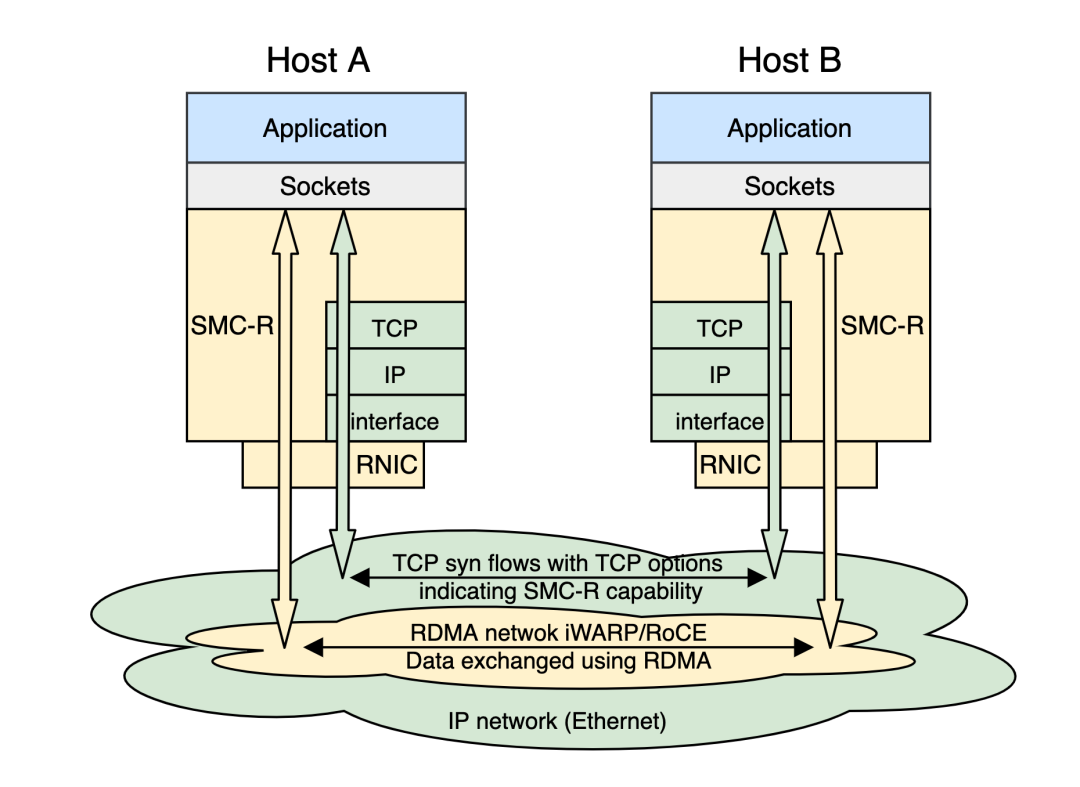

The SMC-R protocol stack sits below the socket layer and above the RDMA kernel verbs layer. It is a hybrid kernel network protocol stack, in which RDMA flows and TCP flows coexist:

SMC-R uses the RDMA network to transfer the data of user-mode applications, so applications can transparently enjoy the performance benefits of RDMA, as shown in the yellow part of the preceding figure.

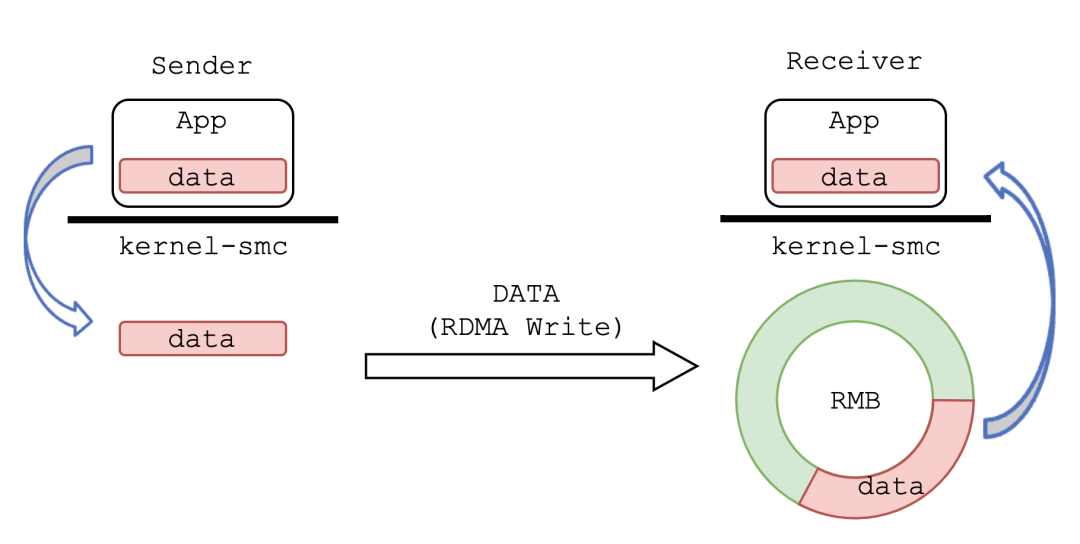

The data traffic of the sender application program comes from the application buffer to the kernel memory space through the socket interface. Then, it is written into a kernel state ringbuf (remote memory buffer or RMB) of the remote node through the RDMA network. Finally, the remote node SMC-R protocol stack copies the data from RMB to the receiver application buffer.

Figure: SMC-R Shared Memory Communication
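The RMB behaves like a ring buffer that the sender fills by RDMA writes and the receiver drains by copying. The sketch below models this with two monotonically increasing cursors, under several simplifying assumptions. The 16-byte size and the names `struct rmb`, `rmb_rdma_write()`, and `rmb_recv()` are hypothetical. Byte-by-byte copies stand in for the RNIC. The sender's copy from the application into its own kernel memory is omitted. In real SMC-R, the two ends exchange cursor updates through CDC messages; here they are reduced to shared fields.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define RMB_SIZE 16 /* tiny ring, for illustration only */

/* Toy RMB: a ring buffer in the receiver's memory. `prod` and `cons`
 * only grow; their difference is the number of unread bytes. */
struct rmb {
    uint8_t data[RMB_SIZE];
    size_t prod; /* bytes written so far by the remote sender */
    size_t cons; /* bytes consumed so far by the local receiver */
};

static size_t rmb_free(const struct rmb *r)
{
    assert(r->prod - r->cons <= RMB_SIZE);
    return RMB_SIZE - (r->prod - r->cons);
}

/* Sender side: "RDMA write" as many bytes as fit, wrapping around the
 * end of the ring. Returns the number of bytes written. */
static size_t rmb_rdma_write(struct rmb *r, const uint8_t *src, size_t len)
{
    size_t n = len < rmb_free(r) ? len : rmb_free(r);
    for (size_t i = 0; i < n; i++)
        r->data[(r->prod + i) % RMB_SIZE] = src[i];
    r->prod += n;
    return n;
}

/* Receiver side: the SMC-R stack copies unread bytes from the RMB into
 * the application buffer. Returns the number of bytes copied. */
static size_t rmb_recv(struct rmb *r, uint8_t *dst, size_t len)
{
    size_t avail = r->prod - r->cons;
    size_t n = len < avail ? len : avail;
    for (size_t i = 0; i < n; i++)
        dst[i] = r->data[(r->cons + i) % RMB_SIZE];
    r->cons += n;
    return n;
}
```

The sender may only write into free space, so the receiver's consumer cursor acts as flow control. Once the RMB is full, `rmb_rdma_write()` accepts no more data until the receiving application reads some.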

The "shared memory communication" in the name SMC-R refers to communication through the remote node's RMB. Traditional shared memory communication happens within a single host. SMC-R instead places the two communicating ends on separate nodes and uses RDMA to communicate through remote shared memory.

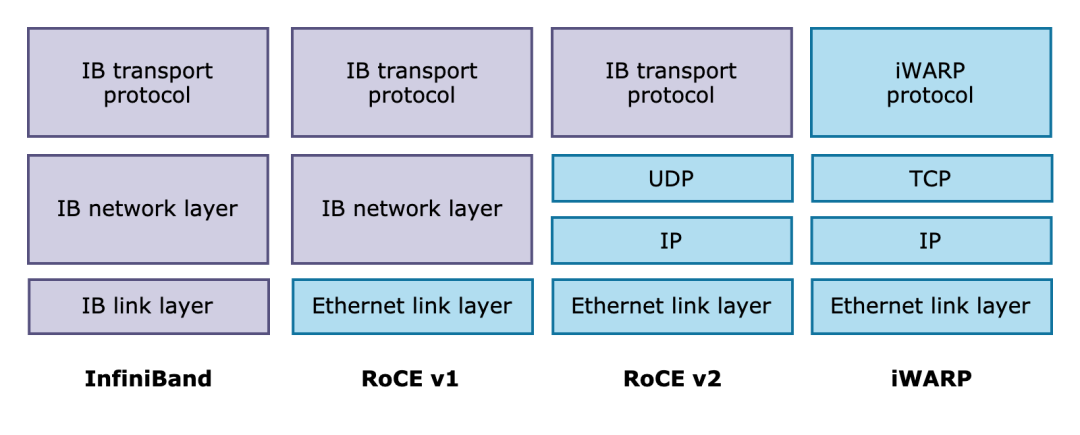

Figure: Mainstream RDMA Implementation

Currently, there are three mainstream RDMA implementations: InfiniBand, RoCE, and iWARP. Among them, RoCE strikes a balance between performance and cost. It runs RDMA over Ethernet, which delivers good network performance while reducing network construction costs, so it is popular with enterprises. The upstream Linux SMC-R implementation also uses RoCE v1 and v2 as its RDMA transport.

iWARP implements RDMA on top of TCP and thus removes the strict lossless-network requirement of the other two. It scales better and suits cloud scenarios. Alibaba Cloud Elastic RDMA (eRDMA) brings RDMA to the cloud based on iWARP. SMC-R in Alibaba Cloud Linux 3 and in Anolis 8, the OpenAnolis open-source OS, supports eRDMA (iWARP), enabling cloud users to use RDMA networks transparently.

In addition to the RDMA flow, SMC-R pairs each SMC-R connection with a TCP connection, and the two share the same lifecycle. The TCP flow has the following responsibilities in the SMC-R protocol stack:

1) Dynamic Discovery of Peer SMC-R Capabilities

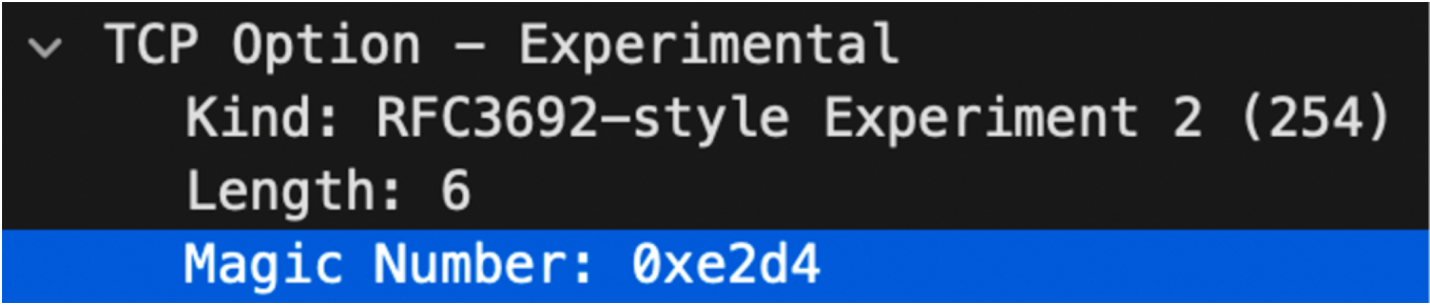

Before the SMC-R connection is established, both ends of the communication do not know whether the peer end supports SMC-R. Therefore, both ends will establish a TCP connection first. During the TCP connection with a three-way handshake, the support of SMC-R is indicated by sending SYN packets carrying special TCP options. At the same time, the TCP options in SYN packets sent by the peer are verified.

Figure: TCP Options That Represent SMC-R Capabilities

2) Fallback

If either end does not support SMC-R, or if SMC-R connection establishment cannot proceed, the SMC-R protocol stack falls back to the TCP protocol stack. During fallback, the SMC-R protocol stack replaces the socket behind the application's file descriptor with the socket of the TCP connection. Application traffic is then carried over this TCP connection, so data transmission is not interrupted.

3) Help Establish SMC-R Connections

If both ends support SMC-R, they exchange SMC-R connection establishment messages over the TCP connection (this process is similar to an SSL handshake). The TCP connection is also used to exchange information about the RDMA resources on both sides, which helps establish the RDMA links used for data transmission.
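The capability check and the fallback decision can be sketched as follows. The option layout mirrors the constants in the Linux kernel's `include/net/tcp.h`: two NOPs for alignment, experimental option kind 254 with length 6, and the magic number 0xE2D4C3D9, which spells "SMCR" in EBCDIC. The function names and the `choose_path()` decision are hypothetical simplifications. In real SMC-R, fallback can also happen later, for example when the connection establishment messages exchanged over TCP fail. This model does not cover that case.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Values as in the Linux kernel's include/net/tcp.h. */
#define TCPOPT_EOL           0
#define TCPOPT_NOP           1
#define TCPOPT_EXP           254         /* experimental option kind */
#define TCPOLEN_EXP_SMC_BASE 6           /* kind + len + 4-byte magic */
#define TCPOPT_SMC_MAGIC     0xE2D4C3D9u /* "SMCR" in EBCDIC */

/* Write the SMC option into a SYN's option area. Returns bytes written. */
static size_t smc_opt_write(uint8_t *p)
{
    assert(p != NULL);
    p[0] = TCPOPT_NOP;
    p[1] = TCPOPT_NOP;
    p[2] = TCPOPT_EXP;
    p[3] = TCPOLEN_EXP_SMC_BASE;
    for (int i = 0; i < 4; i++) /* magic in network byte order */
        p[4 + i] = (uint8_t)(TCPOPT_SMC_MAGIC >> (24 - 8 * i));
    return 8;
}

/* Walk a peer's TCP option list and report whether it advertises SMC. */
static bool smc_opt_present(const uint8_t *opt, size_t len)
{
    size_t i = 0;
    while (i < len) {
        uint8_t kind = opt[i];
        if (kind == TCPOPT_EOL)
            break;
        if (kind == TCPOPT_NOP) {
            i++;
            continue;
        }
        if (i + 1 >= len || opt[i + 1] < 2 || i + opt[i + 1] > len)
            break; /* truncated or malformed option */
        uint8_t olen = opt[i + 1];
        if (kind == TCPOPT_EXP && olen == TCPOLEN_EXP_SMC_BASE) {
            uint32_t magic = (uint32_t)opt[i + 2] << 24 | (uint32_t)opt[i + 3] << 16 |
                             (uint32_t)opt[i + 4] << 8 | opt[i + 5];
            if (magic == TCPOPT_SMC_MAGIC)
                return true;
        }
        i += olen;
    }
    return false;
}

enum smc_path { PATH_TCP_FALLBACK, PATH_SMC_R };

/* SMC-R is attempted only if both ends advertise it; otherwise the
 * connection stays on TCP (fallback). */
static enum smc_path choose_path(bool local_smc, const uint8_t *peer_opts, size_t len)
{
    if (local_smc && smc_opt_present(peer_opts, len))
        return PATH_SMC_R;
    return PATH_TCP_FALLBACK;
}
```

For example, an option area holding an MSS option followed by the SMC option leads to `PATH_SMC_R`. The same area without the SMC option leads to `PATH_TCP_FALLBACK`.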

We now have a preliminary understanding of the overall structure of SMC-R. As a hybrid solution, SMC-R makes full use of both the versatility of TCP flows and the high performance of RDMA flows. Part 2 will analyze a complete communication process in SMC-R and explain its hybrid nature.

Part 1 of the SMC-R series serves as an introduction. Looking back, we have answered these questions:

1. Why RDMA-Based?

RDMA can improve network performance (throughput/latency/CPU utilization).

2. Why Does RDMA Bring Performance Gains?

Much of the software protocol stack is bypassed, and the CPU is freed from the network transmission process, making data transmission as simple as writing to remote memory.

3. Why Do We Need SMC-R?

RDMA applications are built on the verbs interfaces, so existing TCP applications can only adopt RDMA through costly refactoring.

4. What Are the Advantages of SMC-R?

SMC-R is fully compatible with the socket interface and mimics the behavior of TCP sockets. This lets user-mode TCP applications transparently use RDMA services and enjoy its performance benefits without any modification.

5. What Are the Architectural Features of the SMC-R?

The SMC-R architecture is hybrid: it combines RDMA flows and TCP flows. SMC-R uses the RDMA network to transmit application data and uses the TCP flow to confirm the peer's SMC-R capability and help establish RDMA links.

[1] https://network.nvidia.com/pdf/prod_software/RDMA_Aware_Programming_user_manual.pdf

[2] https://github.com/ibm-s390-linux/smc-tools
