
CoreDNS is an incubating project under the CNCF umbrella and the successor to SkyDNS. Its main purpose is to provide a fast and flexible DNS server that lets users access and use DNS data in different ways. Built on the Caddy server framework, CoreDNS implements a plugin chain architecture that packages most of its logic as plugins and exposes them to users. Each plugin implements a DNS function, such as Kubernetes service discovery or Prometheus monitoring.

In addition to pluginization, CoreDNS also has the following features:

Corefile is the CoreDNS configuration file (it derives from Caddyfile, the configuration file of the Caddy framework). It defines the following content:

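A typical Corefile has the following format:

```
ZONE:[PORT] {
    [PLUGIN] ...
}
```

The parameters are described as follows:

| Name | Description |
| --- | --- |
| ZONE | The DNS zone this server block serves; . (the root zone) matches every name. |
| PORT | Optional listening port; defaults to 53. |
| PLUGIN | A plugin to load in this server block, with its optional arguments; a block can list several plugins. |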
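As a rough illustration of how server block keys are used, the Python sketch below splits a ZONE:[PORT] key and then, for a given query name, picks the most specific zone among the blocks on the same port. This is, as we understand it, how CoreDNS chooses between blocks such as .:53 and global:53 used later in this article. The helpers parse_server_key and select_zone are hypothetical, and the sketch ignores details the real Go implementation handles, such as transport prefixes like tls://.

```python
from __future__ import annotations

from typing import List, Optional, Tuple

DEFAULT_PORT = 53  # port used when a server block key has no :PORT part


def normalize(name: str) -> str:
    """Lower-case a domain name and make it fully qualified ('.' stays '.')."""
    return name.lower().rstrip(".") + "."


def parse_server_key(key: str) -> Tuple[str, int]:
    """Split a 'ZONE:[PORT]' server block key into (zone, port)."""
    zone, sep, port = key.rpartition(":")
    if not sep:  # no colon: the whole key is the zone
        zone, port = key, ""
    return normalize(zone), (int(port) if port else DEFAULT_PORT)


def select_zone(qname: str, zones: List[str]) -> Optional[str]:
    """Return the most specific zone (longest suffix match) containing qname."""
    name = normalize(qname)
    best: Optional[str] = None
    for zone in zones:
        inside = zone == "." or name == zone or name.endswith("." + zone)
        if inside and (best is None or len(zone) > len(best)):
            best = zone
    return best


if __name__ == "__main__":
    servers = [parse_server_key(k) for k in (".:53", "global:53")]
    zones_on_53 = [zone for zone, port in servers if port == 53]
    print(zones_on_53)  # ['.', 'global.']
    print(select_zone("service1.test.global", zones_on_53))  # global.
    print(select_zone("kubernetes.default.svc.cluster.local", zones_on_53))  # .
```

Matching on whole labels (the "." + zone check) keeps a name such as notglobal from being routed to the global zone.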

When CoreDNS starts, it starts the servers defined in the configuration file. Each server has its own plugin chain. When a DNS request arrives, it is handled by the following 3-step logic in turn:

There are several possibilities:

If a plugin may pass the request on to the next plugin while processing it, this is called fallthrough, and the fallthrough keyword controls whether the plugin is allowed to do so. For example, with fallthrough enabled in the hosts plugin, when the queried domain name is not found in /etc/hosts, the next plugin is called.

The request is processed with hints: the plugin processes the request, adds some information (hints), and always passes the request on to the next plugin. The hints let the plugin see the response that is eventually returned to the client; the metrics (prometheus) plugin works this way.
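The possibilities described above can be modeled with a small Python sketch: each plugin receives the query and a handle to the next plugin, and either answers (processed), calls the next plugin (fallthrough), or calls the next plugin and then inspects the response (hint-style, like the metrics plugin). Everything here is hypothetical and simplified: responses are plain dicts, the hosts-style plugin treats every name as belonging to its zone, and fake_upstream stands in for a forwarding plugin such as proxy. In real CoreDNS, the execution order of plugins is fixed when CoreDNS is built (in plugin.cfg), not by their order in the Corefile.

```python
from __future__ import annotations

from typing import Callable, Dict, List

# Simplified model: a response is a dict with an rcode and an optional answer.
# Real CoreDNS plugins are Go handlers that write DNS messages instead.
Response = Dict[str, object]
Handler = Callable[[str], Response]
Plugin = Callable[[str, Handler], Response]


def _link(plugin: Plugin, nxt: Handler) -> Handler:
    """Bind a plugin to the handler that follows it in the chain."""
    return lambda qname: plugin(qname, nxt)


def build_chain(plugins: List[Plugin], terminal: Handler) -> Handler:
    """Compose plugins so each can call the next; the first plugin runs first."""
    handler = terminal
    for plugin in reversed(plugins):
        handler = _link(plugin, handler)
    return handler


def make_hosts(entries: Dict[str, str], fallthrough: bool) -> Plugin:
    """Hosts-style plugin: answers known names, otherwise NXDOMAIN or fallthrough."""
    def hosts(qname: str, nxt: Handler) -> Response:
        if qname in entries:
            return {"rcode": "NOERROR", "answer": entries[qname]}  # processed here
        if fallthrough:
            return nxt(qname)  # fallthrough: let the next plugin try
        return {"rcode": "NXDOMAIN", "answer": None}  # processed, negative answer
    return hosts


def make_metrics(counter: Dict[str, int]) -> Plugin:
    """Metrics-style plugin: always calls the next plugin, then counts the rcode."""
    def metrics(qname: str, nxt: Handler) -> Response:
        resp = nxt(qname)  # hand the query on first
        rcode = str(resp["rcode"])
        counter[rcode] = counter.get(rcode, 0) + 1  # observe the final response
        return resp
    return metrics


def fake_upstream(qname: str) -> Response:
    """Stand-in for a forwarding plugin; returns a fixed documentation address."""
    return {"rcode": "NOERROR", "answer": "192.0.2.1"}


if __name__ == "__main__":
    counts: Dict[str, int] = {}
    chain = build_chain(
        [make_metrics(counts), make_hosts({"db.local.": "10.0.0.5"}, fallthrough=True)],
        terminal=fake_upstream,
    )
    print(chain("db.local."))     # {'rcode': 'NOERROR', 'answer': '10.0.0.5'}
    print(chain("example.com."))  # {'rcode': 'NOERROR', 'answer': '192.0.2.1'}
    print(counts)                 # {'NOERROR': 2}
```

Here db.local. is answered by the hosts-style plugin, example.com. falls through to the stand-in upstream, and the metrics plugin counts both responses because it always sits around the rest of the chain.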

As of Kubernetes 1.11, CoreDNS is generally available (GA) as the DNS plugin for Kubernetes, and Kubernetes recommends using CoreDNS as the in-cluster DNS service. In Alibaba Cloud Container Service for Kubernetes 1.11.5 and later, CoreDNS is installed as the DNS service by default. You can view the CoreDNS configuration with the following command:

```
# kubectl -n kube-system get configmap coredns -oyaml
apiVersion: v1
data:
  Corefile: |-
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          upstream
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        reload
    }
kind: ConfigMap
metadata:
  creationTimestamp: "2018-12-28T07:28:34Z"
  name: coredns
  namespace: kube-system
  resourceVersion: "2453430"
  selfLink: /api/v1/namespaces/kube-system/configmaps/coredns
  uid: 2f3241d5-0a72-11e9-99f1-00163e105bdf
```

The meaning of each parameter in the configuration file is as follows:

| Name | Description |
| --- | --- |
| errors | Errors are logged to standard output. |
| health | Health status is reported at http://localhost:8080/health. |
| kubernetes | Answers DNS queries for Kubernetes Services and Pods with their IP addresses. The cluster domain defaults to cluster.local. |
| prometheus | Metrics in Prometheus format are available at http://localhost:9153/metrics. |
| proxy | Queries that cannot be resolved locally are forwarded to upstream DNS servers; by default, the nameservers in the host's /etc/resolv.conf are used. |
| cache | Caches responses; the argument (30 here) is the maximum cache time in seconds. |
| reload | Automatically reloads the Corefile when it changes. |
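The cache 30 line in the configuration above limits how long responses are cached: the argument is a maximum TTL in seconds, so a record whose own TTL is 300 seconds is kept for at most 30. The Python sketch below models only that capping rule with a dictionary and an injected clock. The class name TTLCappedCache is hypothetical; the real cache plugin stores whole DNS messages, caches negative responses separately, and supports further options such as prefetch.

```python
from __future__ import annotations

from typing import Callable, Dict, Optional, Tuple


class TTLCappedCache:
    """Keep each answer for min(record TTL, max_ttl) seconds (sketch of 'cache 30')."""

    def __init__(self, max_ttl: int, clock: Callable[[], float]) -> None:
        self.max_ttl = max_ttl
        self.clock = clock  # injected so the expiry behaviour is easy to test
        self._entries: Dict[str, Tuple[str, float]] = {}

    def put(self, qname: str, answer: str, record_ttl: int) -> None:
        ttl = min(record_ttl, self.max_ttl)  # never keep longer than max_ttl
        self._entries[qname] = (answer, self.clock() + ttl)

    def get(self, qname: str) -> Optional[str]:
        entry = self._entries.get(qname)
        if entry is None:
            return None  # miss: a real server would now ask the next plugin
        answer, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[qname]  # expired: drop it and report a miss
            return None
        return answer


if __name__ == "__main__":
    now = [0.0]  # fake clock, in seconds
    cache = TTLCappedCache(max_ttl=30, clock=lambda: now[0])
    cache.put("example.com.", "192.0.2.1", record_ttl=300)
    now[0] = 29.0
    print(cache.get("example.com."))  # 192.0.2.1
    now[0] = 30.0
    print(cache.get("example.com."))  # None
```

Injecting the clock keeps the sketch deterministic; a real server would read the system time.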

Alibaba Cloud Container Service for Kubernetes 1.11.5 is now available. Using its management console, you can quickly create a Kubernetes cluster. See Creating a Kubernetes Cluster for more information.

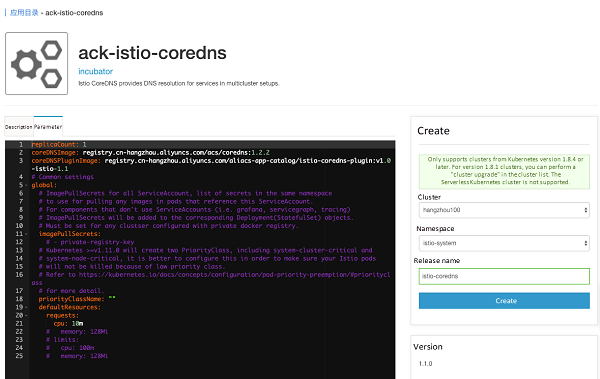

Click Application Directory on the left, select ack-istio-coredns on the right, and click Parameters on the page that opens. You can change the parameters to customize the settings (see below):

The parameters are described as follows:

| Name | Description | Value | Default |
| --- | --- | --- | --- |
| replicaCount | Number of replicas | number | 1 |
| coreDNSImage | CoreDNS image name | valid image tag | coredns/coredns:1.2.2 |
| coreDNSPluginImage | Plugin image name | valid image name | registry.cn-hangzhou.aliyuncs.com/aliacs-app-catalog/istio-coredns-plugin:v1.0-istio-1.1 |

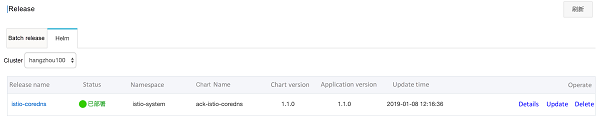

After making your changes, select the target cluster, the istio-system namespace, and the release name istio-coredns on the right, then click Deploy. After a few seconds, the Istio CoreDNS release is created, as shown in the figure.

The cluster IP of the istiocoredns service can be obtained with the following command:

```
# kubectl get svc istiocoredns -n istio-system
NAME           TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
istiocoredns   ClusterIP   172.19.10.196   <none>        53/UDP,53/TCP   44m
```

Update the CoreDNS ConfigMap in the cluster: add a server block for the .global domain that uses the istiocoredns service as its upstream DNS server, as follows:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |-
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          upstream
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        reload
    }
    global:53 {
        errors
        cache 30
        proxy . {replace this with the actual cluster IP of this istiocoredns service}
    }
```

After the ConfigMap is changed, the CoreDNS containers in the cluster reload the configuration. The reload logs can be viewed with the following commands:

```
# kubectl get -n kube-system pod | grep coredns
coredns-8645f4b4c6-5frkg   1/1   Running   0   20h
coredns-8645f4b4c6-lj59t   1/1   Running   0   20h

# kubectl logs -f -n kube-system coredns-8645f4b4c6-5frkg
....
2019/01/08 05:06:47 [INFO] Reloading
2019/01/08 05:06:47 [INFO] plugin/reload: Running configuration MD5 = 05514b3e44bcf4ea805c87cc6cd56c07
2019/01/08 05:06:47 [INFO] Reloading complete

# kubectl logs -f -n kube-system coredns-8645f4b4c6-lj59t
....
2019/01/08 05:06:31 [INFO] Reloading
2019/01/08 05:06:32 [INFO] plugin/reload: Running configuration MD5 = 05514b3e44bcf4ea805c87cc6cd56c07
2019/01/08 05:06:32 [INFO] Reloading complete
```

With ServiceEntry, additional entries can be added to Istio's internal service registry, so that services automatically discovered in the mesh can access and route to these manually specified services. A ServiceEntry describes the properties of a service, including DNS names, virtual IPs, ports, protocols, and endpoints. Such a service may be an API outside the mesh, or an entry that belongs to the mesh's service registry but not to the platform's, such as a group of VM-based services that need to communicate with Kubernetes services.
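To see why every name under test.global resolves to the same address in the dig output later in this article, it helps to model the mapping that the istio-coredns-plugin log reports (adding DNS mapping: .test.global. ->[127.255.0.2]). The Python sketch below assumes that a wildcard host is stored as a suffix and matched against the end of the query name; build_mappings and resolve are hypothetical helpers, and the plugin's actual matching rules may differ.

```python
from __future__ import annotations

from typing import Dict, List, Optional


def build_mappings(hosts: List[str], addresses: List[str]) -> Dict[str, List[str]]:
    """Turn ServiceEntry-style hosts into DNS name -> address mappings.

    Assumption: a wildcard host such as '*.test.global' is stored as the
    suffix '.test.global.', mirroring the format in the plugin log.
    """
    mappings: Dict[str, List[str]] = {}
    for host in hosts:
        name = host.lower().rstrip(".") + "."
        if name.startswith("*."):
            name = name[1:]  # '*.test.global.' -> '.test.global.'
        mappings[name] = list(addresses)
    return mappings


def resolve(qname: str, mappings: Dict[str, List[str]]) -> Optional[List[str]]:
    """Exact match first, then the longest wildcard suffix that matches."""
    name = qname.lower().rstrip(".") + "."
    if name in mappings:
        return mappings[name]
    suffixes = [key for key in mappings if key.startswith(".") and name.endswith(key)]
    if not suffixes:
        return None  # not covered by any ServiceEntry
    return mappings[max(suffixes, key=len)]


if __name__ == "__main__":
    m = build_mappings(["*.test.global"], ["127.255.0.2"])
    print(m)                                  # {'.test.global.': ['127.255.0.2']}
    print(resolve("service1.test.global", m))  # ['127.255.0.2']
    print(resolve("service2.test.global", m))  # ['127.255.0.2']
    print(resolve("test.global", m))           # None
```

Under this assumption the wildcard covers any name ending in .test.global but not test.global itself, which matches the two dig queries shown later returning 127.255.0.2.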

The following example uses a wildcard to define the hosts and specifies a virtual address:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: external-svc-serviceentry
spec:
  hosts:
  - '*.test.global'
  addresses:
  - 127.255.0.2
  ports:
  - number: 8080
    name: http1
    protocol: HTTP
  location: MESH_INTERNAL
  resolution: DNS
  endpoints:
  - address: 47.111.38.80
    ports:
      http1: 15443
```

Run the following commands to inspect the istiocoredns container. The logs show that the domain name mapping defined by the ServiceEntry above has been loaded:

```
# kubectl get po -n istio-system | grep istiocoredns
istiocoredns-cdc56b67-ngtkr   2/2   Running   0   1h

# kubectl logs --tail 2 -n istio-system istiocoredns-cdc56b67-ngtkr -c istio-coredns-plugin
2019-01-08T05:27:22.897845Z info Have 1 service entries
2019-01-08T05:27:22.897888Z info adding DNS mapping: .test.global. ->[127.255.0.2]
```

Create a test container from the tutum/dnsutils image:

```
kubectl run dnsutils -it --image=tutum/dnsutils bash
```

After entering the container's shell, run dig to check how the corresponding domain names resolve:

```
root@dnsutils-d485fdbbc-8q6mp:/# dig +short @172.19.0.10 A service1.test.global
127.255.0.2
root@dnsutils-d485fdbbc-8q6mp:/# dig +short @172.19.0.10 A service2.test.global
127.255.0.2
```

Istio supports several different topologies for distributing application services beyond a single cluster. For example, services in a service mesh can use a ServiceEntry to access standalone external services or services exposed by another service mesh (commonly referred to as mesh federation). Using Istio CoreDNS to provide DNS resolution for services in a remote cluster removes the need to modify existing applications, letting users access services in the remote cluster as if they were in the local cluster.
