By Jian Feng

Before discussing the Apache Flink ecosystem, let's first look at what an ecosystem actually is. In IT, an ecosystem can be understood as a community of components that grow out of a common core component: they use this core component directly or indirectly and work together with it to accomplish a bigger or more specialized task. Following this definition, the Flink ecosystem is the ecosystem built around Flink as its core component.

In the big data ecosystem, Flink is a computing component: it deals only with computation and has no storage system of its own. As a result, Flink alone cannot meet the requirements of many practical scenarios. For example, you may need to consider where your data is read from, where the data processed by Flink should be stored, how that data is consumed, and how to use Flink to accomplish a specialized task in a vertical business domain. These tasks involve both upstream and downstream systems as well as higher levels of abstraction, and accomplishing them requires a powerful ecosystem.

Now that we understand what an ecosystem is, let's look at the current state of the Flink ecosystem. On the whole, the Flink ecosystem is still in its infancy. At present, it mainly consists of a variety of upstream and downstream connectors, plus support for several kinds of clusters.

Flink supports a long list of connectors, including Kafka, Cassandra, Elasticsearch, Kinesis, RabbitMQ, JDBC, and HDFS, so it can work with almost all major data sources. As for clusters, Flink currently supports Standalone and YARN. Given the current state of the ecosystem, Flink is mainly used to process stream data. Using Flink in other scenarios (such as machine learning and interactive analysis) can be relatively complex, and the user experience in these scenarios still leaves much to be desired. That said, these challenges also bring plenty of opportunities for the Flink ecosystem.

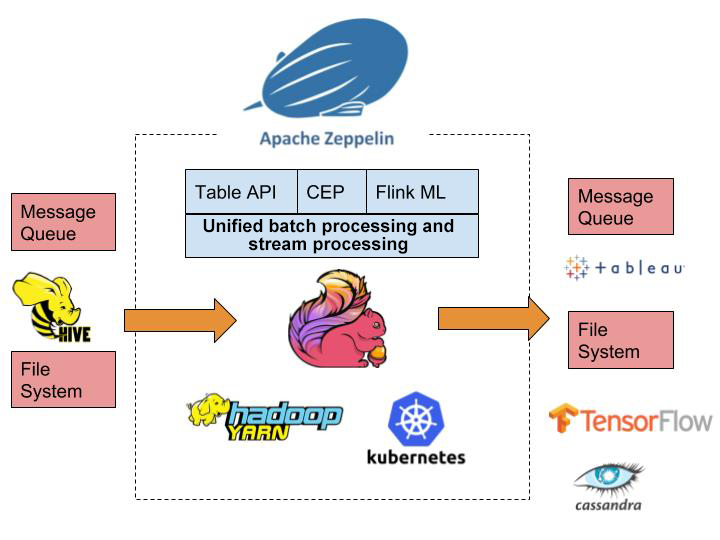

Although Flink primarily serves as a big data computing platform for unified batch and stream processing, it has much greater potential beyond this. In my opinion, Flink needs a more powerful and robust ecosystem to realize its full potential. To understand this better, we can evaluate the Flink ecosystem along two different scaling dimensions:

The following figure shows what the Flink ecosystem would look like if it were to scale horizontally and vertically in the way described above.

Next, I will describe the main components of the ecosystem one by one.

Apache Hive is a top-level Apache project first developed nearly 10 years ago. It was originally a SQL layer built on top of MapReduce: users write familiar, simple SQL statements instead of complex MapReduce jobs, and Hive translates each SQL statement into one or more MapReduce jobs. As the project evolved, its computing engine became pluggable. Currently, Hive supports three computing engines: MapReduce (MR), Tez, and Spark. Apache Hive has become the industry standard for data warehouses in the Hadoop ecosystem, and many companies have been running their data warehouse systems on Hive for years.

Because Flink is a computing framework for unified batch and stream processing, it naturally needs to integrate with Hive. For example, when we use Flink to perform ETL and build real-time data warehouses, we also need Hive SQL to query that data in real time.

In the Flink community, FLINK-10556 has already been created to enable better integration with and support for Hive. The following are its main features:

The Flink community is implementing the features above step by step. If you want to try them out in advance, you can use Blink, an open-source project developed by Alibaba Cloud. Blink connects Flink and Hive at both the metadata layer and the data layer, so users can use Flink SQL to query data in Hive directly and switch between Hive and Flink seamlessly. To connect to metadata, Blink restructures Flink's catalog implementation and adds two catalogs: the memory-based FlinkInMemoryCatalog and HiveCatalog, which connects to the Hive MetaStore. With HiveCatalog, Flink jobs can read metadata from Hive. To connect to data, Blink implements HiveTableSource, which allows Flink jobs to read data directly from regular and partitioned tables in Hive. Therefore, with Blink, users can use Flink SQL to process existing Hive metadata and data. Going forward, Alibaba will improve Flink's compatibility with Hive, including support for Hive-specific queries, data types, and Hive UDFs, and will gradually contribute these improvements to the Flink community.
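To make the catalog idea more concrete, here is a minimal Python sketch of a catalog abstraction with one memory-based implementation and one that delegates to an external metastore. It is not Blink's or Flink's actual catalog API, which is defined in Java. The names `Catalog`, `InMemoryCatalog`, `MetastoreCatalog`, `FakeMetastoreClient`, and `resolve`, along with the `catalog.database.table` naming scheme, are hypothetical and chosen only for illustration. The point is that a query planner depends only on the interface, so table metadata can live in memory or in a Hive MetaStore without the planner needing to know which.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class TableSchema:
    # Simplified table metadata: (column name, type) pairs plus partition keys.
    columns: Tuple[Tuple[str, str], ...]
    partition_keys: Tuple[str, ...] = ()


class Catalog(ABC):
    """Hypothetical catalog interface: maps (database, table) to table metadata."""

    @abstractmethod
    def get_table(self, database: str, table: str) -> Optional[TableSchema]: ...

    @abstractmethod
    def list_tables(self, database: str) -> List[str]: ...


class InMemoryCatalog(Catalog):
    """Keeps metadata in a dict; everything is lost when the process exits."""

    def __init__(self) -> None:
        self._tables: Dict[Tuple[str, str], TableSchema] = {}

    def create_table(self, database: str, table: str, schema: TableSchema) -> None:
        self._tables[(database, table)] = schema

    def get_table(self, database: str, table: str) -> Optional[TableSchema]:
        return self._tables.get((database, table))

    def list_tables(self, database: str) -> List[str]:
        return sorted(t for (db, t) in self._tables if db == database)


class MetastoreCatalog(Catalog):
    """Delegates lookups to an external metastore client (a stand-in for Hive MetaStore)."""

    def __init__(self, client) -> None:
        # `client` is any object with fetch_table() and fetch_table_names() (hypothetical).
        self._client = client

    def get_table(self, database: str, table: str) -> Optional[TableSchema]:
        raw = self._client.fetch_table(database, table)
        if raw is None:
            return None
        # Convert the service's raw dict into our own metadata type.
        return TableSchema(
            columns=tuple(tuple(c) for c in raw["columns"]),
            partition_keys=tuple(raw.get("partition_keys", ())),
        )

    def list_tables(self, database: str) -> List[str]:
        return sorted(self._client.fetch_table_names(database))


class FakeMetastoreClient:
    """Test double that returns plain dicts, as a remote metadata service might."""

    def __init__(self, tables: Dict[Tuple[str, str], dict]) -> None:
        self._tables = tables

    def fetch_table(self, database: str, table: str) -> Optional[dict]:
        return self._tables.get((database, table))

    def fetch_table_names(self, database: str) -> List[str]:
        return [t for (db, t) in self._tables if db == database]


def resolve(catalogs: Dict[str, Catalog], qualified_name: str) -> Optional[TableSchema]:
    """Resolve a 'catalog.database.table' name against a registry of named catalogs."""
    catalog_name, database, table = qualified_name.split(".")
    catalog = catalogs.get(catalog_name)
    return catalog.get_table(database, table) if catalog is not None else None


memory = InMemoryCatalog()
memory.create_table("default", "clicks", TableSchema((("user_id", "BIGINT"), ("url", "STRING"))))
hive = MetastoreCatalog(FakeMetastoreClient({
    ("warehouse", "orders"): {"columns": [("order_id", "BIGINT"), ("dt", "STRING")],
                              "partition_keys": ["dt"]},
}))
catalogs: Dict[str, Catalog] = {"mem": memory, "hive": hive}
print(resolve(catalogs, "hive.warehouse.orders").partition_keys)  # ('dt',)
```

Swapping `FakeMetastoreClient` for a real metastore client would not change anything above it, which is the property that lets one SQL layer read metadata from different backends.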

Batch processing is another common application scenario for Flink. Interactive analysis is a large part of batch processing, and it is especially important to data analysts and data scientists.

Flink itself needs further enhancements to meet the performance requirements of interactive analysis projects and tools. Take FLINK-11199 as an example. Currently, multiple jobs in the same Flink application cannot share data: the DAG of each job remains isolated. FLINK-11199 is designed to solve this problem and provide better support for interactive analysis.
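Conceptually, sharing data across jobs means that the result of an expensive intermediate step is computed once and then reused by later jobs in the same application, instead of each job re-running its own isolated DAG. The plain-Python sketch below illustrates only that idea. `Session`, `cached`, and `clean` are hypothetical names and do not reflect how FLINK-11199 is actually implemented. A real implementation would also have to decide where cached results are stored, when they are invalidated, and what happens if they are lost.

```python
from typing import Callable, Dict, List


class Session:
    """Hypothetical application-level session that lets jobs share intermediate results."""

    def __init__(self) -> None:
        self._results: Dict[str, List[str]] = {}
        self.computations = 0  # how many times an intermediate result was actually computed

    def cached(self, key: str, compute: Callable[[], List[str]]) -> List[str]:
        # Compute the result the first time it is requested; later jobs reuse it
        # instead of re-running the upstream part of their own DAG.
        if key not in self._results:
            self.computations += 1
            self._results[key] = compute()
        return self._results[key]


def clean(records: List[str]) -> List[str]:
    # Stand-in for an expensive upstream stage such as parsing and filtering.
    return [r.strip().lower() for r in records if r.strip()]


raw = ["  Flink ", "", "Hive", "flink", "  "]
session = Session()

# Job 1: count distinct terms.
distinct_terms = len(set(session.cached("cleaned", lambda: clean(raw))))
# Job 2: count occurrences of "flink", reusing the cleaned data from job 1.
flink_count = session.cached("cleaned", lambda: clean(raw)).count("flink")

print(distinct_terms, flink_count, session.computations)  # 2 2 1
```

Both jobs see the same cleaned data, but `clean` runs only once. Without sharing, it would run once per job.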

In addition, data analysts and data scientists need an interactive analysis platform to use Flink efficiently. Apache Zeppelin has done a lot in this regard. Zeppelin, also a top-level Apache project, provides an interactive development environment and supports multiple programming languages such as Scala, Python, and SQL. Zeppelin is also highly extensible and supports many big data engines, including Spark, Hive, and Pig. Alibaba has put significant effort into improving Flink support in Zeppelin. Users can write Flink code (in Scala or SQL) directly in Zeppelin. Instead of packaging code locally and then submitting jobs manually with the bin/flink script, users can submit jobs directly from Zeppelin and view the results there, either as text or as visualizations. Visualization is especially important for SQL results. Zeppelin mainly provides the following support for Flink:

Some of these changes are implemented in Flink and some in Zeppelin. Until all of them are contributed to the Flink and Zeppelin communities, you can use this Zeppelin Docker image to test and use these features. For details about downloading and installing the Zeppelin Docker image, see the examples in the Blink documentation. To help users try these features more easily, we've added three built-in Flink tutorials to this version of Zeppelin: one gives an example of streaming ETL, and the other two provide examples of Flink Batch and Flink Stream.

As the most important computing engine in the big data ecosystem, Flink is currently used primarily for traditional data computing and processing, that is, traditional business intelligence (BI) scenarios such as real-time data warehouses and real-time statistical reports. However, we are now in the age of artificial intelligence (AI). More and more enterprises across many industries are adopting AI technologies to fundamentally change the way they do business. Arguably, as a big data computing engine, Flink is indispensable to this wave of change. Even though Flink was not developed exclusively for machine learning, machine learning plays an irreplaceable role in the Flink ecosystem. In the future, we should see Flink provide three major features to support machine learning:

When looking at a machine learning pipeline, it is easy to assume that machine learning boils down to two major phases: training and prediction. However, training and prediction are only a small fraction of machine learning. Before training, tasks such as data cleaning, data transformation, and normalization are integral to preparing data for machine learning models. After training, model evaluation is also a crucial step. The same is true for the prediction phase. In a complex machine learning system, combining the individual steps properly is key to producing a model that is both robust and scalable. FLINK-11095 is what the community is currently working on toward this goal, with the aim of making Flink a key player across all of these steps of building machine learning models.
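The idea of composing cleaning, normalization, and model steps into one reusable unit can be sketched with the transformer/estimator pattern popularized by libraries such as scikit-learn and Spark ML. The minimal Python sketch below assumes that pattern. The classes `Transformer`, `Estimator`, `Pipeline`, `PipelineModel`, `DropNegatives`, and `MinMaxScaler` are hypothetical and are not the API proposed in FLINK-11095. The sketch shows why composition matters: the normalization statistics learned during training are reused unchanged at prediction time.

```python
from typing import List, Sequence, Union


class Transformer:
    """A stage that maps data to data (cleaning, a fitted model, ...)."""
    def transform(self, xs: List[float]) -> List[float]:
        raise NotImplementedError


class Estimator:
    """A stage that must learn from data before it can transform anything."""
    def fit(self, xs: List[float]) -> Transformer:
        raise NotImplementedError


class DropNegatives(Transformer):
    """Data cleaning: discard invalid (negative) readings."""
    def transform(self, xs: List[float]) -> List[float]:
        return [x for x in xs if x >= 0]


class MinMaxScalerModel(Transformer):
    def __init__(self, lo: float, hi: float) -> None:
        self.lo, self.hi = lo, hi

    def transform(self, xs: List[float]) -> List[float]:
        span = (self.hi - self.lo) or 1.0  # avoid division by zero on constant input
        return [(x - self.lo) / span for x in xs]


class MinMaxScaler(Estimator):
    """Normalization: learns min/max from the training data only."""
    def fit(self, xs: List[float]) -> Transformer:
        return MinMaxScalerModel(min(xs), max(xs))


class PipelineModel(Transformer):
    def __init__(self, stages: List[Transformer]) -> None:
        self.stages = stages

    def transform(self, xs: List[float]) -> List[float]:
        for stage in self.stages:
            xs = stage.transform(xs)
        return xs


class Pipeline(Estimator):
    def __init__(self, stages: Sequence[Union[Transformer, Estimator]]) -> None:
        self.stages = list(stages)

    def fit(self, xs: List[float]) -> Transformer:
        fitted: List[Transformer] = []
        for stage in self.stages:
            # Each estimator is fitted on the output of all earlier stages.
            model = stage.fit(xs) if isinstance(stage, Estimator) else stage
            xs = model.transform(xs)
            fitted.append(model)
        return PipelineModel(fitted)


train = [2.0, -1.0, 4.0, 6.0]
model = Pipeline([DropNegatives(), MinMaxScaler()]).fit(train)
# Prediction data goes through the same cleaning and the training-time min/max.
print(model.transform([3.0, -5.0, 6.0]))  # [0.25, 1.0]
```

Because `Pipeline` is itself an `Estimator`, one pipeline can be used as a stage inside another.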

Currently, the flink-ml module in Flink has implemented some traditional machine learning algorithms, but it needs further improvement.

The Flink community is actively working on support for deep learning. Alibaba provides the TensorFlow on Flink project, which allows users to run TensorFlow in Flink jobs: Flink processes the data and then sends the processed data to a TensorFlow Python process for deep learning training. On the language side, the Flink community is working on Python support. Currently, Flink only provides Java and Scala APIs, both of which are JVM-based. This makes Flink well suited to systems-level big data processing but less suited to data analysis and machine learning, where people generally prefer higher-level languages such as Python and R. The Flink community plans to support these languages in the near future, starting with Python, which has grown rapidly in recent years, largely thanks to the development of AI and deep learning. At present, all popular deep learning libraries, including TensorFlow, PyTorch, and Keras, provide Python APIs. Once Flink supports Python, users will be able to build an entire machine learning pipeline in a single language, which should greatly improve their productivity.

During development, Flink jobs are generally submitted with the shell command bin/flink run. In production, however, this submission method causes many issues. For example, it can be hard to track and manage job statuses, retry failed jobs, start multiple Flink jobs, or modify job parameters and resubmit. These problems can be solved with manual intervention, but manual intervention is risky in production, not to mention time-consuming. Ideally, everything that can be automated should be automated. Unfortunately, no suitable tool exists in the Flink ecosystem as of now. Alibaba has developed such a tool for internal use; it has been running in production for a long time and has proven to be stable and dependable for submitting and maintaining Flink jobs. Alibaba plans to remove the components that depend on Alibaba-internal systems and then open-source the project, which is expected to happen in the first half of 2019.
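As a rough illustration of the automation such a tool provides, the Python sketch below builds a `bin/flink run` command from a job description and retries failed submissions with exponential backoff, keeping a history of attempts. It is not Alibaba's internal tool. `JobSpec`, `Attempt`, `submit_with_retries`, the jar name, and the entry class are hypothetical; `-p` (parallelism) and `-c` (entry class) are standard `flink run` options. Treating a non-zero exit code as failure is a simplification. In detached mode, for example, the exit code only reflects whether submission succeeded, so a production tool would track job state on the cluster instead. The runner is injectable so the example works without a Flink installation.

```python
import subprocess
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence


@dataclass
class JobSpec:
    """Hypothetical description of a Flink job to submit."""
    jar: str
    entry_class: Optional[str] = None
    parallelism: Optional[int] = None
    args: List[str] = field(default_factory=list)
    flink_bin: str = "bin/flink"

    def command(self) -> List[str]:
        cmd = [self.flink_bin, "run"]
        if self.parallelism is not None:
            cmd += ["-p", str(self.parallelism)]  # job parallelism
        if self.entry_class:
            cmd += ["-c", self.entry_class]  # main class inside the jar
        return cmd + [self.jar] + self.args  # program arguments go after the jar


@dataclass
class Attempt:
    number: int
    returncode: int


def run_command(cmd: Sequence[str]) -> int:
    # Runs the CLI and returns its exit code.
    return subprocess.run(list(cmd)).returncode


def submit_with_retries(
    spec: JobSpec,
    max_attempts: int = 3,
    backoff_s: float = 5.0,
    runner: Callable[[Sequence[str]], int] = run_command,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Attempt]:
    """Submit `spec`, retrying on non-zero exit codes with exponential backoff."""
    history: List[Attempt] = []
    for n in range(1, max_attempts + 1):
        code = runner(spec.command())
        history.append(Attempt(n, code))
        if code == 0:
            break
        if n < max_attempts:
            sleep(backoff_s * 2 ** (n - 1))  # 5 s, 10 s, 20 s, ...
    return history


# Usage with a fake runner and a recording "sleep", so nothing is actually executed.
outcomes = iter([1, 1, 0])  # fail, fail, succeed
calls: List[List[str]] = []
waits: List[float] = []


def fake_runner(cmd: Sequence[str]) -> int:
    calls.append(list(cmd))
    return next(outcomes)


spec = JobSpec(jar="my-job.jar", entry_class="com.example.WordCount",
               parallelism=4, args=["--input", "in.txt"])
history = submit_with_retries(spec, runner=fake_runner, sleep=waits.append)
print([a.returncode for a in history])  # [1, 1, 0]
```

Keeping the job description as data, rather than as a hand-typed shell line, is what makes it easy to change parameters, resubmit, and audit past attempts.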

To sum up, the current Flink ecosystem has many problems, but it also has plenty of room for development. The Apache Flink community is a dynamic one and is constantly making great efforts to build a more powerful Flink ecosystem, so that Flink can reach its full potential.

Got ideas? Feel inspired? Join the community, and let's build a better Flink ecosystem together.
