By Li Jingui (Jinxi), Development Engineer of Open-Source Big Data Platform of Alibaba Cloud, and Wang Xiaolong (Xiaolong), Technical Expert of Open-Source Big Data Platform of Alibaba Cloud

Databricks is a leading enterprise specializing in data and artificial intelligence (AI). It is the founding company of Apache Spark and the largest code contributor to Spark. It focuses on open-source ecosystems, such as Spark, Delta Lake, and MLFlow, to build enterprise-class lake house products. In 2020, Databricks and Alibaba Cloud jointly built a full hosting big data analysis and AI platform based on Apache Spark called Databricks DataInsight (DDI). DDI provides users with services in data analysis, data engineering, data science, and AI to build an integrated lake house architecture.

Delta Lake is a transaction-enabled data lake product developed by Databricks since 2016. It officially became open-source in 2019. In addition to the open-source Delta Lake OSS led by the community, the commercial products of Databricks provide the Spark and Delta Lake engine of the Enterprise Edition. This article describes how to optimize the performance of the product features provided by the Enterprise Edition to help you efficiently access lake houses.

If you frequently perform merge, update, and insert operations in Delta Lake or insert data into Delta tables in scenarios of stream processing, a large number of small files will be generated in Delta tables. On the one hand, the increase in the number of small files reduces the amount of data that Spark reads serially each time, which reduces the reading efficiency. On the other hand, it increases the metadata of Delta tables and slows down the acquisition of metadata, which reduces the reading efficiency of the table from another dimension.

Databricks provides three optimization features from two dimensions to solve the problem of small files in Delta Lake, avoiding the generation of small files and automatically (or manually) merging small files.

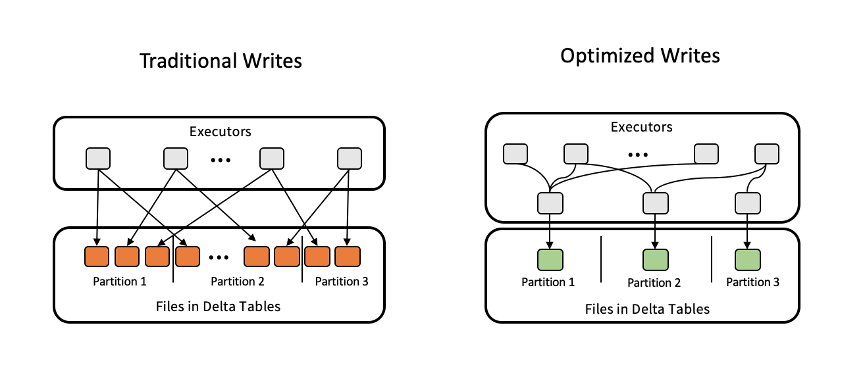

When each executor writes data to a partition in open-source Spark, it creates a table file for writing. In the end, many small files are generated in one partition. Databricks optimizes the process of writes of Delta tables. For each partition, a special executor is used to merge writes to the partition by other e xecutors. This avoids the generation of small files.

This feature is controlled by the table attribute delta.autoOptimize.optimizeWrite:

CREATE TABLE student (id INT, name STRING)

TBLPROPERTIES (delta.autoOptimize.optimizeWrite =true );ALTER TABLE table_name

SET TBLPROPERTIES (delta.autoOptimize.optimizeWrite =true );This feature has two benefits:

The weakness of this feature is also clear. Since one executor is used to merge the writes of table files, the parallel writes of table files are reduced. An additional layer of executor needs to shuffle the written data, which brings additional overhead. Therefore, when using this feature, you need to evaluate these scenarios:

It is necessary to continuously insert the arrived data into Delta tables in stream processing scenarios, such as streaming data into the lake. Each time the data is inserted, a new table file is created to store the newly arrived data. If it is triggered every ten seconds, the number of table files generated by such operation of stream processing in one day will reach 8,640. The operation of stream processing is usually long-running, so running this operation of stream processing will generate millions of table files within 100 days. For such a Delta table, only the maintenance of metadata is a great challenge, and the query performance is sharply deteriorating.

Databricks provides the feature of automatically merging small files to solve the above problems. After data is written to the Delta table each time, the system checks the number of table files in the Delta table. If the number of small files whose size is less than 128 MB in the Delta table reaches the threshold, the system merges the small files in the Delta table into a large file.

This feature is controlled by the table attribute delta.autoOptimize.autoCompact and is the same as the feature delta.autoOptimize.optimizeWrite. You can specify this feature when you create a table or modify the created table. The threshold for automatic merging is controlled by the spark.databricks.delta.autoCompact.minNumFiles. The default value is 50. If the number of small files reaches 50, table file merging will be performed. The maximum size of generated files after merging is 128 MB. If you need to adjust the size of the merged target file, you can adjust the configuration spark.databricks.delta.autoCompact.maxFileSize.

Automatic small file merging is written to Delta tables, and the written small files in tables are triggered when reaching the threshold. In addition to automatic merging, Databricks provides the Optimize command to enable users to manually merge small files, optimize the table structure, and make the structure of table files more compact. The Optimize command uses the bin-packing algorithm, which merges small files in Delta tables and generates more balanced table files after merging. (The table file size is similar to each other.) For example, you only need to run the following command to optimize the table file of the student table in Delta tables:

OPTIMIZE student;The Optimize command supports the merging of small files in the whole table and the merging of table files in specific partitions. For example, you can only merge small files in partitions whose date is after 2017-01-01:

OPTIMIZE student WHERE date >= '2017-01-01'According to the test data of DDI products, the Optimize command can improve the query performance by more than eight times.

Databricks has made many optimizations in the data query, including:

There are two classic technologies of query optimization in the data query system. One technology processes data at a faster speed, and the other technology reduces the amount of data that needs to be scanned by skipping irrelevant data. Data Skipping belongs to the latter optimization technology. It uses statistics on table files to skip irrelevant table files, thus improving query performance.

When you add a table file to Delta tables, Delta Lake stores statistics of the first 32 columns of data of the table file in the metadata of Delta tables, including the maximum and minimum values of the data columns and the number of rows that are null. During queries, Databricks uses these statistics to improve query performance. For example, for the x column of a Delta table, we assume the minimum value of the x column of a table file of the table is 5, and the maximum value is 10. If the query condition is where x < 3, according to the statistics of the table file, we can conclude that the table file cannot contain the data we need. Thus, we can directly skip the table file to reduce the amount of scanned data, improving query performance.

The implementation principle of Data Skipping is similar to the Bloom filter. Query conditions are used to determine whether the data to be queried may exist in a table file. This reduces the amount of data to be scanned. If the table file cannot contain queried data, you can skip it. If the table file may contain queried data, you need to scan the table file.

In order to skip as many table files as possible that are not related to queries, we need to narrow the min-max gap of table files so similar data can be aggregated in the file as much as possible. For example, we assume that a table contains ten table files, and the value of the x column in the table is [1,10]. If the distribution of x columns in each table file is [1,10], for the query condition: where x < 3, no table file cannot be skipped. Therefore, performance cannot be improved. If the min-max of each table file is 0, the distribution of x columns in table file 1 is [1, 1], and the distribution of x columns in table file 2 is [2, 2], for the query condition: where x < 3, you can skip 80% of table files. Inspired by this idea, Databricks supports the use of Zordering to aggregate data, narrow the min-max gap of table files, and improve query performance. We describe the principles and usage of Zordering optimization in the following section.

As explained in the preceding section, the columns as query conditions in a table file should be as compact as possible to skip as many irrelevant table files as possible. It means the min-max gap should be as small as possible. Zordering can implement the feature, which can store the associated information in multiple dimensions into the same group of files. Therefore, to be exact, Zordering is a data layout optimization algorithm, and it can significantly improve query performance by combining with Data Skipping.

The use of Zordering is very simple. For table events, if column eventType and generateTime are often used as query conditions, you can execute the command below:

OPTIMIZE events ZORDER BY (eventType, generateTime)Delta tables use column eventType and generateTime to adjust the data layout to make the eventType and generateTime in the table file as compact as possible.

According to our experiments on DDI, Zordering optimization can achieve a performance improvement of 40 times. Please refer to the official documents of DDI at the end of this article for specific test cases.

The Bloom filter is also a very useful Data-skipping technology. This technology can quickly determine whether a table file contain the data to be queried. If it does not contain the data, the file will be skipped in time. This reduces the amount of scanned data and improves query performance.

If you create a Bloom filter index on a column of a table and use where col = "something" as a query condition, you can use the Bloom filter index to draw two conclusions when scanning the files in the table. The file does not contain col = "something" rows, and the file may contain col = "something" rows.

col = "something" rows, you can skip the file. This reduces the amount of scanned data and improves query performance.col = "something" rows, the engine will process the file. Note: This only determines that the file may contain target data. The Bloom filter defines an indicator that describes the probability of a judgment error. It is the probability that the system judges the file containing the data to be queried, but the file does not contain the target data. It is called False Positive Probability (FPP).Databricks supports file-level Bloom filters. If Bloom filter indexes are created in some columns of a table, each table file of the table is associated with an index file of Bloom filter. Those index files are stored in the subdirectory (the_delta_index) of the same directory as the table file. Before reading those table files, Databricks checks the index files and determines whether the table files contains the data to be queried, according to the preceding steps. If a table file does not contain the data, it is skipped directly. Otherwise, it processes the file.

The creation of the Bloom filter index is similar to a traditional database index, but you need to specify the FPP and the number of possible values in the column:

CREATE BLOOMFILTER INDEX ON TABLE table_name

FOR COLUMNS(col_name OPTIONS (fpp=0.1, numItems=50000000))According to our experiments on DDI, Bloom filter indexes can achieve a performance improvement by more than three times. Please refer to the official documentation of DDI at the end of this article for test cases.

Dynamic File Pruning (DFP) is similar to Dynamic Partition Pruning. Both are pruning in the Join execution phase of dimension tables and fact tables to reduce the amount of scanned data and improve query efficiency.

Let's take a simple query as an example to introduce the principle of DFP:

SELECT sum(ss_quantity) FROM store_sales

JOIN item ON ss_item_sk = i_item_sk

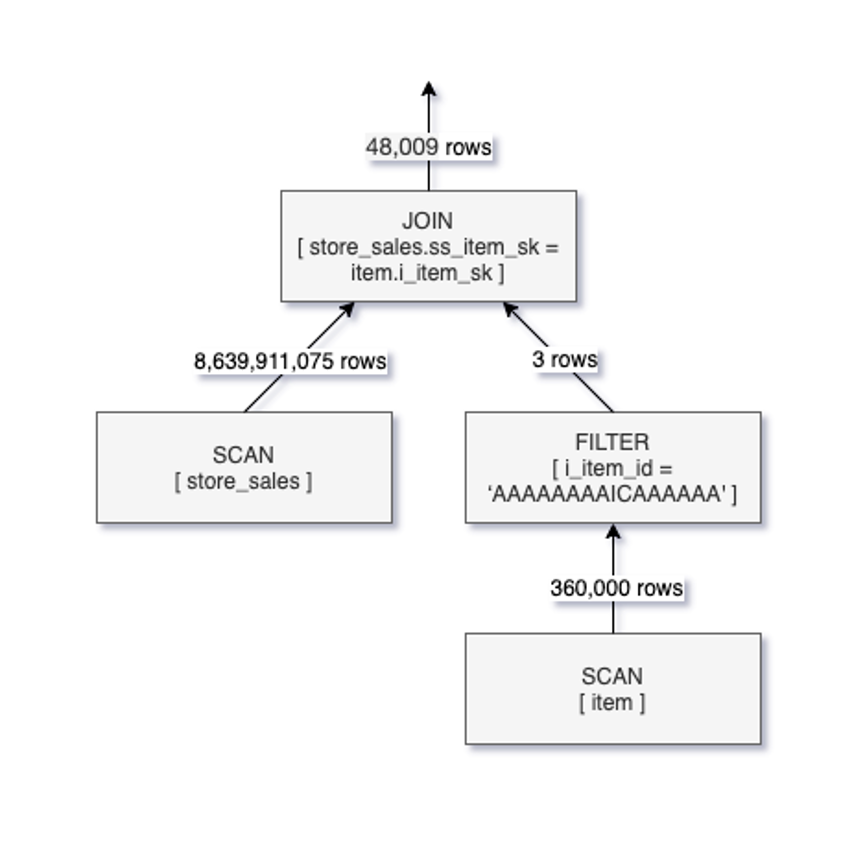

WHERE i_item_id = 'AAAAAAAAICAAAAAA'In this query, item is a dimension table in which the amount of data is small, store_sales is a fact table in which the amount of data is very large, and the where query condition applies to the dimension table. If DFP is not enabled, the logical execution plan of the query is listed below:

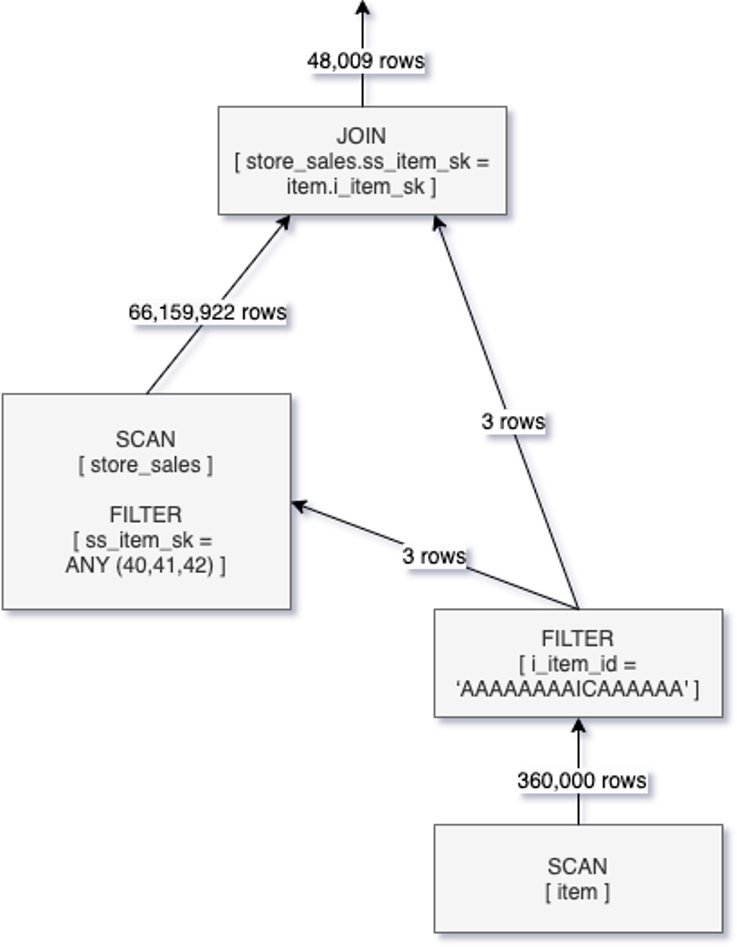

As shown in the preceding figure, a full table scan is performed on store_sales, and the rows in the filtered item table are joined. Although more than 40,000 data records are returned, over 8 billion data records in the store_sales table are scanned. There is a very intuitive optimization for this query. First, query out i_item_id = 'AAAAAAAAICAAAAAA' data rows in the item table. Then, use the i_item_sk value of these data rows as the query condition for the ss_item_sk of the table store_sales to filter in the SCAN phase of the table store_sales. Combined with the Data Skipping technology described above, the scanning of table files can be greatly reduced. This idea is the fundamental principle of DFP. The logical execution plan after DFP is enabled is shown in the following figure:

After DFP is enabled, the filtering condition is pushed down to the SCAN operation, and only more than 600 million pieces of data in store_sales are scanned. From the results, after DFP is enabled, the query has achieved a 10-fold performance improvement. Databricks also tests the TPC-DS for this feature. The test has found that after DFP is enabled, the 15th query of the TPC-DS has achieved an 8-fold performance improvement. There are 36 queries that have achieved a performance improvement by two times or more.

This article describes the performance benefits of the Delta Lake of Databricks Enterprise Edition. Those features can improve the query performance of Spark SQL and accelerate the query speed of Delta tables. Databricks provide value for many enterprises based on the lake house architecture Enterprise Edition.

Zuoyebang's Best Practices for Building Data Lakes Based on Delta Lake

The Principles of EMR StarRocks' Blazing-Fast Data Lake Analytics

62 posts | 7 followers

FollowAlibaba EMR - October 12, 2021

Alibaba EMR - August 22, 2022

Alibaba EMR - September 13, 2022

Alibaba EMR - September 23, 2022

Alibaba EMR - July 20, 2022

Alibaba EMR - September 2, 2022

62 posts | 7 followers

Follow Data Lake Formation

Data Lake Formation

An end-to-end solution to efficiently build a secure data lake

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn MoreMore Posts by Alibaba EMR