By Chang Gaowei (Chang Shan)

Collated by LiveVideoStack

From Taobao Technology Department, Alibaba New Retail

Editor's note: This speech was given by Chang Gaowei, a Technical Expert from the Taobao Technology Department, at LiveVideoStack 2019 Shenzhen. It is aimed at practitioners in the live streaming industry and technicians who are interested in low-latency live streaming and WebRTC technologies. This article introduces Taobao's exploration of low-latency live streaming technologies, how to achieve live streaming with latency within one second based on WebRTC technology, and the business value of low-latency streaming for e-commerce live streaming.

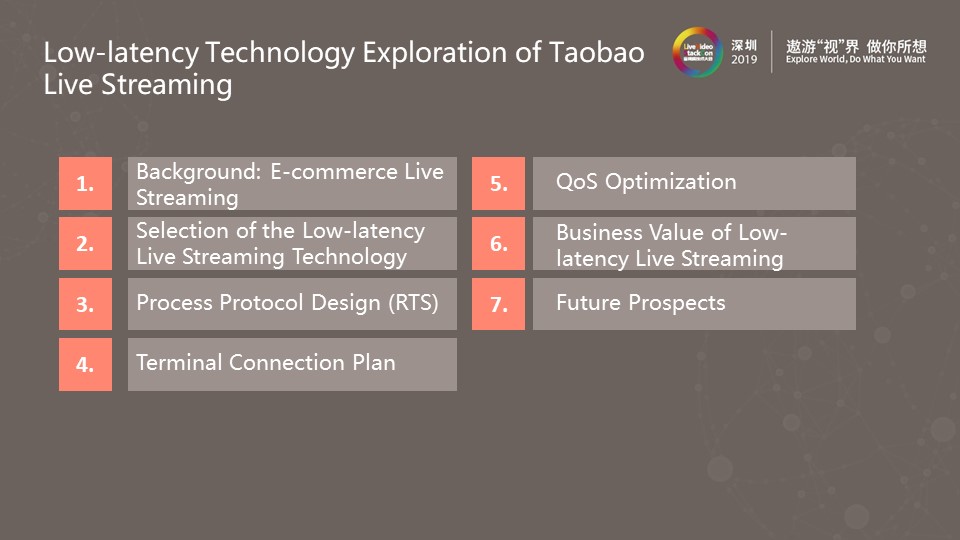

Author: Chang Gaowei (Changshan), Technical Expert from Taobao Technology Department. This article introduces how Taobao live streaming reduces the latency in e-commerce live streaming. Low-latency live streaming is a new trend of current live streaming technologies. The following focuses on our practices in this area. Now, we will look at each of the aspects shown in the preceding figure.

Today, Taobao live streaming is becoming increasingly familiar to all of us. Since its launch in 2016, the turnover of Taobao live streaming has set new highs in recent years. In 2018, its turnover exceeded RMB 100 billion. During the last Double 11 Global Shopping Festival, the turnover reached RMB 20 billion.

E-commerce live streaming and powerful interaction are the two major strengths of Taobao live streaming.

Traditional live streaming suffers from high latency. Generally, it takes more than 10 seconds for the anchor to respond to the audience's comments. Our initial goal in researching low-latency live streaming was to use multimedia technologies to reduce this latency and improve the efficiency of interaction between anchors and their fans.

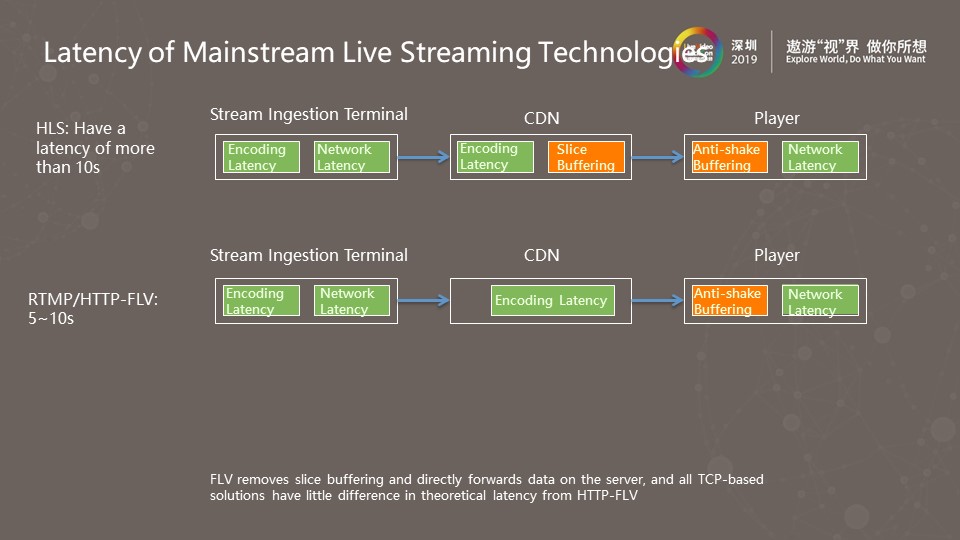

After analyzing current mainstream live broadcasting technologies, we found that HLS and RTMP/HTTP-FLV were two major protocols used in this scenario before the emergence of low-latency live streaming.

For HLS, latency comes mainly from encoding and decoding, the network, and content distribution network (CDN) delivery. Because HLS is a slice-based protocol, its buffering latency can be divided into two parts: the server buffers data while it builds each slice, and the player buffers slices to absorb jitter. As a result, both the size and the number of slices affect HLS latency, which generally exceeds 10 seconds.
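As a rough illustration of why slice size and slice count dominate HLS latency, the sketch below simply adds up the two buffering components. The function name, the additive model, and the sample numbers are assumptions made for illustration, not measurements from Taobao.

```python
def hls_buffer_latency(segment_seconds: float, player_segments: int) -> float:
    """Rough lower bound on HLS buffering latency, in seconds.

    Assumed model: the server must finish one full slice before publishing it,
    and the player waits for `player_segments` slices before it starts playing.
    Encoding, network, and CDN delays are ignored.
    """
    server_side = segment_seconds                      # slice being built on the server
    player_side = segment_seconds * player_segments    # slices queued in the player
    return server_side + player_side


if __name__ == "__main__":
    for seconds, count in [(2.0, 3), (5.0, 3)]:
        print(seconds, count, hls_buffer_latency(seconds, count))
```

Under this assumed model, 5-second slices with three buffered slices already cost 20 seconds, while 2-second slices cost 8 seconds, before any encoding or network delay is counted.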

For RTMP/HTTP-FLV, most vendors in China use RTMP, whose server side is better optimized for latency than that of HLS. The RTMP server does not slice the stream but forwards each frame individually, which minimizes the CDN delivery latency. The remaining RTMP latency comes from the player's jitter buffer: to keep playback smooth under network jitter, the player introduces a buffering latency of 5 to 10 seconds.

Both protocols are based on TCP. Because vendors in China have already pushed RTMP close to the minimum latency achievable over TCP, it would be hard for any TCP-based protocol to deliver lower latency than the current RTMP protocol.

TCP is not suitable for low-latency live streaming due to its inherent features, for the following reasons.

Due to the preceding reasons, we chose a UDP-based solution instead.

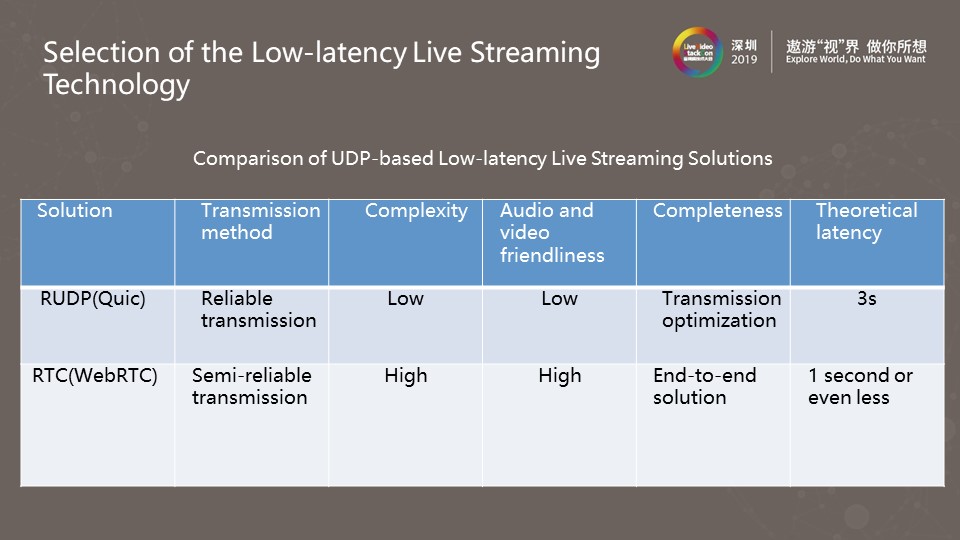

The preceding figure compares two UDP-based solutions. The first is a proven UDP solution, such as QUIC, and the other is an RTC solution, such as WebRTC.

We compared the two solutions from five dimensions:

To sum up, the greatest advantage of the QUIC solution lies in its low complexity. However, achieving a lower latency with this solution would be more complex. Eventually, we chose WebRTC as our low-latency solution due to its technical sophistication. We also believe that the integration of RTC and live streaming technologies will be a trend in audio and video transmission in the future.

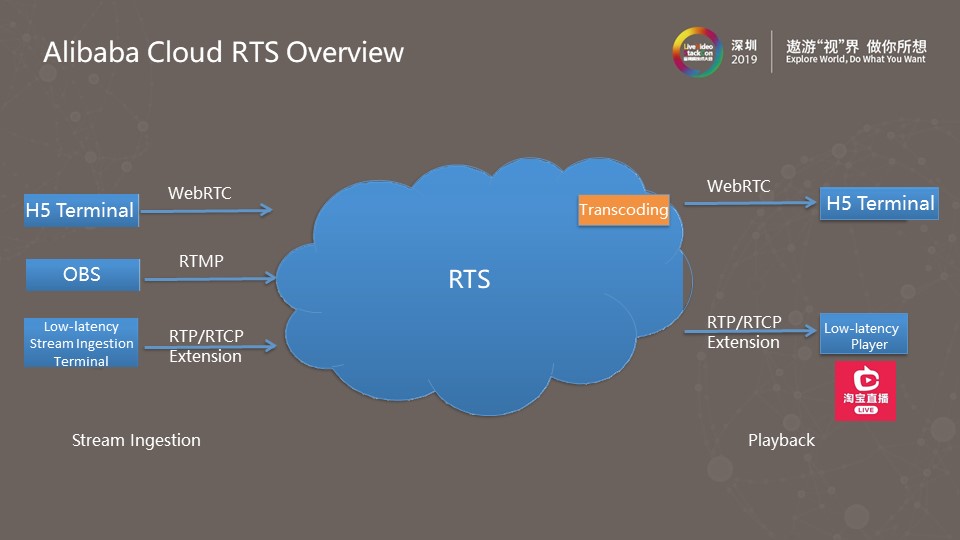

RTS is a low-latency live streaming system co-built by Alibaba Cloud and Taobao Live. This system is divided into two parts:

Next, I will introduce low-latency live streaming technologies in a logical sequence from process protocol to terminal solution. Also, I will answer these common questions:

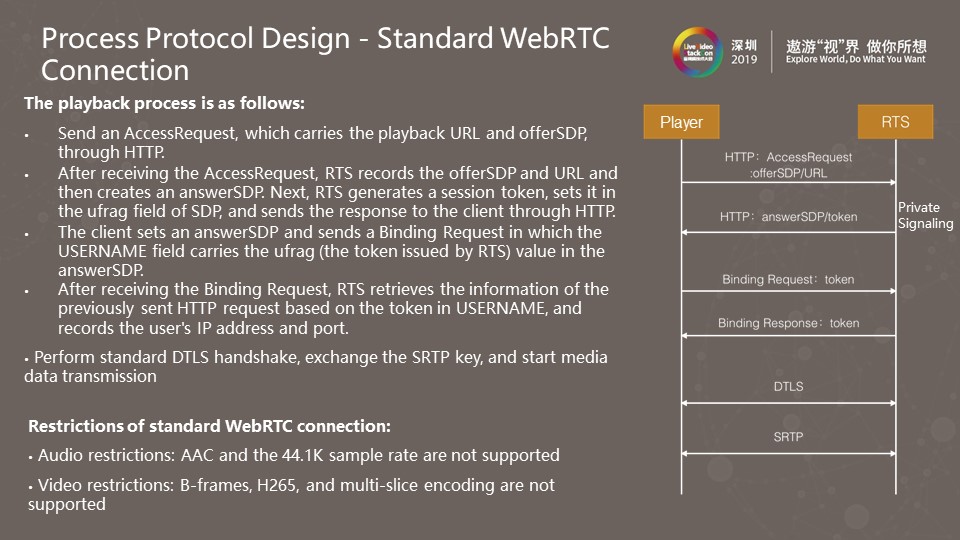

Generally, the playback process is:

Because RTS is a single-port solution, the token is passed in the USERNAME attribute of the STUN Binding Request. The token in the UDP request identifies exactly which user sent it. Conventional WebRTC, by contrast, differentiates users by port.

However, allocating a separate port for each user in RTS would incur huge O&M costs.
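To make the single-port idea concrete, here is a minimal sketch of how a server could recover the token from the USERNAME attribute of a STUN Binding Request and look up the session. The message layout follows the STUN specification; the function names, the token strings, and the `sessions` table are hypothetical and do not describe the actual RTS implementation.

```python
import struct
from typing import Optional

STUN_BINDING_REQUEST = 0x0001
STUN_MAGIC_COOKIE = 0x2112A442
STUN_ATTR_USERNAME = 0x0006


def build_binding_request(token: str, transaction_id: bytes) -> bytes:
    """Build a minimal STUN Binding Request carrying `token` as USERNAME."""
    value = token.encode("utf-8")
    padding = b"\x00" * (-len(value) % 4)  # attributes are padded to 4 bytes
    attr = struct.pack("!HH", STUN_ATTR_USERNAME, len(value)) + value + padding
    header = struct.pack("!HHI", STUN_BINDING_REQUEST, len(attr), STUN_MAGIC_COOKIE)
    return header + transaction_id + attr


def extract_username(datagram: bytes) -> Optional[str]:
    """Return the USERNAME of a STUN Binding Request, or None if absent."""
    if len(datagram) < 20:
        return None
    msg_type, msg_len, cookie = struct.unpack("!HHI", datagram[:8])
    if msg_type != STUN_BINDING_REQUEST or cookie != STUN_MAGIC_COOKIE:
        return None
    offset, end = 20, min(20 + msg_len, len(datagram))
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", datagram[offset:offset + 4])
        value = datagram[offset + 4:offset + 4 + attr_len]
        if attr_type == STUN_ATTR_USERNAME:
            return value.decode("utf-8", errors="replace")
        offset += 4 + attr_len + (-attr_len % 4)  # skip value and its padding
    return None


# Hypothetical session table: every user shares one UDP port and is told
# apart only by the token carried in the request.
sessions = {"token-alice": "session-1", "token-bob": "session-2"}

request = build_binding_request("token-bob", transaction_id=bytes(12))
print(sessions.get(extract_username(request)))
```

Because the lookup key travels inside the packet, the server does not need a dedicated port per viewer.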

In addition, the standard WebRTC connection process has the following restrictions.

It does not support AAC or the 44.1 kHz sample rate for audio, both of which are commonly used in live streaming.

In addition, it does not support video coding features such as B-frames and H265, and multi-slice encoding causes picture blurring in weak network conditions. Establishing a WebRTC connection also takes a long time, which hurts the quick-startup experience.

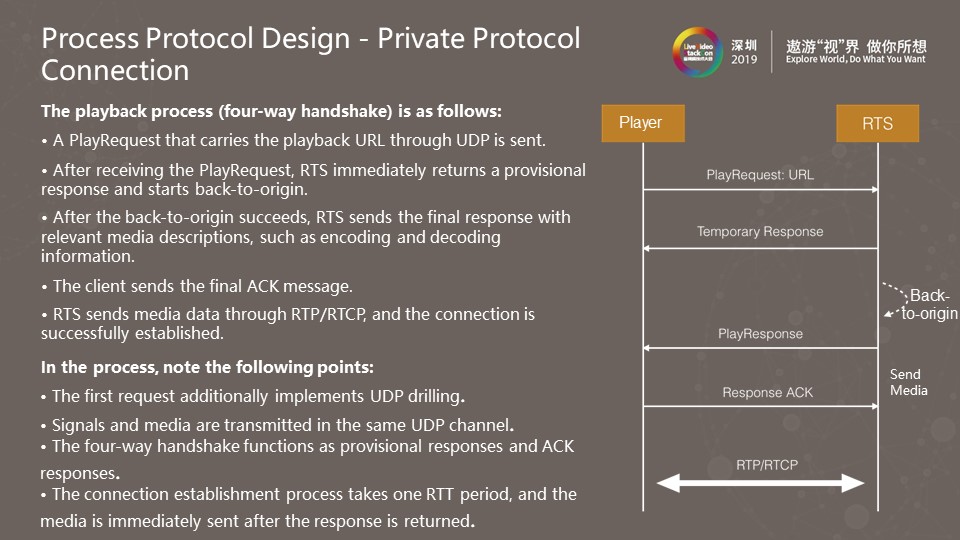

Based on the preceding problems, we designed a private protocol-based connection method with better efficiency and compatibility.

The playback process (four-way handshake) is:

RTS sends media data through RTP/RTCP, and the connection is established. In this process, note the following points:

The four-way handshake process is designed to ensure the connection speed and the reliable delivery of important information.

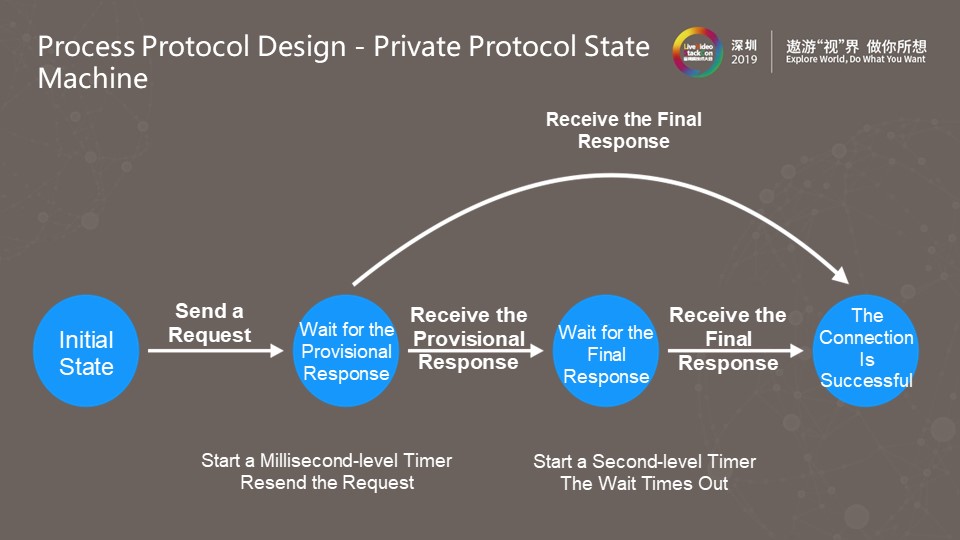

The preceding figure shows the process state machine of a private protocol.

In the initial state, the client sends a playback request and then enters the pending provisional response state.

In the pending provisional response state, the system starts a millisecond-level quick retransmission timer. If the timer expires before a provisional response is returned, the playback request is quickly retransmitted to ensure the connection speed. After receiving the provisional response, the system enters the pending final response state and starts a second-level timer.

After the final response is received, the connection is successfully established.

During the process, provisional responses can be lost or arrive out of order, so the final response may arrive before the provisional response. In that case, the state machine moves directly from the pending provisional response state to the final state.
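The state machine described above can be sketched roughly as follows. The class and method names, the timer values, the retry limit, and the failure state are assumptions; the article only states that the first timer is millisecond-level and the second is second-level.

```python
from enum import Enum, auto


class State(Enum):
    INIT = auto()
    WAIT_PROVISIONAL = auto()
    WAIT_FINAL = auto()
    ESTABLISHED = auto()
    FAILED = auto()          # assumed terminal state when the client gives up


class PlaybackHandshake:
    FAST_RETRANSMIT_MS = 100   # assumed "millisecond-level" timer
    FINAL_TIMEOUT_MS = 5000    # assumed "second-level" timer
    MAX_RETRANSMITS = 5        # assumed retry limit

    def __init__(self, send):
        self.send = send         # callable that transmits one signaling message
        self.state = State.INIT
        self.timer_ms = None     # duration of the currently armed timer
        self.retransmits = 0

    def start(self):
        self.send("request")
        self.state = State.WAIT_PROVISIONAL
        self.timer_ms = self.FAST_RETRANSMIT_MS

    def on_provisional(self):
        if self.state is State.WAIT_PROVISIONAL:
            self.state = State.WAIT_FINAL
            self.timer_ms = self.FINAL_TIMEOUT_MS

    def on_final(self):
        # A final response may overtake a lost or reordered provisional one,
        # so it is accepted from either waiting state.
        if self.state in (State.WAIT_PROVISIONAL, State.WAIT_FINAL):
            self.send("final-ack")
            self.state = State.ESTABLISHED
            self.timer_ms = None

    def on_timeout(self):
        if (self.state is State.WAIT_PROVISIONAL
                and self.retransmits < self.MAX_RETRANSMITS):
            self.retransmits += 1
            self.send("request")         # quick retransmission
        elif self.state in (State.WAIT_PROVISIONAL, State.WAIT_FINAL):
            self.state = State.FAILED    # assumed behavior on giving up
            self.timer_ms = None


sent = []
handshake = PlaybackHandshake(sent.append)
handshake.start()
handshake.on_timeout()   # provisional response lost: request is retransmitted
handshake.on_final()     # final response arrives before any provisional one
print(handshake.state, sent)
```

In this run the client never sees a provisional response, yet it still reaches the established state, which is the shortcut transition described in the text.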

For private signaling, we chose the RTCP protocol. The reason is that RFC3550 defines four functions of RTCP, of which the fourth optional function is described like this: RTCP can be used in a "loosely controlled" system to transmit minimal session control information. For example, the standard defines a BYE message to notify that the source is no longer active. On this basis, we extended the signaling messages of connection establishment, including request, provisional response, final response, and final response ACK messages.

At the same time, we chose APP messages among RTCP messages to carry private signaling messages. APP messaging is an extension protocol that RTCP provides for new applications and features. It is an official extension provided by RTCP, and the application layer can customize message types and content. In addition, this protocol was chosen based on the following considerations:

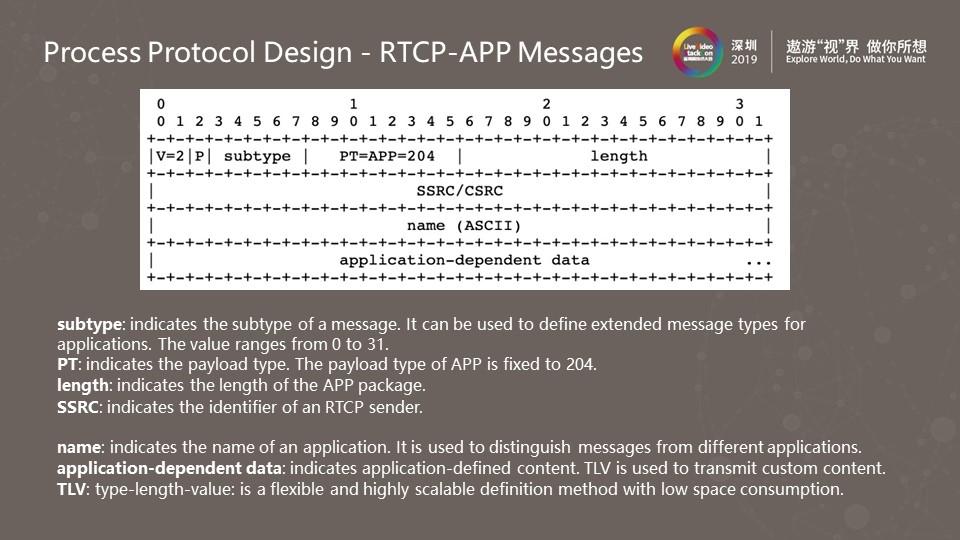

The preceding figure shows the RTCP-APP message header, which contains the following key fields.

1. subtype

The message subtype can be used to define private application extension messages. Requests, provisional responses, and final responses of private signaling are distinguished by the subtype. The subtype value ranges from 0 to 31, where 31 is reserved for future message type extension.

2. payload type

The payload type of APP is fixed to 204. It distinguishes APP messages from other RTP and RTCP messages.

3. SSRC

SSRC is the identifier of an RTCP sender.

4. name

"name" indicates the application name, which is used to distinguish messages from different applications. In RFC3550, two fields are used to distinguish message types. "name" is used to identify the application type, and "subtype" is used to determine the message type. Multiple subtypes are allowed for the same name.

5. application-dependent data

To extend the message content at the application layer, the type, length, and value (TLV) format is used. It is a flexible and highly scalable binary data format with low space consumption.
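The fields above can be put together in a short sketch that packs and parses an RTCP APP packet with a TLV body. The header layout (version, padding bit, 5-bit subtype, payload type 204, length in 32-bit words minus one, SSRC, 4-byte name) follows RFC 3550. The TLV field widths (1-byte type, 2-byte length), the subtype assignment, the name `DEMO`, and the TLV type numbers are assumptions, since the article does not give the actual values.

```python
import struct

RTCP_VERSION = 2
RTCP_PT_APP = 204


def encode_tlv(items):
    """Encode (type, value) pairs; 1-byte type and 2-byte length are assumed."""
    out = b""
    for tlv_type, value in items:
        out += struct.pack("!BH", tlv_type, len(value)) + value
    return out


def decode_tlv(data):
    items, offset = [], 0
    while offset + 3 <= len(data):
        tlv_type, length = struct.unpack("!BH", data[offset:offset + 3])
        items.append((tlv_type, data[offset + 3:offset + 3 + length]))
        offset += 3 + length
    return items


def build_app_packet(subtype, ssrc, name, data):
    if not 0 <= subtype <= 31:
        raise ValueError("subtype must fit in 5 bits")
    if len(name) != 4:
        raise ValueError("name must be exactly 4 bytes")
    # RTCP packets end on a 32-bit boundary; with the P bit set, the last
    # padding octet holds the number of padding octets.
    pad = -len(data) % 4
    padding = b"\x00" * (pad - 1) + bytes([pad]) if pad else b""
    first = (RTCP_VERSION << 6) | ((1 if pad else 0) << 5) | subtype
    length_words = (12 + len(data) + pad) // 4 - 1
    header = struct.pack("!BBHI4s", first, RTCP_PT_APP, length_words, ssrc, name)
    return header + data + padding


def parse_app_packet(packet):
    first, pt, length_words, ssrc, name = struct.unpack("!BBHI4s", packet[:12])
    if first >> 6 != RTCP_VERSION or pt != RTCP_PT_APP:
        raise ValueError("not an RTCP APP packet")
    end = (length_words + 1) * 4
    if first & 0x20:                 # P bit set: strip the padding octets
        end -= packet[end - 1]
    return first & 0x1F, ssrc, name, packet[12:end]


SUBTYPE_REQUEST = 1                  # hypothetical subtype assignment
body = encode_tlv([(1, b"stream-42"), (2, b"\x01")])   # hypothetical TLV types
packet = build_app_packet(SUBTYPE_REQUEST, ssrc=0x1234, name=b"DEMO", data=body)
subtype, ssrc, name, data = parse_app_packet(packet)
print(subtype, hex(ssrc), name, decode_tlv(data))
```

A receiver can first check the payload type and `name` to decide whether the packet belongs to this application, then dispatch on `subtype`, and only then decode the TLV body.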

The protocol body for media complies with the RTP/RTCP and WebRTC specifications. More specifically, H264 follows RFC6184 and H265 follows RFC7798.

For the RTP extension, the standard RTP extension method is used. For compatibility with WebRTC, the definition of the standard RTP extension header follows RFC5285.
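For readers unfamiliar with RFC5285, the sketch below builds and parses its one-byte header extension format: a `0xBEDE` marker, a length in 32-bit words, and elements that each start with a 4-bit ID and a 4-bit length-minus-one. The function names and the extension IDs in the example are hypothetical, and the article does not say which extensions the system actually carries.

```python
import struct

ONE_BYTE_PROFILE = 0xBEDE


def build_one_byte_extension(elements):
    """Pack (id, data) pairs into an RFC 5285 one-byte header extension block."""
    body = b""
    for ext_id, data in elements:
        if not 1 <= ext_id <= 14:
            raise ValueError("one-byte extension IDs range from 1 to 14")
        if not 1 <= len(data) <= 16:
            raise ValueError("element data must be 1 to 16 bytes long")
        body += bytes([(ext_id << 4) | (len(data) - 1)]) + data
    body += b"\x00" * (-len(body) % 4)        # pad to a 32-bit boundary
    return struct.pack("!HH", ONE_BYTE_PROFILE, len(body) // 4) + body


def parse_one_byte_extension(block):
    profile, words = struct.unpack("!HH", block[:4])
    if profile != ONE_BYTE_PROFILE:
        raise ValueError("not a one-byte header extension")
    body, offset, elements = block[4:4 + words * 4], 0, []
    while offset < len(body):
        ext_id = body[offset] >> 4
        length = (body[offset] & 0x0F) + 1
        if ext_id == 0:       # ID 0 marks a padding byte
            offset += 1
            continue
        if ext_id == 15:      # reserved ID: stop parsing
            break
        elements.append((ext_id, body[offset + 1:offset + 1 + length]))
        offset += 1 + length
    return elements


# Hypothetical extension IDs 1 and 2, chosen only for the example.
block = build_one_byte_extension([(1, b"\x10"), (2, b"\xaa\xbb\xcc")])
print(block.hex(), parse_one_byte_extension(block))
```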

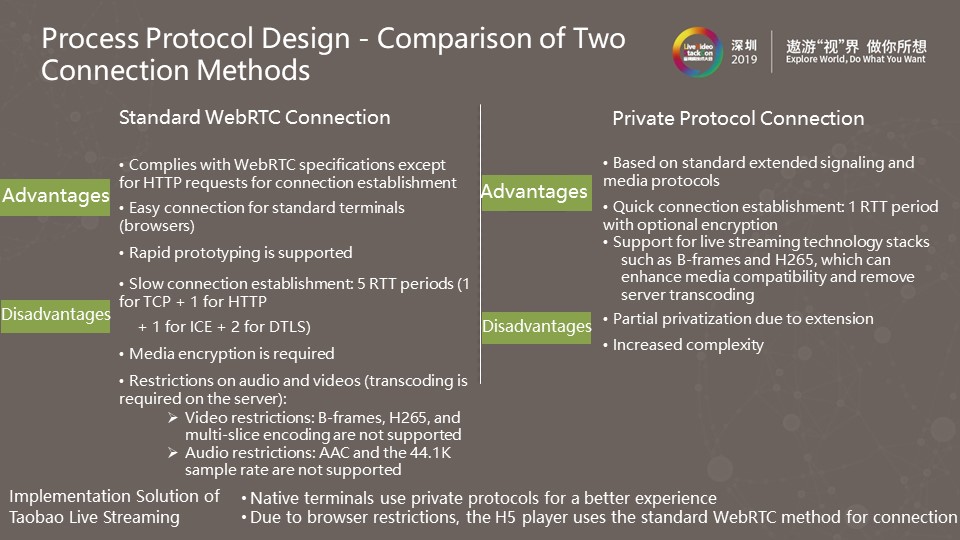

Standard WebRTC connection has the following advantages:

Standard WebRTC connection has the following disadvantages:

Private protocol connection has the following advantages:

Private protocol connection has the following disadvantages:

Therefore, the use of private protocols increases the complexity of this solution.

In the final Taobao live streaming solution, to obtain a better user experience, we use private protocols to connect native terminals, and this method has been widely adopted online.

There are three principles for designing a process protocol:

In practice, we did not discard the RTP/RTCP protocols in favor of completely new private protocols, for two reasons. The first is the workload: redesigning protocols requires more work than using standard protocols. The second is that the RTP/RTCP protocol design is streamlined and proven, whereas a new design may not take all potential problems into account. For these reasons, we chose to extend the standards rather than redesign them.

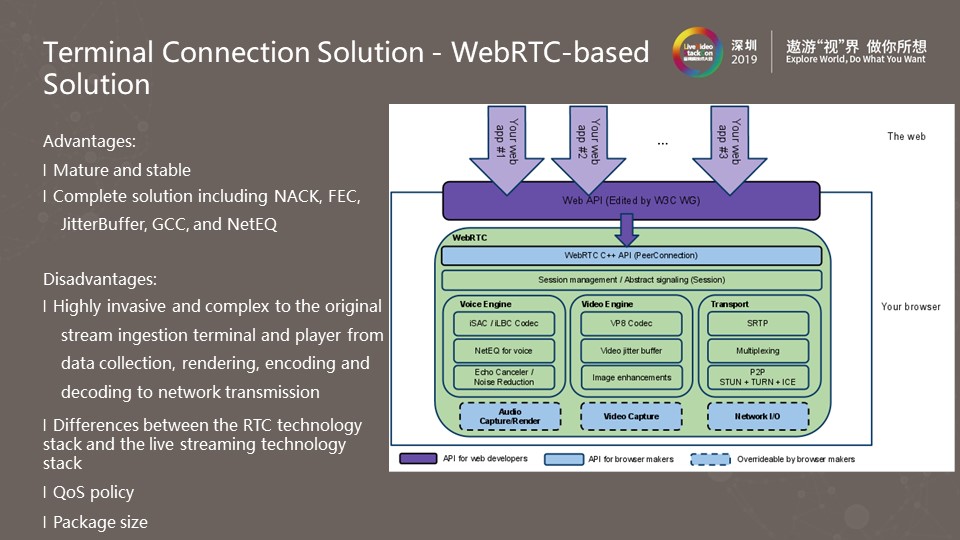

The connection solution based on the complete set of WebRTC modules uses all WebRTC modules and modifies some of them to implement low-latency live streaming capabilities.

This solution has both pros and cons.

After years of development, this solution has become very mature and stable. At the same time, it provides a complete solution, including NACK, JitterBuffer, and NetEQ, which can be directly used for low-latency live streaming.

The preceding figure shows the overall WebRTC architecture. This solution is a complete end-to-end solution, which covers data collection, rendering, encoding, and decoding, as well as network transmission. Therefore, it is highly invasive and complex to existing stream ingestion terminals and players.

The RTC technology stack is different from the live streaming technology stack. The former does not support features like B-frames and H265. In terms of the QoS policy, the native application scenario of WebRTC is phone calls. The basic QoS policy of WebRTC is to prioritize latency over picture quality, which is not necessarily true for live streaming.

As for the package size, bundling all WebRTC modules into the app increases the package size by at least 3 MB.

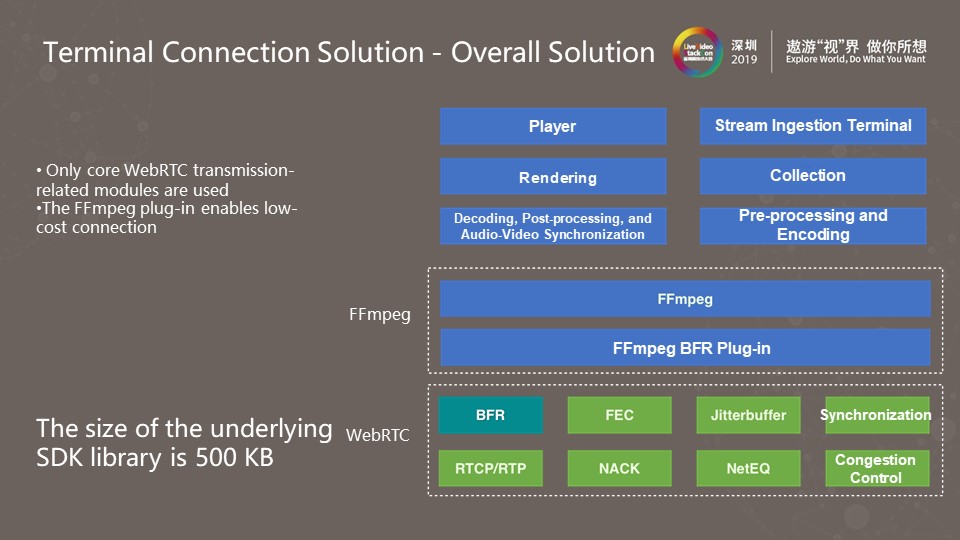

The current overall connection solution for terminals is based on WebRTC, as shown in the preceding figure. However, we only use several core transmission-related modules of WebRTC, including RTP/RTCP, FEC, NACK, JitterBuffer, audio and video synchronization, and congestion control.

We encapsulated these basic modules as an FFmpeg plug-in and injected it into FFmpeg. The player can then call the FFmpeg method directly to open the URL and access our private low-latency live streaming protocol. This dramatically reduces the modifications needed in the player and stream ingestion terminal and makes low-latency live streaming far less intrusive to the original system. In addition, using only the basic modules greatly reduces the resulting package size.

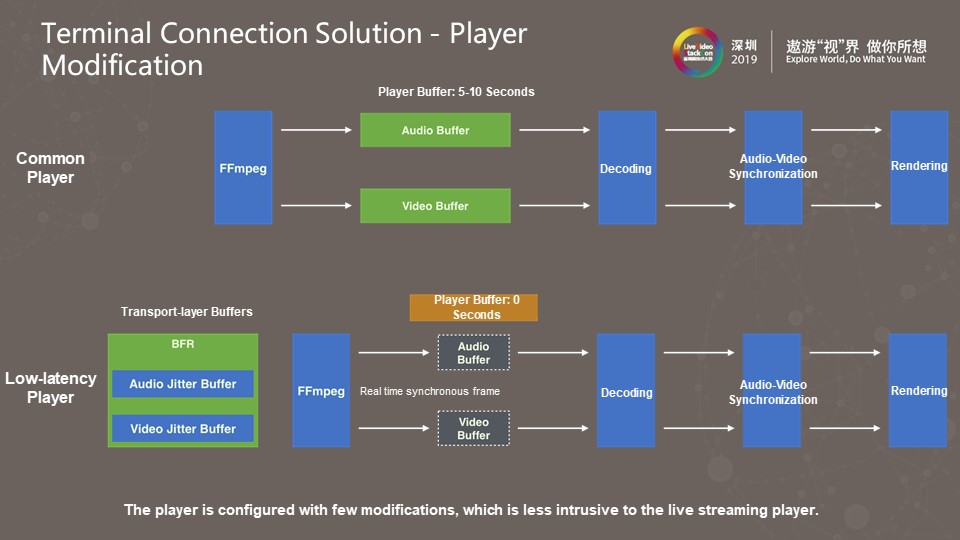

The preceding figure shows the architecture of a common player. The player uses FFmpeg to access the network connection, reads audio and video frames, and caches them in the player buffer. Then, these frames will be decoded, audio-video synchronized, and rendered.

The following figure shows the overall architecture of the low-latency live streaming system. As you can see, a low-latency live streaming plug-in is added to FFmpeg to support our private protocols. The buffer duration of the player is set to 0 seconds. The audio and video frames output by FFmpeg are passed directly to the decoder, and then synchronized and rendered. In this architecture, we moved the player's original buffer into the transport-layer SDK and used JitterBuffer to dynamically adjust the buffer size based on network conditions.

This solution requires minor modifications to the player and does not affect the original player logic.
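The idea of sizing the buffer from network conditions can be illustrated with a small sketch. It swaps in the simple interarrival-jitter estimator from RFC 3550 rather than WebRTC's own, more elaborate JitterBuffer logic, and the class name, the multiplier, and the clamping bounds are all assumptions.

```python
class AdaptiveJitterBuffer:
    """Toy buffer-size controller driven by the RFC 3550 jitter estimate."""

    def __init__(self, min_ms=20.0, max_ms=1000.0, multiplier=3.0):
        self.min_ms = min_ms            # assumed floor for the buffer delay
        self.max_ms = max_ms            # assumed ceiling for the buffer delay
        self.multiplier = multiplier    # assumed safety factor over the jitter
        self.jitter_ms = 0.0
        self._last_transit = None

    def on_packet(self, send_ms: float, recv_ms: float) -> float:
        """Update the jitter estimate and return the new target delay."""
        transit = recv_ms - send_ms
        if self._last_transit is not None:
            delta = abs(transit - self._last_transit)
            # RFC 3550: J = J + (|D| - J) / 16
            self.jitter_ms += (delta - self.jitter_ms) / 16.0
        self._last_transit = transit
        return self.target_delay_ms()

    def target_delay_ms(self) -> float:
        wanted = self.multiplier * self.jitter_ms
        return max(self.min_ms, min(self.max_ms, wanted))


stable = AdaptiveJitterBuffer()
jittery = AdaptiveJitterBuffer()
for i in range(20):
    send = i * 40.0                                   # one packet every 40 ms
    stable.on_packet(send, send + 50.0)               # constant transit time
    jittery.on_packet(send, send + (50.0 if i % 2 == 0 else 150.0))
print(stable.target_delay_ms(), jittery.target_delay_ms())
```

On the stable path the target stays at the assumed minimum, while on the jittery path it grows, which is the behavior a fixed multi-second player buffer cannot provide.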

Low-latency live streaming technologies have been widely applied in Taobao live streaming. They reduce the latency of Taobao live streaming and improve the user interaction experience, which is of great value for Alibaba Taobao.

After all, all technical optimizations are meant to yield business value, and any improvement in the user experience should help drive business development. From online tests, we found that low-latency live streaming can significantly promote the transactions of e-commerce live streaming, with the unique visitor (UV) conversion rate increased by 4% and the gross merchandise volume (GMV) by 5%.

Additionally, low-latency live streaming technologies support various business scenarios, such as live auction and live customer service.

We see three prospects for low-latency live streaming technologies:
