
Live stream shopping is a rapidly growing e-commerce trend. However, audio-visual technology may be unfamiliar territory for many front-end engineers. This article explores the world of Taobao live broadcast and covers the basics of streaming media technology, including text, graphics, images, audio, and video. It also introduces players, web media technologies, and mainstream frameworks, with the aim of opening the door to frontend multimedia.

| Parameter | Description |
|---|---|
| Bitrate | The amount of data encoded per unit of time. The higher the bitrate, the higher the clarity, and the closer the processed file is to the original file. |
| Frame Rate | The number of frames displayed per second. A higher frame rate produces smoother playback, while a low frame rate may cause visible stutter. |
| Compression Rate | Calculated as: file size after compression / size of original file × 100%. For encoding, the smaller the file size after compression, the better the compression performance. However, it takes longer to decompress the file. |
| Resolution | The number of pixels in each frame (width × height), which measures the amount of data in an image. It is closely related to the video definition. |
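To make these parameters concrete, the following sketch estimates the bitrate of uncompressed video from its resolution and frame rate, then applies the compression rate formula from the table. The 12 bits per pixel (8-bit YUV 4:2:0) and the 2 Mbit/s encoder output are assumptions chosen for illustration, not figures from this article.

```python
def raw_video_bitrate(width: int, height: int, fps: float, bits_per_pixel: int = 12) -> float:
    """Bits per second of uncompressed video.

    12 bits per pixel is an assumption that corresponds to 8-bit YUV 4:2:0.
    """
    return width * height * bits_per_pixel * fps


def compression_rate(compressed_size: float, original_size: float) -> float:
    """File size after compression / size of original file x 100%."""
    return compressed_size / original_size * 100


raw_bps = raw_video_bitrate(1280, 720, 30)  # 720p at 30 frames per second
encoded_bps = 2_000_000                     # hypothetical 2 Mbit/s encoder output

print(f"raw bitrate: {raw_bps / 1e6:.1f} Mbit/s")
print(f"compression rate: {compression_rate(encoded_bps, raw_bps):.2f}%")
```

Doubling the frame rate or the pixel count doubles the raw bitrate, which is why the encoder's target bitrate has to be chosen together with resolution and frame rate.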

Popular container formats include MP4, AVI, FLV, TS, M3U8, WebM, OGV, and MOV.

| Codec | Description |
|---|---|
| H.264 | H.264, also known as AVC, is the most popular encoding format today. |
| H.265 | H.265, also known as HEVC, is a newer format for efficient video encoding. It is the successor to H.264/AVC. |
| VP9 | VP9 is the next-generation video encoding format developed by the WebM Project. VP9 supports the full range of web and mobile use cases from low bitrate compression to high-quality ultra-high definition. VP9 also supports 10- and 12-bit encoding and high dynamic range (HDR) encoding. |
| AV1 | AV1 is an open, royalty-free video encoding format developed by the Alliance for Open Media (AOMedia), of which Google is a founding member. AV1 was designed as the successor to VP9 and is a strong competitor of H.265. |

| Parameter | Description |
|---|---|
| Sample Rate | The audio sample rate is the number of samples that a recording device collects from an audio signal per second. Sound sampled at a higher rate sounds more real and natural when reproduced. |
| Sample Size | The number of samples collected per second is measured by the sample rate. The number of bits in each sample is measured by the bit depth, which reflects the accuracy of each sample. |
| Bitrate | The bitrate measures the number of bits transmitted per second. It is also known as the data signal rate. The units for bitrates include bit/s, kbit/s, and Mbit/s. The higher the bitrate, the more data is transmitted per unit of time. |
| Compression Rate | The compression rate is the ratio of the original audio data size (typically uncompressed pulse code modulation (PCM) data) to the size of the audio data after compression coding. |
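The audio parameters are tied together by simple arithmetic: the bitrate of uncompressed audio is the sample rate multiplied by the bit depth and the number of channels. The sketch below works this out for CD-quality stereo and compares it with a hypothetical 128 kbit/s encoded stream; the specific figures are illustrative assumptions.

```python
def pcm_bitrate(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Bits per second of uncompressed audio samples."""
    return sample_rate_hz * bit_depth * channels


def audio_compression_ratio(original_bps: float, compressed_bps: float) -> float:
    """Ratio of the original data rate to the compressed data rate."""
    return original_bps / compressed_bps


cd_quality = pcm_bitrate(44_100, 16, 2)  # 44.1 kHz, 16-bit, stereo
encoded = 128_000                        # hypothetical 128 kbit/s encoded stream

print(f"uncompressed: {cd_quality / 1000:.1f} kbit/s")
print(f"ratio: {audio_compression_ratio(cd_quality, encoded):.1f} : 1")
```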

Popular audio formats include WAV, AIFF, AMR, MP3, and OGG.

| Codec | Description |
|---|---|
| PCM | Pulse code modulation (PCM) is one of the encoding methods used in digital communication. It represents a sampled analog signal as uncompressed digital values. |
| AAC-LC (MPEG AAC Low Complexity) | Advanced Audio Coding - Low Complexity (AAC-LC) is a high-performance audio codec that provides high-quality audio at low bitrates. |
| AAC-LD | Advanced Audio Coding - Low Delay (AAC-LD) or MPEG-4 AAC-LD is an audio codec with the low delay necessary for conference calls and over-the-top (OTT) services. |
| FLAC (Free Lossless Audio Codec) | FLAC is the most popular free lossless audio compression codec. Since 2012, FLAC has been supported by many software and hardware audio products. |

Over the Internet, audio and video media data is transmitted through network protocols, which are distributed at the session layer, presentation layer, and application layer.

Commonly used protocols include the Real-Time Messaging Protocol (RTMP), Real-time Transport Protocol (RTP), RTP Control Protocol (RTCP), Real Time Streaming Protocol (RTSP), HTTP-FLV (Flash Video over HTTP), HTTP Live Streaming (HLS), and Dynamic Adaptive Streaming over HTTP (DASH). Each protocol has its own advantages and disadvantages.

After a live shopping host starts a live stream, a recording device collects the host's voice and image and ingests them into a streaming media server through the corresponding protocol. Then, streaming data is pulled from the streaming media server through a stream pulling protocol to play the streams for viewers.

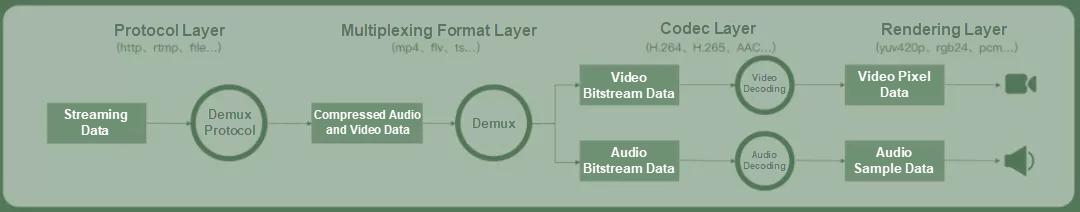

This section introduces player-related technologies and how players process pulled streams.

Video streams must be pulled before they are played back by a player.

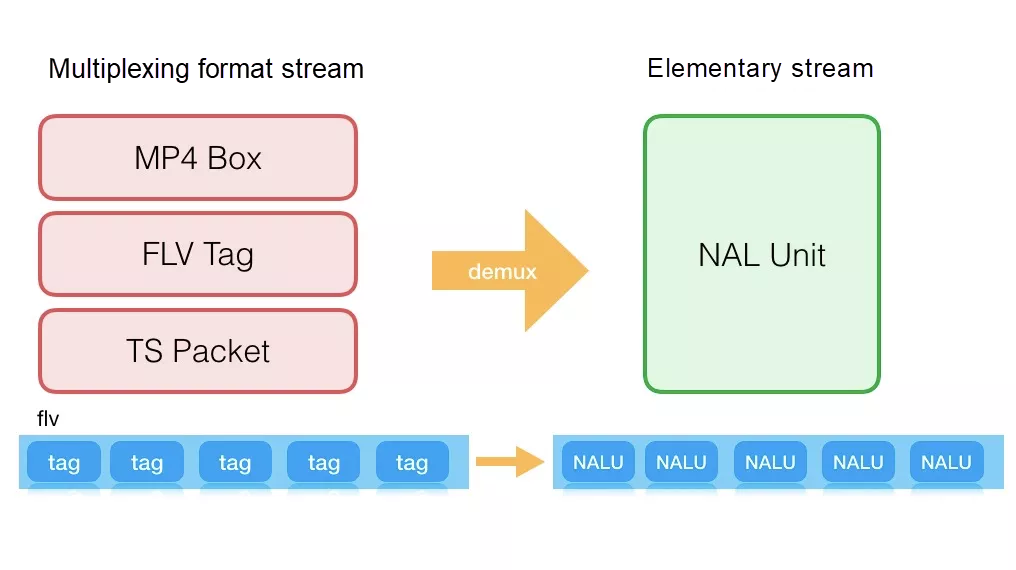

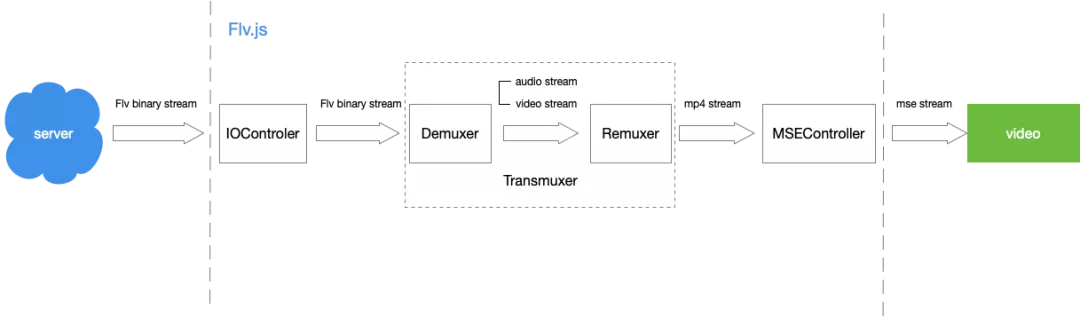

For example, video streaming data in FLV format is pulled by using the Fetch API and Stream API provided by a web browser.
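Once the first bytes of an FLV stream arrive, a player usually validates the 9-byte FLV file header before doing anything else. The following is a minimal sketch of that check, written in Python for brevity; a browser player would perform the same steps on the byte chunks it reads from the response stream. The function and type names are hypothetical.

```python
import struct
from typing import NamedTuple


class FlvHeader(NamedTuple):
    version: int
    has_audio: bool
    has_video: bool
    data_offset: int


def parse_flv_header(data: bytes) -> FlvHeader:
    """Parse the 9-byte FLV file header: signature, version, flags, data offset."""
    if len(data) < 9:
        raise ValueError("need at least 9 bytes for an FLV header")
    signature, version, flags, data_offset = struct.unpack(">3sBBI", data[:9])
    if signature != b"FLV":
        raise ValueError("not an FLV stream")
    # Flag bit 0x04 signals audio tags, bit 0x01 signals video tags.
    return FlvHeader(version, bool(flags & 0x04), bool(flags & 0x01), data_offset)


first_chunk = b"FLV\x01\x05\x00\x00\x00\x09"  # version 1, audio + video
print(parse_flv_header(first_chunk))
```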

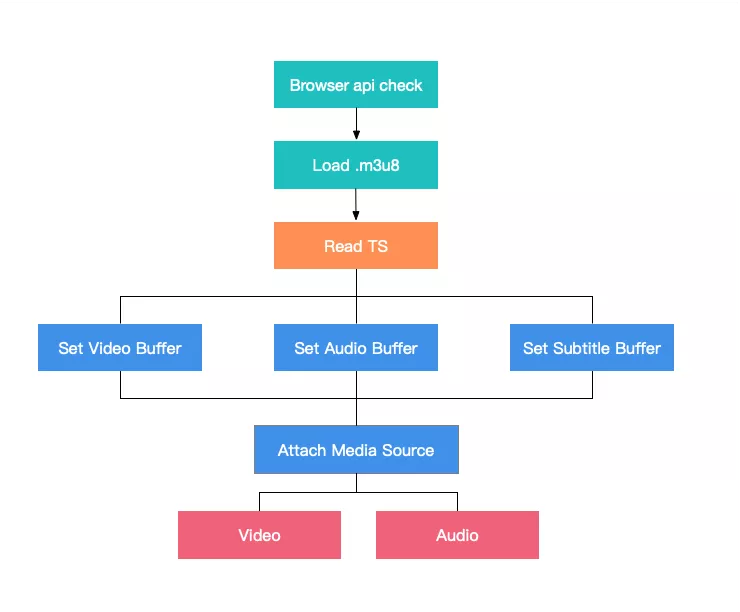

The pulled streaming data is then demultiplexed. Images, sounds, and subtitles (if any) are separated from the pulled streaming data by a demultiplexer. This process is called Demux.

The demultiplexed images, sounds, and subtitles are called elementary streams (ES), which are decoded by a decoder.

The demultiplexed elementary bitstreams are decoded into data that is played back by audio and video players.
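As an illustration of demultiplexing, the sketch below walks the tag sequence of an FLV body and sorts the payloads into audio, video, and script data by tag type. It assumes the input starts right after the FLV file header and the first 4-byte previous-tag-size field. The payloads are placeholder bytes rather than real media, and `make_tag` is a hypothetical helper that exists only to build test input.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, NamedTuple

TAG_KINDS = {8: "audio", 9: "video", 18: "script"}


class FlvTag(NamedTuple):
    kind: str
    timestamp_ms: int
    payload: bytes


def demux_flv_tags(body: bytes) -> Iterator[FlvTag]:
    """Yield complete tags; stop at the first tag that is still incomplete."""
    pos = 0
    while pos + 11 <= len(body):
        tag_type = body[pos] & 0x1F
        size = int.from_bytes(body[pos + 1:pos + 4], "big")
        # 24-bit timestamp plus an extension byte that holds the upper 8 bits.
        timestamp = int.from_bytes(body[pos + 4:pos + 7], "big") | (body[pos + 7] << 24)
        end = pos + 11 + size
        if end + 4 > len(body):  # payload or trailing size field not received yet
            break
        yield FlvTag(TAG_KINDS.get(tag_type, "unknown"), timestamp, body[pos + 11:end])
        pos = end + 4            # skip the 4-byte previous-tag-size field


def make_tag(tag_type: int, timestamp_ms: int, payload: bytes) -> bytes:
    """Build one FLV tag for the demo (hypothetical helper)."""
    header = (bytes([tag_type])
              + len(payload).to_bytes(3, "big")
              + (timestamp_ms & 0xFFFFFF).to_bytes(3, "big")
              + bytes([(timestamp_ms >> 24) & 0xFF])
              + b"\x00\x00\x00")  # stream ID, always 0
    return header + payload + (11 + len(payload)).to_bytes(4, "big")


body = (make_tag(18, 0, b"meta") + make_tag(9, 0, b"V0")
        + make_tag(8, 23, b"A0") + make_tag(9, 40, b"V1"))

streams: Dict[str, List[bytes]] = defaultdict(list)
for tag in demux_flv_tags(body):
    streams[tag.kind].append(tag.payload)
print(dict(streams))
```

Stopping at an incomplete tag instead of raising an error matters for live streams, where data arrives in arbitrary chunks and the remainder of a tag may only show up in the next read.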

The decoded data is of various types, some of which are introduced in the following sections.

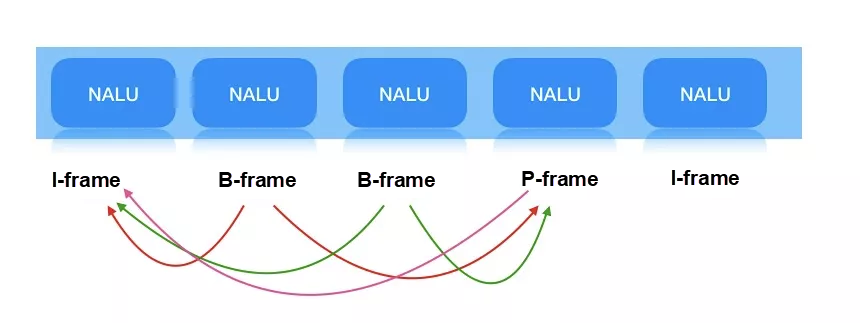

The Sequence Parameter Set (SPS) and Picture Parameter Set (PPS) jointly determine the maximum video resolution, frame rate, and a series of other video playback parameters. The SPS and PPS are typically stored at the start of a bitstream.

The SPS and PPS store a set of global parameters for an encoded video sequence. If these parameters are lost, the decoding may fail.

Supplemental enhancement information (SEI) is optional data that a video encoder can include in the video bitstream it outputs. For example, in live Q&A streams, much of the Q&A-related information is carried in SEI. Because this information travels with the video frames, questions are displayed in sync with the audio and video that viewers see.
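In an H.264 byte stream (Annex B format), the SPS, PPS, and SEI each travel in their own NAL unit, and the low 5 bits of the first byte of a NAL unit identify its type. The sketch below splits a byte stream on start codes and labels each unit. The payload bytes are made-up placeholders, so only the type detection is meaningful here.

```python
from typing import Iterator, List

NAL_TYPES = {1: "non-IDR slice", 5: "IDR slice", 6: "SEI", 7: "SPS", 8: "PPS"}


def split_annexb(stream: bytes) -> Iterator[bytes]:
    """Yield NAL units separated by 00 00 01 or 00 00 00 01 start codes."""
    starts: List[int] = []
    i = stream.find(b"\x00\x00\x01")
    while i >= 0:
        starts.append(i + 3)
        i = stream.find(b"\x00\x00\x01", i + 3)
    for n, begin in enumerate(starts):
        end = starts[n + 1] - 3 if n + 1 < len(starts) else len(stream)
        # A NAL unit never ends in 0x00, so trailing zeros belong to the
        # next 4-byte start code or to padding.
        nal = stream[begin:end].rstrip(b"\x00")
        if nal:
            yield nal


def nal_type_name(nal: bytes) -> str:
    return NAL_TYPES.get(nal[0] & 0x1F, "other")


sample = (b"\x00\x00\x00\x01\x67\x42\x00\x1e"   # SPS (placeholder payload)
          b"\x00\x00\x00\x01\x68\xce\x38\x80"   # PPS (placeholder payload)
          b"\x00\x00\x01\x06\x05\x01\xaa\x80"   # SEI (placeholder payload)
          b"\x00\x00\x01\x65\x88\x84")          # IDR slice (placeholder payload)

print([nal_type_name(nal) for nal in split_annexb(sample)])
```

A player that joins a live stream midway can use this kind of check to wait until it has seen an SPS and a PPS before handing frames to the decoder.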

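The decoding timestamp (DTS) tells the decoder when to decode a frame, and the presentation timestamp (PTS) tells the player when to present it. Together, the DTS and PTS directly determine whether audio and video are played back in sync.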
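The difference between the two timestamps shows up as soon as a stream contains B-frames, which are decoded after the frames they reference but displayed before some of them. The sketch below reorders a short group of frames from decode order into presentation order and applies a deliberately simplified synchronization rule against an audio clock. The timestamps, the 40 ms tolerance, and the wait/drop policy are assumptions for illustration, not the behavior of any particular player.

```python
from typing import List, NamedTuple


class Frame(NamedTuple):
    kind: str
    dts_ms: int  # when the decoder should process the frame
    pts_ms: int  # when the frame should be shown


# Frames as they arrive in the stream (decode order), 25 fps, with B-frames.
decode_order: List[Frame] = [
    Frame("I", 0, 40),
    Frame("P", 40, 160),
    Frame("B", 80, 80),
    Frame("B", 120, 120),
]

presentation_order = sorted(decode_order, key=lambda f: f.pts_ms)
print([f.kind for f in presentation_order])


def sync_action(video_pts_ms: int, audio_clock_ms: int, tolerance_ms: int = 40) -> str:
    """Decide what to do with a decoded frame relative to the audio clock."""
    drift = video_pts_ms - audio_clock_ms
    if drift > tolerance_ms:
        return "wait"    # video is ahead of audio: hold the frame
    if drift < -tolerance_ms:
        return "drop"    # video is late: skip the frame to catch up
    return "render"


print(sync_action(video_pts_ms=160, audio_clock_ms=150))
```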

The decoding process generates a variety of products. For more information, see the last section of this article.

Remux is the opposite of Demux. Remux is the process of combining an audio elementary stream (ES), video ES, and subtitle ES into a single, complete multimedia stream or file.
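One step in combining elementary streams is interleaving their packets by timestamp so that a player receives audio and video for the same moment close together. The sketch below shows only that step, with placeholder payloads and hypothetical names; writing the packets into a real container format is not covered.

```python
import heapq
from typing import Iterable, List, NamedTuple


class Packet(NamedTuple):
    stream: str
    dts_ms: int
    payload: bytes


def interleave(*streams: Iterable[Packet]) -> List[Packet]:
    """Merge elementary streams that are each already sorted by DTS."""
    return list(heapq.merge(*streams, key=lambda p: p.dts_ms))


video = [Packet("video", 0, b"V0"), Packet("video", 40, b"V1"), Packet("video", 80, b"V2")]
audio = [Packet("audio", 10, b"A0"), Packet("audio", 33, b"A1"), Packet("audio", 56, b"A2")]

for packet in interleave(video, audio):
    print(packet.dts_ms, packet.stream)
```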

Both Remux and Demux are required to change the multiplexing and encoding formats of a video file. The specific processes are not described here.

Rendering refers to the playback of decoded data on output hardware, such as monitors and speakers. The module responsible for rendering is called a renderer. Popular video renderers on the desktop include the Enhanced Video Renderer (EVR) and Madshi Video Renderer (madVR). In web players, rendering is typically handled by the browser's built-in renderer through the video tag.

Custom rendering: For example, an H.265 player can use the APIs provided by a web browser to simulate a video tag, and use the canvas and audio elements for rendering.

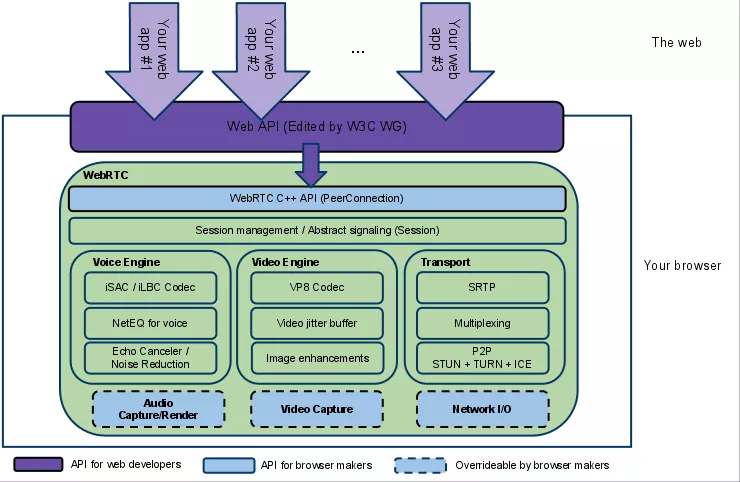

Web real-time communication (WebRTC) allows network applications and websites to establish peer-to-peer (P2P) connections between web browsers without an intermediary. These connections support the fast transmission of audio streams, video streams, and other types of data.

WebRTC consists of a video engine, voice engine, session management, iSAC (designed for voice compression), VP8 (a video codec developed by Google's WebM project), and APIs, such as the native C++ APIs and web APIs.

Media Source Extensions (MSE) is a W3C specification aimed at web players. The MSE API provides web-based streaming media without any plug-ins. MSE allows JavaScript to create media streams and play them back by using the audio and video elements.

MSE significantly extends the media playback features of web browsers. It is well suited to adaptive streaming and has evolved to support live streaming and other scenarios.

Extended reality (XR) includes virtual reality (VR), augmented reality (AR), and mixed reality (MR). WebXR supports devices for all of these types of reality. It allows developers to create immersive content that runs on VR and AR devices, which delivers a web-based VR and AR experience.

Web Graphics Library (WebGL) is a 3D drawing standard that supports user interaction. WebGL combines JavaScript with OpenGL ES 2.0. By adding a JavaScript binding for OpenGL ES 2.0, WebGL provides hardware-accelerated 3D rendering for the HTML5 canvas. Web developers can then use the system graphics card to display 3D scenes and models in a web browser more smoothly and to create complex navigation and data visualizations.

WebGL uses a canvas for rendering. In the "Players" section, we learned that players can use a canvas for image rendering. WebGL enhances the playback fluency and other capabilities of players.

WebAssembly (Wasm) is a portable, web-compatible binary format that is compact and loads quickly. Wasm is a specification developed within the W3C by a community group that includes the mainstream web browser vendors.

For more information, visit https://webassembly.org/

Wasm allows players to integrate FFmpeg, which enables them to decode H.265 videos that web browsers cannot decode natively.

The following sections introduce the most popular open-source products and frameworks at present.

flv.js is an open-source HTML5 FLV player developed by Bilibili (www.bilibili.com). Based on the HTTP-FLV protocol, flv.js transmuxes FLV data into fragmented MP4 segments in pure JavaScript and feeds them to the video element through MSE, so that FLV streams can be played back on the web without Flash.

Official GitHub Page: https://github.com/bilibili/flv.js

hls.js is a JavaScript player library developed based on the HLS protocol. hls.js uses MSE to play HLS files in web mode.

The HLS protocol proposed by Apple Inc. is widely supported by mobile clients, so it is widely used in live streaming scenarios.
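An HLS stream is described by a plain-text M3U8 playlist that lists media segments and their durations, which the player downloads one after another over HTTP. The following sketch parses a minimal media playlist. The playlist content and segment names are hypothetical, and the parser handles only the `#EXTINF` tag and segment URIs, not the full HLS tag set.

```python
from typing import List, Optional, Tuple

PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:4
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:4.000,
seg120.ts
#EXTINF:4.000,
seg121.ts
#EXTINF:3.500,
seg122.ts
"""


def parse_media_playlist(text: str) -> List[Tuple[float, str]]:
    """Return (duration in seconds, URI) for each media segment."""
    segments: List[Tuple[float, str]] = []
    duration: Optional[float] = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXTINF:"):
            # Format is "#EXTINF:<duration>,[<title>]".
            duration = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line and not line.startswith("#") and duration is not None:
            segments.append((duration, line))
            duration = None
    return segments


segments = parse_media_playlist(PLAYLIST)
print(segments)
print("buffered seconds:", sum(d for d, _ in segments))
```

In a live stream the server keeps rewriting the playlist, so a player reloads it periodically and fetches only the segments it has not seen yet.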

Official GitHub Page: https://github.com/video-dev/hls.js/

video.js is an HTML5-based player that plays back HTML5 and Flash video and provides more than 100 plug-ins. video.js plays HLS and DASH streams and supports custom themes and subtitle extensions. video.js is used in many scenarios worldwide.

Official GitHub Page: https://github.com/videojs/video.js

Website: https://videojs.com/

FFmpeg is a leading multimedia framework and an open-source, cross-platform multimedia solution. FFmpeg provides a range of features, such as audio and video encoding, decoding, transcoding, multiplexing, demultiplexing, streaming media, filters, and playback.

FFmpeg is used by the following frontend components:

Open Broadcaster Software (OBS) is flexible, open-source software for video recording and live streaming. Written in C and C++, OBS provides a range of features, such as real-time source and device capture, scene composition, encoding, recording, and broadcast. OBS uses RTMP to transmit data to RTMP-enabled destinations, such as YouTube, Twitch.tv, Instagram, Facebook, and other streaming media websites.

OBS encodes video streams into the H.264/MPEG-4 AVC and H.265/HEVC formats using the x264 free software library, Intel Quick Sync Video, NVIDIA NVENC, and the AMD Video Coding Engine. OBS also encodes audio streams using MP3 and AAC encoders. If you are familiar with audio-visual encoding and decoding, you can use the codecs and containers provided by the libavcodec and libavformat libraries and output streams to custom FFmpeg URLs.
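The same encode-and-push path (H.264 video, AAC audio, RTMP output) can be approximated with the FFmpeg command-line tool. The sketch below only assembles such a command. The input file, RTMP URL, and bitrates are placeholders, and this is not a description of how OBS invokes its encoders internally.

```python
import shlex
from typing import List


def build_push_command(source: str, rtmp_url: str,
                       video_kbps: int = 2000, audio_kbps: int = 128) -> List[str]:
    """Assemble an ffmpeg argument list that pushes a file to an RTMP endpoint."""
    return [
        "ffmpeg",
        "-re",                     # read the input at its native frame rate
        "-i", source,
        "-c:v", "libx264",         # H.264 video through the x264 library
        "-b:v", f"{video_kbps}k",
        "-c:a", "aac",             # AAC audio
        "-b:a", f"{audio_kbps}k",
        "-f", "flv",               # RTMP carries FLV-packaged data
        rtmp_url,
    ]


# Both arguments are placeholders; substitute a real file and ingest URL.
command = build_push_command("input.mp4", "rtmp://example.com/live/stream-key")
print(shlex.join(command))
```

Building the command as a list rather than a single string keeps arguments with spaces or special characters intact when the list is later passed to `subprocess.run`.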

MLT is a non-linear video editor engine that is applicable to various types of apps, including desktop apps and apps that run on Android and iOS.

Official GitHub Page: https://github.com/mltframework/mlt/

Website: https://www.mltframework.org/
