By the MNN team, from Taobao Technology Department, Alibaba New Retail

In recent years, on-device AI has grown from an interesting experiment into a core tool for mobile development engineers. It is now ubiquitous in mobile apps, powering features such as Pailitao in Taobao Mobile, commodity identification in Taobao Live, and personalized recommendations in TouTiao. It has also evolved from amusing features, such as face stickers in Snapchat and other social media apps, into application scenarios in Taobao that truly empower businesses.

Against this background, the MNN team from the Taobao Technology Department recently released an out-of-the-box toolkit, MNN Kit.

MNN Kit is a series of easy-to-use SDKs that Alibaba built by encapsulating models from frequently used business scenarios. These models have been battle-tested in many campaigns. The underlying technology is the MNN inference engine, which the MNN team open-sourced in May 2019.

If you are eager to try out MNN Kit, see the MNN Kit documentation and open-source demo projects.

These models are backed by technology that algorithm teams within the Taobao Technology Department have accumulated over many years. For example, the models used in MNN Kit's face detection are provided by the PixelAI team, a Taobao Technology Department team that focuses on client-side deep-learning computer vision algorithms.

Gesture recognition is one of MNN Kit's AI solution SDKs for mobile clients. It provides real-time gesture detection and recognition on the device, and apps can build a wealth of business scenarios on top of it. For example, you can develop a finger-guessing game based on the gesture detection model, or a gesture-controlled app that lets users scroll their phone screens from a distance when touching the screen is inconvenient (such as during a meal). Only your imagination limits how this technology could be used.

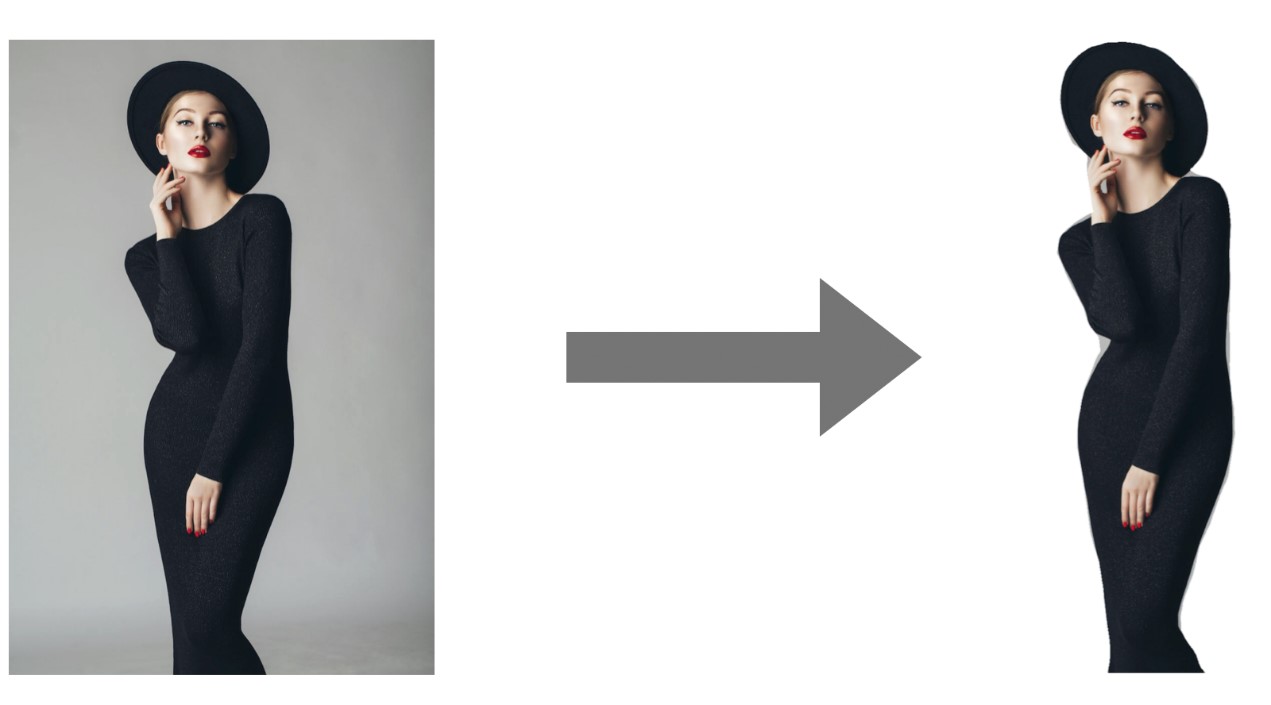

Portrait segmentation is another of MNN Kit's AI solution SDKs for mobile clients. It provides real-time portrait segmentation on the device. It is also a basic model capability that can be applied to scenarios such as automatic matting of ID photos and assisting photo editing in Photoshop (PS).

The effect is shown below:

MNN Kit was created in the Alibaba Taobao Technology Department, where promotional campaigns such as Double 11, Double 12, and the New Year's Sale require businesses to go online quickly. Integrating models into apps quickly and simply is therefore an essential feature of MNN Kit.

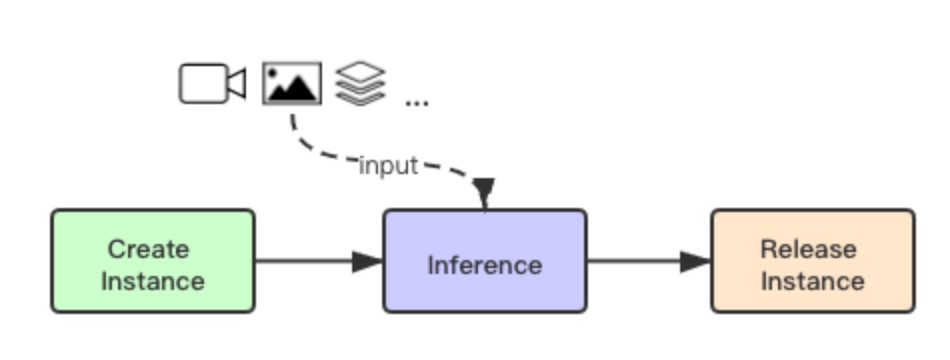

MNN Kit has only three basic APIs: create an instance, run inference, and release the instance. The process is shown below:

Let's take face detection on Android as an example to briefly introduce the integration code.

We create a FaceDetector instance asynchronously. On success, onSucceeded is called back on the main thread:

    void createKitInstance() {
        // Define the detector config
        FaceDetector.FaceDetectorCreateConfig createConfig = new FaceDetector.FaceDetectorCreateConfig();
        createConfig.mode = FaceDetector.FaceDetectMode.MOBILE_DETECT_MODE_VIDEO;
        FaceDetector.createInstanceAsync(this, createConfig, new InstanceCreatedListener<FaceDetector>() {
            @Override
            public void onSucceeded(FaceDetector faceDetector) {
                // Keep the instance delivered by the callback
                mFaceDetector = faceDetector;
            }

            @Override
            public void onFailed(int i, Error error) {
                Log.e(Common.TAG, "create face detector failed: " + error);
            }
        });
    }

The camera frame data comes from the onPreviewFrame callback of the Android Camera API. Define the face actions to detect in the video stream (such as blinking and mouth opening), and then run face detection on the current frame.

    // Get one frame of the video stream from the camera API callback
    byte[] data = ....;

    // Define which face actions should be detected in the video stream
    long detectConfig =
            FaceDetectConfig.ACTIONTYPE_EYE_BLINK |
            FaceDetectConfig.ACTIONTYPE_MOUTH_AH |
            FaceDetectConfig.ACTIONTYPE_HEAD_YAW |
            FaceDetectConfig.ACTIONTYPE_HEAD_PITCH |
            FaceDetectConfig.ACTIONTYPE_BROW_JUMP;

    // Run inference
    FaceDetectionReport[] results = mFaceDetector.inference(data, width, height,
            MNNCVImageFormat.YUV_NV21, detectConfig, inAngle, outAngle,
            mCameraView.isFrontCamera() ? MNNFlipType.FLIP_Y : MNNFlipType.FLIP_NONE);

Apps need to actively free the memory occupied by the model at a point that suits their scenario. In our demo, for example, the memory is released in the Activity's onDestroy. You can decide when to release it according to the flow of your app.

    protected void onDestroy() {
        super.onDestroy();
        // Free the memory occupied by the model
        if (mFaceDetector != null) {
            mFaceDetector.release();
        }
    }

In a real scenario, in addition to the three steps above, camera video streams need some pre-processing and post-processing, and in many cases this processing is similar. For pre-processing, handling camera frames correctly means considering whether the front or rear camera is in use as well as the camera rotation. For post-processing, the model output (such as the coordinates of the key face points) must be mapped back to the original image for rendering. MNN Kit puts these engineering best practices into the demo for reference. The demo code is open-source in the GitHub repository for MNN Kit.
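As a rough illustration of the pre- and post-processing described above, the sketch below computes the preview rotation and maps a key point from the raw camera frame to view coordinates. It is not taken from the MNN Kit demo: FrameGeometry, displayRotation, rotate, and mapToView are hypothetical names, and the sketch assumes key points are expressed in raw-frame pixel coordinates. The rotation formula is the one documented for android.hardware.Camera#setDisplayOrientation.

```java
/** Geometry helpers for camera frames. All names are illustrative, not MNN Kit APIs. */
final class FrameGeometry {

    /**
     * Clockwise rotation (degrees) to apply so the camera preview appears upright,
     * following the formula in the Android docs for Camera#setDisplayOrientation.
     *
     * @param sensorOrientation CameraInfo.orientation (0, 90, 180 or 270)
     * @param displayDegrees    current display rotation (0, 90, 180 or 270)
     */
    static int displayRotation(int sensorOrientation, int displayDegrees, boolean frontCamera) {
        if (frontCamera) {
            int result = (sensorOrientation + displayDegrees) % 360;
            return (360 - result) % 360; // compensate for the mirrored front preview
        }
        return (sensorOrientation - displayDegrees + 360) % 360;
    }

    /** Rotates (x, y) clockwise by 0, 90, 180 or 270 degrees inside a w x h image. */
    static float[] rotate(float x, float y, int w, int h, int degrees) {
        switch (degrees) {
            case 0:   return new float[] {x, y};
            case 90:  return new float[] {h - y, x};
            case 180: return new float[] {w - x, h - y};
            case 270: return new float[] {y, w - x};
            default:  throw new IllegalArgumentException("unsupported rotation: " + degrees);
        }
    }

    /** Maps a raw-frame point to view coordinates: rotate, optionally mirror, then scale. */
    static float[] mapToView(float x, float y, int frameW, int frameH,
                             int degrees, boolean mirror, int viewW, int viewH) {
        float[] p = rotate(x, y, frameW, frameH, degrees);
        boolean swapped = degrees == 90 || degrees == 270;
        int uprightW = swapped ? frameH : frameW; // width after rotation
        int uprightH = swapped ? frameW : frameH; // height after rotation
        if (mirror) {
            p[0] = uprightW - p[0]; // horizontal flip, as for a mirrored front preview
        }
        return new float[] {p[0] * viewW / uprightW, p[1] * viewH / uprightH};
    }

    public static void main(String[] args) {
        int rotation = displayRotation(270, 0, true);
        float[] p = mapToView(160f, 120f, 640, 480, rotation, true, 1080, 1440);
        System.out.println(rotation + " degrees, point at (" + p[0] + ", " + p[1] + ")");
    }
}
```

For a 640x480 front-camera frame with sensor orientation 270 on an unrotated display, this maps the frame point (160, 120) to (270, 360) in a 1080x1440 view. Whether mirroring applies depends on how the preview is rendered, so treat the mirror flag as something to verify against your own camera view.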

MNN Kit has greatly simplified the process of using general-purpose models in mobile development. What if your application scenario doesn't exactly fit these general-purpose scenarios? In many cases, starting from an excellent basic model and applying transfer learning, you can get a model that is suitable for your scenario. The MNN team will release products later to help you perform transfer learning more easily and efficiently.

Furthermore, MNN Kit will gradually cover other models for general-purpose scenarios. Stay tuned for upcoming announcements!

MNN Kit has its own user agreement. Here are some points that should be noted:
