By Xia Ming (Yahai)

A call chain records the complete status and flow of a request, so the full set of call chains forms a huge data store. However, the cost and performance problems that come with this volume of data are unavoidable for anyone using Tracing. This article describes how to record the most valuable links and their associated data on demand at the lowest cost.

If you are troubled by the high cost of storing every call chain, or by data that cannot be found and charts that become inaccurate after sampling, please read this article carefully.

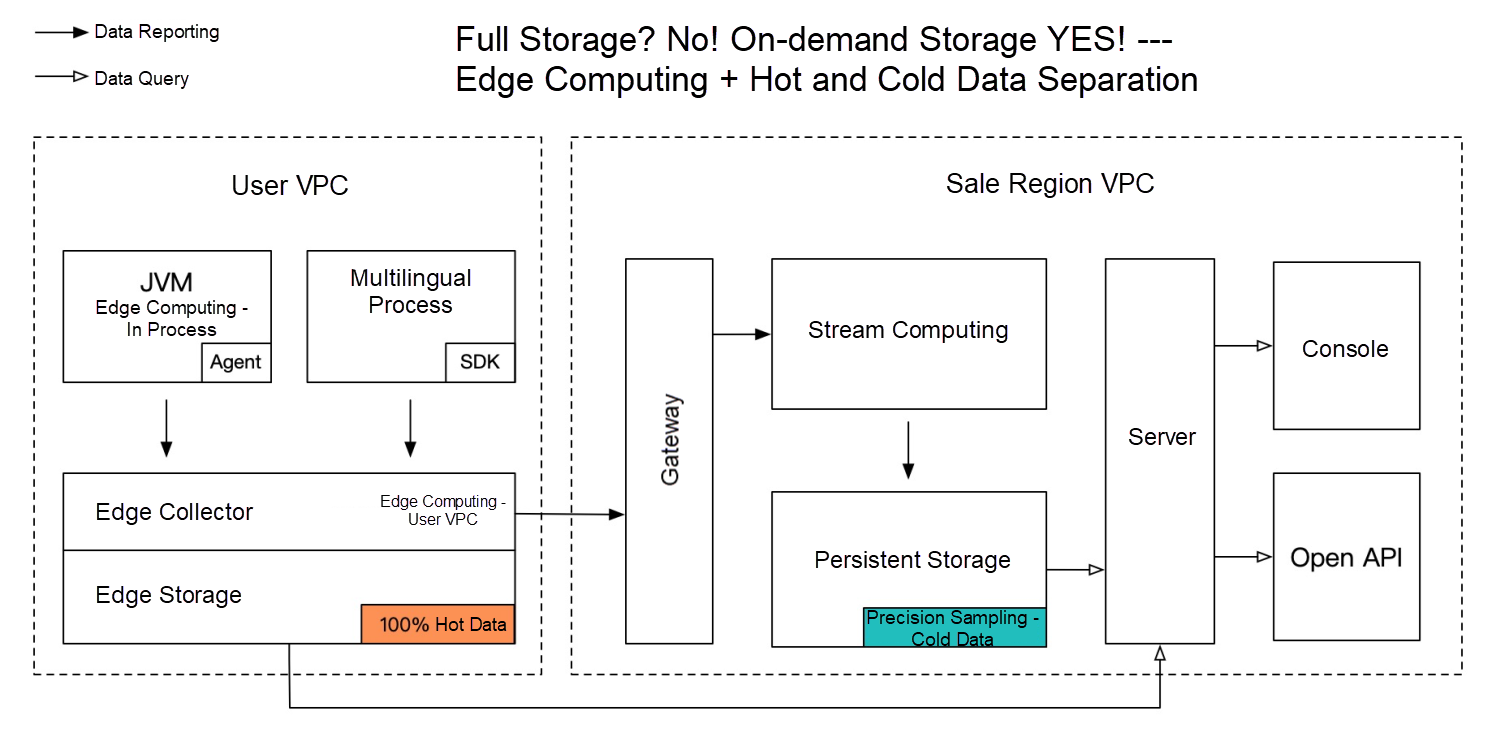

Edge computing, as its name implies, is data computing on edge nodes. It can also be described as shifting computation to the left. When network bandwidth is limited and transmission overhead and global data hotspots are hard to avoid, edge computing is an effective way to find the optimal balance between cost and value.

The most common edge computing practice in the Tracing field is to filter and analyze data inside the user process. In the public cloud environment, processing data within the user's cluster or VPC network is also edge computing. It saves a great deal of public network transmission overhead and spreads out the load of global data computing.

In addition, from the data perspective, edge computing can filter out the more valuable data and extract deeper value from it through processing, so that the most valuable data is recorded at minimum cost.

The data value of links is unevenly distributed. According to incomplete statistics, the query rate of call chains is less than one-millionth. Storing all of the data wastes a great deal of money and significantly affects the performance and stability of the entire data link. The following are two common filtering methods.

Whatever the filtering strategy is, its core idea is to discard useless or low-value data and retain abnormal scenarios or high-value data that meets specific conditions. This selective reporting policy based on data value is much more cost-effective than full data reporting, and it may become a mainstream trend for Tracing in the future.

In addition to data filtering, data processing (such as pre-aggregation and compression) on the edge nodes can reduce transmission and storage costs effectively while meeting user needs.
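
As a minimal sketch of pre-aggregation on an edge node, the example below collapses raw spans into one summary row per service and interface before anything is sent over the network. The `Span` fields and the `pre_aggregate` function are hypothetical names chosen for illustration, not part of any specific agent.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Span:
    # Hypothetical span fields, chosen for illustration only.
    service: str
    interface: str
    duration_ms: float
    is_error: bool


def pre_aggregate(spans):
    """Collapse raw spans into one summary row per (service, interface)."""
    stats = defaultdict(
        lambda: {"count": 0, "errors": 0, "total_ms": 0.0, "max_ms": 0.0}
    )
    for span in spans:
        row = stats[(span.service, span.interface)]
        row["count"] += 1
        row["errors"] += int(span.is_error)
        row["total_ms"] += span.duration_ms
        row["max_ms"] = max(row["max_ms"], span.duration_ms)
    return dict(stats)


spans = [
    Span("order", "/create", 120.0, False),
    Span("order", "/create", 3400.0, True),
    Span("order", "/query", 45.0, False),
]
summary = pre_aggregate(spans)
# Three raw spans become two summary rows that can be reported instead.
```

The edge node would then report only the summary rows, so the transmitted volume grows with the number of distinct interfaces rather than with the number of requests.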

Edge computing works for most pre-aggregation analysis scenarios, but not for the wide variety of post-aggregation analysis scenarios. For example, a business may need statistics on the interfaces that take more than three seconds and on the distribution of their sources. It is impossible to list every such personalized post-aggregation rule in advance, and when analysis rules cannot be defined in advance, the only option is the extremely costly storage of full raw data. Is there any room for optimization? The answer is yes. Now, let's introduce a low-cost solution to post-aggregation analysis: hot and cold data separation.
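
The three-second example can be sketched as an ad hoc query over raw span records, which is why post-aggregation needs the detail data to be available. The `SpanRecord` fields (including `source` for the upstream caller) and the function name are assumptions made for this illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SpanRecord:
    # Hypothetical fields; `source` stands for the upstream caller.
    interface: str
    source: str
    duration_ms: float


def slow_source_distribution(records, threshold_ms=3000.0):
    """For each interface slower than the threshold, count calls by source."""
    distribution = {}
    for record in records:
        if record.duration_ms > threshold_ms:
            distribution.setdefault(record.interface, Counter())[record.source] += 1
    return distribution


records = [
    SpanRecord("/pay", "web", 3500.0),
    SpanRecord("/pay", "app", 4200.0),
    SpanRecord("/pay", "web", 5100.0),
    SpanRecord("/pay", "web", 200.0),
    SpanRecord("/cart", "app", 150.0),
]
result = slow_source_distribution(records)
```

Because the threshold and the grouping key are chosen at query time, a rule like this cannot be pre-aggregated unless it was known in advance.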

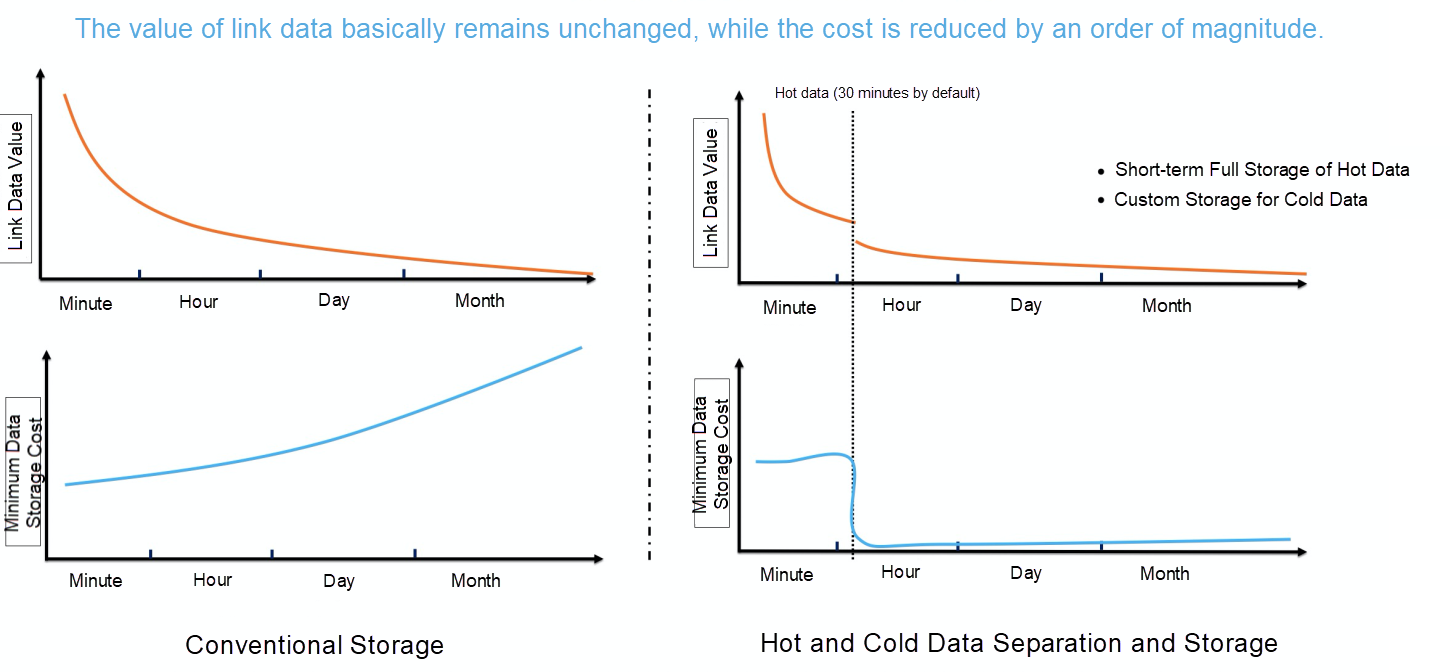

Hot and cold data separation is valuable because users' query behavior shows temporal locality: recent data is queried most often, while cold data is rarely queried. For example, because problem diagnosis is time-sensitive, more than 50% of link query analysis occurs within 30 minutes, and link queries after seven days usually concentrate on erroneous and slow call chains. Now, with the theoretical basis established, let's discuss how to separate hot and cold data.

Firstly, hot data is time-sensitive. If only the most recent hot data needs to be recorded, the storage space required drops significantly. In addition, the data of different users is isolated in the public cloud environment. Therefore, computing and storing hot data inside the user's VPC is more cost-effective.

Secondly, queries on cold data are targeted. Cold data that meets diagnosis requirements can be selected for persistent storage through different sampling policies, such as error and slow sampling or sampling for specific business scenarios. Cold data is stored for long periods and therefore has high stability requirements, so it can be managed centrally in a Region.

To sum up, hot data has a short storage period and is cost-effective, yet it meets the requirements for real-time post-aggregation analysis over full data. The total volume of cold data drops significantly after precise sampling, usually to only 1% to 10% of the raw data, while still meeting diagnostic requirements in most scenarios. Combining the two achieves the optimal balance between cost and experience. Hot and cold data separation is used as the storage solution by leading APM products across the globe, such as ARMS, Datadog, and Lightstep.
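
The separation described above can be sketched as follows. This is a simplified in-memory model, not how ARMS or any other product implements it: every trace enters a short-lived hot store, and only error or slow traces are copied to long-term cold storage. The class name, the 30-minute window, and the three-second slow threshold are assumptions, and the eviction logic assumes traces arrive roughly in order of `end_time`.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Trace:
    # Hypothetical trace summary used only for this sketch.
    trace_id: str
    end_time: float      # seconds
    duration_ms: float
    is_error: bool


class HotColdStore:
    def __init__(self, hot_window_s=30 * 60, slow_ms=3000.0):
        self.hot_window_s = hot_window_s  # retention of full hot data
        self.slow_ms = slow_ms            # assumed "slow" threshold
        self.hot = deque()                # all recent traces, oldest first
        self.cold = []                    # sampled traces kept long term

    def ingest(self, trace, now):
        # Hot path: keep everything, but only for a short window.
        self.hot.append(trace)
        # Cold path: persist only traces that match the sampling rule.
        if trace.is_error or trace.duration_ms >= self.slow_ms:
            self.cold.append(trace)
        self.evict(now)

    def evict(self, now):
        # Drop hot traces that have aged out of the retention window.
        while self.hot and now - self.hot[0].end_time > self.hot_window_s:
            self.hot.popleft()


store = HotColdStore()
store.ingest(Trace("t1", 0.0, 120.0, False), now=0.0)
store.ingest(Trace("t2", 10.0, 4500.0, False), now=10.0)
store.ingest(Trace("t3", 2000.0, 80.0, True), now=2000.0)
hot_ids = [t.trace_id for t in store.hot]
cold_ids = [t.trace_id for t in store.cold]
```

In this run, `t1` and `t2` age out of the hot store once the clock passes the 30-minute window, while the slow trace `t2` and the error trace `t3` remain available in cold storage.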

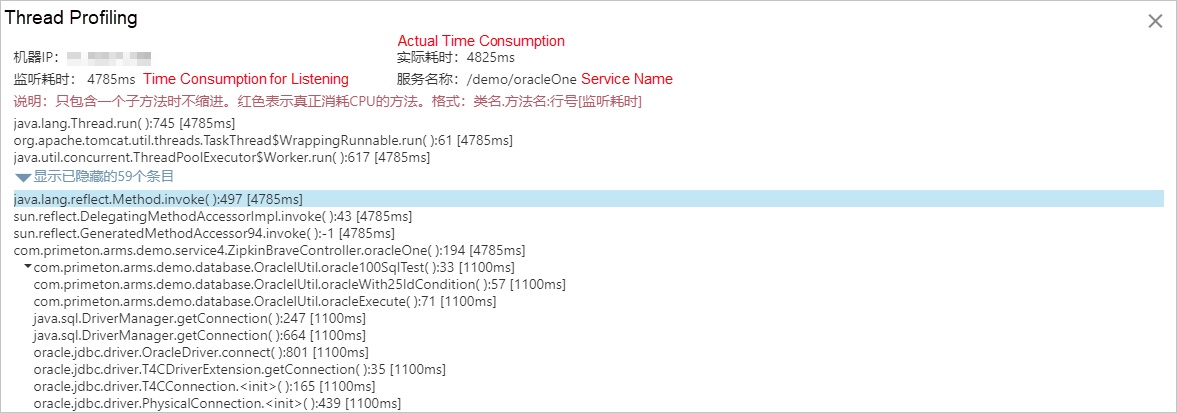

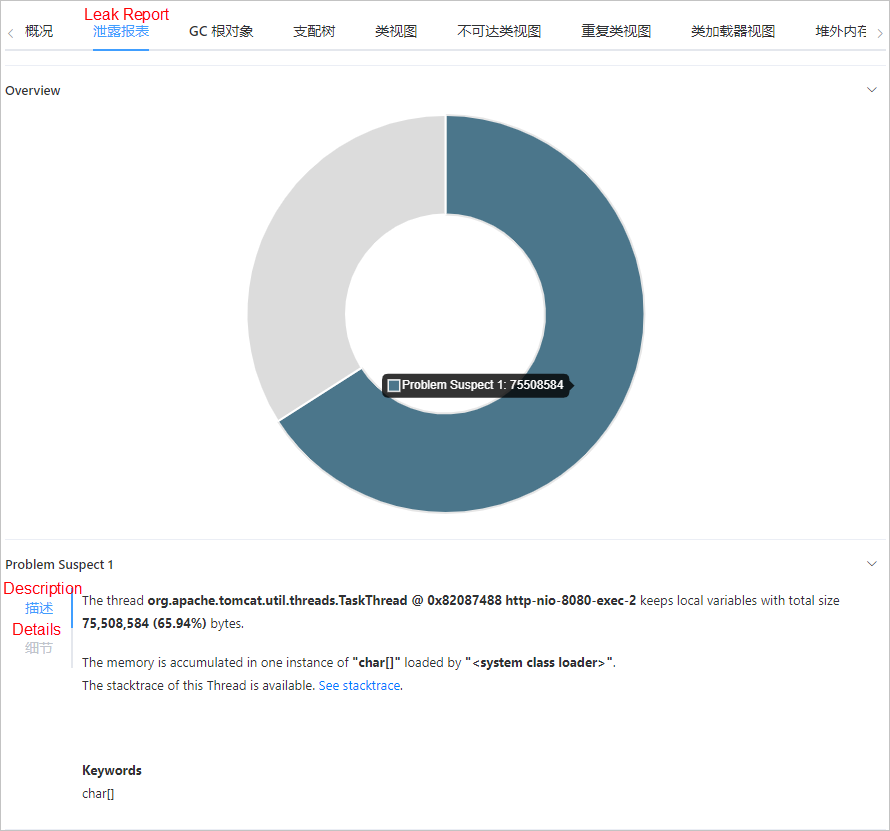

Link detail data contains the most complete and the richest call information. The most common views in the APM field, including the service panel, upstream and downstream dependencies, and application topology, are all computed from link detail data. Post-aggregation analysis based on link detail data can help locate problems according to the specific needs of users. However, the biggest challenge for post-aggregation analysis is that the statistics must be computed over the full data. Otherwise, the conclusion may stray far from reality due to sample skew.
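
A small worked example illustrates sample skew. The numbers are invented for illustration: 98 calls take 100 ms and 2 calls take 5,000 ms, and the sampled set keeps only calls of three seconds or longer.

```python
# Invented latency data: 98 fast calls and 2 slow calls.
durations_ms = [100.0] * 98 + [5000.0] * 2

# Statistic over the full data.
full_avg = sum(durations_ms) / len(durations_ms)

# Statistic over a slow-only sample (assumed threshold: 3000 ms).
sampled = [d for d in durations_ms if d >= 3000.0]
sampled_avg = sum(sampled) / len(sampled)

print(full_avg)     # 198.0
print(sampled_avg)  # 5000.0
```

The average computed from the sampled set is about 25 times the true average, which is why aggregate charts should be built from full data rather than from error and slow samples.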

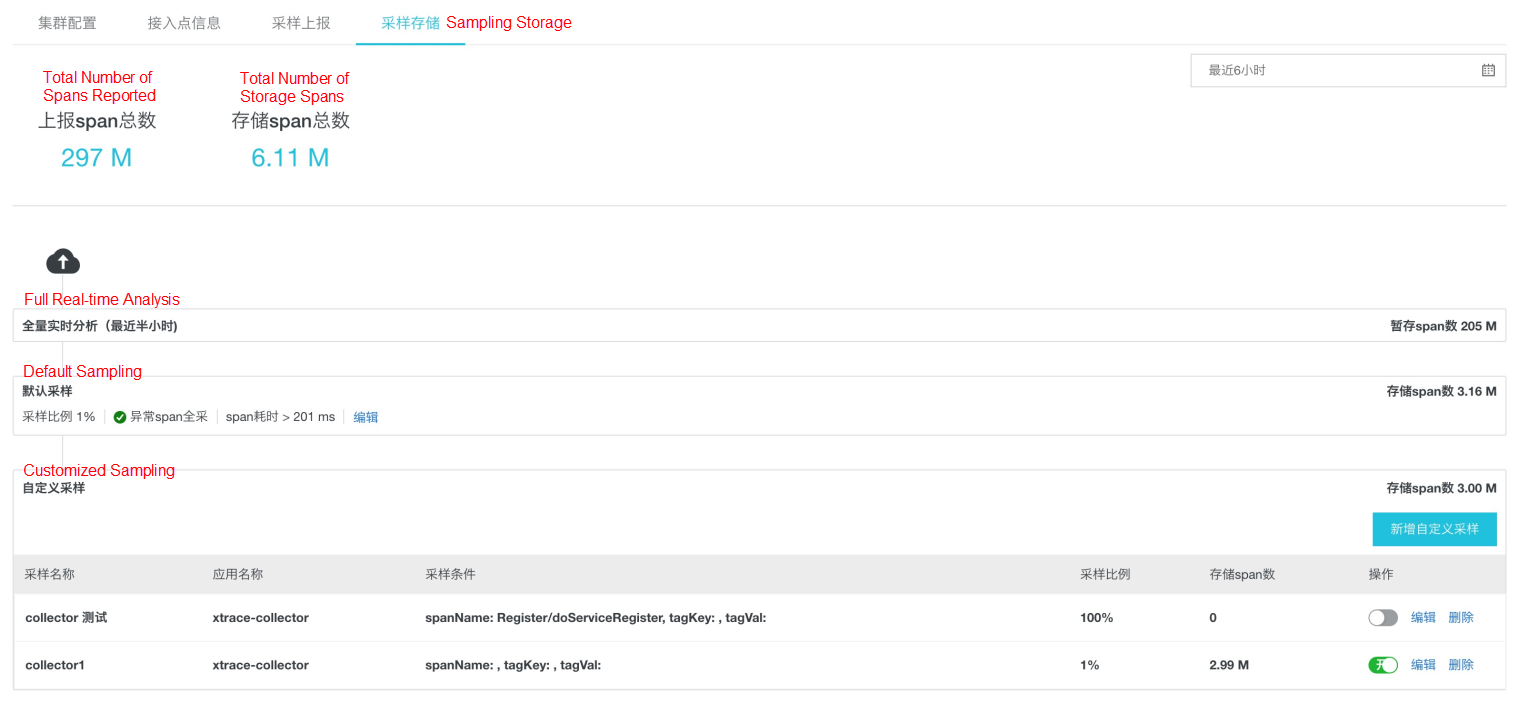

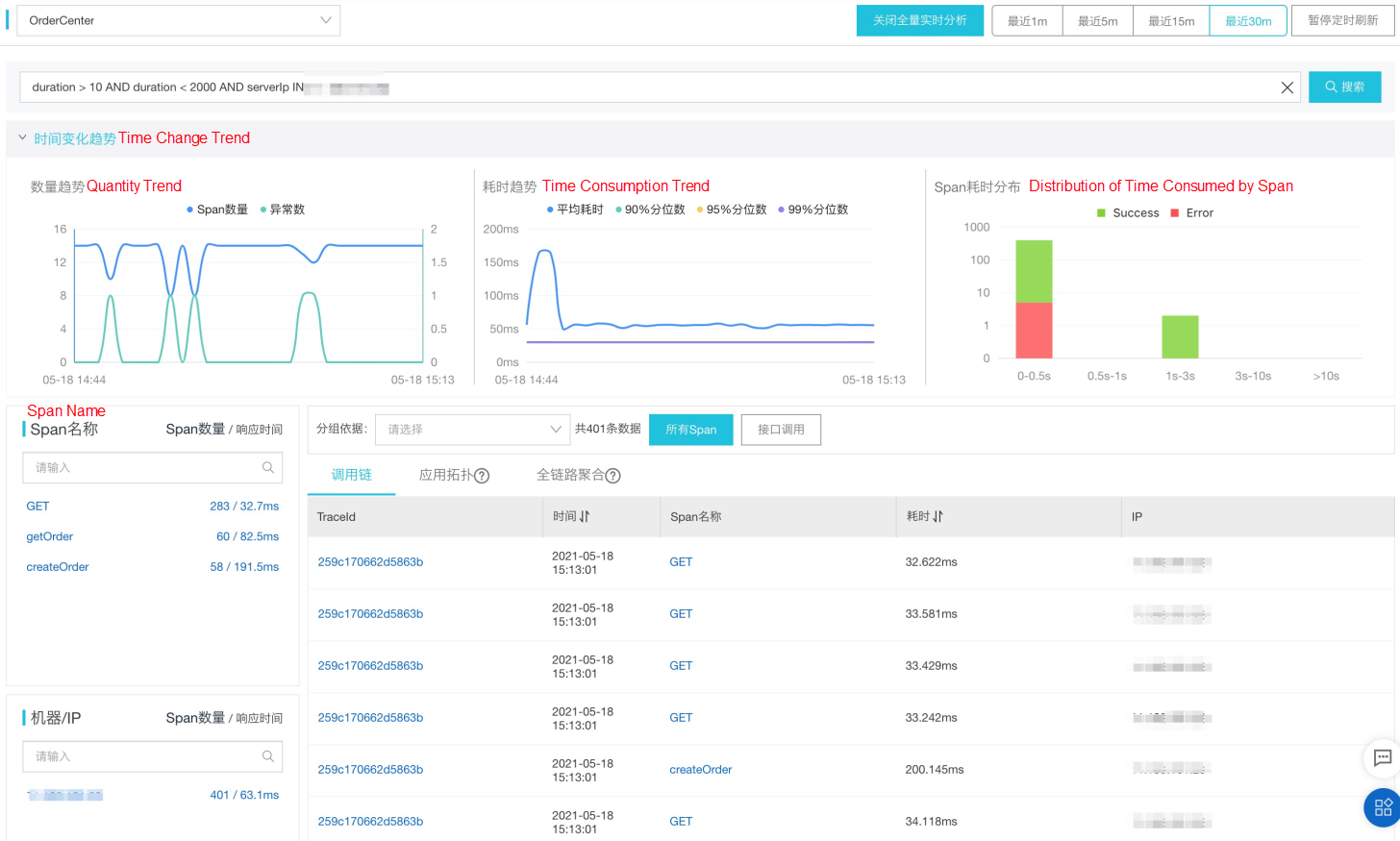

As the only Chinese cloud vendor to enter the 2021 Gartner APM Magic Quadrant, Alibaba Cloud ARMS provides full analysis of hot data from the last 30 minutes, supporting filtering and aggregation under a variety of conditions, as shown in the following figure:

The cost of persistently storing full traces is very high. As mentioned earlier, the actual query rate of call chains after 30 minutes is less than one-millionth, and most queries target erroneous and slow call chains or links that match specific business characteristics. Therefore, we only need to keep the few call chains that meet precise sampling rules, which saves the cost of persistent cold data storage.

How can you implement precise sampling? The methods commonly used in the industry are Head-based Sampling and Tail-based Sampling. Head sampling is generally performed on edge nodes, such as the Agent on the client side. Examples include throttling sampling or fixed-ratio sampling per interface or service. Tail sampling is typically performed on the full hot data, for example by keeping all erroneous and slow call chains.
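
The difference between the two approaches can be sketched as follows. This is a minimal illustration rather than any product's implementation: the function names, the dictionary-based span format, and the three-second slow threshold are assumptions. Head sampling decides from the trace ID alone before the outcome is known, while tail sampling decides after the whole trace has been collected.

```python
import hashlib


def head_sample(trace_id: str, ratio: float) -> bool:
    """Fixed-ratio head sampling: a deterministic decision from the trace ID."""
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < ratio


def tail_sample(spans, slow_ms: float = 3000.0) -> bool:
    """Tail sampling: keep a completed trace if any span is an error or slow."""
    return any(s["is_error"] or s["duration_ms"] >= slow_ms for s in spans)


normal_trace = [{"is_error": False, "duration_ms": 50.0}]
slow_trace = [
    {"is_error": False, "duration_ms": 50.0},
    {"is_error": False, "duration_ms": 4200.0},
]
keep_normal = tail_sample(normal_trace)
keep_slow = tail_sample(slow_trace)
```

Hashing the trace ID means every node that sees the same trace makes the same head-sampling decision without coordination, but that decision cannot take errors or latency into account. Tail sampling can, at the price of buffering the full trace first.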

An ideal sampling policy stores only the data that actually needs to be queried. APM products should provide flexible sampling policy configuration and best practices so that users can adjust the policy to their business scenarios.

As more enterprises and applications migrate to the cloud, the scale of public cloud clusters has grown explosively, and cost will be a key measure of enterprise cloud usage. In the cloud-native era, it has become mainstream in the APM field to make full use of the computing and storage capabilities of edge nodes, in conjunction with hot and cold data separation, to extract data value cost-effectively. Traditional solutions that report and store the full data and then analyze it afterwards face growing challenges. What will happen in the future? Let's wait and see.
