By Liu Yiming, Senior Product Expert of Alibaba Cloud Intelligence

This article describes how to use MaxCompute to add tags to a large number of people and carry out analysis and modeling through Hologres. Then, interactive experiences in complex scenarios for crowd selection of a large number of people can be provided, and the best practices of API-based data services can be implemented.

Crowd selection is not a new business. Almost all Internet companies are working on it because it is a basic marketing scenario. The correct people are needed for companies to send coupons, obtain traffic, and conduct targeted promotions. How can they do this? People should be divided into different groups by behavior, purchasing habits, attention, interests, and even education level. After dividing people into groups, resources are allocated to those most likely to be converted into regular customers.

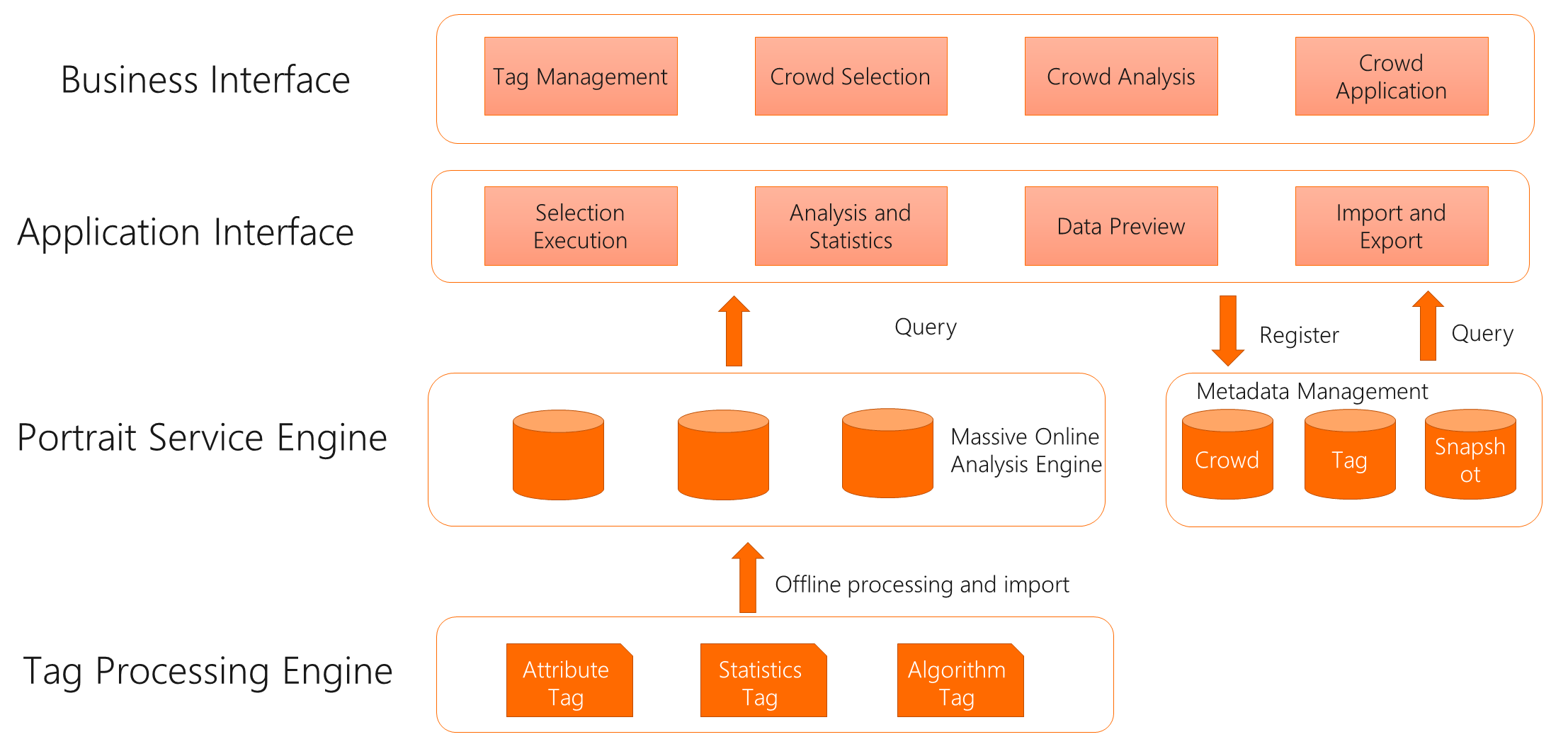

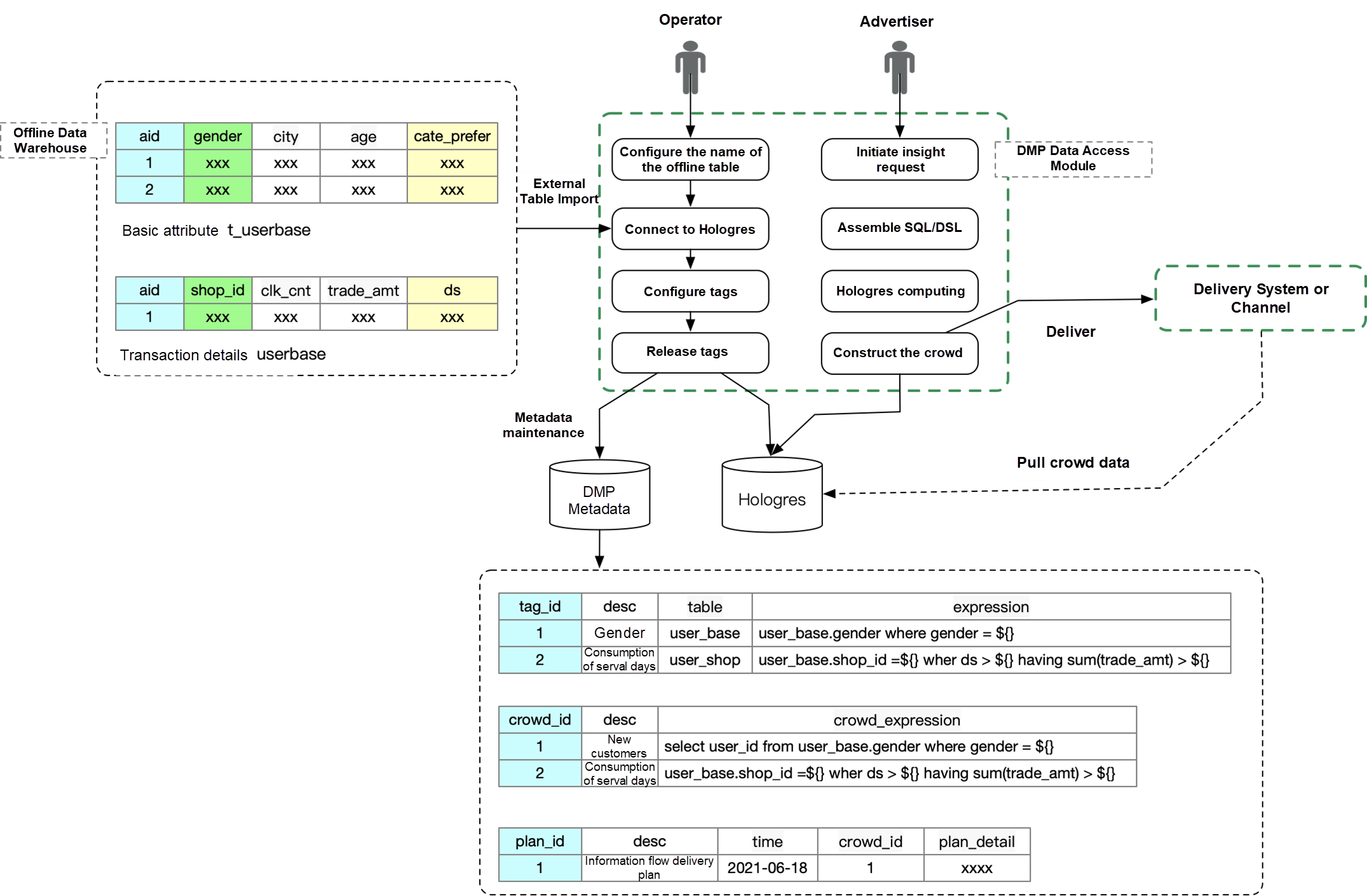

As shown in the following figure, the basic business logic is a bottom-up architecture. The first is the tag processing engine, which mainly focuses on offline processing. In the tag processing engine, a lot of tags will be generated based on the user's historical data of purchasing, access, and attention behavior. The data shows what products users focus on and the times and frequency users have looked at them. Then, these tags are imported into the online portrait service engine. The service engine provides operators and advertisers with an interactive query experience. They can seek out the user group they prefer based on user behavior characteristics. This user group might pay attention to certain products or related products but not buy them within 30 days. The seeking process is highly interactive because a person's behavior characteristics are very complex. Therefore, it is necessary to add a filtering condition or remove a condition frequently. Conditions may be merged and deduplicated. It is not completed until the scale of the group is limited to the range that the budget can support. For example, if we want to deliver advertisements to 10,000 individuals, we have to find these 10,000 individuals through various qualifications. It is not yet possible to do direct delivery, but it also requires a more fine-grained analysis of the group. We need to determine whether these 10,000 individuals are the target group through behavior analysis of historical data. After that, the target group will be exported to the delivery system in the form of delivery packages. This is the basic business logic.

What are the technical requirements behind this business requirement?

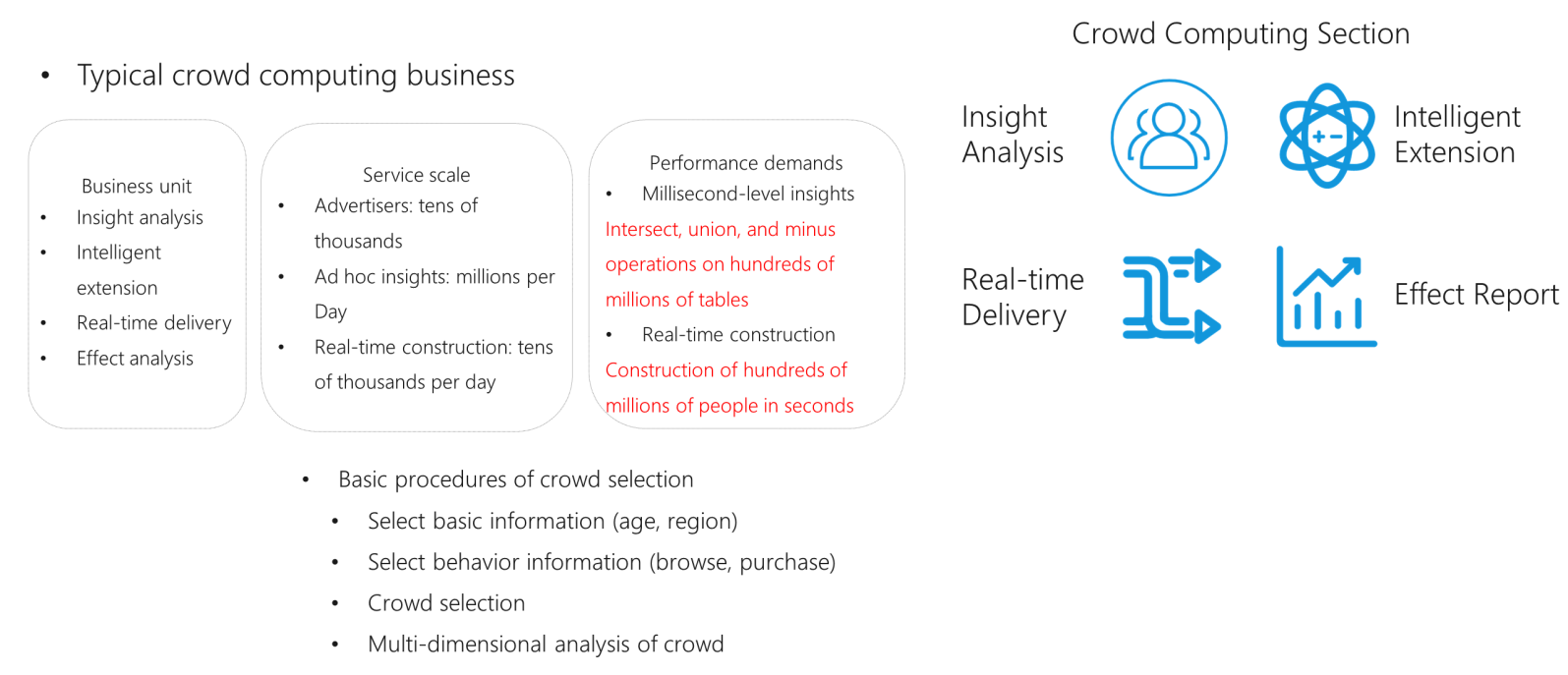

The core of the typical crowd business section is insight analysis. The general scenario of insight analysis is to support tens of thousands of different advertisers. They freely choose groups they are interested in on the platform. Each advertiser has different demands on the crowd. Some pay attention to the purchasing power, and some pay attention to the collection behavior. Every day, tens of thousands of advertisers issue millions of query requests and generate tens of thousands of various crowd packages. Thus, the computational complexity of each system is very demanding. The core demands here cover several points, including millisecond insight. All queries are expected to be interactive. Every interaction, every drop-down menu, every selection, and every combination of conditions on the interface are expected to provide an interactive experience. Advertisers want to see the change in the scope of the crowd and whether the target crowd conforms to their demand. This is very demanding on performance. Meanwhile, remember that data masking is required to protect users' privacy. All analysis should be based on data that meets compliance requirements.

Tens of thousands of advertisers submit millions of data queries that require millisecond-level responses. Quick search is a must. This kind of quick search is in terms of scale data. Millions-level data cannot be counted as scale data, though its behavior logs are very large. The search function is expected to provide a good interactive analysis for tens of billions of data entries while being responded to in seconds.

The filtering behaviors of users are varied, such as equivalent comparison, range comparison of numerical values, and time range comparison. A variety of filtering criteria can be combined flexibly, and the ability of the computing engine is indicated in presenting the filtering results.

User tags are not static. When all things are real-time and online, all behavioral data changes are expected to be triggered in real-time and provide feedback of the system decision at the next moment in real-time. For example, the service engine can detect what products are in the latest favorites and decide whether this behavior can become part of online portraits. Therefore, the requirement for real-time throughput is very high.

In terms of computing, it can be divided into the following computing modes.

Tag filtering includes equivalent filtering, which can use Equal/In/Between. The filtering can be operated on tens of billions of data entries. The result set after the operation needs to be further processed by the union, intersect, and minus operations. For example, when a user pays attention to the competitor's products and the company's products but does not buy both, there is a relationship of union, intersect, and minus. Therefore, the result set need to be processed with multiple intersect, union, and minus operations. Finally, there is a strong need for precise deduplication because the calculation result will eventually be turned into a unique user ID. This ID will be used for advertising delivery. These needs, reflected at the engine level, are the efficiency of data reading. If row-oriented storage is adopted for data read, will there be an I/O amplification problem? Data is stored by row, while filtering is based on a certain column. However, I/O reading will read the whole row, and I/O amplification will occur. Column-oriented storage also has problems with index and filtering effects. Should we use the Hash JOIN mode or the Nest Loop JOIN mode to handle table connections in operators? How effective is precise deduplication? These are all very demanding for the efficiency of the computing engine. Therefore, essentially, it is to solve the problems between efficient data storage and filtering, memory and CPU consumption of relational computing, and memory and CPU consumption of precise deduplication.

Here, we have many different solutions and optimization methods concerning whether to use more memory or CPU. There are two common solutions in the industry.

One is pre-settlement with technologies such as Kylin/Druid. These technologies can pre-process data in advance on some predefined dimensions. After pre-processing, data sets are essentially reduced. For example, when finding a user group that pays attention to one product instead of the other, each result set can be expressed by a bitmap array. The efficiency of performing union, minus, and intersect operations between arrays is very high. Pre-calculation has great benefits in precise deduplication and intersect, minus, and union operations. However, the defects are also obvious. The biggest defect is inflexibility and weak ability in the expression of complete SQL. The other is MPP distributed database technology, which provides better query performance through column-oriented storage, distribution, and indexing.

Therefore, when implementing a crowd selection scheme, usually more than one scheme is adopted because the pre-calculation scheme and the MPP scheme have some essential defects.

Which technologies in the market are more suitable for storage and search?

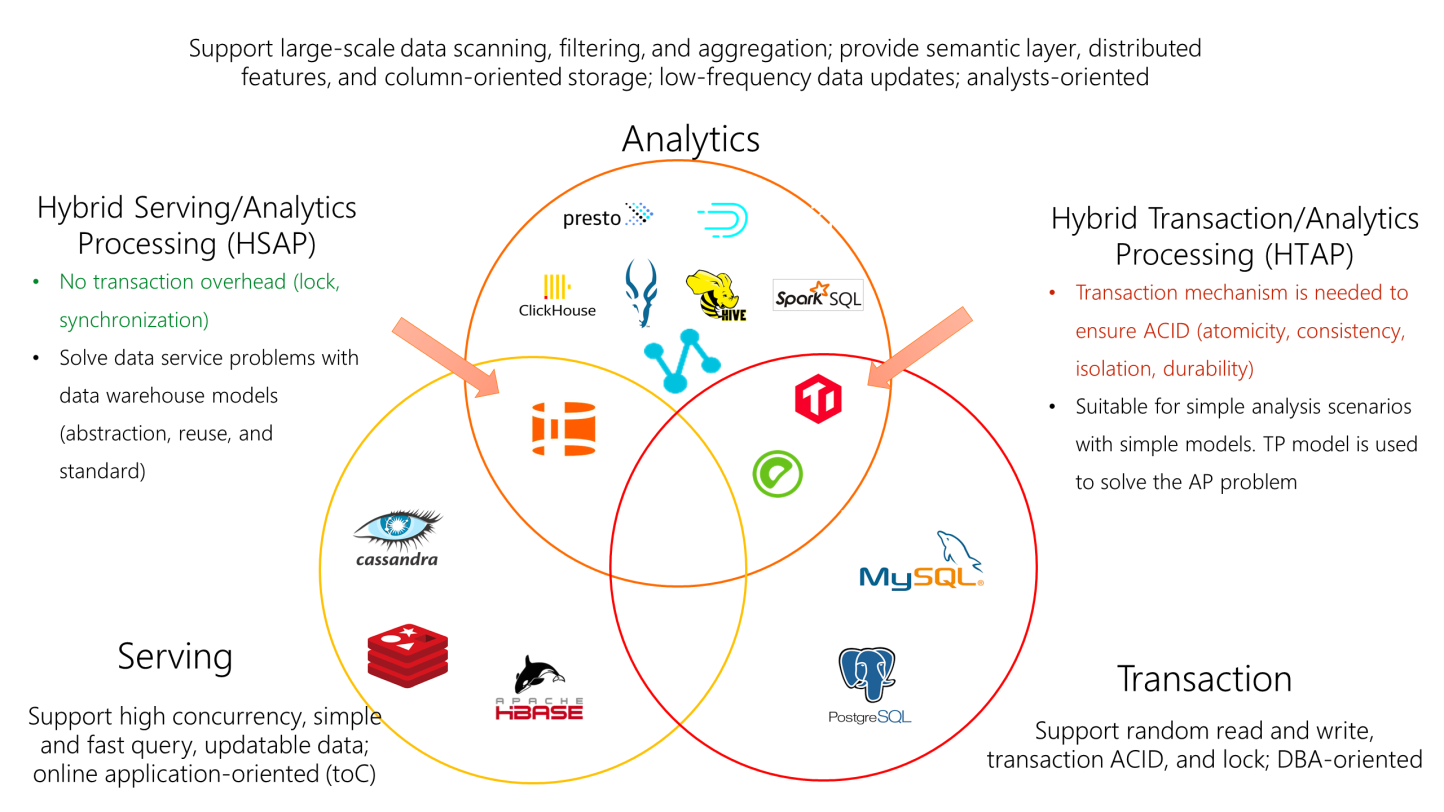

The first type of technology is the common transaction database. The transaction database uses row-oriented storage. Its efficiency of writing and storing a single row of data is very high. It is used for queries and filtering statistics. For more than ten million data entries, the consumption of resources is very high. Therefore, the TP system is not used for analysis directly.

The second type is the AP system, namely, a common OLAP system. This type of system is optimized for large-scale data scanning scenarios and uses column-oriented storage, compression, indexing, distributed technologies, etc. This kind of technology provides fast search, but their essential defect is that they are not very friendly to most system updates. The data is not organized well enough to achieve fast data searches. This leads to a weak update capability. Another type of system is also common in big data analysis. It is called Serving system, which is a type of system that supports online business. This type of system supports fast search at the cost of search flexibility. For example, the search method of the document database and KeyValue system has many limitations. Users can only search according to its key, limiting flexibility. However, the performance is enlarged since it can be scaled out horizontally. The access efficiency of the key is relatively the highest, and the update efficiency is also very high. The entire record can be replaced by updating based on the key. In the past, we had to split the data into TP, AP, and Serving systems for different scenarios. Data was transferred back and forth between several systems. This made us rely more on the whole system. As long as the data is dependent on the system, there will be data inconsistency. The data inconsistency means that the data needs to be corrected, and the development cost of the data becomes higher. Therefore, we need to make innovations in many fields. The first type of innovation is to develop the combined load capacity in the TP and AP fields. We try to solve problems in these two scenarios through one technology. We hope the system with transaction and analysis support will be implemented successfully one day. Such systems also have certain limitations. An overhead of various distributed locks is still necessary to support transaction operations. These systems with more capabilities require a large overhead of concurrency and performance, so there is a certain performance bottleneck.

Some innovations can also be made in the left part of the following figure. Through these innovations, the biggest problem is that transactions are not supported. Since we do not require many transactions, we can weaken the transaction capability to make search and update fast enough. Therefore, it is possible to do technological innovation in this area. This technology has a flexible analysis capability and good data writing and a complete SQL expression capability. The technology shown in the intersection part on the left is very suitable for applying the three technical requirements just mentioned. This is the product that we want to share today - Hologres.

Hologres = vectorized SQL engine + flexible multidimensional analysis + high-throughput real-time update

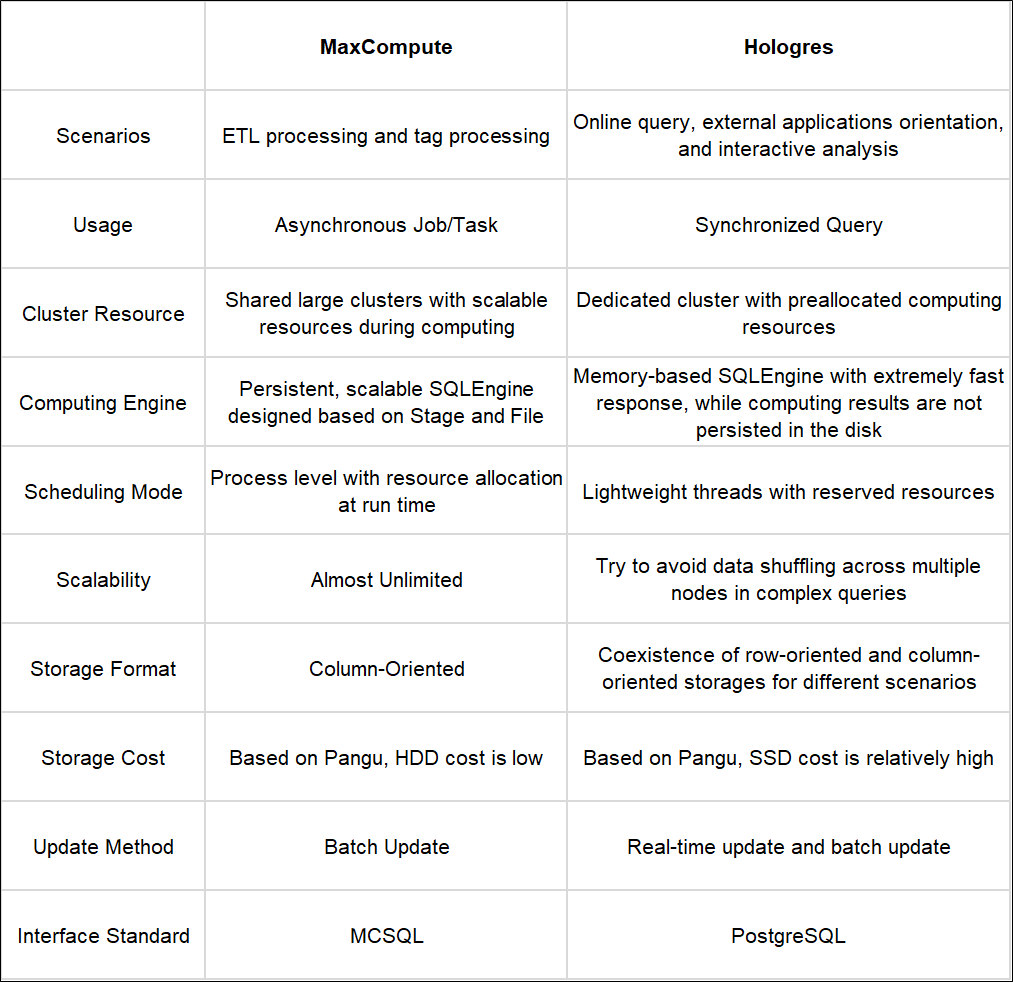

Hologres is a comprehensive real-time data warehouse that provides real-time analysis (OLAP) and online services (point query). It connects with MaxCompute seamlessly and implements a set of architectures with multiple coexisting loads (OLAP, online services, and Hologres.) It reduces data silos, avoids data fragmentation, streamlines procedures, and improves user experience.

Why does Hologres support high-performance and high-throughput writing?

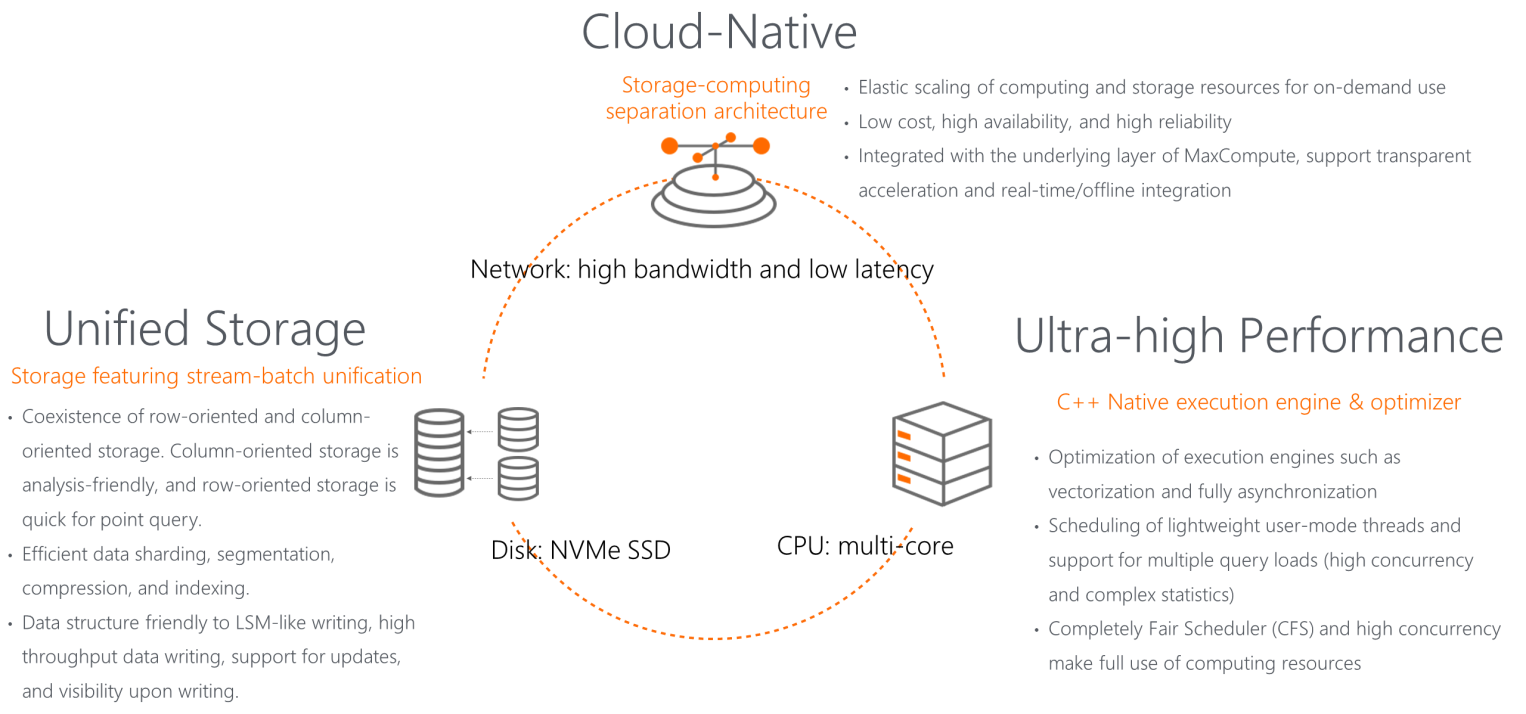

It is not very complex. Hologres still relies on the entire IT industry, with many underlying technological advances. For example, the bandwidth is wider, and the delay is lower. Its advantage is that you can reduce dependence on local operations of on-premise disks. You can rely more on network disks instead of local operations. The underlying storage of Hologres is divided into multi-copy storage and highly reliable storage. State management is handed over to Alibaba Cloud. The underlying layer is the storage engine of Pangu (Apsara Distributed File System), which has multiple copies, compression, cache, and high reliability. This makes the logic of the entire compute node simpler and high reliability possible. If any node crashes, a distributed network disk can restore the state quickly. This will make the computing layer stateless. This is the first merit of Hologres. The second merit is the utilization of disks. In the past, the rotational speed of disks had mechanical bottlenecks. The mechanical disk is rotated in circles, referring to how many circles per second. Therefore, our I/O operations are optimized for scanning scenarios. We want all data to be updated, read, and written in blocks. Therefore, in the past, this kind of scenario with frequent updates was difficult to implement in the entire data warehouse. Hologres adopts the SSD design. Solid-state drives (SSD) support better random read/write capabilities. This allows us to design the entire storage data structure while setting aside the need to rely on this scanning scenario when designing the storage architecture. Hologres supports row-oriented storage and column-oriented storage to adapt to different scenarios. It also adopts the log structured merge tree method. It supports scenarios where high-throughput data is written and updated. The third is the multi-core CPU. Currently, the main frequency of CPU is not likely to be improved significantly. However, in the multi-core scenario, if multiple cores inside a CPU can be used in parallel, CPU resources can be brought into full play. This requires a good command of the underlying language of the operating system. Hologres uses C++ to implement the data warehouse. The operators at the underlying layer of Hologres are rewritten in a vectorized way to maximize the multi-core parallel computing power.

As shown in the following figure, we have made many improvements to the network, storage, computing, and hardware. If these improvements are fully utilized, we will see an amazing and different system.

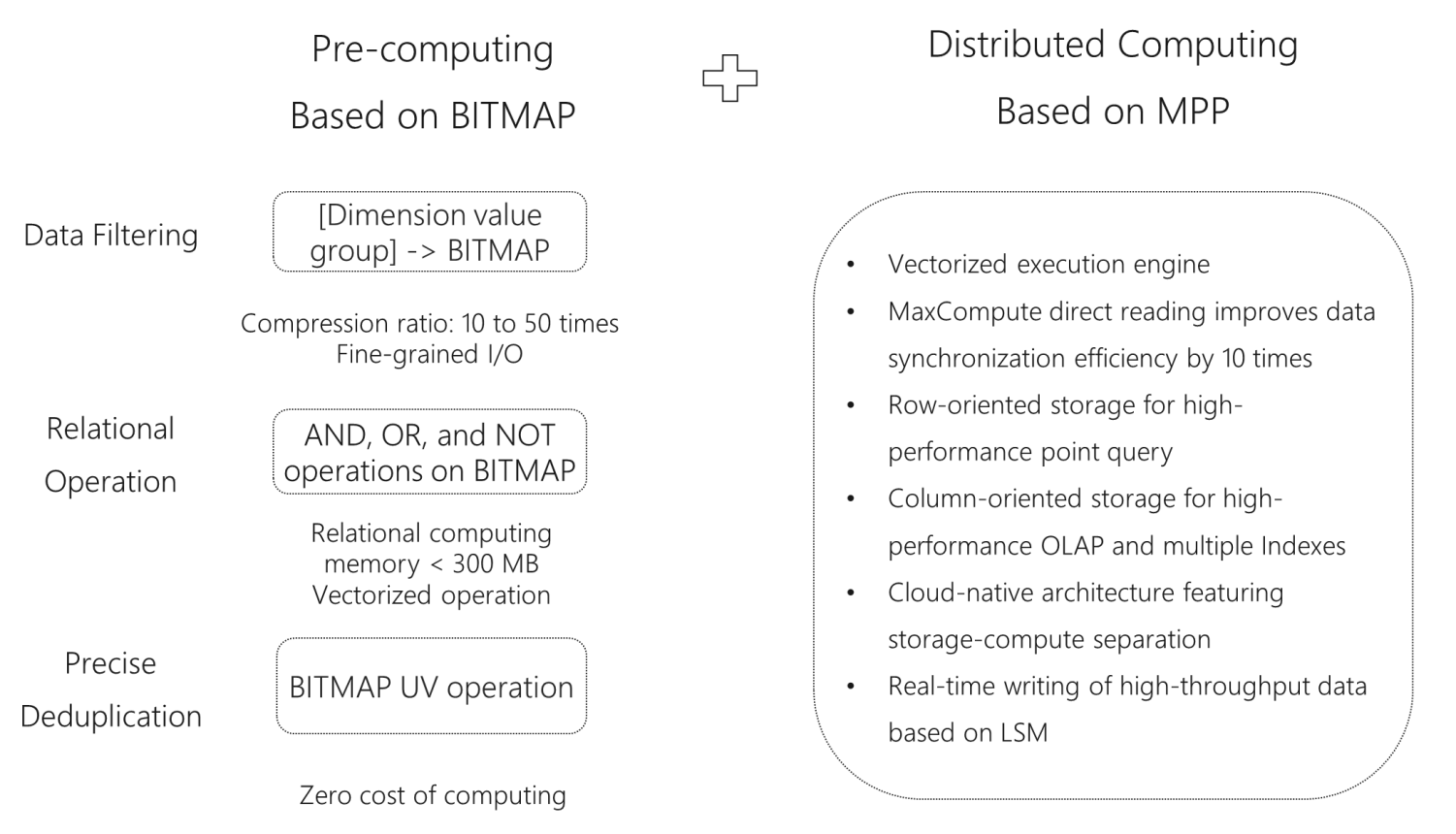

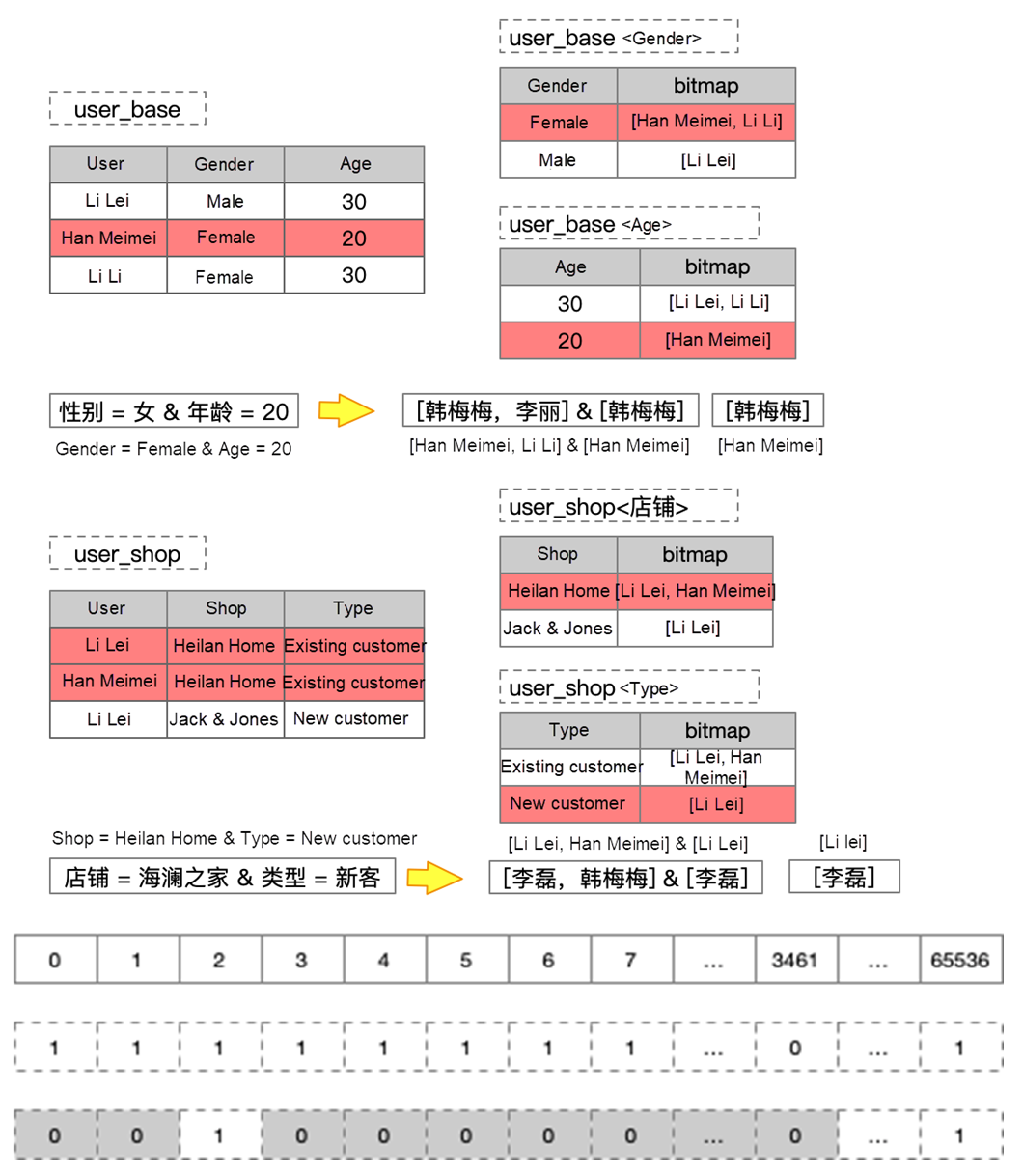

As mentioned in crowd selection, it contains pre-computing scenarios and MPP distributed computing scenarios. It is often not suitable to use a single technology. During implementation, it is better if both pre-computing and distributed computing are adopted and integrated well. For example, the dimension filtering scenario is very suitable for BITMAP because bitmap indexes can be made with BITMAP. For example, scenarios that need to be filtered, such as true and false, purchase level, and product attention, are suitable for bitmap indexing. Hologres supports bitmap indexing.

The second is the relational operation, which is the intersect, union, and minus operations among various data sets. It is also very suitable for bitmap calculations because bitmap calculation is equivalent to doing a lot of AND, OR, and NOT operations between 0 and 1 in parallel. Its efficiency is very high.

Precise deduplication is a natural capability of BITMAP. When a bitmap is built, ID is determined through the subscript bit. We can calculate the value of precise deduplication quickly by simply accumulating the values (1) of a different subscript bit. This almost converts an O(N) problem into an O(1) problem. The improvement of efficiency is visible. Therefore, pre-computing is a very important technology in crowd selection. Hologres supports the RoaringBitmap data type to perform union, intersect, and minus operations of BITMAP efficiently.

As mentioned above, pre-computing lacks flexibility. When using distributed computing to increase its computing power, pre-computing adopts the vectorization execution engine of Hologres. Direct acceleration of MaxCompute data from external tables, including the synchronization of MaxCompute data to Hologres, will improve the performance of MaxCompute data synchronization to other data sources by ten times.

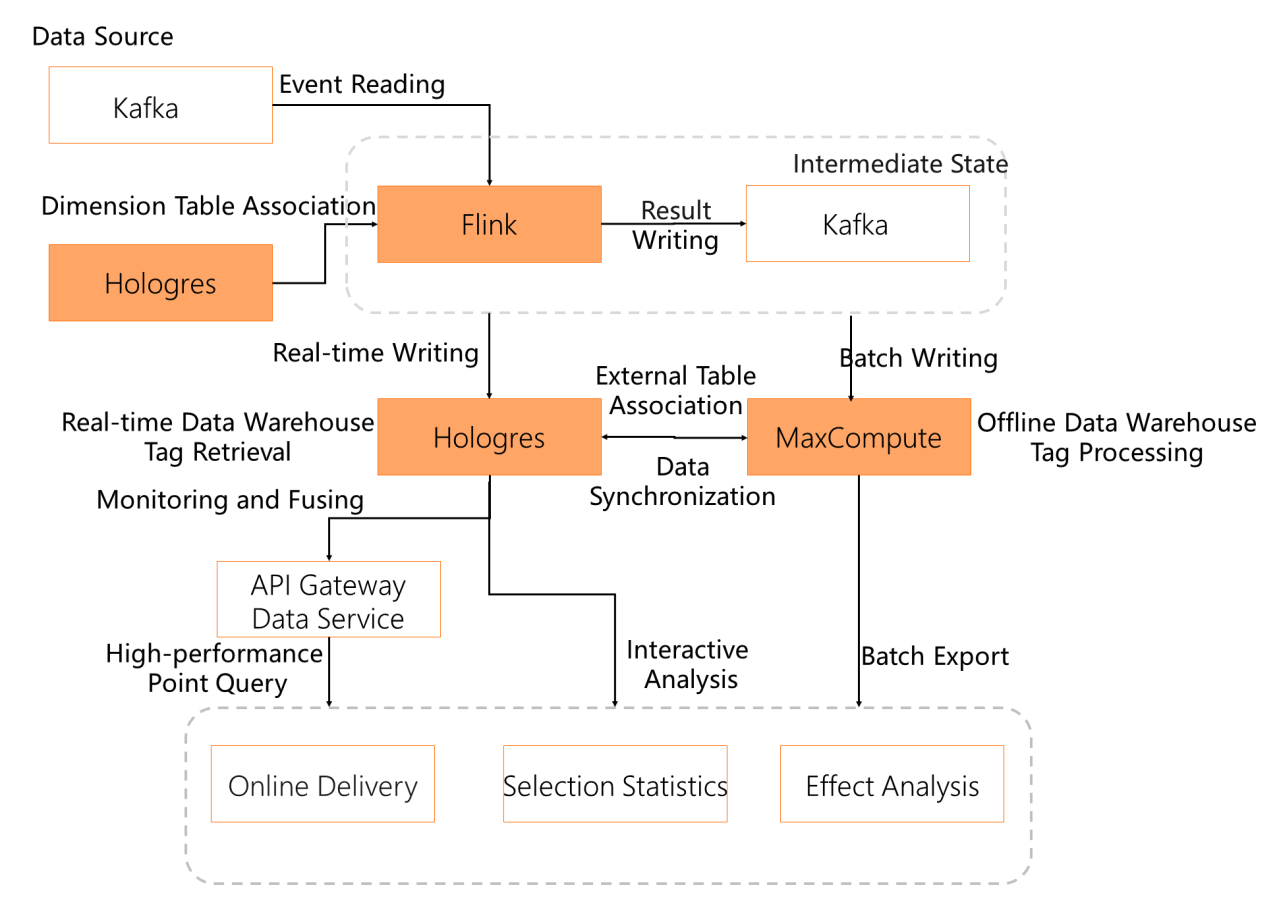

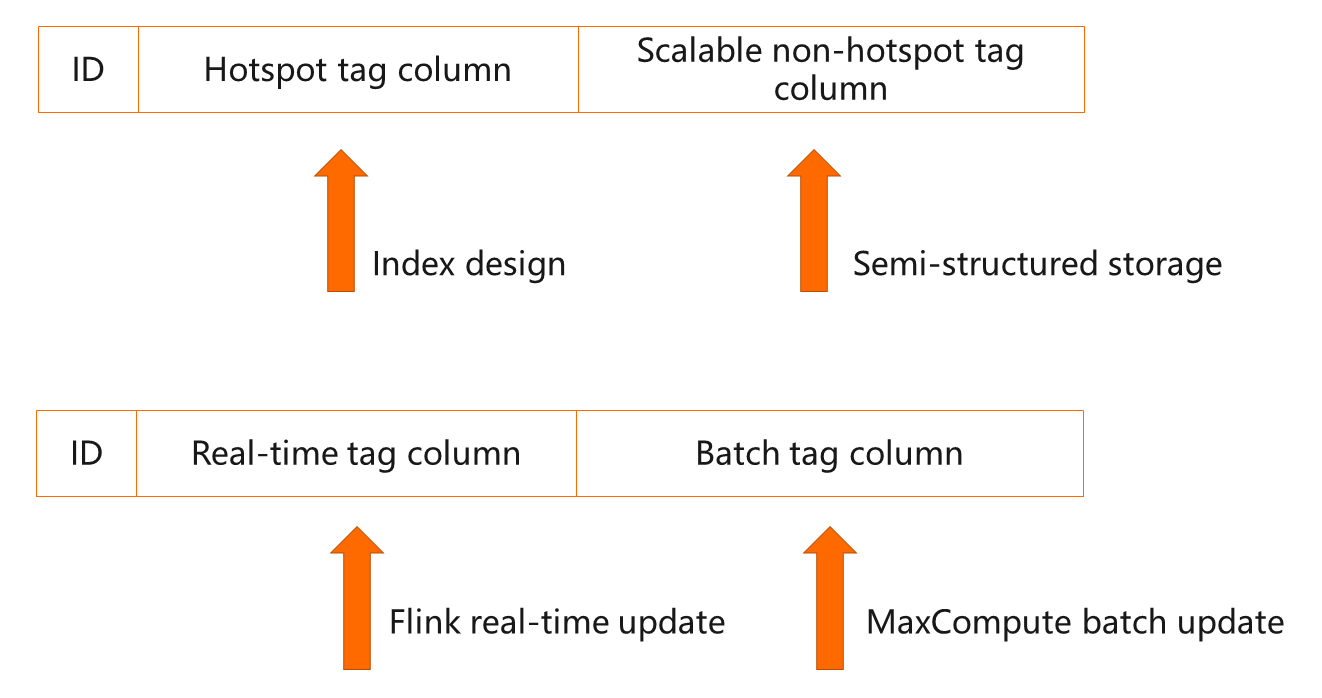

The data source delivers data to Flink by tracking point data through message middleware Kafka. Then, the data is subject to preliminary data processing, including data governance correction, data aggregation, and data dimension widening. Dimension association is a very important scenario. The real tracking point data records some IDs, which must be converted into dimension information with attribute meaning. The first thing is to widen the dimension. For this purpose, you can use the row-oriented table of Hologres. Primary key is typically used for dimension association. You can store billions of dimension information with the row-oriented table of Hologres. This information can be updated in real-time. The processing results will be written in Kafka. The data is not completely processed at one time but in several cycles. Data is processed in Flink several times with Kafka as the message driver. Typically, one part of the result is written to Hologres in real-time, and the other is written to MaxCompute in batch mode. Data transfer from an offline data warehouse to a real-time data warehouse is a typical scenario involving data correction. Data will be corrected. Therefore, this scenario is very common, including tag processing. Therefore, some behaviors need to be synchronized to the real-time data warehouse after being processed by the offline data warehouse. There are other attributes related to current decisions. These can be directly written to the real-time data warehouse of Hologres. Tags are divided into two groups. Real-time tags are written to Hologres in real-time. Offline tags are synchronized to Hologres after being processed with MaxCompute.

There are several methods to provide external data services. The recommended way is to add a gateway when providing external services. The gateway service is responsible for throttling and circuit breaking. So, adding a gateway contributes to the stability of data services. For internal interactive analysis, we can connect to Hologres directly by using JDBC. For online applications, it is recommended to connect to Hologres through API Gateway.

The offline data warehouse processes two tables. One table is the user basic attribute table, which records some user attributes, such as gender, city, and age. The other table is the detailed transaction table, which records how much a user has bought and how many times a user has seen and collected for a certain commodity in one day. After the data is processed through offline data warehouses, it is imported to Hologres. After configuring the table column description information in a human-readable manner, configure the relevant attribute tags. After the tag is launched, the advertiser performs configuration filtering through the interactive interface. This kind of filtering is achieved with various SQL statements, namely, a variety of SQL expressions. Thus, queries are sent to the underlying engine. How should the underlying engine build a table when queries are sent to it?

Scheme Description:

Case

-------------------- Dimension Table of User Tag -----------------

begin;

-- Three hotspot tag fields (text, integer, and boolean types) and two extended tag fields (text[] and JSON types)

create table user_tags

(

user_id text not null primary key,

city_id text,

consume_level integer,

marriaged boolean,

tag_array text[],

tag_json json

);

call set_table_property('user_tags', 'orientation', 'column');

-- Distribution columns

call set_table_property('user_tags', 'distribution_key', 'user_id');

-- Set the bitmap index of the text type.

call set_table_property('user_tags', 'bitmap_columns', 'city_id,tag_array');

-- Hotspot tag. Here is dictionary encoding.

call set_table_property('user_tags', 'dictionary_encoding_columns', ‘city_id:auto’);

commit;

-------------------- Fact Table of User Behavior -----------------

begin;

create table shop_behavior

(

user_id text not null,

shop_id text not null,

pv_cnt integer,

trd_amt integer,

ds integer not null

);

call set_table_property('shop_behavior', 'orientation', 'column');

call set_table_property('shop_behavior', 'distribution_key', 'user_id');

--- Aggregation keys that are more friendly to operations such as group by.

call set_table_property('shop_behavior', 'clustering_key', 'ds,shop_id');

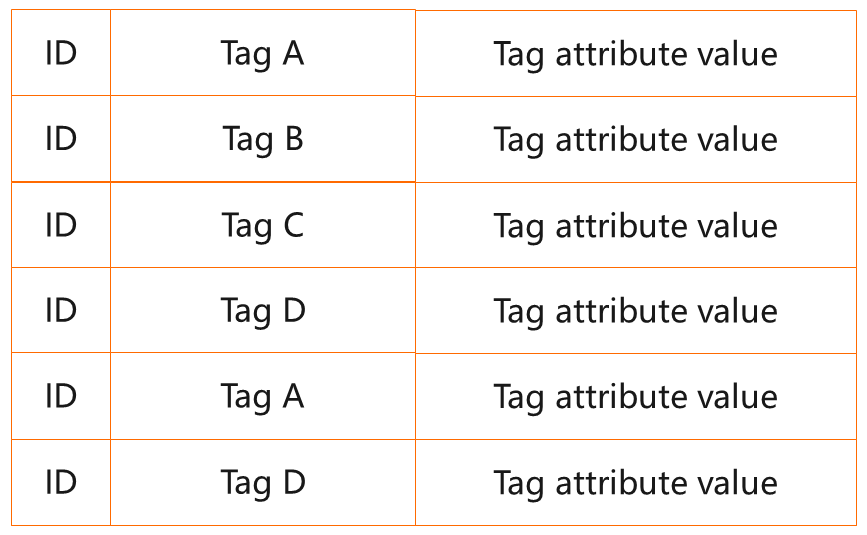

Commit;Convert the user_tag table to a narrow table. Each tag represents a row of records. The tag name corresponds to one column, and the tag value corresponds to another column.

Data types are degenerated to the string type, which is suitable for unfixed and sparse tags, sacrificing some performance but improving the flexibility of tag definition. This will support tens to hundreds of thousands of tags.

Case

-------------------- Dimension Table of User Tag -----------------

begin;

create table tag2.user_tags

(

userid text not null,

tag_key text,

tag_value text,

ds text

) partition by list(ds);

call set_table_property('tag2.user_tags', 'orientation', 'column’);

-- Distribution columns

call set_table_property('tag2.user_tags', 'distribution_key', 'user_id');

call set_table_property('tag2.user_tags', 'bitmap_columns', 'tag_key,tag_value');

call set_table_property('tag2.user_tags', 'dictionary_encoding_columns', 'tag_key:auto,tag_value:auto');

commit;

-- Query example --

WITH

f1 AS (

SELECT userid

FROM tag2.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_single'

AND tag_value = 'myname'

),

f2 AS (

SELECT userid

FROM tag2.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_date'

AND tag_value > '20210101'

),

f3 AS (

SELECT userid

FROM tag2.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_numeric'

AND to_number(tag_value, '99G999D9S') > 90

),

f4 AS (

SELECT userid

FROM tag2.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_multi'

AND tag_value IN ('HONOR', 'MI')

)

SELECT COUNT(DISTINCT userid)

FROM ((SELECT userid FROM f1 UNION SELECT userid FROM f2) INTERSECT (SELECT userid FROM f3 EXCEPT SELECT userid FROM f4)) crowd;Better data structure bitmap is used for the crowd of dimensional combination.

Case

-------------------- Dimension Table of User Tag -----------------

BEGIN;

CREATE TABLE tag3.user_tags (

"tag_key" text,

"tag_value" text,

"userlist" roaringbitmap,

ds text

) partition by list(ds);

CALL SET_TABLE_PROPERTY('tag3.user_tags', 'orientation', 'column');

CALL SET_TABLE_PROPERTY('tag3.user_tags', 'bitmap_columns', 'tag_key,tag_value');

CALL SET_TABLE_PROPERTY('tag3.user_tags', 'dictionary_encoding_columns', 'tag_key:auto,tag_value:auto');

COMMIT;

begin;

create table tag3.usermapping

(

userid_int serial,

userid text

);

commit;

-- Build RoaringBitmap --

INSERT INTO tag3. user_tags SELECT tag_key ,tag_value ,rb_build(array_agg(user_id::INT)) FROM tag2.user_tags GROUP BY tag_key ,tag_value

-- Query example --

SELECT Rb_cardinality(Rb_and(Rb_or(t1.r, t2.r), Rb_andnot(t3.r, t4.r)))

FROM

(

SELECT Rb_and_agg(userlist) AS r

FROM tag3.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_single'

AND tag_value = 'myname' ) AS t1,

(

SELECT rb_and_agg(userlist) AS r

FROM tag3.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_date'

AND tag_value > '20210101' ) AS t2,

(

SELECT rb_and_agg(userlist) AS r

FROM tag3.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_numeric'

AND to_number(tag_value, '99G999D9S') > 90 ) AS t3,

(

SELECT rb_and_agg(userlist) AS r

FROM tag3.user_tags

WHERE ds = '20210101'

AND tag_key = 'tag_multi'

AND tag_value IN ('HONOR',

'MI') ) AS t4Scientific Analysis of Large-Scale Data Based on the Distributed Python Capabilities of MaxCompute

137 posts | 21 followers

FollowHologres - June 16, 2022

Alibaba Cloud MaxCompute - December 22, 2021

Apache Flink Community - August 14, 2025

Alibaba Cloud MaxCompute - January 21, 2022

Hologres - May 31, 2022

Alibaba Cloud MaxCompute - June 2, 2021

137 posts | 21 followers

Follow Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn MoreMore Posts by Alibaba Cloud MaxCompute