This article is based on a speech delivered at the 2025 Apsara Conference.

Speaker: Huang Pengcheng — Head of Realtime Compute for Apache Flink, Computing Platform Business Unit, Alibaba Cloud Intelligence.

In today’s data-driven economy, real-time data processing has become a cornerstone of enterprise digital transformation. Over the past decade, Realtime Compute for Apache Flink has evolved from technology adoption to full-fledged innovation, becoming a benchmark in the real-time computing industry.

At the 2025 Apsara Conference, Alibaba Cloud announced a major upgrade to Realtime Compute for Apache Flink — delivering breakthroughs in both computing and storage, and opening a new chapter of real-time intelligence in the AI era.

This presentation revolves around four parts:

The evolution of Alibaba Cloud’s Realtime Compute for Apache Flink spans four pivotal stages.

Phase 1: Internal Adoption. In the early days, the Flink framework was widely adopted within Alibaba Group for core business scenarios. Alibaba even developed an in-house version called Blink, well known to industry insiders, which marked the first large-scale production deployment of Apache Flink in mission-critical environments.

Phase 2: Strategic Expansion. Alibaba Cloud acquired data Artisans, the original creators of Apache Flink, merging its Chinese and German engineering teams. Blink was contributed back to the open-source community, and Alibaba Cloud took on a leadership role in fostering Flink's ecosystem, even bringing the Flink Forward conference to China.

Phase 3: Cloud Service Launch. The official cloud-based Realtime Compute for Apache Flink service debuted with a Serverless architecture, enabling elastic scaling and cost optimization.

Phase 4: Rapid Growth. In recent years, Alibaba Cloud's Realtime Compute for Apache Flink has expanded internationally across multiple regions, extending Flink's footprint. Over the past decade, Apache Flink's architecture has evolved to be fully cloud-native, simplifying deployment for developers in cloud environments. As a result, most cloud vendors, including Alibaba Cloud, now offer managed Apache Flink services, making Flink more accessible to enterprises worldwide.

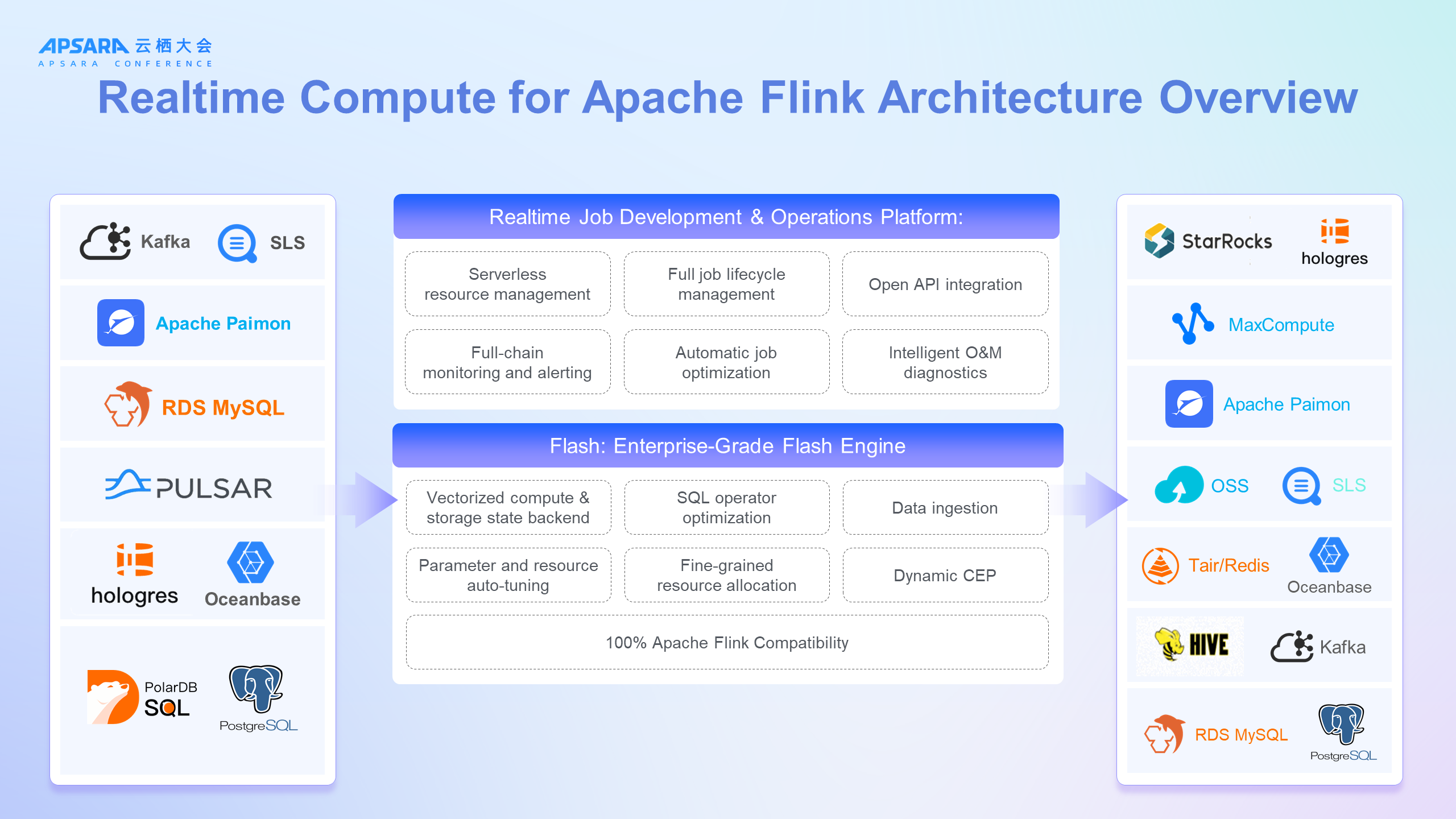

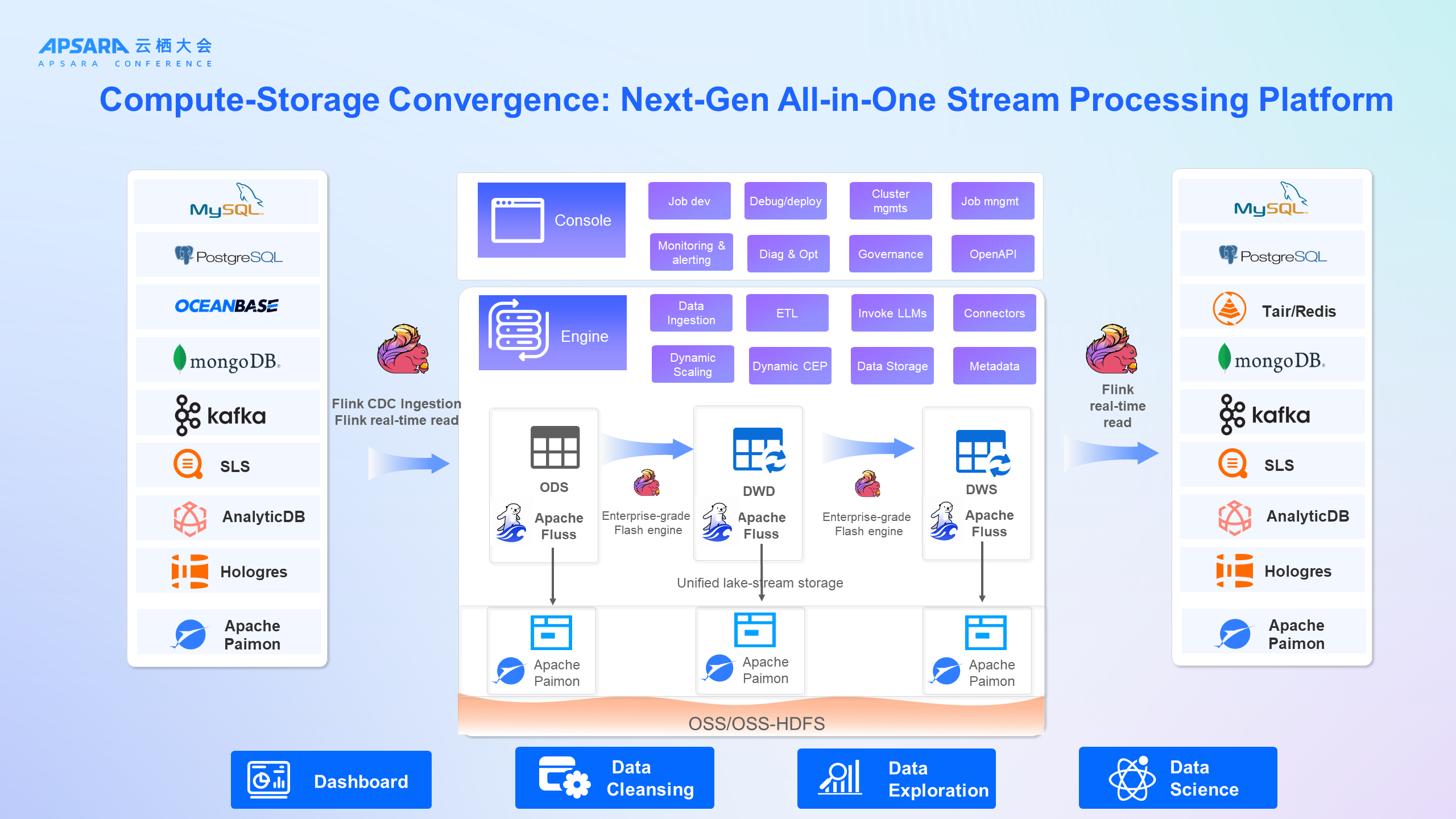

The solution adopts a two-layer architecture:

At the core lies the enterprise-grade Flash engine, a self-developed vectorized processing engine that delivers 3–4× the performance of open-source Flink in Nexmark benchmarks. It provides optimized SQL operators, advanced storage backends, dynamic Complex Event Processing (CEP), and elastic scaling, and it is 100% compatible with Apache Flink, ensuring frictionless migration for users.

On top sits the job development and operations platform, the Console, which offers end-to-end management: resource allocation, job lifecycle management, APIs, observability, performance tuning, and diagnostics. A rich ecosystem of data source connectors and output destinations surrounds the platform.
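The talk does not describe Flash's internals, but the general idea behind a vectorized engine can be sketched. The Python below contrasts row-at-a-time evaluation with column-at-a-time evaluation of the same filter and projection; `row_at_a_time`, `vectorized`, and the dict-of-lists batch layout are hypothetical illustrations, not Flash code. In a native engine, the tight per-column loops are what SIMD instructions and CPU caches accelerate.

```python
from typing import Dict, List

# Hypothetical layouts: a row batch is a list of records; a column batch
# stores one list per column, which is what vectorized engines operate on.
Row = Dict[str, float]
ColumnBatch = Dict[str, List[float]]


def row_at_a_time(rows: List[Row]) -> List[float]:
    """Row-oriented evaluation: the whole expression is interpreted per record."""
    out: List[float] = []
    for row in rows:
        if row["price"] > 10.0:                    # filter
            out.append(row["price"] * row["qty"])  # projection
    return out


def vectorized(batch: ColumnBatch) -> List[float]:
    """Column-at-a-time evaluation of the same filter and projection.

    Step 1 runs the filter over the whole `price` column and produces a
    selection vector (indices of surviving rows); step 2 evaluates the
    projection only at those indices.
    """
    price, qty = batch["price"], batch["qty"]
    selection = [i for i, p in enumerate(price) if p > 10.0]
    return [price[i] * qty[i] for i in selection]


rows = [{"price": 5.0, "qty": 2.0}, {"price": 12.0, "qty": 3.0}, {"price": 20.0, "qty": 1.0}]
batch = {col: [r[col] for r in rows] for col in ("price", "qty")}
print(row_at_a_time(rows))  # [36.0, 20.0]
print(vectorized(batch))    # [36.0, 20.0]
```

Both functions return the same result; the difference is that the vectorized version runs each operator over a whole column, which amortizes interpretation overhead across the batch.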

Key strengths of Realtime Compute for Apache Flink include:

End-to-End Real-Time DevOps: Fully managed, Serverless environment covering development, debugging, deployment, monitoring, diagnostics, and optimization.

Serverless Elasticity: Compute Units (CUs) scale on demand without pre-provisioning, reducing resource costs by over 30% with sub-second elasticity, and ensuring job isolation for stability.

Enterprise-Grade Kernel: Extreme performance, complete Apache Flink compatibility, and enhanced features for scenarios like real-time data lakes, dynamic CEP rule configuration, and unified ingestion pipelines.
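The cost argument behind Serverless Elasticity comes down to simple arithmetic: a fixed cluster is sized for the peak and billed for every hour, while on-demand CUs track the actual load. The sketch below uses an invented 24-hour demand profile and ignores scaling lag and billing granularity, so its result illustrates the mechanism only and is not Alibaba Cloud's measured figure.

```python
from typing import List

# Invented hourly CU demand for one job over 24 hours
# (overnight trough, daytime ramp, peak, evening decline).
hourly_demand_cu: List[int] = [4] * 8 + [10] * 4 + [16] * 4 + [10] * 4 + [4] * 4


def provisioned_cu_hours(demand: List[int]) -> int:
    """Fixed cluster: sized for the peak hour and billed for every hour."""
    return max(demand) * len(demand)


def on_demand_cu_hours(demand: List[int]) -> int:
    """Idealized elastic scaling: each hour is billed for exactly what it needs."""
    return sum(demand)


fixed = provisioned_cu_hours(hourly_demand_cu)
elastic = on_demand_cu_hours(hourly_demand_cu)
savings = 1 - elastic / fixed
print(fixed, elastic, f"{savings:.0%}")  # 384 192 50%
```

The flatter the load curve, the smaller the gap between the two numbers; bursty workloads benefit most from on-demand scaling.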

At the 2025 Apsara Conference, the upgrade spanned computing and storage innovations.

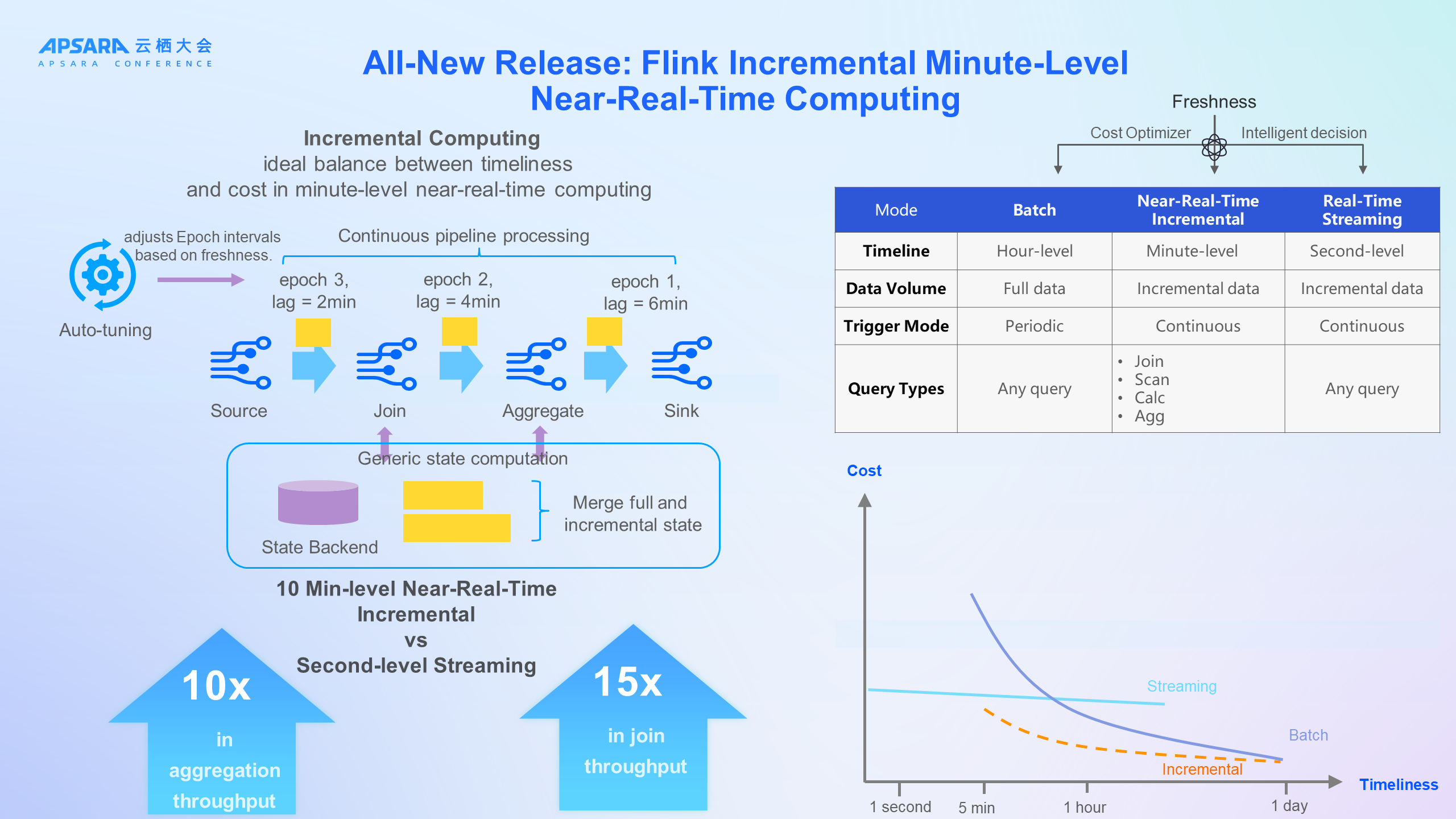

Flink was originally stream-focused and later gained batch capabilities, driving the unified "stream-batch integration" vision. However, many scenarios need a finer-grained balance between timeliness and cost than either pure streaming or pure batch processing provides.

In traditional terms:

Incremental Processing addresses this gap by segmenting continuous data streams into bounded sets called Epochs (micro-batches), delimited by Epoch Markers. The DamStage execution unit ensures that results are emitted only at Epoch boundaries. An AutoPilot mechanism dynamically tunes Epoch size based on Freshness requirements and dataLag metrics, and adjusts resources accordingly.
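The Epoch and DamStage mechanics can be illustrated with a minimal Python sketch. `Record`, `EpochMarker`, `dam_stage`, and `autopilot_step` are hypothetical names, and the halve-or-grow tuning rule is an invented feedback heuristic; the talk does not disclose AutoPilot's actual policy.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple, Union


@dataclass
class Record:
    key: str
    value: int


@dataclass
class EpochMarker:
    epoch_id: int  # closes the epoch with this id


Event = Union[Record, EpochMarker]


def dam_stage(events: Iterable[Event]) -> List[Tuple[int, Dict[str, int]]]:
    """Sum values per key within each epoch; release a result only when its marker arrives.

    Records between two markers form one bounded set (a micro-batch). Nothing
    downstream sees partial results of an epoch that is still open.
    """
    pending: Dict[str, int] = {}
    emitted: List[Tuple[int, Dict[str, int]]] = []
    for event in events:
        if isinstance(event, EpochMarker):
            emitted.append((event.epoch_id, dict(pending)))  # epoch boundary: emit
            pending.clear()
        else:
            pending[event.key] = pending.get(event.key, 0) + event.value
    return emitted


def autopilot_step(epoch_s: float, freshness_s: float, data_lag_s: float) -> Tuple[float, bool]:
    """Toy feedback rule returning (next epoch length in seconds, whether to add resources)."""
    if data_lag_s > freshness_s:
        # Falling behind the freshness target: shorten epochs and request more CUs.
        return max(1.0, epoch_s / 2), True
    if data_lag_s < freshness_s / 2:
        # Ample headroom: longer epochs amortize per-epoch overhead, capped by freshness.
        return min(freshness_s, epoch_s * 1.5), False
    return epoch_s, False


events = [Record("a", 1), Record("b", 2), EpochMarker(1),
          Record("a", 3), EpochMarker(2),
          Record("a", 5)]  # epoch 3 is still open, so this record is not emitted yet
print(dam_stage(events))                   # [(1, {'a': 1, 'b': 2}), (2, {'a': 3})]
print(autopilot_step(60.0, 300.0, 400.0))  # (30.0, True)
```

Larger epochs mean fewer, cheaper commits but staler results; the tuner's job is to pick the largest epoch that still meets the declared freshness.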

Benchmark data from Alibaba Cloud’s public service shows:

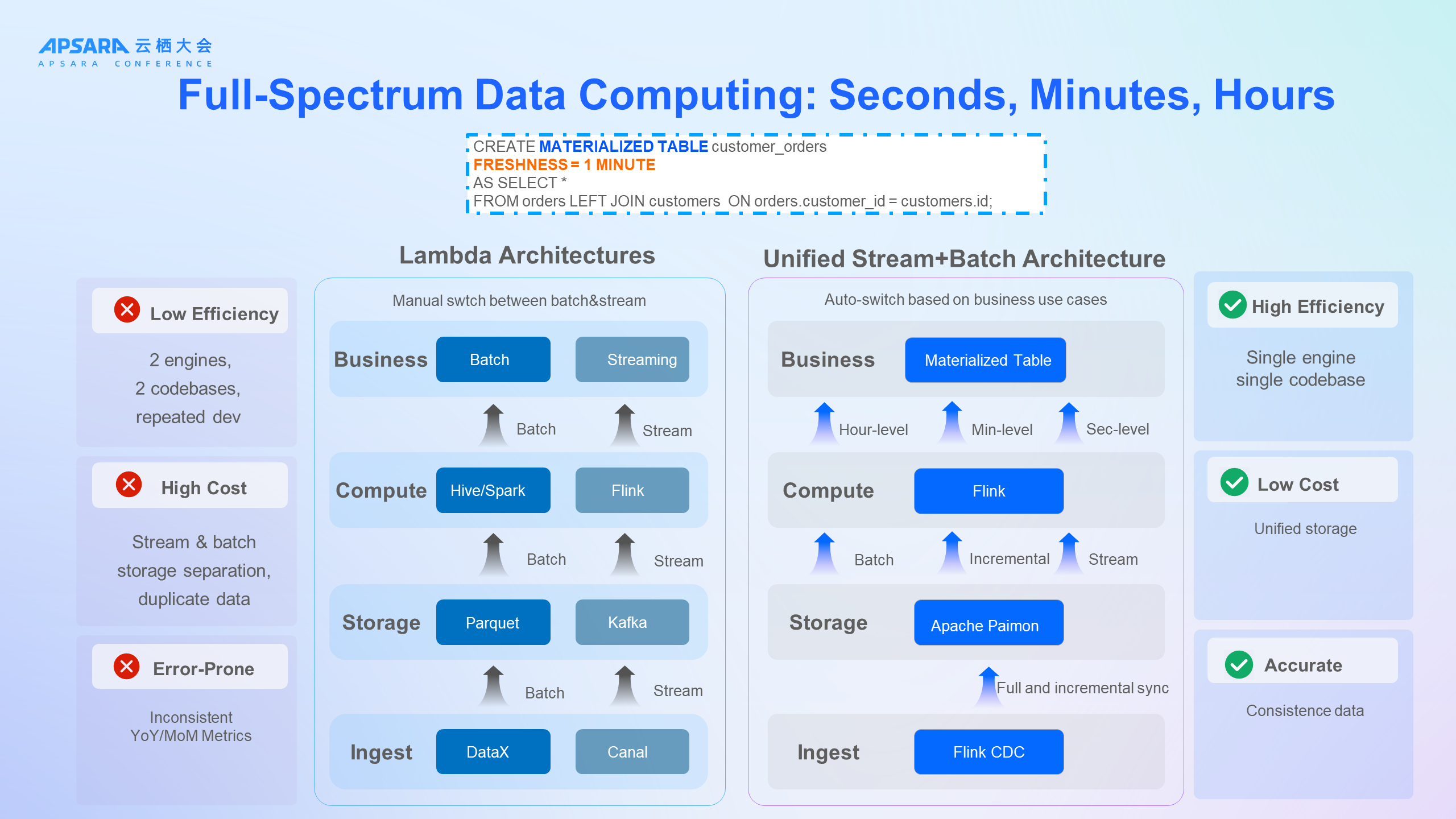

The UniFlow unified architecture replaces the Lambda architecture's split between batch and stream paradigms. With Materialized Tables and declarative syntax, developers can adjust Freshness to derive data at varying levels of timeliness, all on top of Apache Paimon lake storage and Flink CDC ingestion.
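How a single Freshness setting can select between streaming and batch execution is sketched below. The logic is modeled on our reading of open-source Flink's Materialized Table feature, where a freshness below a configurable threshold (30 minutes by default) leads to a continuously running job and a looser one leads to periodic full refreshes. Treat the threshold value, the `RefreshPlan` type, and `plan_refresh` as assumptions, and check the documentation for your Flink version or for UniFlow itself.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional

# Assumed default threshold, modeled on open-source Flink's materialized tables;
# verify it against the Flink version (or UniFlow release) you actually run.
DEFAULT_FRESHNESS_THRESHOLD = timedelta(minutes=30)


@dataclass(frozen=True)
class RefreshPlan:
    mode: str            # "CONTINUOUS" (long-running streaming job) or "FULL" (scheduled batch)
    interval: timedelta  # commit cadence for CONTINUOUS, schedule period for FULL


def plan_refresh(freshness: timedelta,
                 explicit_mode: Optional[str] = None,
                 threshold: timedelta = DEFAULT_FRESHNESS_THRESHOLD) -> RefreshPlan:
    """Derive how a materialized table is kept fresh from its declared freshness.

    An explicitly declared mode wins. Otherwise tight freshness targets map to a
    continuous streaming job and loose ones to periodic full refreshes; in both
    cases the freshness itself sets how often results become visible.
    """
    mode = explicit_mode or ("CONTINUOUS" if freshness < threshold else "FULL")
    return RefreshPlan(mode, freshness)


print(plan_refresh(timedelta(seconds=30)).mode)  # CONTINUOUS
print(plan_refresh(timedelta(days=1)).mode)      # FULL
```

Changing one declared target therefore moves the same query between streaming and batch execution, which is the point of replacing Lambda's two separate code paths.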

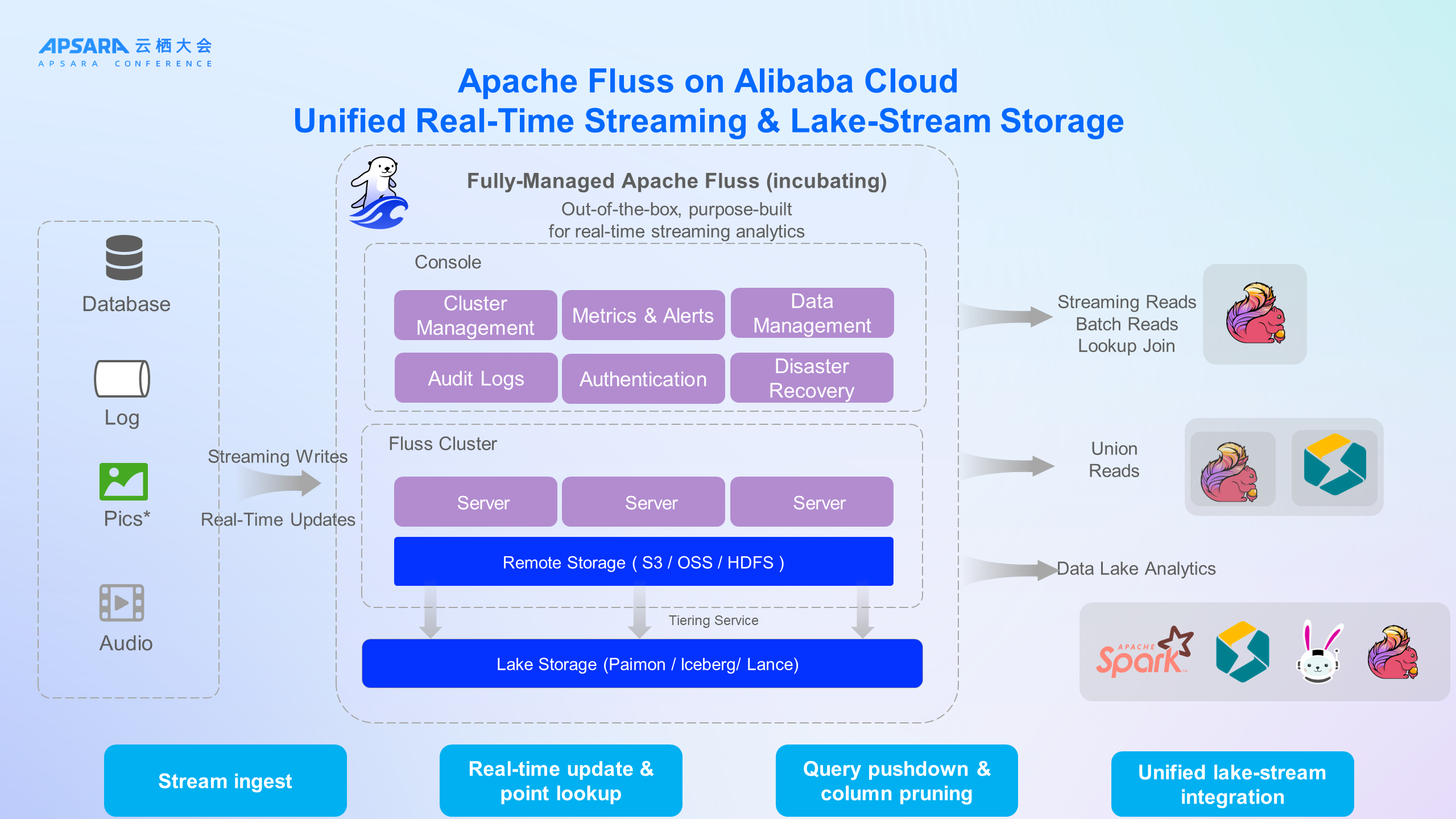

Traditional Apache Flink + Apache Kafka architectures face IO overhead, poor scalability correlation, and minute-level latencies. Managing separate stream and lake storage layers increases operational complexity.

Streaming Storage for Apache Fluss (incubating), Alibaba Cloud's next-generation stream storage service built on Apache Fluss (incubating), is purpose-built for real-time processing and lake-stream integration.

The open-source project behind it, Apache Fluss (incubating), has been donated to the Apache Software Foundation (ASF) and is currently incubating.

Core advantages of Streaming Storage for Apache Fluss (incubating) on Alibaba Cloud include:

Combined with Flink, Fluss delivers high-performance, low-cost stream processing. Delta join capabilities eliminate the burden of large state and dramatically reduce CU consumption. In one Taobao case, jobs with more than 100 TB of state cut CU consumption by 86%, and latency dropped from 90 seconds to 1 second.
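Why a delta join shrinks state can be shown with a toy comparison. A regular dual-stream join must buffer every row of both inputs in the job's state, because any future row on one side may match any past row on the other; that buffer is where state on the order of 100 TB comes from. A delta join instead answers each change by looking up the other side's rows in indexed stream storage. The Python below is a hypothetical sketch: plain dicts stand in for Flink state and for Fluss tables, and `RegularStreamJoin` and `DeltaJoin` are illustrative names, not Flink or Fluss APIs.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, str]  # (join_key, payload)


def _other(side: str) -> str:
    return "right" if side == "left" else "left"


def _pair(side: str, payload: str, match: str) -> Tuple[str, str]:
    # Always emit (left_payload, right_payload).
    return (payload, match) if side == "left" else (match, payload)


class RegularStreamJoin:
    """Dual-stream join: every row of both inputs is buffered in operator state."""

    def __init__(self) -> None:
        self.state: Dict[str, Dict[str, List[str]]] = {"left": defaultdict(list),
                                                       "right": defaultdict(list)}

    def on_row(self, side: str, row: Row) -> List[Tuple[str, str]]:
        key, payload = row
        self.state[side][key].append(payload)  # kept in case a later row on the other side matches
        return [_pair(side, payload, m) for m in self.state[_other(side)].get(key, [])]

    def state_rows(self) -> int:
        return sum(len(v) for table in self.state.values() for v in table.values())


class DeltaJoin:
    """Join that probes the other input's key-indexed table held in storage.

    `tables` stands in for lookup-able tables (e.g. Fluss primary-key tables)
    maintained by upstream writers; the join operator itself buffers no rows.
    """

    def __init__(self, tables: Dict[str, Dict[str, List[str]]]) -> None:
        self.tables = tables

    def on_row(self, side: str, row: Row) -> List[Tuple[str, str]]:
        key, payload = row
        return [_pair(side, payload, m) for m in self.tables[_other(side)].get(key, [])]

    def state_rows(self) -> int:
        return 0


events = [("left", ("o1", "order-1")), ("right", ("o1", "pay-1")),
          ("right", ("o2", "pay-2")), ("left", ("o2", "order-2"))]
regular = RegularStreamJoin()
storage: Dict[str, Dict[str, List[str]]] = {"left": defaultdict(list), "right": defaultdict(list)}
delta = DeltaJoin(storage)
regular_out: List[Tuple[str, str]] = []
delta_out: List[Tuple[str, str]] = []
for side, row in events:
    regular_out += regular.on_row(side, row)
    storage[side][row[0]].append(row[1])  # the upstream write lands in storage first
    delta_out += delta.on_row(side, row)

print(regular_out == delta_out, regular.state_rows(), delta.state_rows())  # True 4 0
```

The data does not disappear: in this design it lives in the stream storage, which already persists it by key, instead of being duplicated in each job's state backend. That is the intuition behind the CU savings quoted above.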

Data volumes, types, and sources are exploding. Yet AI models often rely on stale offline datasets, harming accuracy and diminishing impact. Businesses need fresh, real-time AI inference.

Realtime Compute for Apache Flink offers full-chain capabilities:

Applications span real-time sentiment analysis, personalized recommendations, anomaly detection, semantic search, and intelligent virtual assistants.

Traditional AI risk control systems face serious limitations. When decisions are based on data that is one hour or even half a day old, fraudulent transactions can go undetected for far too long. For example, a stolen credit card might be used dozens of times before detection—leading to substantial financial losses. These legacy approaches are plagued by delayed detection, high false‑positive rates, and slow response times, making it impossible to block suspicious transactions in real time.

The real‑time AI risk control solution addresses these challenges by integrating multiple behavioral data streams, including login traffic, purchase traffic, payment transactions, system interaction logs, and logistics event flows.

All incoming data streams are ingested into the real‑time computing platform. The system performs feature engineering on the fly, extracting relevant patterns and characteristics in seconds. The processed features are fed directly into AI models, enabling risk control decisions at minute‑level or even second‑level latency.

In practice, this means every behavioral signal—from user login to payment confirmation—can trigger instant analysis, with the model making decisions fast enough to block suspicious activity before damage occurs.
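The per-event feature engineering described above can be sketched as a sliding window per card that is updated on every payment, followed by a model call. In the Python below, the 60-second window, the two features, the linear `score` function standing in for a trained AI model, and the 0.8 blocking threshold are all hypothetical choices for illustration.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Tuple

WINDOW_S = 60.0        # hypothetical feature window length, in seconds
BLOCK_THRESHOLD = 0.8  # hypothetical risk score at or above which a payment is blocked


@dataclass
class Payment:
    card_id: str
    ts: float      # event time in seconds
    amount: float


class CardFeatures:
    """Per-card sliding window, updated on every event to derive fresh features."""

    def __init__(self) -> None:
        self.windows: Dict[str, Deque[Tuple[float, float]]] = defaultdict(deque)

    def update(self, p: Payment) -> Dict[str, float]:
        window = self.windows[p.card_id]
        window.append((p.ts, p.amount))
        while window[0][0] <= p.ts - WINDOW_S:  # evict events that fell out of the window
            window.popleft()
        return {"txn_count": float(len(window)),
                "amount_sum": sum(amount for _, amount in window)}


def score(features: Dict[str, float]) -> float:
    """Stand-in for a trained model: a toy linear rule capped at 1.0."""
    return min(1.0, 0.15 * features["txn_count"] + features["amount_sum"] / 10_000)


def decide(p: Payment, features: CardFeatures) -> str:
    return "BLOCK" if score(features.update(p)) >= BLOCK_THRESHOLD else "ALLOW"


fe = CardFeatures()
# A burst of charges on one card, then a single charge long after the burst.
decisions = [decide(Payment("card-1", t, 1000.0), fe) for t in (0, 5, 10, 15, 200)]
print(decisions)  # ['ALLOW', 'ALLOW', 'ALLOW', 'BLOCK', 'ALLOW']
```

Replaying the stolen-card pattern from the example, rapid repeated charges push the window features up until the fourth payment is blocked, while a payment long after the burst falls outside the window and is allowed.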

At the core of this solution is the self‑developed Flash enhanced vectorized processing engine. When performing vectorization and model inference, it delivers:

Compared with traditional systems, Flash offers superior performance, ensures tighter cost controls, and achieves lower reaction times in high‑volume transaction environments.

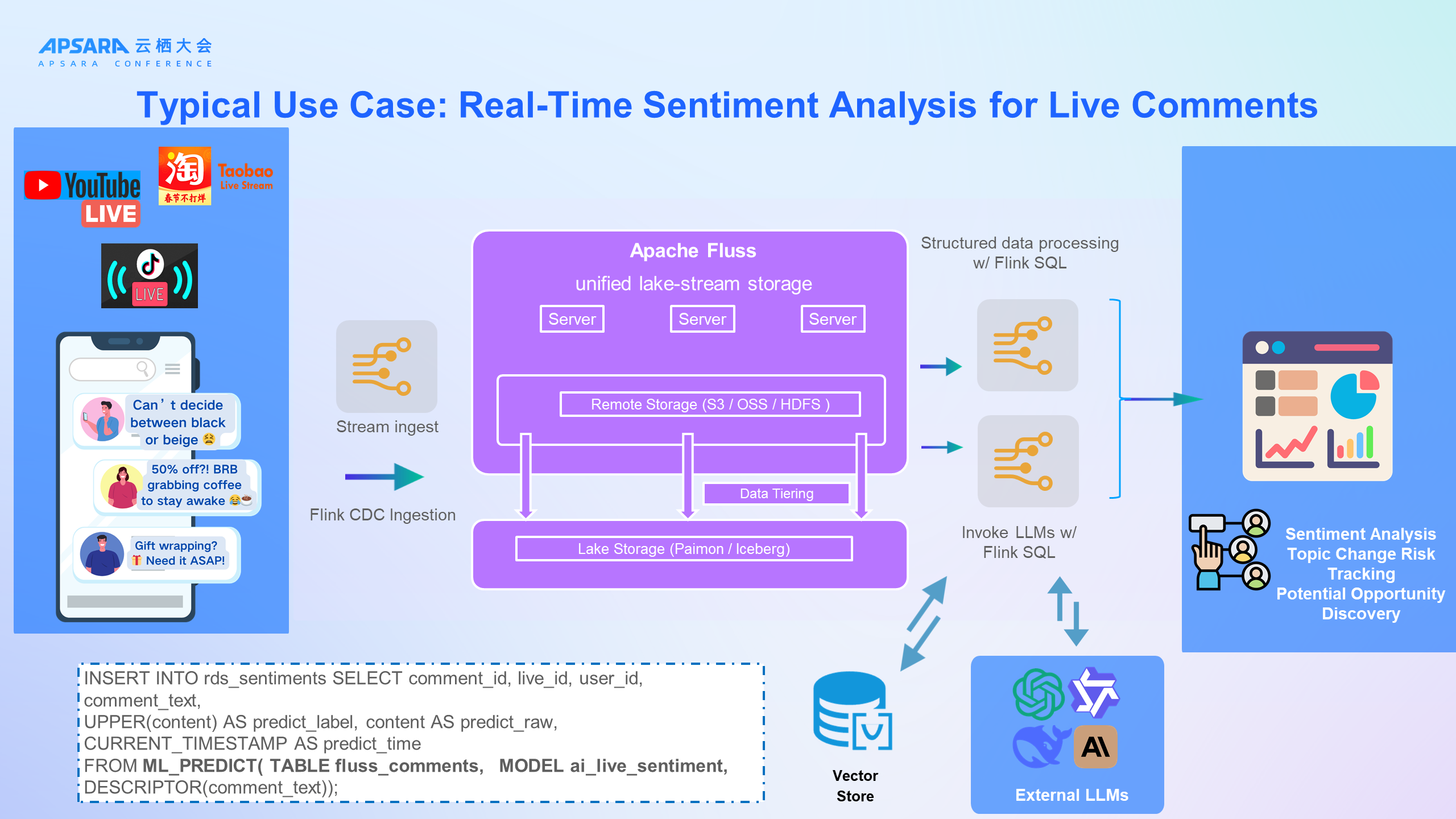

Consider a typical application: real-time sentiment analysis of live-stream comments. By using Flink SQL to invoke LLMs, integrated with external LLM services and Apache Fluss (incubating) unified lake-stream storage, you can perform a wide range of advanced analyses, including:

Flink SQL can also be used to augment structured data, with processed results written to a vector database for downstream use, such as semantic search or recommendation systems.
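Writing results to a vector database means storing one embedding per record and later retrieving records by similarity. The minimal sketch below uses an in-memory list with brute-force cosine similarity; the three-dimensional vectors are hand-written stand-ins for real embedding-model outputs, and `InMemoryVectorStore` is a hypothetical class, not any particular vector database's API.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class InMemoryVectorStore:
    """Toy vector store: exact (brute-force) cosine search over all stored vectors."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float]]] = []

    def upsert(self, doc_id: str, vector: List[float]) -> None:
        # Replace any previous vector for this id, then append the new one.
        self.items = [(i, v) for i, v in self.items if i != doc_id]
        self.items.append((doc_id, vector))

    def search(self, query: List[float], k: int = 2) -> List[str]:
        ranked = sorted(self.items, key=lambda item: cosine(query, item[1]), reverse=True)
        return [doc_id for doc_id, _ in ranked[:k]]


store = InMemoryVectorStore()
# Hand-made vectors; a real pipeline would get these from an embedding model.
store.upsert("comment-1", [0.9, 0.1, 0.0])  # "battery life is great"
store.upsert("comment-2", [0.0, 0.2, 0.9])  # "delivery was late"
store.upsert("comment-3", [0.8, 0.3, 0.1])  # "battery drains fast"
print(store.search([1.0, 0.2, 0.0], k=2))   # ['comment-1', 'comment-3']
```

Production vector databases replace the linear scan with approximate nearest-neighbor indexes, but the contract (upsert vectors, query by similarity) is the same.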

A sample SQL query shows how the ML_PREDICT function can call an AI model directly within a streaming pipeline to perform real-time sentiment analysis, showcasing the integration between Flink SQL, AI models, and Fluss for high-velocity data intelligence.

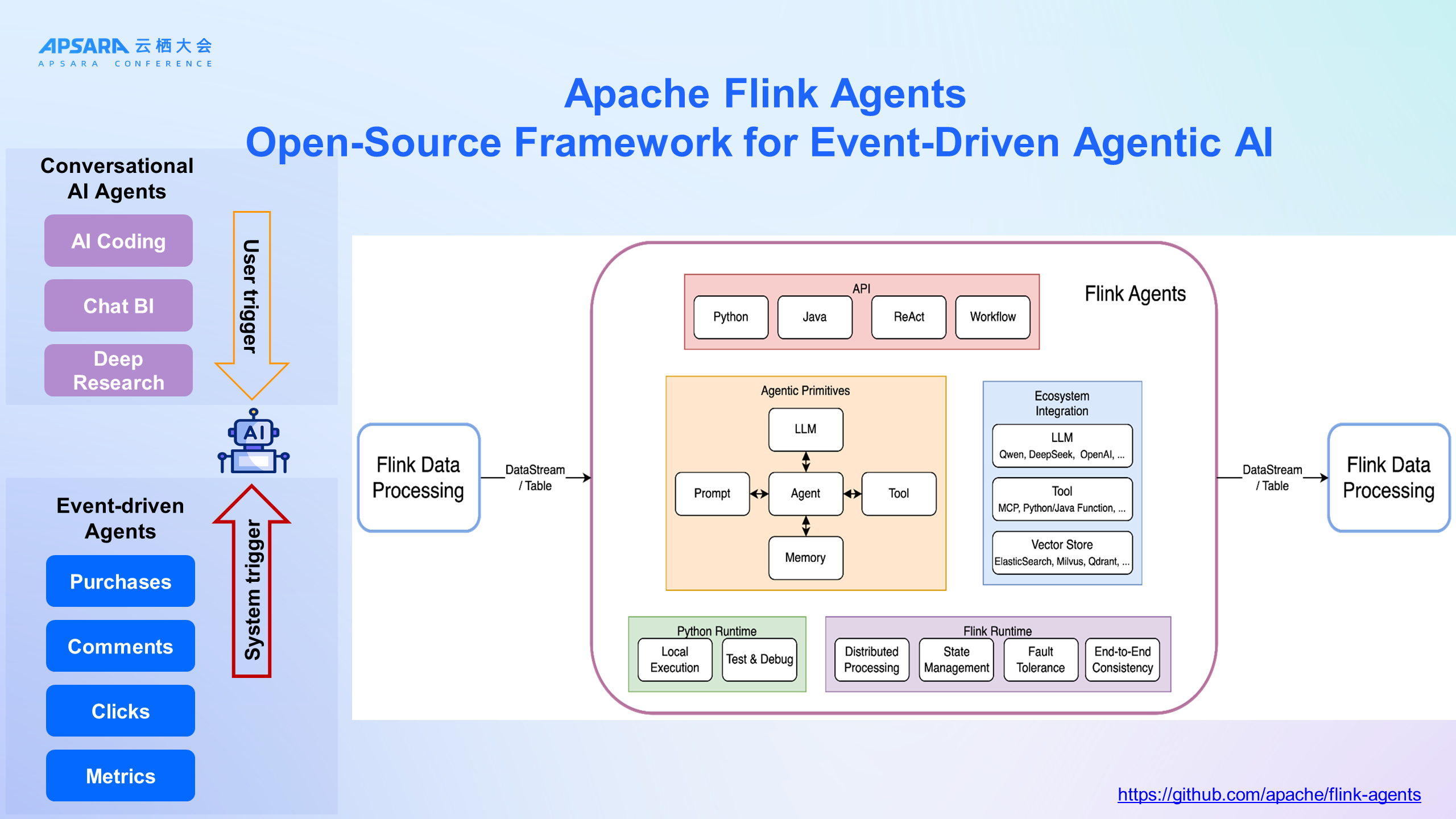
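The exact signature of `ML_PREDICT` is not reproduced here, but what happens per record can be sketched in Python: each comment is enriched with a model-predicted label. `keyword_stub_model` is a hypothetical stand-in for an LLM endpoint, and the micro-batching in `predict_stream` is an assumed design choice to amortize per-call latency, not a description of how `ML_PREDICT` is implemented.

```python
from typing import Callable, Dict, Iterable, Iterator, List

# Hypothetical model interface: one call labels a whole batch of texts.
Model = Callable[[List[str]], List[str]]


def keyword_stub_model(texts: List[str]) -> List[str]:
    """Stand-in for an LLM endpoint; a real pipeline would call a hosted model here."""
    labels = []
    for text in texts:
        lower = text.lower()
        if any(w in lower for w in ("late", "broken", "bad")):
            labels.append("negative")
        elif any(w in lower for w in ("love", "great", "nice")):
            labels.append("positive")
        else:
            labels.append("neutral")
    return labels


def _label_batch(batch: List[Dict[str, str]], model: Model) -> Iterator[Dict[str, str]]:
    labels = model([c["text"] for c in batch])
    for comment, label in zip(batch, labels):
        yield {**comment, "sentiment": label}  # enrich, keeping the original fields


def predict_stream(comments: Iterable[Dict[str, str]], model: Model,
                   batch_size: int = 2) -> Iterator[Dict[str, str]]:
    """Attach a model-predicted `sentiment` field to every comment.

    Calls are grouped into small batches to amortize per-request latency. On an
    unbounded stream a real operator would also flush on a timer, not only at
    the end of input as this sketch does.
    """
    buffer: List[Dict[str, str]] = []
    for comment in comments:
        buffer.append(comment)
        if len(buffer) == batch_size:
            yield from _label_batch(buffer, model)
            buffer = []
    if buffer:
        yield from _label_batch(buffer, model)


comments = [{"user": "u1", "text": "Love this product!"},
            {"user": "u2", "text": "Delivery was late"},
            {"user": "u3", "text": "What size is it?"}]
for enriched in predict_stream(comments, keyword_stub_model):
    print(enriched["user"], enriched["sentiment"])
```

Swapping `keyword_stub_model` for a real model client changes nothing else in the pipeline, which mirrors the separation between the SQL query and the registered model.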

Apache Flink Agents, a new sub-project of the Apache Flink community initiated by Alibaba Cloud and others, is an open-source framework for building event-driven AI agents. It supports scenarios such as Chat BI and deep research, covering both conversational and reactive AI.

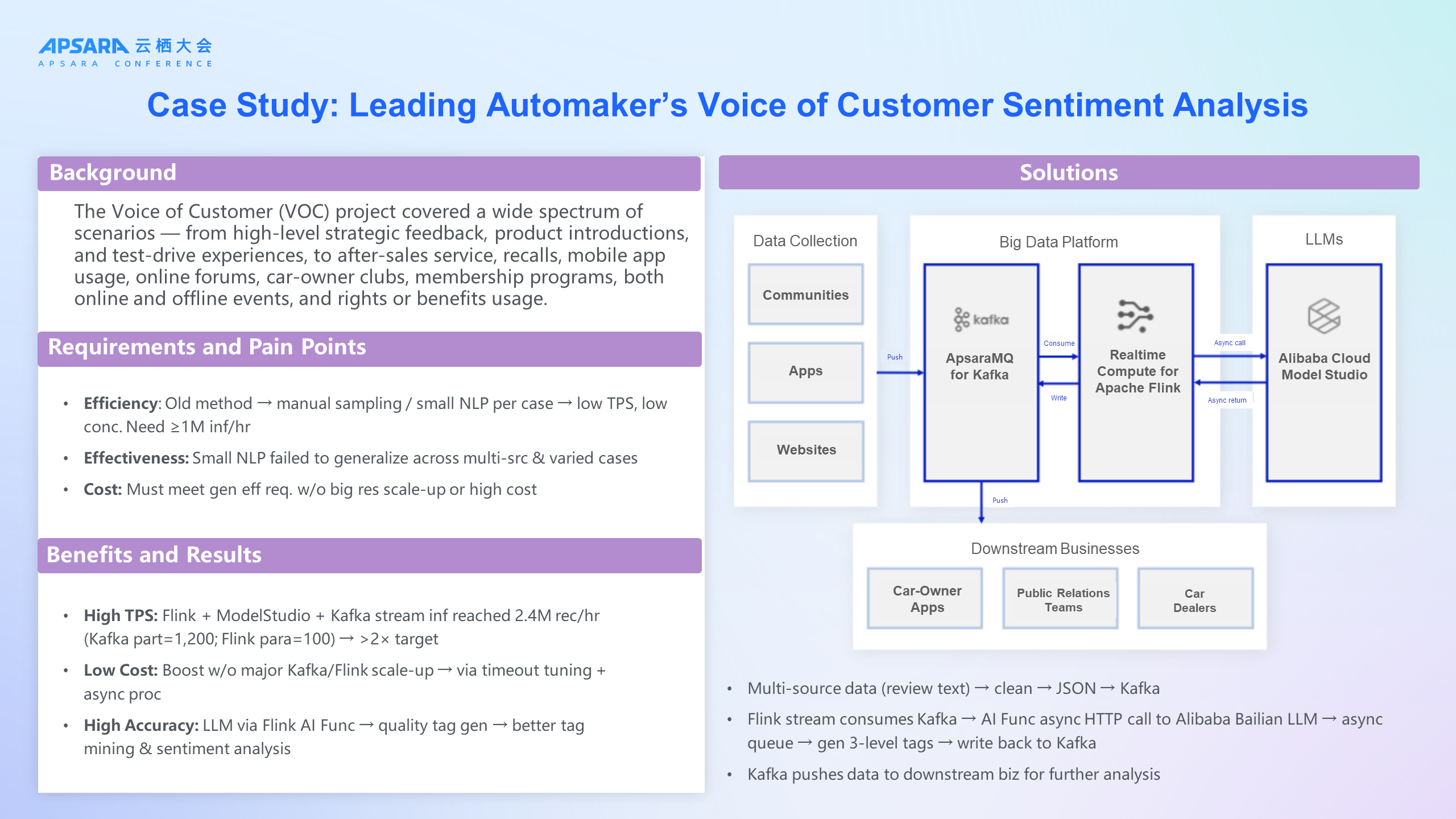
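The event-driven style that Flink Agents targets can be illustrated with a toy event loop in which actions subscribe to event kinds and may emit further events, so the agent reacts to incoming data rather than waiting for a user prompt. `EventDrivenAgent`, `Event`, and the stubbed tool step are hypothetical and do not reflect the Flink Agents API.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List


@dataclass
class Event:
    kind: str
    payload: dict = field(default_factory=dict)


Action = Callable[[Event], List[Event]]


class EventDrivenAgent:
    """Toy event loop: actions subscribe to event kinds and may emit new events."""

    def __init__(self) -> None:
        self.actions: Dict[str, List[Action]] = {}

    def on(self, kind: str, action: Action) -> None:
        self.actions.setdefault(kind, []).append(action)

    def run(self, initial: Event) -> List[Event]:
        """Process events in arrival order until none are left; return the 'output' events."""
        queue: Deque[Event] = deque([initial])
        outputs: List[Event] = []
        while queue:
            event = queue.popleft()
            if event.kind == "output":
                outputs.append(event)
                continue
            for action in self.actions.get(event.kind, []):
                queue.extend(action(event))
        return outputs


agent = EventDrivenAgent()
# Reactive step: an order-delay event triggers a (stubbed) lookup "tool call".
agent.on("order_delayed",
         lambda e: [Event("tool_result", {"order": e.payload["order"], "eta_days": 2})])
# Next step: turn the tool result into a customer-facing message.
agent.on("tool_result",
         lambda e: [Event("output", {"msg": f"Order {e.payload['order']} arrives in {e.payload['eta_days']} days"})])

result = agent.run(Event("order_delayed", {"order": "A-17"}))
print(result[0].payload["msg"])  # Order A-17 arrives in 2 days
```

In a streaming deployment, each record arriving from a source would become the initial event of one such run.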

In a real‑time Voice of Customer (VOC) analysis project for a top automobile manufacturer, the application covered a wide spectrum of scenarios — from high‑level strategic feedback, product introductions, and test‑drive experiences, to after‑sales service, recalls, mobile app usage, online forums, car‑owner clubs, membership programs, both online and offline events, and rights or benefits usage.

LLMs were employed to structure and label both speech and text data, enabling sentiment analysis, information extraction, VOC annotation, and tag mining. These capabilities supported use cases such as public opinion monitoring, activity introductions, and after‑sales issue tracking.
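Using LLMs to structure and label text usually means asking the model for JSON and validating it before it reaches downstream tables, because free-form model output can be malformed. The sketch below is one way to do that under stated assumptions: the field names (`sentiment`, `topic`, `tags`), the topic taxonomy, and the dead-letter handling are hypothetical, not the schema used in the project described above.

```python
import json
from typing import Dict, List, Tuple

ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}
# Hypothetical VOC topic taxonomy, loosely based on the scenarios listed above.
ALLOWED_TOPICS = {"test_drive", "after_sales", "recall", "mobile_app", "events", "other"}


def parse_llm_label(raw: str) -> Dict[str, object]:
    """Validate one LLM response that is expected to be a JSON object.

    Raises ValueError when the response is unusable, so the caller can route it
    to a dead-letter output instead of writing it to downstream tables.
    """
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    sentiment = str(data.get("sentiment", "")).strip().lower()
    if sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unknown sentiment: {sentiment!r}")
    topic = str(data.get("topic", "other")).strip().lower()
    if topic not in ALLOWED_TOPICS:
        topic = "other"  # keep the record, but bucket unexpected topics
    raw_tags = data.get("tags", [])
    tags = [str(t).strip().lower() for t in raw_tags if str(t).strip()] if isinstance(raw_tags, list) else []
    return {"sentiment": sentiment, "topic": topic, "tags": tags}


def split_valid(raw_outputs: List[str]) -> Tuple[List[Dict[str, object]], List[str]]:
    """Separate usable labels from responses that need a retry or manual review."""
    good: List[Dict[str, object]] = []
    dead_letter: List[str] = []
    for raw in raw_outputs:
        try:
            good.append(parse_llm_label(raw))
        except ValueError:
            dead_letter.append(raw)
    return good, dead_letter


outputs = ['{"sentiment": "Negative", "topic": "after_sales", "tags": ["wait time", " service "]}',
           'Sure! Here is the JSON you asked for.',
           '{"sentiment": "angry", "topic": "recall"}']
good, dead = split_valid(outputs)
print(good)       # [{'sentiment': 'negative', 'topic': 'after_sales', 'tags': ['wait time', 'service']}]
print(len(dead))  # 2
```

Normalized labels like these are what make downstream tag mining and trend monitoring reliable, since every record then uses the same vocabulary.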

The implementation pipeline worked as follows:

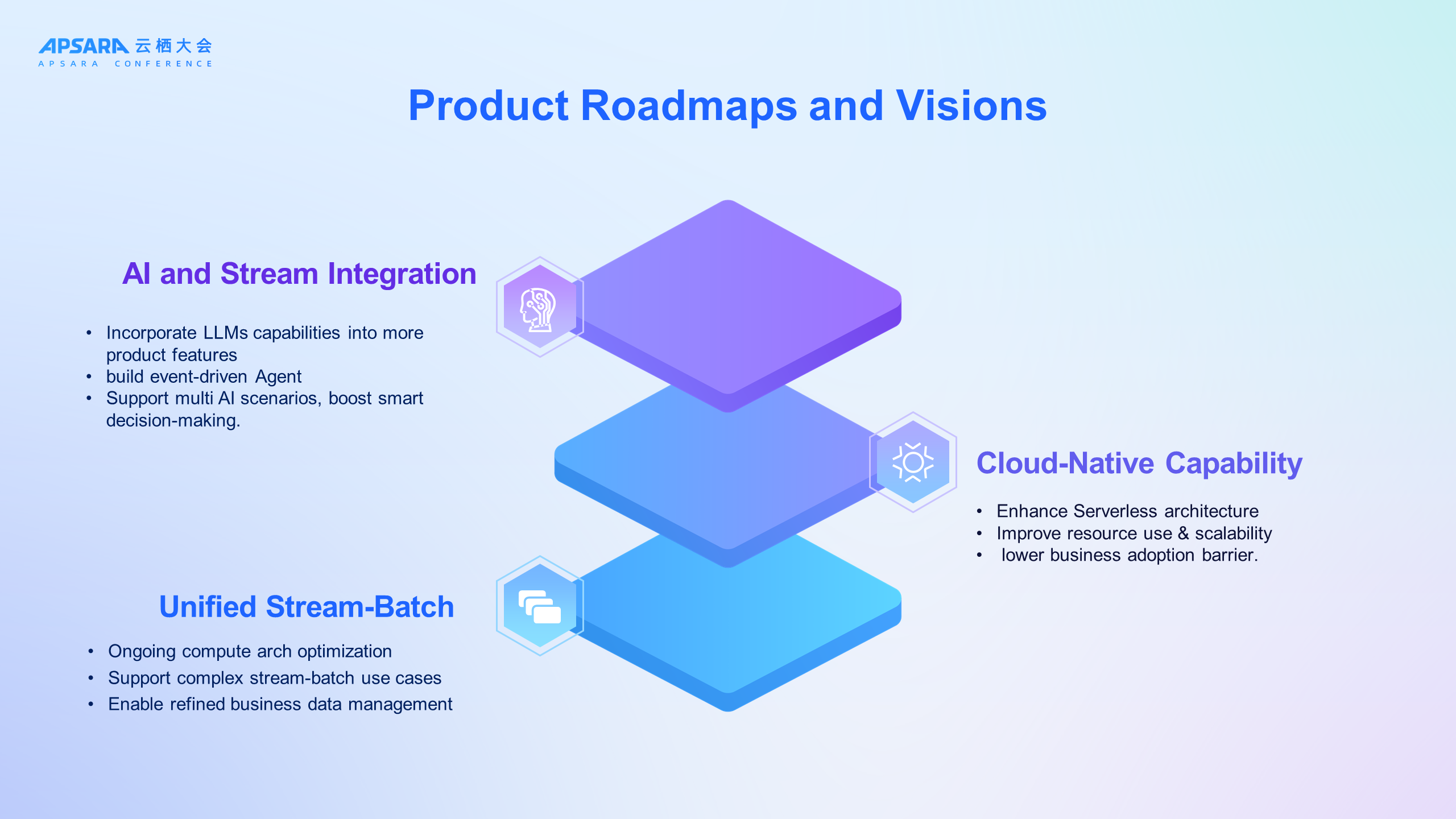

Alibaba Cloud will deepen AI–stream processing integration, focusing on advanced real-time agent platforms and expanding into areas such as live video understanding for industrial, community, and public safety use cases.

Serverless storage-compute optimization will enhance elasticity and efficiency, while the unified stream-batch architecture will cover all timeliness tiers — from seconds to days — unlocking broader scenario value.

The latest evolution of Realtime Compute for Apache Flink marks a new stage in stream processing. Incremental computing achieves the balance between timeliness and cost, Streaming Storage for Apache Fluss builds a truly integrated lake-stream architecture, and AI Functions usher in a new era of real-time intelligence.

In the AI-driven future, real-time isn’t just infrastructure; it’s the engine of intelligent transformation. Alibaba Cloud is helping enterprises unlock the value of their data instantly and apply AI in real time.

Real-time AI is the future — not just a trend, but an inevitable choice for digital transformation. With continued innovation, Realtime Compute for Apache Flink is poised to redefine intelligent, instantaneous data processing.
