By Yucheng from Idle Fish Technology

Idle Fish is a trading application for unwanted items and a leader in the second-hand trading market. Idle Fish has grown for seven years, from its birth in 2014 to now. Its rapid growth is responsible for nearly ten billion exposure, page clicks, and page views every day. The huge scale of the data also brings many real-time problems:

We created a real-time data warehouse for Idle Fish to solve these problems.

We investigated the design and architecture of various data warehouses inside and outside the Group before designing Idle Fish's real-time data warehouse. Some of them are of relatively old architecture designs, and others are innovative solutions brought about through technological breakthroughs. This article classifies the old and new designs of these real-time data warehouses:

First of all, we abandoned the relatively old solution. Due to the rapid technological innovation, many excellent products have emerged for us to use. In addition, based on Idle Fish's business needs, we finally chose Hologres[1] + Blink[2] to build a real-time data warehouse.

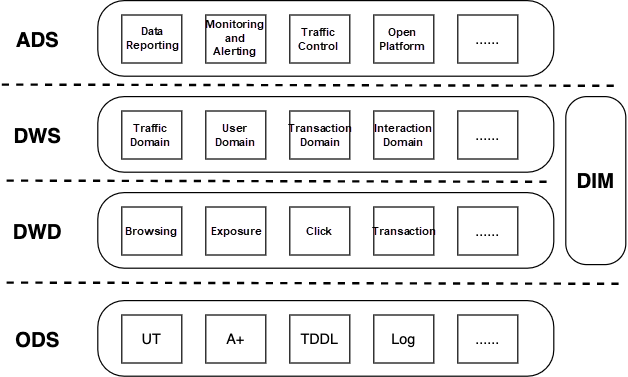

Considering the computing cost, ease of use, reusability, and consistency, we must avoid the chimney-style development model and build real-time data warehouses by designing a middle layer. The chimney-style architecture has many disadvantages. It cannot coordinate with other systems effectively, which is not conducive to business maintenance, and the later maintenance cost is very high. The following figure shows the architecture diagram of the data model design of the Idle Fish real-time data warehouse.

As seen in the figure above, the data model of the real-time data warehouse is divided into four layers from the bottom to the top: ODS, DWD, DWS, and ADS. Data processing can be divided and completed in each layer through a multi-layer design. For example, the DWD layer completes the data filtering, cleaning, standardization, and masking. Then, the DWS layer processes the data summarized by common multi-dimensional indicators, improving the code reuse rate and overall production efficiency. At the same time, the types of tasks handled at each layer are similar, and a unified technical solution can be adopted to optimize performance and make the technical architecture of the data warehouse more concise. The following part is a brief introduction to these four layers:

1. ODS (Operational Data Store)

This layer is closest to the data source, and the amount of data to be stored is the largest and most primitive. Their data formats are virtually inconsistent for many data sources. After unified standardization, regular data can be obtained, and the data in the data source can be loaded into the ODS layer after extraction, cleaning, and transmission.

2. DWD (Data Warehouse Detail)

This layer isolates the business layer, and the data warehouse and mainly performs some data cleaning and standardization operations on the ODS layer. It can divide the data according to different behavior dimensions. For example, this article divides the data into different dimensions, such as browsing, exposure, click, and transaction. These different dimensions can provide more fine-grained data services to upper-layer callers.

3. DWS (Data Warehouse Service)

This layer moderately summarizes each domain and builds the Data Warehouse Summary layer mainly based on the concept of data domain + business domain. It is different from offline data warehouses because the summary layer of real-time data warehouses is divided into a light summary layer and a high summary layer. For example, data at the light summary layer is written into ADS for complex OLAP query scenarios of frontend products, meeting the requirements of self-service analysis and report output.

4. ADS (Application Data Store)

This layer is the application layer mainly built for specific requirements. It provides external services through the RPC framework, such as data report analysis and display, monitoring and alerting, traffic control, open platform, and other applications mentioned in this article.

5. DIM (Dimension)

DIM is very important in real-time computing and is also a major focus of maintenance. Dimension tables need to be updated in real-time, and downstream calculations are based on the latest dimension tables. For example, Idle Fish's dimension tables in the real-time data warehouse involve commodity tables, user tables, crowd tables, scene tables, and bucket tables of Idle Fish.

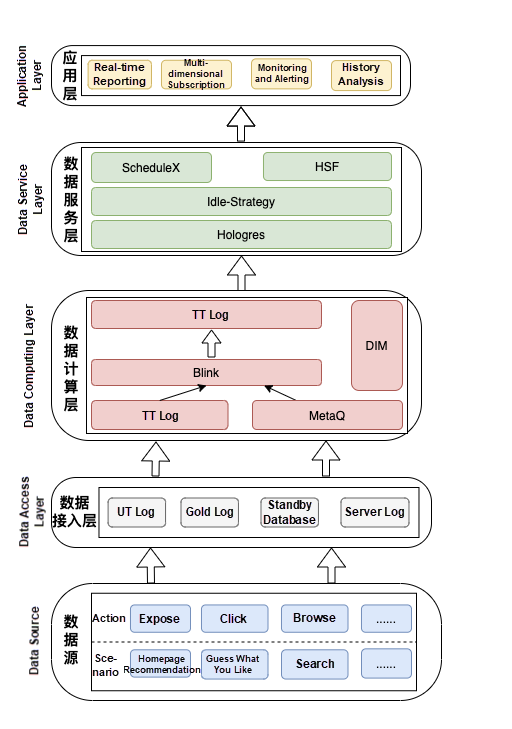

The data model of the real-time data warehouse of Idle Fish is dissected, and the design ideas and practical application of all model layers are introduced in detail. The following figure is a technical architecture diagram based on the data model. It is divided into five layers, including the data source, data access layer, data computing layer, data service layer, and application layer.

The data source part is the base of the entire real-time data warehouse. Idle Fish has many scenarios, such as homepage recommendation, Guess What You Like, and search. In these scenarios, different user behaviors occur. Behavior logs, such as exposure, clicks, and browsing are acquired by the upper-layer storage tool. For example, the data access layer in the figure above can connect data sources to UT[3] logs, gold logs, standby databases, or server logs for storage.

Data cleaning and standardization is the core process of building a real-time data warehouse. The data computing layer uses the real-time processing capability of Blink to clean, supplement, and standardize data in different formats and store them in TT[4]. The data service layer is the gateway layer of real-time data warehouses. It provides data services and API gateway capabilities after the logical processing of real-time data.

The application layer is closest to users. This layer is built for specific needs. It can display real-time reports on data in various dimensions, monitor and alert online abnormal traffic, control traffic in commodity domains, and open relevant interfaces for other applications.

The overall technical architecture is shown in the figure above. The key to building a real-time data warehouse is the ability of real-time data processing and real-time interaction. Idle Fish generates nearly ten billion gigabytes of tracking data and server logs every day. Idle Fish faces the following key difficulties when building a real-time data warehouse:

First of all, knowing how to process data stably and efficiently is an urgent problem that needs to be solved. We chose Blink when faced with computing and massive data processing. Blink is a stream computing framework within the Group and a new generation of stream computing engine that is re-encapsulated based on the open-source framework Flink.

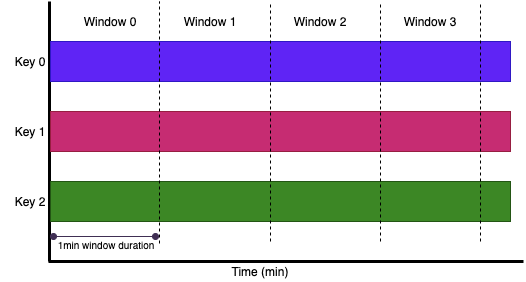

When we display real-time reports, we aggregate real-time data at the minute-level based on the performance and situation. This problem can be solved efficiently using Blink’s rolling window aggregation. In rolling windows, each element is assigned to a window with a specified size. Rolling windows are fixed in size and do not overlap. For example, if a one-minute rolling window is specified, the data of the infinite stream will be divided into windows of [0:00 - 0:01), [0:01, 0:02), [0:02, 0:03), etc., according to time. The specifications of the rolling window are shown in the following figure:

When writing a minute-level Blink task, you only need to define a rolling window in the GROUP BY clause. The pseudo-code is listed below:

GROUP BY TUMBLE(<time-attr>, <size-interval>)The parameter in the SQL statement above must be a valid time attribute field in the stream. Time attributes include Processing Time and Event Time.

According to the situation of the project, we use Event Time since we aggregate the data at the time of the tracking event. Another advantage of Event Time is that we can also maintain the consistency of results when rerunning tasks for a certain time.

With Blink, we can process massive amounts of data efficiently in real-time. How can we avoid the storage and computing drawbacks of traditional OLAP when data analysis scenarios are complex and interact frequently? When looking for a real-time tool featuring service/analysis integration, we found a useful tool, which was Hologres (Holographic + Postgres). It supports multi-dimensional analysis and business exploration with high concurrency and low latency for trillions of data. It can use existing BI tools to analyze all data easily and economically. It can still maintain the ability of second-level response when facing PB-level data and is easy to use.

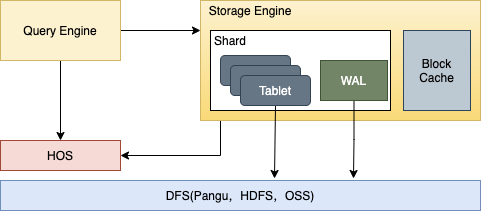

Hologres is built based on the design pattern of storage/computing separation. All data is stored in a distributed file system. The overall architecture of the storage engine is shown in the following figure:

Each shard constitutes a storage management unit and a recovery unit. The figure above shows the basic architecture of a shard. A shard consists of multiple tablets that share one log (WAL – Write-Ahead Log). All new data is inserted in append-only. Many files will accumulate in each tablet when data is written continuously. When small files in a tablet accumulate to a certain number, the storage engine will merge the small files in the background, which can reduce the use of system resources and the number of merged files. As a result, the reading performance is improved, and real-time and efficient analysis becomes possible.

The solution for real-time processing, storage, and analysis of massive data is described in detail above. How is the problem of connection to heterogeneous data sources handled? Idle Fish has a large number of scenarios and complex businesses. When handling heterogeneous data sources, we use domain dimension statistics to unify various data source fields in different domains. When Blink cleans data, it combines scenario, crowd, bucket, and other dimension information to solve the heterogeneous data source problem.

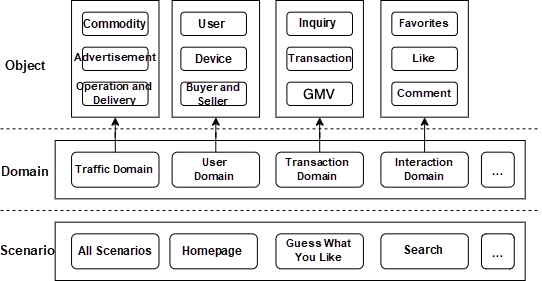

As shown in the figure above, the domain module is divided into traffic domain, user domain, transaction domain, and interaction domain. The corresponding objects are abstracted in each domain. For example, the traffic domain contains products, advertisements, and operations. The user domain contains users, devices, sellers, and buyers. The transaction domain contains inquiries, transactions, and GMV. The interaction domain contains favorites, likes, and comments.

When designing the solution for heterogeneous data sources, the need to build an open platform is taken into account. Therefore, the data access layer is abstracted into interfaces of different domains to provide access services, and the statistical interfaces of each dimension are opened in the application layer. By doing so, when there are business needs to be connected to the real-time data warehouse, you can access it quickly through the abstract interface opened at the data layer and application layer, regardless of the details in the middle of the entire procedure. This can reduce the development cycle significantly.

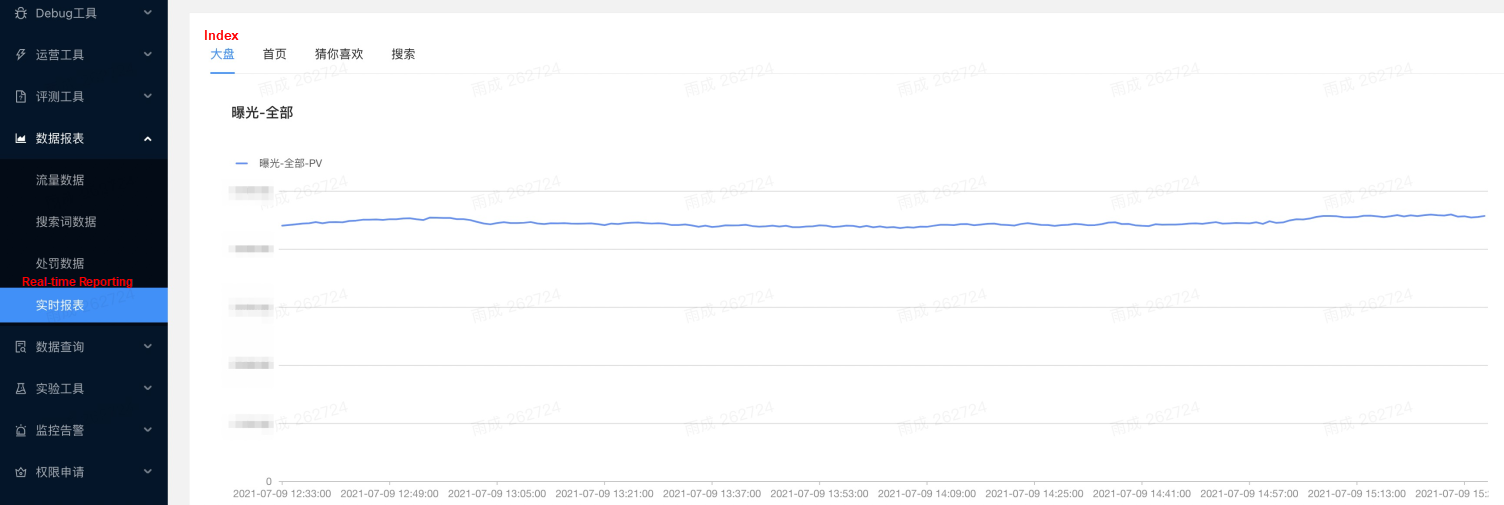

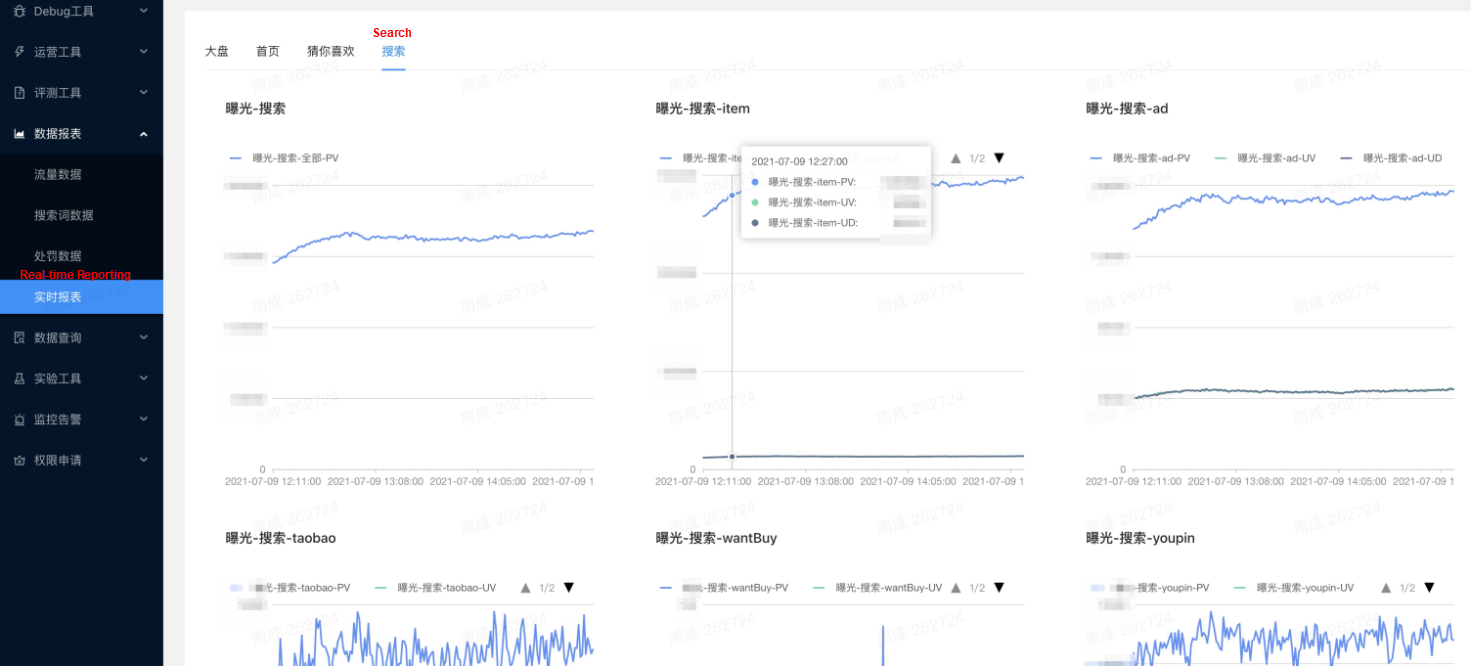

The real-time data warehouse built in this article has been applied in real-time reports, exposure exception feedback, and other aspects. Through the platform, you can browse the system dashboard, homepage, Guess What You Like, search, and other scenarios in real-time, improving the richness of real-time data in various scenarios of Idle Fish. Some results have been achieved through the application of real-time data warehouses:

Our development of real-time data warehouses is still in the early stage. We will increase our efforts to invest in research and development in the future. This way, real-time data warehouses can be applied in more scenarios. We will create a real-time, comprehensive, and stable traffic application platform. In the future, we will explore and optimize the following aspects:

[1] Hologres: Hologres is a real-time interactive and analytical service developed by Alibaba Cloud. Hologres is compatible with PostgreSQL 11 and integrates with big data ecosystems seamlessly. It can also analyze and process petabytes of data with high concurrency and low latency.

[2] Blink: Blink is an internal product created by Alibaba's Real-Time Compute Department by improving the open-source Apache Flink project.

[3] UT: UserTrack mainly refers to various user behavior and operation logs from a wireless client. It is the foundation of all operation reports based on user behavior analysis.

[4] TT: TimeTunnel is an efficient, reliable, and scalable messaging platform.

How Is SWAK, the Decoupling Tool for Idle Fish Business Code, Implemented?

56 posts | 4 followers

FollowAlibaba Cloud Community - September 17, 2021

Alibaba Developer - May 21, 2021

ApsaraDB - October 24, 2025

Apache Flink Community China - September 27, 2020

Alibaba Cloud MaxCompute - January 22, 2021

Apache Flink Community China - November 12, 2021

56 posts | 4 followers

Follow Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn MoreMore Posts by XianYu Tech