In the past few weeks, OpenClaw (formerly Clawdbot) has become a cautionary tale for what happens when powerful AI agents meet real-world prompt injection. Security researchers showed that a single crafted email or web page was enough to trick exposed OpenClaw instances into exfiltrating private SSH keys and API tokens, all without any direct access to the underlying systems. In other experiments, hidden instructions embedded in messages or Moltbook-style posts quietly hijacked agents into running unsafe shell commands, reading sensitive files, or leaking chat logs and secrets scattered across connected tools. These incidents make one thing clear: prompt injection is no longer a theoretical LLM risk—it is already being weaponized against popular AI agents in the wild, and any AI application that reads untrusted text and holds sensitive permissions is exposed to the same class of attacks.

According to the OWASP Top 10 for LLM Applications, Prompt Injection (LLM01) is ranked as the #1 security risk for large language model (LLM) systems—attackers are actively exploiting carefully crafted inputs to bypass system safeguards, trick models into revealing internal instructions, exfiltrate user data, or even generate prohibited content.

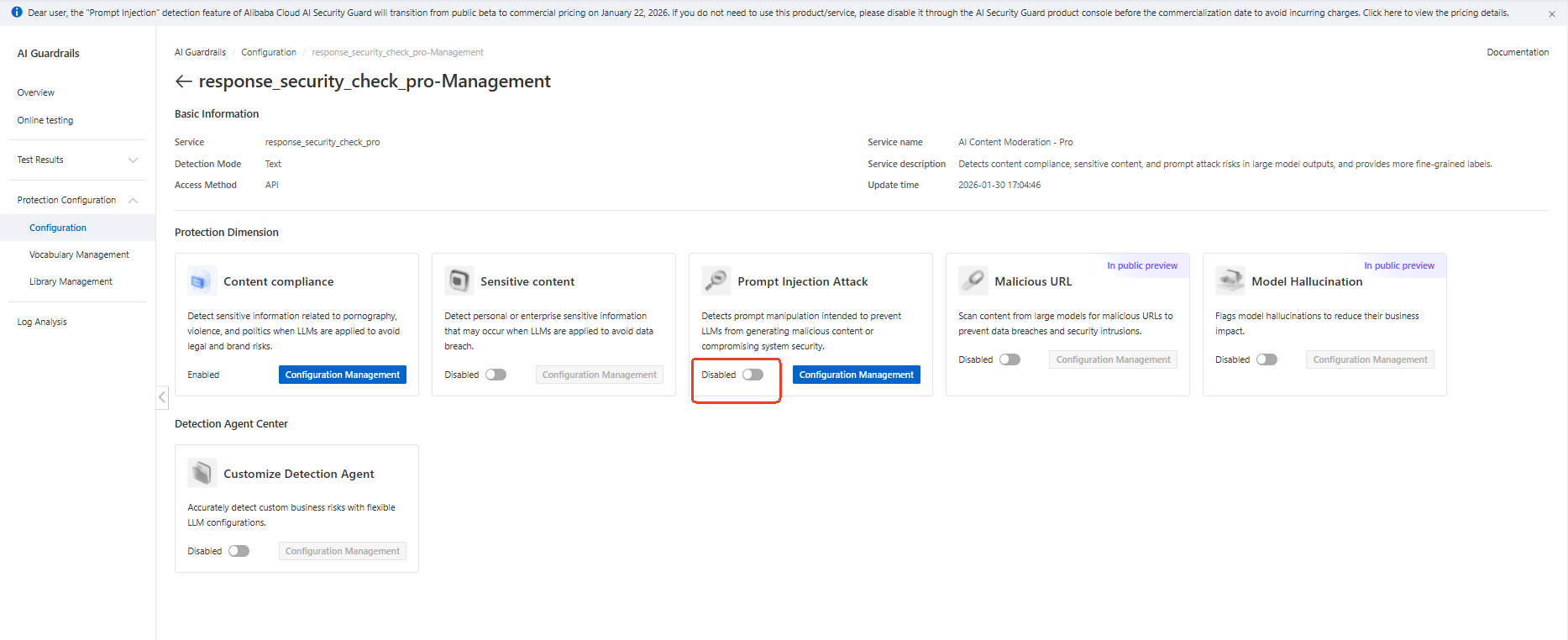

Now, you can defend against this critical threat across the entire input-output pipeline. Alibaba Cloud AI Guardrails provides dedicated, real-time detection of prompt-based attacks—accurately identifying typical evasion techniques such as jailbreaking, persona-based prompt injection, and instruction hijacking.

Alibaba Cloud AI Guardrails comprehensively defends against injection attacks targeting generative AI by accurately identifying adversarial behaviors such as jailbreak prompts, role-play inducements, and instruction hijacking. This builds an "immune defense" for AI systems.

● Model Jailbreaking: Jailbreaking is the overarching objective of these and other attack vectors—it involves crafting prompts (often over multi-turn conversations or via clever combinations of inputs) that bypass or break through the model's safety and alignment protocols, ultimately causing it to generate content it ordinarily would restrict.

● Role-Play Inducements: The model is coaxed into adopting a persona, such as pretending to be someone with fewer restrictions (e.g., the "Grandma Exploit," in which the model impersonates a grandmother telling stories). This can trick the model into disclosing sensitive or restricted information, such as activation keys or instructions for prohibited actions.

● Instruction Hijacking: The attacker appends directives like "ignore previous instructions" to the prompt, in an attempt to override system-level presets or guidelines set for the model.

AI applications are vulnerable to prompt-based attacks. Attackers craft malicious prompts to trick models into leaking sensitive data or performing unintended actions—Alibaba Cloud AI Guardrails helps you defend against these threats.

● Prevent attackers from bypassing AI safety mechanisms using carefully crafted prompts.

● Prevent sensitive information leakage—such as system instructions or user data—induced through malicious prompting.

● Reduce compliance and operational risks arising from inadequate prompt-based detection capabilities that may violate AI regulations in multiple jurisdictions.

● Instruction interaction security protection for AI Agents

● Adversarial attack defense for open-domain dialogue systems

● Permission control for third-party plugin calls

● Flexible and Fast Integration

Integrates easily through an All-in-One API, where a single call performs omni-modal detection. You can enable mitigation capabilities as needed for simple and efficient integration. The service is also natively integrated with platforms such as Alibaba Cloud Model Studio, AI Gateway, and WAF for one-click enablement. It is listed on the Dify plugin marketplace and adapts to mainstream AI application architectures, helping you launch applications quickly.

● End-to-End Protection

Creates a security loop from input to output. This feature addresses key challenges for large models in business scenarios, such as content security, external attacks, privacy leaks, and uncontrolled outputs.

● Long-Context Awareness

Supports threat detection in single-turn and multi-turn Q&A scenarios. It incorporates historical conversation data to detect cross-turn induction, semantic drift, and jailbreaking behaviors. This provides an accurate understanding of the full conversational intent and prevents misjudgments caused by fragmented context.

● Meets Compliance Requirements

Complies with explicit regulatory requirements, including Section 2.6 of Malaysia’s AI Governance Framework, Indonesia’s draft AI provisions under the Electronic Information and Transactions Law (UU ITE), and Sections 3.3–3.4 of the U.S. NIST AI 100-2e (2025) on mitigating direct and indirect prompt-based attacks.

● Billing Model: Charged per successful detection request

● Price: $6.00 USD per 10,000 requests ( $0.0006 per call).

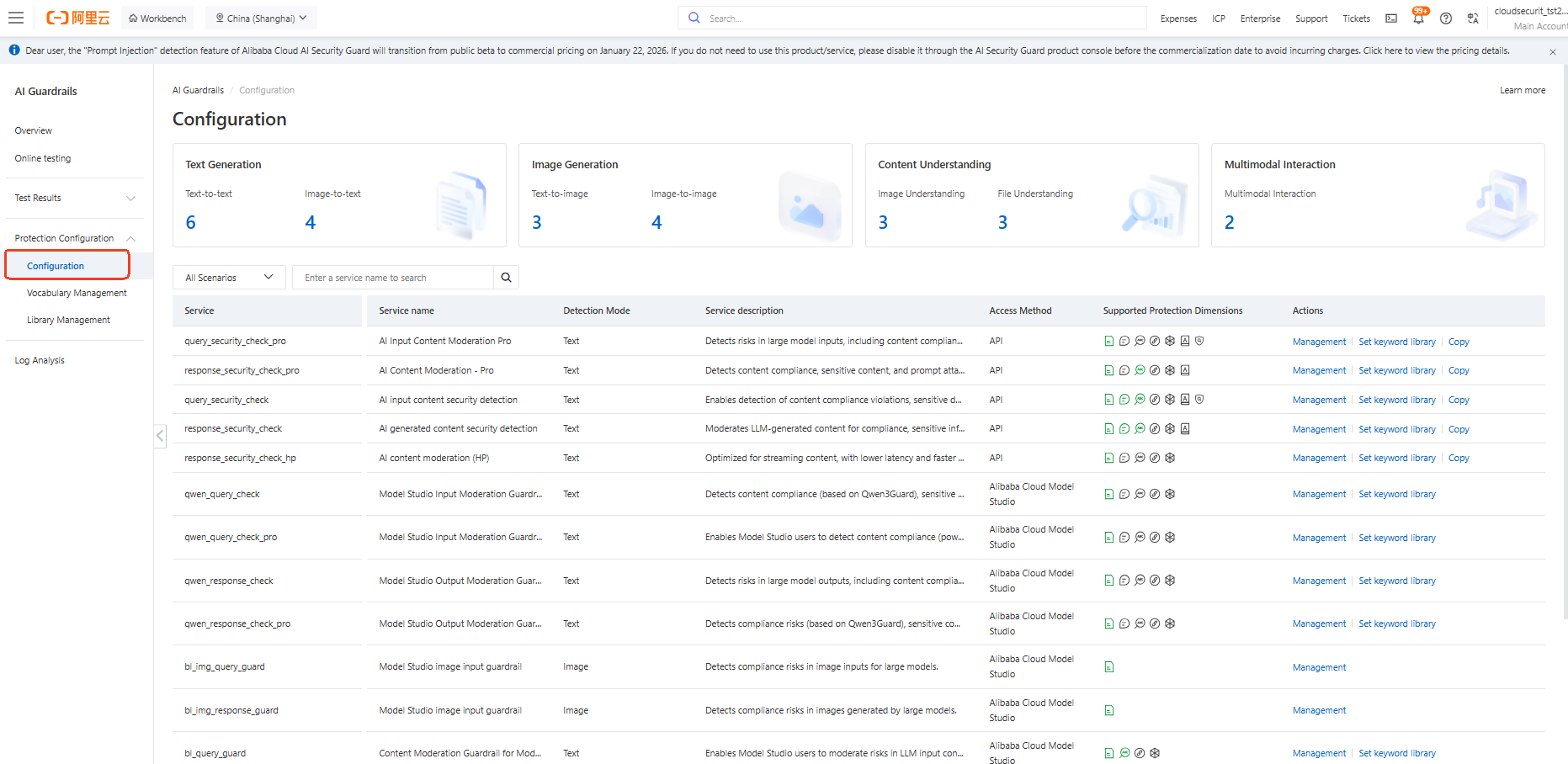

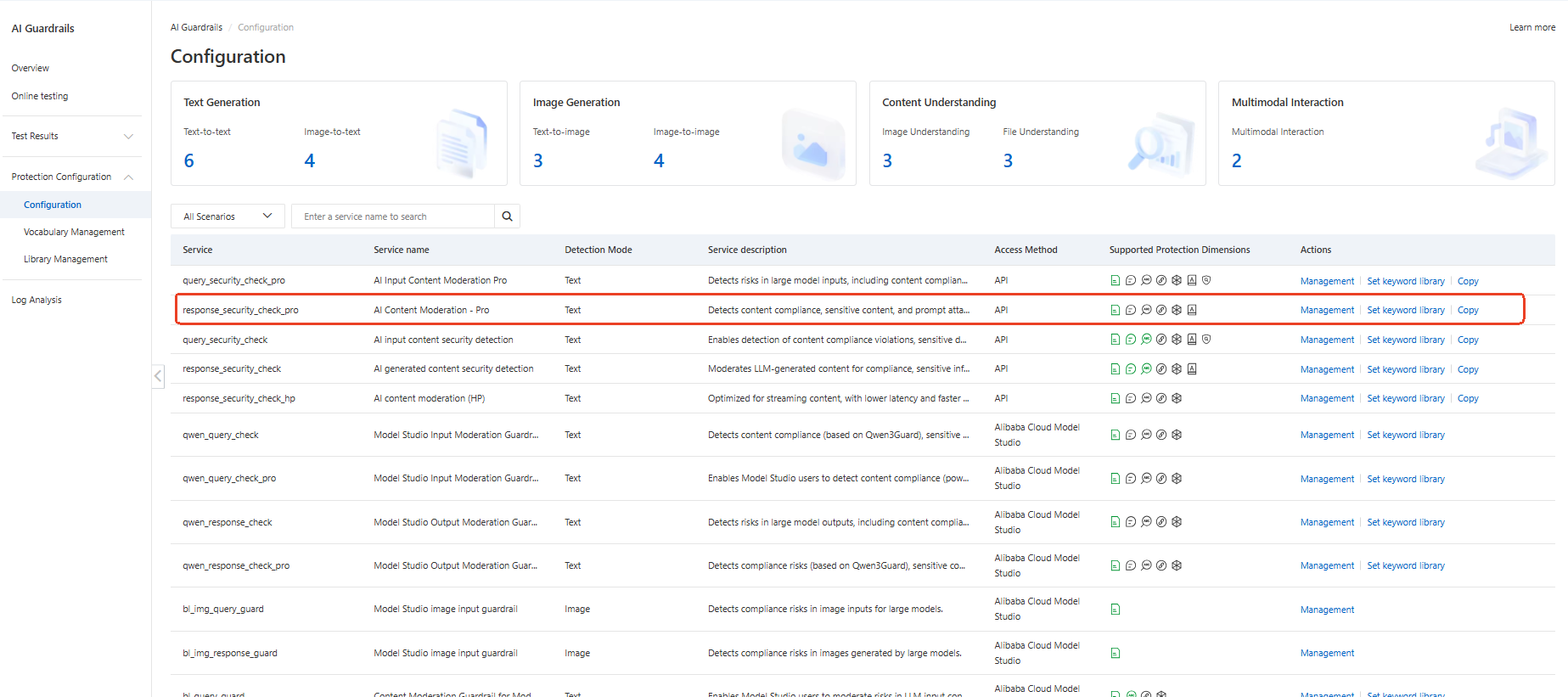

● Step 1: Log in to AI Guardrails management console

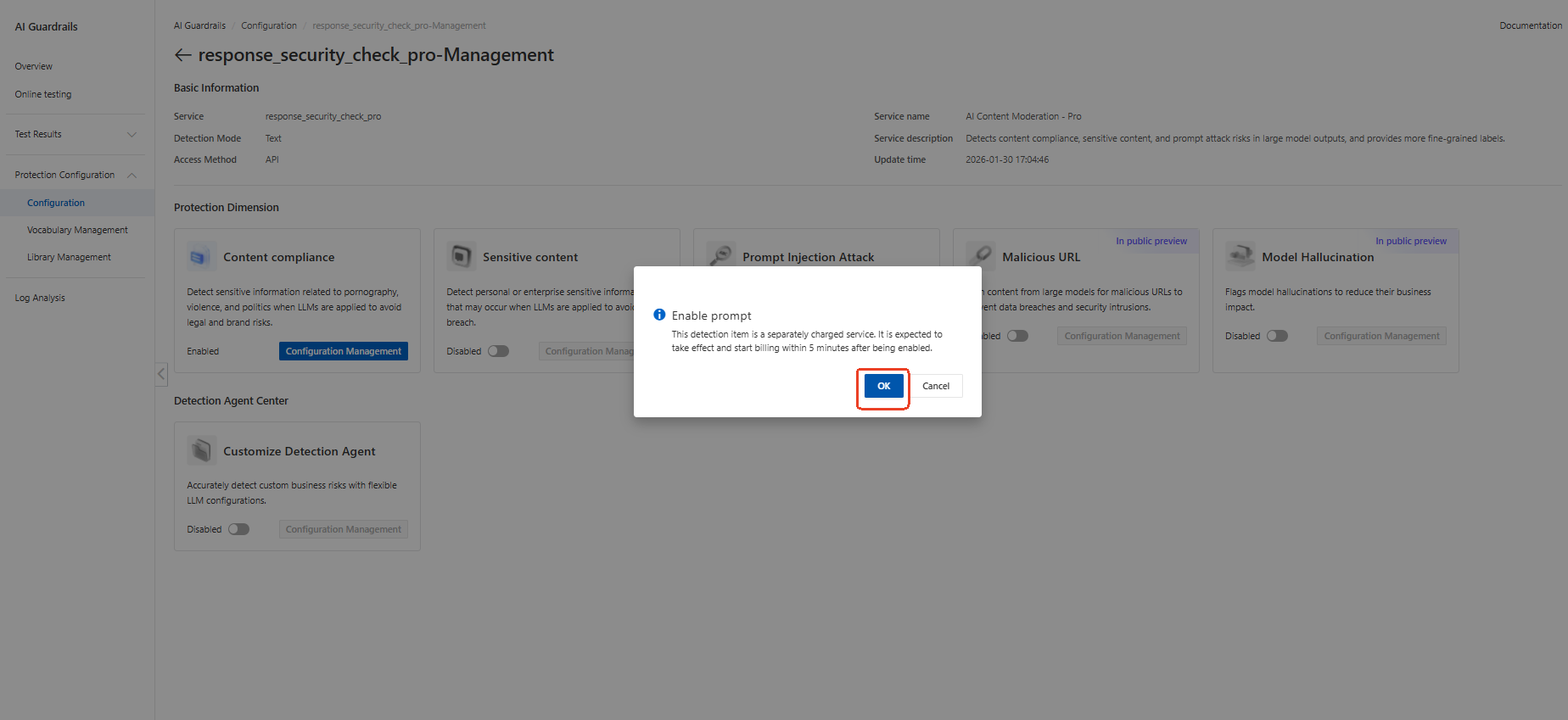

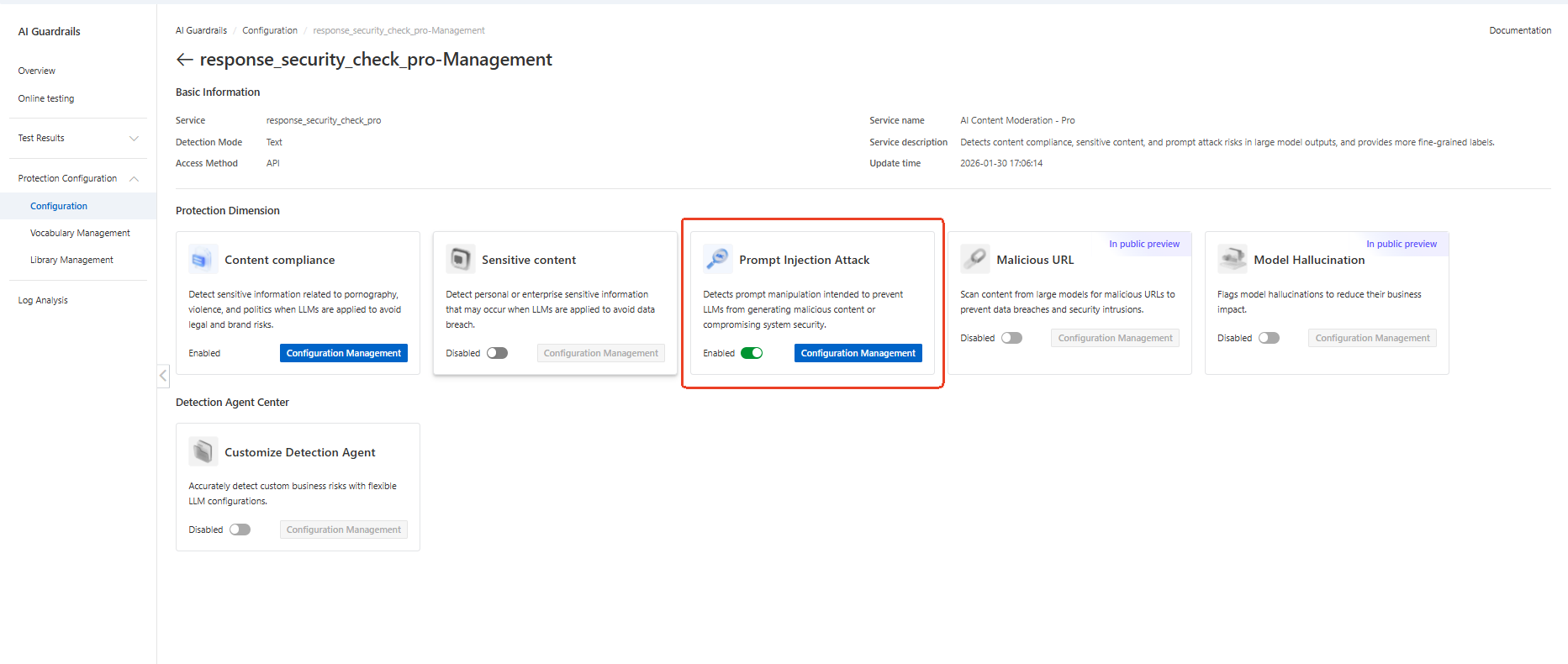

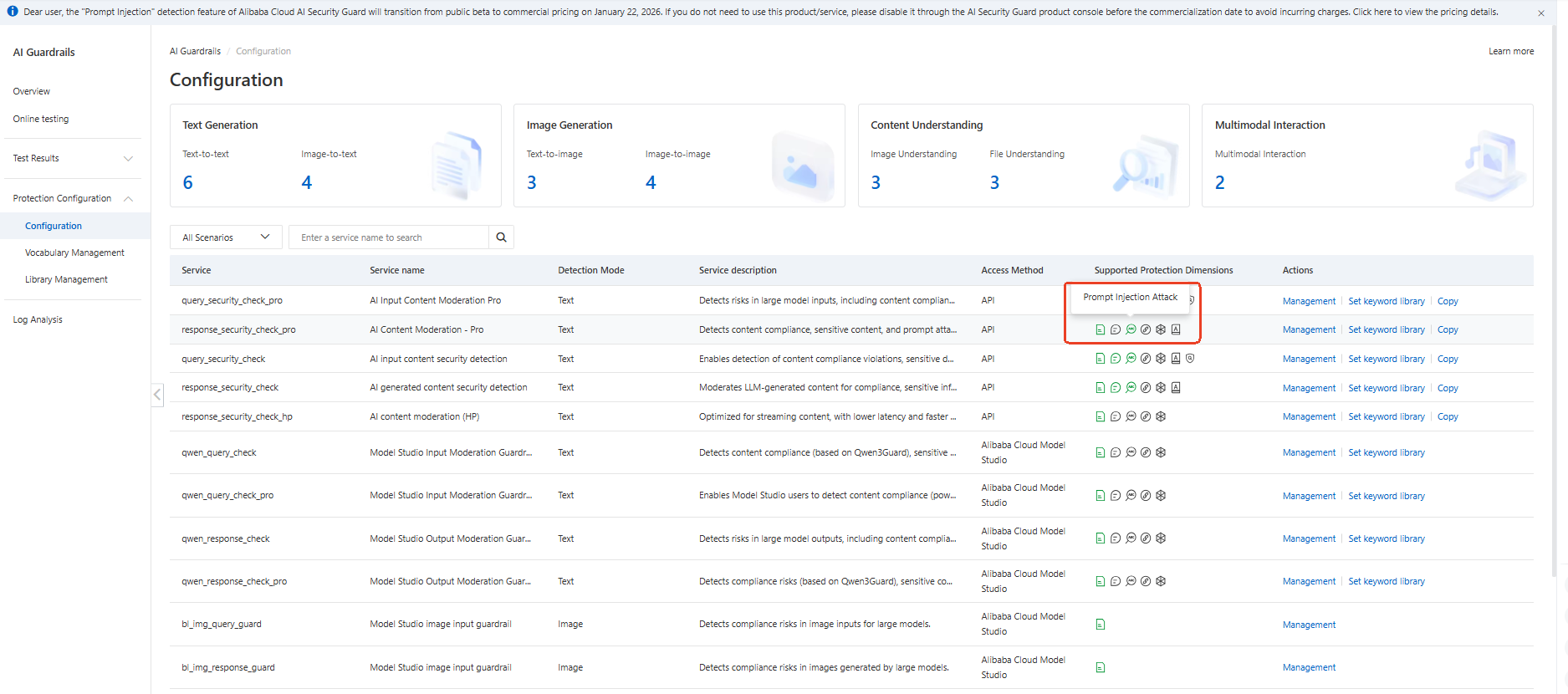

● Step 2: Find the detection item configuration under the protection configuration.

● Step 3: Select the Service you want to open and click manage

● Step 4: Enable the prompt injection attacks toggle

● Step 5: Save configuration

OpenClaw’s recent security incidents highlight a simple but uncomfortable truth: if your AI application can read untrusted text and act on sensitive permissions, then prompt injection is your new perimeter. Agents that look like productivity boosters can quickly become data exfiltration tools or remote-control interfaces for attackers.

When developing and operating AI applications and AI Agents, developers and AI companies face not only prompt-based attacks, but also content compliance risks and data leakage risks. These AI-related threats not only jeopardize normal business operations but also expose enterprises to significant regulatory and reputational risks.

Alibaba Cloud AI Guardrails is purpose-built to ensure the compliance, security, and stability of your AI business. It delivers an end-to-end protection framework tailored for diverse AI workloads—including pre-trained large language models (LLMs), AI services, and AI Agents. In particular, for generative AI input and output scenarios, AI Guardrails provides precise risk detection and proactive defense capabilities against malicious prompts, harmful content, and sensitive data exposure.

Activate AI Guardrails to protect your AI Infrastructure now: https://common-buy-intl.alibabacloud.com/?commodityCode=lvwang_guardrail_public_intl

Documentation: https://www.alibabacloud.com/help/content-moderation/latest/what-is-ai-security-barrier?

Moltbot Security Incident: Technical Analysis & Defense Guide

10 posts | 0 followers

FollowAlibaba Cloud Community - January 30, 2026

Alibaba Cloud Community - January 30, 2026

Kidd Ip - September 22, 2025

Alibaba Cloud Native Community - June 16, 2025

Alibaba Clouder - July 12, 2019

Alibaba Clouder - January 14, 2021

10 posts | 0 followers

Follow WAF(Web Application Firewall)

WAF(Web Application Firewall)

A cloud firewall service utilizing big data capabilities to protect against web-based attacks

Learn More Web Hosting Solution

Web Hosting Solution

Explore Web Hosting solutions that can power your personal website or empower your online business.

Learn More Web App Service

Web App Service

Web App Service allows you to deploy, scale, adjust, and monitor applications in an easy, efficient, secure, and flexible manner.

Learn More Security Center

Security Center

A unified security management system that identifies, analyzes, and notifies you of security threats in real time

Learn MoreMore Posts by CloudSecurity