By Mobile Neural Network (MNN) Team

Recently, Alibaba officially open-sourced Mobile Neural Network (MNN), its lightweight mobile-side deep learning inference engine, on GitHub.

Jia Yangqing, a well-known AI scientist, commented: "Compared with general-purpose frameworks like TensorFlow and Caffe2 that cover both training and inference, MNN focuses on accelerating and optimizing inference and solves efficiency problems during model deployment, so that the services behind models can run more efficiently on the mobile side. This is in line with the ideas behind server-side inference engines such as TensorRT. In large-scale machine learning applications, the amount of computation for inference is usually more than 10 times that for training. Therefore, optimizing inference is especially important."

How is the technical framework behind MNN designed? What are the future plans for MNN? Today, let's take a closer look at MNN.

Mobile Neural Network (MNN) is a lightweight mobile-side deep learning inference engine that focuses on running inference with deep neural network models. MNN covers the optimization, conversion, and inference of deep neural network models. It has been adopted in more than 20 apps, such as Mobile Taobao, Mobile Tmall, Youku, Juhuasuan, UC, Fliggy, and Qianniu, covering scenarios including live broadcast, short video capture, search recommendation, product search by image, interactive marketing, rewards distribution, and security risk control. MNN runs stably more than 100 million times per day. It is also used in IoT devices such as Cainiao will-call cabinets. During the 2018 Double 11 event, MNN was used in scenarios such as smiley face red envelopes, scans, and a finger-guessing game.

MNN is now an open-source project on GitHub. To learn more and get the GitHub link for the project, follow the official WeChat account "Alibaba Technology" and send "MNN" in the dialog box.

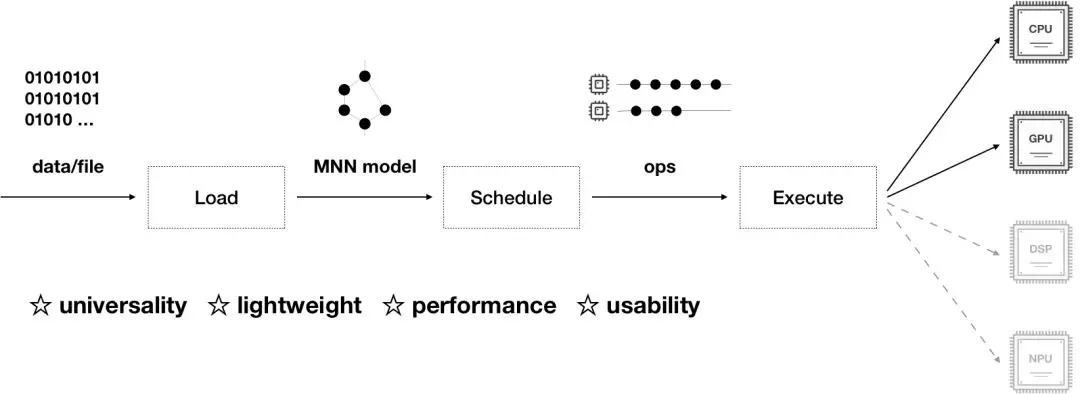

MNN loads network models, runs inference to make predictions, and returns the results. The inference process consists of loading and parsing models, scheduling computational graphs, and running models efficiently on heterogeneous backend devices. MNN has four main strengths: versatility, light weight, high performance, and ease of use.
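The three steps of the inference process (load and parse a graph, schedule it, run it on a backend) can be illustrated with a toy interpreter. This is a minimal sketch, not MNN's API: the graph format (`Node` tuples), the `schedule` and `run` functions, and the `CPU_BACKEND` op table are all hypothetical, ops work on plain floats rather than tensors, and scheduling is a simple topological sort (Kahn's algorithm).

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

# Hypothetical graph format: (node_name, op_type, input_node_names).
Node = Tuple[str, str, List[str]]

# Hypothetical "backend": a table mapping op types to kernels. A real engine
# would keep one such table per device (CPU, GPU, ...) and operate on tensors.
CPU_BACKEND: Dict[str, Callable[..., float]] = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
    "relu": lambda a: max(a, 0.0),
}


def schedule(nodes: List[Node]) -> List[Node]:
    """Order nodes so every node runs after its inputs (Kahn's topological sort)."""
    by_name = {node[0]: node for node in nodes}
    indegree = {name: len(inputs) for name, _, inputs in nodes}
    consumers: Dict[str, List[str]] = {name: [] for name, _, _ in nodes}
    for name, _, inputs in nodes:
        for src in inputs:
            consumers[src].append(name)
    ready = deque(name for name, deg in indegree.items() if deg == 0)
    order: List[Node] = []
    while ready:
        name = ready.popleft()
        order.append(by_name[name])
        for consumer in consumers[name]:
            indegree[consumer] -= 1
            if indegree[consumer] == 0:
                ready.append(consumer)
    if len(order) != len(nodes):
        raise ValueError("graph has a cycle")
    return order


def run(nodes: List[Node], feeds: Dict[str, float],
        backend: Dict[str, Callable[..., float]] = CPU_BACKEND) -> Dict[str, float]:
    """Execute the scheduled graph; 'input' nodes read their values from feeds."""
    values: Dict[str, float] = {}
    for name, op, inputs in schedule(nodes):
        if op == "input":
            values[name] = feeds[name]
        else:
            values[name] = backend[op](*(values[i] for i in inputs))
    return values


if __name__ == "__main__":
    # y = relu(x * w + b), deliberately listed out of execution order.
    graph = [
        ("y", "relu", ["s"]),
        ("s", "add", ["m", "b"]),
        ("m", "mul", ["x", "w"]),
        ("x", "input", []),
        ("w", "input", []),
        ("b", "input", []),
    ]
    print(run(graph, {"x": 2.0, "w": 3.0, "b": 1.0})["y"])
```

Separating `schedule` from `run` mirrors the split described above: the execution order is computed once when the model is loaded, while the backend table can be swapped without touching the scheduler.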

Versatility:

Lightweight:

High performance:

Ease of use:

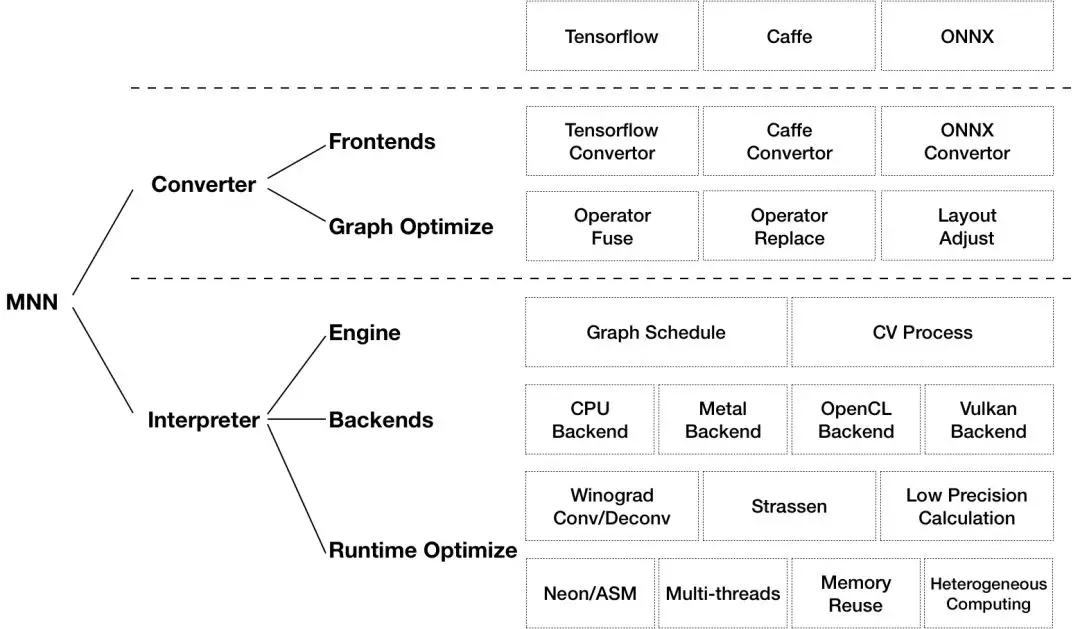

As shown in the preceding figure, MNN consists of two parts: Converter and Interpreter.

Converter consists of Frontends and Graph Optimize. Frontends handle models from different training frameworks; currently, MNN supports TensorFlow Lite, Caffe, and ONNX. Graph Optimize optimizes graphs through operator fusion, operator substitution, and layout adjustment.
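Operator fusion removes intermediate ops by folding them into their neighbors. A common example (the article does not list which fusions MNN performs) is folding an inference-time BatchNorm into the preceding convolution's weights and bias. The sketch below assumes a 1x1 convolution evaluated at a single pixel, so it reduces to a matrix-vector product; the same per-output-channel algebra applies to larger kernels. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # weights[out_channel][in_channel]


def conv1x1(x: Vector, w: Matrix, b: Vector) -> Vector:
    """1x1 convolution at one pixel: y[c] = sum_k w[c][k] * x[k] + b[c]."""
    return [sum(wk * xk for wk, xk in zip(row, x)) + bc for row, bc in zip(w, b)]


def batch_norm(y: Vector, gamma: Vector, beta: Vector,
               mean: Vector, var: Vector, eps: float = 1e-5) -> Vector:
    """Inference-time BatchNorm with frozen statistics, applied per channel."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]


def fuse_conv_bn(w: Matrix, b: Vector, gamma: Vector, beta: Vector,
                 mean: Vector, var: Vector, eps: float = 1e-5) -> Tuple[Matrix, Vector]:
    """Fold BN into the conv: w' = w * scale, b' = (b - mean) * scale + beta,
    where scale = gamma / sqrt(var + eps) for each output channel."""
    fused_w: Matrix = []
    fused_b: Vector = []
    for c in range(len(w)):
        scale = gamma[c] / math.sqrt(var[c] + eps)
        fused_w.append([wk * scale for wk in w[c]])
        fused_b.append((b[c] - mean[c]) * scale + beta[c])
    return fused_w, fused_b


if __name__ == "__main__":
    x = [1.0, 2.0]
    w = [[0.5, -1.0], [2.0, 0.25]]
    b = [0.1, -0.3]
    gamma, beta = [1.5, 0.8], [0.2, -0.1]
    mean, var = [0.05, 1.0], [0.9, 0.4]
    two_ops = batch_norm(conv1x1(x, w, b), gamma, beta, mean, var)
    fw, fb = fuse_conv_bn(w, b, gamma, beta, mean, var)
    one_op = conv1x1(x, fw, fb)
    print(two_ops, one_op)  # equal up to floating-point rounding
```

The fused graph runs one op instead of two and never materializes the intermediate convolution output, which saves both compute and memory traffic at inference time.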

Interpreter consists of Engine and Backends. Engine is responsible for loading the model and scheduling the computational graph; Backends handle memory allocation and op implementations for each computing device. Across Engine and Backends, MNN applies a variety of optimizations, including the Winograd algorithm for convolution and deconvolution, the Strassen algorithm for matrix multiplication, low-precision computation, NEON optimization, hand-written assembly, multithreading, memory reuse, and heterogeneous computing.
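To show why the Winograd algorithm speeds up convolution, the sketch below implements the well-known 1-D Winograd minimal filtering variant F(2,3): it produces two outputs of a 3-tap filter with 4 multiplications instead of 6, once the filter has been transformed. This is an illustrative sketch, not MNN's kernel; a production implementation would typically use 2-D tiles and vectorized code, and the article does not say which tile sizes MNN uses. Function names are hypothetical.

```python
from typing import List


def direct_corr(x: List[float], g: List[float]) -> List[float]:
    """Reference 'valid' 1-D correlation (what CNN frameworks call convolution)."""
    k = len(g)
    return [sum(x[i + j] * g[j] for j in range(k)) for i in range(len(x) - k + 1)]


def transform_filter(g: List[float]) -> List[float]:
    """F(2,3) filter transform; computed once per filter, so its cost is amortized."""
    g0, g1, g2 = g
    return [g0, (g0 + g1 + g2) / 2, (g0 - g1 + g2) / 2, g2]


def winograd_f23_tile(d: List[float], u: List[float]) -> List[float]:
    """Two outputs from a 4-element input tile using 4 multiplications instead of 6."""
    d0, d1, d2, d3 = d
    m1 = (d0 - d2) * u[0]
    m2 = (d1 + d2) * u[1]
    m3 = (d2 - d1) * u[2]
    m4 = (d1 - d3) * u[3]
    return [m1 + m2 + m3, m2 - m3 - m4]


def winograd_corr(x: List[float], g: List[float]) -> List[float]:
    """Valid correlation of x with a 3-tap filter, tiled with F(2,3)."""
    n_out = len(x) - 2
    if n_out <= 0 or n_out % 2:
        raise ValueError("this sketch assumes an even, positive number of outputs")
    u = transform_filter(g)
    out: List[float] = []
    for i in range(0, n_out, 2):
        # Consecutive input tiles overlap by 2 elements.
        out.extend(winograd_f23_tile(x[i:i + 4], u))
    return out


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    g = [0.5, -1.0, 2.0]
    print(direct_corr(x, g), winograd_corr(x, g))
```

For x = [1, 2, 3, 4, 5, 6] and g = [0.5, -1, 2], both functions return [4.5, 6.0, 7.5, 9.0]. The trade-off is extra additions and a slightly different rounding profile in exchange for fewer multiplications, which pays off most for small kernels such as 3x3.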

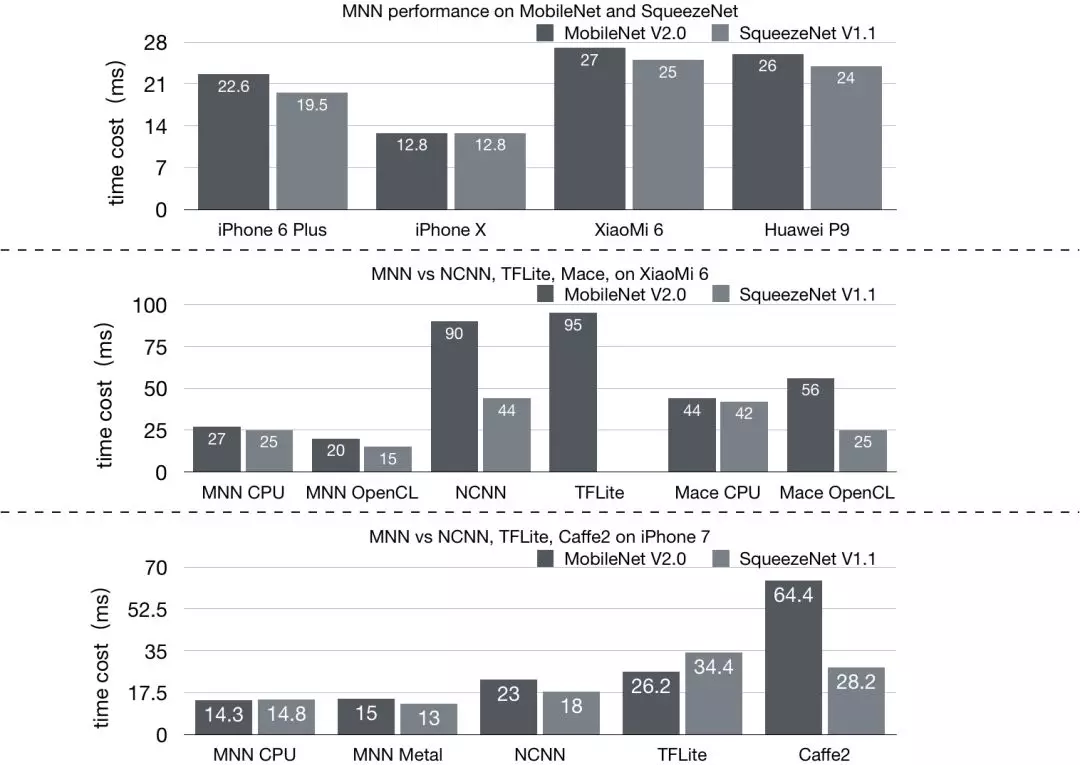

The following figures compare the performance of popular open-source frameworks on MobileNet and SqueezeNet.

MNN outperforms NCNN, Mace, TensorFlow Lite, and Caffe2 by more than 20%. In practice, we focus on optimizing our internal business models and have made in-depth optimizations for models such as face detection: detecting a single frame on an iPhone 6 takes only about 5 ms.

Note: Mace, TensorFlow Lite, and Caffe2 all use the master branch of their GitHub repositories as of March 1, 2019. Due to compilation issues, NCNN uses the precompiled library released on December 28, 2018.

With the increasing computing power of mobile phones and the rapid development of deep learning, especially the maturing of small network models, inference and prediction that were originally done in the cloud can now run on mobile devices. Mobile-side intelligence means deploying and running AI algorithms on mobile devices. Compared with server-side intelligence, it offers a series of advantages, including lower latency, better data privacy, and savings on cloud resources. Mobile-side intelligence is a growing trend and already plays a major role in scenarios such as AI photography and visual effects.

Mobile Taobao is an e-commerce app that covers many kinds of services. Business scenarios such as image search-based online shopping (Pailitao), live streaming and short videos, interactive marketing, makeup try-on, and personalized recommendation and search all require mobile-side intelligence, which can bring users new interactive experiences and enable breakthrough business innovation.

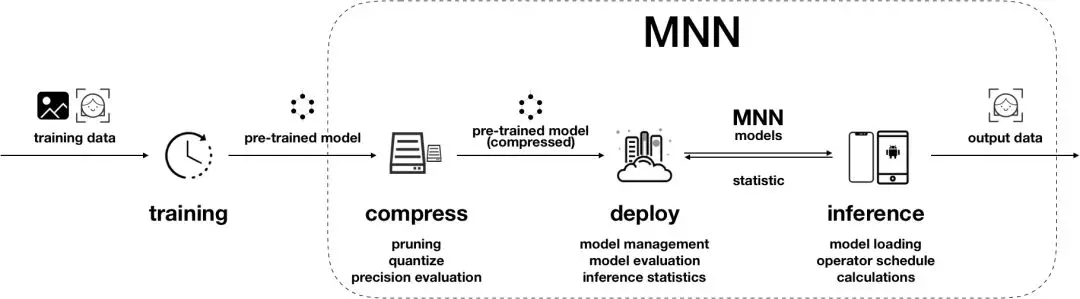

Generally, deep learning on mobile devices can be divided into the following stages:

The preceding information shows that the mobile-side inference engine is the core module of a mobile-side intelligence application. This module must use resources efficiently and complete inference quickly under constraints such as limited computing power and limited memory. In short, the quality of the mobile-side inference implementation directly determines whether algorithm models can run on mobile devices and whether a business scenario can be realized. Therefore, we needed an excellent mobile-side inference engine.

Before starting R&D on our engine in early 2017, we thoroughly researched both system solutions and open-source solutions and analyzed them in depth in terms of versatility, light weight, performance, and security. CoreML is the system framework used across Apple products, while MLKit and NNAPI are system frameworks used on Android. The biggest advantage of system frameworks is that they are lightweight: because they ship with the operating system, they add little to the app package size. Their biggest disadvantage is limited versatility. CoreML supports only iOS 11 and later, and MLKit and NNAPI support only Android 8.1 and later, so these frameworks cover a very limited range of device models and barely support embedded devices. In addition, they support relatively few network types and op types, scale poorly, and cannot make full use of device computing power. Model security is another major issue for system frameworks. Taken together, these drawbacks made system frameworks a poor choice for us. As for open-source projects, TensorFlow Lite had been announced but not yet released at the time. Caffe was relatively mature, but it was not designed or developed for mobile scenarios. NCNN had only just been released and was not yet mature enough. In the end, we concluded that no existing mobile-side inference engine was simple, efficient, and secure while also targeting different training frameworks and deployment environments.

Therefore, we decided to develop a simple, efficient, and secure mobile-side inference engine that could serve different business algorithm scenarios, training frameworks, and deployment environments. This engine later became MNN. MNN removes the constraints imposed by different operating systems (Android and iOS), devices, and training frameworks. It supports fast deployment on mobile devices, allows ops to be added flexibly, and enables in-depth performance optimization on CPUs and GPUs for specific business models.

Meanwhile, projects such as NCNN, TensorFlow Lite, Mace, and Anakin were gradually open-sourced and continuously upgraded, providing us with valuable input and reference. We have also kept iterating on and optimizing MNN as our business requirements grew. MNN has been proven during Double 11 events and is now relatively mature and full-featured, so we decided to open-source it to benefit more application and IoT developers.

At present, MNN has been integrated into more than 20 apps, including Mobile Taobao, Maoke, Youku, Juhuasuan, UC, Fliggy, and Qianniu. It is used in business scenarios such as image search-based online shopping (Pailitao), live streaming and short videos, interactive marketing, real-name authentication, makeup try-on, and search recommendation, and it runs more than 100 million times each day. During the 2018 Double 11 event, MNN was used in scenarios such as smiley face red envelopes, scans, and a finger-guessing game.

Pailitao is the image search and recognition feature in Mobile Taobao. Since its first release in 2014, it has grown into an application with more than 10 million unique visitors (UVs), and the technologies behind it have kept evolving. Originally, users took a photo of an item, and the photo was uploaded to the cloud for image recognition. Now, object recognition and image editing are performed on the device first and only the processed images are uploaded, which significantly improves the user experience and reduces server-side computing costs. Pailitao also recognizes common simple objects and logos in real time using on-device models.

Smiley Face Red Envelopes was the first show at the 2018 Tmall Double 11 evening gala, and it was based on real-time face detection and emotion recognition. The show was a typical example of the shift from traditional screen-touch interaction to natural interaction driven by real-time face detection and recognition algorithms running on the camera feed, and it gave users a new experience.

Jiwufu (collecting five Fu cards) was one of the 2019 Chinese New Year campaigns. It was the first time Mobile Taobao took part in this campaign, through a feature for scanning Chinese New Year goods. By scanning red-colored goods purchased for the Chinese New Year, users could obtain Fu cards and a chance to win physical prizes such as duvets, Wuliangye, Maotai, and king crabs, as well as no-minimum-spend coupons for Tmall Supermarket and Tmall Genie.

We plan to release a stable version every two months. Our current roadmap is as follows:

Model optimization:

Scheduling optimization:

Computation optimization:

Other:
