By Yongming Wu

On January 18, 2019, the Big Data Technology Session of the Alibaba Cloud Yunqi Developer Salon, co-hosted by the Alibaba Cloud MaxCompute developer community and the Alibaba Cloud Yunqi Community, was held at Beijing Union University. During this session, Wu Yongming, Senior Technical Expert at Alibaba, introduced MaxCompute, a serverless big data service with high availability, and explained the secrets behind its low computing cost.

This article is based on the video of the speech and the related presentation slides.

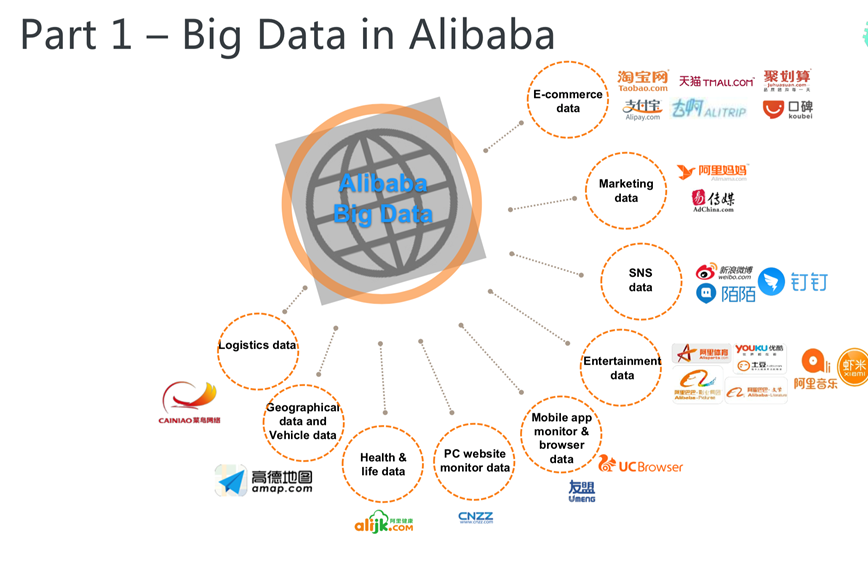

First, let's look at some background on big data technologies at Alibaba. As shown in the following figure, Alibaba began building up its big data technologies very early, and it is safe to say that Alibaba Cloud was founded to help Alibaba solve technical problems related to big data. Today, almost all Alibaba business units use big data technologies, and these technologies are applied both widely and deeply. In addition, the big data systems across Alibaba Group are integrated into a single whole.

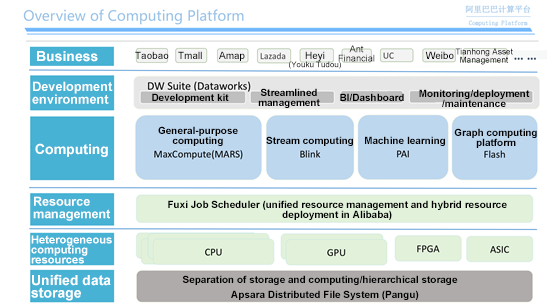

The Alibaba Cloud Computing Platform business unit is responsible for integrating Alibaba's big data systems and for storage- and computing-related R&D across the whole Alibaba Group. The following figure shows the structure of Alibaba's big data platform. The underlying layer is the unified storage platform, Apsara Distributed File System, which is responsible for storing big data. Stored data is static, and computing is required to mine its value. Therefore, the platform also provides a variety of computing resources, including CPU, GPU, FPGA, and ASIC. Making good use of these resources requires unified resource abstraction and efficient management. The unified resource management system in Alibaba Cloud is called Job Scheduler. On top of this resource management and scheduling system, Alibaba Cloud has developed a variety of computing engines, such as the general-purpose computing engine MaxCompute, the stream computing engine Blink, the machine learning engine PAI, and the graph computing engine Flash. In addition to these computing engines, the big data platform provides various development environments, on which many services are built.

This article mainly describes the general-purpose computing engine, MaxCompute. MaxCompute is a universal, distributed big data processing platform that can store large amounts of data and run general-purpose computations on that data.

First, let's look at two figures: MaxCompute currently handles 99% of the data storage and 95% of the computing tasks in Alibaba. In effect, MaxCompute is the data middle-end of Alibaba. The data from all Alibaba business units eventually ends up in MaxCompute, so Alibaba Group's data assets keep growing. Next, let's look at several metrics. MaxCompute shows 250% better performance than ordinary open-source systems in the BigBench test. In Alibaba Group, a single MaxCompute cluster can have tens of thousands of machines and can process one million jobs each day. MaxCompute clusters store very large amounts of data (the stored volume reached the exabyte level long ago), making MaxCompute one of the leading products in the global market. MaxCompute does not serve only Alibaba Group. It is also open and available to other enterprises, and it currently provides more than 50 industry solutions. The storage capacity and computing capacity of MaxCompute are growing rapidly every year. Thanks to Alibaba Cloud, MaxCompute can be deployed not only in China but also in many other countries and regions.

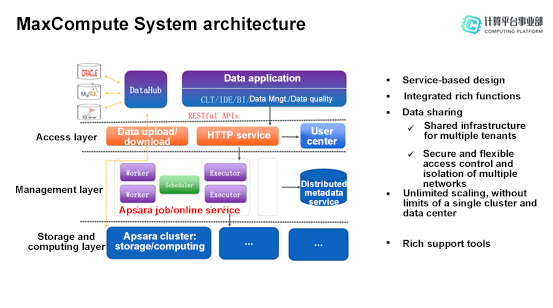

The system architecture of MaxCompute is very similar to those of other big data computing engines in the industry. As shown in the following figure, you can use clients to ingest and transfer data into MaxCompute through the access layer. In the middle is the management layer, which manages user jobs and hosts many features, such as SQL compilation, optimization, execution, and other basic features in the big data field. MaxCompute also provides distributed metadata services. Without metadata management, there is no way to know what the stored data actually represents. The bottom layer in the MaxCompute architecture is where the actual storage and computing take place.

Serverless is currently a very popular concept. Its popularity is both gratifying and frustrating to the developers of MaxCompute. It is gratifying because the MaxCompute team began developing similar features long before the Serverless concept was born, and the team's design philosophy is fully consistent with Serverless. This indicates that MaxCompute had an early start with Serverless and holds a certain technical lead. It is frustrating because, although the MaxCompute team put forward ideas similar to Serverless and realized the value of this technology, they did not package these capabilities under that name as early as they could have.

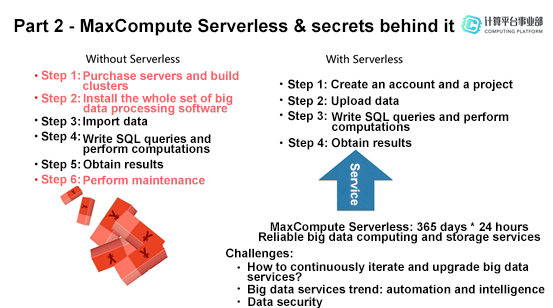

Before the Serverless concept, big data practices usually followed the steps shown in the preceding figure. First, purchase servers and build clusters. After the hardware is ready, install the whole set of big data processing software. Then import data, write the queries required by the business, perform the computations, and obtain the final results. Note that all the preceding steps require maintenance work. If an enterprise sets up its own big data system, maintenance is required throughout the system, and maintenance engineers need to be on call at all times to handle possible system problems.

However, among all the aforementioned steps, only the fourth step (write queries and perform actual computations) really creates business value. The other steps consume lots of resources and labor. For business-oriented companies, these steps are an extra burden. Today, Serverless allows enterprises to eliminate this extra burden.

Take serverless MaxCompute as an example. An enterprise can get to business value in four steps. The first step is to create an account and a project. The project is the carrier of both storage and computing. The second step is to upload data. The third step is to write query statements and perform computations. The fourth step is to obtain the results of the computations. No maintenance work is required throughout the process, so the development team can focus on the core business operations and processes that create business value. This is the advantage of the Serverless concept. It also shows how a new technology can change the way we work: we can focus on the processes that create core business value without having to pay attention to additional or auxiliary work.

Unlike open-source software or big data companies that hand their customers a big data software package, MaxCompute provides big data services that are highly reliable around the clock (24 hours a day, 365 days a year) and that offer both storage and computing capabilities. MaxCompute gives you a truly out-of-the-box big data experience.

During the implementation of the serverless big data platform, we need to solve many challenges. This section describes the three main challenges:

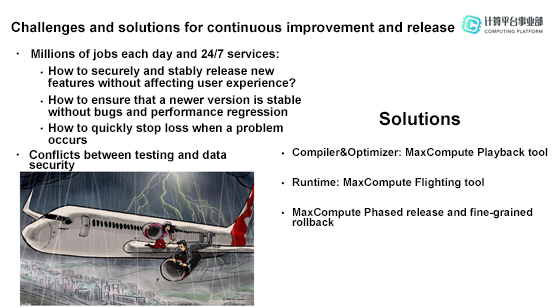

Continuous improvement and release is definitely an essential part of a big data system. A big data platform needs to be continuously upgraded and improved to meet a variety of new needs from users. During the process of making improvements, the big data system also needs to process millions of jobs running on the platform each day and ensure 24/7 services without interruption. How can we ensure a stable and secure platform during the upgrading process and make sure that the release of new features does not influence users? How can we ensure that the new version is stable and does not have bugs or performance regression? How can we quickly stop further loss after a problem occurs? All these questions need to be taken into consideration. In addition, we need to consider conflicts between testing and data security during continuous improvement and release. In other words, implementing continuous improvement and release on a big data platform is like changing the engine of a flying airplane.

The Alibaba Cloud MaxCompute team has put significant effort into solving these problems and challenges. It is now commonly believed in the big data field that MapReduce is not user-friendly, and SQL-like big data engines have become the mainstream trend. The following three steps are the most important for SQL-like processing: compilation, optimization, and execution. The MaxCompute team recognized this and developed the Playback tool for compilation and optimization as well as the Flighting tool for execution. In addition to these two tools, which are mainly used in the test and verification process, MaxCompute also provides tools for phased release.

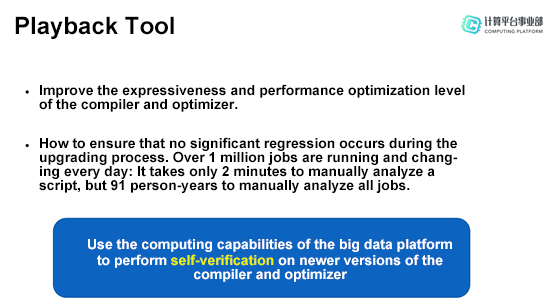

Playback was developed so that MaxCompute can quickly improve the expressiveness of its compiler and the optimization quality of its optimizer. After significant changes are made to the compiler and optimizer, ensuring that they are still problem-free becomes a new challenge. The original method was to run existing SQL statements through the compiler and optimizer and then manually analyze the results to find problems introduced by the changes. However, manual analysis is so time-consuming that it is unacceptable. The MaxCompute team therefore decided to use the big data computing platform itself to verify the improved compiler and optimizer.

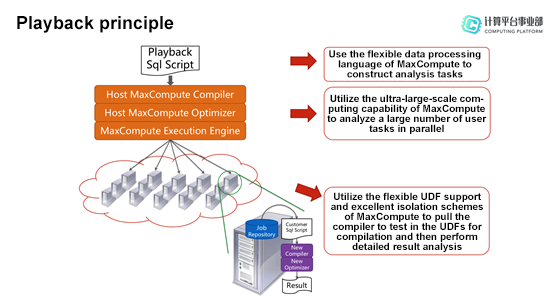

This is how the method works: Collect real user queries and load them into MaxCompute, using the powerful and flexible capabilities of MaxCompute itself. Process these queries in a large-scale, distributed manner, compiling and optimizing each one with the newer versions of the compiler and optimizer. Because MaxCompute adopts a model that is common across the industry, it is easy to plug the verification logic into these jobs and check the compilation and optimization results. Any problems with the compiler or optimizer can then be located easily.

In short, on one hand, Playback analyzes a large number of user tasks by utilizing the powerful and flexible computing capabilities of MaxCompute; on the other hand, it verifies the improved compiler and optimizer by using its support for UDFs and proper isolation solutions. This makes it easy to verify newer versions of the compiler and optimizer. Using the big data platform's powerful capabilities, it's now possible to implement tasks that were previously impossible.
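The replay-and-compare idea can be sketched in a few lines of Python. This is a minimal illustration and not MaxCompute's actual Playback code: the `Plan` type, the `estimated_cost` field, the two toy compilers, and the 10% tolerance are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Plan:
    """Hypothetical compiled plan: a description plus an estimated cost."""
    text: str
    estimated_cost: float


Compiler = Callable[[str], Plan]


def playback(queries: List[str], old: Compiler, new: Compiler,
             tolerance: float = 0.1) -> List[Dict[str, str]]:
    """Replay historical queries through both compiler versions.

    A query is reported when the new version fails to compile it, or when
    the new plan's estimated cost exceeds the old one by more than `tolerance`.
    """
    findings = []
    for query in queries:
        baseline = old(query)  # assumed to succeed: the query already ran in production
        try:
            candidate = new(query)
        except Exception as exc:
            findings.append({"query": query, "issue": "compile_error", "detail": str(exc)})
            continue
        if candidate.estimated_cost > baseline.estimated_cost * (1 + tolerance):
            detail = f"{baseline.estimated_cost} -> {candidate.estimated_cost}"
            findings.append({"query": query, "issue": "cost_regression", "detail": detail})
    return findings


# Toy stand-ins for the two compiler versions (purely illustrative).
def old_compiler(query: str) -> Plan:
    return Plan("baseline plan", float(len(query)))


def new_compiler(query: str) -> Plan:
    if "UNSUPPORTED" in query:
        raise ValueError("unsupported syntax")
    factor = 2.0 if "JOIN" in query else 0.5
    return Plan("optimized plan", len(query) * factor)


history = [
    "SELECT a FROM t",
    "SELECT * FROM t JOIN u ON t.id = u.id",
    "SELECT UNSUPPORTED(a) FROM t",
]
findings = playback(history, old_compiler, new_compiler)
for finding in findings:
    print(finding["issue"], "|", finding["query"])
```

In a real deployment the loop body would run as a distributed job over the collected queries, which is what makes checking very large query histories practical.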

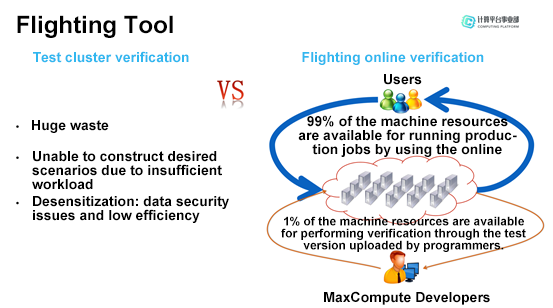

Flighting is a runtime tool. The most common and natural way to test an improved executor is to create a test cluster. However, a test cluster is expensive and can waste a lot of resources. A test cluster also cannot simulate real scenarios, because the workload in a test environment differs from the workload in production. To perform verification in a test environment, you either have to create test data, which may be too simple, or collect data from real scenarios. The latter is a complex process because it requires consent from users and data desensitization. In addition to risking data breaches, this approach may introduce differences between the final data and the real data. Therefore, in most cases it is impossible to verify new features and find their problems this way.

Flighting, the runtime verification tool of MaxCompute, instead uses real online clusters and environments. Thanks to resource isolation, 99% of the computing resources in MaxCompute clusters remain available for user jobs. The remaining 1% is used by Flighting to run validation tests on new executor features. This approach does not require moving data out of production and runs directly in the production environment, so it efficiently exposes the problems an executor would hit in real use.

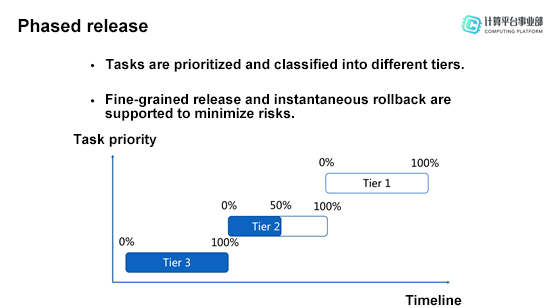

MaxCompute provides the phased release feature that can prioritize tasks for fine-grained release and support instantaneous rollback to minimize the risk.
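The following Python sketch shows one way a priority-aware phased release with instant rollback could be modeled. It is an assumption-laden illustration, not MaxCompute's release tooling: the `PhasedRelease` class, the three priority tiers, and the hash-based percentage buckets are all hypothetical.

```python
import hashlib

# Hypothetical priority tiers; low-priority jobs receive a new version first.
PRIORITY_ORDER = ["low", "normal", "high"]


class PhasedRelease:
    """Routes each job to the 'stable' or 'new' version of a component."""

    def __init__(self) -> None:
        self.enabled_tiers = 0  # how many tiers, counted from "low", may get the new version
        self.percent = 0        # share of jobs inside the enabled tiers that get it

    def advance(self, enabled_tiers: int, percent: int) -> None:
        if not 0 <= enabled_tiers <= len(PRIORITY_ORDER):
            raise ValueError("enabled_tiers out of range")
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.enabled_tiers = enabled_tiers
        self.percent = percent

    def rollback(self) -> None:
        """Send every job back to the stable version immediately."""
        self.enabled_tiers = 0
        self.percent = 0

    def version_for(self, job_id: str, priority: str) -> str:
        if PRIORITY_ORDER.index(priority) >= self.enabled_tiers:
            return "stable"
        # A stable hash keeps a given job in the same bucket between calls.
        digest = hashlib.sha256(job_id.encode("utf-8")).hexdigest()
        bucket = int(digest, 16) % 100
        return "new" if bucket < self.percent else "stable"


release = PhasedRelease()
release.advance(enabled_tiers=1, percent=100)  # all low-priority jobs, nothing else
low_job = release.version_for("job-1", "low")
high_job = release.version_for("job-1", "high")
release.rollback()
after_rollback = release.version_for("job-1", "low")
```

Because routing is decided per job at submission time, rolling back is only a configuration change: no job has to be migrated, which is what makes the rollback effectively instantaneous in this model.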

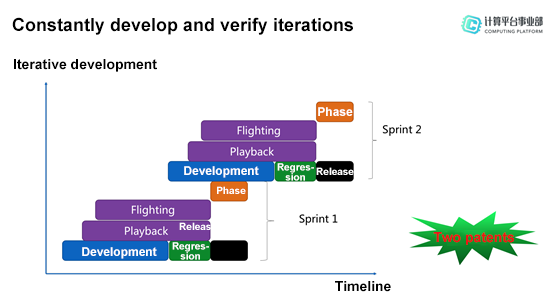

The following figure shows the continuous verification and development iteration process in MaxCompute, which combines Playback for the compiler and optimizer, Flighting for the executor, and the phased release feature. This process allows Sprint 2 to start before Sprint 1 is finished, which makes the whole R&D process more efficient. The continuous development and delivery process of MaxCompute has strong technical advantages in compilation, optimization, execution, and phased release, and is highly competitive across the industry. These strengths help MaxCompute evolve and deliver more and better features to customers while ensuring that upgrades do not affect the availability of the platform.

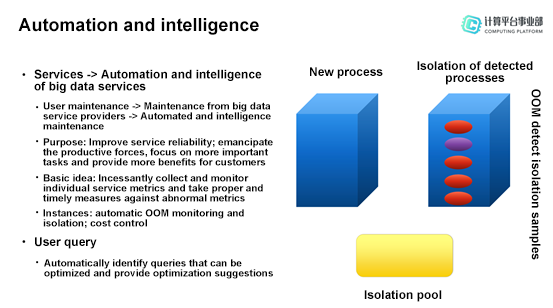

With the development of artificial intelligence and big data technologies, today services need to implement not only high availability but also automation. We may consider this requirement from two perspectives: services themselves and users' maintenance. Services themselves need to implement automation first and then artificial intelligence.

Any service may encounter various problems and bugs. Traditionally, solving them relies on manual effort. The ultimate goal of service automation and intelligence, however, is to locate and solve system problems through the system's own self-recovery capability, without manual intervention. This goal needs to be achieved step by step. From the user maintenance perspective, the maintenance work that users originally did themselves is now performed by the big data platform on the cloud. Take Alibaba Cloud MaxCompute as an example: customers' maintenance work has been transferred to the MaxCompute team. The MaxCompute team, in turn, wants to free itself from complex platform maintenance by making service maintenance automated and intelligent, so that it can focus on more important work. The goal of automated and intelligent big data services is to improve service reliability, free up productivity, and deliver more benefits to customers. The basic idea is to regularly collect and monitor individual service metrics and take proper and timely measures when a metric becomes abnormal.

Beyond the automation and intelligence of the big data services themselves, it is also critical to automatically identify user queries that can be optimized and to provide optimization suggestions. The SQL queries that you write may not be the most efficient, and more efficient methods or techniques may achieve the same purpose. Therefore, it is necessary to identify the parts of a user query that can be optimized and provide corresponding suggestions. Automatic query optimization is an industry-wide trend, and the MaxCompute team is also conducting research in this area.
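As a rough illustration of "collect metrics and react to abnormal values", the sketch below flags samples that deviate strongly from a rolling baseline. The window size, the threshold of three standard deviations, and the latency numbers are assumptions chosen for the example. The article does not say which detection method MaxCompute uses.

```python
from statistics import mean, stdev
from typing import List


def find_anomalies(samples: List[float], window: int = 5,
                   threshold: float = 3.0) -> List[int]:
    """Return indexes of samples that deviate from the preceding window.

    A sample is abnormal when it lies more than `threshold` standard
    deviations away from the mean of the previous `window` samples.
    """
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all is suspicious.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical request latencies in milliseconds, one sample per minute.
latency_ms = [100, 102, 98, 101, 99, 100, 450, 101, 99]
abnormal = find_anomalies(latency_ms)
for index in abnormal:
    print(f"minute {index}: latency {latency_ms[index]} ms looks abnormal")
```

In an automated setup, the detection result would trigger a predefined action (for example, restarting a component or diverting traffic) instead of only printing a message.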

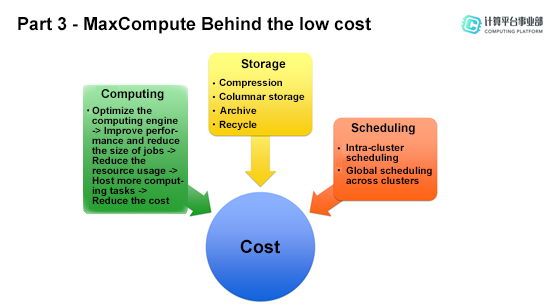

MaxCompute's low cost makes it more competitive. The low cost of MaxCompute is enabled by the technology dividend. To lower the cost of the big data tasks, MaxCompute makes some improvements on three key aspects: computing, storage, and scheduling.

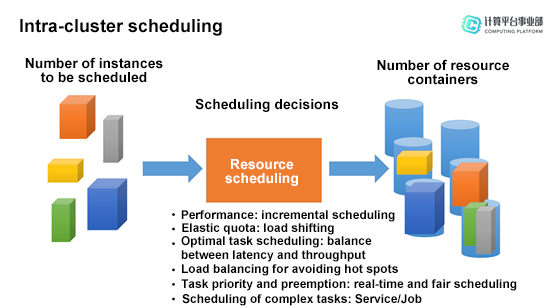

For intra-cluster scheduling, many problems need to be solved. On one hand, many job instances are waiting to be scheduled all the time; on the other hand, lots of resources are waiting to run jobs.

Matching the two efficiently is critical and requires powerful scheduling capabilities. The MaxCompute platform can currently make more than 4,000 scheduling decisions per second. A scheduling decision places a certain number of jobs onto suitable resources, ensuring that each job gets sufficient resources while no extra resources are wasted.
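A single scheduling decision of this kind can be approximated with a best-fit placement: each job goes to the machine that can hold it with the least leftover capacity. The sketch below is a simplified stand-in under the assumption that CPU cores are the only resource. The job and machine names are hypothetical, and the real scheduler's algorithm is not described in the article.

```python
from typing import Dict, Tuple


def schedule(jobs: Dict[str, int],
             free_cores: Dict[str, int]) -> Tuple[Dict[str, str], Dict[str, int]]:
    """Place jobs on machines with a best-fit heuristic.

    jobs maps a job name to the cores it needs; free_cores maps a machine
    name to the cores it has free. Returns (placement, remaining capacity).
    Jobs that fit nowhere are left out of the placement and stay queued.
    """
    remaining = dict(free_cores)  # work on a copy, leave the input untouched
    placement = {}
    # Largest jobs first, so they are not crowded out by small ones.
    for job, demand in sorted(jobs.items(), key=lambda item: -item[1]):
        candidates = [m for m, cores in remaining.items() if cores >= demand]
        if not candidates:
            continue
        # Best fit: the machine left with the fewest idle cores afterwards.
        best = min(candidates, key=lambda m: remaining[m] - demand)
        placement[job] = best
        remaining[best] -= demand
    return placement, remaining


waiting_jobs = {"job-a": 4, "job-b": 2, "job-c": 8}
machines = {"m1": 8, "m2": 4, "m3": 2}
placement, remaining = schedule(waiting_jobs, machines)
```

With these inputs every job lands on a machine of exactly its size, so all demands are met and no cores are left idle.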

To improve performance, MaxCompute supports incremental scheduling so that resources are fully used. An elastic quota is also provided to support load shifting when multiple users are running computing tasks. Users of a big data platform rarely submit their tasks at the same time. In most cases they submit tasks in different periods, which leads to peaks and valleys in resource usage. The elastic quota shifts and balances resource usage between the peaks and valleys so that resources can be fully used. Optimal task scheduling also has to balance latency against throughput, and load balancing must avoid hot spots. The scheduling decision-making system of MaxCompute additionally supports task priority and preemption to achieve real-time and fair scheduling.

Scheduling of complex workloads is divided into service scheduling and job scheduling. A job is a one-time task, whereas a service, once started, keeps processing jobs over a period of time. Service scheduling is therefore very different from job scheduling. Service scheduling requires high reliability and no interruptions, because the cost of recovering an interrupted service is very high. Job scheduling is more tolerant of interruptions: an interrupted job can simply continue to run later.
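The elastic quota idea can be illustrated with a small allocation function: every group first receives up to its own quota, and cores left idle by quiet groups are then lent to groups that still have unmet demand. The group names and numbers below are hypothetical, and the lending order (alphabetical) is a simplification chosen only to keep the example deterministic.

```python
from typing import Dict


def elastic_allocate(quota: Dict[str, int], demand: Dict[str, int]) -> Dict[str, int]:
    """Allocate cores to groups, lending idle quota to busy groups."""
    # Step 1: each group gets what it asks for, capped at its own quota.
    allocation = {group: min(demand.get(group, 0), quota[group]) for group in quota}
    idle = sum(quota.values()) - sum(allocation.values())
    # Step 2: lend idle cores to groups whose demand exceeds their quota.
    for group in sorted(quota):
        unmet = demand.get(group, 0) - allocation[group]
        extra = min(unmet, idle)
        allocation[group] += extra
        idle -= extra
    return allocation


quota = {"day_team": 100, "night_team": 100}

# Daytime peak: day_team borrows cores that night_team is not using.
daytime = elastic_allocate(quota, {"day_team": 160, "night_team": 20})

# Both groups busy at once: nobody can borrow, each is held to its quota.
contended = elastic_allocate(quota, {"day_team": 160, "night_team": 150})
```

A production scheduler would also need a way to reclaim lent cores when the owner's demand returns, which is where the priority and preemption support mentioned above comes in.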

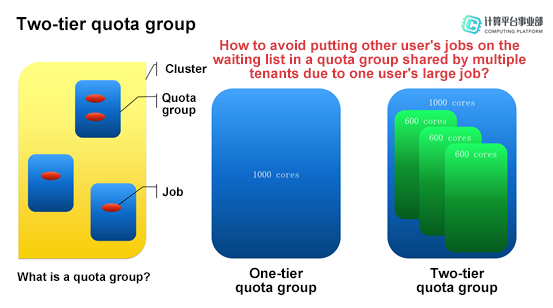

For scheduling within a single cluster, this article focuses on the two-tier quota group, which is rare in common big data platforms. Before introducing it, let's first look at quota groups in general. Put simply, the resources of the physical clusters are pooled together, and a quota group is a set of resources carved out of that pool. On the big data platform, jobs run in quota groups, and jobs within the same quota group share all the resources in that group. The common one-tier quota group policy has many problems. For example, consider a one-tier quota group that has 1,000 CPU cores and is used by 100 users. If one user submits a very large job that uses up all 1,000 CPU cores in that quota group, the jobs of all other users are put on the waiting list.

Two-tier quota groups solve this problem. A two-tier quota group is a subdivision of a one-tier quota group and limits the maximum resources that each user can use. Each one-tier quota group can be divided into multiple two-tier quota groups to make better use of the resources. Resources inside a two-tier quota group can still be shared, but a user cannot use more than the maximum resources of that quota group. This ensures a good experience for all users.
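The effect of the second tier can be shown with a small model in which a parent group of 1,000 cores is split into sub-groups, each with its own cap. The class name, sub-group names, and cap values are assumptions for illustration. The article does not describe MaxCompute's actual quota data model.

```python
from typing import Dict


class TwoTierQuota:
    """A one-tier quota group subdivided into capped two-tier groups."""

    def __init__(self, total_cores: int, sub_group_caps: Dict[str, int]) -> None:
        self.total = total_cores
        self.caps = dict(sub_group_caps)
        self.used = {name: 0 for name in self.caps}

    def request(self, sub_group: str, cores: int) -> int:
        """Grant as many cores as both tiers allow and return the grant."""
        free_in_parent = self.total - sum(self.used.values())
        headroom = self.caps[sub_group] - self.used[sub_group]
        granted = max(0, min(cores, free_in_parent, headroom))
        self.used[sub_group] += granted
        return granted


# Caps may add up to more than the parent total, so idle capacity is shareable.
group = TwoTierQuota(total_cores=1000, sub_group_caps={"etl": 600, "adhoc": 600})

huge_job = group.request("etl", 1000)   # capped by the "etl" sub-group limit
small_job = group.request("adhoc", 300) # still served despite the huge job
```

Compared with the one-tier example above, the very large job no longer starves everyone else: it is held to its sub-group's cap, and the remaining cores stay available to other sub-groups.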

Unlike common big data systems, MaxCompute supports scheduling within a single cluster as well as global scheduling across clusters, which is another indication of its capabilities. A single cluster cannot always be scaled up, for a variety of reasons, so horizontal scaling at the cluster level is required.

Applications that serve a large amount of business need to run on multiple clusters for high availability, that is, to ensure that services keep running normally when one cluster goes down. Global scheduling is required for this purpose: it determines which clusters an incoming job can be scheduled to and which clusters are currently available and relatively idle. Multiple versions of data also become a concern here, because storing copies of the same data in different clusters may lead to data consistency issues. In addition, specific computing and data replication policies must be considered for different versions of the same data. Alibaba Cloud MaxCompute has refined these policies based on extensive accumulated experience.
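A cross-cluster placement decision of the kind described here can be sketched as a filter followed by a ranking: keep the clusters that are up, hold a copy of the required data, and have enough free capacity, then pick the most idle one. The `Cluster` fields and the sample clusters are hypothetical, and data versions and replication policies are deliberately left out of this simplified example.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Cluster:
    name: str
    available: bool
    free_cores: int
    datasets: Set[str] = field(default_factory=set)


def pick_cluster(clusters: List[Cluster], dataset: str, cores: int) -> Optional[str]:
    """Choose the most idle available cluster that holds the dataset."""
    candidates = [
        c for c in clusters
        if c.available and dataset in c.datasets and c.free_cores >= cores
    ]
    if not candidates:
        return None  # the job waits, or data must first be replicated somewhere
    return max(candidates, key=lambda c: c.free_cores).name


clusters = [
    Cluster("cluster-a", True, 200, {"orders", "users"}),
    Cluster("cluster-b", True, 800, {"orders"}),
    Cluster("cluster-c", False, 5000, {"orders", "users"}),  # currently down
]

orders_target = pick_cluster(clusters, "orders", 100)
users_target = pick_cluster(clusters, "users", 100)
too_big = pick_cluster(clusters, "users", 500)
```

Note that `cluster-c` is never chosen even though it has the most free cores, because it is unavailable. This is the high-availability behavior the paragraph describes.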

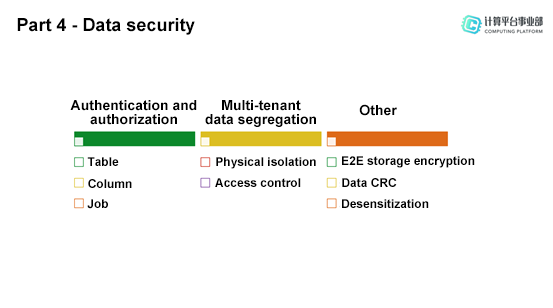

When an enterprise uses a big data computing platform, its biggest concern is data security. Judging from Alibaba's experience, users care most about the following three data security issues: Can others see the data after it is stored on a big data platform? Can the service provider see the data after it is put on the platform or service? What happens if problems occur on the big data platform that hosts my data?

Users do not have to worry about these issues, because MaxCompute is well-equipped to handle them. First, MaxCompute features well-developed authentication and authorization mechanisms. Data on MaxCompute belongs to users, not the platform. Although the platform helps users store and compute data, it does not own the data. Both data access and computing require authentication and authorization, so neither other users nor the platform provider can see your data unless they are authorized. Multiple authorization dimensions are available, including table-level, column-level, and job-level authorization. As a big data platform on the cloud, MaxCompute is naturally multi-tenant, which requires isolating the data and computations of different tenants. MaxCompute supports physical data isolation, which fundamentally eliminates this class of data security issues. MaxCompute also provides strict access control policies, which prohibit unauthorized users from accessing your data. In addition, MaxCompute provides end-to-end (E2E) storage encryption, which is especially important to financial institutions. It also uses cyclic redundancy checks (CRC) to ensure data correctness. CRC has been used in Alibaba for many years and is well developed. Finally, MaxCompute provides a data desensitization feature for users' convenience.
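To make the CRC idea concrete, the sketch below stores a checksum next to each data block and verifies it on read, using `zlib.crc32` from Python's standard library. The `write_block` and `read_block` helpers and the sample data are hypothetical. The article does not say which CRC variant or block layout MaxCompute uses.

```python
import zlib
from typing import Tuple


def write_block(data: bytes) -> Tuple[bytes, int]:
    """Return the block together with its CRC-32 checksum."""
    return data, zlib.crc32(data)


def read_block(data: bytes, checksum: int) -> bytes:
    """Return the block only if it still matches its stored checksum."""
    if zlib.crc32(data) != checksum:
        raise ValueError("CRC mismatch: block is corrupted")
    return data


stored, checksum = write_block(b"order_id,amount\n1,9.99\n")

# An intact block passes verification.
intact = read_block(stored, checksum)

# Simulate corruption of the first byte in storage or transit.
corrupted = b"X" + stored[1:]
try:
    read_block(corrupted, checksum)
    corruption_detected = False
except ValueError:
    corruption_detected = True
```

A CRC detects accidental corruption, not deliberate tampering, which is why it complements the encryption and access control mechanisms described above and does not replace them.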
