By Fajar Tontowi, Lead Data Engineer for Ingestion and Analytics at Mekari, at Flink Forward Asia in Jakarta 2024.

Refer to the presentation recording here: https://www.alibabacloud.com/en/events/flink_forward_asia_2024

This article is based on the keynote speech delivered by Fajar Tontowi, Lead Data Engineer for Ingestion and Analytics at Mekari, at Flink Forward Asia in Jakarta 2024.

Good morning, everyone. My name is Fajar, and I am from Mekari. Today, I am excited to share the recent improvements we have made at Mekari using serverless Apache Flink on Alibaba Cloud, together with MaxCompute as our data store.

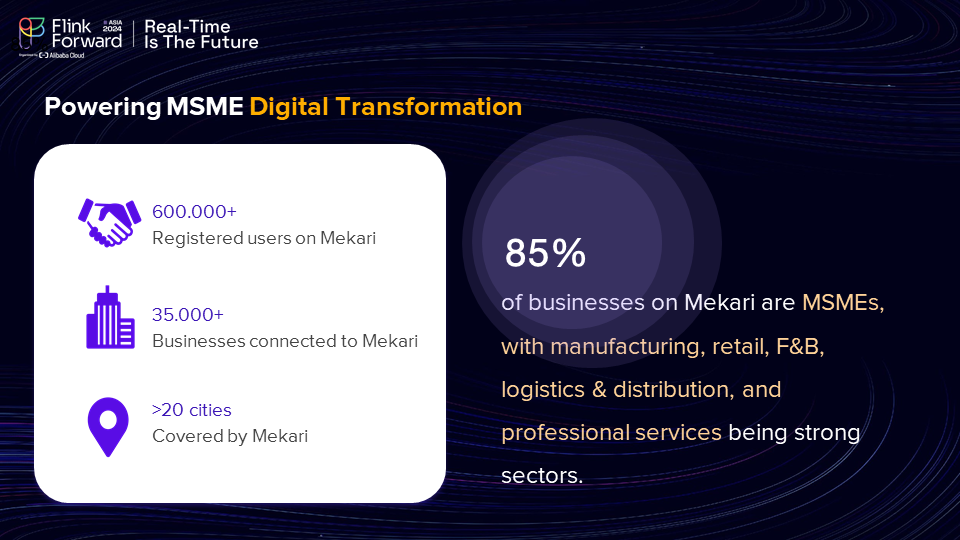

First, let me give you a brief overview of Mekari. As one of the largest companies in Indonesia, Mekari provides a range of services that support the growth of other companies across various domains. We offer six major products and services: Talenta, Jurnal, Klikpajak, Flex, Qontak, and E-sign. Each is tailored to help businesses grow and improve their operations in a specific area.

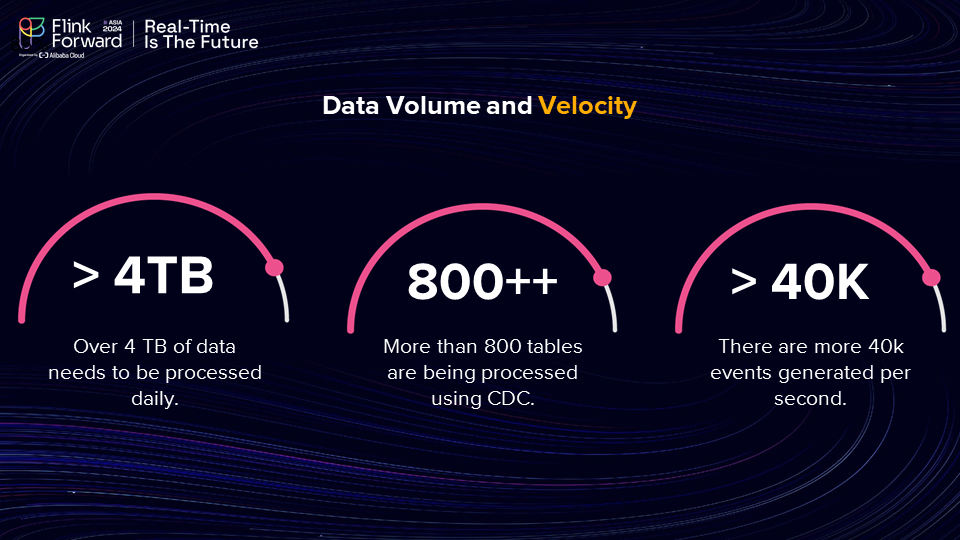

Today, Mekari has 600,000 registered users and 35,000 connected businesses, and we operate in 20 cities across Indonesia. We handle a massive amount of data, processing more than 4 terabytes (TB) per day with both real-time and batch processing to keep our products running smoothly. Because we run several products, such as Jurnal, we use Change Data Capture (CDC) to move data from the product backends into our data environment; more than 800 tables currently flow through our CDC pipeline. In addition, more than 40,000 events are generated per second, coming from CDC or from Mekari's microservices.
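
For a sense of scale, the figures above can be turned into average rates with some back-of-envelope arithmetic. The sketch below is illustrative only: it assumes decimal units (1 TB = 10^12 bytes) and that the load is spread evenly across the day, which real traffic is not, so peak rates will be higher than these averages.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def avg_bytes_per_second(bytes_per_day: float) -> float:
    """Average ingest rate, assuming data arrives evenly across the day."""
    return bytes_per_day / SECONDS_PER_DAY


def events_per_day(events_per_second: float) -> float:
    """Daily event count implied by a constant per-second event rate."""
    return events_per_second * SECONDS_PER_DAY


daily_bytes = 4 * 10**12  # 4 TB per day, in decimal terabytes
print(f"average ingest: {avg_bytes_per_second(daily_bytes) / 10**6:.1f} MB/s")
# average ingest: 46.3 MB/s
print(f"events per day: {events_per_day(40_000):,}")
# events per day: 3,456,000,000
```

In other words, roughly 46 MB/s on average and about 3.5 billion events per day.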

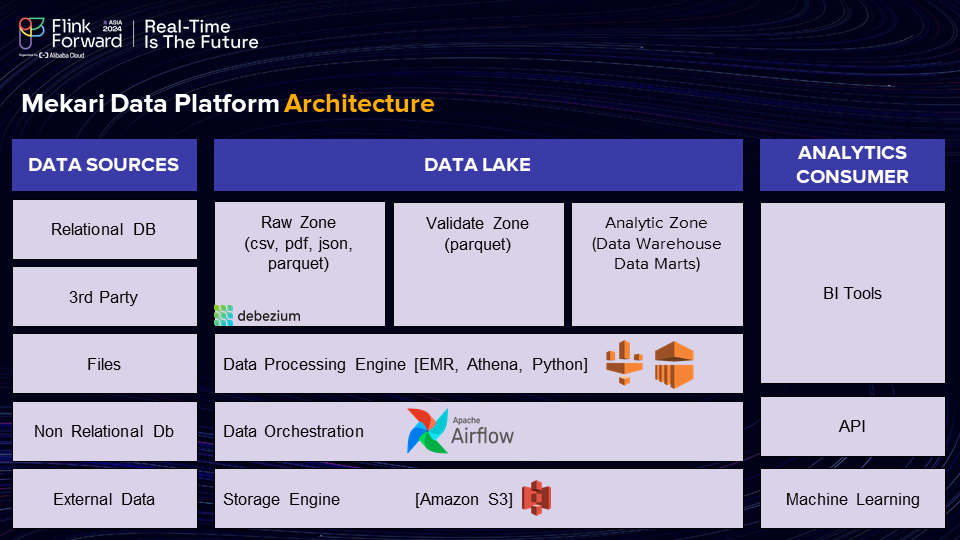

This is the legacy architecture at Mekari. Previously, we used Amazon Web Services (AWS) as our cloud provider. Our data sources include relational databases, third-party sources, files, non-relational databases, and external data. To connect the relational databases of all our products, we use CDC.

As I mentioned, we send the CDC data into our data lake, which is divided into three zones: the Raw Zone, the Validated Zone, and the Analytic Zone. The Raw Zone stores all source data in Amazon S3 in its original format. If the source data is a CSV file, it lands in the Raw Zone as a CSV file; the same goes for PDF, JSON, or Parquet.

CDC data is written to the Raw Zone as Parquet files that include the Debezium metadata. In the Validated Zone, we cleanse, standardize, and deduplicate the Raw Zone data and store the result as standardized Parquet files. From there, the BI and analytics teams build data marts and a data warehouse, using Athena to query the Validated Zone.
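
To make the standardization step more concrete, here is a minimal Python sketch of flattening one Debezium change event into a single row. It is not Mekari's Spark job; it assumes JSON-serialized events, uses the standard Debezium envelope fields (`op`, `before`, `after`, `ts_ms`), and the underscore-prefixed output columns are hypothetical names chosen for the example.

```python
import json
from typing import Any


def flatten_debezium(event_json: str) -> dict[str, Any]:
    """Flatten one Debezium change event into a single standardized row.

    Debezium's envelope carries 'op' ('c' create, 'u' update, 'd' delete,
    'r' snapshot read), the row images 'before' and 'after', and 'ts_ms'.
    """
    event = json.loads(event_json)
    # With schemas enabled, the envelope is nested under "payload".
    event = event.get("payload", event)
    op = event["op"]
    # A delete has no 'after' image, so keep the last known row from 'before'.
    row = event["before"] if op == "d" else event["after"]
    flat = dict(row)
    flat["_op"] = op  # hypothetical metadata column names
    flat["_is_deleted"] = op == "d"
    flat["_ts_ms"] = event["ts_ms"]
    return flat


update = ('{"before": {"id": 1, "name": "a"}, '
          '"after": {"id": 1, "name": "b"}, "op": "u", "ts_ms": 5}')
print(flatten_debezium(update))
# {'id': 1, 'name': 'b', '_op': 'u', '_is_deleted': False, '_ts_ms': 5}
```

Deduplication would then keep only the latest row per primary key, ordered by `_ts_ms`.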

For data processing, we use EMR, Athena, and Python. The processing from the Raw Zone to the Validated Zone (transformation, standardization, and cleansing) runs as Spark jobs on EMR, and a single large EMR cluster serves all of Mekari's products.

For orchestration, we use Airflow to manage the workflows and jobs that move data from the Raw Zone to the Validated Zone and on to the data marts and data warehouse. We use S3 as our storage layer; all the data is stored there. On the consumption side, Athena is connected to BI tools such as Tableau and Metabase. We also provide an API for external data access, so customers who want to pull data can use it, and the data science team builds predictive machine learning models from the data in our data lake.
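
The orchestration described above boils down to running tasks in dependency order: Raw before Validated, Validated before data marts. The following sketch uses Python's standard `graphlib` module (not Airflow) to show which hypothetical tasks could run in parallel at each step; in Airflow itself the same dependencies would be declared between operators, for example with `>>`.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks; each key maps to the set of tasks it depends on.
PIPELINE = {
    "validate_jurnal": {"raw_jurnal"},
    "validate_talenta": {"raw_talenta"},
    "data_mart_finance": {"validate_jurnal", "validate_talenta"},
}


def run_waves(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave can run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # pretend each task succeeded
    return waves


for i, wave in enumerate(run_waves(PIPELINE), start=1):
    print(i, wave)
# 1 ['raw_jurnal', 'raw_talenta']
# 2 ['validate_jurnal', 'validate_talenta']
# 3 ['data_mart_finance']
```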

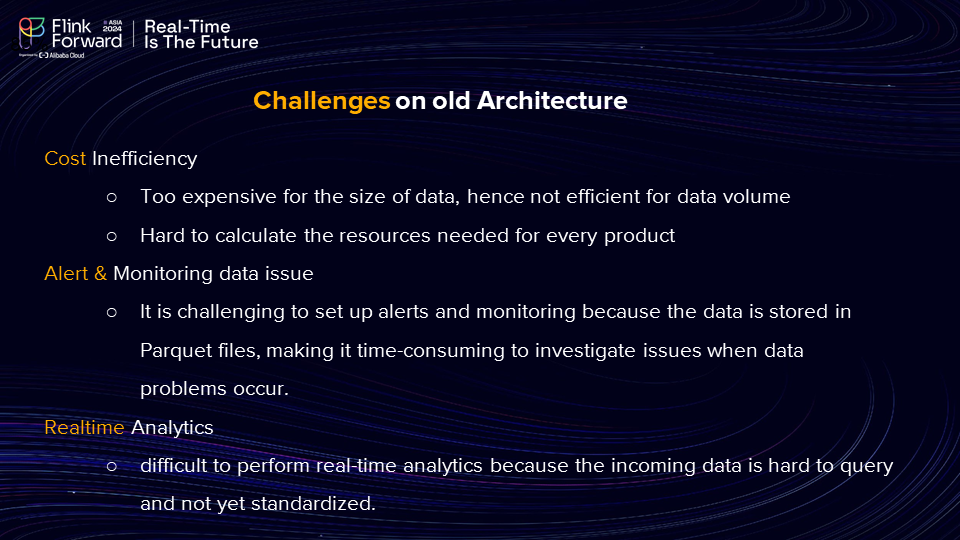

What were the challenges of the old architecture? The first is cost inefficiency. As our customer base grows, data volume grows incrementally every day, and the architecture becomes expensive whenever EMR needs more resources. Because one EMR cluster handles all processing for every product, including standardization and deduplication, we constantly have to scale it up vertically. EMR costs become very high whenever we add resources, which is not efficient when data grows every day.

A related cost problem is that it is hard to calculate the resources each product needs. As I mentioned, Mekari has several products, such as Talenta and Jurnal. Because the previous architecture had only one EMR cluster for all products, it was difficult to determine how many resources, or how much cost, it took to process data for Talenta, Jurnal, or any other product.

The second challenge is alerting and monitoring for data issues. Data discrepancies in the data warehouse and data mart layer are common. When a discrepancy appears in the data mart layer and its root cause lies in the Raw Zone, it is hard to trace, because the Raw Zone keeps data in its source format, whether CSV, PDF, JSON, or something else.

Because the data is not in a standardized format from the Raw Zone all the way to the data marts and data warehouse, it has also been hard to build monitoring for discrepancies. Maintaining data quality metrics such as completeness is important to us, but keeping quality consistent from the Raw Zone to the warehouse has been difficult.
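
One simple completeness metric is to reconcile row counts between zones. The sketch below is a hypothetical example, not Mekari's monitoring: it assumes you can already obtain, per table, the number of distinct primary keys in the Raw Zone and the number of rows in the Validated Zone, which is exactly the step that mixed file formats in the Raw Zone made hard.

```python
from dataclasses import dataclass


@dataclass
class TableCounts:
    table: str
    raw_keys: int  # distinct primary keys seen in the Raw Zone
    validated_rows: int  # rows present in the Validated Zone


def completeness(c: TableCounts) -> float:
    """Fraction of Raw Zone keys that reached the Validated Zone."""
    if c.raw_keys == 0:
        return 1.0  # nothing to load counts as complete
    return c.validated_rows / c.raw_keys


def find_discrepancies(counts: list[TableCounts],
                       tolerance: float = 0.001) -> list[tuple[str, float]]:
    """Return (table, completeness) for tables deviating from 1.0 by more than tolerance."""
    return [
        (c.table, round(completeness(c), 4))
        for c in counts
        if abs(completeness(c) - 1.0) > tolerance
    ]


counts = [
    TableCounts("jurnal.invoices", raw_keys=1000, validated_rows=1000),
    TableCounts("talenta.employees", raw_keys=500, validated_rows=480),
]
print(find_discrepancies(counts))  # [('talenta.employees', 0.96)]
```

Because the check uses the absolute deviation, it also flags a ratio above 1, for example when deduplication failed and the Validated Zone holds more rows than there are Raw Zone keys.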

The third challenge is real-time analytics. Developing real-time analytics has been difficult because real-time data is stored only in the Raw Zone, where it remains in various formats such as CSV and JSON that cannot be queried directly for analysis. The lack of standardization from the Raw Zone through to the data warehouse adds to the difficulty.

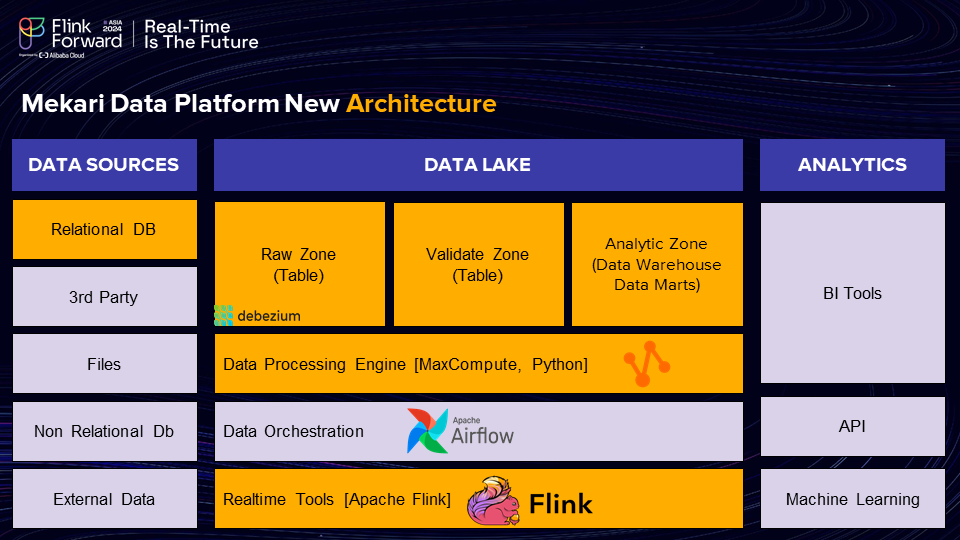

This is the new architecture we use at Mekari. We use Alibaba Cloud as our cloud provider, MaxCompute as our data store, and serverless Apache Flink on Alibaba Cloud, which is Apache Flink offered as a managed service by Alibaba Cloud.

The key difference from the legacy architecture is that all data now resides in MaxCompute tables, rather than in files queried with Athena. From the Raw Zone through to the Analytic Zone, data is stored in table format.

Using Apache Flink CDC, we connect relational databases such as MySQL and PostgreSQL to the Raw Zone. We use SQL deployments in Flink for this, which makes connecting these databases straightforward: we only need to manage the SQL deployments and write SQL scripts that synchronize data from each relational database into a Raw Zone table. Because the Raw Zone data is already structured as tables, the analytics and BI teams can query it directly.
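
With more than 800 CDC tables, the per-table SQL scripts are natural candidates for templating. The Python sketch below generates one such Flink SQL script. The helper `cdc_sync_sql`, the `src_`/`raw_` naming scheme, and all angle-bracket values are hypothetical placeholders. The source options are those of the Flink CDC `mysql-cdc` connector; the sink options are our reading of Alibaba Cloud's MaxCompute (`odps`) connector and should be checked against the current documentation before use.

```python
# Hypothetical helper: one Flink SQL script per source table.
def cdc_sync_sql(db: str, table: str, columns: dict[str, str], pk: str) -> str:
    """Build a Flink SQL script syncing one MySQL table into a Raw Zone table.

    All names and angle-bracket values are hypothetical placeholders.
    """
    cols = ",\n  ".join(f"`{name}` {sql_type}" for name, sql_type in columns.items())
    src, dst = f"src_{db}_{table}", f"raw_{db}_{table}"
    return f"""CREATE TEMPORARY TABLE {src} (
  {cols},
  PRIMARY KEY (`{pk}`) NOT ENFORCED
) WITH (
  'connector' = 'mysql-cdc',
  'hostname' = '<mysql-host>',
  'port' = '3306',
  'username' = '<user>',
  'password' = '<password>',
  'database-name' = '{db}',
  'table-name' = '{table}'
);

-- Sink options assumed from Alibaba Cloud's MaxCompute connector docs.
CREATE TEMPORARY TABLE {dst} (
  {cols},
  PRIMARY KEY (`{pk}`) NOT ENFORCED
) WITH (
  'connector' = 'odps',
  'endpoint' = '<maxcompute-endpoint>',
  'project' = '<project>',
  'tableName' = '{dst}',
  'accessId' = '<access-id>',
  'accessKey' = '<access-key>'
);

INSERT INTO {dst} SELECT * FROM {src};
"""


print(cdc_sync_sql(
    "jurnal", "invoices",
    {"id": "BIGINT", "amount": "DECIMAL(18, 2)", "updated_at": "TIMESTAMP(3)"},
    pk="id",
))
```

Depending on how the Raw Zone tables are defined in MaxCompute, the sink may need different options to handle updates and deletes.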

In the new architecture, we still have the Raw Zone, Validated Zone, and Analytic Zone, but all data in the three zones is now stored as tables. MaxCompute is our data processing engine, and we rely entirely on queries for data transformation. When moving data from the Raw Zone to the Validated Zone, we cleanse, standardize, and deduplicate it with queries. This query-driven approach streamlines the process: to change how data is processed between zones, we simply update the relevant query.
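
Deduplication by query is typically done with a window function that keeps the latest version of each key. The sketch below runs that pattern against an in-memory SQLite database purely so the example is self-contained; MaxCompute SQL also supports `ROW_NUMBER() OVER (PARTITION BY ... ORDER BY ...)`, but the table and column names here are hypothetical, and the `op <> 'd'` filter assumes deletes arrive as CDC rows carrying an operation flag.

```python
import sqlite3

# Keep only the newest version of each key and drop keys whose latest change is a delete.
# Table and column names are hypothetical; the pattern is a standard SQL window function.
DEDUP_SQL = """
SELECT id, amount, updated_ts
FROM (
  SELECT id, amount, op, updated_ts,
         ROW_NUMBER() OVER (PARTITION BY id ORDER BY updated_ts DESC) AS rn
  FROM raw_invoices
) t
WHERE rn = 1 AND op <> 'd'
ORDER BY id
"""


def validated_rows(raw_rows: list[tuple]) -> list[tuple]:
    """Load Raw Zone rows into an in-memory table and return the deduplicated result."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE raw_invoices (id INTEGER, amount REAL, op TEXT, updated_ts INTEGER)"
        )
        conn.executemany("INSERT INTO raw_invoices VALUES (?, ?, ?, ?)", raw_rows)
        return conn.execute(DEDUP_SQL).fetchall()
    finally:
        conn.close()


raw = [
    (1, 10.0, "c", 100), (1, 12.5, "u", 200),  # id 1 was updated
    (2, 7.0, "c", 150), (2, 7.0, "d", 300),    # id 2 was deleted
    (3, 5.0, "c", 120),
]
print(validated_rows(raw))  # [(1, 12.5, 200), (3, 5.0, 120)]
```

Changing the deduplication rule, for example ordering by a different timestamp column, only means editing `DEDUP_SQL`, which mirrors the query-driven approach described above.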

The new architecture offers the following advantages:

Apache Flink on Alibaba Cloud offers us the flexibility to efficiently allocate resources across our different products. For instance, if Jurnal requires additional resources, we can easily allocate more to it. Similarly, if Talenta demands more resources, we have the capability to adjust accordingly. This dynamic resource allocation in Apache Flink not only facilitates easier data scaling but also simplifies cost calculation for each product. By aligning resources with product-specific needs, we can more accurately assess and manage our expenses.
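
Once resources are allocated per product, cost attribution becomes simple multiplication instead of an estimate carved out of one shared cluster. The sketch below shows that arithmetic; the compute unit (CU) allocations and the price per CU-hour are made-up illustrative numbers, not Mekari's figures or Alibaba Cloud pricing.

```python
def cost_report(cu_by_product: dict[str, float], price_per_cu_hour: float,
                hours: float) -> dict[str, tuple[float, float]]:
    """Return {product: (cost, percent of total)} from per-product CU allocations."""
    costs = {p: cu * price_per_cu_hour * hours for p, cu in cu_by_product.items()}
    total = sum(costs.values())
    if total == 0:
        return {p: (0.0, 0.0) for p in costs}
    return {p: (round(c, 2), round(100 * c / total, 1)) for p, c in costs.items()}


# Illustrative inputs only: CUs per product and a made-up price per CU-hour.
report = cost_report({"Jurnal": 8, "Talenta": 4}, price_per_cu_hour=0.5, hours=720)
print(report)  # {'Jurnal': (2880.0, 66.7), 'Talenta': (1440.0, 33.3)}
```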

In our recent infrastructure optimization efforts, we've streamlined several processes by integrating CDC (Change Data Capture) and Apache Flink. By doing so, we have eliminated some redundant steps and simplified our workflow to focus primarily on SQL deployment. Specifically, this involves creating and deploying SQL scripts using data captured from sources like PostgreSQL, MySQL, and other relational databases, and then funneling this data into MaxCompute for further processing.

A significant enhancement in our setup is the utilization of autopilot mode in Flink's SQL deployment. This feature allows for dynamic resource scaling in response to data spikes or increased backend activities. Essentially, whenever there is a surge in data processing demands, Flink automatically adjusts the resources allocated to the SQL deployment. This capability not only ensures seamless handling of large data volumes but also simplifies server and infrastructure management for our engineering teams, offering them a more efficient and responsive system.
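
The idea behind automatic scaling can be illustrated with a simple rule: choose enough parallel tasks that each stays below a target utilization, within fixed bounds. This is a generic heuristic written for illustration, not how the autopilot mode described above actually decides; the per-task capacity, headroom, and bounds are all assumptions.

```python
import math


def target_parallelism(input_rate: float, per_task_capacity: float,
                       headroom: float = 0.75, min_p: int = 1, max_p: int = 64) -> int:
    """Smallest parallelism keeping each task at or below `headroom` of its capacity.

    Generic illustration only; every parameter here is an assumption.
    """
    needed = math.ceil(input_rate / (per_task_capacity * headroom))
    return max(min_p, min(max_p, needed))


# Hypothetical capacity of 5,000 events/s per task.
print(target_parallelism(10_000, 5_000))  # 3  (normal load)
print(target_parallelism(40_000, 5_000))  # 11 (spike)
```

Clamping to `min_p` and `max_p` keeps an idle job from scaling to zero and a runaway spike from exhausting the budget.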

In today's fast-paced business environment, many of our clients rely heavily on real-time analytics to make critical decisions, such as understanding customer churn or optimizing operations. Our new architecture now lets us deliver these insights seamlessly. By using Flink for real-time data transformation and running Flink SQL queries directly on data captured from our relational databases, we feed insights straight into our Analytic Zone, including data marts and data warehouses. This not only accelerates decision-making but also helps stakeholders drive company growth through actionable, real-time analytics.
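
Many real-time metrics, such as signups or churn events per minute, reduce to counting events in fixed, non-overlapping time windows, which is what a tumbling-window aggregation in Flink SQL computes. The batch Python sketch below shows only the window arithmetic; it ignores out-of-order events and watermarks, which Flink handles, and the event names are hypothetical.

```python
from collections import Counter


def tumbling_counts(events: list[tuple[int, str]],
                    window_ms: int = 60_000) -> dict[tuple[int, str], int]:
    """Count events per (window_start_ms, event_type) in fixed, non-overlapping windows."""
    counts: Counter = Counter()
    for ts_ms, event_type in events:
        window_start = ts_ms - ts_ms % window_ms  # align to the start of its window
        counts[(window_start, event_type)] += 1
    return dict(sorted(counts.items()))


events = [(1_000, "signup"), (59_999, "signup"), (60_000, "churn"), (61_000, "signup")]
print(tumbling_counts(events))
# {(0, 'signup'): 2, (60000, 'churn'): 1, (60000, 'signup'): 1}
```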

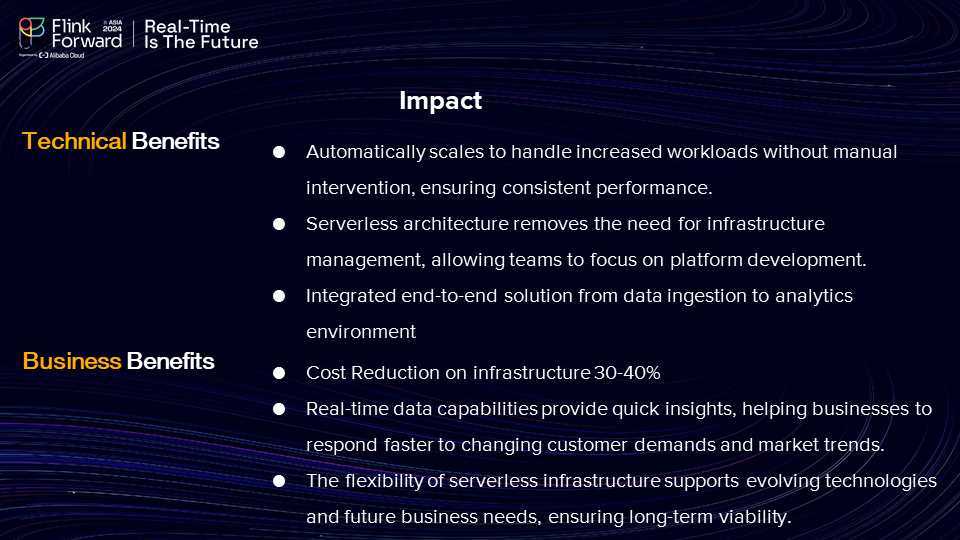

From a technical standpoint, the benefits are substantial. Flink's automatic scaling simplifies workload management, minimizing the need for constant job monitoring and troubleshooting.

Additionally, the serverless architecture removes the burden of infrastructure management. We can quickly deploy SQL scripts as SQL deployments without building complex monitoring alerts ourselves; instead, we use the monitoring capabilities built into Flink and MaxCompute. This lets our team concentrate on platform development and usability, and our customers benefit from faster data access and processing.

Third, we use integrated, end-to-end Alibaba Cloud services to move data seamlessly from the relational databases to our Analytic Zone. This includes centralized monitoring and alerting, so we can promptly detect data issues or anomalies, whether they originate in the Raw Zone, the Validated Zone, or the CDC infrastructure. This approach keeps our data management robust and reliable.
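
A typical centralized alerting rule is a freshness check: flag any zone whose most recent successful load is older than an allowed lag. The sketch below is a hypothetical illustration of such a rule, not the Alibaba Cloud monitoring the team uses; how the latest load timestamps are collected is left as an assumption.

```python
from datetime import datetime, timedelta, timezone


def stale_zones(latest_load: dict[str, datetime], now: datetime,
                max_lag: timedelta) -> list[str]:
    """Return the zones whose most recent load is older than max_lag."""
    return sorted(zone for zone, loaded_at in latest_load.items()
                  if now - loaded_at > max_lag)


now = datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc)
latest = {  # hypothetical last-load timestamps per zone
    "cdc": now - timedelta(seconds=90),
    "raw": now - timedelta(minutes=5),
    "validated": now - timedelta(minutes=40),
    "analytic": now - timedelta(hours=3),
}
print(stale_zones(latest, now, max_lag=timedelta(hours=1)))  # ['analytic']
```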

From a business perspective, the new architecture offers significant benefits. It has reduced infrastructure costs by approximately 30% to 40%. Resource usage is optimized across all products, so resources are shared efficiently rather than allocated separately for each product. We also keep Flink compute units (CUs) well managed, which has let us achieve cost reductions of up to 40%. Our data readiness has also improved by about 40%: data that used to be available by 8 AM is now ready as early as 6 AM, giving our customers earlier access to the data.

The next benefit is real-time data capability. We can now deliver quick insights through real-time analytics, and we work with the BI team to build real-time dashboards. Real-time data helps the company grow by enabling faster decisions based on accurate, up-to-date data.

The third benefit is the flexibility of serverless infrastructure. Because our serverless infrastructure on Alibaba Cloud is so flexible, we can now focus on building platforms for our customers and users. It also lets us focus on long-term feasibility, identify opportunities for growth, and support other evolving technologies at Mekari.

That concludes my overview of how we moved Mekari from the legacy architecture to the new one. I hope it gives you some useful new insights. If you have any questions or want to discuss anything, feel free to reach out to me by email or on social media; I am happy to share everything we did to improve our architecture. Thank you, everyone.

To summarize, at Mekari we have made significant improvements by moving from our legacy architecture to a new, more efficient architecture on Alibaba Cloud. This update not only optimizes our operations but also provides better service for our users. I hope this gives you valuable insights into our progress. Thank you for your interest and support!
