By Alex Mungai Muchiri, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

The Kubernetes system manages containerized applications in clustered environments and handles an application's entire lifecycle, from deployment to scaling. We have previously looked at Kubernetes basics; in this session, we look at how to get started with Kubernetes on CoreOS. This tutorial demonstrates Kubernetes 1.5.1, but keep in mind that versions change frequently. To see your installed version, run `kubectl version`.

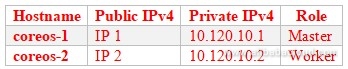

We start with a basic CoreOS cluster. Alibaba Cloud already provides configurable clusters, so we will not dwell on the details. The cluster needs at least one master node and one worker node. We assign the nodes specialized roles within Kubernetes, but the machines themselves are otherwise interchangeable. One of the nodes, the master, runs the controller manager and the API server.

This tutorial is based on the implementation guides available on the CoreOS website. With your CoreOS cluster set up, let us proceed. Our sample cluster uses two bare-metal nodes.

Node 1 will be the master. Start by connecting to it over SSH.

Create a directory called certs and change into it:

```
$ mkdir certs
$ cd certs
```

Paste the script below into the certs directory as cert_generator.sh:

```bash
#!/bin/bash
# Generate the TLS assets for the cluster: a CA, the API server certificate,
# one certificate per worker node, and an admin certificate.
# Stop at the first failing command.
set -euo pipefail

echo "Creating CA ROOT**********************************************************************"
openssl genrsa -out ca-key.pem 2048
openssl req -x509 -new -nodes -key ca-key.pem -days 10000 -out ca.pem -subj "/CN=kube-ca"

echo "Creating API Server certs*************************************************************"
echo "Enter Master Node IP address:"
read -r master_Ip

# The API server certificate must be valid for the in-cluster service names,
# the first IP of the service range (10.3.0.1) and the master's IP.
cat > openssl.cnf <<EOF
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster.local
IP.1 = 10.3.0.1
IP.2 = ${master_Ip}
EOF

openssl genrsa -out apiserver-key.pem 2048
openssl req -new -key apiserver-key.pem -out apiserver.csr -subj "/CN=kube-apiserver" -config openssl.cnf
openssl x509 -req -in apiserver.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out apiserver.pem -days 365 -extensions v3_req -extfile openssl.cnf

echo "Creating worker node certs"
# \$ENV::WORKER_IP is escaped so that openssl, not the shell, reads WORKER_IP when signing.
cat > worker-openssl.cnf <<EOF
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
IP.1 = \$ENV::WORKER_IP
EOF

echo "Enter the number of worker nodes:"
read -r worker_node_num

for (( c=1; c<=worker_node_num; c++ )); do
  echo "Enter the IP Address for the worker node_$c :"
  read -r ip
  openssl genrsa -out "kube-$c-worker-key.pem" 2048
  WORKER_IP=$ip openssl req -new -key "kube-$c-worker-key.pem" -out "kube-$c-worker.csr" -subj "/CN=kube-$c" -config worker-openssl.cnf
  WORKER_IP=$ip openssl x509 -req -in "kube-$c-worker.csr" -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out "kube-$c-worker.pem" -days 365 -extensions v3_req -extfile worker-openssl.cnf
done

echo "Creating Admin certs********************************************************"
openssl genrsa -out admin-key.pem 2048
openssl req -new -key admin-key.pem -out admin.csr -subj "/CN=kube-admin"
openssl x509 -req -in admin.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out admin.pem -days 365

echo "DONE !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"
```

Make the script executable:

```
$ chmod +x cert_generator.sh
$ ./cert_generator.sh
```

When the script runs, it prompts you for the master's IP address, the number of worker nodes, and each worker's IP address. It then generates the certificates for your Kubernetes installation.
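Before copying anything to the nodes, it may be worth confirming that the script produced every file the later steps rely on. The sketch below assumes the file names used by cert_generator.sh above. The function name check_cert_outputs is hypothetical. It prints each missing or empty file and returns a non-zero status if anything is absent.

```bash
# Sketch: report files that cert_generator.sh should have produced but did not.
# Assumes the file names used by the script in this tutorial; adjust if you changed them.
# check_cert_outputs is a hypothetical helper.
# Usage: check_cert_outputs DIR WORKER_COUNT
check_cert_outputs() {
  local dir=$1 workers=$2 missing=0 f c
  local expected=(ca.pem ca-key.pem apiserver.pem apiserver-key.pem admin.pem admin-key.pem)
  # One certificate/key pair per worker, numbered as in the script's loop.
  for (( c = 1; c <= workers; c++ )); do
    expected+=("kube-$c-worker.pem" "kube-$c-worker-key.pem")
  done
  for f in "${expected[@]}"; do
    # -s: the file exists and is not empty.
    if [[ ! -s "$dir/$f" ]]; then
      echo "missing or empty: $dir/$f"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, from inside the certs directory with one worker: `check_cert_outputs . 1 && echo "all certificates present"`.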

Create a directory to hold the generated keys:

```
$ sudo mkdir -p /etc/kubernetes/ssl
```

Copy the following certificates from certs to /etc/kubernetes/ssl:

```
/etc/kubernetes/ssl/ca.pem
/etc/kubernetes/ssl/apiserver.pem
/etc/kubernetes/ssl/apiserver-key.pem
```

Next, configure flannel. Flannel reads its local configuration from /etc/flannel/options.env and its cluster-level configuration from etcd. Create /etc/flannel/options.env with the contents below.

Replace ${ADVERTISE_IP} with this machine's public IP and ${ETCD_ENDPOINTS} with your etcd endpoints:

```
FLANNELD_IFACE=${ADVERTISE_IP}
FLANNELD_ETCD_ENDPOINTS=${ETCD_ENDPOINTS}
```

Then create the drop-in below at /etc/systemd/system/flanneld.service.d/40-ExecStartPre-symlink.conf. This makes flannel use the configuration above when it starts:

```ini
[Service]
ExecStartPre=/usr/bin/ln -sf /etc/flannel/options.env /run/flannel/options.env
```

Docker must be configured to use flannel so that flannel manages the cluster's pod network. This means flannel has to start before Docker. Apply a systemd drop-in at /etc/systemd/system/docker.service.d/40-flannel.conf:

```ini
[Unit]
Requires=flanneld.service
After=flanneld.service

[Service]
EnvironmentFile=/etc/kubernetes/cni/docker_opts_cni.env
```

Create the Docker CNI options file, /etc/kubernetes/cni/docker_opts_cni.env:

```
DOCKER_OPT_BIP=""
DOCKER_OPT_IPMASQ=""
```

If you are using flannel networking, create the flannel CNI configuration at /etc/kubernetes/cni/net.d/10-flannel.conf:

```json
{
  "name": "podnet",
  "type": "flannel",
  "delegate": {
    "isDefaultGateway": true
  }
}
```

The kubelet is responsible for starting and stopping pods and for other machine-level tasks. It communicates with the API server running on the master node, using the TLS certificates we installed earlier.
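The kubelet unit below, like several files in this tutorial, contains ${...} placeholders. systemd expands ${...} references in ExecStart= from the unit's own environment, so leftover placeholders would not receive the values you intend. Substituting literal values before installing each file avoids this. Below is a minimal bash sketch. It assumes you keep an unedited copy of each file as a template. The function render_template and the .tmpl naming are hypothetical.

```bash
# Sketch: replace ${NAME} placeholders in a template file with literal values.
# Only the placeholders passed as NAME=VALUE are replaced; all other text is copied as-is.
# render_template is a hypothetical helper.
# Usage: render_template TEMPLATE OUTPUT NAME=VALUE...
render_template() {
  local src=$1 dst=$2 pair name value placeholder content
  shift 2
  content=$(<"$src")
  for pair in "$@"; do
    name=${pair%%=*}
    value=${pair#*=}
    # Build the literal text "${NAME}" and replace every occurrence.
    placeholder='${'"$name"'}'
    # Quoting pattern and replacement makes both literal (no globbing, no "&" expansion).
    content=${content//"$placeholder"/"$value"}
  done
  printf '%s\n' "$content" > "$dst"
}
```

For example, run as root: `render_template kubelet.service.tmpl /etc/systemd/system/kubelet.service ADVERTISE_IP=<this node's IP> DNS_SERVICE_IP=10.3.0.10`. Values may contain slashes, so URLs such as etcd endpoints can be substituted the same way.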

Create /etc/systemd/system/kubelet.service, replacing ${ADVERTISE_IP} with this node's public IP and ${DNS_SERVICE_IP} with 10.3.0.10:

```ini
[Service]
Environment=KUBELET_VERSION=v1.5.1_coreos.0
Environment="RKT_OPTS=--uuid-file-save=/var/run/kubelet-pod.uuid \
  --volume var-log,kind=host,source=/var/log \
  --mount volume=var-log,target=/var/log \
  --volume dns,kind=host,source=/etc/resolv.conf \
  --mount volume=dns,target=/etc/resolv.conf"
ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/manifests
ExecStartPre=/usr/bin/mkdir -p /var/log/containers
ExecStartPre=-/usr/bin/rkt rm --uuid-file=/var/run/kubelet-pod.uuid
ExecStart=/usr/lib/coreos/kubelet-wrapper \
  --api-servers=http://127.0.0.1:8080 \
  --register-schedulable=false \
  --cni-conf-dir=/etc/kubernetes/cni/net.d \
  --network-plugin=cni \
  --container-runtime=docker \
  --allow-privileged=true \
  --pod-manifest-path=/etc/kubernetes/manifests \
  --hostname-override=${ADVERTISE_IP} \
  --cluster_dns=${DNS_SERVICE_IP} \
  --cluster_domain=cluster.local
ExecStop=-/usr/bin/rkt stop --uuid-file=/var/run/kubelet-pod.uuid
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target
```

The API server handles most of the cluster's workload and is where most activity takes place. It is stateless: it handles requests, returns responses, and stores results in etcd when necessary.
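The API server's service network is set in the manifest below through ${SERVICE_IP_RANGE} (10.3.0.0/24). It has to contain two addresses used elsewhere in this tutorial. The first is 10.3.0.1, which the certificate script writes into the API server certificate because the API server's own kubernetes service takes the first address of the range. The second is the DNS service IP 10.3.0.10 passed to the kubelet. The sketch below is pure bash and IPv4 only. It checks both addresses against the range. The helper names ip_to_int and ip_in_cidr are hypothetical.

```bash
# Sketch (IPv4 only, pure bash): check that addresses used in this tutorial fall
# inside the service IP range. ip_to_int and ip_in_cidr are hypothetical helpers.

# Print a dotted-quad IPv4 address as a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  # 10# forces base 10 so that octets such as "08" are not read as octal.
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Exit status 0 if IP ($1) lies inside the CIDR block NET/PREFIX ($2).
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Keep the top PREFIX bits; bash arithmetic is 64-bit, so mask back to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

SERVICE_IP_RANGE=10.3.0.0/24
# 10.3.0.1: IP.1 in the API server certificate; 10.3.0.10: DNS_SERVICE_IP.
for addr in 10.3.0.1 10.3.0.10; do
  if ip_in_cidr "$addr" "$SERVICE_IP_RANGE"; then
    echo "$addr is inside $SERVICE_IP_RANGE"
  else
    echo "WARNING: $addr is outside $SERVICE_IP_RANGE"
  fi
done
```

With the tutorial's values, both addresses are reported as inside 10.3.0.0/24. If you choose a different service range, adjust IP.1 in the certificate script and DNS_SERVICE_IP accordingly.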

Create /etc/kubernetes/manifests/kube-apiserver.yaml. Replace ${ETCD_ENDPOINTS} with your etcd endpoints (your CoreOS hosts), ${SERVICE_IP_RANGE} with 10.3.0.0/24, and ${ADVERTISE_IP} with this node's public IP:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  hostNetwork: true
  containers:
  - name: kube-apiserver
    image: quay.io/coreos/hyperkube:v1.5.1_coreos.0
    command:
    - /hyperkube
    - apiserver
    - --bind-address=0.0.0.0
    - --etcd-servers=${ETCD_ENDPOINTS}
    - --allow-privileged=true
    - --service-cluster-ip-range=${SERVICE_IP_RANGE}
    - --secure-port=443
    - --advertise-address=${ADVERTISE_IP}
    - --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota
    - --tls-cert-file=/etc/kubernetes/ssl/apiserver.pem
    - --tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem
    - --client-ca-file=/etc/kubernetes/ssl/ca.pem
    - --service-account-key-file=/etc/kubernetes/ssl/apiserver-key.pem
    - --runtime-config=extensions/v1beta1/networkpolicies=true
    - --anonymous-auth=false
    livenessProbe:
      httpGet:
        host: 127.0.0.1
        port: 8080
        path: /healthz
      initialDelaySeconds: 15
      timeoutSeconds: 15
    ports:
    - containerPort: 443
      hostPort: 443
      name: https
    - containerPort: 8080
      hostPort: 8080
      name: local
    volumeMounts:
    - mountPath: /etc/kubernetes/ssl
      name: ssl-certs-kubernetes
      readOnly: true
    - mountPath: /etc/ssl/certs
      name: ssl-certs-host
      readOnly: true
  volumes:
  - hostPath:
      path: /etc/kubernetes/ssl
    name: ssl-certs-kubernetes
  - hostPath:
      path: /usr/share/ca-certificates
    name: ssl-certs-host
```

As with the API server, we run kube-proxy as a pod. It directs traffic to services and pods and stays up to date by communicating regularly with the API server. The proxy runs on both master and worker nodes in the cluster.

Create /etc/kubernetes/manifests/kube-proxy.yaml. This file needs no changes:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-proxy
  namespace: kube-system
spec:
  hostNetwork: true
  containers:
  - name: kube-proxy
    image: quay.io/coreos/hyperkube:v1.5.1_coreos.0
    command:
    - /hyperkube
    - proxy
    - --master=http://127.0.0.1:8080
    securityContext:
      privileged: true
    volumeMounts:
    - mountPath: /etc/ssl/certs
      name: ssl-certs-host
      readOnly: true
  volumes:
  - hostPath:
      path: /usr/share/ca-certificates
    name: ssl-certs-host
```

Create /etc/kubernetes/manifests/kube-controller-manager.yaml, which uses the TLS certificates on disk:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-controller-manager
  namespace: kube-system
spec:
  hostNetwork: true
  containers:
  - name: kube-controller-manager
    image: quay.io/coreos/hyperkube:v1.5.1_coreos.0
    command:
    - /hyperkube
    - controller-manager
    - --master=http://127.0.0.1:8080
    - --leader-elect=true
    - --service-account-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem
    - --root-ca-file=/etc/kubernetes/ssl/ca.pem
    resources:
      requests:
        cpu: 200m
    livenessProbe:
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10252
      initialDelaySeconds: 15
      timeoutSeconds: 15
    volumeMounts:
    - mountPath: /etc/kubernetes/ssl
      name: ssl-certs-kubernetes
      readOnly: true
    - mountPath: /etc/ssl/certs
      name: ssl-certs-host
      readOnly: true
  volumes:
  - hostPath:
      path: /etc/kubernetes/ssl
    name: ssl-certs-kubernetes
  - hostPath:
      path: /usr/share/ca-certificates
    name: ssl-certs-host
```

Next, set up the scheduler. It watches the API for unscheduled pods, assigns them to machines, and updates the API with its decision.
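The scheduler manifest below is the last of the four static pod manifests on the master. The kubelet starts whatever it finds in /etc/kubernetes/manifests (its --pod-manifest-path), so a missing file simply means a missing component. The small sketch below lists any manifest that is not yet in place before you start the kubelet. The function name is hypothetical, and the file names are the ones used in this tutorial.

```bash
# Sketch: print the master's static pod manifests that are still missing.
# missing_manifests is a hypothetical helper; the file names follow this tutorial.
# Usage: missing_manifests [DIR]   (defaults to /etc/kubernetes/manifests)
missing_manifests() {
  local dir=${1:-/etc/kubernetes/manifests} m status=0
  for m in kube-apiserver.yaml kube-proxy.yaml kube-controller-manager.yaml kube-scheduler.yaml; do
    if [[ ! -f "$dir/$m" ]]; then
      echo "$m"
      status=1
    fi
  done
  # Non-zero when at least one manifest is missing.
  return "$status"
}
```

For example: `missing_manifests || echo "create the files listed above before starting the kubelet"`.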

Create /etc/kubernetes/manifests/kube-scheduler.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-scheduler
  namespace: kube-system
spec:
  hostNetwork: true
  containers:
  - name: kube-scheduler
    image: quay.io/coreos/hyperkube:v1.5.1_coreos.0
    command:
    - /hyperkube
    - scheduler
    - --master=http://127.0.0.1:8080
    - --leader-elect=true
    resources:
      requests:
        cpu: 100m
    livenessProbe:
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10251
      initialDelaySeconds: 15
      timeoutSeconds: 15
```

Tell systemd to rescan its unit files so it picks up the changes we made:

```
$ sudo systemctl daemon-reload
```

As mentioned earlier, etcd stores flannel's cluster-level configuration, so we now set the IP range for the pod network. If etcd is already running, now is the right time to set it; otherwise, start etcd first.
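Before writing the key with the curl command that follows, it can help to confirm that the pod network does not overlap the service range (10.3.0.0/24). Overlapping ranges would give pods and services conflicting addresses. The sketch below is pure bash and IPv4 only. It performs that check and prints the JSON value the curl command stores. The helper names are hypothetical, and vxlan is the backend type used in this tutorial.

```bash
# Sketch (IPv4 only, pure bash): make sure POD_NETWORK and SERVICE_IP_RANGE do not
# overlap, then print the JSON value the curl command stores in etcd.
# ip_to_int, cidrs_overlap and flannel_config_json are hypothetical helpers.

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Exit status 0 if the CIDR blocks $1 and $2 share at least one address.
# Two blocks overlap exactly when they agree on the shorter of their two prefixes.
cidrs_overlap() {
  local a_net b_net len mask
  a_net=$(ip_to_int "${1%/*}")
  b_net=$(ip_to_int "${2%/*}")
  len=$(( ${1#*/} < ${2#*/} ? ${1#*/} : ${2#*/} ))
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( (a_net & mask) == (b_net & mask) ))
}

# Print the flannel network config in the format used by the curl command.
flannel_config_json() {
  printf '{"Network":"%s","Backend":{"Type":"%s"}}\n' "$1" "$2"
}

POD_NETWORK=10.2.0.0/16
SERVICE_IP_RANGE=10.3.0.0/24
if cidrs_overlap "$POD_NETWORK" "$SERVICE_IP_RANGE"; then
  echo "ERROR: $POD_NETWORK overlaps $SERVICE_IP_RANGE" >&2
else
  flannel_config_json "$POD_NETWORK" vxlan
fi
```

With the tutorial's values this prints {"Network":"10.2.0.0/16","Backend":{"Type":"vxlan"}}, the same value the curl command builds. You could also pass it directly as -d "value=$(flannel_config_json "$POD_NETWORK" vxlan)".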

Replace $POD_NETWORK with 10.2.0.0/16 and $ETCD_SERVER with one URL (http://ip:port) taken from $ETCD_ENDPOINTS:

```
$ curl -X PUT -d "value={\"Network\":\"$POD_NETWORK\",\"Backend\":{\"Type\":\"vxlan\"}}" "$ETCD_SERVER/v2/keys/coreos.com/network/config"
```

Start flannel so the change takes effect, and enable it at boot. Because Docker's drop-in requires flanneld, this may also restart the Docker daemon and affect running containers:

```
$ sudo systemctl start flanneld
$ sudo systemctl enable flanneld
```

With everything configured, the kubelet is ready to start. It in turn brings up the API server, controller manager, scheduler, and proxy from their static pod manifests:

```
$ sudo systemctl start kubelet
```

Make sure the kubelet starts after reboots:

```
$ sudo systemctl enable kubelet
```

On the worker node, begin by creating a directory for the TLS keys generated earlier:

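```
$ sudo mkdir -p /etc/kubernetes/ssl
```

Copy the certificates from certs (transferred from the master) into /etc/kubernetes/ssl. The worker's kubelet expects its certificate and key as worker.pem and worker-key.pem. Copy the files the script generated for this node under those names (for the first worker, kube-1-worker.pem and kube-1-worker-key.pem):

```
/etc/kubernetes/ssl/ca.pem
/etc/kubernetes/ssl/worker.pem
/etc/kubernetes/ssl/worker-key.pem
```

As on the master, flannel sources its local configuration from /etc/flannel/options.env, while etcd stores the cluster-level configuration. Create the same file on the worker: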

Replace ${ADVERTISE_IP} with this machine's public IP and ${ETCD_ENDPOINTS} with your etcd endpoints:

```
FLANNELD_IFACE=${ADVERTISE_IP}
FLANNELD_ETCD_ENDPOINTS=${ETCD_ENDPOINTS}
```

Create a drop-in at /etc/systemd/system/flanneld.service.d/40-ExecStartPre-symlink.conf so that flannel uses this configuration when it starts:

```ini
[Service]
ExecStartPre=/usr/bin/ln -sf /etc/flannel/options.env /run/flannel/options.env
```

Next, configure Docker to use flannel so that flannel manages the cluster's pod network. As on the master, flannel must start before Docker. Apply a systemd drop-in at /etc/systemd/system/docker.service.d/40-flannel.conf:

```ini
[Unit]
Requires=flanneld.service
After=flanneld.service

[Service]
EnvironmentFile=/etc/kubernetes/cni/docker_opts_cni.env
```

Create the Docker CNI options file, /etc/kubernetes/cni/docker_opts_cni.env:

```
DOCKER_OPT_BIP=""
DOCKER_OPT_IPMASQ=""
```

If you are using flannel networking, create the flannel CNI configuration at /etc/kubernetes/cni/net.d/10-flannel.conf:

```json
{
  "name": "podnet",
  "type": "flannel",
  "delegate": {
    "isDefaultGateway": true
  }
}
```

On the worker node, create the kubelet service.

Create /etc/systemd/system/kubelet.service. Replace ${ADVERTISE_IP} with this node's public IP, ${DNS_SERVICE_IP} with 10.3.0.10, and ${MASTER_HOST} with the address of the master's API server:

```ini
[Service]
Environment=KUBELET_VERSION=v1.5.1_coreos.0
Environment="RKT_OPTS=--uuid-file-save=/var/run/kubelet-pod.uuid \
  --volume dns,kind=host,source=/etc/resolv.conf \
  --mount volume=dns,target=/etc/resolv.conf \
  --volume var-log,kind=host,source=/var/log \
  --mount volume=var-log,target=/var/log"
ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/manifests
ExecStartPre=/usr/bin/mkdir -p /var/log/containers
ExecStartPre=-/usr/bin/rkt rm --uuid-file=/var/run/kubelet-pod.uuid
ExecStart=/usr/lib/coreos/kubelet-wrapper \
  --api-servers=${MASTER_HOST} \
  --cni-conf-dir=/etc/kubernetes/cni/net.d \
  --network-plugin=cni \
  --container-runtime=docker \
  --register-node=true \
  --allow-privileged=true \
  --pod-manifest-path=/etc/kubernetes/manifests \
  --hostname-override=${ADVERTISE_IP} \
  --cluster_dns=${DNS_SERVICE_IP} \
  --cluster_domain=cluster.local \
  --kubeconfig=/etc/kubernetes/worker-kubeconfig.yaml \
  --tls-cert-file=/etc/kubernetes/ssl/worker.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/worker-key.pem
ExecStop=-/usr/bin/rkt stop --uuid-file=/var/run/kubelet-pod.uuid
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target
```

Create /etc/kubernetes/manifests/kube-proxy.yaml. The only value to replace in it is ${MASTER_HOST}:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-proxy
  namespace: kube-system
spec:
  hostNetwork: true
  containers:
  - name: kube-proxy
    image: quay.io/coreos/hyperkube:v1.5.1_coreos.0
    command:
    - /hyperkube
    - proxy
    - --master=${MASTER_HOST}
    - --kubeconfig=/etc/kubernetes/worker-kubeconfig.yaml
    securityContext:
      privileged: true
    volumeMounts:
    - mountPath: /etc/ssl/certs
      name: "ssl-certs"
    - mountPath: /etc/kubernetes/worker-kubeconfig.yaml
      name: "kubeconfig"
      readOnly: true
    - mountPath: /etc/kubernetes/ssl
      name: "etc-kube-ssl"
      readOnly: true
  volumes:
  - name: "ssl-certs"
    hostPath:
      path: "/usr/share/ca-certificates"
  - name: "kubeconfig"
    hostPath:
      path: "/etc/kubernetes/worker-kubeconfig.yaml"
  - name: "etc-kube-ssl"
    hostPath:
      path: "/etc/kubernetes/ssl"
```

Kubernetes components use a kubeconfig file to define their authentication settings so that they can communicate securely. Here, the kubelet and the proxy read this configuration to communicate with the API server.
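The kubeconfig below and the worker's kubelet unit both point at /etc/kubernetes/ssl/worker.pem and worker-key.pem. The generator script, however, names each worker's files kube-N-worker.pem and kube-N-worker-key.pem. The sketch below stages one worker's files under the expected names, assuming the certs directory has already been copied to the worker (for example with scp). The helper install_worker_certs is hypothetical. Run it as root when the destination is /etc/kubernetes/ssl.

```bash
# Sketch: copy one worker's certificates under the names the kubelet unit and
# worker-kubeconfig.yaml expect (worker.pem, worker-key.pem). The private key is
# installed readable by its owner only (mode 0600).
# install_worker_certs is a hypothetical helper.
# Usage: install_worker_certs SRC_DIR DEST_DIR WORKER_NUMBER
install_worker_certs() {
  local src=$1 dest=$2 n=$3
  # Stop at the first failure (for example, a missing kube-N-worker file).
  mkdir -p "$dest" &&
    install -m 0644 "$src/ca.pem" "$dest/ca.pem" &&
    install -m 0644 "$src/kube-$n-worker.pem" "$dest/worker.pem" &&
    install -m 0600 "$src/kube-$n-worker-key.pem" "$dest/worker-key.pem"
}
```

For the first worker this would be `install_worker_certs ~/certs /etc/kubernetes/ssl 1`.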

Create /etc/kubernetes/worker-kubeconfig.yaml:

```yaml
apiVersion: v1
kind: Config
clusters:
- name: local
  cluster:
    certificate-authority: /etc/kubernetes/ssl/ca.pem
users:
- name: kubelet
  user:
    client-certificate: /etc/kubernetes/ssl/worker.pem
    client-key: /etc/kubernetes/ssl/worker-key.pem
contexts:
- context:
    cluster: local
    user: kubelet
  name: kubelet-context
current-context: kubelet-context
```

The worker services are now ready to start.
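Before starting them, you may want to confirm that every certificate path referenced in the kubeconfig actually exists, since a misnamed file is an easy mistake at this point. The sketch below uses a simple line-based match on the three keys this tutorial's kubeconfig uses. It is not a general YAML parser and assumes paths contain no spaces. The function name is hypothetical.

```bash
# Sketch: report certificate paths in a kubeconfig that do not exist on disk.
# Line-based match on the keys used in this tutorial; not a general YAML parser,
# and paths containing spaces are not supported. check_kubeconfig_paths is hypothetical.
# Usage: check_kubeconfig_paths [FILE]   (defaults to the worker kubeconfig)
check_kubeconfig_paths() {
  local file=${1:-/etc/kubernetes/worker-kubeconfig.yaml} key path status=0
  # Split each line at the first ':'; also handle a last line without a newline.
  while IFS=: read -r key path || [[ -n $key ]]; do
    key=${key//[[:space:]]/}
    path=${path//[[:space:]]/}
    case $key in
      certificate-authority|client-certificate|client-key)
        if [[ ! -f "$path" ]]; then
          echo "$key: $path not found"
          status=1
        fi
        ;;
    esac
  done < "$file"
  return "$status"
}
```

Run it on the worker as `check_kubeconfig_paths`. No output and a zero exit status mean all three files are in place.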

Tell systemd to rescan its unit files so it picks up the changes we made:

```
$ sudo systemctl daemon-reload
```

Start flannel and the kubelet; the kubelet in turn starts the proxy:

```
$ sudo systemctl start flanneld
$ sudo systemctl start kubelet
```

Make sure both services start on every boot:

```
$ sudo systemctl enable flanneld
Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /etc/systemd/system/flanneld.service.
$ sudo systemctl enable kubelet
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.
```
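Components take a little while to come up after systemctl start, so a small retry loop is handy in setup scripts that must wait for a component before continuing. The sketch below retries any command until it succeeds or the attempts run out. The helper wait_for is hypothetical.

```bash
# Sketch: retry a command until it succeeds or the attempts run out.
# wait_for is a hypothetical helper.
# Usage: wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    # No need to sleep after the final attempt.
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  echo "gave up after $attempts attempts: $*" >&2
  return 1
}
```

On a worker, assuming the kubelet's default health port 10248 has not been changed, you could run `wait_for 30 2 curl -sf http://127.0.0.1:10248/healthz`. On the master, the same helper can poll the API server's local port used by the manifests: `wait_for 30 2 curl -sf http://127.0.0.1:8080/healthz`.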

To check the health of the kubelet systemd unit we created, run systemctl status kubelet.service.

If you have made it this far, congratulations! Kubernetes is now configured to run on your CoreOS cluster. For more on Kubernetes, see the other articles in this series. As a reminder, adapt the values in this tutorial, especially the IP addresses, to your own cluster. Cheers!

