By Tongshu

Databases are important infrastructure in enterprises' IT systems. They store large amounts of data, and oftentimes valuable data, such as transaction data, customer data, and order data. Because of this, databases typically assume the role of "data producer" in enterprises. When data gets accumulated in databases over a long period, these databases will become a huge treasure trove for businesses. However, with increasing data volumes and diverse data formats in their databases, enterprises are facing great challenges in storing, migrating, and processing data and to get the most value out of it.

To solve these issues, Alibaba Cloud Data Management Service (DMS) has recently launched a task orchestration feature. DMS task orchestration helps you easily migrate, process, and convert data in your database regardless of its deployment position (such as Alibaba Cloud, on-premises data centers, or clouds provided by other cloud vendors) or type (such as OLTP or OLAP database). DMS task orchestration provides the following functions:

• Extensive data migration capabilities: DMS task orchestration can migrate data between databases (such as between OLTP databases and OLAP databases) and between databases and elastic storage (such as between MySQL and OSS).

• Diversified data processing methods: DMS task orchestration supports single-database SQL tasks, cross-database SQL tasks, data migration tasks, Spark tasks, and data backup and recovery services (coming soon). You can use SQL statements to process data in a single database or multiple databases and write Spark tasks for complex data processing and AI analysis.

• Task flows and periodic scheduling: You can visually orchestrate multiple task nodes to create a task flow and flexibly set different time intervals for scheduling as needed.

• Template-based task flow creation: DMS task orchestration provides multiple scenario-based task flow templates, such as for archiving historical data to OSS. You can use a template to quickly create a task flow and run it after simple configurations.

• High data security: Based on the powerful data security capabilities of DMS, task orchestration strictly checks user permissions and allows only authorized users to perform corresponding tasks.

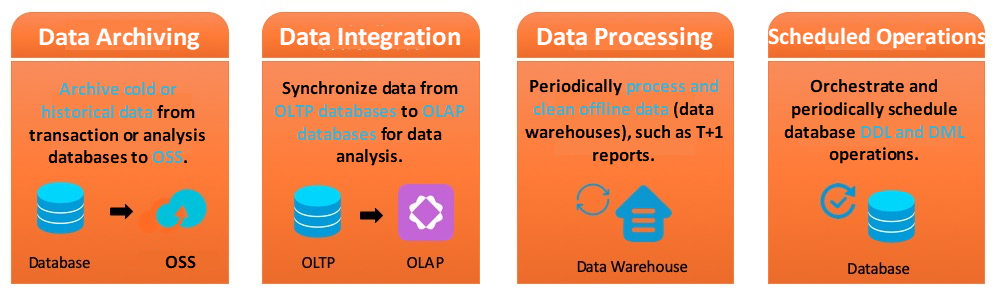

After we understand the functions of DMS task orchestration, we will talk about its application scenarios. The following figure shows four main application scenarios of DMS task orchestration.

Currently, most databases are traditional standalone databases (such as MySQL) and cloud-native databases (such as Apsara PolarDB and AnalyticDB for MySQL from Alibaba Cloud). Traditional standalone databases have limited storage space. Cloud-native databases support storage scale-out but typically at high cost. As the data volumes in databases increase, we need solutions to reduce the storage costs. Many users want to dump cold or historical data in their databases to a reliable and cost-effective storage service, such as Alibaba Cloud Object Storage Service (OSS). With DMS task orchestration, you can easily archive database data to OSS periodically, such as on a daily or weekly schedule. In addition, DMS can interwork with Alibaba Cloud Data Lake Analytics (DLA). This allows you to easily access DLA and query and analyze data archived to OSS in DMS.

Enterprises' data may be distributed across multiple data sources (such as MySQL and SQL Server) and across multiple regions (such as Beijing, Hangzhou, and Shenzhen) due to vertical business classification, microservices, local application deployment, or other reasons. Data distribution is inevitable. Therefore, many enterprises require the integration of data distributed in different regions for global analysis, such as summary and AI analysis. A typical data integration requirement is synchronizing data from an OLTP database to an OLAP database for offline analysis. Now, DMS task orchestration can easily meet this requirement. First, DMS interconnects various network environments, such as Alibaba Cloud VPCs or classic networks and local IDC networks and can connect to data sources in different regions. Second, DMS supports data integration between heterogeneous data sources, such as between ApsaraDB RDS for MySQL and AnalyticDB. In addition, DMS task orchestration provides various integration methods, such as one-time full integration and periodic incremental integration.

After data integration, we have to process, clean, and analyze aggregated source data to explore the value of the data. For example, we need to count the number of users (UV) of a product every day and generate reports by week. DMS task orchestration provides task flows and periodic scheduling. Based on a task flow, you can disassemble and orchestrate a complex processing task and configure the scheduling information. DMS supports single scheduling and periodic scheduling (such as by day, week, or month). In addition, DMS supports multiple types of processing tasks. You can use SQL statements to process data and write Spark tasks for complex data processing and AI analysis. With diversified scheduling configurations and task types, DMS task orchestration can meet data processing requirements in various simple and complex scenarios.

Databases involve a lot of scheduled DML, DDL, and DCL operations. For example, you need to clean historical data (DELETE) every week to prevent excessive tables and update statistical information (ANALYZE TABLE) every day to obtain better query optimization results. Some databases have provided the event scheduling feature at the kernel layer, such as MySQL Event. However, certain costs are incurred to create and maintain events with special syntax. DMS task orchestration provides a scheduling function to easily and visually create scheduled tasks without depending on the capabilities of the database engine. This makes scheduled tasks simpler, more flexible, and applicable to more scenarios.

In this chapter, we will use data archiving as an example to illustrate how you can use DMS task orchestration and Alibaba Cloud DLA to periodically archive data in ApsaraDB RDS for MySQL to OSS. For more information about the procedure, see the DMS documentation.

In the ApsaraDB RDS for MySQL instance of a user, assume a table (such as one that stores transaction records or logon and operations logs) has an increasing amount of data, which occupies a large amount of storage space and affects the database performance. The data is valuable for auditing, reports, statistical analysis, or other scenarios and cannot be deleted. Therefore, the user has the following three core requirements:

• Reduce the storage load of the database instance.

• Incrementally archive historical business data.

• Partition the archived data to ensure efficient data querying by partition.

To meet these three requirements, we use Alibaba Cloud DLA because it connects to both OSS and ApsaraDB RDS for MySQL and can migrate data between them and analyze the data. However, DLA does not have periodic scheduling or incremental data migration capabilities. Therefore, we also use DMS task orchestration to meet these requirements.

In the following operations, we assume that the table to be archived in the ApsaraDB RDS for MySQL instance is an order table named orders. The table structure is as follows (created_date indicates the order creation date):

create table orders(

order_id bigint,

product_name varchar(32),

price double,

total_amount double,

created_date date

);The following table describes the purchase requirements and functions of required products.

| Product | Required Region | Function |

| DMS | No limits | Used for periodic scheduling |

| DLA | Same region as ApsaraDB RDS for MySQL | Migrates data in ApsaraDB RDS for MySQL to OSS and queries data archived in OSS |

| OSS | Same region as ApsaraDB RDS for MySQL | Stores data archived from ApsaraDB RDS for MySQL |

This section describes how you can periodically archive data in ApsaraDB RDS for MySQL to OSS in DMS.

Step 1: Create a DLA Archive Library

To archive data to DLA, you must first create a schema in DLA for storing the tables to archive. On the DMS homepage, choose SQLConsole > Single Database query in the top navigation bar. In the query window that appears, enter and execute the following SQL statement:

CREATE DATABASE demo_schema

WITH DBPROPERTIES (

catalog = 'oss',

location = 'oss://xxxxxx/dla_demo/'

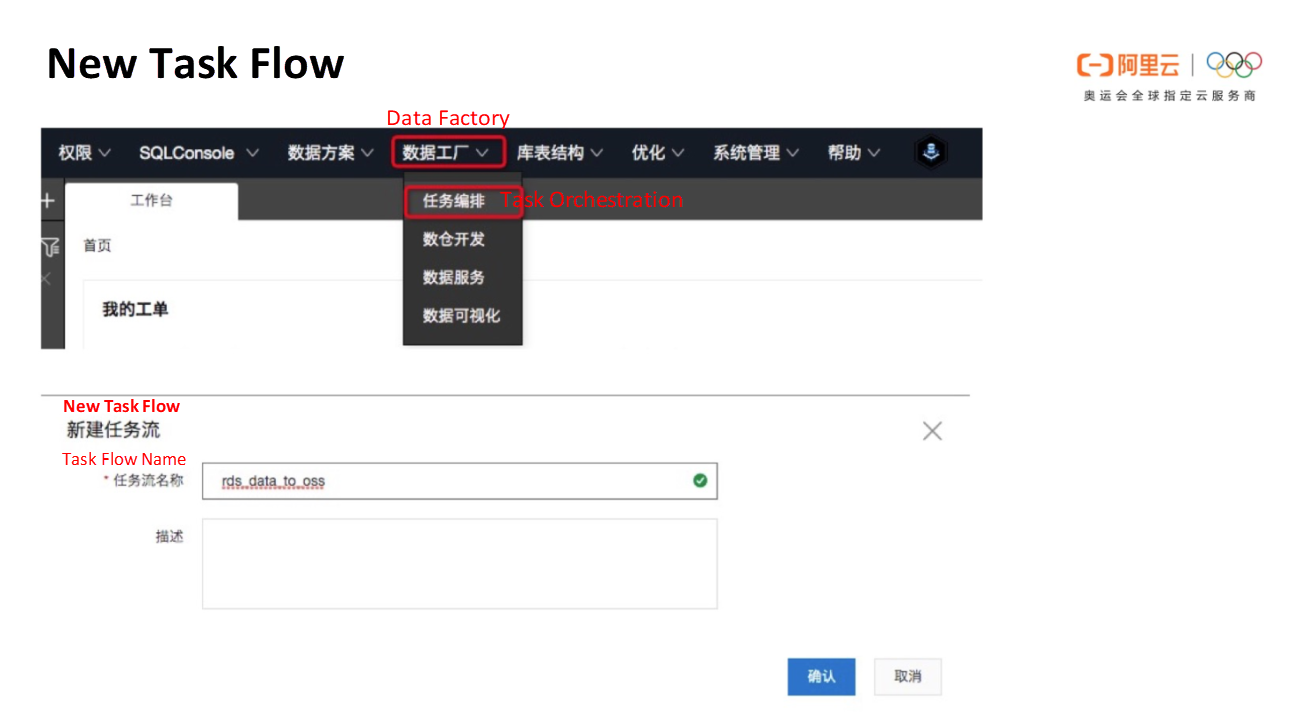

)Step 2: Create a Task Flow

On the DMS homepage, choose Data Factory > Task Orchestration in the top navigation bar to go to the task orchestration page. Click New task flow, or click the left tab to go to the development space and find the new task flow entry. In the New Task Flow dialog box, set Task Flow Name to rds_data_to_oss.

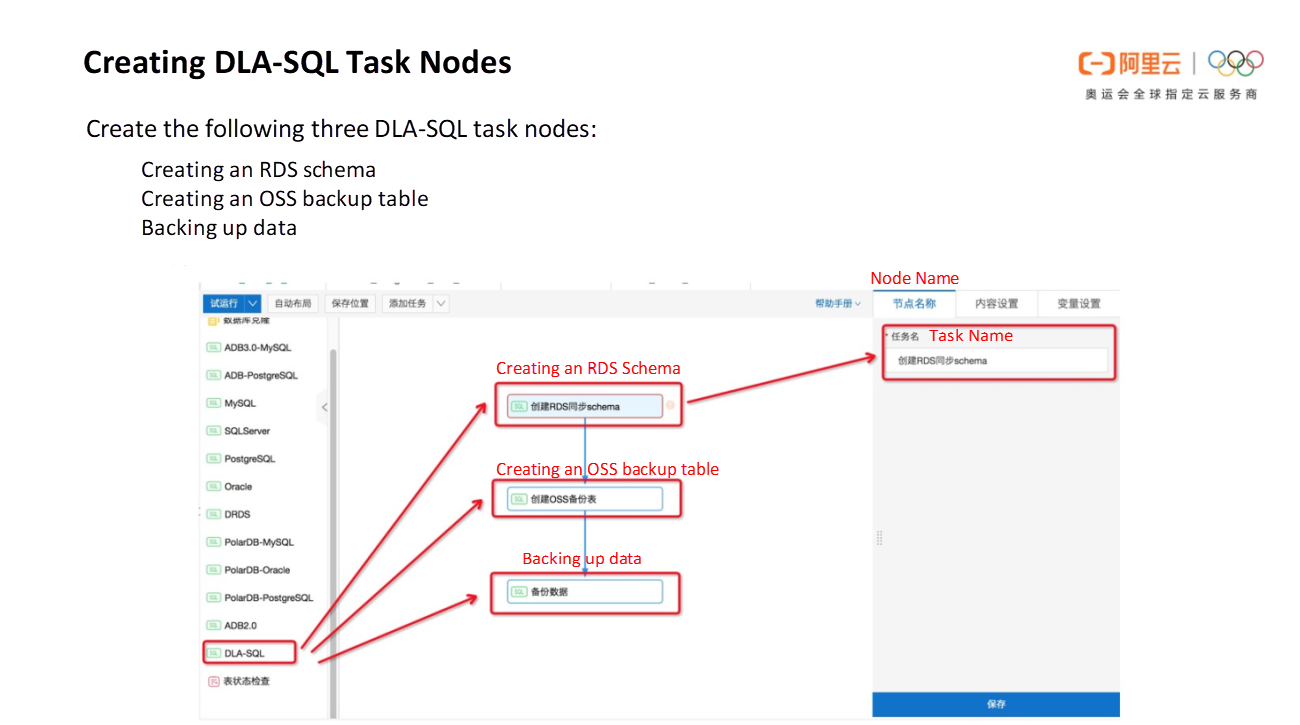

Step 3: Configure Task Nodes

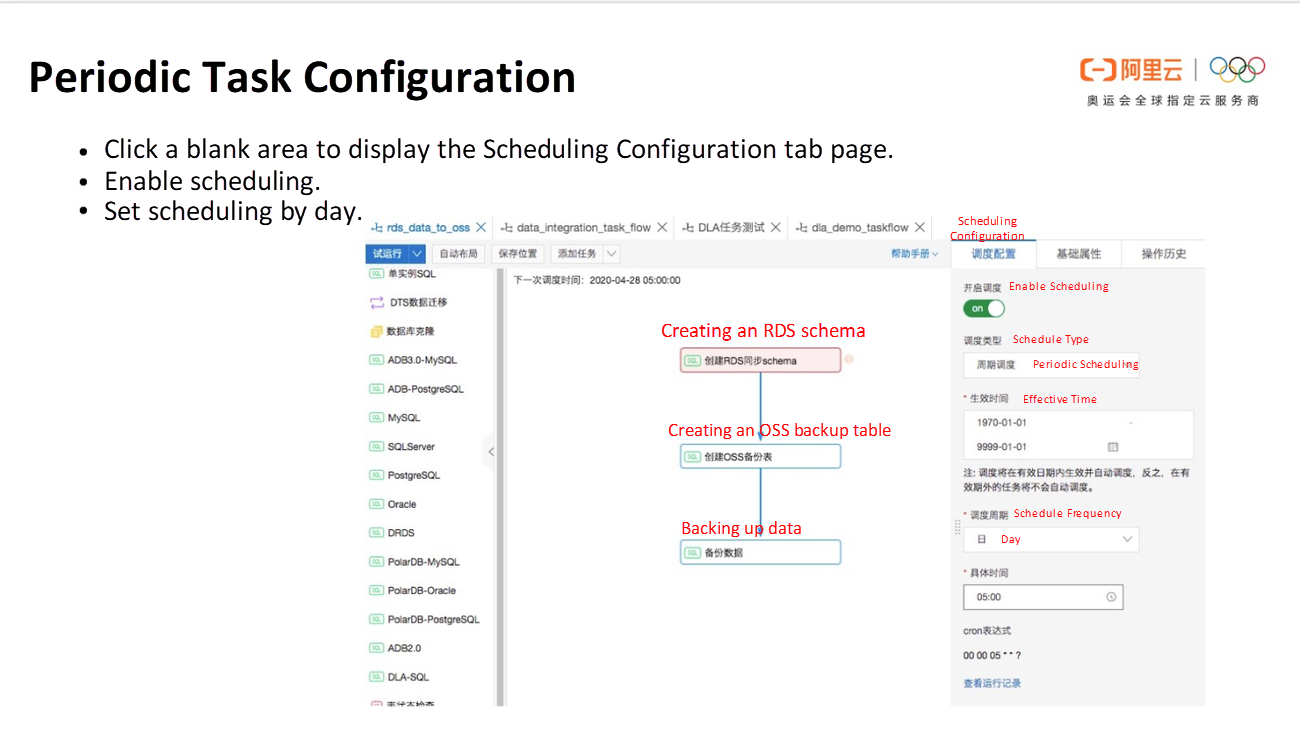

In the rds_data_to_oss task flow, create the following three task nodes of the DLA-SQL type in sequence:

After these nodes are created, you can add lines between the nodes to orchestrate the node execution sequence and generate a complete task flow.

The following sections describe the settings for each node.

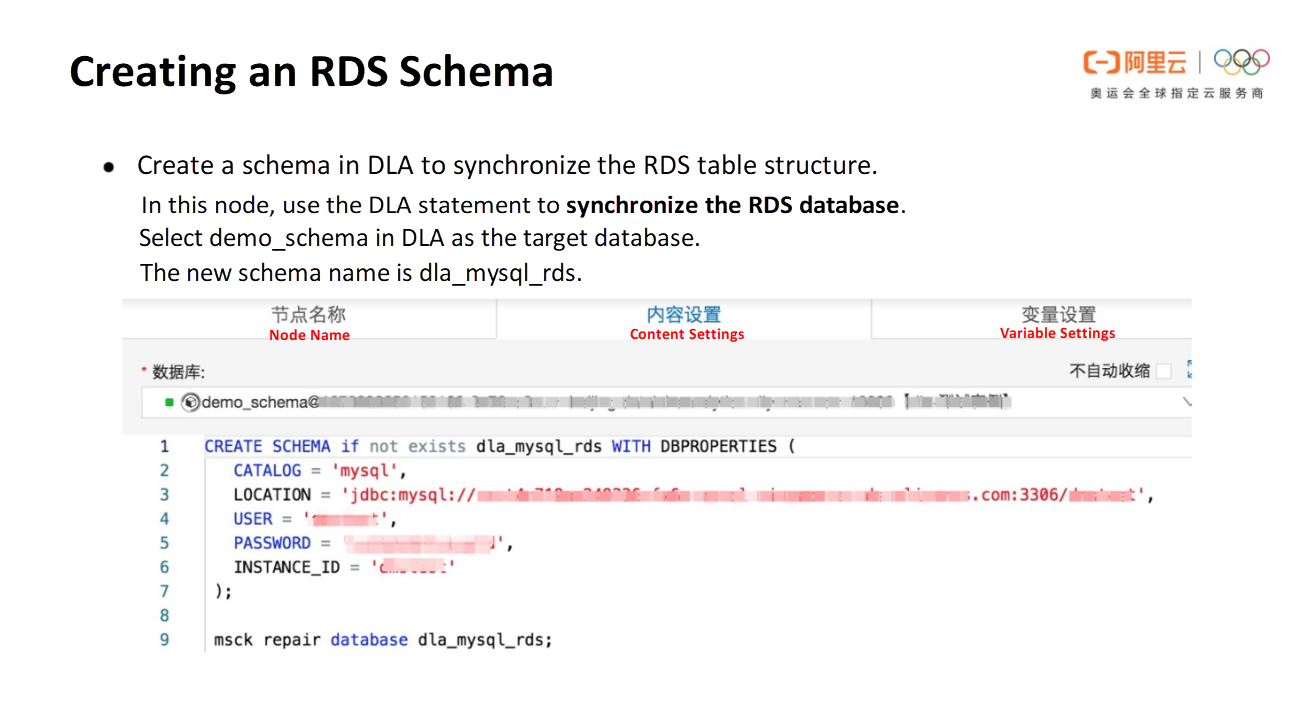

Node 1: Creating an RDS Schema

To enable DLA to access data in your ApsaraDB RDS for MySQL instance, you need to create a schema named dla_mysql_rds for ApsaraDB RDS for MySQL in DLA. We use SQL statements to create a schema. Click the first task node. On the Content Settings tab on the right, enter the following SQL statements. Some parameters need to be set based on the actual information of ApsaraDB RDS for MySQL.

CREATE SCHEMA IF NOT EXISTS dla_mysql_rds WITH DBPROPERTIES (

CATALOG = 'mysql',

LOCATION = 'jdbc:mysql://xxxxxx.rds.aliyuncs.com:3306/dmstest',

USER = 'dmstest',

PASSWORD = 'xxxxxxxxx',

INSTANCE_ID = 'xxxxxx'

);

MSCK REPAIR DATABASE dla_mysql_rds;To enable DLA to access data in your ApsaraDB RDS for MySQL instance, you also need to add the CIDR block of DLA (100.104.0.0/16) to the whitelist of your ApsaraDB RDS for MySQL instance. For more information on how to configure a whitelist for an ApsaraDB RDS for MySQL instance, see Configure a whitelist for an ApsaraDB RDS for MySQL instance.

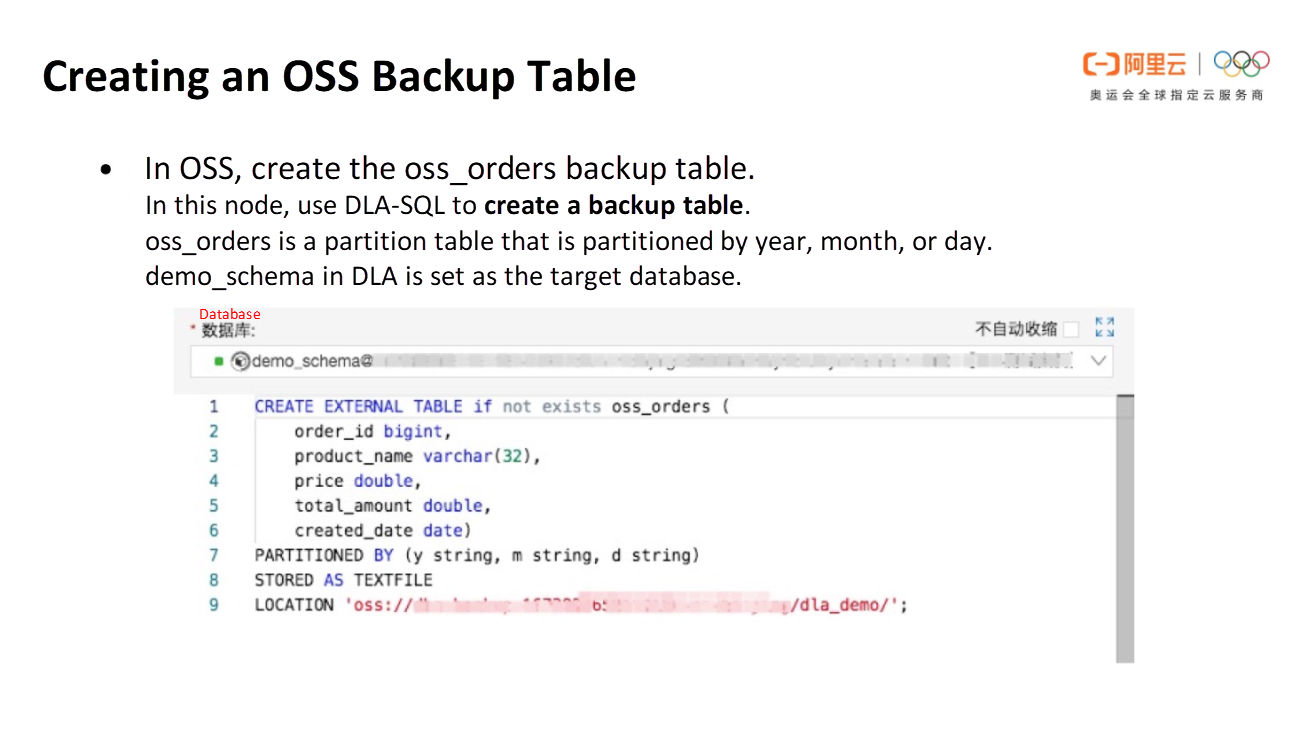

Node 2: Creating an OSS Backup Table

We need to create an OSS backup table (oss_orders) in DLA. Set demo_schema as the target database of this node, and enter the following SQL statement on the Content Settings tab. oss_orders has the same structure as orders in ApsaraDB RDS for MySQL. The only difference is that oss_orders is a partition table that is partitioned by year, month, or day.

CREATE EXTERNAL TABLE oss_orders (

order_id bigint,

product_name varchar(32),

price double,

total_amount double,

created_date date)

PARTITIONED BY (y string, m string, d string)

STORED AS TEXTFILE

LOCATION 'oss://xxxxxx/dla_demo/';The LOCATION parameter must be set to the OSS path for storing archived data.

Node 3: Backing Up Data

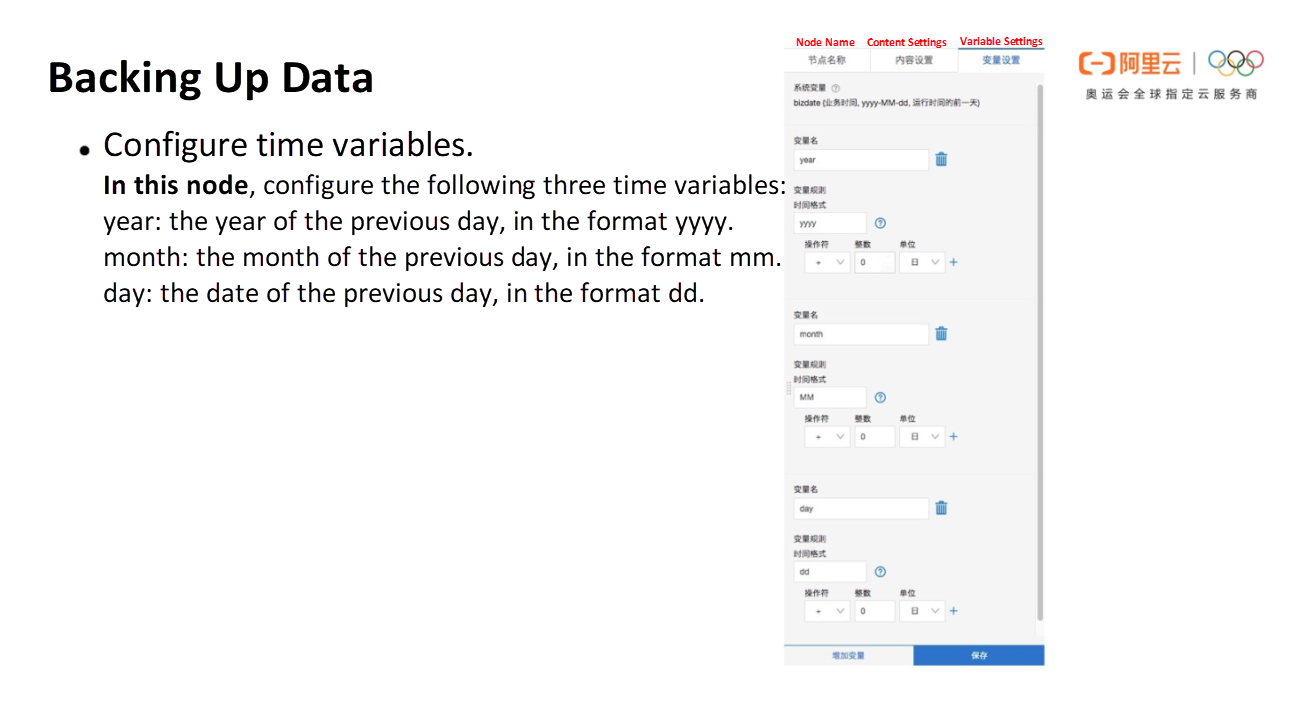

This node backs up data in ApsaraDB RDS for MySQL to OSS. To configure this node, you need to select demo_schema in DLA as the target database, set time variables, and write the backup SQL statement.

Configure the following three time variables:

• year: the year of the previous day, in the format yyyy.

• month: the month of the previous day, in the format mm.

• day: the date of the previous day, in the format dd.

Note: bizdate is a system parameter in DMS task orchestration. It indicates the previous day of the task running time. Other custom variables are automatically updated based on the task running time.

Set the SQL statement for data backup.

/* Create a temporary table. */

CREATE EXTERNAL TABLE oss_orders_tmp (

order_id bigint,

product_name varchar(32),

price double,

total_amount double,

created_date date)

STORED AS TEXTFILE

LOCATION 'oss://xxxxxx/dla_demo/y=${year}/m=${month}/d=${day}'

TBLPROPERTIES('auto.create.location'= 'true');

/* Back up daily data. */

insert into oss_orders_tmp

SELECT * FROM dla_mysql_rds.orders

where created_date = '${bizdate}';

/* Update the backup table partition information and delete the temporary table. */

msck repair table oss_orders;

drop table oss_orders_tmp;The preceding SQL script contains the following steps for data backup:

The SQL script properly uses the variable feature provided by DMS task orchestration and the OSS table partition feature of DLA. The temporary table corresponds to a partition of a global backup table in OSS. As the task is executed every day, the variable values are automatically updated to generate new OSS directories and partitions. The incremental data of ApsaraDB RDS for MySQL is automatically archived to the new partitions. For example, data in ApsaraDB RDS for MySQL whose created_date value is 2020-06-01 is archived to the oss://xxxxxx/dla_demo/y=2020/m=06/d=01 path.

Finally, we will show how you can configure task flow scheduling. Click a blank area in a task flow to display the Scheduling Configuration tab page on the right. Enable scheduling for the task flow, set the running time to an off-peak hour of ApsaraDB RDS for MySQL businesses, such as 5:00 a.m, and set the scheduling period to day. Then, the task flow will be automatically executed at 5:00 a.m every day. To view historical execution records of the task flow, click the O&M center on the left. The historical execution times and logs of the task flow will appear.

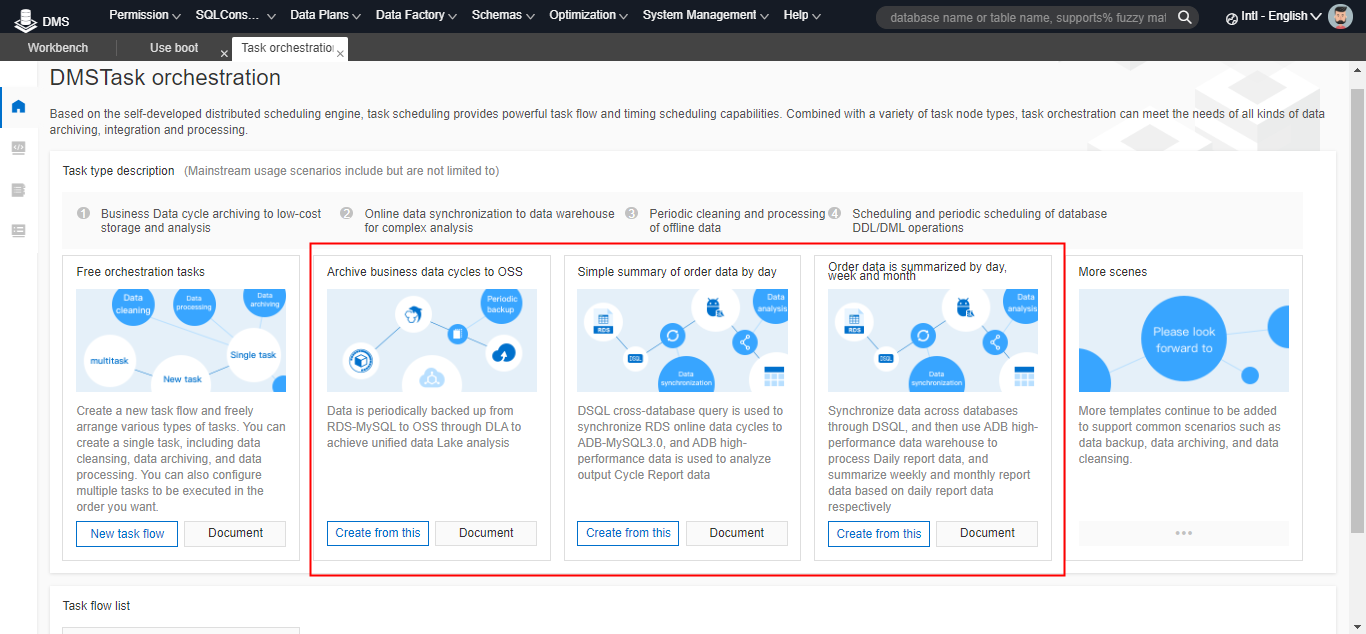

To reduce the time needed to manually create and configure task flows, DMS task orchestration provides various templates. Typically, these templates contain configured task nodes and SQL content based on the cases of DMS users and are designed to solve problems in different scenarios. For example, the description of periodic data archiving from ApsaraDB RDS for MySQL to OSS in Chapter 2 is derived from a well-known international car vendor.

You can use these templates to quickly create a runnable task flow with simple configurations, such as configuring target databases for task nodes and some parameters in SQL. Currently, we have launched multiple task flow templates, which can be viewed from the DMS task orchestration homepage. In the future, we will add more templates to make task orchestration easier and more convenient to use.

Data Management System (DMS) is a popular database tool among Alibaba Cloud users and can help them interconnect various network environments and manage and operate databases. In addition to common database operations, such as addition, deletion, editing, query, and DDL operations, more and more users need to archive, migrate, back up, and process data in databases. To meet these requirements, DMS launched the task orchestration feature. It establishes channels between databases and other systems or storage. As a result, databases are no longer data silos. In addition, DMS task orchestration provides various task types, task flows, and periodic scheduling capabilities to help you easily clean and process data in databases and turn the data into business intelliegnce.

Although DMS task orchestration was only recently released on the public cloud, it has already attracted a lot of users from various industries, including the Internet, retail, payment, transportation, automotive, and beauty. For more information about DMS task orchestration functions, log on to the DMS console or view DMS product documentation.

How STO Express Coped with Peak Demands during the 618 Shopping Festival Using PolarDB

Della Wardhani - February 23, 2026

ApsaraDB - August 8, 2024

ApsaraDB - May 19, 2025

ApsaraDB - November 7, 2025

ApsaraDB - November 17, 2020

ApsaraDB - August 19, 2025

DBStack

DBStack

DBStack is an all-in-one database management platform provided by Alibaba Cloud.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by ApsaraDB