By Ankit Kapoor, Database Senior Solution Architect

In this article, we are going to discuss how Sharding like architecture can be deployed in cloud native databases like PolarDB for MySQL or MySQL/Postgres, with the help of physical and logical databases. I will be mostly focusing on PolarDB-MySQL in this article, but if you are thinking to implement the same in MySQL or Postgres, you can simply replicate the entire method to these databases as well without any changes required.

Before we start, if you are not aware of what Alibaba Cloud's Data Management Service (DMS) is and its respective use cases are, you can refer to my previous articles: DMS 1 and DMS 2.

DMS, or Data Management Service, is a database research and development service platform developed by Alibaba Cloud and is a cloud native tool. With DMS, you can implement various management operations like Security, Auditing, Physical and logical database, Task Orchestration, Lock-less changes, Data Analysis, and more. You can also refer the official documentation for DMS in order to understand which databases and respective features it supports.

DMS has been adopted by various customers who are in the food delivery, e-commerce, financial, and gaming industries.

DMS usually comes in 3 different modes:

To know the differences of the 3 modes and their pricing models, you can refer to this document. We will not be focusing on the pricing models in this article.

Think of a table where you have trillions of records (and growing) and this table is frequently accessed in the form of Read and Write by several concurrent users. You would most likely encounter a situation where your system reaches the limit of its CPU, MEMORY and STORAGE ability to serve more requests related to this table. You may also notice that the CPU and STORAGE capacity reaching 100% frequently and the number of new incoming requests starting to be throttled. When you encounter such a situation, there are typically two solutions that you may adopt to overcome these issues:

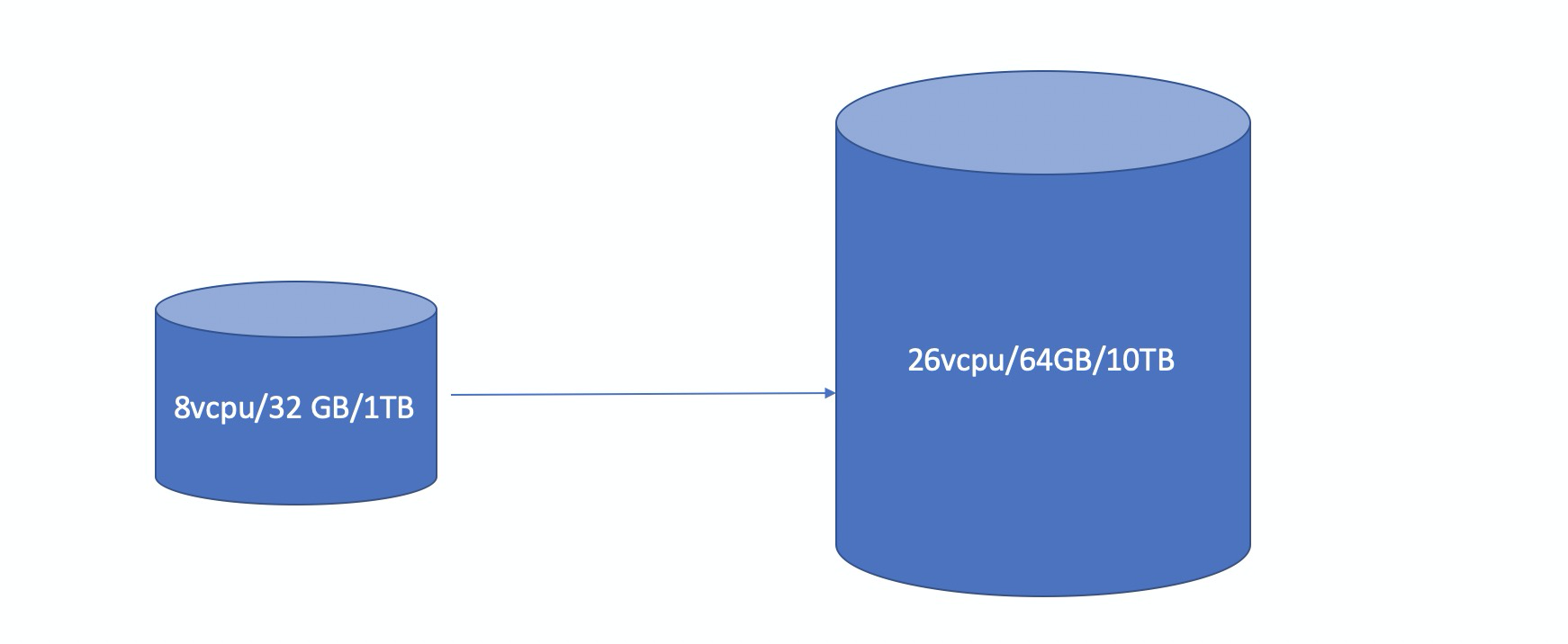

As the name suggests, vertical scaling simply increases the hardware resources of the machine where this heavy table resides. But given the speed and size at which data is being generated, this architecture may not be able to handle the data efficiently, and you may have to constantly increase the hardware resources.

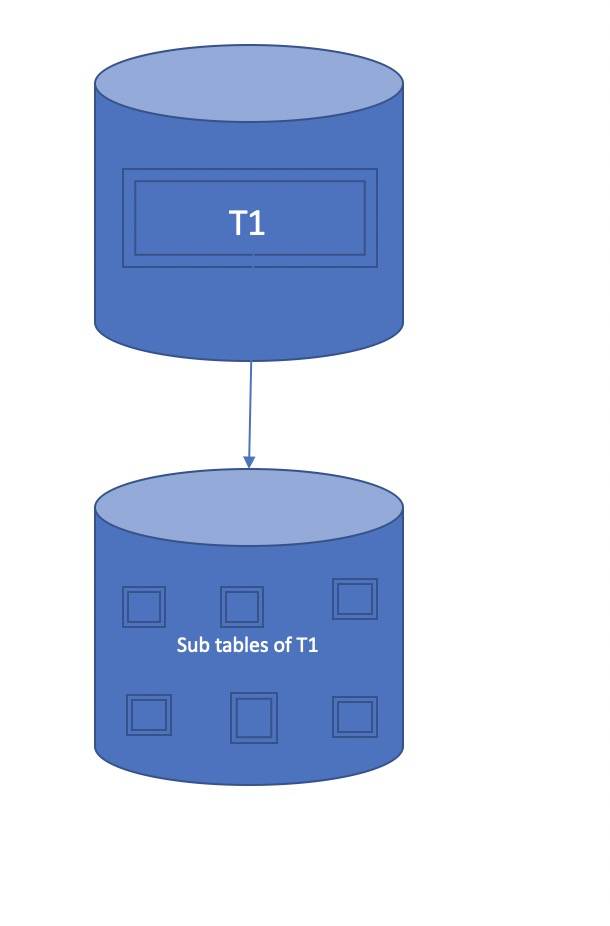

Think of a book that has millions of pages inside it. You decided to divide this book into 10 sub parts and specify the range of pages a sub book can have. For example, sub book 1 can have pages from 1-50000. To divide this book, you must decide on which unique attribute to divide this book so that it can span easily. Similarly, if we replace the book with a table we are able to partition it into various sub tables. But all these sub tables will reside in the same database. This architecture can help you to improve the performance, but it still is not a suitable solution for the scaling.

Partitioning

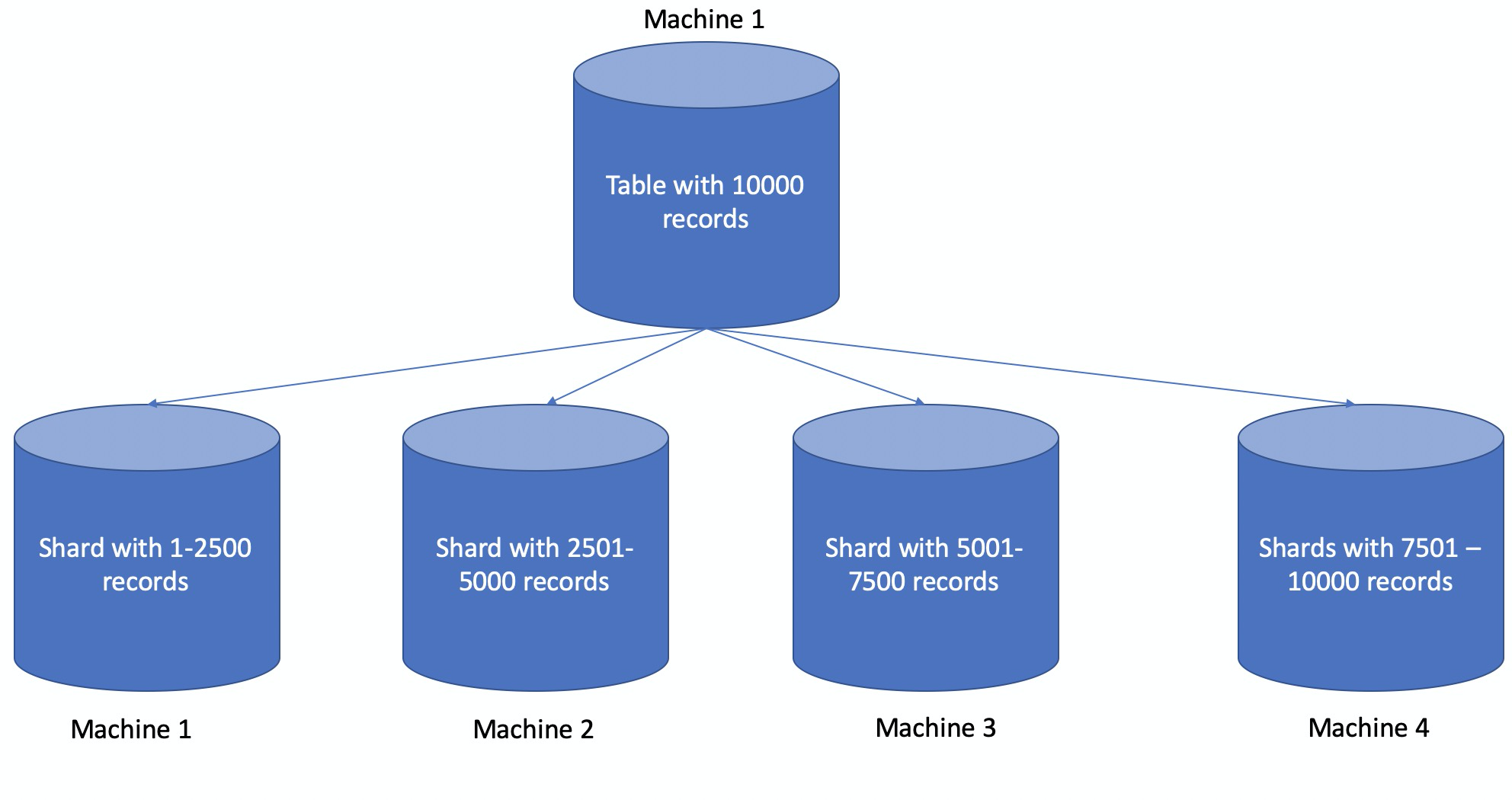

Let's now change the approach by applying what we learned in vertical scaling and partitioning. Instead of dividing the table and placing sub tables in the same server, let's put them on different physical nodes. And instead of increasing the hardware resource of one single physical machine (vertical scaling), we are going to add more physical machines.

This approach of adding more physical machines in the same environment is known as Horizontal Scaling, and dividing the table into smaller data sets and placing them on different physical machines is known as Sharding. These physical machines are also called shards.

As I mentioned before, to divide a table, we need to identify a unique key basis on which sharding will be done and then we also need to define the ranges of these keys to be in one particular shard. Please note that some database products like Redis do sharding automatically based on hashing algorithm.

Such architecture eliminates below challenges:

There are databases that doesn't support Sharding until unless you use your own solution or their paid solution. For example, MySQL server doesn't provide sharding until unless you use a MySQL cluster or NDB cluster. Let's think about below use cases before going in-depth to DMS.

You are running an e-commerce business in several countries. The database of each country is installed in different server. The common thing among all databases in different countries is that, it has same table name with same structure. For every region, there are several sub clusters. Now there is a situation when you need to add a column in one of your table. Being a developer or DBA, you will enter in every database executing alter statement to add this column. To make it fast, you can create a "for loop" which will enter in every database and execute alter and exit. But this is not an atomic operation.

You are running your database on the MySQL/ Postgres server or any other DB (be it RDS or on-prem) and you are planning to adopt the sharding plus partitioning-like structure without making any changes to your application code.

We will cover the solution of above 2 case studies in later section of this article. I feel it is better if I can introduce some basic concepts first so that we can implement above 2 case studies.

In DMS, there are few things you need to focus on in order to implement the above case studies:

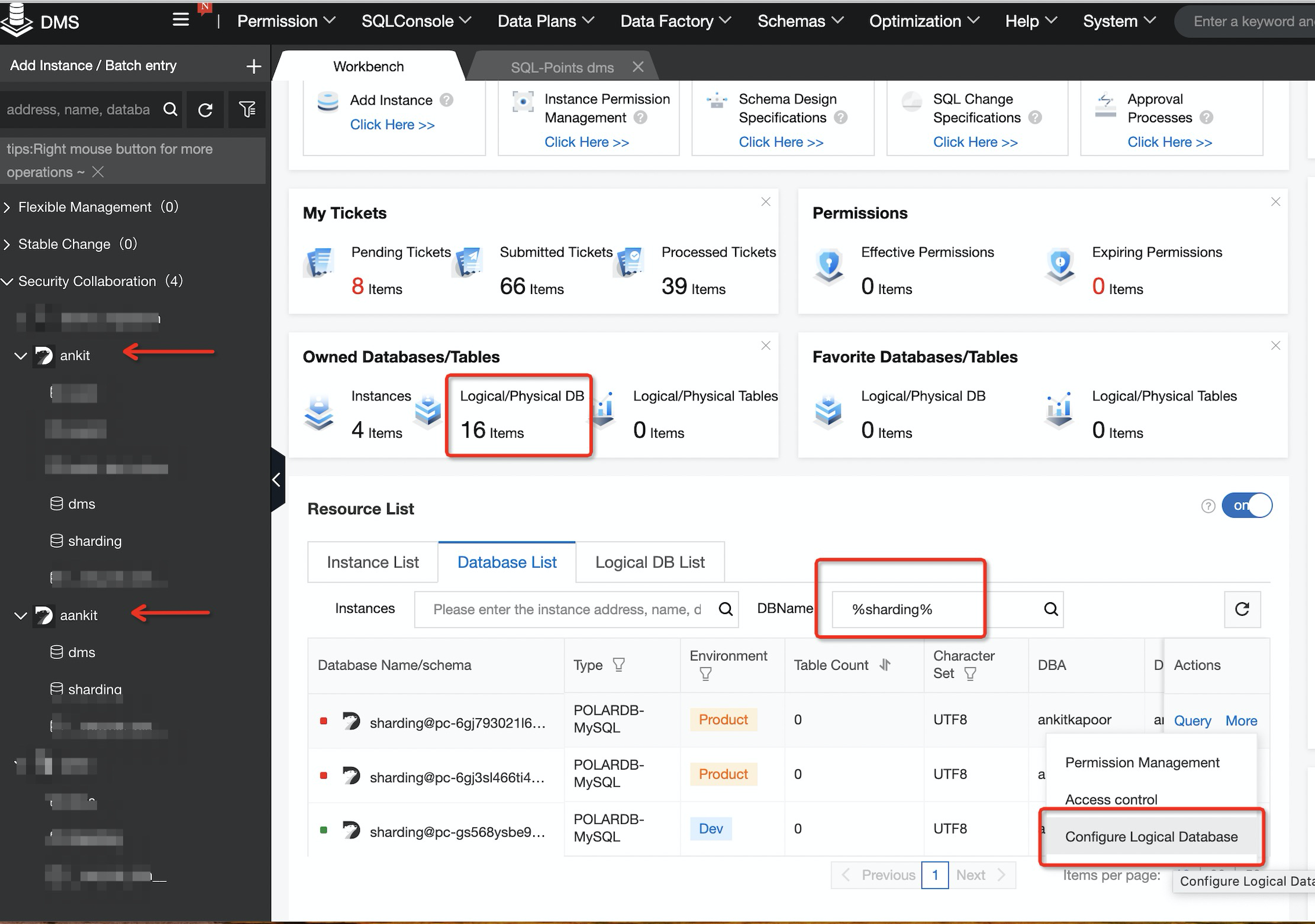

Yes, you are correct. This database doesn't actually exist and you cannot find under your base database. Logical database comprises of a single or multiple number of physical databases. Physical database is what you actually see in your database server. The figure below shows how I created a logical database from a PolarDB-MySQL database.

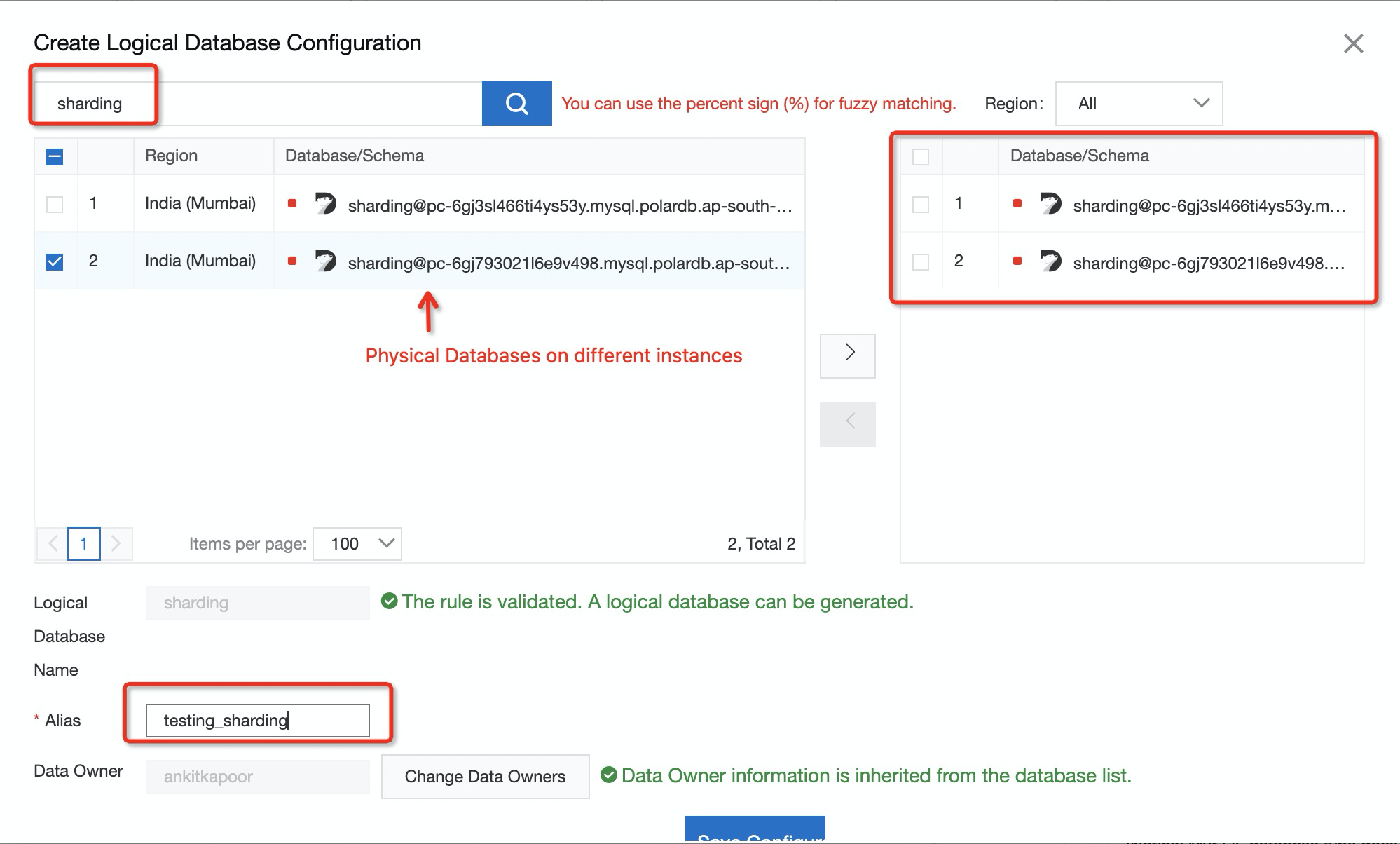

You need to enter the physical database name on which you are looking to create the logical database. For example, in the below scenario I have a physical database "sharding" in 2 different instances and on these 2 physical databases, I want to create a logical database so that I can create a sharding-like structure.

After clicking onConfigure Logical Database, you need to search for the database you are looking to add in your logical database. In my example below, I have successfully added 2 physical databases. Please note that the name of logical database will be same as physical database and it is automatically generated and can't be modified. For your own purpose of identification, you can add an alias.

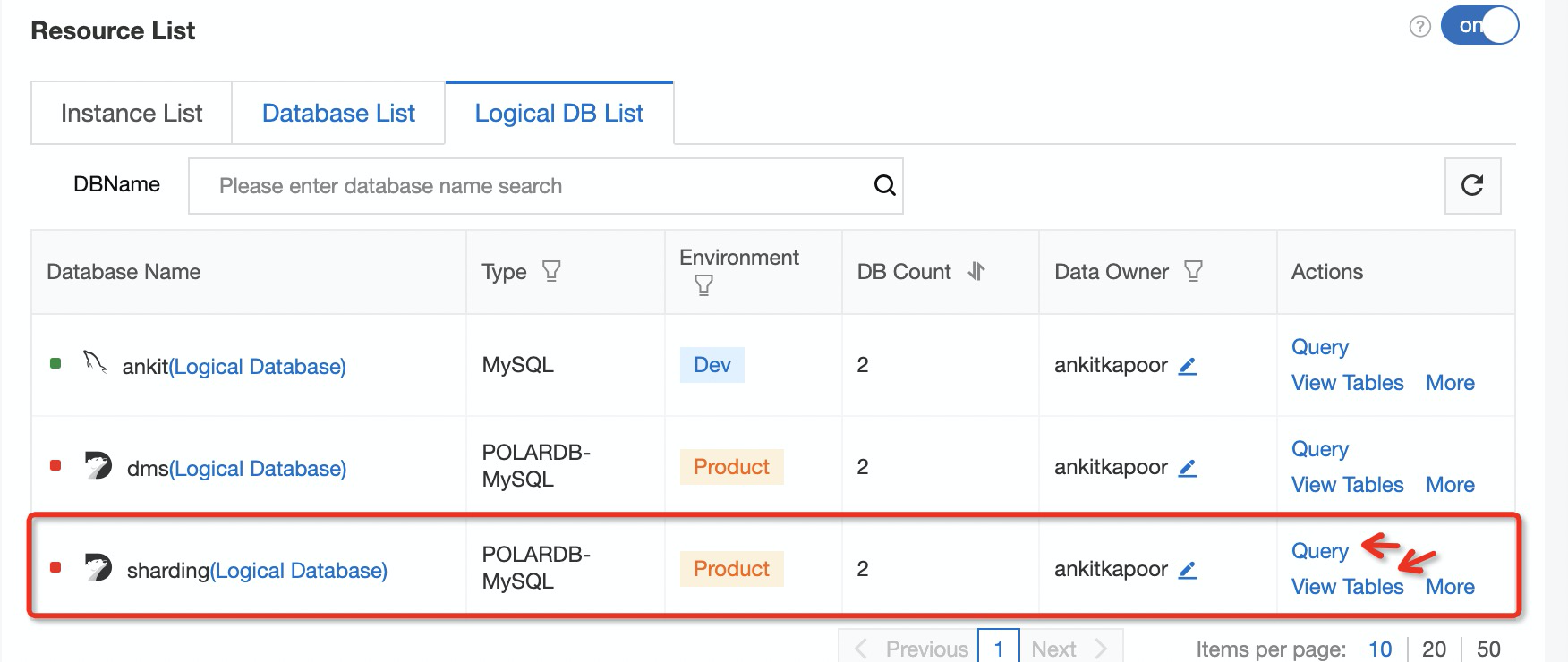

After the addition, I can see that my logical database has been created successfully:

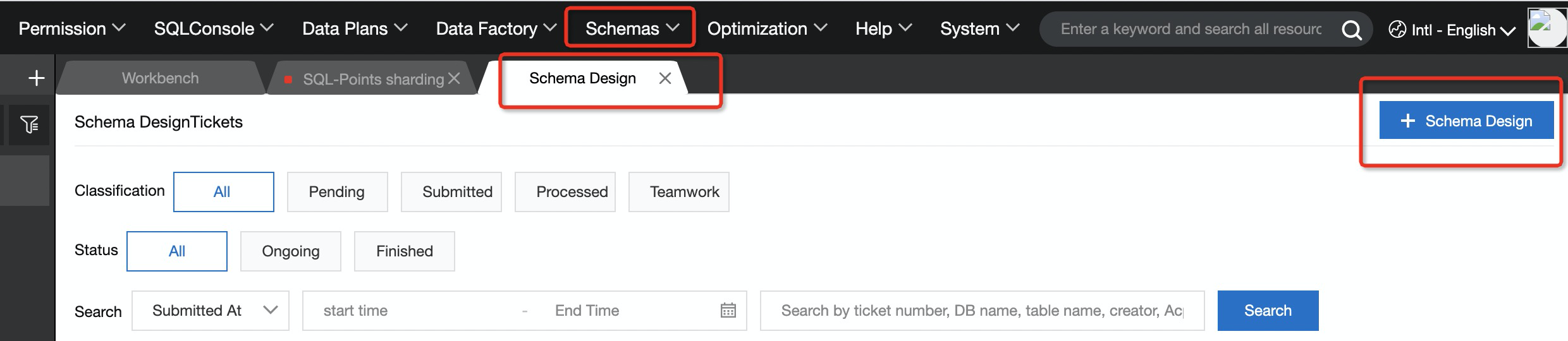

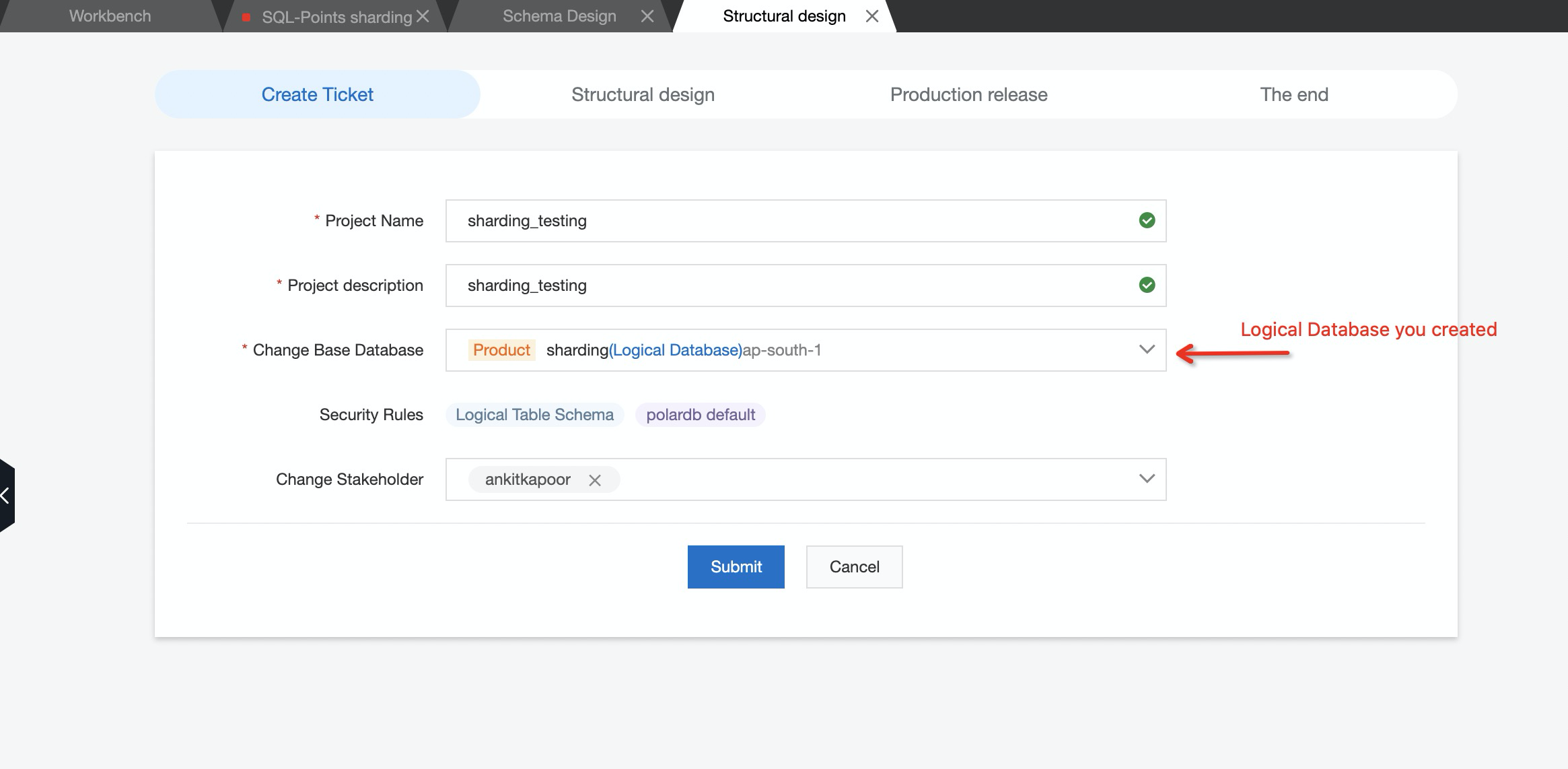

It is the same as a logical database. Logical tables are built on the physical tables and doesn't exist physically. One logical table can comprise of multiple physical tables, and DMS helps you to create a logical table. When you define a logical table, it is mandatory to define the logical expression as well so that DMS can identify how the topology will be. In other words, how physical tables will be segregated.

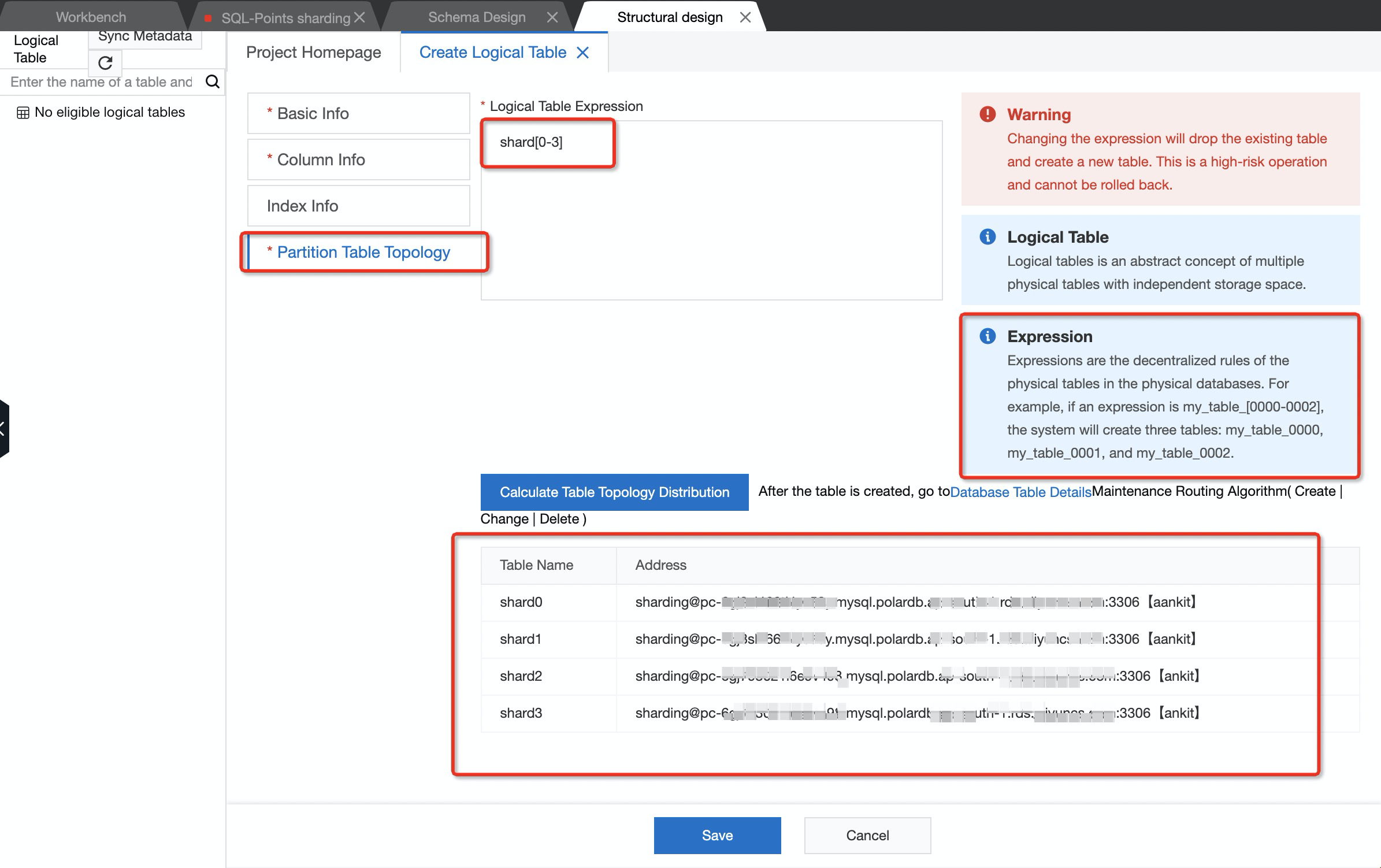

After defining the table name, column name and its type, you need to define the topology and number of physical tables to be created. Please note that DMS will distribute the number of tables equally to all the shards. If in case the number of tables cannot be distributed equally, DMS will return an error.

For example in below snapshot, shard[0-3]* will create 4 physical tables (0 meaning the first table) and then distribute first 2 table in first shard (physical database) and rest 2 will be in second shard. If in case you defined the logical expression as shard[0-4], DMS will return an error as it doesn't know how you want to distribute the tables.

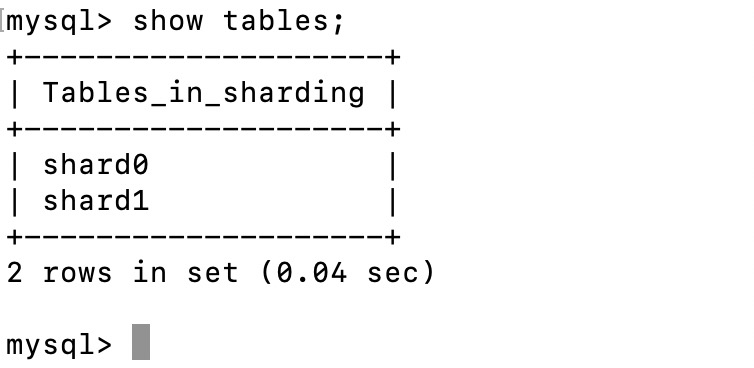

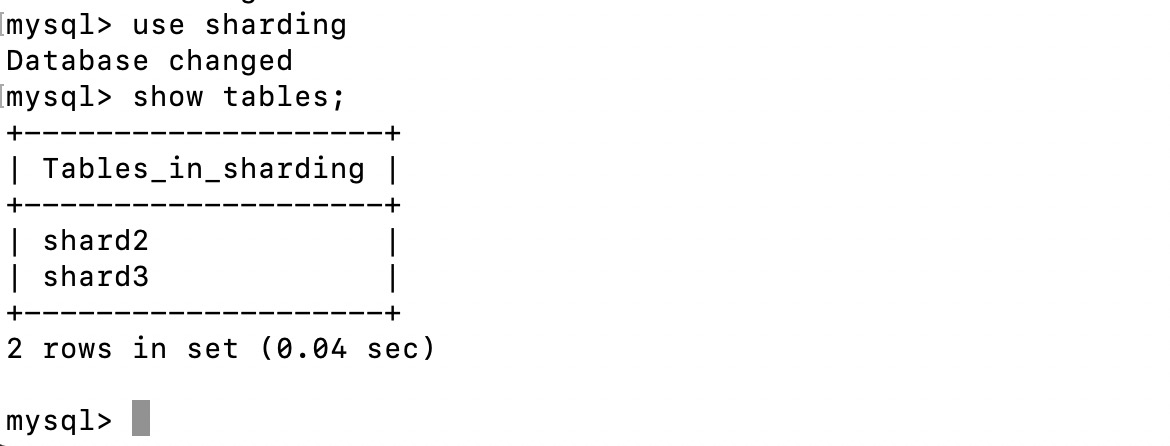

Once these changes are successfully executed, you should be able to see the physical tables in respective databases or shards.

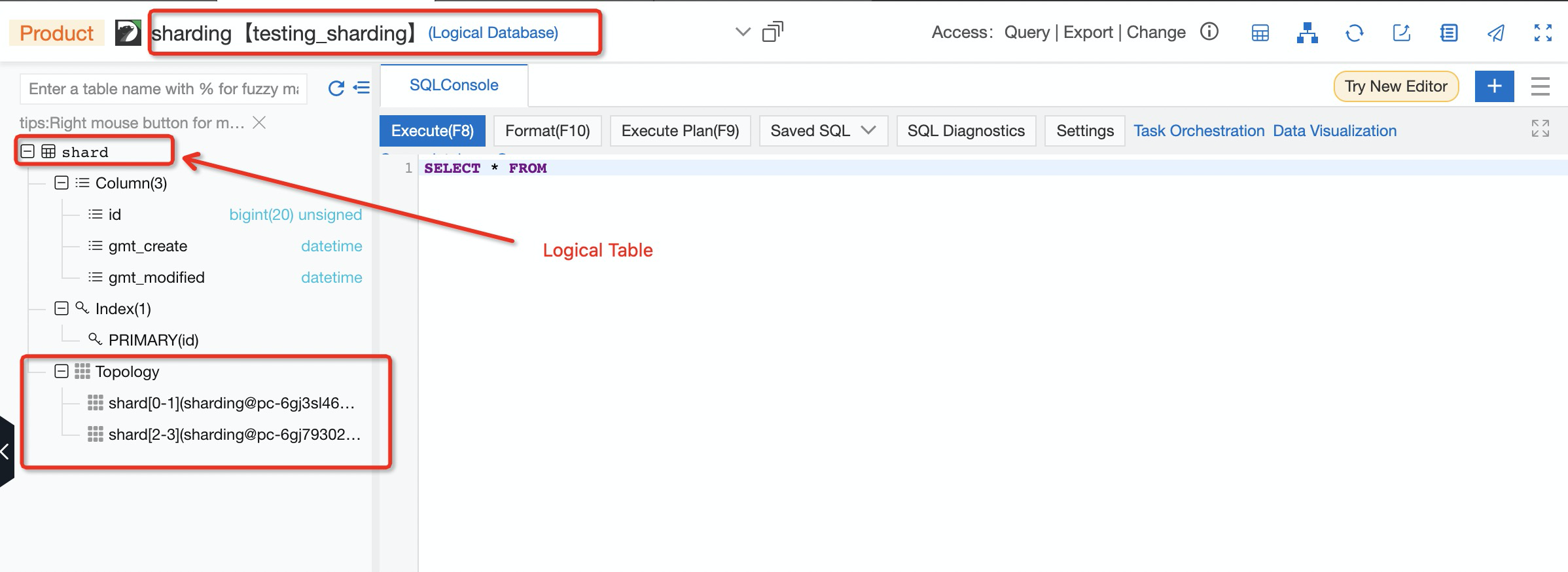

To check how a logical table looks like, you need to navigate through the SQL console of Logical database in DMS. In the image below, the logical table shard is being created and the topology can also be seen. To query the data, you can always use logical table instead of physical table.

Database Grouping (DG) is a process of grouping multiple physical databases in one group so that any changes being made will be done parallelly to all the respective databases. For example, DB1 and DB2 can be bound to a group G1. Any changes being made to the G1 will be executed on DB1 and DB2. We will explore this option while working on the solution of our case study 1

Since you already know how DMS is creating the logical tables, in order to route the data to a specific physical table we need to define the routing algorithm. We will explore this option while working on the solution of our case study 2.

Imagine we have 1000 database servers with same table structures and we need to add a column or we need to create a table with similar structure in all tables. Logging into every database server will be a nightmare. Here, leveraging Database Grouping can be useful.

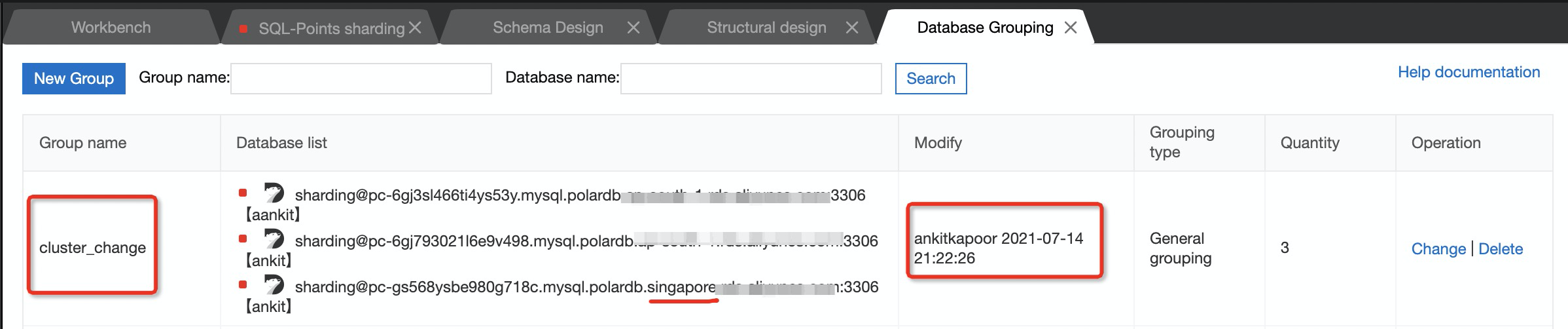

In the below example, I am considering 3 different database servers of PolarDB-MySQL (though you can consider any DB) and I need to create a table in both of these database servers. Among these 3 database servers, 2 are from India and one is from Singapore. Let us create a Group comprises of 3 databases. You can locate Database grouping under the System section of DMS.

In above group "Cluster change" I have created it with 3 different DB names located in different region.

Our purpose here is to add a table in one go. For this I have chosen to use "Normal data modify" in DMS and selected any of the above databases. Though DMS will prompt that the selected database is a part of group and any changes will execute in all databases.

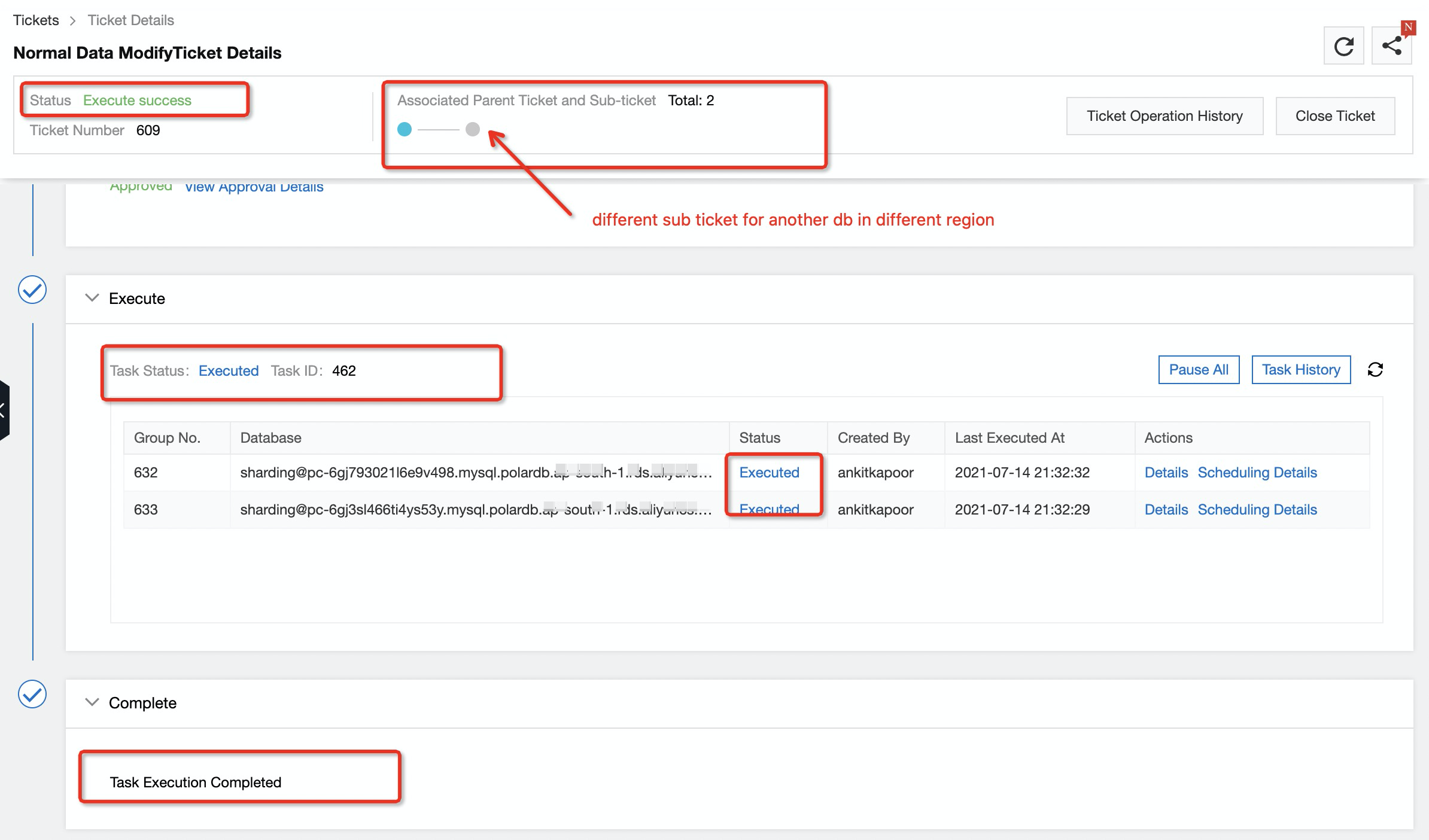

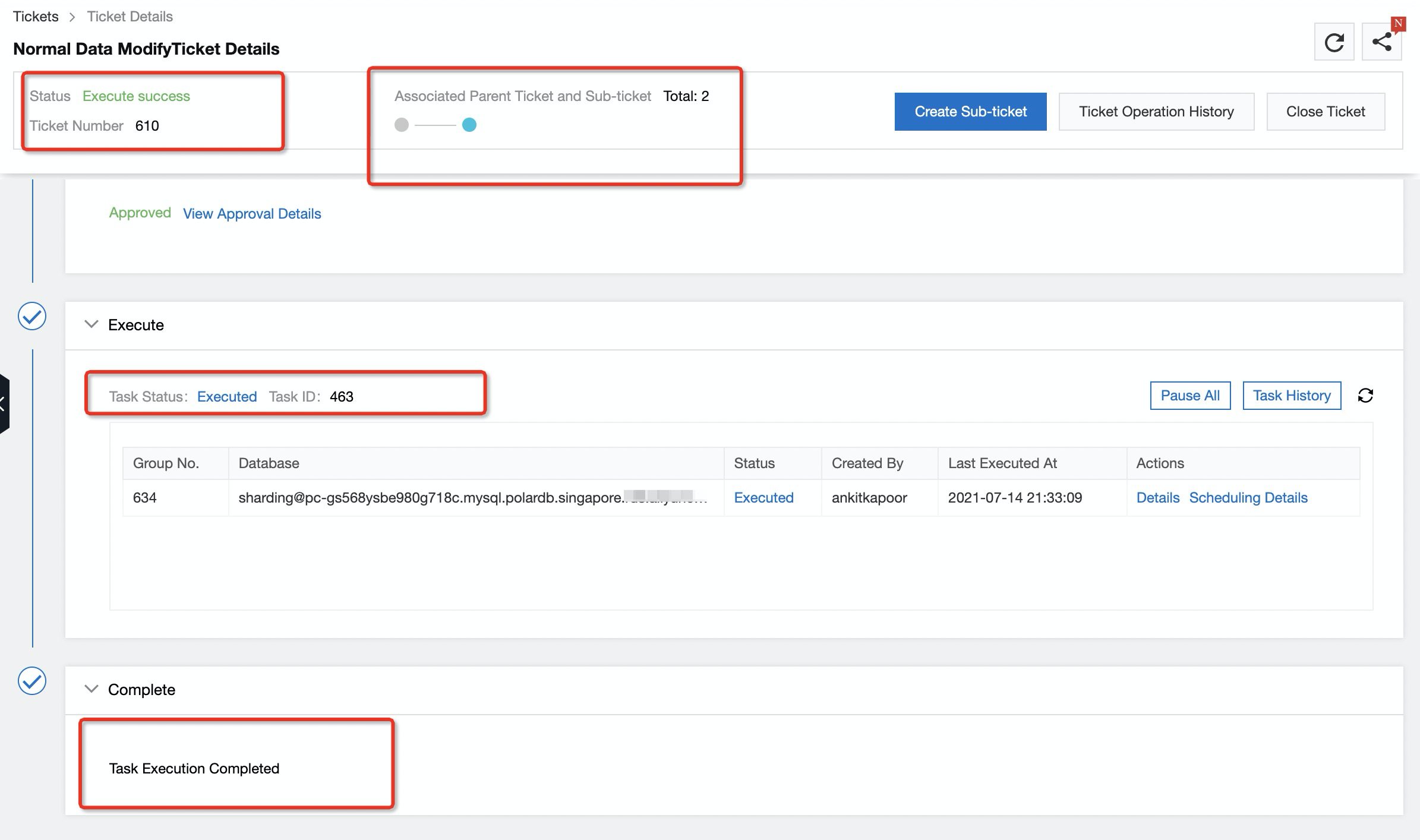

As we have selected databases from different region, 2 different sub ticket will get created. I have attached the below snapshot post successful execution.

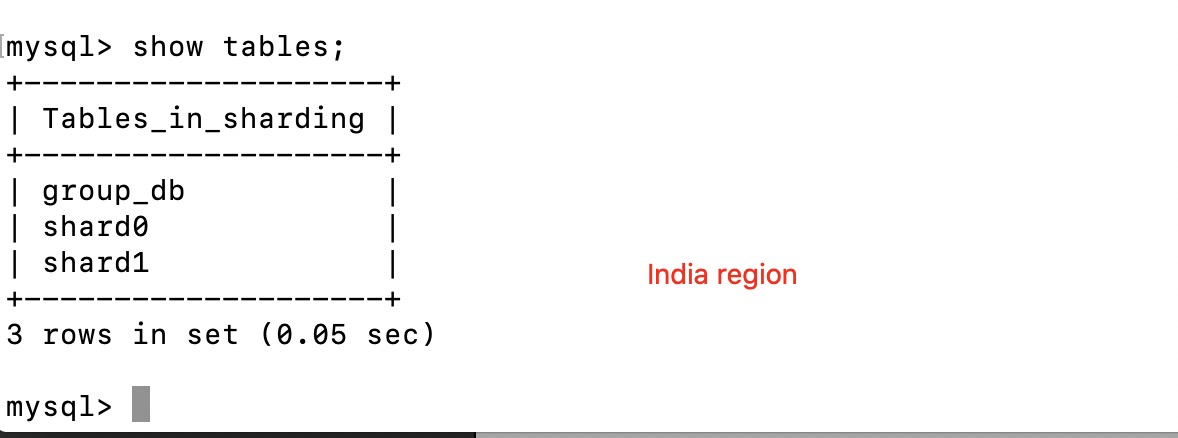

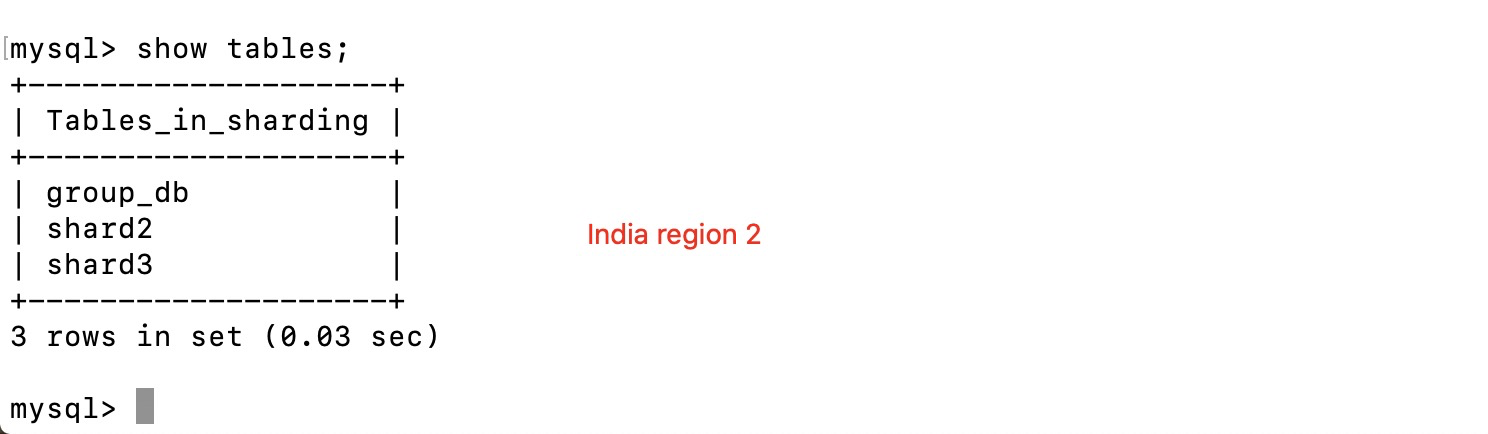

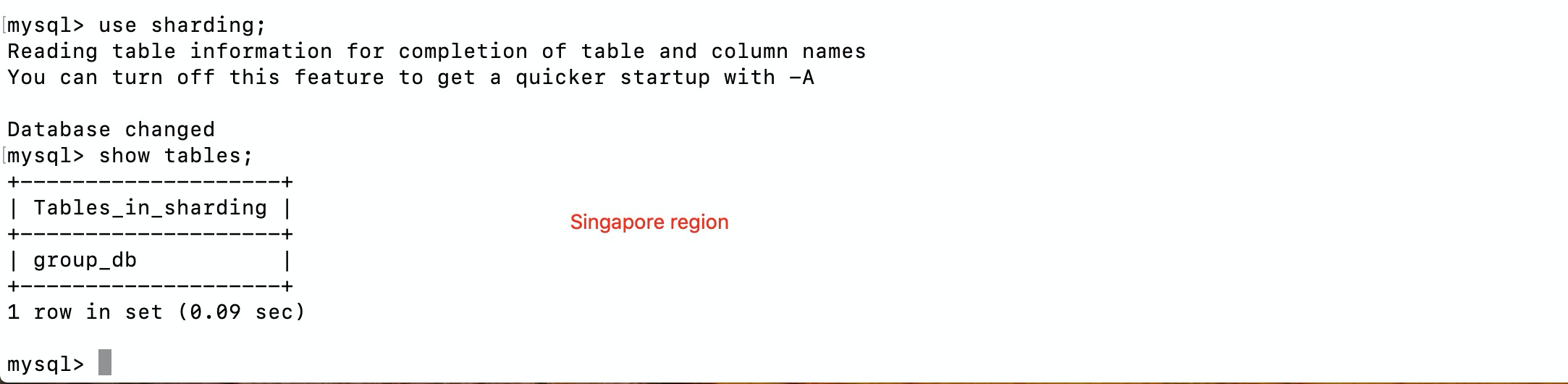

The same can be verified from the backend:

Since we already have the logical table in place, we like to define the routing algorithm to achieve our goal mentioned in case study 2.

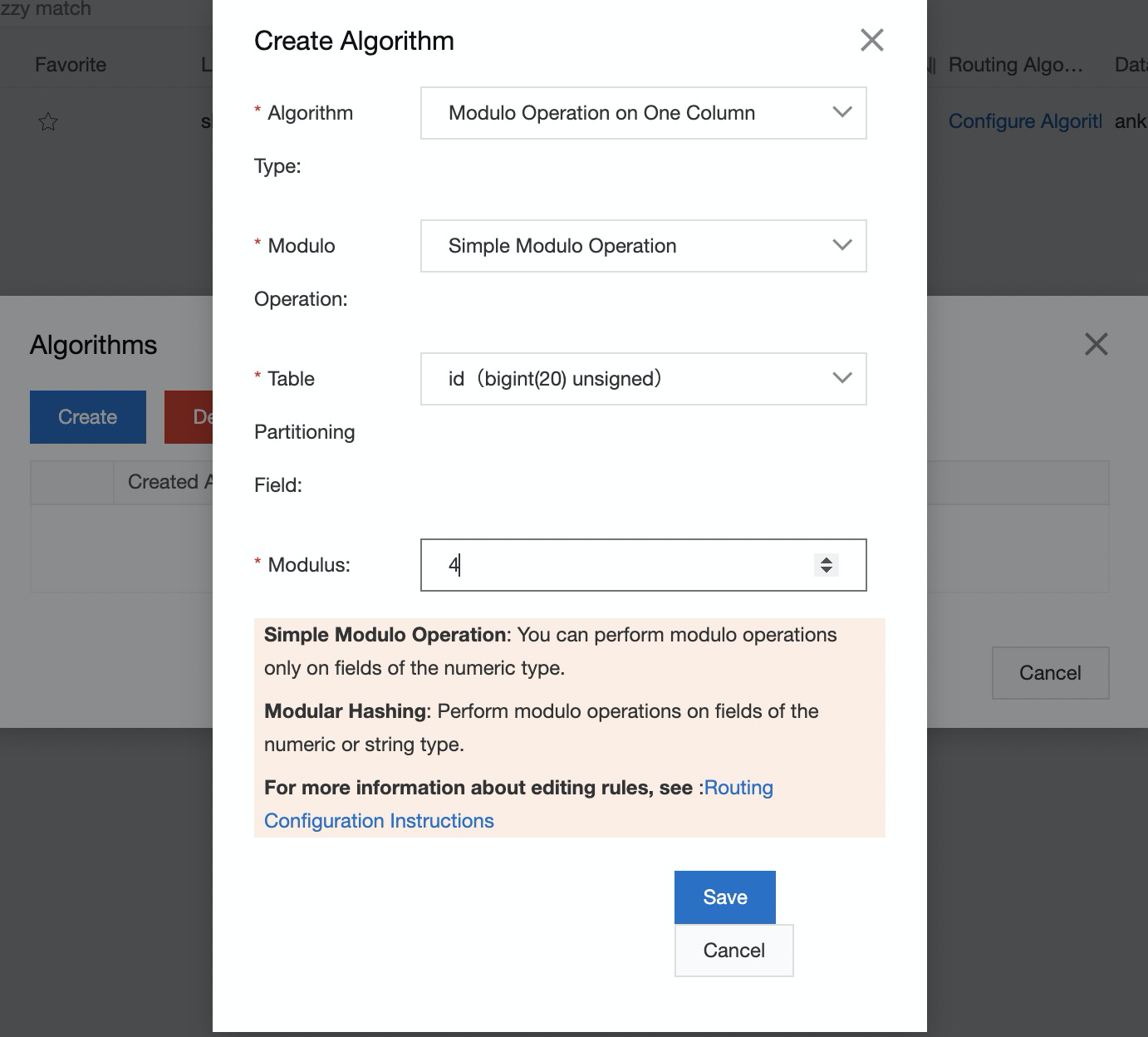

DMS allows us to use either built in algorithm or customized one. In this example I am using built-in one i.e. MODULO OPERATION OF ONE COLUMN.

Below are the parameters I have chosen to configure the routing:

Modulus defines the number of tables I have i.e. 4

Table portioning field is the sharding key (being automatically selected as it is pk). It can be multiple but then custom algorithm type needs to be chosen.

Modulo operation is how remainder value can be calculated (you can think it of as a mathematical term).

So, this is my expression on which routing algorithm will work "#id#%4".

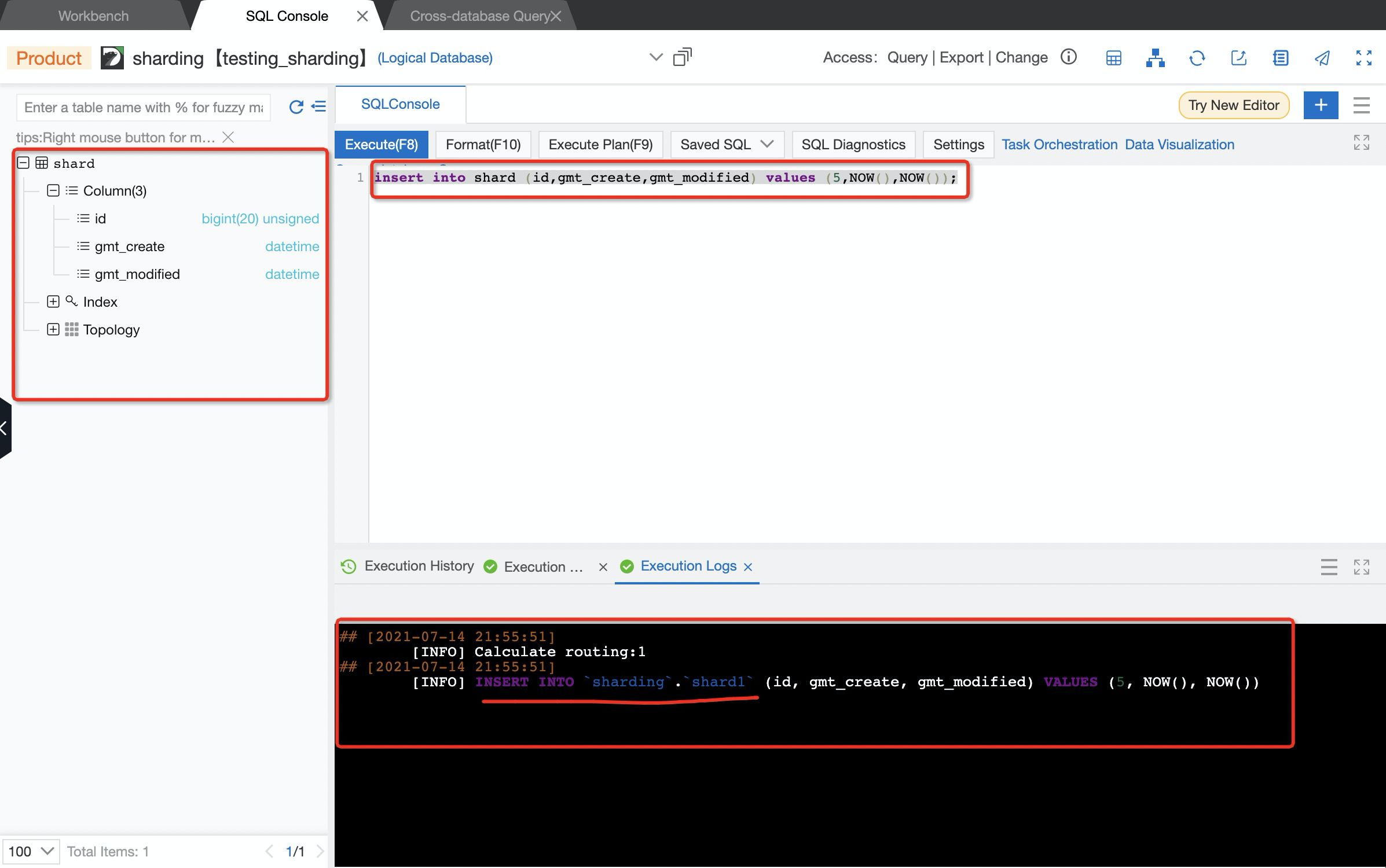

This means if I am inserting key with 5, then 5%4 will result in 1 and hence record will go in shard 1. Let us execute this and see how DMS behaves.

In above snapshot you can see that the record has been inserted in shard1 physical table as expected. Similarly you can design your own routing algorithms for individual tables. In others words 2 different tables can have different routing algorithms.

4 posts | 0 followers

FollowAlibaba Clouder - February 3, 2019

Alibaba Clouder - July 16, 2020

ApsaraDB - July 23, 2021

Alibaba Clouder - May 20, 2020

ApsaraDB - January 8, 2025

Alibaba Cloud Indonesia - March 23, 2023

4 posts | 0 followers

Follow PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More ApsaraDB for MongoDB

ApsaraDB for MongoDB

A secure, reliable, and elastically scalable cloud database service for automatic monitoring, backup, and recovery by time point

Learn MoreMore Posts by Your Friend in a need