By Boben

In the Design2Code (D2C) project, we usually extract metadata from design files by using the developer plugins of design tools such as Sketch and Photoshop. These plugins let us quickly extract native elements such as images, text, and shapes from design files, and we then use these elements to build the frontend pages we need. However, design tools do not support many basic development components, such as forms, tables, and switches. Although tools such as Sketch let designers draw the corresponding UI components flexibly, the DSL descriptions of these components in Sketch are usually inaccurate. Therefore, we need another way to obtain accurate component descriptions, and we believe that recognizing basic components through deep learning is one of the best ways to do so.

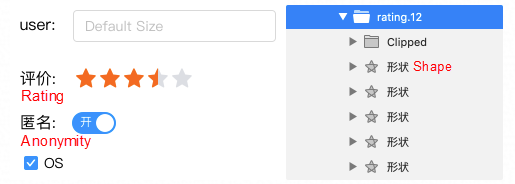

Design layers in Sketch

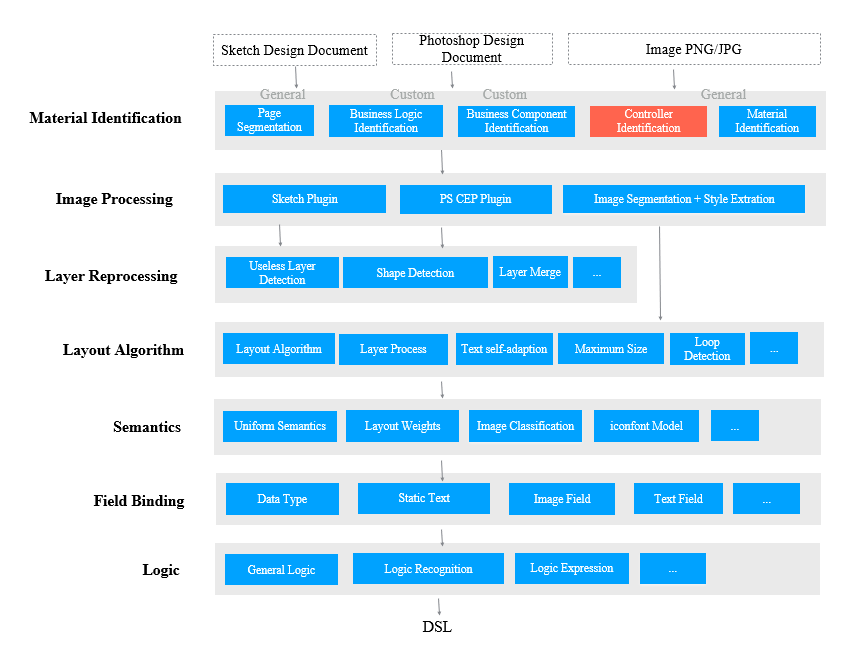

This article describes the basic component recognition capability in the material recognition layer of the frontend intelligent D2C project. This capability recognizes predefined basic components in images to help downstream technical systems optimize recognized layers' descriptions. For example, it helps a downstream technical system optimize layers' nested structure to generate a standard component tree and optimize layer description semantics.

Technical architecture of D2C recognition capabilities

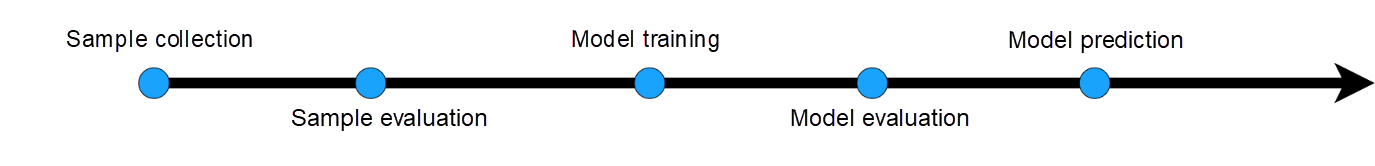

Like other algorithm engineering solutions, a D2C solution covers the entire pipeline: sample collection, sample evaluation, model training, model evaluation, and model prediction.

Algorithm engineering pipeline

A good sample set is essential for a successful model, because sample quality sets the upper limit of model quality; optimizing the model can only bring its performance closer to that limit. Samples can be collected from various sources.

The basic component recognition model described in this article rarely needs the context around a component, so we generate samples with code. To ensure sample quality, we build the generated pages with UI class libraries that are widely used in the industry.
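Because samples are generated by code, each component's position is known when the page is rendered, so labels can be written automatically. The following is a minimal sketch, assuming the generator can read each rendered component's pixel bounding box (for example, from the browser DOM) and that labels are stored in the Darknet/YOLO text format (`class x_center y_center width height`, all normalized to [0, 1]). The `Box` class and the `CLASSES` list are hypothetical; the article does not list its actual component categories.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Pixel-space bounding box of a rendered component (hypothetical helper)."""
    left: float
    top: float
    width: float
    height: float


def to_yolo_label(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Convert a pixel box into one Darknet/YOLO label line:
    '<class> <x_center> <y_center> <width> <height>', normalized to [0, 1]."""
    x_center = (box.left + box.width / 2) / img_w
    y_center = (box.top + box.height / 2) / img_h
    return (f"{class_id} {x_center:.6f} {y_center:.6f} "
            f"{box.width / img_w:.6f} {box.height / img_h:.6f}")


# Hypothetical class list; the project's real taxonomy is not given in the article.
CLASSES = ["button", "input", "switch", "checkbox", "table"]

# A 40x20 switch rendered at (100, 50) on a 400x200 sample image.
label = to_yolo_label(CLASSES.index("switch"), Box(100, 50, 40, 20), img_w=400, img_h=200)
print(label)  # 2 0.300000 0.300000 0.100000 0.100000
```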

Before generating samples, we need to classify the components to be recognized. We must comply with the following principles when generating samples:

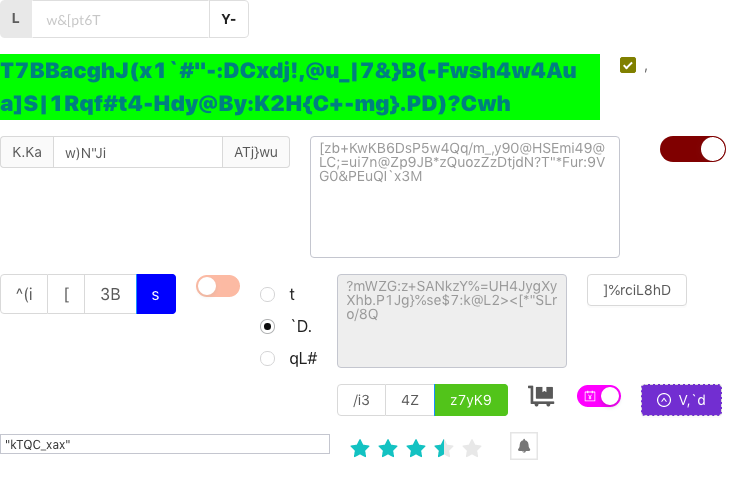

The following figure shows a simple sample:

A sample for basic component recognition

After generating samples, we need to evaluate their quality. We can perform operations such as data verification and category statistics calculation to assess the overall sample quality:
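The data verification and category statistics mentioned above could look like the following minimal sketch. It assumes a hypothetical in-memory annotation layout (one dict per image with pixel boxes); the checks shown (unknown class IDs, empty or out-of-bounds boxes, images without annotations) are illustrative, not the project's actual rule set.

```python
from collections import Counter


def check_samples(samples, num_classes):
    """Return (per-class box counts, list of problems) for a list of samples.

    Each sample is assumed to be a dict of the form
    {"file": str, "width": int, "height": int,
     "boxes": [(class_id, left, top, w, h), ...]}   # pixel coordinates
    """
    counts = Counter()
    problems = []
    for s in samples:
        if not s["boxes"]:
            problems.append((s["file"], "no annotations"))
        for class_id, left, top, w, h in s["boxes"]:
            if not 0 <= class_id < num_classes:
                problems.append((s["file"], f"unknown class {class_id}"))
                continue
            # A box must have a positive size and lie fully inside the image.
            if (w <= 0 or h <= 0 or left < 0 or top < 0
                    or left + w > s["width"] or top + h > s["height"]):
                problems.append((s["file"], f"box out of bounds: {(left, top, w, h)}"))
                continue
            counts[class_id] += 1
    return counts, problems


samples = [
    {"file": "a.png", "width": 400, "height": 200,
     "boxes": [(0, 10, 10, 50, 20), (1, 380, 10, 40, 20)]},  # second box overflows
    {"file": "b.png", "width": 400, "height": 200, "boxes": []},
]
counts, problems = check_samples(samples, num_classes=2)
print(counts)    # Counter({0: 1})
print(problems)  # [('a.png', 'box out of bounds: (380, 10, 40, 20)'), ('b.png', 'no annotations')]
```

The per-class counts make category imbalance visible before training, and the problem list points to samples that should be regenerated or dropped.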

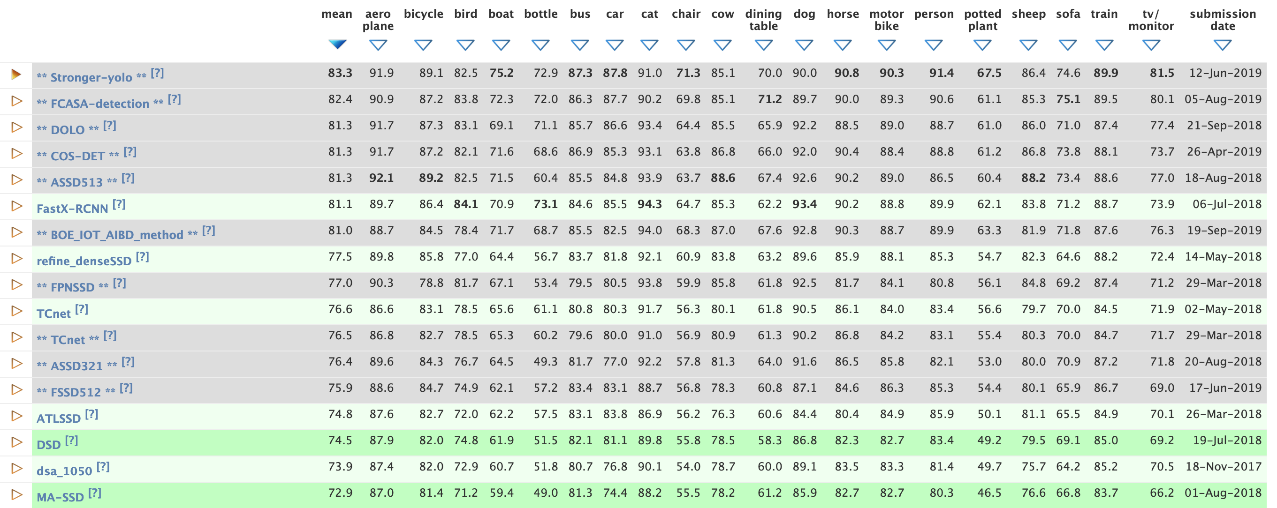

Based on a recent report comparing object detection models on the PASCAL VOC dataset, we selected the one-stage YOLO algorithm as the transfer learning algorithm for our basic component recognition model, because it ranked first among the models listed in the report. We use this algorithm to run quick experiments.

Data analysis results of object detection models

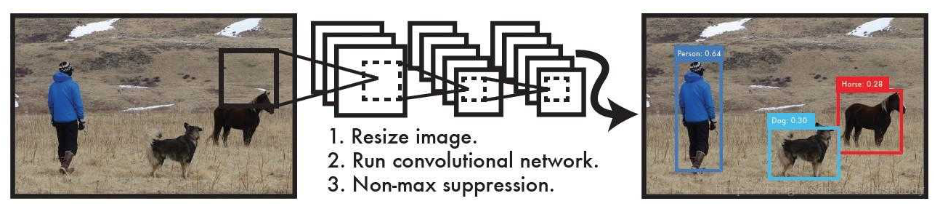

The You Only Look Once (YOLO) algorithm involves the following three steps:

1) Resize images to 416×416 (YOLOv3)

2) Train a convolutional network to learn the classification features of the sample set

3) Apply non-maximum suppression (NMS) during prediction to filter out overlapping boxes
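Step 3 can be illustrated with plain greedy non-maximum suppression. This is a minimal sketch, assuming boxes in `(x1, y1, x2, y2)` pixel coordinates and an IoU threshold of 0.45 (a value used by some YOLOv3 implementations, but an assumption here). In practice NMS is usually run separately for each predicted class.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45):
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda k: scores[k], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [k for k in order if iou(boxes[best], boxes[k]) < iou_threshold]
    return keep


# Two near-duplicate detections of one button and one separate component.
boxes = [(10, 10, 60, 40), (12, 12, 62, 42), (200, 50, 260, 80)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```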

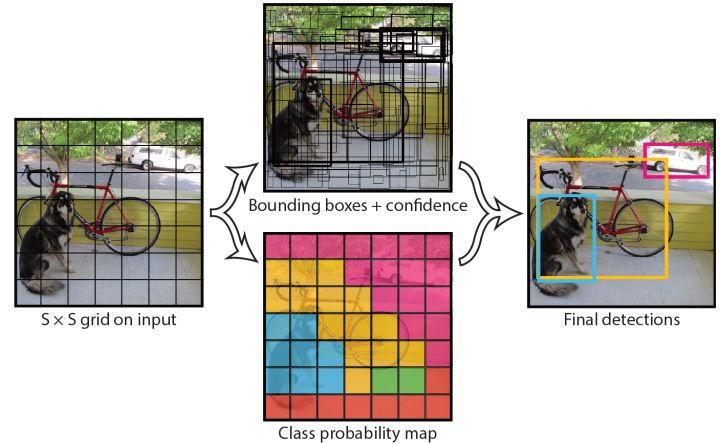

YOLO uses a single CNN for end-to-end object detection. Because it is a one-stage detector, YOLO trains and predicts faster than two-stage detectors such as R-CNN. It splits each input image into an S×S grid, and each grid cell is responsible for detecting any object whose center falls inside that cell. Each cell predicts bounding boxes and a confidence score for each box. The confidence score reflects both how likely the box is to contain an object and how accurate the box is. YOLO combines the predictions of all cells to produce the final detection results. For more information about YOLO, visit the YOLO website.
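The cell responsible for an object can be computed directly from the object's center. The sketch below assumes a 416×416 input and S = 13, which matches the coarsest output scale of YOLOv3 (416 / 32 = 13); YOLOv3 actually predicts at three scales, and anchor-box assignment is omitted here. It also returns the center's offset inside the cell, the quantity YOLO-style detectors regress for box position. The function name is hypothetical.

```python
def responsible_cell(cx, cy, img_w, img_h, s):
    """Return ((row, col), (dx, dy)): the S×S grid cell that contains the
    box center (cx, cy), and the center's offset within that cell in cell units."""
    gx = cx * s / img_w   # center x in grid units
    gy = cy * s / img_h   # center y in grid units
    # Clamp centers lying exactly on the right/bottom image edge into the last cell.
    col = min(int(gx), s - 1)
    row = min(int(gy), s - 1)
    return (row, col), (gx - col, gy - row)


cell, offset = responsible_cell(200, 100, img_w=416, img_h=416, s=13)
print(cell, offset)  # (3, 6) (0.25, 0.125)
```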

During object detection for basic web components, we want the convolutional network to learn the features of each component type within the corresponding cell so that it can distinguish between component types. Therefore, we must select component samples with distinct features so that the convolutional network can learn these differences.

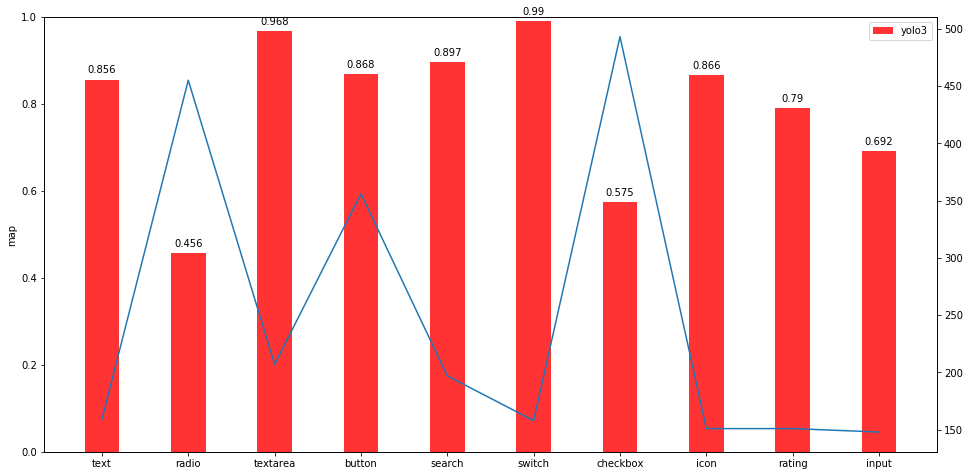

To evaluate the object detection model, we use mean average precision (mAP) to measure the accuracy of the model trained with a Common Objects in Context (COCO) dataset. We run the model on part of the test set, compare its predictions with the ground truth of those images, and calculate the average precision (AP) for each category.
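Per-category AP summarizes the precision-recall curve obtained by sorting detections by confidence. The following minimal sketch uses all-point interpolation (as in PASCAL VOC 2010 and later); COCO's official metric instead samples 101 recall points and also averages over IoU thresholds from 0.5 to 0.95, so the numbers are not directly interchangeable. It assumes each detection has already been labeled as a true or false positive; mAP is then the mean of the per-category AP values.

```python
def average_precision(scores, is_tp, num_gt):
    """AP for one category, given each detection's confidence and TP/FP flag.

    Uses all-point interpolation: precision is made monotonically
    non-increasing in recall, then summed over each recall step."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for k in order:
        if is_tp[k]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Interpolate: precision at recall r = max precision at any recall >= r.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p   # area of each recall step
        prev_recall = r
    return ap


def mean_ap(per_class_ap):
    """mAP: the unweighted mean of per-category AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Four detections of one category, two ground-truth objects.
ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], num_gt=2)
print(round(ap, 4))  # 0.8333
```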

We can then chart the per-category AP at an Intersection over Union (IoU) threshold of 0.5. As the chart shows, detection precision for small objects is low because it is significantly affected by some text elements. In the future, we can improve detection of small targets during sample pre-processing.
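Whether a detection counts as a true positive depends on its IoU with a ground-truth box. The sketch below, assuming `(x1, y1, x2, y2)` boxes for a single category, matches detections greedily from highest to lowest confidence at the 0.5 threshold. Each ground-truth box can be matched only once, so duplicate detections count as false positives. The resulting flags are the input a per-category AP calculation needs; the function names are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def match_detections(dets, gts, iou_threshold=0.5):
    """Flag each detection of one category as TP (True) or FP (False).

    dets: list of (score, box); gts: list of ground-truth boxes."""
    matched = [False] * len(gts)
    flags = [False] * len(dets)
    for i in sorted(range(len(dets)), key=lambda k: dets[k][0], reverse=True):
        best_j, best_iou = -1, iou_threshold
        for j, gt in enumerate(gts):
            overlap = iou(dets[i][1], gt)
            # Only unmatched ground truths at or above the threshold qualify.
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            flags[i] = True
    return flags


gts = [(0, 0, 10, 10)]
dets = [(0.9, (1, 1, 11, 11)),     # IoU 81/119 ≈ 0.68 -> true positive
        (0.8, (0, 0, 10, 10)),     # same object again -> duplicate, false positive
        (0.7, (50, 50, 60, 60))]   # no overlap -> false positive
print(match_detections(dets, gts))  # [True, False, False]
```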

Model-evaluation result based on mAP

We can also optimize the training data to improve prediction results. One way is to resize images to a fixed size during pre-processing. We use YOLO for transfer learning, and it resizes each input image to 416×416 for training. The IoU of predictions from a model trained with resized images is much higher than that of a model trained without resizing (10% better on the same test set).
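The article does not say whether the aspect ratio is preserved when images are resized to 416×416. A common YOLOv3 approach is letterboxing: scale the image uniformly to fit the square, then pad the remaining area. The following sketch, under that assumption, computes the scale and padding and maps a box predicted on the resized input back to the original image coordinates; the function names are hypothetical.

```python
def letterbox_params(img_w, img_h, target=416):
    """Scale factor and padding that fit an image into a target×target square
    while preserving its aspect ratio."""
    scale = min(target / img_w, target / img_h)
    new_w, new_h = round(img_w * scale), round(img_h * scale)
    pad_x, pad_y = (target - new_w) / 2, (target - new_h) / 2
    return scale, pad_x, pad_y


def box_to_original(box, scale, pad_x, pad_y):
    """Map a box (x1, y1, x2, y2) on the letterboxed input back to the original image."""
    x1, y1, x2, y2 = box
    return ((x1 - pad_x) / scale, (y1 - pad_y) / scale,
            (x2 - pad_x) / scale, (y2 - pad_y) / scale)


# A 1664×832 page screenshot is scaled by 0.25 and padded vertically.
params = letterbox_params(1664, 832)
print(params)  # (0.25, 0.0, 104.0)
print(box_to_original((100, 154, 200, 204), *params))  # (400.0, 200.0, 800.0, 400.0)
```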

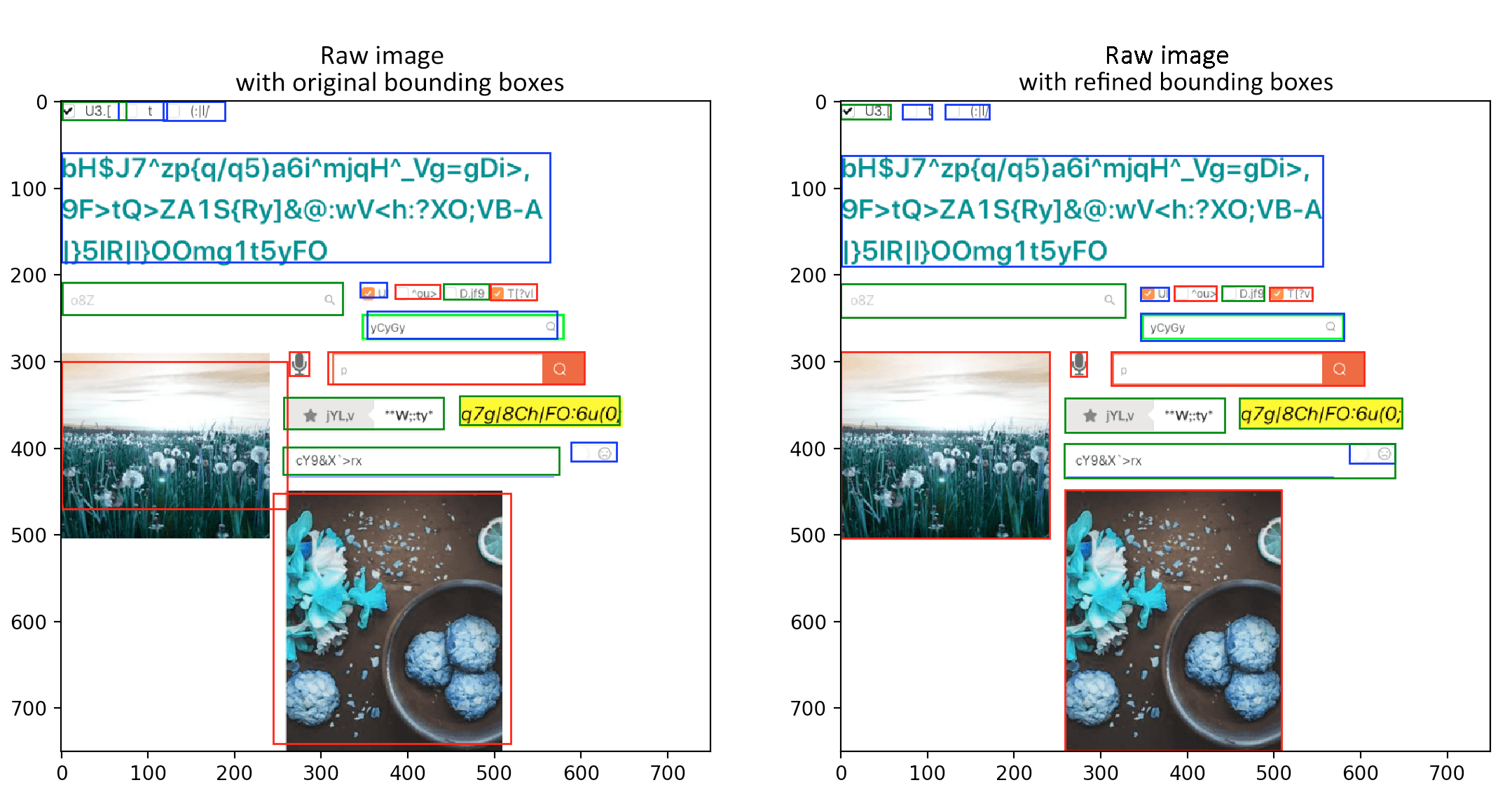

A model trained with resized images can predict results directly from the input images, but the bounding boxes it produces are rough. To make the bounding boxes of UI components precise, we refine the boxes in each image with OpenCV. The following figure compares the bounding boxes before and after refinement.
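The article does not describe which OpenCV operations are used for refinement. One simple approach, shown here as a pure-Python sketch so that it runs without OpenCV, is to expand the rough box by a small margin, estimate the background gray level from the border of that window, and shrink the box to the tight extent of pixels that differ from the background. In OpenCV the same idea would typically use `cv2.threshold` followed by `cv2.boundingRect`. The function name and the `margin` and `tol` values are assumptions.

```python
from collections import Counter


def refine_box(gray, box, margin=4, tol=10):
    """Tighten a rough detector box around the foreground pixels it covers.

    gray: 2-D list of grayscale values (rows of ints 0-255).
    box:  rough (x1, y1, x2, y2) from the detector, end-exclusive.
    The background level is taken as the most common value on the border of
    the search window; pixels differing by more than `tol` count as foreground."""
    h, w = len(gray), len(gray[0])
    x1, y1 = max(0, box[0] - margin), max(0, box[1] - margin)
    x2, y2 = min(w, box[2] + margin), min(h, box[3] + margin)
    border = [gray[y][x] for y in range(y1, y2) for x in range(x1, x2)
              if y in (y1, y2 - 1) or x in (x1, x2 - 1)]
    if not border:
        return box  # empty search window; keep the detector's box
    background = Counter(border).most_common(1)[0][0]
    xs, ys = [], []
    for y in range(y1, y2):
        for x in range(x1, x2):
            if abs(gray[y][x] - background) > tol:
                xs.append(x)
                ys.append(y)
    if not xs:
        return box  # no foreground found; keep the detector's box
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


# White 20×20 canvas with a dark component at x 5-14, y 6-9.
gray = [[255] * 20 for _ in range(20)]
for y in range(6, 10):
    for x in range(5, 15):
        gray[y][x] = 50
print(refine_box(gray, (3, 4, 13, 11)))  # (5, 6, 15, 10)
```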

Optimization of the model prediction result

Currently, the basic component recognition capability of the D2C project can recognize more than 20 types of UI components. In the future, we will invest more R&D effort in refining sample classification and in measuring and expressing the properties of basic components. We will also standardize the management of model data samples for unified output. Later, you will be able to recognize basic components and modify specific expressions based on our open-source sample sets.
