By Bruce Wu

Compared with a self-built Elasticsearch, Logstash, and Kibana (ELK) stack, Log Service offers advantages in functionality, performance, scale, and cost. For more information, see Comprehensive comparison between self-built ELK and Log Service. You can migrate data stored in Elasticsearch to Log Service with a single command.

As the name suggests, data migration means moving data from one data source to another. Depending on whether the source and destination use the same storage engine, data migration is either homogeneous or heterogeneous. Depending on the scope of the data being moved, it can be further divided into full-data migration and incremental data migration.

Many cloud vendors currently offer data migration solutions, such as AWS DMS, Azure DMA, and Alibaba Cloud Data Transmission Service (DTS). These solutions mainly address migration between relational databases and do not yet cover Elasticsearch.

To migrate data from Elasticsearch, the Log Service team provides a solution based on aliyun-log-python-sdk and aliyun-log-cli. This solution mainly addresses full-data migration of historical data.

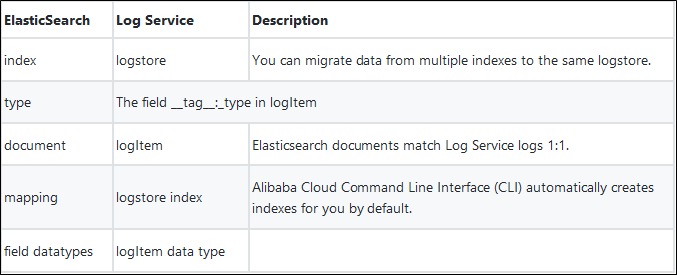

The Elasticsearch data model consists of concepts such as index, type, document, mapping, and field datatypes. The following table shows how these concepts map to their counterparts in Log Service.

For more information about the mapping relationships, see Data type mapping.

The following video shows how to use the CLI to migrate NGINX access logs from Elasticsearch to Log Service and then query and analyze these logs.

Run the following command to migrate the data:

```shell
aliyun log es_migration --hosts=<your_es> --project_name=<your_project> --indexes='filebeat-*' --logstore_index_mappings='{"nginx-access": "filebeat-*"}' --time_reference=@timestamp
```

Then query and analyze the migrated logs in Log Service:

1. Query for the status code count on a daily basis.

```sql
* | SELECT date_trunc('day', __time__) AS t, "nginx.access.response_code" AS status, COUNT(1) AS count GROUP BY status, t ORDER BY t ASC
```

2. Query for the countries and regions where the requests originated.

```sql
* | SELECT ip_to_country("nginx.access.remote_ip") AS country, COUNT(1) AS count GROUP BY country
```

The performance of the CLI mainly depends on two factors: how fast it reads data from Elasticsearch and how fast it writes data to Log Service.

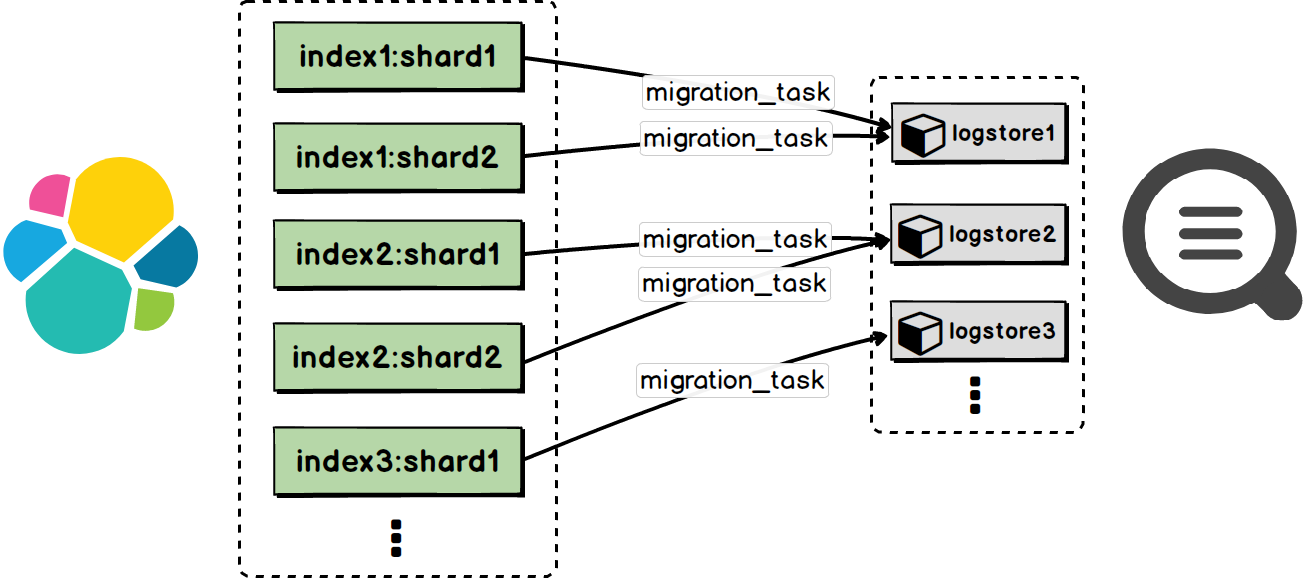

Each Elasticsearch index consists of one or more shards. The CLI creates a migration task for each shard of each index and submits these tasks to an internal process pool for execution. You can set the size of the pool with the pool_size parameter. In theory, the more shards an index to be migrated has, the higher the overall throughput, as long as the pool is large enough to run the shard tasks in parallel.
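The shard-level parallelism described above can be sketched in Python. This is a minimal illustration, not the CLI's actual implementation: `plan_tasks`, `migrate_shard`, `run_migration`, and the `use_processes` switch are hypothetical names, and `migrate_shard` is a stub that performs no real I/O. Only `pool_size` mirrors the parameter mentioned above.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def plan_tasks(shards_per_index):
    """Create one (index, shard) migration task for every shard of every index."""
    return [
        (index, shard)
        for index, shard_count in sorted(shards_per_index.items())
        for shard in range(shard_count)
    ]


def migrate_shard(task):
    """Hypothetical placeholder for migrating a single shard.

    A real task would read the shard's documents from Elasticsearch and
    write them to a logstore; this stub only returns a label for the task.
    """
    index, shard = task
    return f"{index}/shard-{shard}"


def run_migration(shards_per_index, pool_size, use_processes=True):
    """Run all shard tasks on a pool with at most `pool_size` workers."""
    tasks = plan_tasks(shards_per_index)
    pool_cls = ProcessPoolExecutor if use_processes else ThreadPoolExecutor
    with pool_cls(max_workers=pool_size) as pool:
        # map() returns results in task order even though tasks run concurrently.
        return list(pool.map(migrate_shard, tasks))
```

For example, `run_migration({"filebeat-2019.01.01": 3, "filebeat-2019.01.02": 2}, pool_size=4)` would plan five shard tasks and run at most four at a time. When `use_processes` is true, call it under an `if __name__ == "__main__":` guard, because worker processes may re-import the main module.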

Log Service also uses shards. Each shard supports a write capacity of 5 MB/s or 500 writes/s. To speed up writing data to Log Service, increase the number of shards in your logstore.
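To size a logstore from these limits, you can take the larger of the two per-shard ratios. The sketch below assumes that the 5 MB/s and 500 writes/s figures above are the only limits and that writes are spread evenly across shards; `shards_needed` is a hypothetical helper, not part of any SDK.

```python
import math

# Per-shard write limits quoted for Log Service.
SHARD_MB_PER_SEC = 5
SHARD_WRITES_PER_SEC = 500


def shards_needed(mb_per_sec, writes_per_sec):
    """Estimate the minimum number of logstore shards for a given write load.

    A shard is limited by both bandwidth and request rate, so the load
    needs enough shards to satisfy whichever limit is tighter.
    """
    by_bandwidth = math.ceil(mb_per_sec / SHARD_MB_PER_SEC)
    by_requests = math.ceil(writes_per_sec / SHARD_WRITES_PER_SEC)
    # A logstore always has at least one shard.
    return max(1, by_bandwidth, by_requests)
```

For example, a load of 12 MB/s at 100 writes/s is bandwidth-bound and needs 3 shards, while 1 MB/s at 1,200 writes/s is request-bound and also needs 3.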

For example, assume that the Elasticsearch index being migrated has only one shard, the destination logstore also has only one shard, and each document is 100 bytes. In this case, the average migration speed is about 3 MB/s.
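To put 3 MB/s in perspective, the conversion below turns it into documents per second and into an estimated duration for a full migration. It assumes decimal units (1 MB = 1,000,000 bytes, 1 GB = 1,000 MB) and a constant speed; the helper names and the 100 GB volume in the usage note are purely illustrative.

```python
BYTES_PER_MB = 1_000_000  # assumption: decimal megabytes


def docs_per_second(mb_per_sec, doc_bytes):
    """Convert a migration speed in MB/s into documents per second."""
    return mb_per_sec * BYTES_PER_MB / doc_bytes


def migration_hours(total_gb, mb_per_sec):
    """Estimate how long a full migration takes at a steady speed."""
    total_mb = total_gb * 1000  # decimal GB -> MB
    return total_mb / mb_per_sec / 3600
```

With these assumptions, `docs_per_second(3, 100)` gives 30,000 documents per second, and `migration_hours(100, 3)` gives roughly 9.3 hours for a hypothetical 100 GB index. Note that 3 MB/s is below the 5 MB/s write limit of a single Log Service shard.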
